HOW TO TRICK SQL INTO DOING ALL THE WORK FOR YOU

PyGotham 2018

Molly Leen

Molly Leen

Senior Software Engineer @

At Kyruus we help health systems match patients with the right providers and enhance patient access enterprise-wide

Who am I?

Talk Overview

The Problem

Current Process

The Solution

Takeaways

The Problem

- Large amount of data

- 2x the number of rows currently in our system

"BIG" data

The Data

{"fname": "Spongebob", "lname": "Squarepants", "type": "sponge"}

{"fname": "Patrick", "lname": "Star", "type": "starfish"}

{"fname": "Squidward", "lname": "Tentacles", "type": "squid"}

{"fname": "Eugene", "lname": "Krabs", "type": "crab"}

{"fname": "Sandy", "lname": "Cheeks", "type": "squirrel"}

{"fname": "Sheldon", "lname": "Plankton", "type": "plankton"}

{"fname": "Gary", "lname": "The Snail", "type": "snail"}

Current Infrastructure

raw data

API server

- Post each record 1 at a time

- Estimated time: ~48 hours

POST

{

"fname": "Spongebob",

"lname": "Squarepants",

"type": "Sponge"

}

ingestion script

INSERT

Why is this so slow?

- Network request for each record

- API commits to db on EACH request

Processing one by one is SLOW

Can we process in bulk?

Use COPY to load all the rows in one command, instead of using a series of INSERT commands. The COPY command is optimized for loading large numbers of rows; it is less flexible than INSERT, but incurs significantly less overhead for large data loads.

From the PostgreSQL docs:

How does it work?

COPY takes data from a file which is formatted to match the structure of the table

"Spongebob,Squarepants,sponge"

"Patrick,Star,starfish"

"Squidward,Tentacles,squid"

"Eugene,Krabs,crab"

"Sandy,Cheeks,squirrel"

"Sheldon,Plankton,plankton"

"Gary,The\ Snail,snail"fname | lname | type

---------------------

| | Requirements of COPY

- Imports data from a FILE

- File must be a CSV matching the FORMAT of the postgres table

Problems with COPY

- The file is not on the server

- The file is JSONLines not a CSV

Problem #1

COPY imports data from a file

The file is not on the server

Potential Solution

raw data

API server

Streaming data from s3

with requests.get(url, stream=True) as s3_data:

# Data is now a generator we can access

# in a loop or by calling data.next()

print(s3_data.next()){"fname": "Spongebob", "lname": "Squarepants", "type": "sponge"}prints...

Requirements

- Imports data from a FILE

- File must be a CSV matching the tables FORMAT

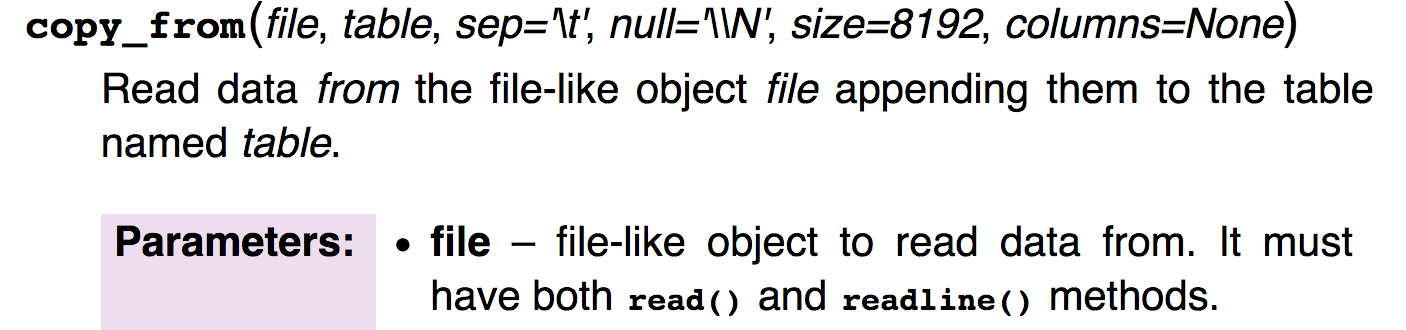

Psycopg2 COPY

- PostgreSQL adapter for use in Python apps/scripts/etc.

What is a file-like object?

According to the python docs:

- An object exposing a file-oriented API (with methods such as read() or write()) to an underlying resource. Depending on the way it was created, a file object can mediate access to a real on-disk file or to another type of storage or communication device (for example standard input/output, in-memory buffers, sockets, pipes, etc.).

- https://docs.python.org/3/glossary.html#term-file-object

Generator -> File

Can we make our generator LOOK like a file?

| Generator |

File |

|---|---|

| next() to get next line |

readline() to get next line |

| raises StopIteration when done |

returns an empty string when done |

def __init__(self, s3_data):

self.data = s3_data # generator def readline(self):

try:

return self.data.next()

except StopIteration:

return '' def read(self, size=-1):

return self.readline()Let's try it

class FileLike(object): def readline(self): def readline(self):

return self.data.next()Extracting data from s3

with requests.get(url, stream=True) as s3_data:

file_like_data = FileLike(s3_data)

print(file_like_data.readline()){"fname": "Spongebob", "lname": "Squarepants", "type": "sponge"}prints...

Requirements

- Imports data from a "FILE"

- File must be a CSV matching the tables FORMAT

Problem #2

File must be a CSV that matches the format of the table

The file is JSONLines

The Data

{"fname": "Spongebob", "lname": "Squarepants", "type": "sponge"}

{"fname": "Patrick", "lname": "Star", "type": "starfish"}

{"fname": "Squidward", "lname": "Tentacles", "type": "squid"}

{"fname": "Eugene", "lname": "Krabs", "type": "crab"}

{"fname": "Sandy", "lname": "Cheeks", "type": "squirrel"}

{"fname": "Sheldon", "lname": "Plankton", "type": "plankton"}

{"fname": "Gary", "lname": "The Snail", "type": "snail"}JSON -> CSV

{"fname": "Spongebob", "lname": "Squarepants", "type": "sponge"}"Spongebob,Squarepants,sponge"CSV Writer

- Ensures all data is properly formatted and escaped

["Spongebob", "Squarepants", "sponge"]"Spongebob,Squarepants,sponge" ["Gary", "The Snail", "snail"] "Gary,The\ Snail,snail"CSV Writer

- Writes to a file-like object that has a write() fn

- We need to format and immediately retrieve the data

class FileQueue(Queue):

def write(self, data):

self.put(data)

def read(self):

return self.get()JSON -> CSV Flow

JSON

{"fname": "Spongebob", "lname": "Squarepants", "type": "sponge"}List (prep_for_csv step)

["Spongebob", "Squarepants", "sponge"]Write to CSV (formats and "puts" to FileQueue)

Read from FileQueue and return

"Spongebob,Squarepants,sponge" def __init__(self, s3_data):

self.data = s3_data # generator def readline(self):

try:

return self.data.next()

except StopIteration:

return '' def read(self, size=-1):

return self.readline()Let's add it

class FileLike(object): except StopIteration:

return ''data = # converts JSON to list of values

data_for_csv = prep_for_csv(data) self.file_queue = FileQueue()

self.writer = csv.writer(self.file_queue)self.writer.writerow(data_for_csv)return self.file_queue.read()Extracting data from s3

with requests.get(url, stream=True) as s3_data:

file_like_data = FileLike(s3_data)

print(file_like_data.readline())"Spongebob,Squarepants,sponge"prints...

Requirements

- Imports data from a FILE

- File must be a CSV matching the tables FORMAT

Now we're ready to COPY!

with requests.get(url, stream=True) as s3_data:

file_like_data = FileLike(s3_data=data)

table = 'spongebob_characters'

columns = ['fname', 'lname', 'type']

cursor.copy_from(file_like_data, table,

columns=columns, sep=',')

But wait...

How does this "trick SQL into doing all the work for you"?

The copy_from command reads from our "file"

and all processing happens when data is read!

New Flow

API server

raw data

ingestion script

COPY

{

"file": "s3://spongebob"

}BUT WAS IT FASTER?!

Original Estimate: ~2 days

New Method: ~20 minutes!

Takeaways

Objects may not always be what they seem

If it walks like a duck and it quacks like a duck, then it must be a duck

The End

Thank's for coming!!

Come find me!

Pygotham2018

By Molly Leen

Pygotham2018

- 1,965