Data quality, MLOps, and Great Expectations

Rachel House

Senior Developer Advocate, Great Expectations

Agenda

Data quality + MLOps: 25 min

Great Expectations: 8 min

Live demonstration: 6 min

Q&A: 21 min

Data quality 101

High-quality data is fit for its intended use.

Data quality is:

Product quality

Outcome quality

Insight quality

Prediction quality

Decision quality

Data as a product

Example tangible product supply chain:
Raw material → Supplier → Processing facility → Warehouse → Distributor → Retailer → Consumer

Example data product supply chain:
Raw data → Data lake → Data warehouse → Dashboard → Data analyst → Informational report → Decision maker

The data supply chain

Upstream → Downstream

Data quality: content and quality of the data product
accuracy, completeness, consistency, validity, timeliness, uniqueness

Data observability: health of the data supply chain
freshness, distribution, schema, lineage, volume
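Several of the data quality dimensions above are often scored as simple ratios over a dataset. The sketch below is a minimal plain-Python illustration, assuming hypothetical patient records and one common ratio definition for each of completeness, uniqueness, and validity (other definitions exist). It is not how GX computes these dimensions.

```python
# Hypothetical patient records; None marks a missing value.
records = [
    {"patient_id": 1, "age": 34},
    {"patient_id": 2, "age": None},
    {"patient_id": 3, "age": 151},
    {"patient_id": 3, "age": 58},
]


def _ratio(hits, total):
    # Treat an empty input as 0.0 rather than dividing by zero.
    return hits / total if total else 0.0


def present_values(rows, column):
    """Non-missing values of `column`."""
    return [r[column] for r in rows if r.get(column) is not None]


def completeness(rows, column):
    """Fraction of rows where `column` is present (not None)."""
    return _ratio(sum(r.get(column) is not None for r in rows), len(rows))


def uniqueness(rows, column):
    """Fraction of non-missing values in `column` that are distinct."""
    values = present_values(rows, column)
    return _ratio(len(set(values)), len(values))


def validity(rows, column, low, high):
    """Fraction of non-missing values in `column` within [low, high]."""
    values = present_values(rows, column)
    return _ratio(sum(low <= v <= high for v in values), len(values))


print(completeness(records, "age"))                # 0.75
print(uniqueness(records, "patient_id"))           # 0.75
print(round(validity(records, "age", 0, 120), 3))  # 0.667
```

The age range of 0 to 120 is an illustrative assumption; in practice that bound is exactly the kind of decision the business and subject matter personas need to agree on.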

Data quality and MLOps

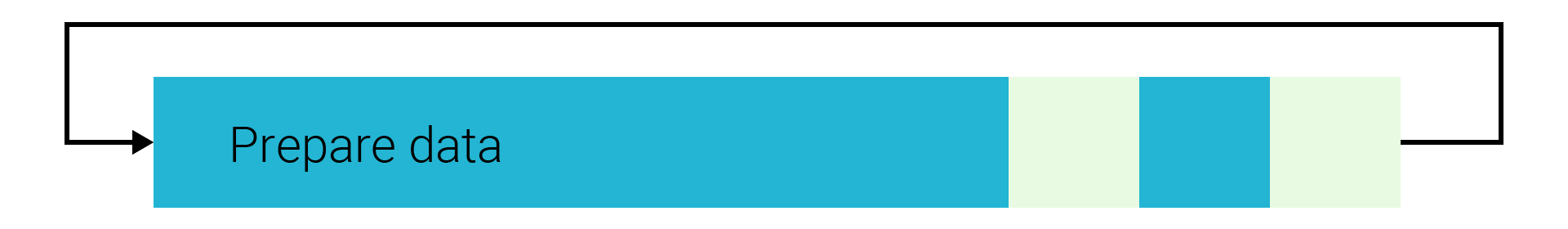

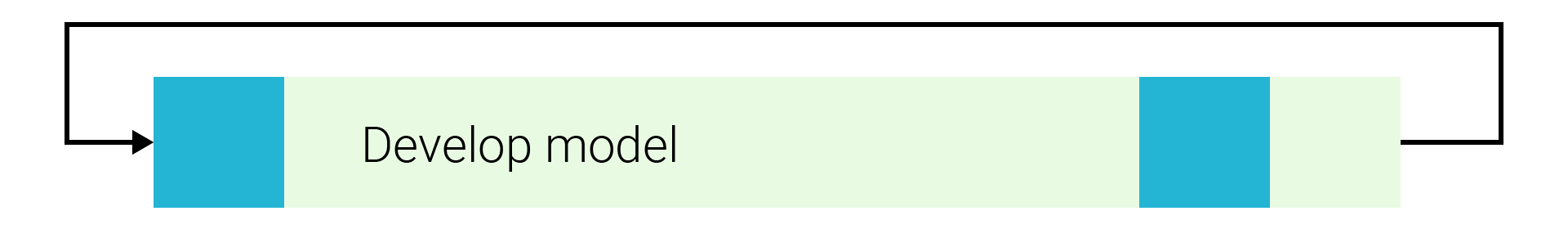

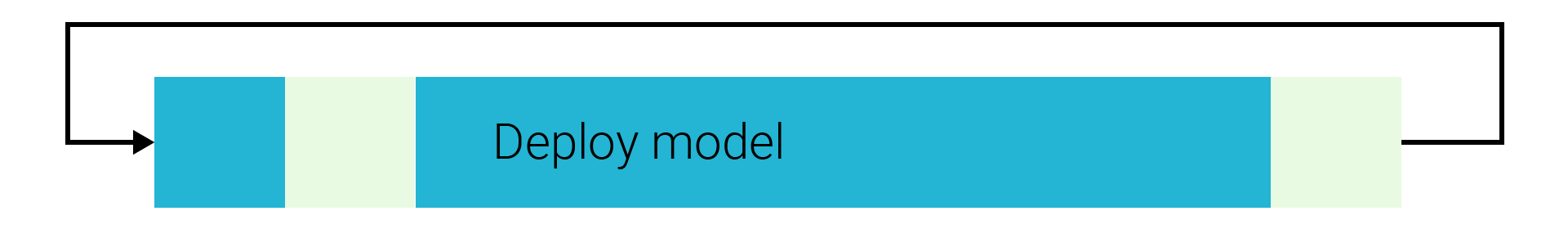

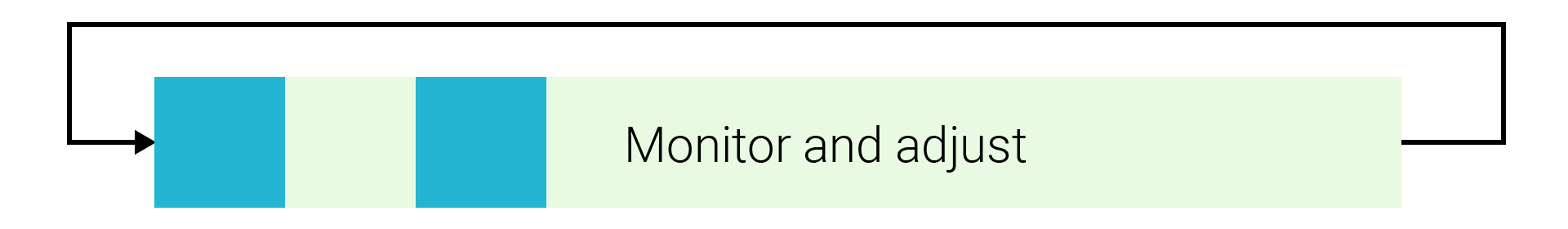

MLOps workflow

Prepare data

Develop model

Deploy model

Monitor and adjust

MLOps automates and streamlines the lifecycle of machine learning models.

Refresher: ML models and data

Before ML: the algorithm is programmed using explicit instructions.

Using ML: the model trains on historical data, then generalizes and performs on new, unseen data.

"The data is the algorithm."
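A tiny contrast between the two approaches, as a sketch under stated assumptions: the "high risk" labels and ages are hypothetical, and the learned model is a one-feature decision stump (the simplest learned model), chosen only to show that the rule comes from the data rather than from a programmer.

```python
# Before ML: a person writes the decision rule explicitly.
def flag_high_risk_rule(age):
    return age >= 65  # threshold chosen by hand


# Using ML: the threshold is learned from historical labeled data.
def learn_threshold(history):
    """Pick the age threshold that misclassifies the fewest historical examples.

    `history` is a list of (age, was_high_risk) pairs; predictions are
    "high risk" when age >= threshold (a one-feature decision stump).
    """
    candidates = sorted({age for age, _ in history})

    def errors(threshold):
        return sum((age >= threshold) != label for age, label in history)

    return min(candidates, key=errors)


history = [(30, False), (45, False), (52, True), (60, True), (70, True)]
threshold = learn_threshold(history)
print(threshold)                          # 52
print(50 >= threshold, 58 >= threshold)   # False True
```

Change the historical data and the learned threshold changes with it, which is why the quality of that data directly determines the quality of the model.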

Prepare data
Data collection → Source data → EDA → Data cleaning → Cleaned data, fit for modeling
Outputs: knowledge about data; decisions and assumptions about data quality; steps to prepare and clean data; definition of data quality

Develop model
Prepared dataset → Feature engineering → Train/test/holdout sets → Experimentation → Candidate models → Evaluation → Selected model
Outputs: steps to prepare train/test/holdout sets; consistent distribution for train/test/holdout sets

Deploy model
Trained development model → Model handoff → Potential reimplementation → Deployment → Deployed model in production
Carried into deployment: implicit knowledge about data dependencies and required quality; data quality definition and assumptions from development
Risk: differences in training data and live data at deployment time

Monitor and adjust
Newly deployed model → Ground truth evaluation and input drift detection → Retraining → Retrained model → Evaluation and comparison → Maintained model
Signals: changes in model output; data drift
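Checking that train/test/holdout sets share a consistent distribution and detecting input drift after deployment both come down to comparing two samples of the same feature. A minimal sketch, assuming a single numeric feature and the two-sample Kolmogorov-Smirnov (KS) statistic as the comparison; KS is one common choice among many, and the 0.2 threshold and sample values are hypothetical.

```python
def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: the largest vertical gap
    between the empirical CDFs of two samples (0 = identical, 1 = no overlap)."""
    ref, cur = sorted(reference), sorted(current)
    n, m = len(ref), len(cur)
    if n == 0 or m == 0:
        raise ValueError("both samples must be non-empty")
    i = j = 0
    d = 0.0
    while i < n and j < m:
        x = min(ref[i], cur[j])
        # Step both empirical CDFs past every value equal to x.
        while i < n and ref[i] == x:
            i += 1
        while j < m and cur[j] == x:
            j += 1
        d = max(d, abs(i / n - j / m))
    # Once one sample is exhausted its CDF is 1 and the gap can only shrink.
    return d


def has_drifted(reference, current, threshold=0.2):
    """Flag drift when the KS statistic exceeds a (hypothetical) threshold."""
    return ks_statistic(reference, current) > threshold


train_ages = [34, 41, 45, 52, 58, 63, 70]
live_ages_ok = [35, 40, 47, 51, 59, 62, 71]
live_ages_shifted = [61, 66, 72, 75, 80, 84, 90]

print(has_drifted(train_ages, live_ages_ok))       # False
print(has_drifted(train_ages, live_ages_shifted))  # True
```

The same function can compare the training set against the test or holdout set before model selection. For production use, `scipy.stats.ks_2samp` computes this statistic along with a p-value.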

Data quality themes for MLOps

Capture data quality standards applied during data preparation.

Codify data quality standards in a way that can be shared and applied by all MLOps personas.

Document assumptions about data quality.

Models are not deployed in a vacuum.

The definition of data quality from the preparation phase affects and is used in all other MLOps phases.
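One way to capture, codify, and document standards together is to store them as data rather than as ad hoc code. The sketch below assumes a hypothetical JSON layout in which each rule records what is checked and the assumption behind it, so the same file can be versioned, reviewed, and loaded by every persona. GX uses its own formats for this; the layout here is illustrative only.

```python
import json

# Hypothetical shared definition: each rule records what is checked and
# the data preparation assumption that motivated it.
DEFINITION = {
    "name": "patient_modeling_data",
    "version": 1,
    "rules": [
        {"column": "age", "check": "not_null",
         "assumption": "Age is required; rows without it were dropped in cleaning."},
        {"column": "age", "check": "between", "min": 0, "max": 120,
         "assumption": "Ages outside 0-120 are data entry errors."},
    ],
}


def check_rule(rule, rows):
    """Return True if every row satisfies `rule`."""
    values = [r.get(rule["column"]) for r in rows]
    if rule["check"] == "not_null":
        return all(v is not None for v in values)
    if rule["check"] == "between":
        # Nulls are left to the not_null rule, so they pass here.
        return all(v is None or rule["min"] <= v <= rule["max"] for v in values)
    raise ValueError(f"unknown check: {rule['check']}")


# Serializing to JSON lets every persona load the same definition.
shared = json.dumps(DEFINITION, indent=2)
loaded = json.loads(shared)

rows = [{"age": 40}, {"age": 130}]
for rule in loaded["rules"]:
    print(rule["check"], check_rule(rule, rows), "-", rule["assumption"])
```

Keeping the assumption next to the rule means the reasoning from data preparation travels with the check into deployment and monitoring.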

Data quality and collaboration

MLOps personas: Data Scientist, Data Engineer, ML Engineer, Software Engineer, DevOps Engineer, Subject Matter Expert, Model Governance Manager, Business Analyst, Executive

Data quality is a collaborative effort that draws on technical, business, and subject matter expertise.

Defining data quality

Driven by problem domains and desired outcomes

Informed by technical and business personas

Multidimensional

Example personas: Data Scientist, Data Engineer, ML Engineer, Subject Matter Expert, Executive

Data quality dimensions these personas care about include distribution, validity, timeliness, schema, completeness, accuracy, relevancy, bias, and consistency.

Sharing and applying definitions of data quality

Share
Common language to express data quality
Single source of truth for creating and maintaining data quality definitions

Apply
Tooling to apply shared data quality definitions through:
- data testing and validation
- data contracts

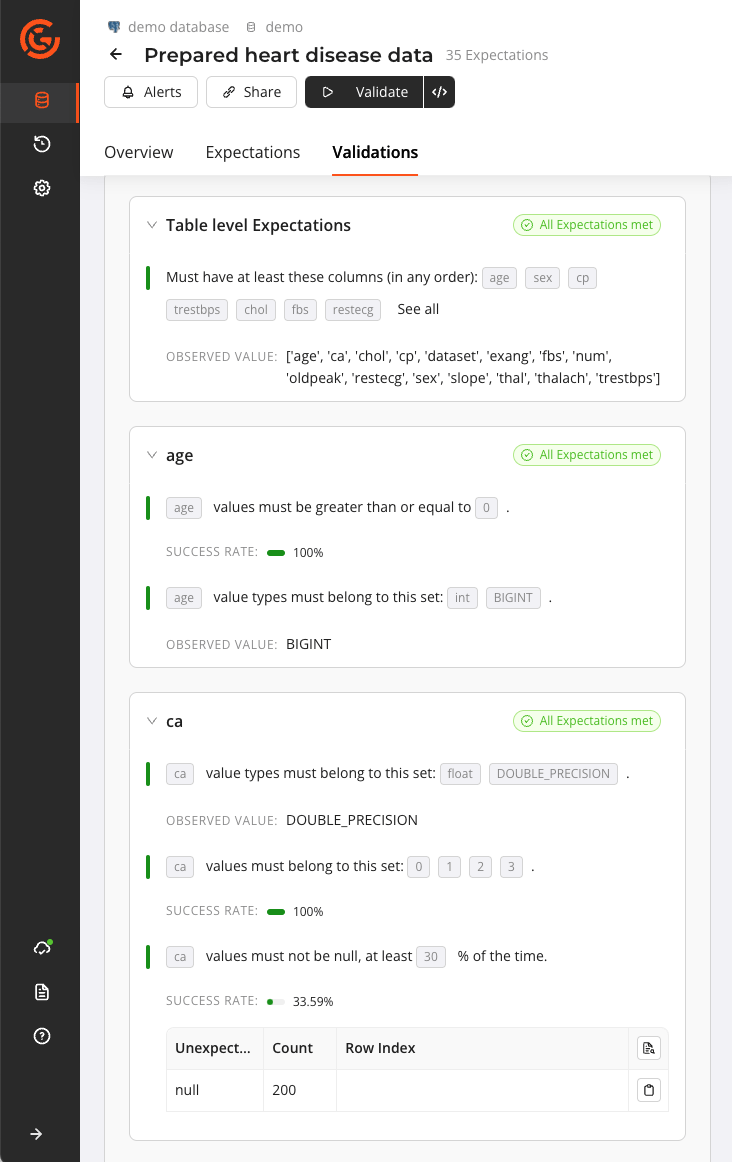
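A data contract applies a shared definition at the boundary between a data producer and a data consumer: both sides run the same check, so a violation is caught before bad data crosses the boundary. A minimal sketch, assuming a hypothetical contract that only pins required columns and their Python types; real contracts usually also cover semantics, freshness, and ownership.

```python
# Hypothetical contract agreed between a data producer and a data consumer:
# column name -> expected Python type.
CONTRACT = {"patient_id": int, "age": int, "diagnosis": str}


def contract_violations(rows, contract):
    """List human-readable violations of `contract` in `rows`.

    Missing columns and wrong types are violations; None values are
    allowed here, leaving null handling to a separate rule.
    """
    problems = []
    for i, row in enumerate(rows):
        for col in sorted(contract.keys() - row.keys()):
            problems.append(f"row {i}: missing column '{col}'")
        for col, expected in contract.items():
            value = row.get(col)
            if value is not None and not isinstance(value, expected):
                problems.append(
                    f"row {i}: '{col}' is {type(value).__name__}, "
                    f"expected {expected.__name__}"
                )
    return problems


batch = [
    {"patient_id": 1, "age": 34, "diagnosis": "flu"},
    {"patient_id": 2, "age": "34"},
]
for problem in contract_violations(batch, CONTRACT):
    print(problem)
# row 1: missing column 'diagnosis'
# row 1: 'age' is str, expected int
```

The producer can refuse to publish a batch with violations, and the consumer can refuse to load one; because both call the same function on the same contract, they cannot disagree about what "valid" means.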

Data quality and GX

Great Expectations (GX)

Comprehensive solution for data quality monitoring and management

Expectation-based approach to data validation

Collaborative tooling that enables a single source of truth for data quality definition and application

Flexible integration with data stacks

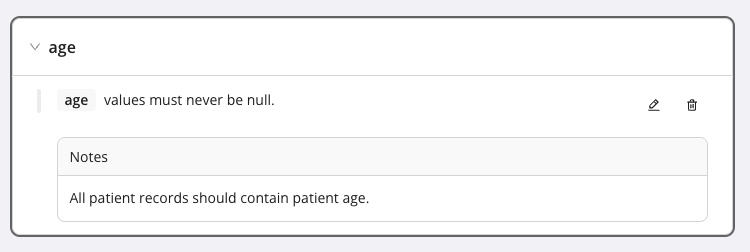

The GX Expectation

A verifiable assertion about data, expressed in plain-language terms.

"I expect that all patient records contain the patient's age."

import great_expectations.expectations as gxe

# Expect every value in the "age" column to be non-null.
expectation = gxe.ExpectColumnValuesToNotBeNull(column="age")

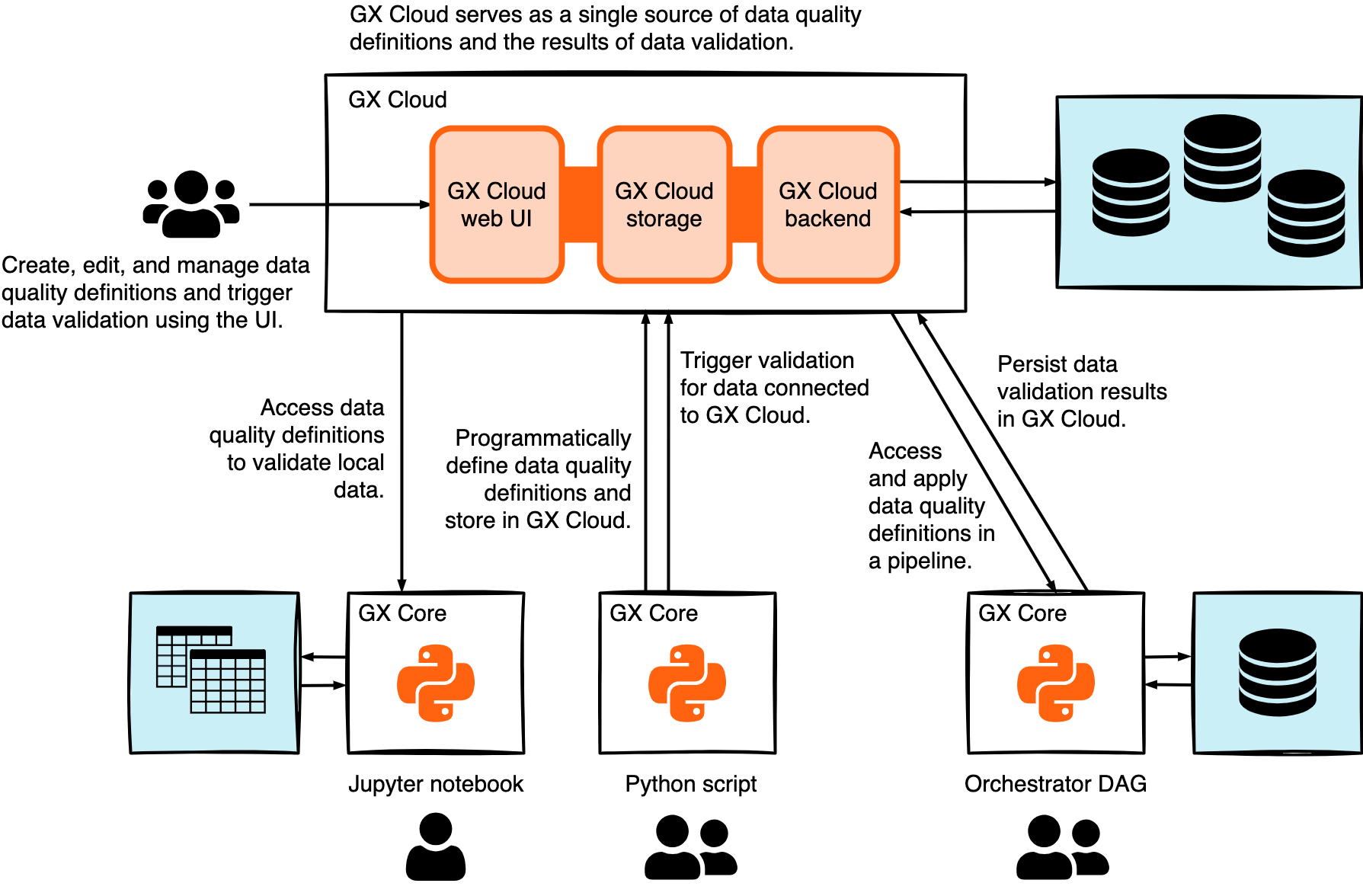
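To make the meaning of the not-null Expectation concrete, here is a plain-Python illustration of its semantics. It is not GX's implementation: the function name and result keys below only loosely mirror GX's. It does model the `mostly` parameter that GX Expectations accept, which lets a check tolerate a bounded fraction of failing values; the patient records are hypothetical.

```python
def expect_column_values_to_not_be_null(rows, column, mostly=1.0):
    """Plain-Python illustration of the not-null Expectation's semantics.

    Passes when the fraction of non-null values in `column` is at least
    `mostly` (1.0 means every value must be present).
    """
    values = [row.get(column) for row in rows]
    total = len(values)
    unexpected = sum(v is None for v in values)
    fraction_ok = (total - unexpected) / total if total else 1.0
    return {
        "success": fraction_ok >= mostly,
        "unexpected_count": unexpected,
        "unexpected_percent": 100 * unexpected / total if total else 0.0,
    }


patients = [{"age": 34}, {"age": None}, {"age": 58}, {"age": 71}]
print(expect_column_values_to_not_be_null(patients, "age"))
# {'success': False, 'unexpected_count': 1, 'unexpected_percent': 25.0}
print(expect_column_values_to_not_be_null(patients, "age", mostly=0.75)["success"])
# True
```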

Great Expectations data quality platform

GX Core + GX Cloud

GX Core: open source Python framework

GX Cloud: fully hosted SaaS platform

GX platform workflow flexibility

Python workflows

UI workflows

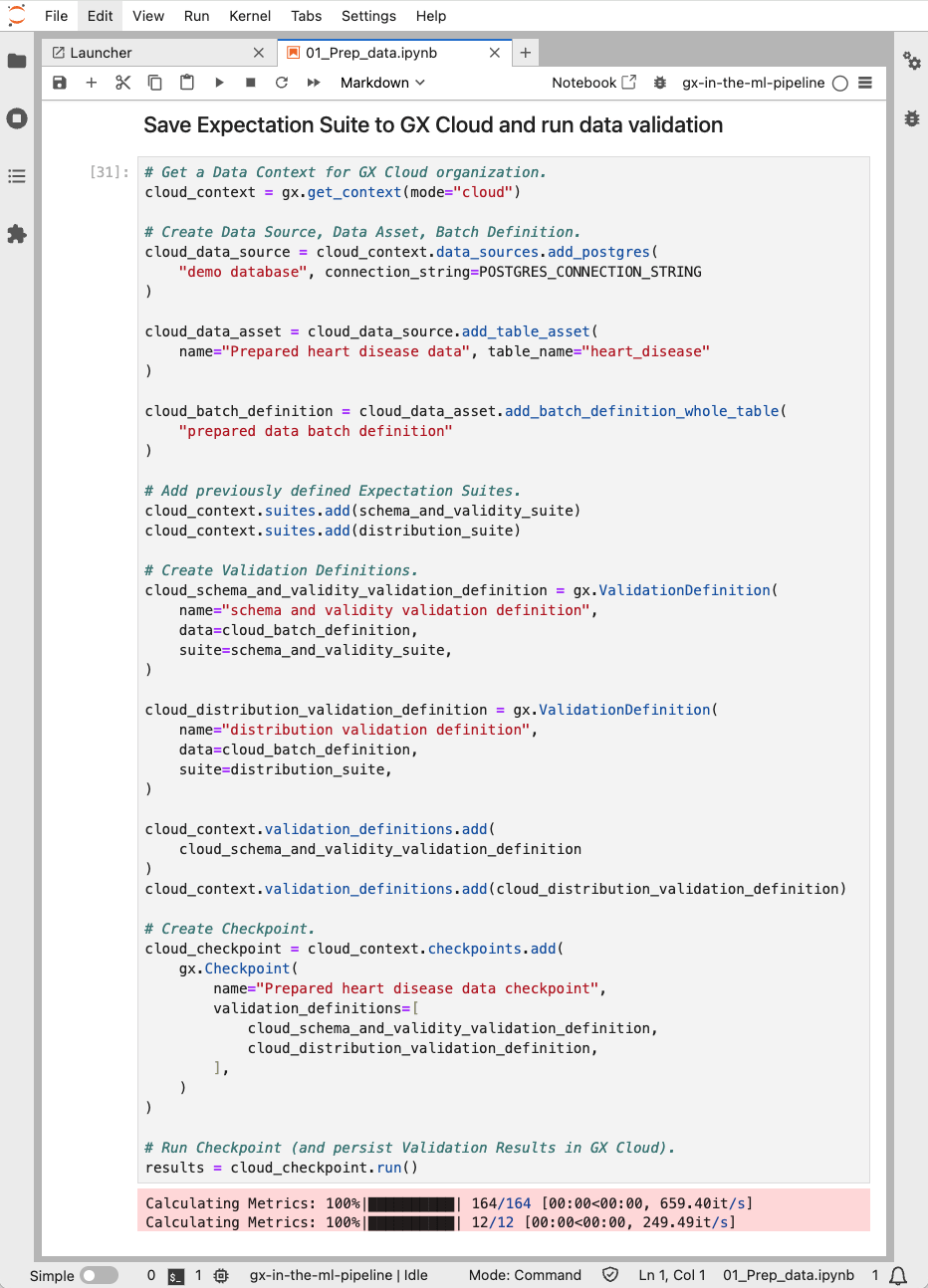

Example modes of working together with GX Cloud and GX Core.

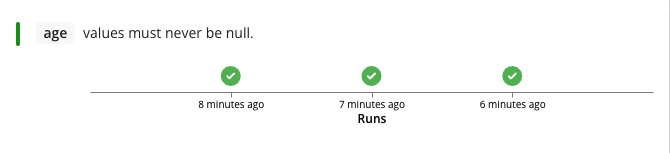

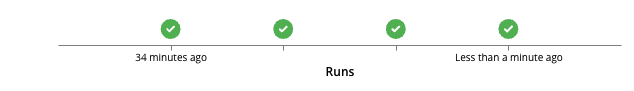

GX can enable the creation and sharing of data quality definitions, and data quality validation, across the MLOps workflow.

Prepare data: collaboratively define a shared data quality definition for modeling; validate prepared data.

Develop model: validate train, test, and holdout sets for quality and consistency.

Deploy model: enforce shared data quality standards when the model is changed for deployment; compare and validate initial live data against training data quality.

Monitor and adjust: monitor new input data against data quality standards; alert on issues; evolve the data quality definition as the model and trends change.
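The common thread across these phases is that one shared suite of checks is reused unchanged at every stage. A minimal sketch of that idea, assuming a hypothetical suite of named predicates and hypothetical data splits; Python's `logging` warnings stand in for whatever alerting channel a team actually uses.

```python
import logging

logging.basicConfig(level=logging.WARNING)
log = logging.getLogger("data_quality")

# Hypothetical shared suite: check name -> predicate over a list of rows.
SUITE = {
    "non_empty": lambda rows: len(rows) > 0,
    "age_not_null": lambda rows: all(r.get("age") is not None for r in rows),
    "age_in_range": lambda rows: all(
        0 <= r["age"] <= 120 for r in rows if r.get("age") is not None
    ),
}


def validate(name, rows, suite=SUITE):
    """Run every check in `suite` on `rows`; log a warning per failure."""
    failures = [check for check, passes in suite.items() if not passes(rows)]
    for check in failures:
        log.warning("%s failed check %s", name, check)
    return failures


# The same suite validates every split during development and live data
# after deployment.
splits = {
    "train": [{"age": 34}, {"age": 58}],
    "test": [{"age": 45}],
    "holdout": [{"age": None}],
    "live": [{"age": 140}],
}
results = {name: validate(name, rows) for name, rows in splits.items()}
print(results)
```

Because the checks live in one place, evolving the definition (for example, tightening the age range) updates validation in every phase at once.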

GX demonstration

GX terminology 101

Define your data
Data Source: The GX representation of a data store.
Data Asset: A collection of records in a Data Source.
Batch Definition: Defines how Data Asset records are split into Batches.
Batch: A collection of records in a Data Asset.

Define your quality
Expectation: A verifiable assertion about data.
Expectation Suite: A collection of Expectations.

Validate your data
Validation Definition: Pairs a Batch Definition with an Expectation Suite for validation.
Checkpoint: Executes data validation using one or more Validation Definitions.
Validation Result: Contains the results of data validation and related metadata.
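The terms above describe how GX objects relate to one another. The sketch below is a toy, plain-Python model of those relationships, meant only to make the chain from Data Source to Validation Result concrete. The class names mirror the terminology, but these are not GX Core's classes or methods, and splitting batches by a single column value is a hypothetical simplification; the GX docs describe the real API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class DataAsset:
    """Toy Data Asset: a named collection of records."""
    name: str
    records: list


@dataclass
class DataSource:
    """Toy Data Source: stands in for a data store holding Data Assets."""
    name: str
    assets: dict = field(default_factory=dict)

    def add_asset(self, asset: DataAsset) -> DataAsset:
        self.assets[asset.name] = asset
        return asset


@dataclass
class BatchDefinition:
    """Toy Batch Definition: splits an asset's records by one column's value."""
    asset: DataAsset
    key: str

    def get_batch(self, value) -> list:
        # A Batch is the subset of the asset's records matching `value`.
        return [r for r in self.asset.records if r.get(self.key) == value]


@dataclass
class Expectation:
    """Toy Expectation: a description plus a check over a batch."""
    description: str
    check: Callable[[list], bool]


@dataclass
class ExpectationSuite:
    name: str
    expectations: list = field(default_factory=list)


@dataclass
class ValidationResult:
    batch: object
    success: bool
    outcomes: dict  # expectation description -> passed?


@dataclass
class ValidationDefinition:
    """Pairs a Batch Definition with an Expectation Suite."""
    batch_definition: BatchDefinition
    suite: ExpectationSuite

    def run(self, batch_value) -> ValidationResult:
        batch = self.batch_definition.get_batch(batch_value)
        outcomes = {e.description: e.check(batch) for e in self.suite.expectations}
        return ValidationResult(batch_value, all(outcomes.values()), outcomes)


@dataclass
class Checkpoint:
    """Runs one or more Validation Definitions and collects their results."""
    validation_definitions: list

    def run(self, batch_value) -> list:
        return [vd.run(batch_value) for vd in self.validation_definitions]


# Hypothetical usage: patient records split into monthly batches.
source = DataSource("clinic_db")
patients = source.add_asset(DataAsset("patients", [
    {"month": "2024-01", "age": 34},
    {"month": "2024-01", "age": 58},
    {"month": "2024-02", "age": None},
]))
by_month = BatchDefinition(patients, key="month")
suite = ExpectationSuite("patients_suite", [
    Expectation("age is not null",
                lambda rows: all(r.get("age") is not None for r in rows)),
])
checkpoint = Checkpoint([ValidationDefinition(by_month, suite)])

print(checkpoint.run("2024-01")[0].success)  # True
print(checkpoint.run("2024-02")[0].success)  # False
```

In GX Core itself these objects are created and stored through a Data Context; the toy model skips that layer entirely.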

Time to demonstrate!

Q&A

Resources

GX resources
GX docs
Great Expectations website
Try GX Cloud
GX Core GitHub repo

Demo and other code resources
Tutorial series on integrating GX into your data pipeline (maintained and growing)
Code used for the demonstration (not currently maintained)

Data quality, MLOps, and Great Expectations

By Rachel House
