Data Quality: Validating the Data Supply Chain

Rachel House

Cville Data Science Meetup, January 2024

What is data quality and why do we care about it?

Data is generally deemed high quality if:

- it is fit for its intended uses in operations, planning, and decision making.
- it correctly represents the real-world construct to which it refers.

The Real-World Cost of Bad Data

- $3.1 Trillion: annual cost to the US economy (2016, IBM)
- $12.9 Million: annual cost to an organization (2017, Gartner)

Data Quality Wall of Shame

- Unity Technologies: $110M
- Samsung Securities: $300M
- Amsterdam City Council: €188M
- Public Health England: 50k cases

High-Quality Data Drives

- Informed Decisions
- Operational Efficiency
- Trust
- Regulatory Compliance
- Financial Accuracy
- Customer Satisfaction
- Scalability
- Competitive Advantage

High Quality Data underpins Machine Learning, Data Science, and Artificial Intelligence.

Data-centric AI

Systematically engineering the data needed to build successful AI systems.

Dimensions of Data Quality

The Big 6

- Accuracy
- Completeness
- Validity
- Consistency
- Uniqueness
- Timeliness

Completeness

All required data values are present.

Example issue: missing values.
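A completeness check can be sketched in plain Python by measuring, for each required column, the fraction of rows that actually carry a value. This is a minimal sketch, assuming records arrive as a list of dictionaries and that `None` or a blank string counts as missing; the sample records and the `completeness_report` helper are hypothetical.

```python
from typing import Any

# Hypothetical sample records: "email" is missing in two of the three rows.
records: list[dict[str, Any]] = [
    {"id": 1, "name": "Ada", "email": "ada@example.com"},
    {"id": 2, "name": "Grace", "email": None},
    {"id": 3, "name": "Alan", "email": ""},
]

def is_missing(value: Any) -> bool:
    """Treat None and blank strings as missing (an assumption; adjust per dataset)."""
    return value is None or (isinstance(value, str) and value.strip() == "")

def completeness_report(rows: list[dict[str, Any]], required: list[str]) -> dict[str, float]:
    """Hypothetical helper: fraction of rows with a present value for each required column."""
    total = len(rows)
    report = {}
    for column in required:
        # row.get() returns None for an absent key, so absent keys also count as missing.
        present = sum(1 for row in rows if not is_missing(row.get(column)))
        report[column] = present / total if total else 1.0
    return report

print(completeness_report(records, ["id", "name", "email"]))
```

Here `id` and `name` score 1.0 while `email` scores about 0.33; a team would typically compare each score against a threshold agreed with the data's consumers.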

Uniqueness

Each distinct value or record appears only once; there are no duplicates.

Example issue: non-unique (duplicated) values.
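Uniqueness is usually checked against a key that should identify each record. A minimal sketch, assuming a hypothetical `customer_id` key and list-of-dictionary records, that reports duplicated keys and a simple uniqueness ratio:

```python
from collections import Counter

# Hypothetical customer records: customer_id 102 appears twice.
customers = [
    {"customer_id": 101, "name": "Ada"},
    {"customer_id": 102, "name": "Grace"},
    {"customer_id": 102, "name": "Grace H."},
    {"customer_id": 103, "name": "Alan"},
]

def duplicate_keys(rows, key):
    """Return each key value that appears more than once, with its count."""
    counts = Counter(row[key] for row in rows)
    return {value: n for value, n in counts.items() if n > 1}

def uniqueness_ratio(rows, key):
    """Distinct key values divided by total rows; 1.0 means every key is unique."""
    return len({row[key] for row in rows}) / len(rows) if rows else 1.0

print(duplicate_keys(customers, "customer_id"))    # {102: 2}
print(uniqueness_ratio(customers, "customer_id"))  # 0.75
```

Note that the two 102 rows differ in `name`, so an exact-row comparison would miss them; checking the key is what surfaces the duplicate.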

Timeliness

Data represent reality as of the required point in time.

Example issue: late data.
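One common way to operationalize timeliness is to compare the newest record's timestamp with the time of the check. A minimal sketch, assuming a hypothetical feed that should land at least once every 24 hours; the `is_timely` helper and the timestamps are illustrative.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

def is_timely(latest: datetime, max_lag: timedelta, now: Optional[datetime] = None) -> bool:
    """Hypothetical helper: True if the newest record is no older than max_lag at check time."""
    if now is None:
        now = datetime.now(timezone.utc)
    return now - latest <= max_lag

# Hypothetical load timestamps for a feed expected at least once a day.
loaded_at = [
    datetime(2024, 1, 14, 6, 0, tzinfo=timezone.utc),
    datetime(2024, 1, 15, 6, 0, tzinfo=timezone.utc),
]
check_time = datetime(2024, 1, 16, 9, 0, tzinfo=timezone.utc)  # fixed so the example is reproducible
latest = max(loaded_at)
print(check_time - latest)                                      # 1 day, 3:00:00
print(is_timely(latest, timedelta(hours=24), now=check_time))   # False
```

Using timezone-aware timestamps avoids false alarms when sources and checks run in different time zones.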

Consistency

Data characteristics are the same across instances.

Example issue: inconsistent values.
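Consistency checks often compare the same entity as recorded in two places. A minimal sketch, assuming two hypothetical systems (a CRM and a billing system) that both store a customer's country code; the data and the `inconsistencies` helper are illustrative.

```python
# Hypothetical snapshots of the same customers' country codes in two systems.
crm = {101: "US", 102: "CA", 103: "GB"}
billing = {101: "US", 102: "CAN", 103: "GB", 104: "DE"}

def inconsistencies(a: dict, b: dict) -> dict:
    """Hypothetical helper: keys present in both sources whose values disagree, with both values."""
    return {key: (a[key], b[key]) for key in sorted(a.keys() & b.keys()) if a[key] != b[key]}

print(inconsistencies(crm, billing))  # {102: ('CA', 'CAN')}
```

Customer 102 is the same country encoded two ways (two-letter vs. three-letter code), a typical consistency problem. Customer 104 exists only in billing; that gap is a completeness question and is deliberately not reported here.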

Validity

Data conforms to the format, type, or range of its definition.

Example issue: invalid values.
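Validity covers the three aspects named in the definition: type, range, and format. A minimal sketch, assuming a hypothetical order record whose `quantity` must be an integer from 1 to 1000 and whose `email` must match a deliberately simple pattern:

```python
import re

# Deliberately simple email pattern; real-world email validation is more involved.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def validate_order(row: dict) -> list[str]:
    """Return validity violations for one hypothetical order record."""
    errors = []
    quantity = row.get("quantity")
    # Type check first (bool is a subclass of int, so exclude it explicitly).
    if isinstance(quantity, bool) or not isinstance(quantity, int):
        errors.append("quantity: expected int")
    # Range check only makes sense once the type is right.
    elif not 1 <= quantity <= 1000:
        errors.append("quantity: out of range 1-1000")
    # Format check on the email string.
    email = row.get("email")
    if not isinstance(email, str) or not EMAIL_PATTERN.match(email):
        errors.append("email: invalid format")
    return errors

orders = [
    {"quantity": 3, "email": "ada@example.com"},
    {"quantity": 0, "email": "grace@example"},
    {"quantity": "2", "email": "alan@example.org"},
]
for order in orders:
    print(validate_order(order))
```

Returning a list of messages rather than raising on the first problem lets one pass report every violation in a record.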

Accuracy

Data values are as close as possible to real-world values.

Example issue: inaccurate values.
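Unlike validity, accuracy can only be measured against something trusted that reflects the real world. A minimal sketch, assuming a hypothetical trusted reference instrument and an illustrative tolerance of 0.5 degrees:

```python
import math

# Hypothetical: recorded sensor temperatures vs. a trusted reference instrument.
recorded = {"s1": 21.4, "s2": 19.8, "s3": 25.9}
reference = {"s1": 21.5, "s2": 19.7, "s3": 22.0}

def inaccurate(recorded: dict, reference: dict, abs_tol: float = 0.5) -> list:
    """Hypothetical helper: IDs whose recorded value differs from the reference by more than abs_tol."""
    return sorted(
        key for key, value in recorded.items()
        if key in reference and not math.isclose(value, reference[key], abs_tol=abs_tol)
    )

print(inaccurate(recorded, reference))  # ['s3']
```

A reading of 25.9 is perfectly valid (right type, plausible range) yet inaccurate, which is why the two dimensions are tracked separately.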

Related Data Observability Terms

- Freshness: Is the data recent? (related dimensions: Timeliness)
- Distribution: Is the data within accepted ranges? Is it complete? (related dimensions: Completeness, Accuracy, Validity)
- Volume: Has all the data arrived? (related dimensions: Completeness, Timeliness, Consistency)
- Schema: What is the schema, and has it changed? (related dimensions: Validity, Consistency)
- Lineage: What are the upstream sources and downstream assets impacted by this data? Who generates the data, and who relies on it for decision making?

Data Quality Fundamentals, Barr Moses, Lior Gavish, Molly Vorwerck, O'Reilly Media, Inc., 2022
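Schema and Volume lend themselves to simple automated monitors. A minimal sketch, assuming the expected schema is stored as a hypothetical column-to-type mapping and that recent daily row counts are available; the helper names, data, and 3-standard-deviation threshold are illustrative assumptions, not taken from the book.

```python
import statistics

def schema_changes(expected: dict[str, str], observed: dict[str, str]) -> dict[str, list[str]]:
    """Hypothetical helper: report added, removed, and retyped columns."""
    return {
        "added": sorted(observed.keys() - expected.keys()),
        "removed": sorted(expected.keys() - observed.keys()),
        "retyped": sorted(c for c in expected.keys() & observed.keys() if expected[c] != observed[c]),
    }

def volume_is_anomalous(history: list[int], today: int, z_threshold: float = 3.0) -> bool:
    """Hypothetical helper: flag today's row count if it is more than z_threshold
    sample standard deviations away from the historical mean (needs >= 2 points)."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return today != mean
    return abs(today - mean) / stdev > z_threshold

expected = {"order_id": "int", "amount": "float", "created_at": "timestamp"}
observed = {"order_id": "int", "amount": "str", "created_at": "timestamp", "coupon": "str"}
print(schema_changes(expected, observed))
# {'added': ['coupon'], 'removed': [], 'retyped': ['amount']}

daily_rows = [10_120, 9_980, 10_050, 10_210, 9_890]
print(volume_is_anomalous(daily_rows, today=4_300))  # True
```

A z-score is only one choice of anomaly rule; it assumes the history is roughly stable, so seasonal data (e.g., weekday vs. weekend loads) would need a history per season.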

Data quality is context-dependent and multidimensional.

The Data Supply Chain

Information is manufactured from raw data.

Data is a resource.

Data as a Product: Example Supply Chains

- Tangible products: material → supplier → manufacturer → distributor → retailer → consumer
- Data products: raw data → data lake → warehouse → dashboard → data analyst → informational report → decision maker

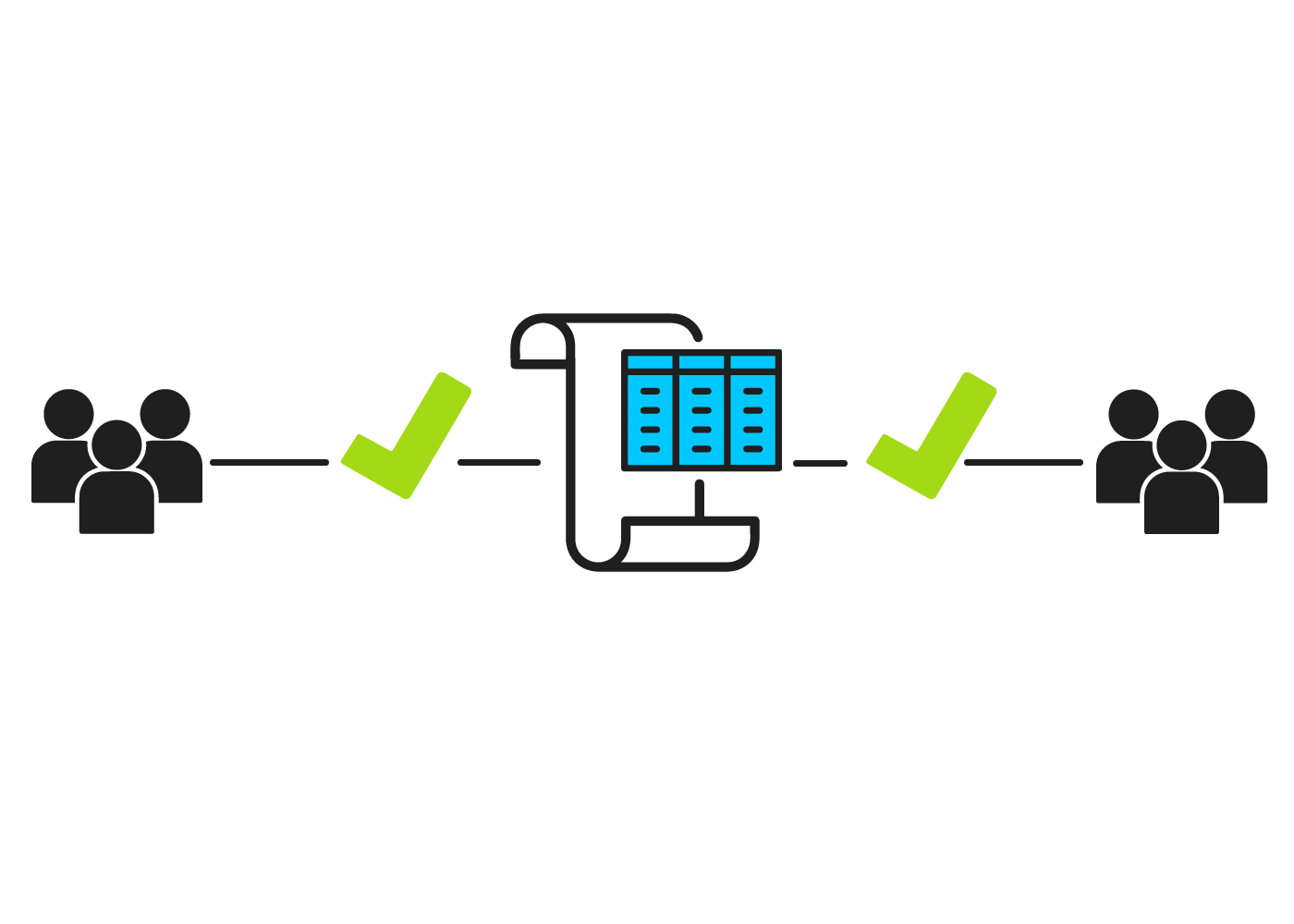

Source Data Store

Data Pipeline

Destination Data Store

Transformation

Ingestion

Storage

data in transit

Data Stores

data at rest

Pipeline

User

Source Data

Pipeline

Pipeline

Upstream

Downstream

Target Data

Upstream

Downstream
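Validating the data supply chain means checking data at each hop so problems are caught before they flow downstream. A minimal sketch, assuming hypothetical `ingest` and `transform` stages and a hypothetical `run_stage` gate that halts the pipeline when any check on a stage's output fails:

```python
from typing import Callable

Record = dict
Check = Callable[[list[Record]], list[str]]

def require_columns(*columns: str) -> Check:
    """Hypothetical check factory: report rows where any listed column is missing or None."""
    def check(rows: list[Record]) -> list[str]:
        return [f"row {i}: missing {c}" for i, row in enumerate(rows) for c in columns if row.get(c) is None]
    return check

def run_stage(name: str, rows: list[Record], checks: list[Check]) -> list[Record]:
    """Run all checks on a stage's output; raise so bad data never moves downstream."""
    problems = [p for check in checks for p in check(rows)]
    if problems:
        raise ValueError(f"{name} failed validation: {problems}")
    return rows

def ingest() -> list[Record]:
    # Stand-in for reading from a source data store.
    return [{"id": 1, "amount_cents": 1250}, {"id": 2, "amount_cents": 499}]

def transform(rows: list[Record]) -> list[Record]:
    # Example transformation: convert cents to dollars.
    return [{"id": r["id"], "amount_usd": r["amount_cents"] / 100} for r in rows]

raw = run_stage("ingestion", ingest(), [require_columns("id", "amount_cents")])
clean = run_stage("transformation", transform(raw), [require_columns("id", "amount_usd")])
destination: list[Record] = []   # stand-in for a destination data store
destination.extend(clean)        # storage happens only after both gates pass
print(destination)
```

Failing loudly at the first bad stage is a design choice; some teams instead quarantine failing rows and let the rest continue.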

Data Quality Across the Organization

Data quality dimensions vary in definition and importance between stakeholders.

- Stakeholders: Data Developer, Data Analyst, Data Scientist, Executive
- Dimensions and observability terms they weigh differently: schema, completeness, timeliness, validity, distribution, lineage, freshness, accuracy, consistency

Data quality is a collaborative effort.

- Data Subject Matter Expertise 🤓
- Business Subject Matter Expertise 🤠

These groups connect through common languages to express data quality and shared, human-friendly artifacts, leading to data quality democratization 🥸 and trust in the organization's data.

Assessing Data Quality

- Data Profiling
- Data Testing
- Anomaly Detection
- Data Contracts
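Data profiling summarizes each column so problems become visible before any rules are written. Tools automate this at scale; the sketch below only illustrates the kind of per-column statistics involved, assuming a hypothetical table stored column-wise as Python lists and a hypothetical `profile_column` helper.

```python
from collections import Counter

def profile_column(values: list) -> dict:
    """Hypothetical helper: counts, missingness, distinct values, most common value, and numeric min/max."""
    present = [v for v in values if v is not None]
    summary = {
        "count": len(values),
        "missing": len(values) - len(present),
        "distinct": len(set(present)),
        "most_common": Counter(present).most_common(1)[0][0] if present else None,
    }
    # Only report min/max when every present value is numeric.
    if present and all(isinstance(v, (int, float)) for v in present):
        summary["min"] = min(present)
        summary["max"] = max(present)
    return summary

# Hypothetical table stored column-wise.
table = {
    "age": [34, 29, None, 41, 29],
    "state": ["VA", "VA", "MD", None, "VA"],
}
for name, column in table.items():
    print(name, profile_column(column))
```

A profile like this is often the starting point for tests: an unexpected `missing` count or `max` suggests which checks to write next.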

Open Core Python Tooling for Data Quality

ydata-profiling

github.com/ydataai/ydata-profiling

profiling

- Formerly pandas-profiling
- "One line data quality profiling and EDA"
- Produces human-friendly, shareable reports
- Pandas and Spark dataframe support

great-expectations

github.com/great-expectations/great_expectations

testing & validation

- Expectations: unit tests for data

- Library of common, core Expectations

- Ability to create custom Expectations

- User-friendly validation results hosted in shared "Data Docs" interface

- Support for pandas, Spark, Snowflake, Postgres, and other SQL data sources
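The idea of Expectations as unit tests for data can be illustrated without the library. The sketch below is plain Python and does not use the great_expectations API: each hypothetical expectation factory (`not_null`, `between`) returns a function producing a small result record, and a suite run collects the results, loosely mirroring a pass/fail validation report.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Result:
    expectation: str
    success: bool
    unexpected_count: int

Expectation = Callable[[list[dict]], Result]

def not_null(column: str) -> Expectation:
    """Hypothetical expectation: every row has a non-null value in `column`."""
    def run(rows: list[dict]) -> Result:
        bad = sum(1 for r in rows if r.get(column) is None)
        return Result(f"{column} not null", bad == 0, bad)
    return run

def between(column: str, low: float, high: float) -> Expectation:
    """Hypothetical expectation: non-null values of `column` fall within [low, high]."""
    def run(rows: list[dict]) -> Result:
        bad = sum(1 for r in rows if r.get(column) is not None and not low <= r[column] <= high)
        return Result(f"{column} between {low} and {high}", bad == 0, bad)
    return run

def validate(rows: list[dict], suite: list[Expectation]) -> list[Result]:
    """Run every expectation in the suite and collect the results."""
    return [expectation(rows) for expectation in suite]

trips = [{"passengers": 1}, {"passengers": 0}, {"passengers": None}, {"passengers": 4}]
suite = [not_null("passengers"), between("passengers", 1, 6)]
for result in validate(trips, suite):
    status = "PASS" if result.success else "FAIL"
    print(f"{status} {result.expectation} (unexpected: {result.unexpected_count})")
```

Keeping null handling out of `between` means each failure is reported once, by the expectation responsible for it.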

pandera

github.com/unionai-oss/pandera

testing & validation

- Variety of supported dataframe formats: pandas, pyspark, dask, modin, fugue

- Schema definition and validation for data

- Testing for data transformation functions

- Data science and ML focus
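Testing a data transformation function means checking that its output still satisfies a schema. pandera provides decorators such as `check_output` for this; the sketch below shows the same idea in plain Python with a hypothetical `validate_output` decorator and a hypothetical `add_bmi` transformation, not pandera's API.

```python
import functools
from typing import Callable

def validate_output(schema: dict[str, type]) -> Callable:
    """Hypothetical decorator: check that every row returned by a transformation matches `schema`."""
    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            rows = func(*args, **kwargs)
            for i, row in enumerate(rows):
                # Column names must match exactly.
                if set(row) != set(schema):
                    raise TypeError(f"{func.__name__} row {i}: columns {sorted(row)} != {sorted(schema)}")
                # Each value must have the declared type.
                for column, expected_type in schema.items():
                    if not isinstance(row[column], expected_type):
                        raise TypeError(f"{func.__name__} row {i}: {column} is not {expected_type.__name__}")
            return rows
        return wrapper
    return decorator

@validate_output({"name": str, "bmi": float})
def add_bmi(people: list[dict]) -> list[dict]:
    """Transformation under test: derive body-mass index from weight (kg) and height (m)."""
    return [{"name": p["name"], "bmi": p["weight_kg"] / p["height_m"] ** 2} for p in people]

print(add_bmi([{"name": "Ada", "weight_kg": 60.0, "height_m": 1.65}]))
```

Because the check wraps the function itself, every call (in tests or in production) is validated, not just a sample.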

pydeequ

github.com/awslabs/python-deequ

testing & validation

- Python API for Deequ (built in Scala, runs on Spark)

- "Unit tests for data"

- Built-in anomaly detection

- Measure quality across very large datasets
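Anomaly detection on data quality metrics compares a newly computed metric with its history. A plain-Python sketch of one such idea, a relative rate-of-change check; this is not the pydeequ API, and the `rate_of_change_anomaly` helper, its 1.5x / 0.5x bounds, and the metric history are hypothetical.

```python
def rate_of_change_anomaly(previous: float, current: float,
                           max_increase: float = 1.5, max_decrease: float = 0.5) -> bool:
    """Hypothetical helper: flag `current` if it grows beyond max_increase x
    or shrinks below max_decrease x the previous value."""
    if previous == 0:
        return current != 0
    ratio = current / previous
    return ratio > max_increase or ratio < max_decrease

# Hypothetical history of a stored metric (e.g., daily row counts).
history = [1000, 1040, 990, 1010, 420]
flags = [rate_of_change_anomaly(prev, cur) for prev, cur in zip(history, history[1:])]
print(flags)  # [False, False, False, True]
```

Comparing only consecutive values keeps the rule simple but sensitive to one noisy day; comparing against a longer window trades responsiveness for stability.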

Live Demo!

In Summary

1. Data quality has a direct effect on an organization's success.
2. Data-centric AI is an emerging field focused on the data models learn from rather than on model algorithms.
3. Raw data is a resource transformed into information via the data supply chain.
4. Data quality is multidimensional and context-dependent.
5. Achieving high data quality within an organization is a collaborative effort.

Thank You!

Data Quality: Validating the Data Supply Chain

By Rachel House


Data fuels the modern world. Organizations increasingly leverage data to build complex ecosystems, infrastructure, and products. Whether your interest is analytics-informed decision making, training the latest model, building a data-intensive application, or something in between, the quality of your data is fundamental to your outcomes. In this introductory talk, we’ll discuss the importance of data quality, common data quality dimensions, data quality across the organization, mechanisms to assess data quality, and open source Python tooling for data quality.
