ENPM809V

Dynamic Memory Vulnerabilities

Today's Lesson

- Objective: Memory Allocator/Heap Vulnerabilities

- Learn about different areas in memory

- The Dangers of Heap

- Memory Allocators and Their Vulnerabilities

- Exploiting Memory Allocators

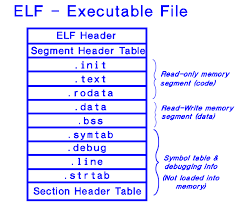

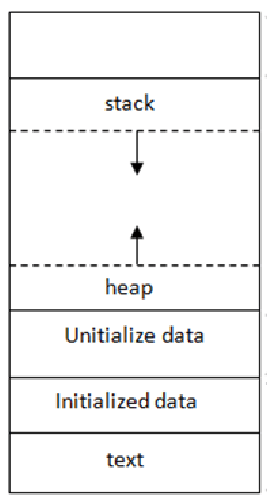

Review: ELF Regions of Memory

- ELF .text: where the code lives

- ELF .plt: where library function stubs live

- ELF .got: where pointers to imported symbols live

- ELF .bss: used for uninitialized global writable data (such as global arrays without initial values)

- ELF .data: used for pre-initialized global writable data (such as global arrays with initial values)

- ELF .rodata: used for global read-only data (such as string constants)

- stack: local variables, temporary storage, call stack metadata

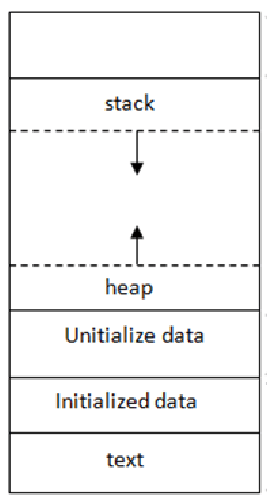

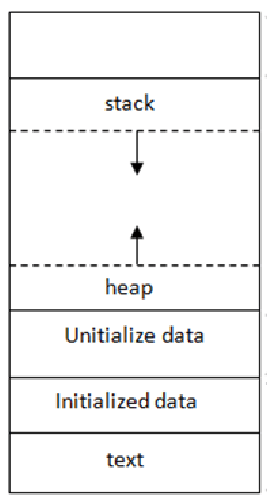

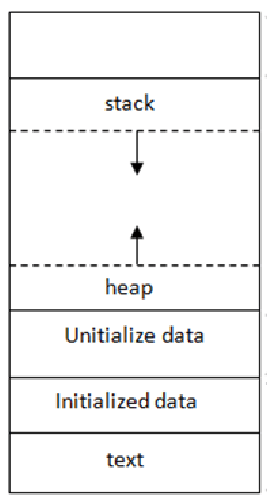

Stack Vs Heap

- stack: local variables, temporary storage, call stack metadata

- heap: long-lasting dynamically allocated memory

Stack data lives only for the duration of a function call, while heap memory persists until it is freed.

Process memory layout, from high addresses to low:

- Kernel Space

- Stack

- Memory Mapping Region

- Heap

- BSS Segment

- Data Section

- Text Segment (ELF)

- ...

Dynamic Memory Handling

The Basics

- Dynamic memory is synonymous with the heap of a program

- C programmers use functions like malloc and realloc to allocate memory while the program is running.

- Not possible with the stack.

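#include <stdio.h>
#include <stdlib.h>

int main()
{
    // Initial variables declared
    char *buffer = NULL;
    int buffSize = 0;

    // Getting size of the buffer
    printf("Give me size of buffer: ");
    if (scanf("%d", &buffSize) != 1 || buffSize <= 0)
        return 1;

    // Allocating buffer: keep the pointer that malloc returns
    buffer = malloc(buffSize);
    if (buffer == NULL)
        return 1;

    // Get input and print it.
    // Note: "%s" is unbounded, so long input can still overflow the buffer.
    printf("Input: ");
    scanf("%s", buffer);
    printf("%s\n", buffer);

    free(buffer);
    return 0;
}

Why is this helpful?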

- The stack can't solve all of our memory problems

- What if:

- You don't know how much memory you need to allocate the data?

- You want to access an object beyond the context of a single function?

- Your data structures grow over time?

- You don't want a structure to be de-allocated when a function returns?
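The "data structures grow over time" case can be sketched with realloc. This is a minimal illustration, not code from the lecture: the names int_vec, vec_push, and vec_free are hypothetical, and the doubling growth policy is an assumption chosen for simplicity.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical growable array of ints; not part of any library. */
typedef struct {
    int *data;   /* heap buffer, NULL while empty */
    size_t len;  /* number of elements in use */
    size_t cap;  /* number of elements allocated */
} int_vec;

/* Append value, doubling the heap buffer when it is full.
   Returns 0 on success, -1 if realloc fails (old buffer stays valid). */
int vec_push(int_vec *v, int value)
{
    assert(v != NULL);
    if (v->len == v->cap) {
        size_t new_cap = v->cap ? v->cap * 2 : 4;
        int *grown = realloc(v->data, new_cap * sizeof *grown);
        if (grown == NULL)
            return -1;
        v->data = grown;
        v->cap = new_cap;
    }
    v->data[v->len++] = value;
    return 0;
}

/* Release the buffer and reset the struct so stale pointers are not reused. */
void vec_free(int_vec *v)
{
    assert(v != NULL);
    free(v->data);
    v->data = NULL;
    v->len = 0;
    v->cap = 0;
}
```

The vector outlives the function that created it and grows on demand, which a fixed-size stack array cannot do.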

Caveats

- All dynamically allocated memory must be freed by the program

- Any memory not freed is considered leaked

- Dynamically allocated memory is referenced by pointers

- Meaning we can access memory that has been freed.

Heap Implementations

- There are many different implementations that use different algorithms

- Goal is to either make it faster or more secure

- They all have some constant concepts:

- Chunks - Allocations of the heap

- Bins - Linked lists of free chunks.

- They make it faster to reallocate memory of the same size

- Each bin holds chunks of the same size (or size range)

Goal of Heap Implementations

- Per Doug Lea, the original malloc() author:

- Maximizing Compatibility

- Maximizing Portability

- Minimizing Space

- Minimizing Time

- Maximizing Tunability

- Maximizing Locality

- Maximizing Error Detection

Key Terms for Dynamic Memory

- Arena:

- Allocated memory space from system

- Each thread has an arena

- Main arena is the arena for the main thread

- Heap

- Single contiguous memory region, subdivided into chunks

- Chunks

- Allocated sections of the heap

- Bins

- Linked Lists of free chunks (based on a chunk size)

- Bins have chunks of the same size

- Different kinds of bins - fast bins, small bins, unsorted bins, large bins, etc.

What is a chunk?

Common Dynamic Allocators

- malloc() - allocate some memory

- Based on ptmalloc

- free() - free an allocated memory chunk

- realloc() - change the size of an allocated memory chunk

- calloc() - allocate and zero-out memory chunk.

For Linux, use the GNU Libc implementation

Ideas for Getting Heap Memory

- mmap()

- Dynamically allocate pages of memory (4096 bytes)

- Requires Kernel Involvement

- System call

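We could build a dynamic allocator directly on top of mmap(), but this is not ideal: every allocation would need a system call and would consume at least one full page.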

Various Dynamic Allocators Implementations

- dlmalloc() - First dynamic allocator released by Doug Lea

- ptmalloc() - POSIX Thread aware fork of dlmalloc

- jemalloc() - FreeBSD, Firefox, and Android

- Segment Heap and NT Heap - Windows

- Kernel Allocators

- kmalloc

Let's Focus on ptmalloc

- ptmalloc() does not use mmap for small allocations

- Uses the GNU Allocator in Libc

- Utilizes the Data Segment to dynamically allocate memory

- Historic Oddity

- Managed by the brk and sbrk system calls

Note: Only for small allocations. For large allocations, it uses mmap.

What is mmap?

mmap is a Linux system call that creates a new mapping in the virtual address space of the calling process. It has six parameters:

- addr - address for the memory to be located (NULL means let the kernel choose)

- length - size of allocation (rounded up to the nearest page)

- protection - the kind of memory protections that the page should have (read, write, executable)

- flags - Generally determines visibility to other processes

- MAP_SHARED, MAP_ANON, etc.

- file descriptor - if we are mapping based on memory in file descriptor

- offset - offset into the file.

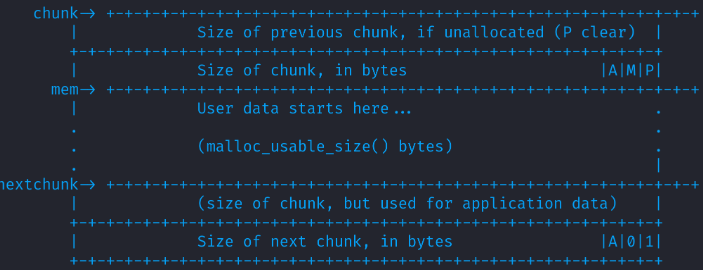

Metadata

Per Chunk Metadata

- Also generated by ptmalloc

- Tracks addresses that are allocated

- malloc(x) returns the memory address, but ptmalloc tracks the size (and previous size)

- prev_size is not used by tcache

- the lowest three bits of size are flags

- Bit 0: PREV_INUSE

- Bit 1: IS_MMAPPED

- Bit 2: NON_MAIN_ARENA
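Because chunk sizes are always multiples of the alignment, the low bits of the size field are free to carry the three flags. A minimal sketch of decoding a raw size field; the helper names chunk_size and chunk_has_flag are hypothetical, while the flag values follow the bit positions listed above.

```c
#include <assert.h>
#include <stddef.h>

/* Flag bits stored in the low three bits of a chunk's size field. */
#define PREV_INUSE     0x1UL
#define IS_MMAPPED     0x2UL
#define NON_MAIN_ARENA 0x4UL
#define SIZE_FLAG_MASK (PREV_INUSE | IS_MMAPPED | NON_MAIN_ARENA)

/* Real chunk size with the flag bits stripped. */
unsigned long chunk_size(unsigned long size_field)
{
    return size_field & ~SIZE_FLAG_MASK;
}

/* Nonzero if `flag` is set in the raw size field. */
int chunk_has_flag(unsigned long size_field, unsigned long flag)
{
    assert((flag & ~SIZE_FLAG_MASK) == 0);
    return (size_field & flag) != 0;
}
```

For example, a size field of 0x21 describes a 0x20-byte chunk whose previous chunk is in use.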

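unsigned long mchunk_prev_size;

unsigned long mchunk_size;

Dynamically Allocated Memory Chunk

- chunk_addr: start of the chunk (the prev_size field)

- mem_addr: start of the user data, right after size; this is the address malloc returns

Layout of two adjacent chunks in memory:

prev_size | size | a[0] | a[1] | prev_size | size | b[0] | b[1]

chunk1: int *a = malloc(0x8)

chunk2: int *b = malloc(0x8)

Per Chunk Metadata Overlapping

Note: Only applies if PREV_INUSE flag is set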
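The relationship between chunk_addr and mem_addr, and the effect of the overlap, can be written as simple arithmetic. This is a sketch under the assumption of a 64-bit target where both header fields are 8 bytes; the helper names mem_to_chunk and usable_bytes are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define SIZE_SZ 8u   /* assumed sizeof(size_t) on a 64-bit target */

/* Address of the chunk header, given the pointer malloc returned. */
uintptr_t mem_to_chunk(uintptr_t mem_addr)
{
    assert(mem_addr >= 2 * SIZE_SZ);
    return mem_addr - 2 * SIZE_SZ;   /* step back over prev_size and size */
}

/* Bytes the owner of an in-use chunk may write: the chunk minus its
   two header fields, plus the next chunk's prev_size field, which is
   lent to this chunk while it is in use. */
unsigned long usable_bytes(unsigned long chunk_size)
{
    assert(chunk_size >= 4 * SIZE_SZ);
    return chunk_size - SIZE_SZ;
}
```

Under these assumptions a 0x20-byte chunk gives its owner 24 writable bytes rather than 16, because the following chunk's prev_size slot is part of the usable area.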


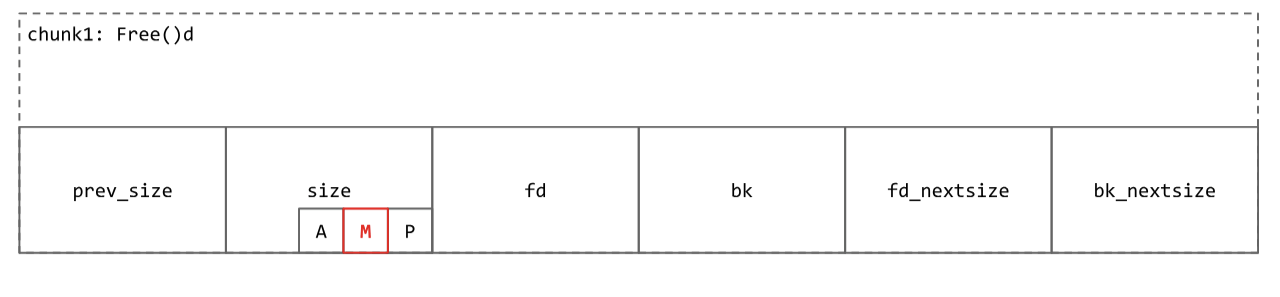

What about being freed?

Allocated chunk:

- unsigned long mchunk_prev_size;

- unsigned long mchunk_size;

- Dynamically Allocated Memory Chunk

Freed chunk:

- unsigned long mchunk_prev_size;

- unsigned long mchunk_size;

- Cache-Specific Metadata

How do I know if my memory was allocated by mmap?

Chunks allocated by mmap are marked via the M bit (IS_MMAPPED).

From pwn.college

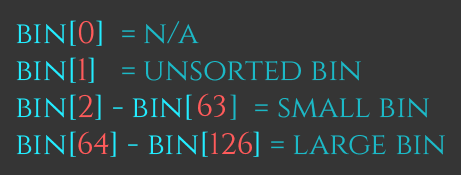

Bins

What are they?

- Bins are lists of freed chunks of similar size, kept so that they can be quickly reused.

- They are put in as the very last step if all else fails during the free process.

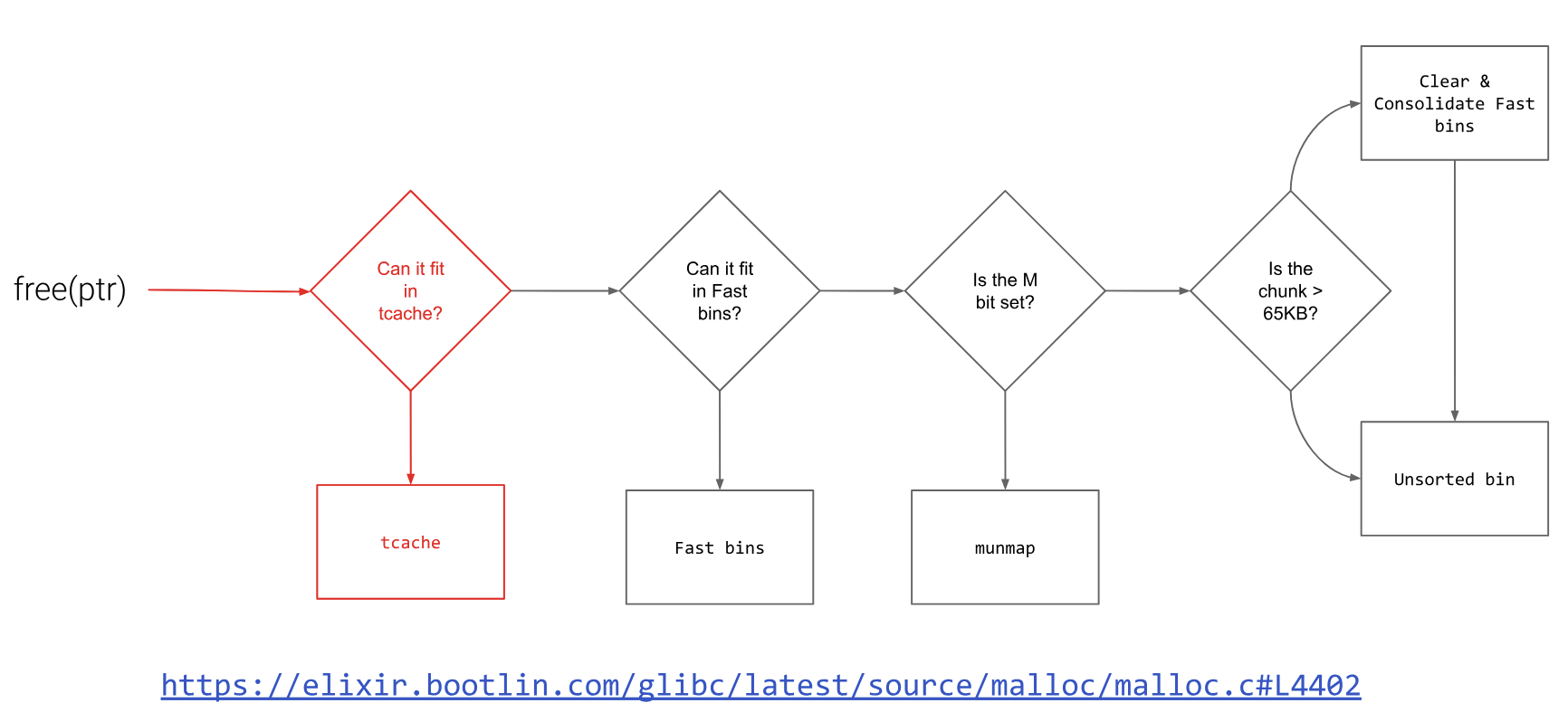

What are the steps for freeing?

- If the chunk has the M bit set in the metadata, the allocation was made off-heap and should be released with munmap.

- Otherwise, if the chunk before this one is free, the chunk is merged backwards to create a bigger free chunk.

- Similarly, if the chunk after this one is free, the chunk is merged forwards to create a bigger free chunk.

- If this potentially-larger chunk borders the “top” of the heap, the whole chunk is absorbed into the end of the heap, rather than stored in a “bin”.

- Otherwise, the chunk is marked as free and placed in an appropriate bin.
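The steps above can be sketched as a small decision function. This is a simplified model of only the list above, not glibc's code: the type and function names (free_request, free_outcome, plan_free) are hypothetical, and faster paths such as tcache and fast bins are deliberately left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, simplified view of the facts free() looks at. */
typedef struct {
    bool is_mmapped;    /* M bit set in the chunk's size field */
    bool prev_is_free;  /* chunk before this one is free */
    bool next_is_free;  /* chunk after this one is free */
    bool borders_top;   /* (merged) chunk touches the top of the heap */
} free_request;

typedef enum {
    FREE_MUNMAP,
    FREE_ABSORB_INTO_TOP,
    FREE_PLACE_IN_BIN
} free_outcome;

/* Walk the steps in order; *merges counts backward/forward merges done. */
free_outcome plan_free(const free_request *req, int *merges)
{
    assert(req != NULL && merges != NULL);
    *merges = 0;
    if (req->is_mmapped)
        return FREE_MUNMAP;          /* off-heap: hand back to the kernel */
    if (req->prev_is_free)
        (*merges)++;                 /* merge backwards */
    if (req->next_is_free)
        (*merges)++;                 /* merge forwards */
    if (req->borders_top)
        return FREE_ABSORB_INTO_TOP; /* becomes part of the heap's end */
    return FREE_PLACE_IN_BIN;        /* last resort: store in a bin */
}
```

Placing the chunk in a bin is the final fallback, which matches the earlier point that bins are used only if all else fails.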

Implementations

- Multiple Implementations

- Small Bins, Large Bins, Fast Bins, Tcache (per-thread)

- Each have their own benefits and drawbacks

- Generally implemented as a linked list

Unoptimized Bins

- Unsorted, small, and large bins are the unoptimized version of the heap manager's binning system.

- Each contains freed chunks of various sizes

- Simple implementation; bins are shared by all threads that use the same arena

Small/Large Bins

- Small bins

- Stores up to 512/1024 byte chunks (32/64 bit systems)

- 62 total bins

- Large Bins

- Stores chunks greater than 512/1024 bytes (32/64 bit systems)

- 63 total bins

- Free chunks in small/large bins can be merged with neighboring free chunks to form larger chunks

Figure: Bin # points to a doubly linked list of two free chunks. Each chunk holds: prev_size, Chunk Size, FD Pointer, BK Pointer, Unused Space.

Small/Large Bins

- This is incredibly useful because we have a pre-ordered

- Differences:

- Small bins are binned based on exact size

- Large bins are based on size range

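The difference between exact-size and size-range binning can be sketched as a "would these two chunks share a bin?" check. This is an illustrative model, not glibc's indexing macros: the 1024-byte limit is the 64-bit figure from the slide above, while the 64-byte range width and the helper names is_small and same_bin are assumptions made for the example.

```c
#include <assert.h>

/* Assumed 64-bit layout: small bins cover chunk sizes below 1024 bytes. */
#define SMALL_BIN_LIMIT 1024UL
#define LARGE_BIN_RANGE 64UL   /* illustrative width of one large-bin range */

int is_small(unsigned long chunk_size)
{
    return chunk_size < SMALL_BIN_LIMIT;
}

/* Nonzero if two free chunks of these sizes would land in the same bin. */
int same_bin(unsigned long a, unsigned long b)
{
    assert(a >= 32 && b >= 32);
    if (is_small(a) != is_small(b))
        return 0;
    if (is_small(a))
        return a == b;                               /* exact size */
    return a / LARGE_BIN_RANGE == b / LARGE_BIN_RANGE; /* size range */
}
```

In this model two small chunks share a bin only when their sizes are identical, while large chunks of 1024 and 1040 bytes share one. A large bin therefore has to be searched for a good fit, whereas any chunk in a small bin will do.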

Figure: small bin example. Bin # points to two free chunks, both with Chunk Size = 10. Each chunk holds: prev_size, Chunk Size, FD Pointer, BK Pointer, Unused Space.

Unsorted Bin

- Optimization by the heap manager

- Based on the observation that many frees are followed by allocations of similar sizes

- Saves time from putting chunks in the correct bin

Figure: unsorted bin example. Bin # points to two free chunks of different sizes (Chunk Size = 10 and Chunk Size = 64). Each chunk holds: prev_size, Chunk Size, FD Pointer, BK Pointer, Unused Space.

Fast Bins

- A further optimization of the previous binning strategies

- Keeps recently released small chunks on a fast turnaround queue.

- Only 10 such fast bins of sizes 16 through 80 (incrementing by 8).

- Chunks in fast bins are not merged with their neighbors when freed

- Cons: fast-bin chunks are not truly freed or merged with other chunks until the fast bins are consolidated

Figure: fast bin example. Bin # points to two free chunks (Chunk Size = 10 and Chunk Size = 64). Each chunk holds: prev_size, Chunk Size, FD Pointer, BK Pointer, Unused Space.

More Information

tcache

What is tcache?

- Definition: Thread-local caching

- Used in ptmalloc to speed up small, repeated allocations in a single thread

- Implemented as a singly-linked list

- Each thread has its own list of headers containing metadata about the memory regions

What is tcache?

/* Stored in the user data of a freed chunk */
typedef struct tcache_entry
{
  struct tcache_entry *next;
  /* Used to detect double frees */
  struct tcache_perthread_struct *key;
} tcache_entry;

/* One per thread */
typedef struct tcache_perthread_struct
{
  char counts[TCACHE_MAX_BINS];
  tcache_entry *entries[TCACHE_MAX_BINS];
} tcache_perthread_struct;

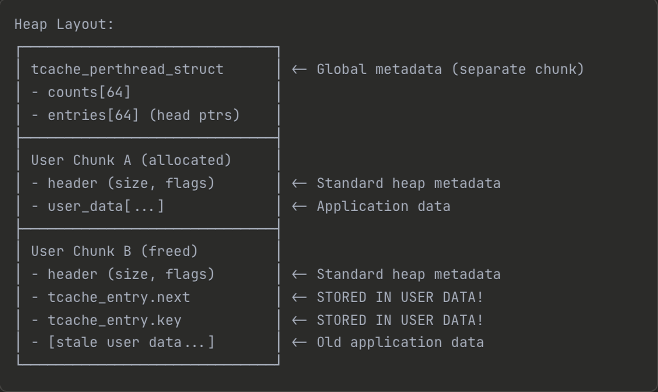

What is tcache?

The tcache entry is stored in user data (right after the header) in a freed chunk!

Example In Use

a = malloc(16);
b = malloc(16);
c = malloc(32);
d = malloc(32);
free(b);
free(a);
free(c);
free(d);

State of this thread's tcache_perthread_struct (named "Mike") after each step. Each free pushes the chunk onto the head of its bin. Entries not listed have next: NULL and Key: NULL.

Before any free:

- counts: count_16 = 0, count_32 = 0
- entries: entry_16 = NULL, entry_32 = NULL

After free(b):

- counts: count_16 = 1, count_32 = 0
- entries: entry_16 = B, entry_32 = NULL
- tcache entry B: next: NULL, Key: Mike

After free(a):

- counts: count_16 = 2, count_32 = 0
- entries: entry_16 = A, entry_32 = NULL
- tcache entry A: next: B, Key: Mike
- tcache entry B: next: NULL, Key: Mike

After free(c):

- counts: count_16 = 2, count_32 = 1
- entries: entry_16 = A, entry_32 = C
- tcache entry A: next: B, Key: Mike
- tcache entry B: next: NULL, Key: Mike
- tcache entry C: next: NULL, Key: Mike

After free(d):

- counts: count_16 = 2, count_32 = 2
- entries: entry_16 = A, entry_32 = D
- tcache entry A: next: B, Key: Mike
- tcache entry B: next: NULL, Key: Mike
- tcache entry D: next: C, Key: Mike
- tcache entry C: next: NULL, Key: Mike

What is actually happening on Free?

- tcache selects the right bin for the freed memory

- Based on size

- Ensures that the entry hasn't been freed already

- Prevents double frees

- Puts the freed memory into its proper list

- Records it inside of the tcache_perthread_struct
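The steps above can be sketched with a small model of the tcache structures. This is not glibc's code: the model_ names are hypothetical, the bin index is assumed to have already been computed from the chunk size, and the double-free check is reduced to comparing the key (glibc versions that have the key also walk the list before aborting).

```c
#include <assert.h>
#include <stddef.h>

#define MODEL_BINS 64

struct model_tcache;

typedef struct model_entry {
    struct model_entry *next;
    struct model_tcache *key;   /* set while the entry sits in a bin */
} model_entry;

typedef struct model_tcache {
    char counts[MODEL_BINS];
    model_entry *entries[MODEL_BINS];
} model_tcache;

/* Put e at the head of bin idx. Returns -1 if e looks already freed. */
int model_put(model_tcache *tc, model_entry *e, size_t idx)
{
    assert(tc != NULL && e != NULL && idx < MODEL_BINS);
    if (e->key == tc)
        return -1;               /* double free detected via the key */
    e->key = tc;
    e->next = tc->entries[idx];  /* new entry points at the old head */
    tc->entries[idx] = e;
    tc->counts[idx]++;
    return 0;
}
```

Because the newest entry becomes the head, each bin behaves as a last-in, first-out stack.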

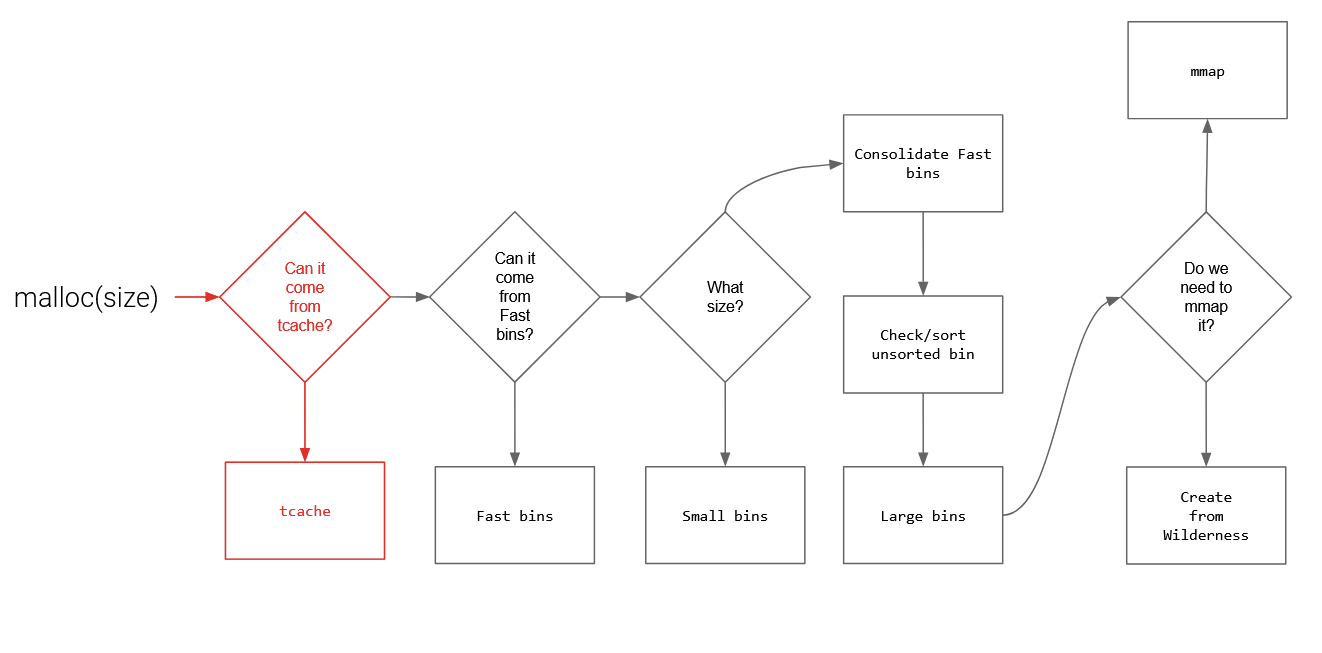

How do we retrieve a cached memory chunk?

- Choose the bin based on size of chunk requested

- Check to see if there are any entries

- If there are entries, reuse them

What is not done?

- Clearing all sensitive pointers (only key is cleared)

- Checking if the next address makes sense
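Retrieval, and what it skips, can be sketched the same way. This is a simplified single-bin model with hypothetical names (model_entry, model_bin, model_get); it mirrors the two omissions listed above rather than reproducing glibc's code.

```c
#include <assert.h>
#include <stddef.h>

typedef struct model_entry {
    struct model_entry *next;
    void *key;
} model_entry;

typedef struct {
    int count;
    model_entry *head;
} model_bin;

/* Pop the head of the bin, or return NULL if the bin is empty. */
model_entry *model_get(model_bin *bin)
{
    assert(bin != NULL);
    if (bin->count == 0 || bin->head == NULL)
        return NULL;
    model_entry *e = bin->head;
    bin->head = e->next;  /* trusted as-is: no check that it is a real chunk */
    bin->count--;
    e->key = NULL;        /* only the key is cleared; e->next stays stale */
    return e;
}
```

The returned memory still contains the old next pointer, and whatever next held becomes the new head without validation. Both facts matter for the exploitation techniques later in the lecture.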

Example In Use

a2 = malloc(16);
b2 = malloc(16);
c2 = malloc(32);
d2 = malloc(32);

State of tcache_perthread_struct "Mike" after each step. Each malloc takes the entry at the head of the matching bin and clears only its key.

Before any malloc:

- counts: count_16 = 2, count_32 = 2
- entries: entry_16 = A, entry_32 = D
- tcache entry A: next: B, Key: Mike
- tcache entry B: next: NULL, Key: Mike
- tcache entry D: next: C, Key: Mike
- tcache entry C: next: NULL, Key: Mike

After a2 = malloc(16) (returns A):

- counts: count_16 = 1, count_32 = 2
- entries: entry_16 = B, entry_32 = D
- tcache entry A: next: B (stale, not cleared), Key: NULL

After b2 = malloc(16) (returns B):

- counts: count_16 = 0, count_32 = 2
- entries: entry_16 = NULL, entry_32 = D
- tcache entry B: next: NULL, Key: NULL

After c2 = malloc(32) (returns D):

- counts: count_16 = 0, count_32 = 1
- entries: entry_16 = NULL, entry_32 = C
- tcache entry D: next: C (stale, not cleared), Key: NULL

After d2 = malloc(32) (returns C):

- counts: count_16 = 0, count_32 = 0
- entries: entry_16 = NULL, entry_32 = NULL
- tcache entry C: next: NULL, Key: NULL

Protections with GLIBC 2.32+

Added the concept of safe-linking

- Next pointers are mangled (XORed with the address where they are stored, shifted right by 12 bits), but still stored in user data
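Safe-linking can be sketched as two small functions over plain integers. The function names protect_ptr and reveal_ptr are hypothetical stand-ins for the corresponding glibc macros, and the 16-byte alignment check assumes a 64-bit target.

```c
#include <assert.h>
#include <stdint.h>

/* Mangle ptr for storage at address pos (where the next field lives). */
uintptr_t protect_ptr(uintptr_t pos, uintptr_t ptr)
{
    assert((ptr & 0xf) == 0);  /* chunk pointers are 16-byte aligned */
    return (pos >> 12) ^ ptr;
}

/* XOR with the same shifted position undoes the mangling. */
uintptr_t reveal_ptr(uintptr_t pos, uintptr_t stored)
{
    return (pos >> 12) ^ stored;
}
```

The "key" is just the storage address shifted by the page-offset bits, so an attacker who can leak a heap address can usually still forge a valid next pointer. Even a NULL next pointer is stored as a nonzero value.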

Putting this all together

Summary

- 64 singly-linked tcache bins for allocations of size 16 to 1032

- 10 singly-linked fast bins for allocations up to 160

- 1 doubly-linked unsorted bin to quickly stash freed chunks that don't fit into any fast bins

- 64 doubly-linked small bins for allocations up to 512

- doubly-linked large bins that contain different-sized chunks
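The "64 tcache bins up to 1032 bytes" figure can be checked with a little arithmetic. This is a sketch assuming 64-bit glibc constants (8 bytes of header per chunk after the prev_size overlap, 16-byte alignment, 32-byte minimum chunk); the function names chunk_size_for and tcache_index are hypothetical.

```c
#include <assert.h>

#define TCACHE_BINS 64

/* Chunk size the allocator would use for malloc(request),
   under the assumed 64-bit constants. */
unsigned long chunk_size_for(unsigned long request)
{
    assert(request <= 0x100000UL);  /* keep the sketch far from overflow */
    unsigned long size = (request + 8 + 15) & ~15UL;
    return size < 32 ? 32 : size;
}

/* tcache bin index for a request, or -1 if it is too large for tcache. */
int tcache_index(unsigned long request)
{
    unsigned long idx = (chunk_size_for(request) - 32) / 16;
    return idx < TCACHE_BINS ? (int)idx : -1;
}
```

Under these assumptions malloc(16) and malloc(24) share bin 0, malloc(1032) lands in the last bin (index 63), and malloc(1033) is too large for tcache.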

Credit to pwn.college


Dangers of the Heap

Developer Mindset for Building Dynamic Allocators

- Allocator Developers focus on speed over security

- Create optimizations with unintended consequences

- Security researchers then create exploits

- After unintended consequences are exploited, patches are applied

Non-Allocator Vulnerabilities

- Forgetting to free memory

- Denial of service, system crashing, resource exhaustion

- Forgetting memory was already freed

- Using freed memory

- Double Free

- Heap buffer overflow

- Corrupting metadata used to track state

- tcache poisoning (will get to soon)

How Can This be Vulnerable?

#include <stdio.h>
#include <stdlib.h>
#include <string.h>

// Example 1
int main()
{
    char *some_data = malloc(256);
    scanf("%s", some_data);
    printf("%s\n", some_data);

    // 512 bytes of 'A' with no terminating NUL
    char really_long_string[512];
    memset(really_long_string, 'A', sizeof(really_long_string));

    strncpy(
        some_data,
        really_long_string,
        strnlen(really_long_string, 512));

    free(some_data);
    return 0;
}

// Example 2 (PASSWORD is assumed to be defined elsewhere)
int main()
{
    char *some_data = malloc(256);
    scanf("%s", some_data);
    printf("%s\n", some_data);

    // Make sure it is freed.
    free(some_data);

    scanf("%s", some_data);
    if (strncmp(some_data, PASSWORD, 256) == 0)
    {
        printf("I am authenticated\n");
    }
    return 0;
}

// Example 3
int main()
{
    char *some_data = malloc(256);
    scanf("%s", some_data);
    printf("%s\n", some_data);

    /* You put code here */

    /* End of your code */
    return 0;
}

// Example 4
int main()
{
    char *some_data = malloc(256);
    scanf("%s", some_data);
    printf("%s\n", some_data);

    free(some_data);
    free(some_data);
    return 0;
}

Use After Free

What is it?

- Anytime memory gets used after it has been freed.

- Can produce undefined behavior in a program

- Can use memory that is reallocated

- Can cause the program to crash

- Why is this the case?

- The freed memory can be handed out again by a later allocation elsewhere in the program.

- Implications

- Information leakage
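One common defensive habit against both use-after-free and double free is to clear the pointer at the moment it is freed. A minimal sketch; the helper names free_and_clear and print_if_live are hypothetical, and the pattern only protects the one pointer variable passed in, not other copies of the same address.

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>

/* Free *pp and set it to NULL so later uses are easy to detect. */
void free_and_clear(char **pp)
{
    assert(pp != NULL);
    free(*pp);     /* free(NULL) is a no-op, so repeated calls are harmless */
    *pp = NULL;
}

/* Print only if the buffer is still live. Returns 0 if printed, -1 if not. */
int print_if_live(const char *buffer)
{
    if (buffer == NULL)
        return -1;
    printf("%s\n", buffer);
    return 0;
}
```

After free_and_clear(&buffer), a second free_and_clear(&buffer) does nothing harmful and print_if_live(buffer) refuses to touch the freed memory.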

Use After Free

#include <stdio.h>
#include <stdlib.h>

// Version 1: the pointer is used after free
int vuln()
{
    char *buffer;
    int size = 0;

    printf("Size of buffer: ");
    scanf("%d", &size);

    // Allocating Memory
    buffer = malloc(size);

    printf("Input: ");
    scanf("%s", buffer);
    printf("%s\n", buffer);

    // What happens here?
    free(buffer);
    printf("Print again: %s\n", buffer);
    return 0;
}

// Version 2: the pointer is set to NULL after free
int vuln()
{
    char *buffer;
    int size = 0;

    printf("Size of buffer: ");
    scanf("%d", &size);

    // Allocating Memory
    buffer = malloc(size);

    printf("Input: ");
    scanf("%s", buffer);
    printf("%s\n", buffer);

    // What happens here?
    free(buffer);
    buffer = NULL;
    printf("Print again: %s\n", buffer);
    return 0;
}

Heap-Overflow

Heap-Overflow

- The same as a buffer overflow, except we are doing it in the heap.

- We use this to manipulate data or pointers in dynamic memory

- Overwriting a function pointer that the program later calls

- Changing the value of a variable
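The "changing the value of a variable" case can be shown safely with a model in which one flat array stands in for the heap, so that writing past the first region is well defined in C. Everything here (model_heap, the offsets, unchecked_write_name) is hypothetical and chosen for illustration; on a real heap the neighbor would be another chunk or its metadata.

```c
#include <assert.h>
#include <string.h>

#define NAME_SIZE 16

/* One flat array stands in for the heap so the overflow is well defined. */
typedef struct {
    unsigned char bytes[32];
} model_heap;

/* Offsets of two neighbouring "allocations" inside the model heap. */
enum { NAME_OFFSET = 0, IS_ADMIN_OFFSET = NAME_SIZE };

/* Unchecked copy, like strcpy or scanf("%s"): it trusts len. */
void unchecked_write_name(model_heap *h, const char *src, size_t len)
{
    assert(NAME_OFFSET + len <= sizeof h->bytes); /* stay inside the model */
    memcpy(h->bytes + NAME_OFFSET, src, len);
}
```

Writing 17 bytes into the 16-byte name region flips the byte at IS_ADMIN_OFFSET. The same one-byte-too-far pattern on a real heap can corrupt a neighboring object or a chunk's size field.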

Double Free

Double Free

#include <stdio.h>
#include <stdlib.h>

int vuln()
{
    char *buffer;
    int size = 0;

    printf("Size of buffer: ");
    scanf("%d", &size);

    // Allocating Memory
    buffer = malloc(size);

    printf("Input: ");
    scanf("%s", buffer);
    printf("%s\n", buffer);

    // What happens here?
    free(buffer);
    free(buffer);
    return 0;
}

Double Free

- Can lead to a write-what-where primitive, which allows arbitrary writes

- Allows us to change registers and important data

- Especially valuable for binning/heap allocator vulnerabilities

- Varies depending on bins?

- How? we are about to answer this.

Classwork/Demo

Exploiting Bins

Bin Exploitation

- Bin exploitation techniques come and go based on the version of libc

- The one for the homework targets libc versions before 2.29

- They are based on the core heap-based vulnerabilities

- Double Free

- Use After Free

- Etc.

Consolidation/Moving Between Bins

- A correctly sized malloc can change which bin a chunk is located in

- Cause Consolidation by moving from unsorted to small/large bin, clearing fast bins

- Move from small bin into tcache

- etc.

Fake Chunks

Carefully creating a region of memory with heap metadata in it can trick the heap allocator into thinking it's another chunk of memory!

- Depends on specifics of the program (and sometimes LIBC too)

- Can result in broken heap

Fake Chunks

What are some things we might need to include in the metadata structure?

- Might need a prev chunk pointer

- Might need a prev_size value

- All depends on what the implementation of the metadata is like

What about in the context of previous exploits?

Double Free in Context with Bins

- Very much depends on the bin and which glibc you are using.

- Generally, there are double free protections in newer glibc.

- In older GLIBC not so much.

- What happens to the bin if successful?

- Fastbin - Can create a cycle in Fastbin due to corrupting the next pointer

- tcache - Corrupts the metadata - Can create a cycle like in Fastbin

- Unsorted bins - Corrupts the doubly linked list resulting in arbitrary memory
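The cycle created by a double free can be reproduced in a model of a singly-linked free list that performs no double-free check. The names node, list_push, and list_pop are hypothetical. Real allocators add checks that this model ignores, such as the fast bin's comparison against the current head and the tcache key, so a practical attack on those versions has to work around them.

```c
#include <assert.h>
#include <stddef.h>

typedef struct node {
    struct node *next;
} node;

/* "free": push n onto the head of the list, with no double-free check. */
void list_push(node **head, node *n)
{
    assert(head != NULL && n != NULL);
    n->next = *head;
    *head = n;
}

/* "malloc": pop the head of the list, or return NULL if it is empty. */
node *list_pop(node **head)
{
    assert(head != NULL);
    node *n = *head;
    if (n != NULL)
        *head = n->next;
    return n;
}
```

Pushing the same node twice makes its next pointer refer to itself, so consecutive pops return the same address. Two later allocations then alias the same memory.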

Use After Free

- Often this is the pathway to corrupting the metadata

- We free a chunk, and then corrupt the pointers in the linked list to point at our desired memory address.

- This can sometimes be also achieved by creating a fake chunk

- On the next malloc, we will get memory that wasn't part of the bin.
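The sequence above can be traced in a minimal model of a singly-linked bin whose pop trusts the next pointer. The names entry and bin_pop are hypothetical, and the model assumes no pointer mangling, which corresponds to glibc versions before safe-linking.

```c
#include <assert.h>
#include <stddef.h>

typedef struct entry {
    struct entry *next;
} entry;

/* Pop the head and follow its next pointer without validating it. */
entry *bin_pop(entry **head)
{
    assert(head != NULL);
    entry *e = *head;
    if (e != NULL)
        *head = e->next;
    return e;
}
```

If a freed entry's next field is overwritten through a dangling pointer so that it refers to some target, the first pop returns the freed chunk and the second pop returns the target, even though the target was never freed.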

Heap Overflow

- We can use a heap overflow to write to parts of the heap we normally don't have access to.

- If we have access to a freed chunk, we can manipulate the metadata to point to something we want.

What all of these have in common

- We need to have a way of triggering the exploit

- Older GLIBC

- Vulnerable code

- etc.

- Our memory has to be lined up properly

- Particularly for heap overflows

- A good understanding of which bin our memory chunk is in.

Homework

Memory Corruption in the Heap

- Various in-class heap-based exploitation

- Overwriting Heap Data

- Bin poisoning

Additional Resources

- Azeria Labs

- How2Heap

- Pwn College

- Dynamic Allocator Misuse

- Dynamic Allocator Exploitation

- Part of this course's module as well

ENPM809V - Memory Vulnerabilities

By Ragnar Security
