semistructured.ai

Elevator Pitch

- Small AI Models (LLMs)

- Running on end user devices (phone/laptop)

- Enterprise-grade audit and monitoring

The Problem

- AI is expensive

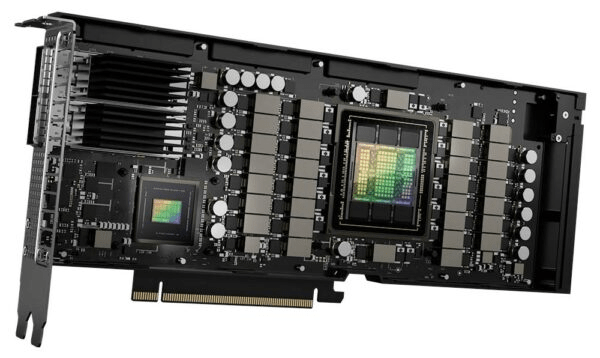

- An Nvidia H100 GPU card costs ~$34k

- Serving a mid-size model takes 8-16x H100

- For one customer!

Unit Economics of AI

- Suppose your 16x H100 hardware costs $750k

- Let's say the hardware has a 2 year service life

- Define a typical user session as ~10 minutes

- At 100% utilization over 2 years, that is 1.05m minutes, or ~105k sessions served one at a time

- So our baseline is ~$7 for a 10-minute user session

- If your model is bigger, or sessions are longer, $$$

- If utilization is lower, $$$

- OpenAI loses money on their $200/month pro plan

- My wild guess is that a Deep Research session costs ~$20
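
The arithmetic on this slide can be sketched in a few lines of Python. This is a rough back-of-envelope model, not a pricing tool: it amortizes only the hardware cost from the slide and ignores power, hosting, and staff. The function name and the `utilization` and `concurrent_sessions` parameters are hypothetical, added for illustration; the slide does not say how many sessions the hardware serves at once, so the default assumes one.

```python
# Back-of-envelope cost per user session.
# Inputs come from the slide; concurrent_sessions is an assumption (default 1).
MINUTES_PER_YEAR = 365 * 24 * 60


def cost_per_session(hardware_cost_usd, service_life_years, session_minutes,
                     utilization=1.0, concurrent_sessions=1):
    """Amortized hardware cost of one session, in dollars."""
    total_minutes = service_life_years * MINUTES_PER_YEAR
    # Sessions actually served over the hardware's service life
    sessions = (total_minutes / session_minutes) * utilization * concurrent_sessions
    return hardware_cost_usd / sessions


baseline = cost_per_session(750_000, 2, 10)
half_utilized = cost_per_session(750_000, 2, 10, utilization=0.5)

print(f"baseline:        ${baseline:.2f} per session")
print(f"50% utilization: ${half_utilized:.2f} per session")
```

Halving utilization doubles the per-session cost, and a longer session or a larger hardware bill scales it the same way. Raising `concurrent_sessions` divides the cost proportionally.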

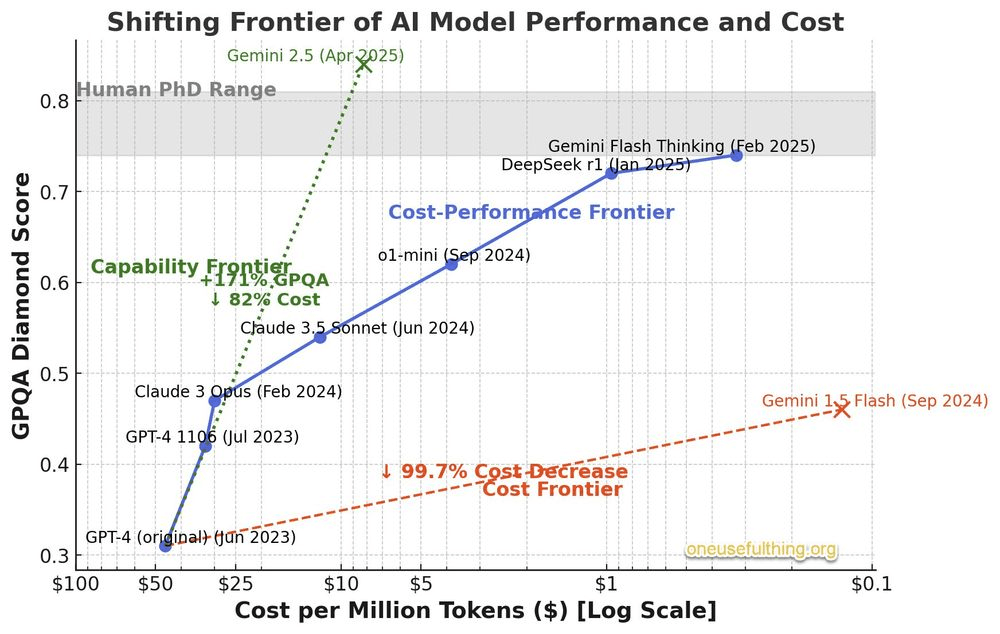

Scaling Laws for AI

- AI is not getting cheaper every year like transistors

- Gains are coming from more hardware and more data

- Algorithmic breakthroughs bend the cost curve down

- Hardware advances have been very slow

- SOTA labs focus on "PhD-level" models at any cost

- Other research directions focus on lower-cost models

Open-Source Models

- AI is also an active academic research community

- Research models are often freely available

- Meta, DeepSeek, and others have given models away

- Why?

- Published research attracts the best talent

- Free models erode the lead of the big players

Edge Hardware

- Modern phones and laptops also have GPU chips

- The lowest-cost iPhone 16e can run small AI models

- So can most laptops and many Android phones

- No one has figured out how to use this commercially

deck

By Richard Whaling
