History of AI

1943 - 2022

Why History?

AI is not new

- the fundamental algorithms of neural networks have been known since the 1950's

- the field has seen repeated booms and busts over the last 70 years

- in the last 10 years, problems that had frustrated researchers for decades, like computer vision and machine translation, have been largely solved, thanks to better hardware and vastly increased scale

- understanding the factors that led to the failure of previous AI booms helps us assess the trend-line we are currently on, and judge the impact of new developments, papers, models, etc.

- this helps us ground our thinking about the question "Have we hit a wall?", i.e., where the point of diminishing returns lies

Part 1

1943-1969

1943

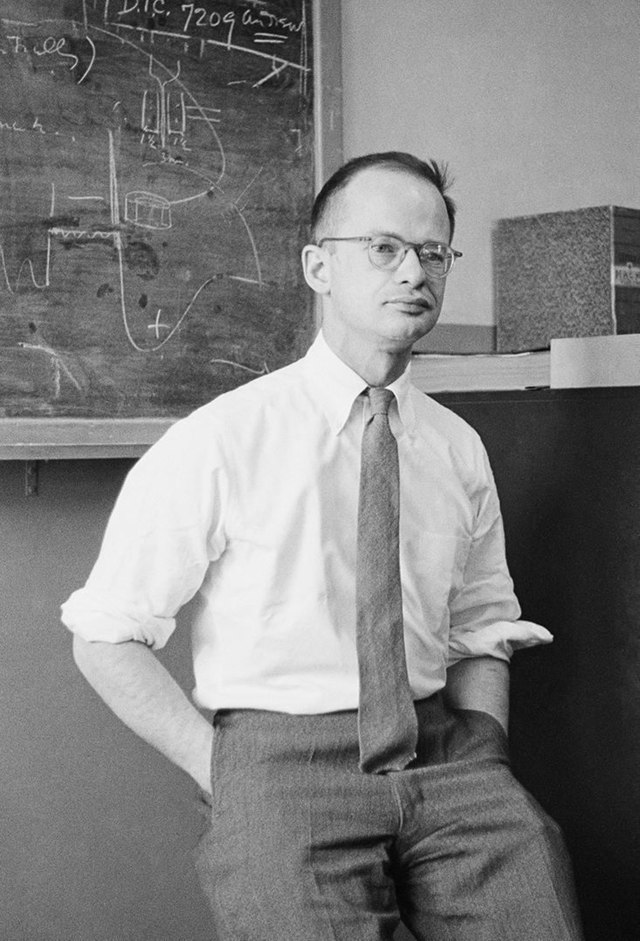

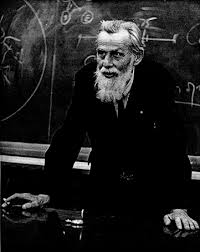

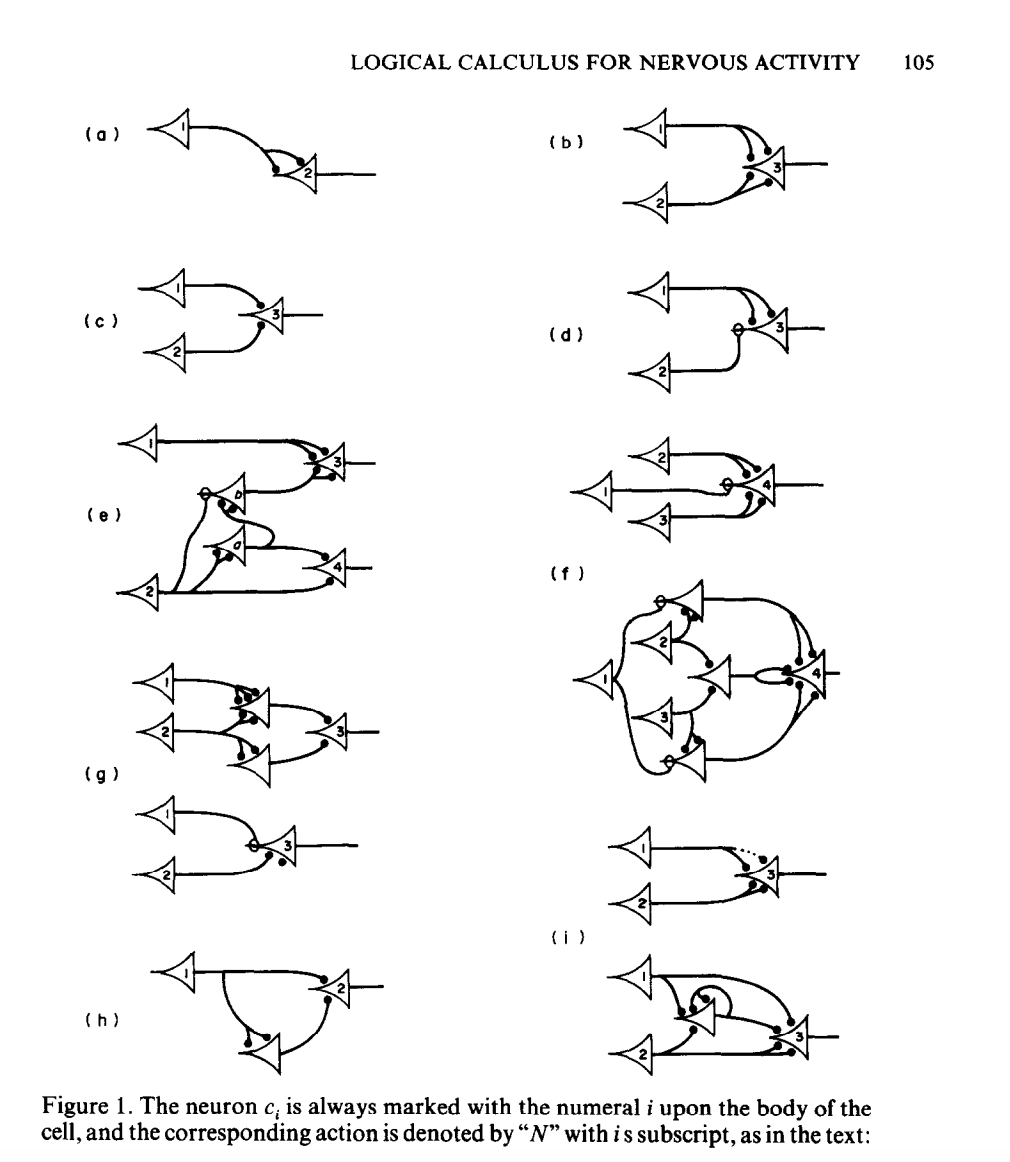

McCulloch and Pitts describe the first neural network

- Closely tied to the interdisciplinary field called "cybernetics"

- McCulloch was a neurophysiologist at UIC

- Pitts was a frequently unhoused mathematical genius without any formal degrees

- Presented as an attempt to describe biological neurons and their interconnections with propositional logic

- Not especially interested in computers - mentions computability and Turing machines in passing
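The idea of a neuron as a unit of propositional logic can be sketched in a few lines. This is a simplified, modern-notation rendering, not the notation of the 1943 paper: the function names, weights, and thresholds are illustrative assumptions, and inhibition is modeled here with a negative weight.

```python
def mp_neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of binary inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def logic_and(a, b):
    # Both inputs must be on to reach a threshold of 2
    return mp_neuron([a, b], [1, 1], threshold=2)


def logic_or(a, b):
    # A single active input is enough to reach a threshold of 1
    return mp_neuron([a, b], [1, 1], threshold=1)


def logic_not(a):
    # Inhibitory input (assumed weight -1): the unit fires only when the input is off
    return mp_neuron([a], [-1], threshold=0)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", logic_and(a, b), "OR:", logic_or(a, b))
```

Since AND, OR, and NOT are enough to build any propositional formula, networks of such units can in principle express any of them.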

1956

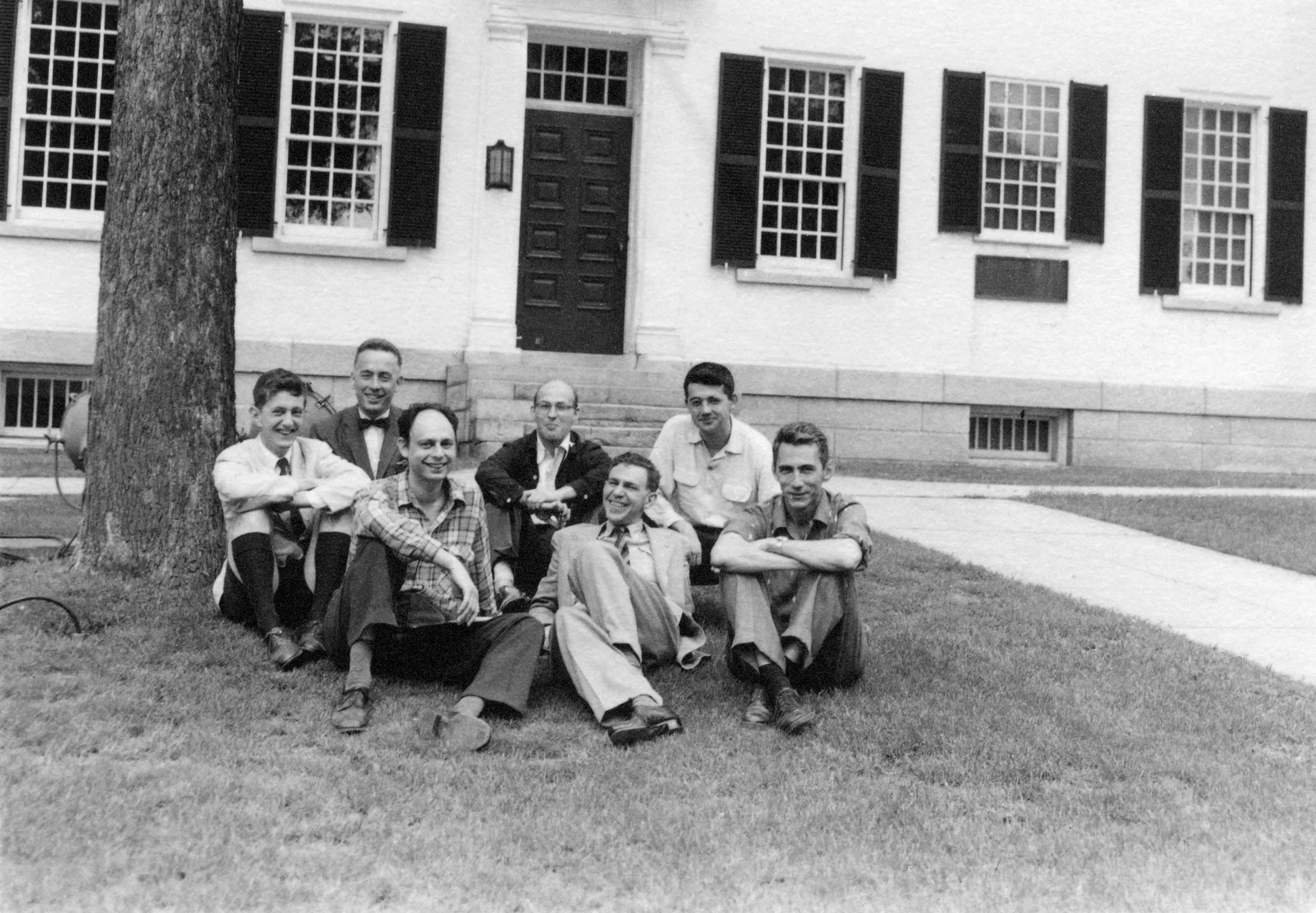

The term "Artificial Intelligence" is coined in a grant proposal for a summer workshop by 4 scientists:

- John McCarthy (inventor of LISP)

- Claude Shannon (information theory)

- Nathaniel Rochester (chief architect of the IBM 701)

- Marvin Minsky (AI lifer, early neural net research)

They assembled a wide-ranging group of researchers at Dartmouth in the summer of 1956 and explored many techniques and approaches.

According to McCarthy, the "stars" of the conference were Allen Newell and Herbert Simon, whose Logic Theorist program found novel proofs of fundamental mathematical theorems - without using neural networks.

This emerging rivalry between pure logic-programming approaches and neural networks would persist in AI research for many decades, up to the present.

1958

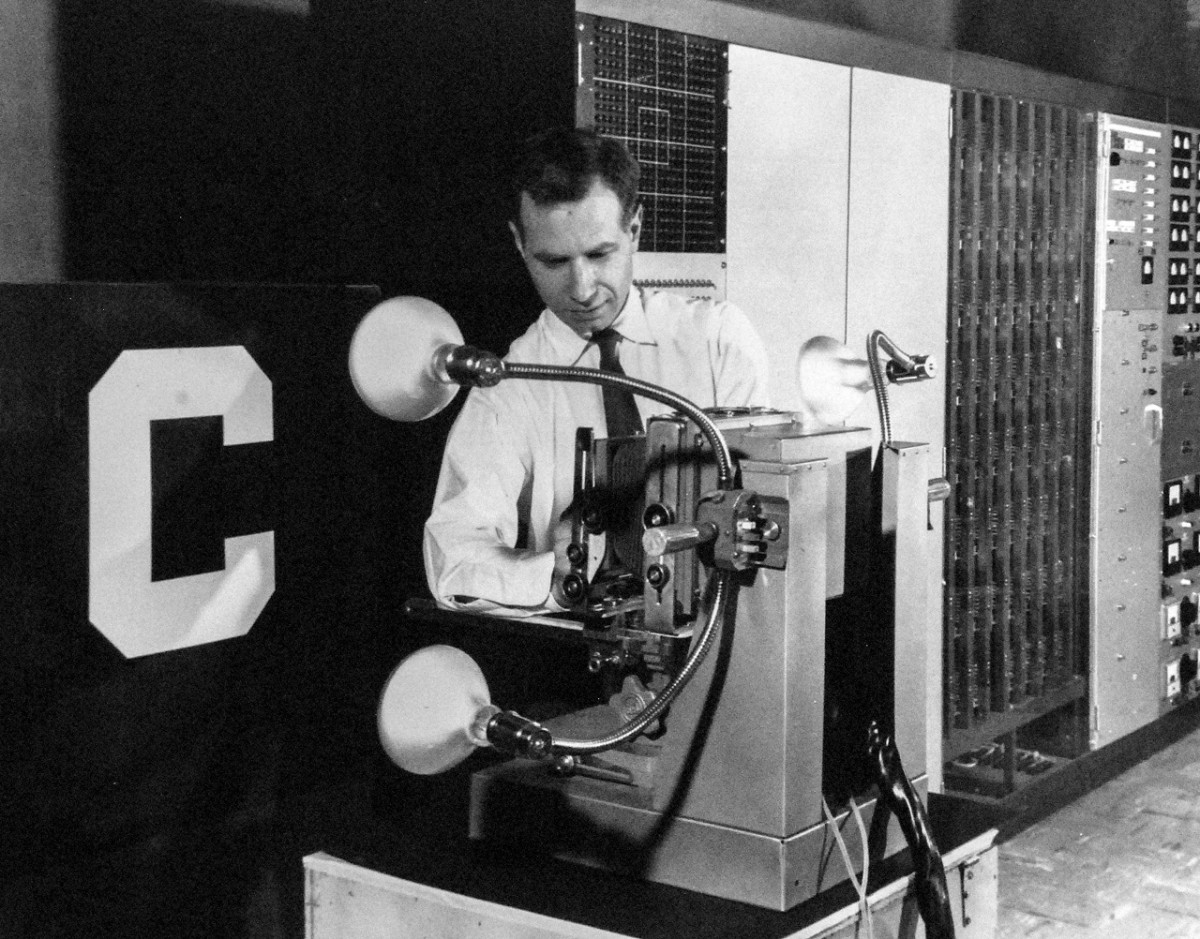

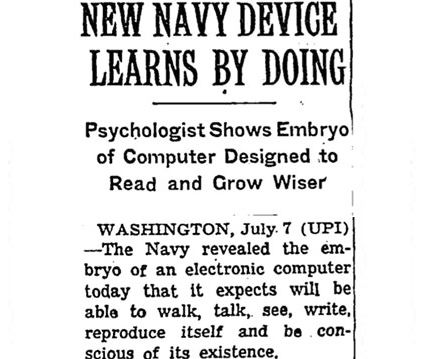

Frank Rosenblatt, a psychologist at the Cornell Aeronautical Laboratory (and a very strong computer engineer), successfully runs a neural network on an IBM 704 mainframe.

He dubbed the algorithm the "perceptron", and proceeded to secure large amounts of funding from the US Office of Naval Research, which was interested in 2 particular AI applications:

- Computer vision, i.e., recognizing objects in images, such as missiles in a spy photo

- Machine translation, especially for intelligence applications

Rosenblatt promised them results in a few years.

1960-1969

Progress throughout the sixties was sluggish.

Rosenblatt's Navy funding ran out around 1964, although he continued working on perceptrons.

Likewise, the National Research Council recommended the end of all funding for machine translation research in 1966.

Minsky and his students continued to work on a variety of AI applications, but in 1969, Minsky and Papert published a book, "Perceptrons", that contained proofs of fundamental limits of single-layer perceptrons, and neural network research was largely abandoned afterwards.

Although they admitted that a multi-layer perceptron could overcome those limits, there was no known algorithm at the time to train such a network.
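The classic illustration of this limit is XOR. The sketch below is a toy, not Rosenblatt's or Minsky and Papert's own formulation: it assumes a simple error-correction update for a single threshold unit, and the function names, learning rate, and epoch cap are all illustrative. The single unit learns AND but never settles on XOR, while a two-layer network with hand-picked (not learned) weights computes XOR directly.

```python
from itertools import product


def step(z):
    return 1 if z >= 0 else 0


def train_perceptron(samples, epochs=50, lr=1):
    """Error-correction training for one threshold unit.

    Returns (weights, bias, converged)."""
    w = [0, 0]
    b = 0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), target in samples:
            pred = step(w[0] * x1 + w[1] * x2 + b)
            delta = target - pred
            if delta != 0:
                # Nudge the weights toward the correct answer
                errors += 1
                w[0] += lr * delta * x1
                w[1] += lr * delta * x2
                b += lr * delta
        if errors == 0:
            return w, b, True
    return w, b, False


inputs = list(product((0, 1), repeat=2))
AND = [((a, b), a & b) for a, b in inputs]
XOR = [((a, b), a ^ b) for a, b in inputs]


def xor_two_layer(a, b):
    """Hand-wired two-layer network: XOR = (a OR b) AND NOT (a AND b)."""
    h_or = step(a + b - 1)         # fires if at least one input is on
    h_and = step(a + b - 2)        # fires only if both inputs are on
    return step(h_or - h_and - 1)  # fires if OR is on and AND is off


if __name__ == "__main__":
    print("AND converged:", train_perceptron(AND)[2])
    print("XOR converged:", train_perceptron(XOR)[2])
    print("two-layer XOR:", [xor_two_layer(a, b) for a, b in inputs])
```

The hidden-layer weights here were chosen by hand, which is exactly the gap: in 1969 there was no known procedure for learning them from data.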

Part 2

1970-1989

1970-1980

The mainstream of AI in the 70's continued the most successful approaches from the 60's, and much of it was in LISP, invented by McCarthy ca. 1960.

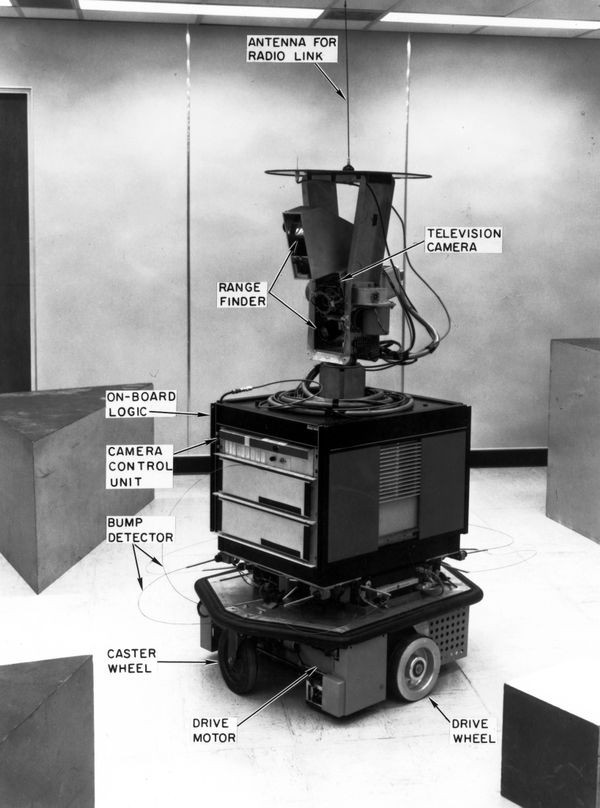

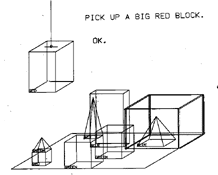

Throughout the 1970's, 80's, and 90's, LISP and Scheme were synonymous with "Artificial Intelligence", and their facility for symbolic programming, sophisticated data structures, and functional programming produced some early working demos of computer vision and natural language interaction - in strictly controlled laboratory settings.

The 1970's also saw huge progress in logic programming, the development of the Prolog language, type theory and practical type inference.

1980-1987

The 1980's saw another AI boom, largely centered around LISP, logic-programming, and "expert systems" which applied formal logic to carefully maintained databases of facts and rules.

Among the most famous products of this era were the "LISP Machines" produced by Symbolics and other manufacturers, specially-designed computers to run LISP at scale; specialized parallel computing architectures for concurrent Prolog were popular as well.

The Japanese government announced an enormous "Fifth-Generation Computer" initiative along these lines in 1981, and the US responded with a $1 billion "Strategic Computing Initiative", which led to a frothy array of startups in the field.

1988

The 1980's also saw the beginning of the personal computer era, with the release of the IBM PC in 1981. The resulting mass availability of fast, cheap microprocessors was a factor in the collapse of the 80's AI boom.

With ordinary desktop computers just as capable of running LISP or Prolog as an expensive workstation or specialized supercomputer, most of the hardware-oriented startups collapsed or pivoted to become software providers.

Expert systems, meanwhile, found real-world applications in logistics, business planning, and finance, and many of these systems are still in use today. However, developing and maintaining databases of facts and rules proved infeasible outside of narrow domains.

Another legacy of this era: LISP machine manufacturer Symbolics registered the world's first .com domain, symbolics.com, on March 15, 1985.

1970-1989

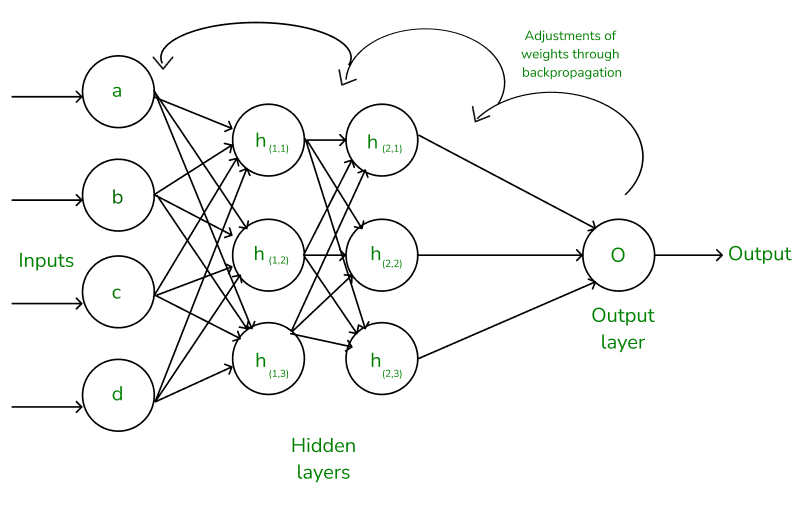

While the AI mainstream was focused on symbolic reasoning and logic programming, research did continue on neural networks, and a solution to the problem of training multilayer neural networks, the celebrated "backpropagation" algorithm, was discovered in 1970.

However, the AI mainstream took little notice, and backpropagation was independently discovered at least 3 different times before finally being popularized by David Rumelhart in 1985.

The academic stigma and isolation of neural network research played a role in this continued obscurity, but so did the limitations of computer hardware; computers in 1985 were roughly 1000x faster/cheaper than in 1970, and by the later 80's, neural networks began to show limited but successful applications.

Many of the fundamental neural network architectures discovered in this era, especially Convolutional Neural Networks (CNN's) and Recurrent Neural Networks (RNN's), are still in use today.

Part 3

1990-2009

1990-2002

In the 90's, personal computers continued to advance, the internet and the World Wide Web connected the globe, and both compute and storage continued to become cheaper, year over year.

A full history of tech in the 90's is outside the scope of this deck, as is a detailed history of the incremental progress in AI in this decade.

However, many of the leaders of modern AI research began their careers in the late 80's and early 90's, and neural networks began to see real applications in computer vision and speech recognition - but the field remained a niche academic specialty.

2003-2010

The years after the dot-com bubble nonetheless saw the rapid continued development of computing technology, especially cloud computing. And for the first time, AI and related techniques began to appear in consumer products.

Google, which became a household name, was built upon the PageRank algorithm, developed by Larry Page and Sergey Brin while they were PhD students at Stanford. The algorithm uses large amounts of computation and storage over a large network to score search results via a probability-based model of the graph of hyperlink references between web pages.
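The probability model can be sketched as a "random surfer" who follows links most of the time and occasionally jumps to a random page; a page's score is the long-run probability of finding the surfer there. The code below is a minimal power-iteration sketch, not Google's production system: the `toy_web` graph is hypothetical, and the damping factor of 0.85 is the commonly cited value, used here as an assumption.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Power iteration over a dict mapping each page to the pages it links to.

    Every link target must also appear as a key in `links`."""
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Random-jump share, given equally to every page
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outlinks in links.items():
            if outlinks:
                # Split this page's rank evenly among the pages it links to
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # Dangling page: spread its rank evenly over all pages
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical four-page web: "c" receives the most inbound links
toy_web = {
    "a": ["b", "c"],
    "b": ["c"],
    "c": ["a"],
    "d": ["c"],
}
ranks = pagerank(toy_web)

if __name__ == "__main__":
    for page, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
        print(page, round(score, 4))
```

On this toy graph, page "c" ends up with the highest score and page "d", which nothing links to, with the lowest.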

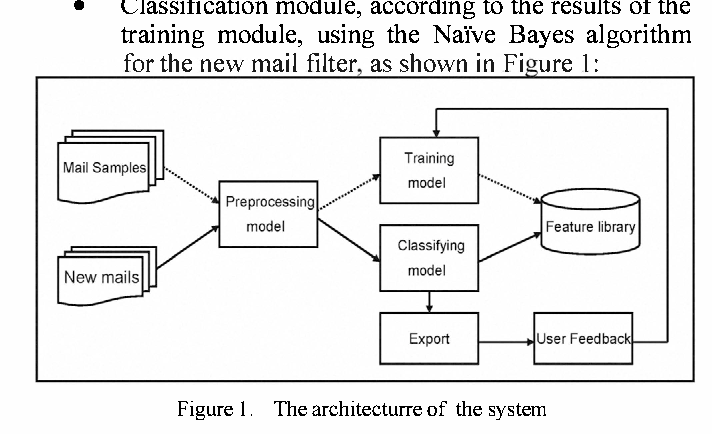

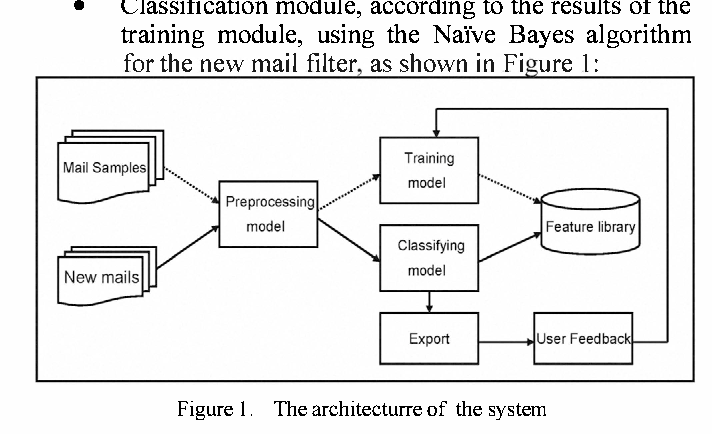

Likewise, this era saw the problem of email spam largely solved by the success of Bayesian spam filters, which demonstrated that a very simple statistical model could be trained with the vast amount of data being generated and collected by internet-scale service providers.
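To give a sense of how simple such a model can be, here is a minimal naive Bayes classifier, one common formulation of Bayesian spam filtering (the deck does not specify which variant was used in practice). The training messages are made up, and the function names and add-one smoothing are illustrative assumptions.

```python
import math
from collections import Counter


def train(messages):
    """messages: list of (text, label) pairs, where label is 'spam' or 'ham'."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    doc_counts = Counter()
    for text, label in messages:
        doc_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, doc_counts, vocab


def classify(text, model):
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        # Start from the log prior: how common this label is overall
        score = math.log(doc_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Add-one smoothing so unseen words do not zero out the score
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


# Made-up training data, for illustration only
training = [
    ("win cash prize now", "spam"),
    ("cheap pills win big", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday", "ham"),
]
model = train(training)

if __name__ == "__main__":
    print(classify("win a cash prize", model))
    print(classify("monday meeting", model))
```

The model is nothing more than word counts per label, which is why it scales so easily: more mail simply means better counts.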

2003-2010

Notably, neither PageRank nor spam filters relied on neural networks, LISP, logic programming techniques, or other outputs of academic AI research.

Instead, they applied state-of-the-art computer science and distributed systems theory to older math, particularly probability, statistics, graph theory, and linear algebra.

This was very characteristic of the era - the term "AI" had such a stigma from repeated disappointments that even research scientists avoided it, and instead, terms like "data mining", "machine learning", and most famously, "data science" became popular to describe these techniques.

Part 4

2010-2022

2010-2015

The Deep Learning Renaissance <TODO>

2017-2019

Transformers, Scaling Laws, and Double Descent <TODO>

2019-2022

AI Ethics, Alignment, and ChatGPT <TODO>

History of AI 1943 - 2014ish

By Richard Whaling
