Neural Nets

What is an Artificial Neural Network?

An artificial neural network is a computational model that can be trained to recognize correlations between input data and a desired output.

What can Neural Nets do?

Predict House Price

- Rooms: 5
- Age: 10 years
- Price: ?

Network Topology

- Input Layer: Rooms, Age
- Output Layer: Price
- Weights: Rooms → Price = 100,000; Age → Price = -20,000

Predicted Price = Rooms × 100,000 + Age × (-20,000)


| Rooms | Age | Price | Predicted Price | Loss |
|---|---|---|---|---|
| 4 | 10 | $212,000 | $200,000 | $12,000 |
| 4 | 5 | $302,000 | $300,000 | $2,000 |
| 4 | 0 | $588,000 | $400,000 | $188,000 |

Loss is the absolute difference between the actual price and the predicted price.
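The table above can be reproduced with a few lines of Python. This is a minimal sketch, assuming the network is only a weighted sum of its two inputs (no bias, no activation) and that loss is the absolute error; the names `WEIGHTS` and `predict` are hypothetical.

```python
# Weights from the slide: each input is multiplied by its weight and the results are summed.
WEIGHTS = {"rooms": 100_000, "age": -20_000}

def predict(rooms, age):
    """Input layer wired straight to the output node (assumed: no bias, no activation)."""
    return rooms * WEIGHTS["rooms"] + age * WEIGHTS["age"]

# (rooms, age, actual price) rows from the table
data = [(4, 10, 212_000), (4, 5, 302_000), (4, 0, 588_000)]

results = []
for rooms, age, price in data:
    predicted = predict(rooms, age)
    loss = abs(price - predicted)  # absolute error, matching the Loss column
    results.append((predicted, loss))
    print(f"rooms={rooms} age={age} predicted=${predicted:,} loss=${loss:,}")
```

Because the price in this data does not drop by a constant amount per year of age, no choice of these two weights fits all three rows exactly.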

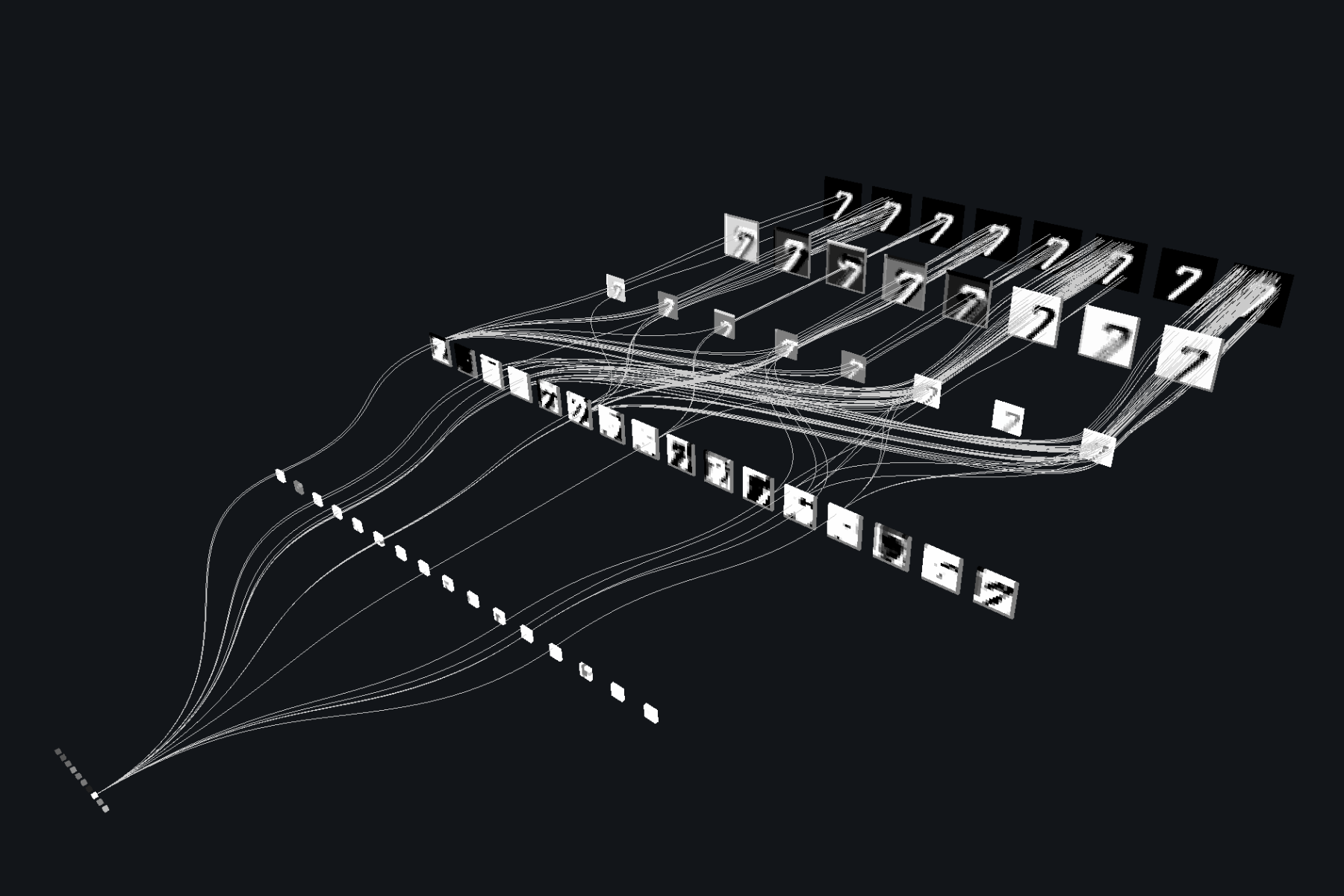

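- Input Layer: Rooms, Age
- Hidden Layer(s): two nodes, each with a ReLU activation, where ReLU(x) = max(0, x)
  - Hidden node 1 = ReLU(Rooms × 1 + Age × (-100))
  - Hidden node 2 = ReLU(Rooms × 1 + Age × (-0.2))
- Output Layer: Price = Hidden node 1 × 50,000 + Hidden node 2 × 100,000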


| Rooms | Age | Hidden node 1 | Hidden node 2 | Price | Predicted Price | Loss |
|---|---|---|---|---|---|---|
| 4 | 10 | 0 | 2 | $212,000 | $200,000 | $12,000 |
| 4 | 5 | 0 | 3 | $302,000 | $300,000 | $2,000 |
| 4 | 0 | 4 | 4 | $588,000 | $600,000 | $12,000 |
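The hidden-layer forward pass can be sketched the same way. This assumes the wiring that reproduces the slide's intermediate values (hidden node 1 uses weights 1 and -100, hidden node 2 uses 1 and -0.2, and the output weights are 50,000 and 100,000) with no biases; the function names are hypothetical.

```python
def relu(x):
    """ReLU activation: passes positive values through, clamps negatives to 0."""
    return max(0, x)

def predict(rooms, age):
    # Hidden layer: two ReLU nodes (weights assumed from the slide's worked values)
    hidden1 = relu(rooms * 1 + age * -100)
    hidden2 = relu(rooms * 1 + age * -0.2)
    # Output layer: plain weighted sum of the hidden activations, no activation function
    return hidden1 * 50_000 + hidden2 * 100_000

# (rooms, age, actual price) rows from the table
data = [(4, 10, 212_000), (4, 5, 302_000), (4, 0, 588_000)]
predictions = [predict(rooms, age) for rooms, age, _ in data]
losses = [abs(price - p) for (_, _, price), p in zip(data, predictions)]
print(predictions, losses)
```

With these weights, hidden node 1 is only active when Age is below Rooms / 100, so in effect it adds a bonus for (nearly) brand-new houses, which is what the single-layer version could not express.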

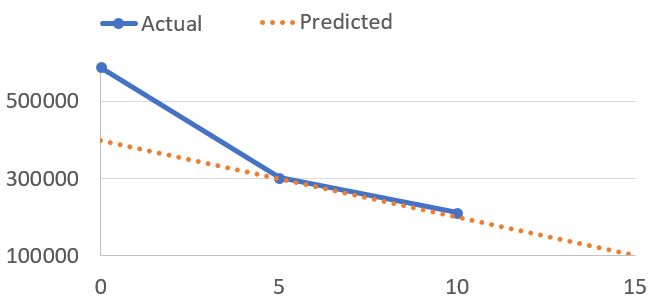

Gradient Descent

"Suppose you are at the top of a mountain, and you have to reach a lake which is at the lowest point of the mountain (a.k.a valley). A twist is that you are blindfolded and you have zero visibility to see where you are headed. So, what approach will you take to reach the lake?"

- analyticsvidhya.com
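The blindfolded-hiker idea turns into a short loop: feel the slope (the gradient) and take a small step downhill. This is a minimal sketch on a toy one-variable function, f(x) = (x - 3)², chosen for illustration; `learning_rate` and `steps` are hypothetical parameter names, and in a real network the gradient is taken with respect to every weight at once rather than a single x.

```python
def gradient_descent(grad, x0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, i.e. downhill."""
    x = x0
    for _ in range(steps):
        x = x - learning_rate * grad(x)
    return x

# Toy "mountain": f(x) = (x - 3)**2, whose lowest point (the lake) is at x = 3.
def f(x):
    return (x - 3) ** 2

def df(x):
    """Derivative of f: the slope you would feel underfoot at position x."""
    return 2 * (x - 3)

x_min = gradient_descent(df, x0=10.0)
print(round(x_min, 4), f(x_min))
```

A learning rate that is too large can overshoot the valley and diverge; one that is too small needs many more steps to get there.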


Hello Keras Demo

- Create neural network in Keras
- Train model
- Underfitting
- Epochs and batch size
- Visualize accuracy
- NumPy basics
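To make "epochs and batch size" concrete: one epoch is one full pass over the training data, and the batch size is how many samples are used for each weight update (Keras `model.fit` takes these as its `epochs` and `batch_size` arguments). The NumPy sketch below only shows the bookkeeping; the helper `iterate_minibatches` and the toy data are hypothetical, not what Keras runs internally.

```python
import numpy as np

def iterate_minibatches(x, y, batch_size, rng):
    """Yield shuffled (x_batch, y_batch) pairs that cover the dataset once (one epoch)."""
    indices = rng.permutation(len(x))  # a new random order each epoch
    for start in range(0, len(x), batch_size):
        batch = indices[start:start + batch_size]
        yield x[batch], y[batch]

rng = np.random.default_rng(0)
x = np.arange(10, dtype=float).reshape(10, 1)  # 10 samples, 1 feature
y = x[:, 0] * 2.0                              # toy targets

epochs, batch_size = 3, 4
updates = 0
for epoch in range(epochs):
    batch_sizes = []
    for x_batch, y_batch in iterate_minibatches(x, y, batch_size, rng):
        batch_sizes.append(len(x_batch))
        updates += 1  # a real training loop would update the weights here
    print(f"epoch {epoch + 1}: batch sizes {batch_sizes}")

print("total weight updates:", updates)
```

With 10 samples and a batch size of 4, each epoch has batches of 4, 4 and 2, so 3 epochs perform 9 weight updates.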


Regression Demo

- Prepare data

- Pandas

- Train/validation split

- Tuning network topology
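A train/validation split holds back rows that the network never trains on, so you can check how well it generalizes. Below is a minimal NumPy sketch, assuming an 80/20 split; the helper `train_validation_split` and the toy house data are hypothetical. Keras can also do this through the `validation_split` argument of `model.fit`, which takes the last fraction of the samples before any shuffling, so shuffle first if your rows are ordered.

```python
import numpy as np

def train_validation_split(x, y, validation_fraction=0.2, seed=0):
    """Shuffle rows, then hold out `validation_fraction` of them for validation."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(len(x))
    n_val = int(round(len(x) * validation_fraction))
    val_idx, train_idx = indices[:n_val], indices[n_val:]
    return x[train_idx], x[val_idx], y[train_idx], y[val_idx]

# Toy data: 10 houses described by (rooms, age), priced with the earlier linear formula
x = np.array([[r, a] for r, a in zip(range(1, 11), range(10, 0, -1))], dtype=float)
y = x[:, 0] * 100_000 - x[:, 1] * 20_000

x_train, x_val, y_train, y_val = train_validation_split(x, y)
print(x_train.shape, x_val.shape)
```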


Binary Classifier Demo

- Overfitting

- Normalize data

- Generate training data

- Feature selection

tinyurl.com/onking
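Normalizing puts inputs on comparable scales so a feature measured in large units doesn't dominate one measured in small units. The demo's exact method isn't shown here; a common choice, sketched below as an assumption, is z-score standardization: subtract each column's mean and divide by its standard deviation, computing both from the training data only and reusing them on validation and new data. The helper names and toy data are hypothetical.

```python
import numpy as np

def fit_normalizer(x_train):
    """Compute per-column mean and standard deviation from the training data."""
    mean = x_train.mean(axis=0)
    std = x_train.std(axis=0)
    std[std == 0] = 1.0  # avoid dividing by zero for constant columns
    return mean, std

def normalize(x, mean, std):
    """Apply the training-set statistics to any data (train, validation, or new)."""
    return (x - mean) / std

# Toy training data: columns are (rooms, age in years)
x_train = np.array([[2, 30], [3, 10], [4, 5], [5, 1]], dtype=float)
x_new = np.array([[4, 20]], dtype=float)

mean, std = fit_normalizer(x_train)
x_train_norm = normalize(x_train, mean, std)
x_new_norm = normalize(x_new, mean, std)
print(x_train_norm.round(2))
print(x_new_norm.round(2))
```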


Multi-Class Classifier Demo

- Encode labels

- Softmax activation

- Prediction confidence scores
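The three steps above can be sketched in NumPy: one-hot encode integer labels, turn the output layer's raw scores into probabilities with softmax, and read the largest probability as the prediction's confidence score. Keras offers `keras.utils.to_categorical` for the encoding step; the functions here are hypothetical stand-ins, and the raw scores are made up for illustration.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Turn integer class labels (0..num_classes-1) into one-hot rows."""
    encoded = np.zeros((len(labels), num_classes))
    encoded[np.arange(len(labels)), labels] = 1.0
    return encoded

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1 along the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

labels = np.array([0, 2, 1])
print(one_hot(labels, 3))

# Hypothetical raw scores from a 3-class output layer for one sample
scores = np.array([[2.0, 1.0, 0.1]])
probs = softmax(scores)
predicted_class = int(probs.argmax(axis=-1)[0])
confidence = float(probs.max())
print(predicted_class, round(confidence, 3))
```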


Types of Problems (Recap)

Regression

Input Layer → Hidden Layer(s) (ReLU) → Output Layer

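```python
from keras.models import Sequential
from keras.layers import Dense

model = Sequential()
model.add(Dense(20, activation='relu', input_dim=10))
model.add(Dense(20, activation='relu'))
model.add(Dense(20, activation='relu'))
model.add(Dense(1))  # one output node, no activation: predicts a continuous value
model.compile(optimizer='adam', loss='mean_squared_error')
```

Binary Classifier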

Input Layer → Hidden Layer(s) (ReLU) → Output Layer (Sigmoid)

```python
from keras.models import Sequential
from keras.layers import Dense

model = Sequential()
model.add(Dense(20, activation='relu', input_dim=10))
model.add(Dense(20, activation='relu'))
model.add(Dense(20, activation='relu'))
model.add(Dense(1, activation='sigmoid'))  # probability of the positive class
model.compile(
    optimizer='adam',
    loss='binary_crossentropy',
    metrics=['accuracy']
)
```
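What the sigmoid output and the `binary_crossentropy` loss compute can be sketched in NumPy. This is a simplified version, assuming 0/1 labels and clipping probabilities away from 0 and 1 (the `eps` value is an assumption) to avoid log(0); Keras's own implementation differs in details.

```python
import numpy as np

def sigmoid(z):
    """Squash any real number into (0, 1) so it can be read as a probability."""
    return 1.0 / (1.0 + np.exp(-z))

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy: small when predictions agree with the 0/1 labels."""
    y_pred = np.clip(y_pred, eps, 1 - eps)
    return float(np.mean(-(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))))

y_true = np.array([1.0, 0.0, 1.0])
good = sigmoid(np.array([4.0, -4.0, 3.0]))  # confident and correct
bad = sigmoid(np.array([-4.0, 4.0, -3.0]))  # confident and wrong
print(binary_crossentropy(y_true, good), binary_crossentropy(y_true, bad))
```

Confident wrong answers are penalized far more heavily than confident right ones, which is what pushes the sigmoid output toward the correct side of 0.5.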

Multi-Class Classifier

Input Layer → Hidden Layer(s) (ReLU) → Output Layer (Softmax, one node per class)

```python
from keras.models import Sequential
from keras.layers import Dense

model = Sequential()
model.add(Dense(20, activation='relu', input_dim=10))
model.add(Dense(20, activation='relu'))
model.add(Dense(20, activation='relu'))
model.add(Dense(5, activation='softmax'))  # one probability per class (5 classes)
model.compile(
    optimizer='adam',
    loss='categorical_crossentropy',  # expects one-hot encoded labels
    metrics=['accuracy']
)
```
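`categorical_crossentropy` scores a softmax output by how much probability it gives to the true class. A minimal NumPy sketch, assuming one-hot labels and a clipping `eps` chosen for illustration; the probabilities below are made up. Keras also offers `sparse_categorical_crossentropy`, which accepts integer labels instead of one-hot rows.

```python
import numpy as np

def categorical_crossentropy(y_true_one_hot, probs, eps=1e-7):
    """Mean categorical cross-entropy: -log of the probability assigned to the true class."""
    probs = np.clip(probs, eps, 1.0)
    return float(np.mean(-np.sum(y_true_one_hot * np.log(probs), axis=-1)))

# Two samples, 5 classes (matching the 5-node softmax layer above); labels are one-hot
y_true = np.array([[0, 0, 1, 0, 0],
                   [1, 0, 0, 0, 0]], dtype=float)
probs = np.array([[0.05, 0.05, 0.80, 0.05, 0.05],
                  [0.60, 0.10, 0.10, 0.10, 0.10]])
loss = categorical_crossentropy(y_true, probs)
print(round(loss, 4))
```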

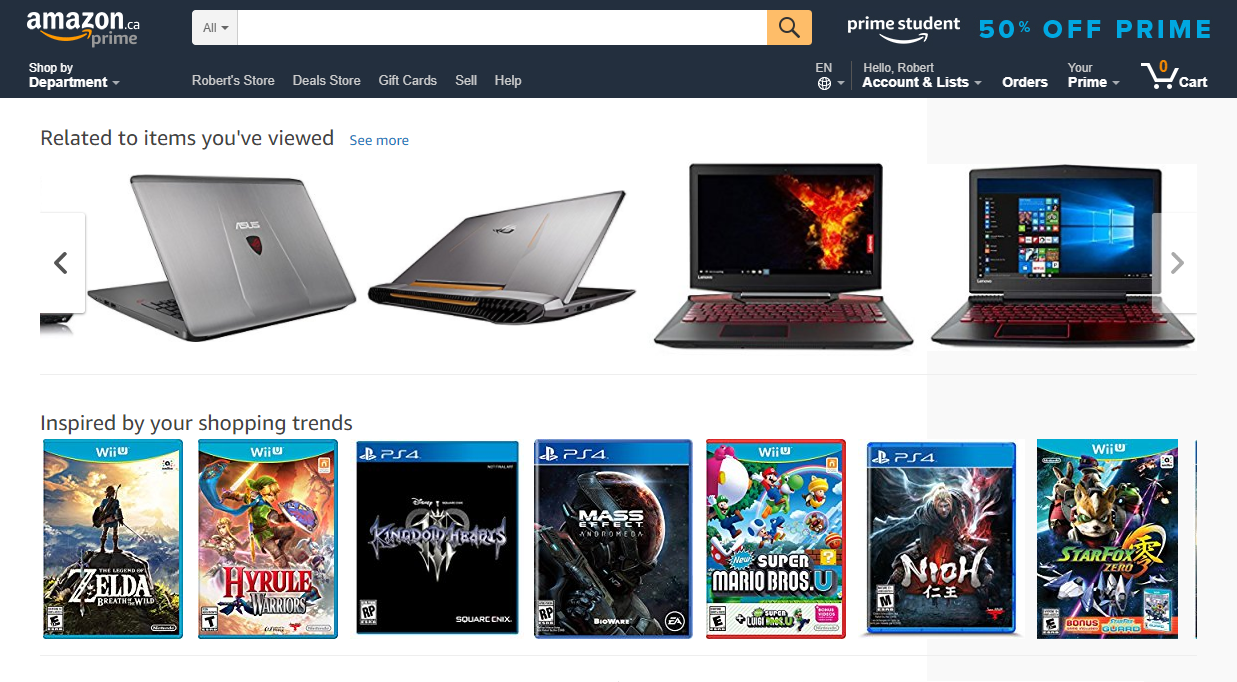

Network Optimizing Techniques (Recap)

Improve Data

- Clean data

- Get more data

- Generate more data

- Remove unnecessary inputs

- Normalize data

- Use validation split

- Make sure test/validation data is representative of real-world data

Improve Algorithm

- Change number of layers

- Change number of nodes in each layer

- Change number of epochs / batch size

Takeaways

- Visualize everything

- Measure your accuracy

- Try things until your accuracy improves

Resources

Machine Learning Mastery

Datacamp

Rob McDiarmid

tinyurl.com/fitc-nn

@robianmcd

Neural Nets for Devs Ep1

By Rob McDiarmid
