Agenda

- Introduction to DevSecOps
- Demystifying Basics of Docker & Kubernetes
- Terrascan - Cloud Native Security Tool

tools

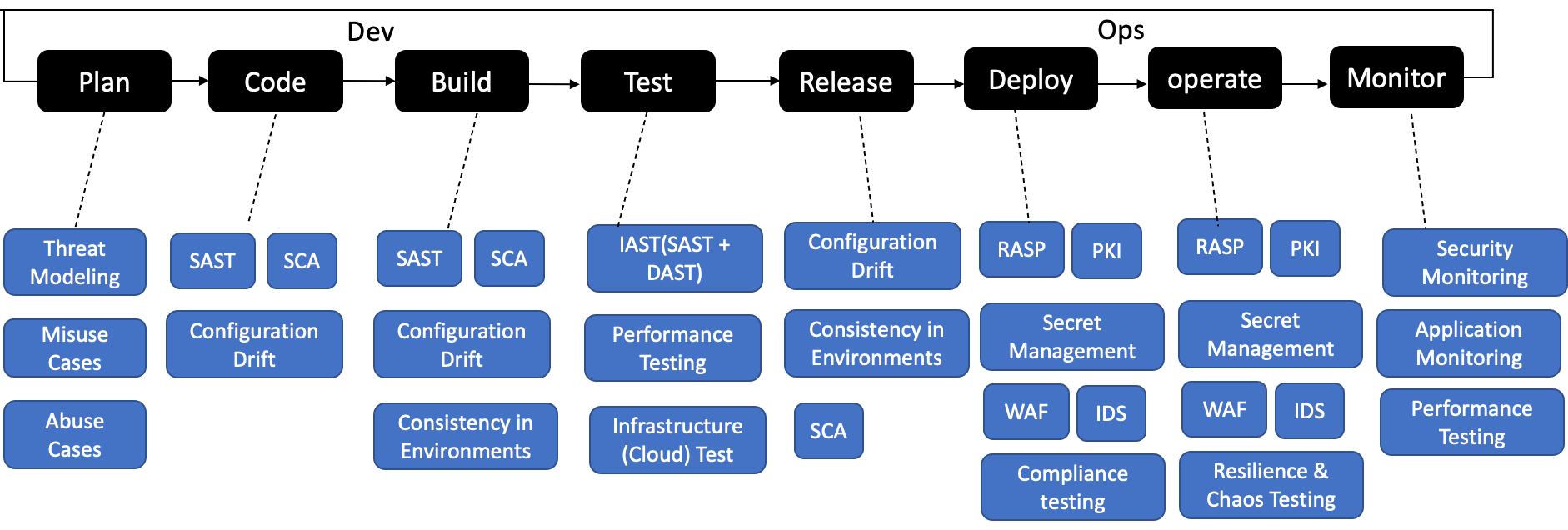

DevSecOps

skills

Culture

Development software releases & updates

Operation Reliability performance scaling

Security Reliability performance scaling

DevSecOps

- Integrate Security Tools
- Create Security as Code Culture
- Promote Cross

Secure Software Development Lifecycle

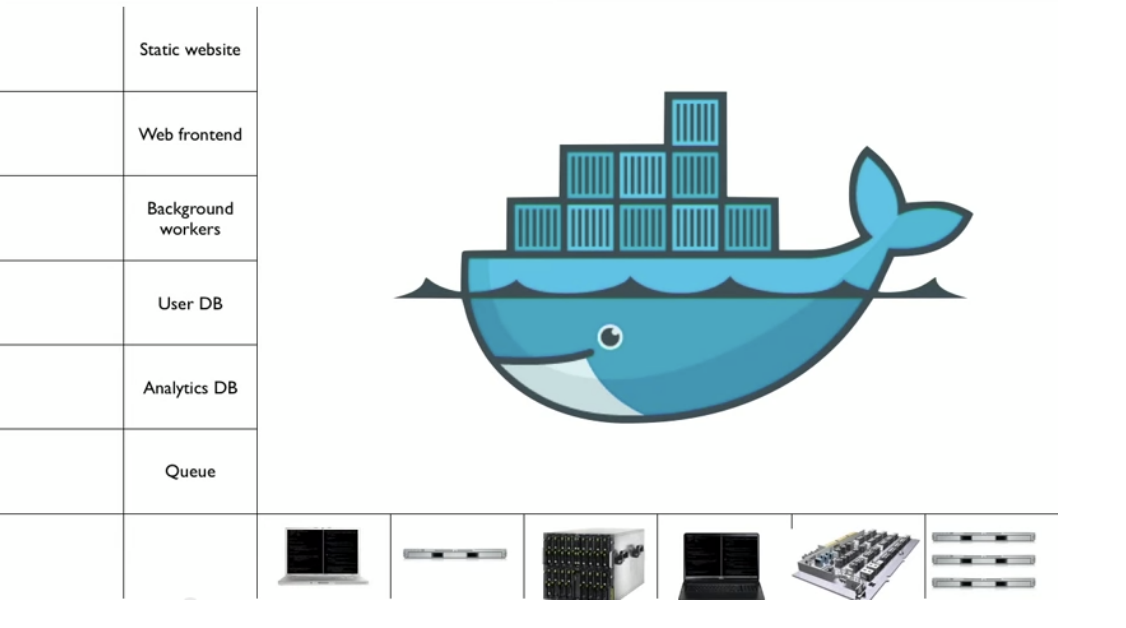

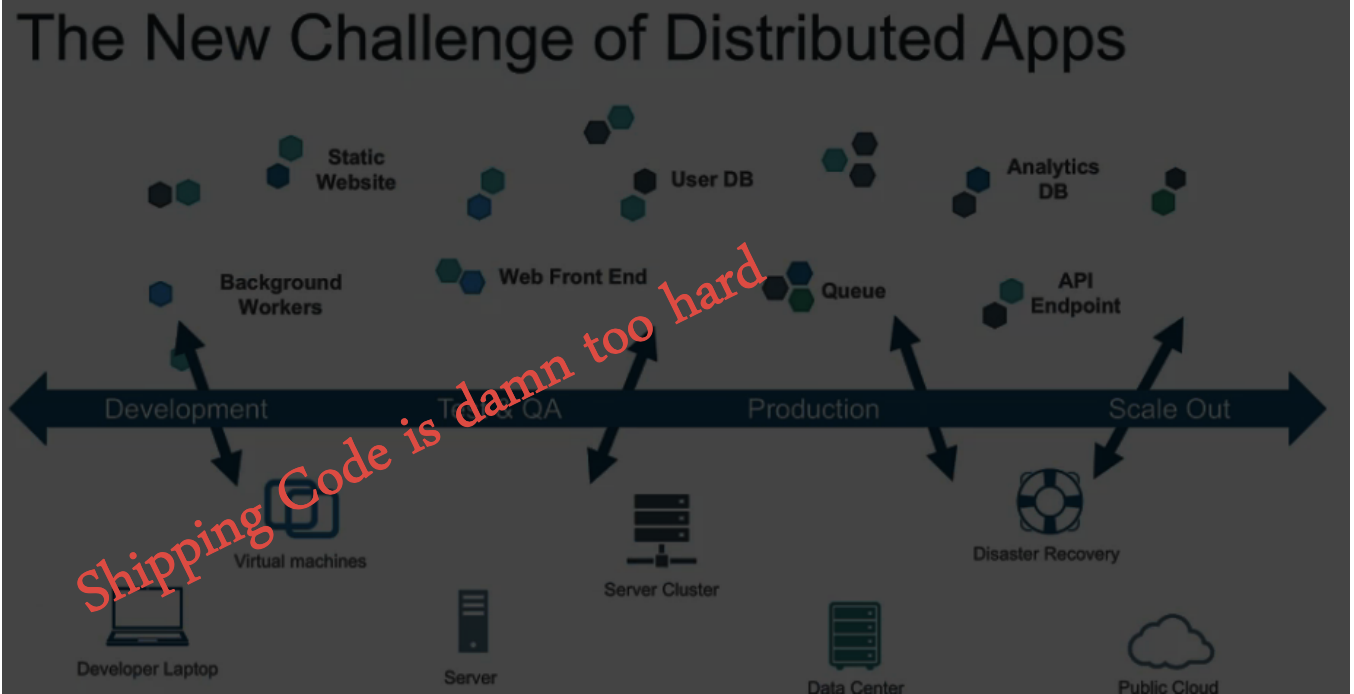

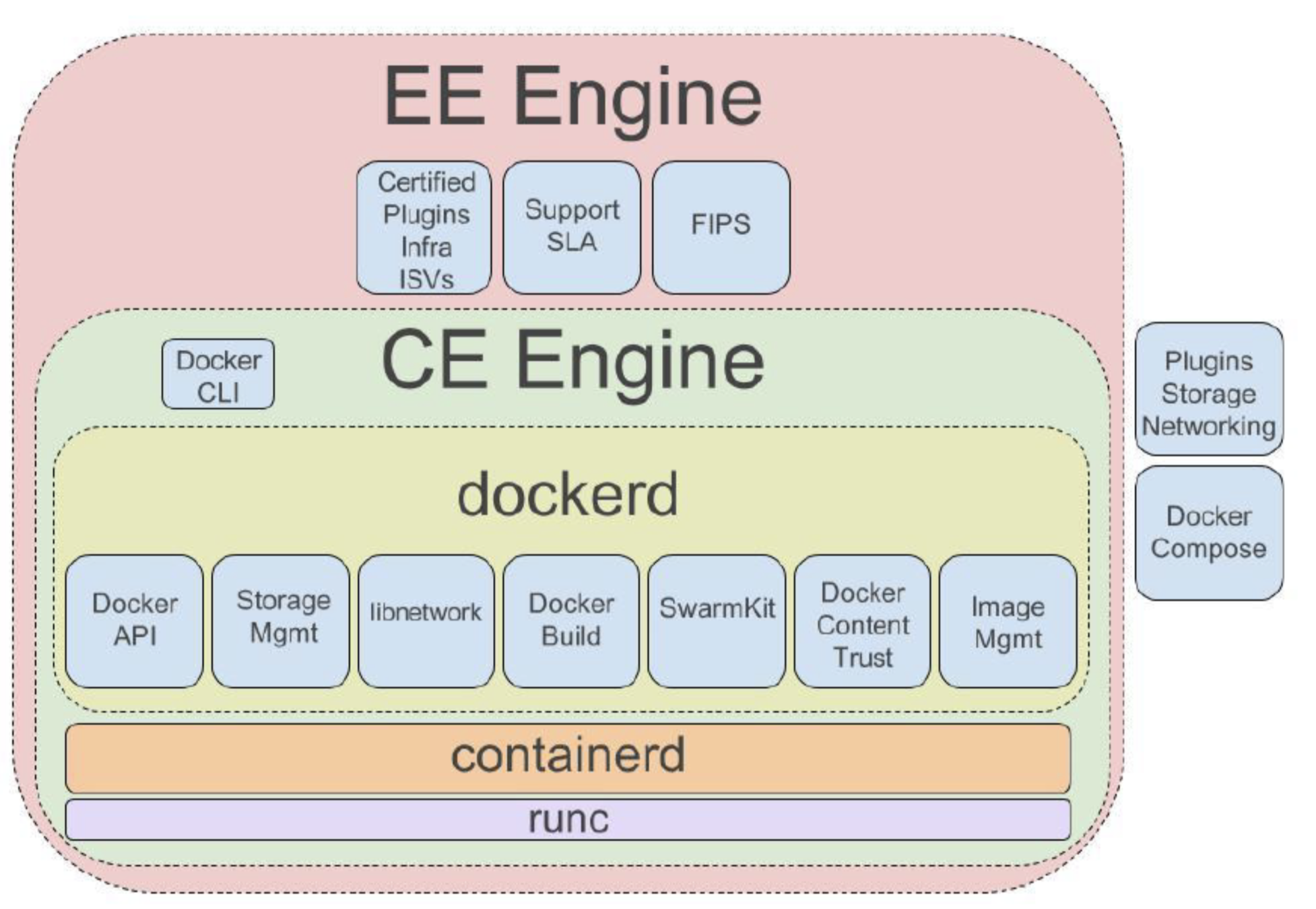

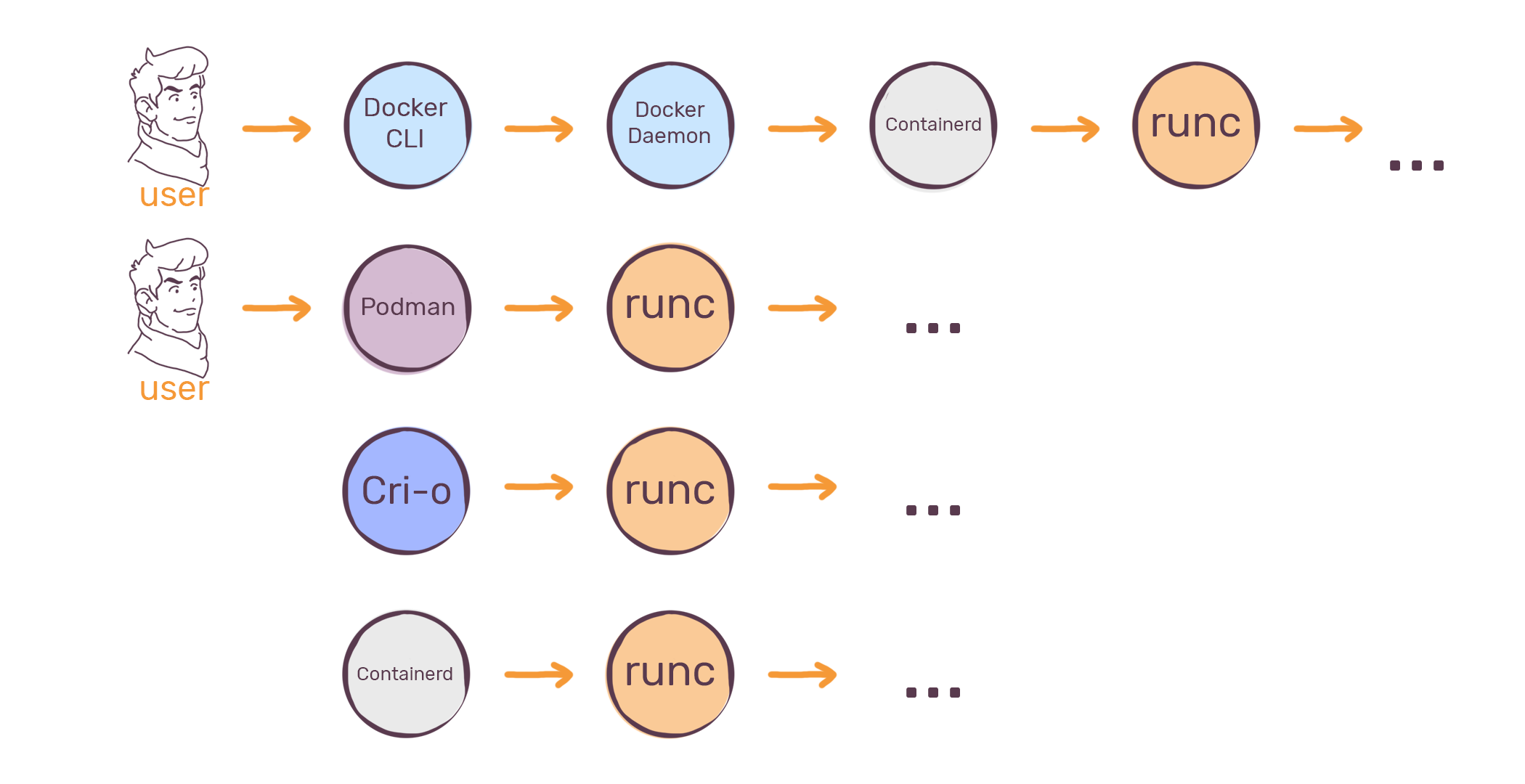

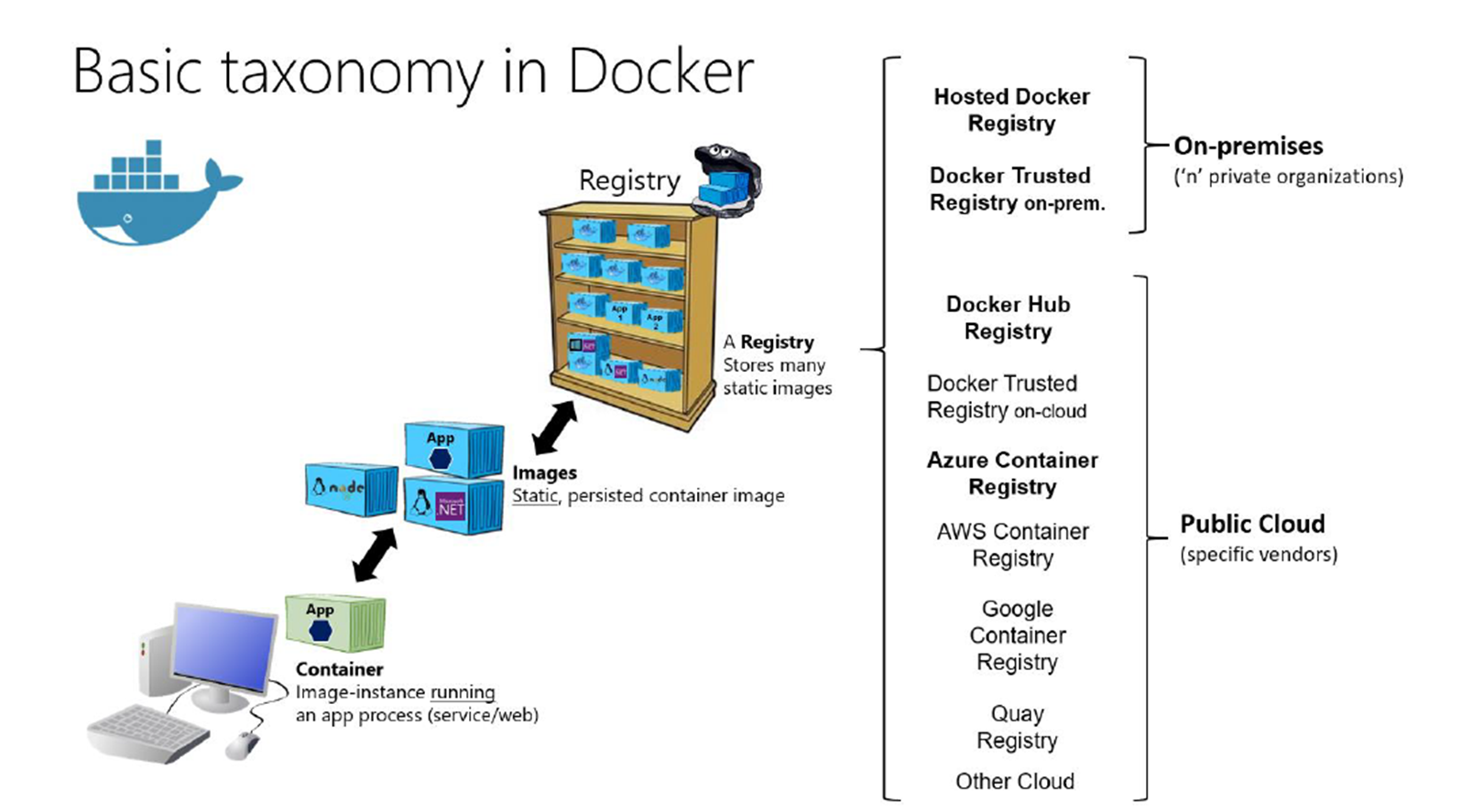

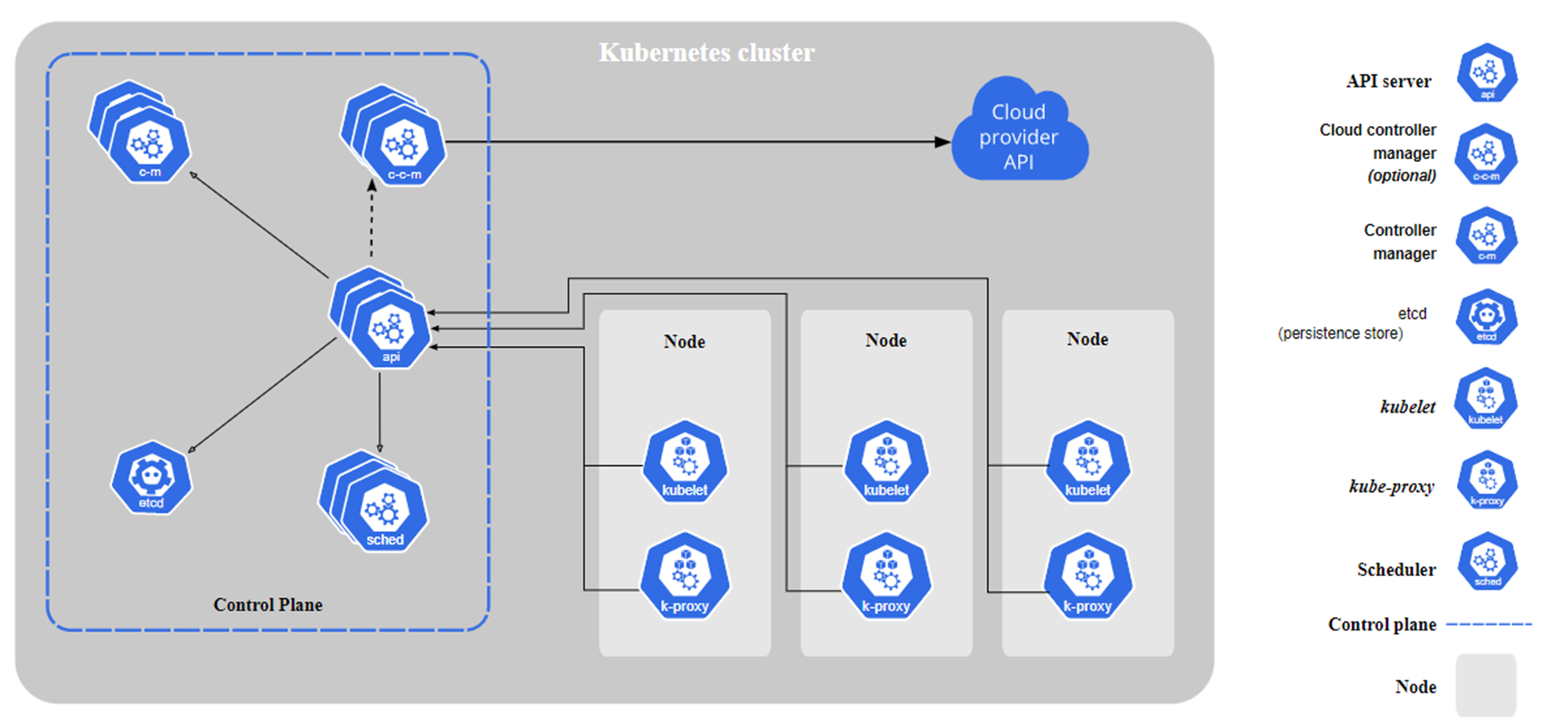

Docker

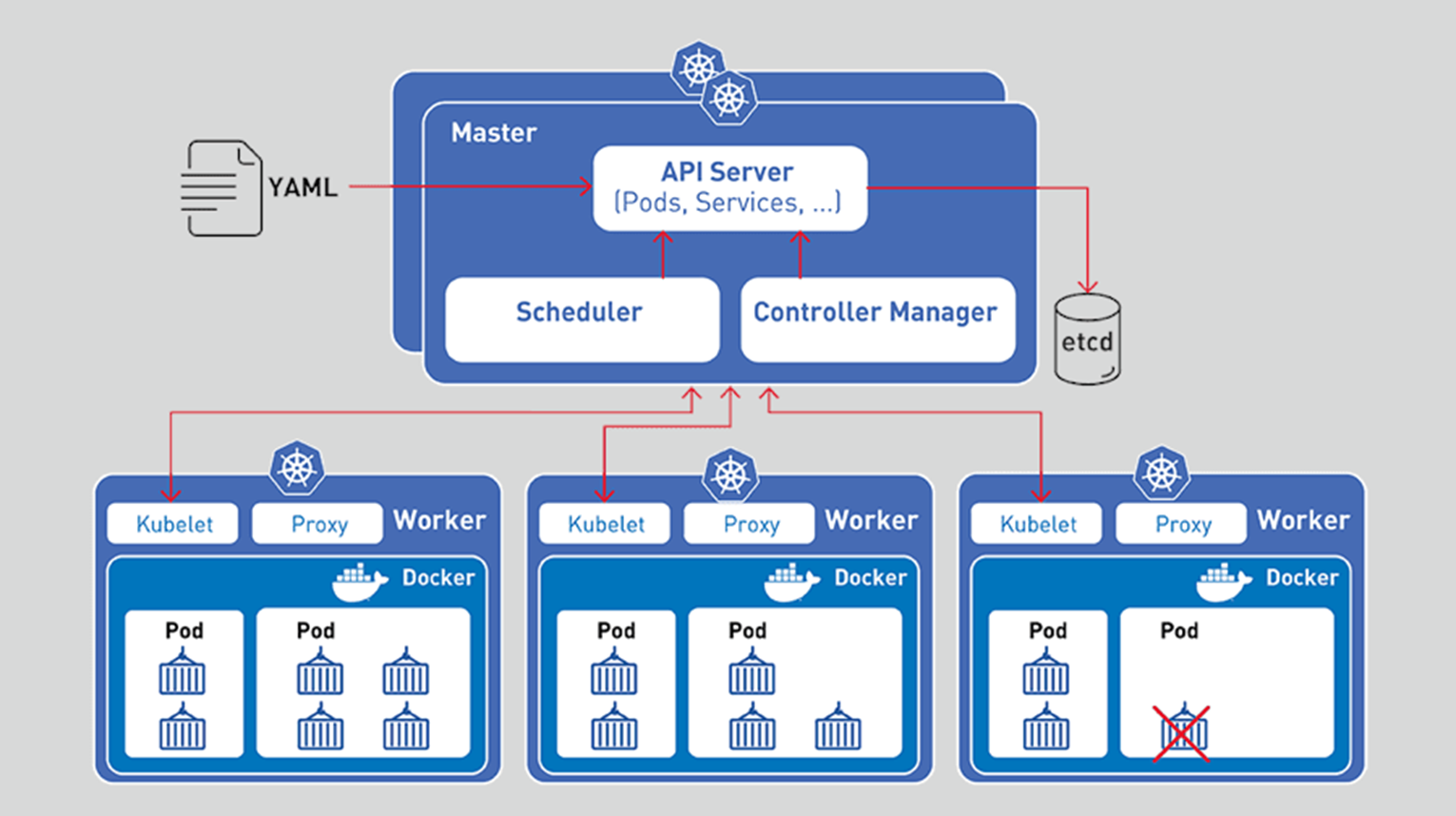

Kubernetes

Terrascan - Cloud Native Security Tool

Key features

- 500+ Policies for security best practices

- Scanning of Terraform (HCL2)

- Scanning of Kubernetes (JSON/YAML), Helm v3, and Kustomize

- Scanning of Dockerfiles

- Support for AWS, Azure, GCP, Kubernetes, Dockerfile, and GitHub

- Integrates with Docker image vulnerability scanning for AWS, Azure, GCP, and Harbor container registries.

Support the project by giving it a GitHub star!

Demo:

K8s Creating Pod - Declarative Way (With YAML File)

apiVersion: v1
kind: Pod                  # type of K8s object: Pod
metadata:
  name: firstpod           # name of the pod
  labels:
    app: frontend          # label the pod with "app: frontend"
spec:
  containers:
  - name: nginx
    image: nginx:latest    # image name:tag; nginx is pulled from Docker Hub
    ports:
    - containerPort: 80    # port exposed by the container
    env:                   # environment variables
    - name: USER
      value: "username"

K8s Creating Pod - Imperative Way

minikube start
kubectl run firstpod --image=nginx --restart=Never
kubectl get pod -o wide
kubectl describe pods firstpod
kubectl logs firstpod
kubectl exec firstpod -- hostname
kubectl exec firstpod -- ls
kubectl get pods -o wide
kubectl delete pods firstpod

Multi-Container Pod, Init Container

- Best practice: normally one container runs in one Pod, because the Pod is the smallest deployable unit in K8s (Pods are what get scaled up/down).
- Multiple containers run in the same Pod when they depend on each other.

Containers in the same Pod have the following properties:

- They run on the same Node.
- They are created and deleted together, as one unit.
- They share one network namespace and communicate with each other over localhost; there is no network isolation between them.
- They can mount a shared volume and access the same files in it.

apiVersion: v1
kind: Pod
metadata:
  name: initcontainerpod
spec:
  containers:
  - name: appcontainer     # starts only after initcontainer has completed successfully
    image: busybox
    command: ['sh', '-c', 'echo The app is running! && sleep 3600']
  initContainers:
  - name: initcontainer    # runs first; every 2 seconds it checks whether myservice resolves, and exits once it does
    image: busybox
    command: ['sh', '-c', "until nslookup myservice; do echo waiting for myservice; sleep 2; done"]

# save as service.yaml and apply it after creating the pod

apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376

apiVersion: v1
kind: Pod
metadata:
  name: multicontainer
spec:
  containers:
  - name: webcontainer          # container name: webcontainer
    image: nginx                # image: nginx
    ports:                      # open port 80
    - containerPort: 80
    volumeMounts:
    - name: sharedvolume
      mountPath: /usr/share/nginx/html   # path in the container
  - name: sidecarcontainer
    image: busybox              # sidecar: the second container uses the busybox image
    command: ["/bin/sh"]        # every 15 seconds, it pulls index.html from GitHub into the shared volume
    args: ["-c", "while true; do wget -O /var/log/index.html https://raw.githubusercontent.com/sangam14/bugtroncon-demo/main/index.html; sleep 15; done"]
    volumeMounts:
    - name: sharedvolume
      mountPath: /var/log
  volumes:                      # emptyDir is a temporary volume: it is deleted together with the pod
  - name: sharedvolume          # name of the volume
    emptyDir: {}                # creates an empty directory on the node where the pod runs

kubectl apply -f multicontainer.yaml

kubectl get pods
kubectl get pods -w     # watch the multicontainer pod start (webcontainer and sidecarcontainer)

Connect to /bin/sh in the web container and install net-tools to show the network interface:

kubectl exec -it multicontainer -c webcontainer -- /bin/sh
# apt update
# apt install net-tools
# ifconfig

You can check the same in sidecarcontainer (both containers see the same interface, because they share the Pod's network):

kubectl exec -it multicontainer -c sidecarcontainer -- /bin/sh
kubectl logs -f multicontainer -c sidecarcontainer
kubectl port-forward pod/multicontainer 8080:80

kubectl delete -f multicontainer.yaml

Label & Selector, Annotation, Namespace

apiVersion: v1
kind: Pod
metadata:
  name: firstpod
  labels:
    app: frontend
    tier: frontend
    mycluster.local/team: team1
spec:
  containers:
  - name: nginx
    image: nginx:latest
    ports:
    - containerPort: 80
    env:
    - name: USER
      value: "username"

Imperative way to add labels to a pod:

kubectl label pods pod1 team=development           # add label team=development to pod1
kubectl get pods --show-labels
kubectl label pods pod1 team-                      # remove the "team" label (key:value) from pod1
kubectl label --overwrite pods pod1 team=test      # overwrite/change a label on pod1
kubectl label pods --all foo=bar                   # add label foo=bar to all pods in the current namespace

Selector

We can select/filter pods by label with kubectl:

kubectl get pods -l "app=firstapp" --show-labels                 # equality-based selector
kubectl get pods -l "app=firstapp,tier=frontend" --show-labels   # comma means AND
kubectl get pods -l "app=firstapp,tier!=frontend" --show-labels  # != excludes a value
kubectl get pods -l "app,tier=frontend" --show-labels            # "app" alone means the label exists (any value)
kubectl get pods -l "app in (firstapp)" --show-labels            # set-based selector
kubectl get pods -l "app not in (firstapp)" --show-labels        # set-based selector; also matches pods with no app label
kubectl get pods -l "app=firstapp,app=secondapp" --show-labels   # comma means AND => firstapp AND secondapp, which no single pod can satisfy
kubectl get pods -l "app in (firstapp,secondapp)" --show-labels  # set-based: firstapp OR secondapp
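Inside manifests, set-based selectors are written with `matchExpressions`. Below is a minimal sketch that assumes a hypothetical Deployment named `selectordemo`. Its selector combines an equality requirement (`tier: frontend`) with a set-based one (`app in (firstapp, secondapp)`). All requirements are ANDed, just like the comma in the `-l` flag.

```yaml
# Hypothetical Deployment "selectordemo" illustrating matchLabels + matchExpressions
apiVersion: apps/v1
kind: Deployment
metadata:
  name: selectordemo
spec:
  replicas: 2
  selector:
    matchLabels:
      tier: frontend            # equality-based: tier=frontend
    matchExpressions:           # set-based requirements, ANDed with matchLabels
    - key: app
      operator: In              # app in (firstapp, secondapp)
      values: ["firstapp", "secondapp"]
  template:
    metadata:
      labels:                   # must satisfy every requirement of the selector above
        app: firstapp
        tier: frontend
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
```

The pod template labels must satisfy the selector. If they don't, the API server rejects the Deployment.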

NodeSelector

apiVersion: v1
kind: Pod
metadata:
  name: firstpod
  labels:
    app: frontend
spec:
  containers:
  - name: nginx
    image: nginx:latest
    ports:
    - containerPort: 80
  nodeSelector:               # schedule only on nodes labelled hddtype=ssd
    hddtype: ssd

kubectl apply -f podnode.yaml
kubectl get pods -w           # keep watching: the pod stays Pending until a node matches
kubectl label nodes minikube hddtype=ssd
# after the node is labelled hddtype=ssd, the pod can be scheduled on it

Annotation

apiVersion: v1
kind: Pod
metadata:
  name: annotationpod
  annotations:
    owner: "sangam"
    notification-email: "owner@email.com"
    releasedate: "1.1.2022"
    nginx.ingress.kubernetes.io/force-ssl-redirect: "true"
spec:
  containers:
  - name: annotationcontainer
    image: nginx:latest
    ports:
    - containerPort: 80
    env:
    - name: USER
      value: "username"

kubectl apply -f podannotation.yaml
kubectl describe pod annotationpod
kubectl annotate pods annotationpod foo=bar   # imperative way
kubectl delete -f podannotation.yaml

- Namespaces provide a mechanism for isolating groups of resources within a single cluster; they provide a scope for names.
- Namespaces cannot be nested inside one another, and each namespaced Kubernetes resource can belong to only one namespace.
- kubectl commands run against the default namespace unless a namespace is specified in the command.

kubectl get pods --namespace kube-system     # get all pods in the kube-system namespace
kubectl get pods --all-namespaces            # get pods from all namespaces
kubectl create namespace development         # create a new development namespace, imperatively
kubectl get pods -n development              # get pods in the development namespace

apiVersion: v1
kind: Namespace
metadata:
  name: development
---
apiVersion: v1
kind: Pod
metadata:
  namespace: development
  name: namespacepod
spec:
  containers:
  - name: namespacecontainer
    image: nginx:latest
    ports:
    - containerPort: 80

kubectl apply -f namespace.yaml
kubectl get pods -n development                          # get pods in the development namespace
kubectl exec -it namespacepod -n development -- /bin/sh  # open a shell in namespacepod in the development namespace

We can avoid passing -n to every command by changing the default namespace:

kubectl config set-context --current --namespace=development   # now the default namespace is development
kubectl get pods                                               # returns pods in the development namespace
kubectl config set-context --current --namespace=default       # switch back to the default namespace
kubectl delete namespaces development                          # delete the development namespace

- A Deployment provides declarative updates for Pods and ReplicaSets.
- We define the desired state in the Deployment; the Deployment controller compares it with the actual state and takes the necessary actions to maintain the desired state.
- A Deployment is a higher-level K8s object that automatically controls and maintains the state of one or more Pods (through a ReplicaSet).

kubectl create deployment firstdeployment --image=nginx:latest --replicas=2
kubectl get deployments
kubectl get pods -w                      # in another terminal
kubectl delete pods <oneofthepodname>    # in the other terminal, a new pod is created to keep 2 replicas
kubectl scale deployments firstdeployment --replicas=5

kubectl delete deployments firstdeployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: firstdeployment
  labels:
    team: development
spec:
  replicas: 3
  selector:              # deployment selector
    matchLabels:         # the deployment selects, monitors, and tracks pods labelled "app: frontend"
      app: frontend      # if one of these pods is killed, K8s compares with the desired state (replicas: 3) and recreates a pod to keep the replica count
  template:
    metadata:
      labels:            # pod labels; the deployment tracks pods whose labels match its selector
        app: frontend    # key: value
    spec:
      containers:
      - name: nginx
        image: nginx:latest   # image pulled from Docker Hub
        ports:
        - containerPort: 80   # port exposed by the container

kubectl apply -f deployment1.yaml
kubectl get pods
kubectl delete pods <podname>
kubectl get pods
kubectl scale deployments firstdeployment --replicas=5
kubectl get deployments
kubectl scale deployments firstdeployment --replicas=3
kubectl get pods -o wide
kubectl exec -it <podname> -- bash
# apt update
# apt install net-tools
# apt install iputils-ping
# ifconfig
kubectl port-forward <podname> 8085:80
kubectl delete -f deployment1.yaml
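Port-forwarding reaches one pod at a time. A Service can select the Deployment's pods by the same `app: frontend` label and give them a single stable address. Below is a minimal sketch that assumes a hypothetical Service named `frontendservice`, applied while `firstdeployment` is still running:

```yaml
# Hypothetical Service "frontendservice" selecting the firstdeployment pods by label
apiVersion: v1
kind: Service
metadata:
  name: frontendservice
spec:
  type: ClusterIP              # reachable inside the cluster only
  selector:
    app: frontend              # same label as the Deployment's pod template
  ports:
  - protocol: TCP
    port: 80                   # port the Service listens on
    targetPort: 80             # containerPort of the nginx pods
```

Running `kubectl port-forward service/frontendservice 8085:80` would then forward to one of the selected pods.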

Rollout - Rollback

apiVersion: apps/v1
kind: Deployment
metadata:
  name: rcdeployment
  labels:
    team: development
spec:
  replicas: 5               # create 5 replicas
  selector:
    matchLabels:            # label selector of the deployment: selects pods labelled "app: recreate"
      app: recreate
  strategy:                 # update strategy: Recreate => delete all existing pods first, then create new pods from scratch
    type: Recreate
  template:
    metadata:
      labels:               # label the pods with "app: recreate"
        app: recreate
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80

kubectl apply -f recreate-deployment.yaml
kubectl get pods
kubectl get rs
kubectl set image deployment rcdeployment nginx=httpd
kubectl get pod
kubectl get rs
kubectl delete -f recreate-deployment.yaml
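The next commands apply `rolling-deployment.yaml`, but the slides don't show its contents. Below is a minimal sketch of what it could look like. It assumes the Deployment name `rolldeployment` and the container name `nginx` that the commands use. The replica count, labels, and `maxSurge`/`maxUnavailable` values are illustrative choices, not the original file.

```yaml
# rolling-deployment.yaml (sketch; values other than the names are assumptions)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rolldeployment          # name used by the kubectl commands
  labels:
    team: development
spec:
  replicas: 5
  selector:
    matchLabels:
      app: rolling
  strategy:
    type: RollingUpdate         # replace pods gradually instead of all at once
    rollingUpdate:
      maxUnavailable: 1         # at most 1 pod below the desired count during an update
      maxSurge: 1               # at most 1 extra pod above the desired count
  template:
    metadata:
      labels:
        app: rolling            # must match spec.selector.matchLabels
    spec:
      containers:
      - name: nginx             # container name targeted by "kubectl set image"
        image: nginx
        ports:
        - containerPort: 80
```

`Recreate` deletes every pod before creating new ones. `RollingUpdate` replaces pods gradually, so some pods keep serving during the update. Each change to the pod template creates a new ReplicaSet and revision, and `kubectl rollout history` lists them.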

kubectl apply -f rolling-deployment.yaml --record
kubectl get pods
kubectl get rs
kubectl edit deployment rolldeployment --record
kubectl get pods
kubectl get rs
kubectl set image deployment rolldeployment nginx=httpd:alpine --record
kubectl rollout history deployment rolldeployment
kubectl rollout undo deployment rolldeployment     # roll back to the previous revision
kubectl delete -f rolling-deployment.yaml

Terrascan - Cloud Native Security Tool

Install Terrascan (the download below fetches the macOS x86_64 build; pick the release asset that matches your OS and architecture)

$ curl -L "$(curl -s https://api.github.com/repos/accurics/terrascan/releases/latest | grep -o -E "https://.+?_Darwin_x86_64.tar.gz")" > terrascan.tar.gz
$ tar -xf terrascan.tar.gz terrascan && rm terrascan.tar.gz
$ install terrascan /usr/local/bin && rm terrascan
$ terrascan

Install Terrascan via Homebrew

$ brew install terrascan

Docker Image

$ docker run accurics/terrascan
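The same image can run Terrascan inside a CI/CD pipeline. Below is a minimal GitHub Actions sketch. It assumes:

- a hypothetical workflow file `.github/workflows/terrascan.yaml`;
- Kubernetes manifests somewhere in the repository;
- an image whose entrypoint is the `terrascan` binary, as the `docker run accurics/terrascan` command above suggests.

```yaml
# .github/workflows/terrascan.yaml (hypothetical file name)
name: terrascan-iac-scan
on:
  push:
    branches: [main]
  pull_request:
jobs:
  terrascan:
    runs-on: ubuntu-latest
    steps:
    - name: Check out the repository
      uses: actions/checkout@v4
    - name: Scan Kubernetes manifests with Terrascan
      # Mounts the checked-out repo into the container and scans it as k8s IaC.
      # Assumption: the image's entrypoint is the terrascan binary.
      run: |
        docker run --rm -v "${{ github.workspace }}:/iac" accurics/terrascan \
          scan -i k8s -d /iac
```

Terrascan is expected to exit with a non-zero status when it reports policy violations, which fails the job. `-i` selects the IaC type (for example `k8s`, `terraform`, `helm`, or `docker`) and `-d` selects the directory to scan.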

Figure: where Terrascan fits.

- Pipeline: commit code → source code repository (pre-commit) → CI/CD pipeline (GitHub Actions) → push images → container registry.
- Atlantis: Terraform pull request automation.
- GitOps: a GitOps repo syncs changes (pre-sync hook) into the test, dev, and prod GitOps namespaces of the Kubernetes cluster, which pulls images from the registry.
- Admission control: deployment creation request → Kubernetes API → validating admission webhook (Terrascan as server, custom security policies) → validation decision → persisted to etcd (if valid) → Kubernetes API response.
- Terrascan runs as a CLI or as a server.
- Inputs: Dockerfile, Kubernetes, Helm, Kustomize, Terraform.
- Integrations: GitHub, Slack notifications.
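For the admission-controller flow, the Kubernetes side is a `ValidatingWebhookConfiguration` that sends matching requests to Terrascan running as a server. Below is a minimal sketch that assumes a Terrascan server reachable over HTTPS. The webhook name, host name, and API key are hypothetical placeholders. The URL path is an assumption based on Terrascan's admission-webhook documentation, so verify it against your version.

```yaml
# Sketch of a validating webhook that forwards Deployment create/update requests to Terrascan
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  name: terrascan-validating-webhook          # hypothetical name
webhooks:
- name: validate.terrascan.example.com        # hypothetical; must be a fully qualified name
  admissionReviewVersions: ["v1"]
  sideEffects: None
  failurePolicy: Fail                         # reject matching requests if the webhook is unreachable
  timeoutSeconds: 10
  rules:
  - apiGroups: ["apps"]
    apiVersions: ["v1"]
    operations: ["CREATE", "UPDATE"]
    resources: ["deployments"]
  clientConfig:
    # Assumed endpoint pattern; replace <TERRASCAN_HOST> and <API_KEY>.
    # Add caBundle if the server certificate is not signed by a trusted CA.
    url: "https://<TERRASCAN_HOST>:9010/v1/k8s/webhooks/<API_KEY>/scan/validate"
```

`failurePolicy: Fail` rejects matching requests whenever the webhook is unreachable. That is safer, but it can block deployments if the Terrascan server is down. `Ignore` is the permissive alternative.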

$ terrascan scan -i <IaC type> --find-vuln
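To try the scan on something concrete, below is a hypothetical pod, `hardenedpod`, that applies several commonly recommended hardening settings:

- a non-root user;
- no privilege escalation;
- a read-only root filesystem;
- dropped capabilities;
- resource limits;
- a pinned image tag instead of `:latest`.

Which of these Terrascan actually checks depends on its policy set and version. Treat this as a sketch built on assumptions, not a list of its rules.

```yaml
# Hypothetical pod "hardenedpod" following common Kubernetes hardening practices
apiVersion: v1
kind: Pod
metadata:
  name: hardenedpod
spec:
  securityContext:
    runAsNonRoot: true             # kubelet refuses to start a container running as UID 0
    runAsUser: 1000                # arbitrary non-root UID (assumption)
  containers:
  - name: app
    image: busybox:1.36            # pinned tag instead of :latest
    command: ["sh", "-c", "echo hardened && sleep 3600"]
    securityContext:
      privileged: false
      allowPrivilegeEscalation: false
      readOnlyRootFilesystem: true
      capabilities:
        drop: ["ALL"]              # drop all Linux capabilities
    resources:
      requests:
        cpu: 50m
        memory: 32Mi
      limits:
        cpu: 100m
        memory: 64Mi
```

Saved as `hardenedpod.yaml`, it could be scanned with `terrascan scan -i k8s -f hardenedpod.yaml`. The results could then be compared with a scan of the earlier `firstpod` manifest, which uses `nginx:latest` and sets no `securityContext`.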

Thank You!

Demo code: https://github.com/sangam14/bugtroncon-demo

Introduction to DevSecOps, K8s, Docker, Terrascan

By Sangam Biradar
