Scaling Ethereum 2.0

Saulius Grigaitis

The Scaling Problem

- Most popular blockchains handle only 7-15 transactions/second (Visa: ~4,000 transactions/second)

- How can we reach performance close to the traditional systems while maintaining properties found among various blockchains:

- Decentralized

- Censorship-resistant

- Fault-tolerant

- Tamper-resistant

- Ability to run on commodity devices

...

A Few Ways to Solve the Scaling Problem

- Larger blocks - simply increase the block size to make room for more transactions

- Sharding - transactions distributed across parallel chains that execute transactions separately

- Off-chain execution - probably the most realistic short-term solution, with multiple competing proposals (State Channels, Plasma, Rollups, etc.)

Larger Blocks

- Increases processing time

- Increases storage requirements

- Increases network load

- Increases forking

All this leads to higher centralization, because full nodes (supernodes) require high-end hardware and infrastructure. Not considered a proper solution.

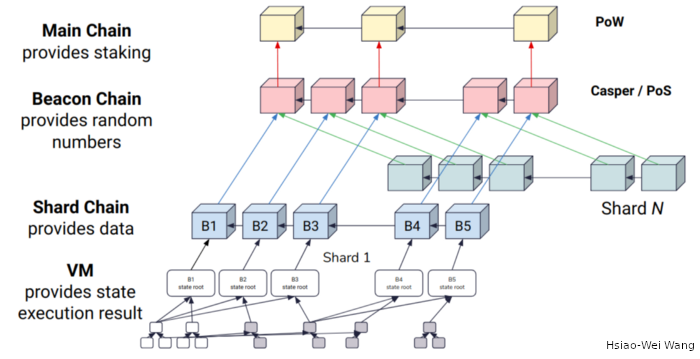

Sharding

(Initial Ethereum 2.0 Plan)

Sharding

- The idea is relatively simple: split transactions across multiple shard chains. However, this approach has several complicated problems, such as:

- Atomic cross-shard transactions (especially for complex smart contracts) are complicated; some workarounds:

- Design the use-case on a single shard

- Move assets to the destination shard and execute transactions in later blocks

- Risk of accepting an invalid chain (the main benefit of sharding is that no node needs to verify the data of all shards)


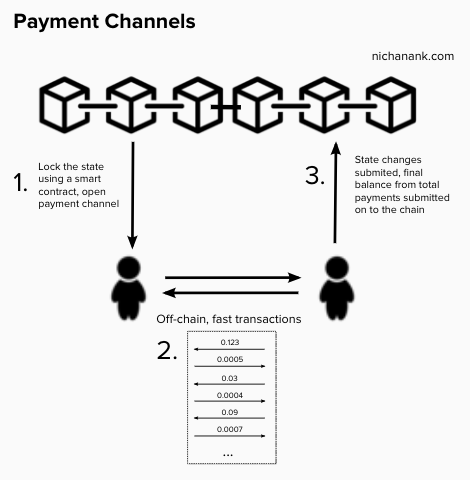

State Channels

- Participants must deposit

- Bidirectional payments

- Composition (A -> B -> C)

- Can't send payments off-chain to non-participants

- Transaction balances can't exceed deposits
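To make the deposit and balance constraints concrete, here is a minimal Python sketch of a two-party payment channel. It is a toy model under stated assumptions: the class `PaymentChannel`, its methods and the `nonce` field are hypothetical names, and the signed state updates and on-chain dispute contract that real channels need are omitted.

```python
from dataclasses import dataclass, field


@dataclass
class PaymentChannel:
    """Toy two-party channel (hypothetical model: no signatures, no on-chain contract)."""
    deposit_a: int
    deposit_b: int
    balance_a: int = field(init=False)
    balance_b: int = field(init=False)
    nonce: int = field(init=False, default=0)  # version of the latest agreed state

    def __post_init__(self) -> None:
        # Both participants lock their deposits on-chain when the channel opens.
        self.balance_a = self.deposit_a
        self.balance_b = self.deposit_b

    def pay(self, sender: str, amount: int) -> None:
        """Move `amount` off-chain from sender to the other participant."""
        if amount <= 0:
            raise ValueError("amount must be positive")
        if sender == "A":
            if amount > self.balance_a:
                raise ValueError("payment exceeds A's channel balance")
            self.balance_a -= amount
            self.balance_b += amount
        elif sender == "B":
            if amount > self.balance_b:
                raise ValueError("payment exceeds B's channel balance")
            self.balance_b -= amount
            self.balance_a += amount
        else:
            # Non-participants are not part of the channel state at all.
            raise ValueError("only channel participants can pay")
        self.nonce += 1

    def settle(self) -> tuple[int, int]:
        """Final balances that would be submitted on-chain when closing."""
        return self.balance_a, self.balance_b
```

Payments can flow in both directions, but the total never exceeds the combined deposits: opening with deposits 10 and 5, then A paying 7 and B paying 3, settles at `(6, 9)`.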

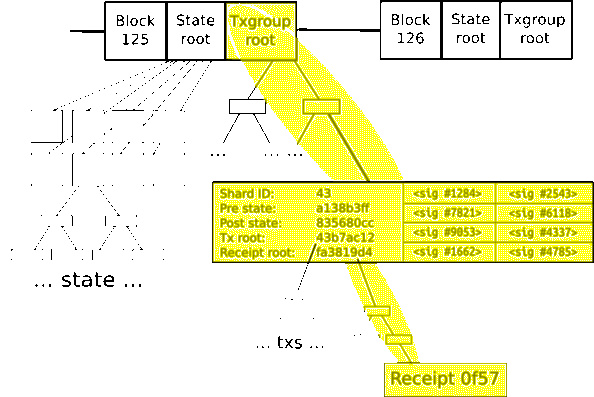

Plasma

- Transactions in off-chain Merkle trees

- Possible to send to non-participants

- Requires publishing a hash at regular intervals

- Somebody needs to challenge fraudulent transactions

- Long exit time (7-14 days)
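The periodically published hash is typically a Merkle root of the off-chain transactions, which lets a user later prove that a transaction was included. A minimal Python sketch of that idea follows; SHA-256, pairing an odd node with itself, and the function names are illustrative assumptions (it also skips leaf/node domain separation), not any specific Plasma variant's encoding.

```python
import hashlib


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Root of a binary Merkle tree; an odd node at any level is paired with itself."""
    if not leaves:
        raise ValueError("need at least one leaf")
    level = [sha256(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def merkle_proof(leaves: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the flag is True when the sibling is on the right."""
    level = [sha256(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        sibling = index ^ 1
        proof.append((level[sibling], sibling > index))
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return proof


def verify(leaf: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the path from the leaf and compare it with the published root."""
    node = sha256(leaf)
    for sibling, sibling_is_right in proof:
        node = sha256(node + sibling) if sibling_is_right else sha256(sibling + node)
    return node == root
```

Only the 32-byte root goes on-chain; `verify(txs[1], merkle_proof(txs, 1), merkle_root(txs))` holds for an included transaction and fails for a modified one.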

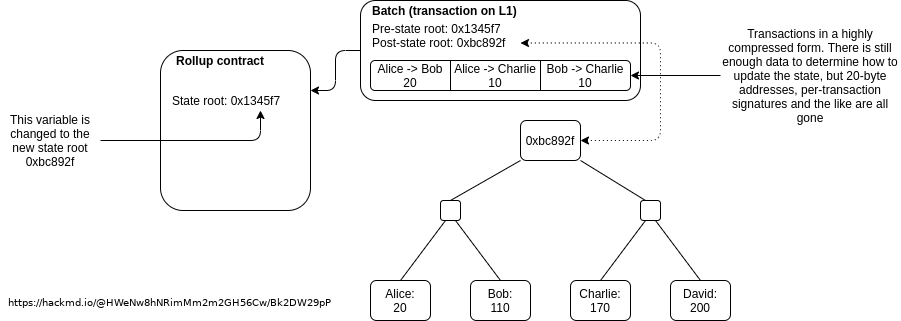

Rollups


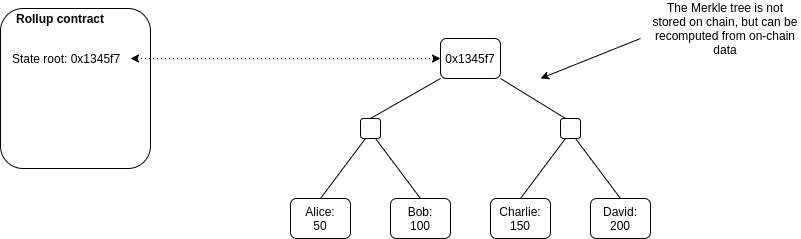

Rollups

- Hybrid approach - moves computation off-chain and keeps part of the data on-chain (the rest of the data can be recomputed from it)

- On-chain data is part of consensus, so anyone who wants to can verify it

- Speed-up is limited by the on-chain part (an ERC20 transfer costs ~150x less gas)

- Possible to send/receive assets from/to non-participants

- Two main types:

- Optimistic Rollups - use fraud proofs

- ZK-Rollups - use cryptographic validity proofs
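Because the transaction data is on-chain, any verifier can replay a batch and compare the resulting state root with the one the operator claimed; that is what a fraud proof relies on. The toy Python model below illustrates this for optimistic rollups. The flat SHA-256 hash standing in for a state Merkle root, the skip-invalid-transfers rule and all function names are simplifying assumptions, not any production rollup's design. A ZK-Rollup would instead attach a validity proof, so no re-execution by challengers is needed.

```python
import hashlib
import json

Balances = dict[str, int]
Transfer = tuple[str, str, int]  # (sender, recipient, amount)


def state_root(balances: Balances) -> str:
    """Commitment to the full state; a flat hash stands in for a Merkle root here."""
    encoded = json.dumps(balances, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


def apply_batch(balances: Balances, batch: list[Transfer]) -> Balances:
    """Off-chain execution that anyone holding the published batch data can rerun."""
    new = dict(balances)
    for sender, recipient, amount in batch:
        if amount <= 0 or new.get(sender, 0) < amount:
            continue  # invalid transfers are skipped deterministically
        new[sender] -= amount
        new[recipient] = new.get(recipient, 0) + amount
    return new


def is_fraudulent(pre_state: Balances, batch: list[Transfer], claimed_root: str) -> bool:
    """Fraud check: recompute the post-state from on-chain data and compare roots."""
    return state_root(apply_batch(pre_state, batch)) != claimed_root
```

For example, with `pre = {"alice": 10, "bob": 0}` and the batch `[("alice", "bob", 4)]`, an operator claiming the root of `{"alice": 0, "bob": 10}` would be caught by `is_fraudulent`.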

Optimistic Rollups

- Low complexity

- Easier generalization

- Long withdrawal period

- Lower fixed cost per batch (state root change)

- Higher per-transaction cost

ZK Rollups

- High complexity

- Harder generalization

- Short withdrawal period

- Higher fixed costs of batch (expensive ZK-SNARK computation)

- Lower per-transaction cost (no need to publish all data)
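The fixed-versus-per-transaction trade-off between the two rollup types can be made concrete with a simple amortized cost model: with fixed batch cost F and per-transaction cost c, a batch of n transactions costs F/n + c per transaction. The Python sketch below finds the batch size from which a ZK rollup becomes no more expensive. The model is an assumption (it ignores proving hardware, calldata pricing changes, etc.), and the gas figures in the usage note are purely hypothetical placeholders, not measured costs.

```python
from math import ceil


def amortized_cost(fixed_per_batch: float, per_tx: float, batch_size: int) -> float:
    """Average cost per transaction when one batch's fixed cost is shared by batch_size txs."""
    return fixed_per_batch / batch_size + per_tx


def break_even_batch_size(fixed_opt: float, per_tx_opt: float,
                          fixed_zk: float, per_tx_zk: float) -> int:
    """Smallest batch size at which the ZK rollup is no more expensive per transaction."""
    if per_tx_zk >= per_tx_opt:
        raise ValueError("model assumes ZK rollups have the lower per-transaction cost")
    # Solve fixed_zk/n + per_tx_zk <= fixed_opt/n + per_tx_opt for n.
    n = (fixed_zk - fixed_opt) / (per_tx_opt - per_tx_zk)
    return max(1, ceil(n))
```

With hypothetical values of 40,000 gas fixed and 1,000 gas per transaction for the optimistic rollup versus 500,000 and 500 for the ZK rollup, `break_even_batch_size(40_000, 1_000, 500_000, 500)` gives 920: smaller batches favor the optimistic design, larger ones the ZK design.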

Data Sharding for Rollups

- Full blockchain sharding (separate execution on shards) still has multiple unresolved problems

- Rollups are limited by the data available in a block (~25 kB in Ethereum 1.0)

- Simply increasing the data size of a block isn't a real solution

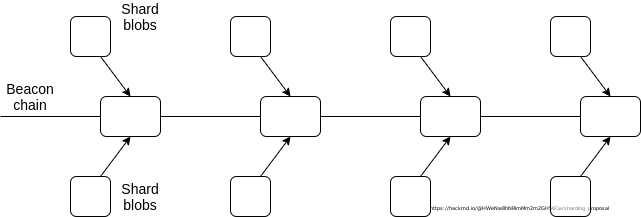

- Ethereum 2.0 proposes Data Sharding:

- Up to 16 megabytes per slot

- No node needs to download all the data

Data Sharding (DS)

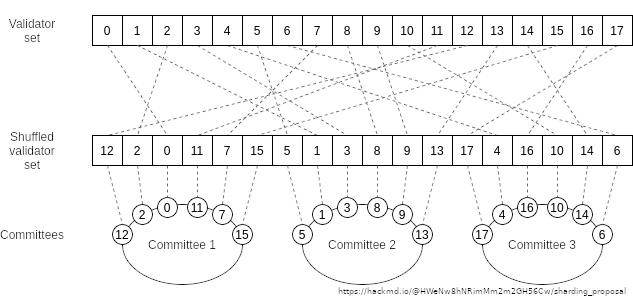

DS: Randomly Sampled Committees

- Each committee votes only for its assigned shard

- Validators are shuffled after their indexes are fixed, so it's very hard for an attacker to control a committee

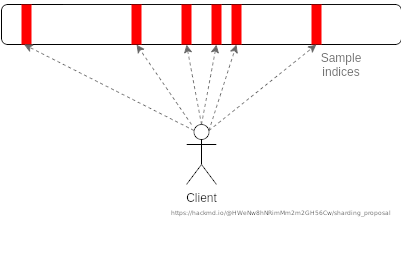
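The shuffling idea can be sketched in a few lines of Python: indexes are fixed first, then a permutation derived from a seed the validators could not predict splits them into per-shard committees. This is a simplified illustration; Python's `random.shuffle` (Fisher-Yates, not cryptographically secure) stands in for the swap-or-not shuffle used in the Ethereum 2.0 specification, and the seed would come from on-chain randomness such as RANDAO rather than a fixed byte string. `assign_committees` is a hypothetical name.

```python
import hashlib
import random


def assign_committees(num_validators: int, num_shards: int, seed: bytes) -> list[list[int]]:
    """Shuffle validator indexes with a seed-derived permutation and split them per shard."""
    # Indexes are fixed before the seed is known, so nobody can pick their own position.
    indexes = list(range(num_validators))
    rng = random.Random(hashlib.sha256(seed).digest())
    rng.shuffle(indexes)  # stand-in for the spec's swap-or-not shuffle
    # Deal the shuffled indexes round-robin into one committee per shard.
    return [indexes[i::num_shards] for i in range(num_shards)]
```

Every node that knows the seed computes the same committees, while an attacker controlling a fraction of validators cannot steer them into one shard.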

DS: Data Availability Sampling (DAS)

- A client randomly chooses samples (every client needs to download only a small part of the data)

- It's difficult for an attacker, even one with a high degree of control, to convince clients to accept an unavailable blob
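Why a few random samples suffice can be shown with a short probability calculation: if a fraction f of chunks is available and a client draws k independent uniform samples, withheld data goes unnoticed with probability f^k. The Python sketch below assumes sampling with replacement; the function names are illustrative.

```python
def miss_probability(available_fraction: float, num_samples: int) -> float:
    """Chance that every random sample hits an available chunk (withholding undetected)."""
    if not 0.0 <= available_fraction <= 1.0:
        raise ValueError("available_fraction must be in [0, 1]")
    return available_fraction ** num_samples


def samples_needed(available_fraction: float, target: float) -> int:
    """Smallest number of samples that pushes the miss probability to at most `target`."""
    if not 0.0 <= available_fraction < 1.0:
        raise ValueError("sampling cannot detect withholding when everything is available")
    if not 0.0 < target < 1.0:
        raise ValueError("target must be in (0, 1)")
    k = 0
    while miss_probability(available_fraction, k) > target:
        k += 1
    return k
```

If the blob is erasure-coded so that any half of the chunks is enough to recover it, an attacker must withhold more than half, and 30 samples already give a miss probability below 10^-9 (`samples_needed(0.5, 1e-9) == 30`). Without such coding, withholding a single chunk out of 1,000 leaves a 30-sample client unaware about 97% of the time.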

DS: Erasure Coding

- Polynomial oversampling can be used against attackers that avoid publishing full blobs by publishing only the chunks that clients requested:

- Split blob into N data chunks

- Interpolate a polynomial of degree less than N through those data points

- Evaluate that polynomial at positions N+1 to 2N to calculate the redundant data

- It's possible to recover the original data from any N points of the 2N data samples
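The four steps above are Reed-Solomon-style coding and can be sketched in Python with Lagrange interpolation over a prime field. Assumptions: chunks are integers smaller than the modulus, positions are 0-indexed (x = 0..N-1 for data, N..2N-1 for redundancy, matching the slide's 1..N and N+1..2N), and the Mersenne prime 2^61 - 1 is a toy field chosen for illustration rather than the field any Ethereum design uses.

```python
P = 2**61 - 1  # toy prime field (assumption); chunk values must be < P


def lagrange_eval(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate at x the unique polynomial of degree < len(points) through `points` (mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P  # modular inverse needs Python 3.8+
    return total


def extend(chunks: list[int]) -> list[int]:
    """Treat N chunks as evaluations at x = 0..N-1 and append evaluations at x = N..2N-1."""
    n = len(chunks)
    points = list(enumerate(chunks))
    return chunks + [lagrange_eval(points, x) for x in range(n, 2 * n)]


def recover(samples: dict[int, int], n: int) -> list[int]:
    """Rebuild the original N chunks from any N of the 2N (position -> value) samples."""
    if len(samples) < n:
        raise ValueError("need at least N samples")
    points = list(samples.items())[:n]
    return [lagrange_eval(points, x) for x in range(n)]
```

For example, `extend([1, 2, 3])` returns `[1, 2, 3, 4, 5, 6]`, because the three chunks lie on the line y = x + 1, and any three of those six values reconstruct the original chunks.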

DS: Kate Polynomial Commitments

- Kate polynomial commitment scheme allows a prover to commit to a polynomial using only a single elliptic curve point

- This commitment can be later opened at any position (prover can prove that the value of the polynomial at some position is equal to the claimed value)

- A pairing-friendly elliptic curve is needed for this scheme (for example, BLS12-381)

- Such a commitment hides the polynomial

- A trusted setup is needed (for example, via Secure Multiparty Computation)
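The commit/open/verify flow of the textbook Kate (KZG) scheme can be summarized in a few equations. Here [a]_1 and [a]_2 denote a·G_1 and a·G_2 for generators of the two pairing groups, s is the secret from the trusted setup (only the powers [s^i]_1 and [s]_2 are published), and e is the pairing. This is the standard construction; encoding details in any particular Ethereum specification may differ.

```latex
% Commitment to p(x) = \sum_i p_i x^i of degree at most d: a single curve point
C = [p(s)]_1 = \sum_{i=0}^{d} p_i \, [s^i]_1
% Opening at position z with claimed value y = p(z): the quotient is a polynomial only if p(z) = y
q(x) = \frac{p(x) - y}{x - z}, \qquad \pi = [q(s)]_1
% Verification on a pairing-friendly curve (e.g. BLS12-381)
e\big(\pi,\; [s]_2 - [z]_2\big) = e\big(C - [y]_1,\; [1]_2\big)
```

The check works because both sides equal e(G_1, G_2) raised to q(s)(s - z) = p(s) - y, which the verifier can test without learning s or the polynomial.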

My Further Research Options

- Proceed with the latest Ethereum 2.0 design (data-only sharding) and propose improvements

- Stick to the original Ethereum 2.0 executable shards proposal and focus on its unsolved problems

Questions
