Agentic Workflows in LlamaIndex

2025-04-12 CMU AI Agents Hackathon

What are we talking about?

- What is LlamaIndex?
- What is an Agent?
- Agent Patterns in LlamaIndex
  - Chaining
  - Routing
  - Parallelization
  - Orchestrator-Workers
  - Evaluator-Optimizer
- Giving Agents access to data
  - How RAG works

What is LlamaIndex?

Python: docs.llamaindex.ai

TypeScript: ts.llamaindex.ai

LlamaParse

World's best parser of complex documents

Free for 1000 pages/day!

cloud.llamaindex.ai

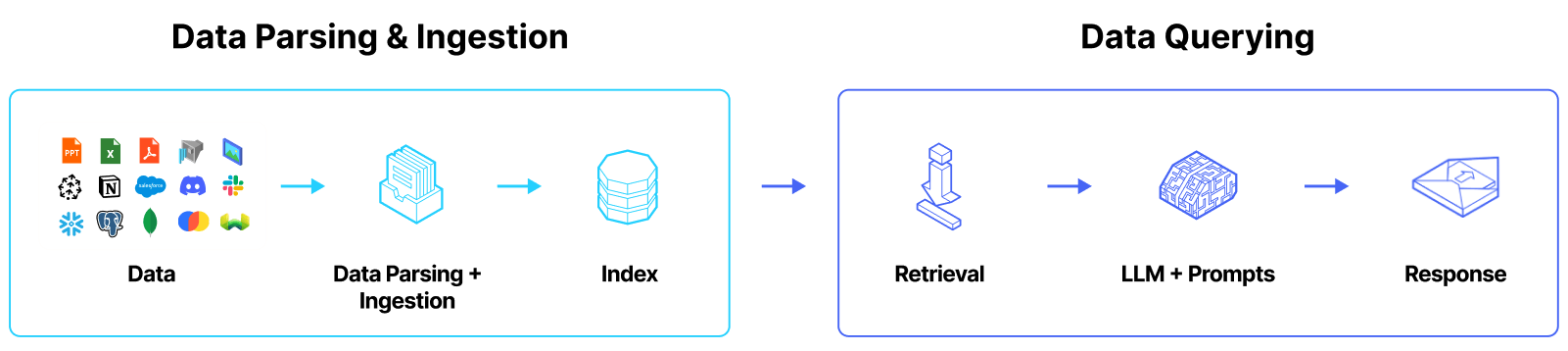

LlamaCloud

Turn-key RAG API for Enterprises

Available as SaaS or private cloud deployment

Sign up at cloud.llamaindex.ai

LlamaHub

Why LlamaIndex?

- Build faster

- Skip the boilerplate

- Avoid early pitfalls

- Get best practices for free

- Go from prototype to production

What is an agent?

A new paradigm for programming

Building Effective Agents

- Chaining

- Routing

- Parallelization

- Orchestrator-Workers

- Evaluator-Optimizer

Chaining

Workflows

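    from llama_index.core.workflow import Event, StartEvent, StopEvent, Workflow, step


    # Custom events carry data from one step to the next.
    class FirstEvent(Event):
        first_output: str


    class SecondEvent(Event):
        second_output: str


    class MyWorkflow(Workflow):
        # Each step runs when an event of the type it accepts is emitted.
        @step
        async def step_one(self, ev: StartEvent) -> FirstEvent:
            print(ev.first_input)
            return FirstEvent(first_output="First step complete.")

        @step
        async def step_two(self, ev: FirstEvent) -> SecondEvent:
            print(ev.first_output)
            return SecondEvent(second_output="Second step complete.")

        @step
        async def step_three(self, ev: SecondEvent) -> StopEvent:
            print(ev.second_output)
            return StopEvent(result="Workflow complete.")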

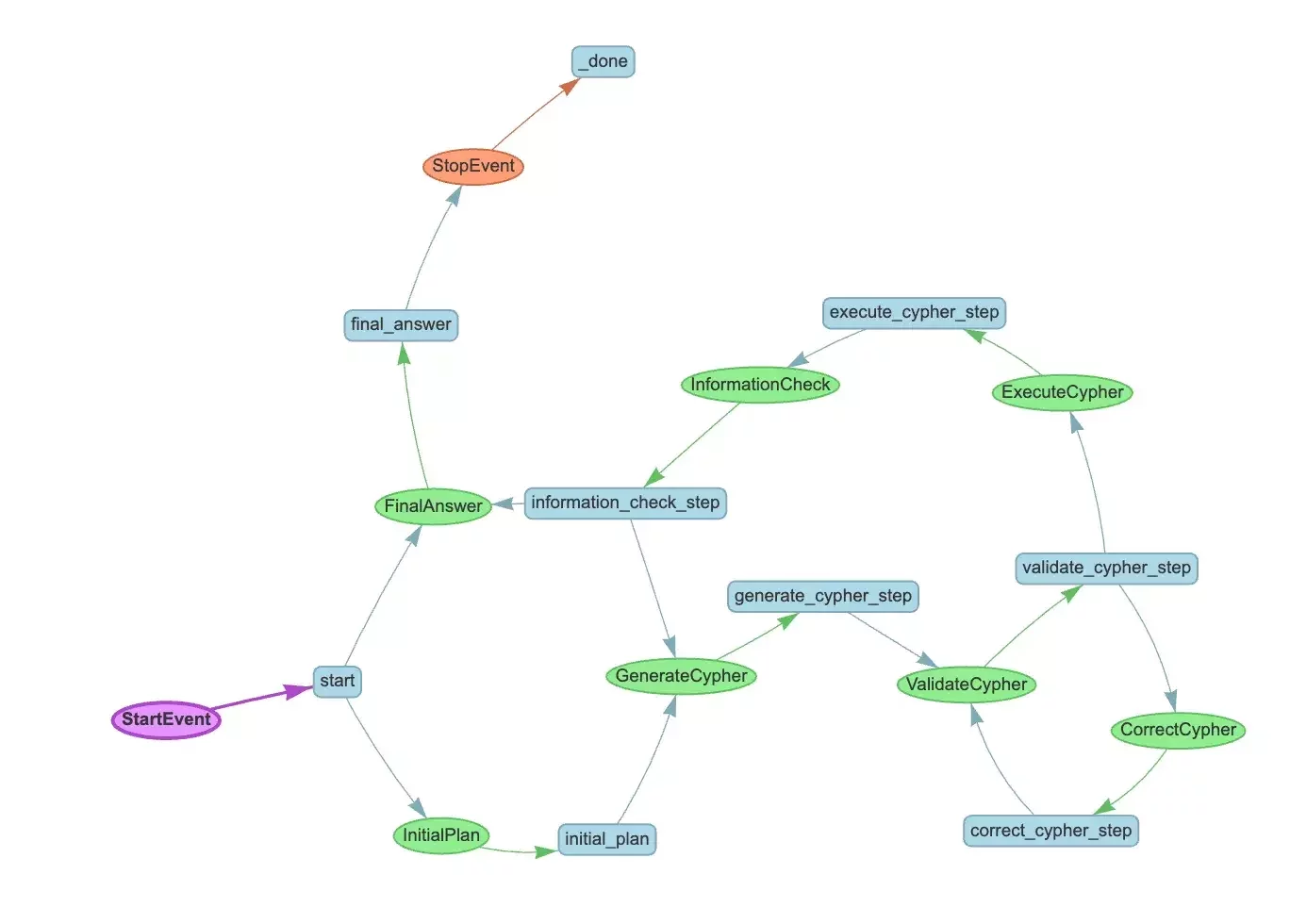

Workflow visualization

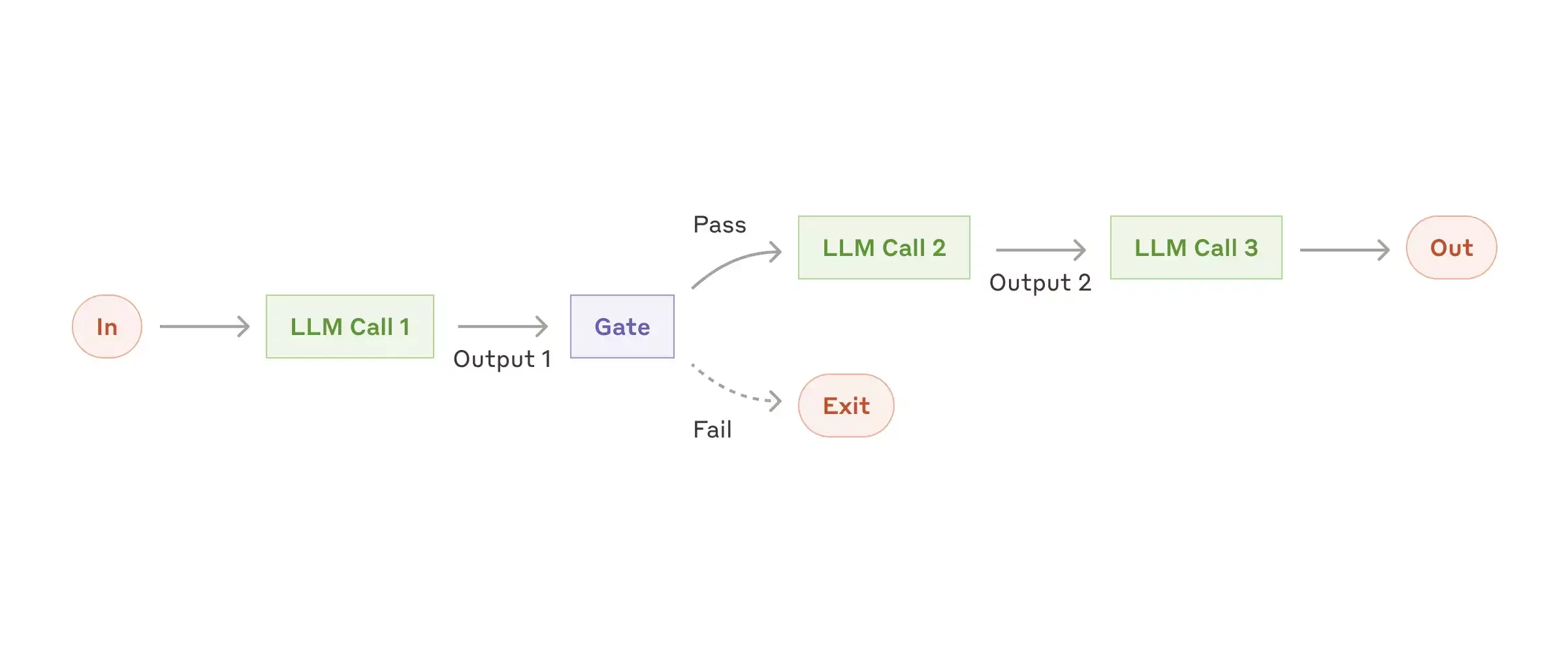

Routing

Branching

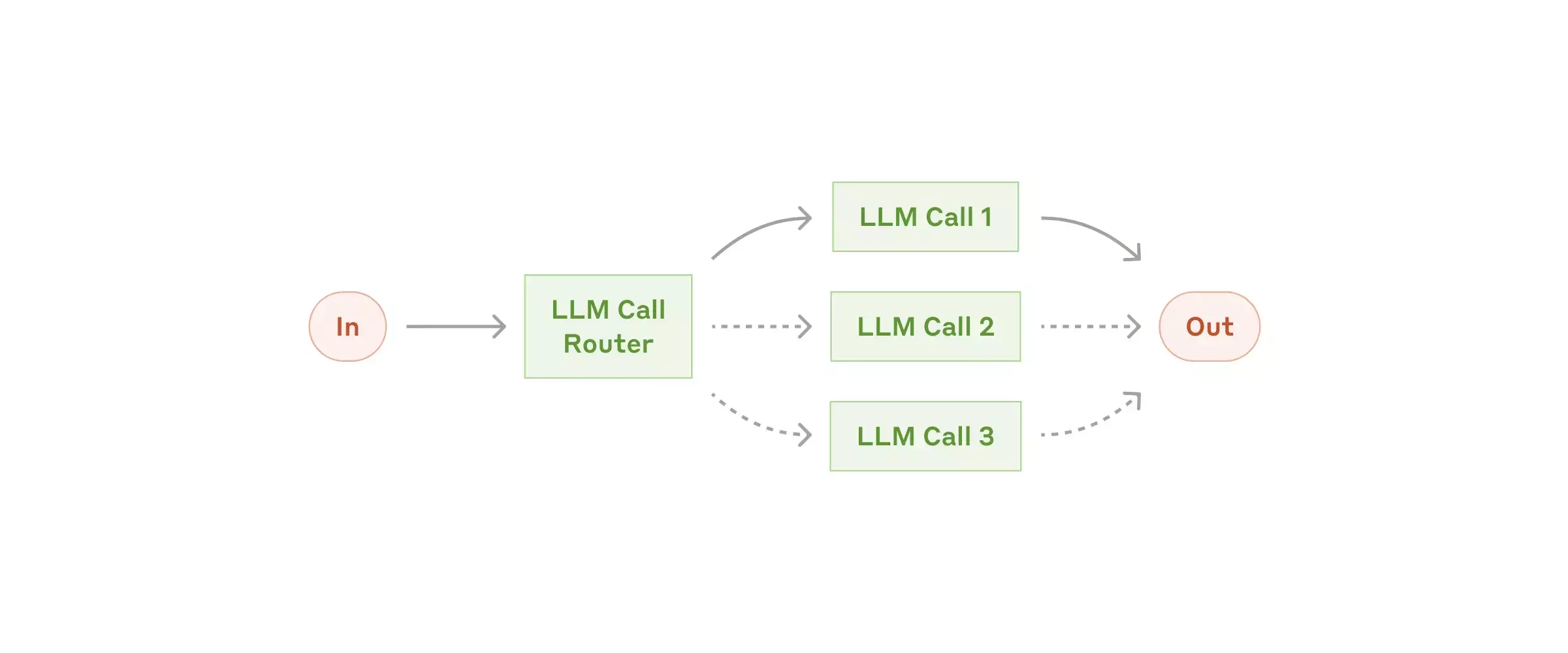

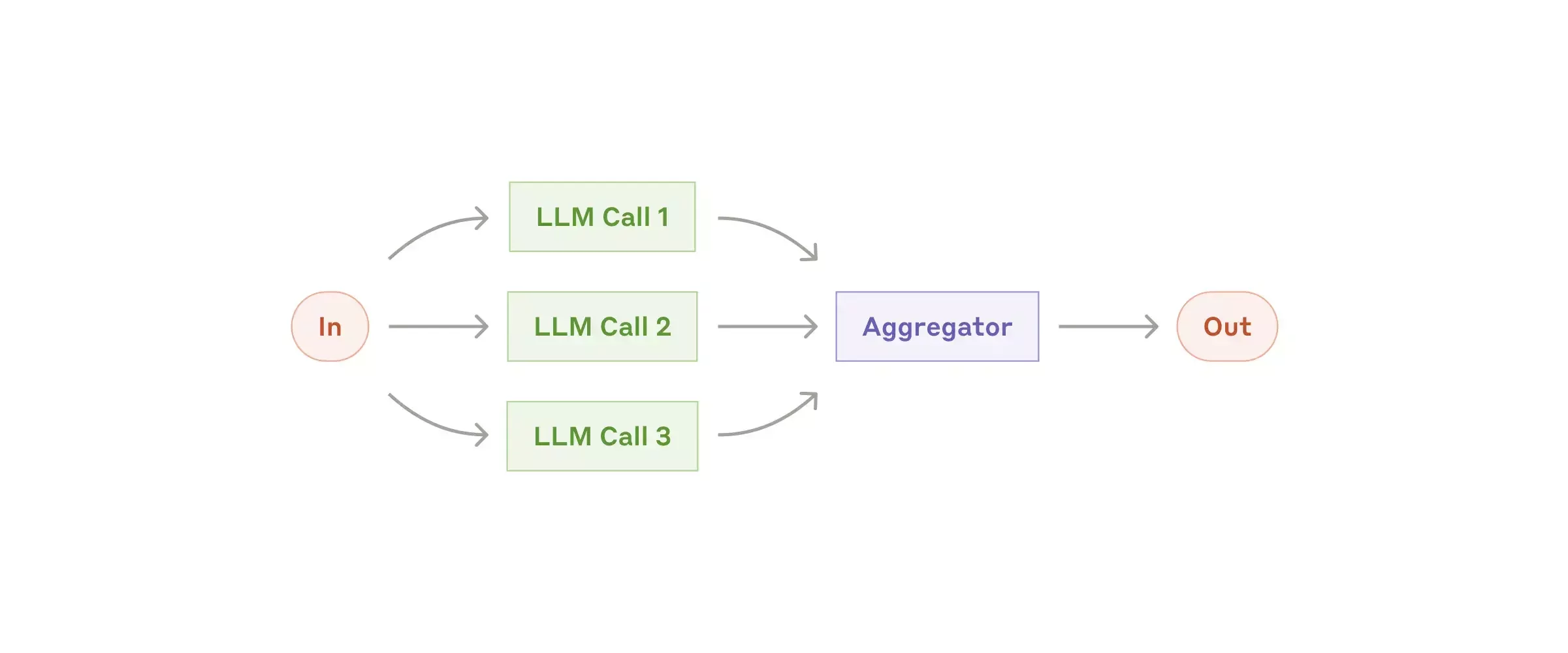
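
In LlamaIndex Workflows, a step can branch by returning one of several event types. The sketch below imitates that idea in plain Python so it runs without any dependencies; it is not the Workflow API itself. `BillingQuery`, `TechQuery`, and the keyword-based `route` function are hypothetical stand-ins for whatever classifier (usually an LLM call) makes the routing decision.

```python
from __future__ import annotations

import asyncio
from dataclasses import dataclass


@dataclass
class BillingQuery:
    text: str


@dataclass
class TechQuery:
    text: str


def route(text: str) -> BillingQuery | TechQuery:
    """Hypothetical classifier: choose a branch by keyword instead of an LLM."""
    lowered = text.lower()
    if "invoice" in lowered or "refund" in lowered:
        return BillingQuery(text)
    return TechQuery(text)


async def handle(event: BillingQuery | TechQuery) -> str:
    # Dispatch on the event type, the way a workflow runs the step
    # whose signature accepts the emitted event.
    if isinstance(event, BillingQuery):
        return f"billing: {event.text}"
    return f"tech: {event.text}"


async def run_router(text: str) -> str:
    return await handle(route(text))


if __name__ == "__main__":
    print(asyncio.run(run_router("Where is my invoice?")))
```

Only one branch runs per input, which is what distinguishes routing from parallelization.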

Parallelization

Sectioning

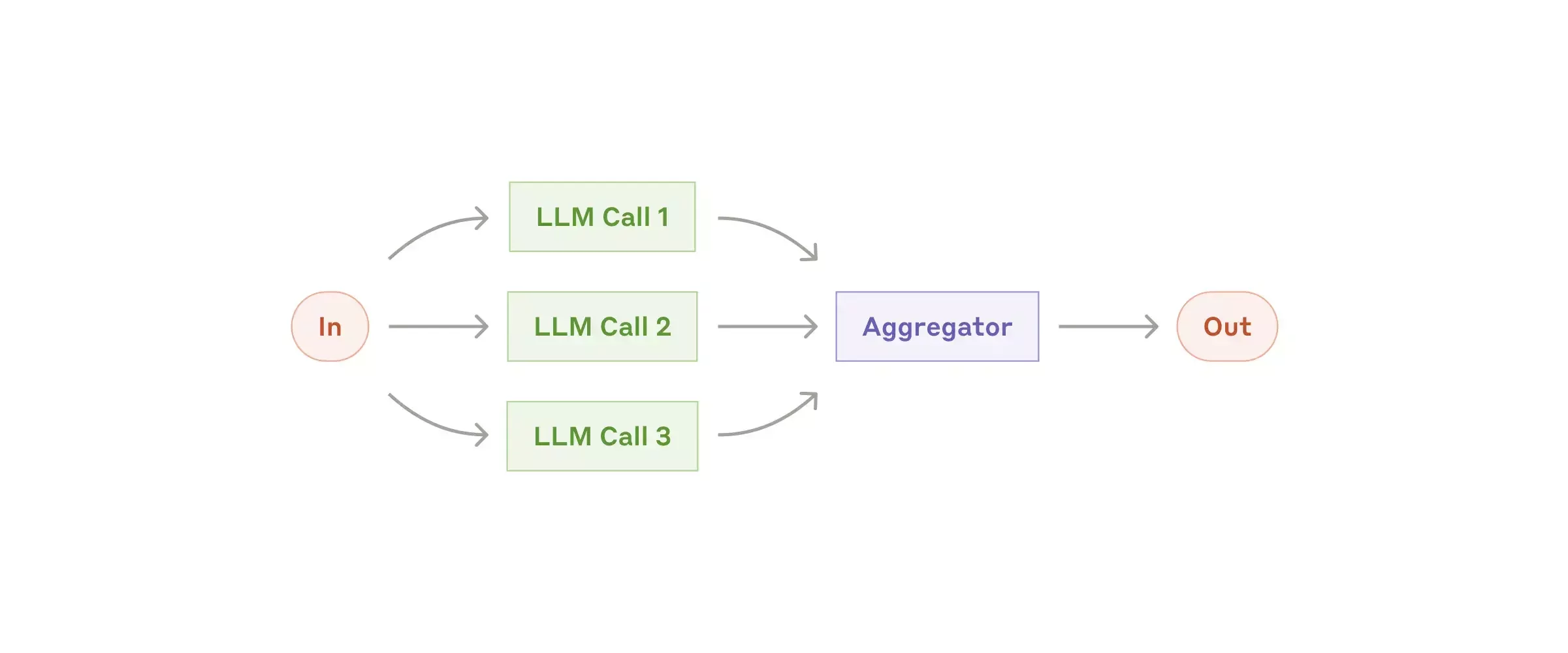

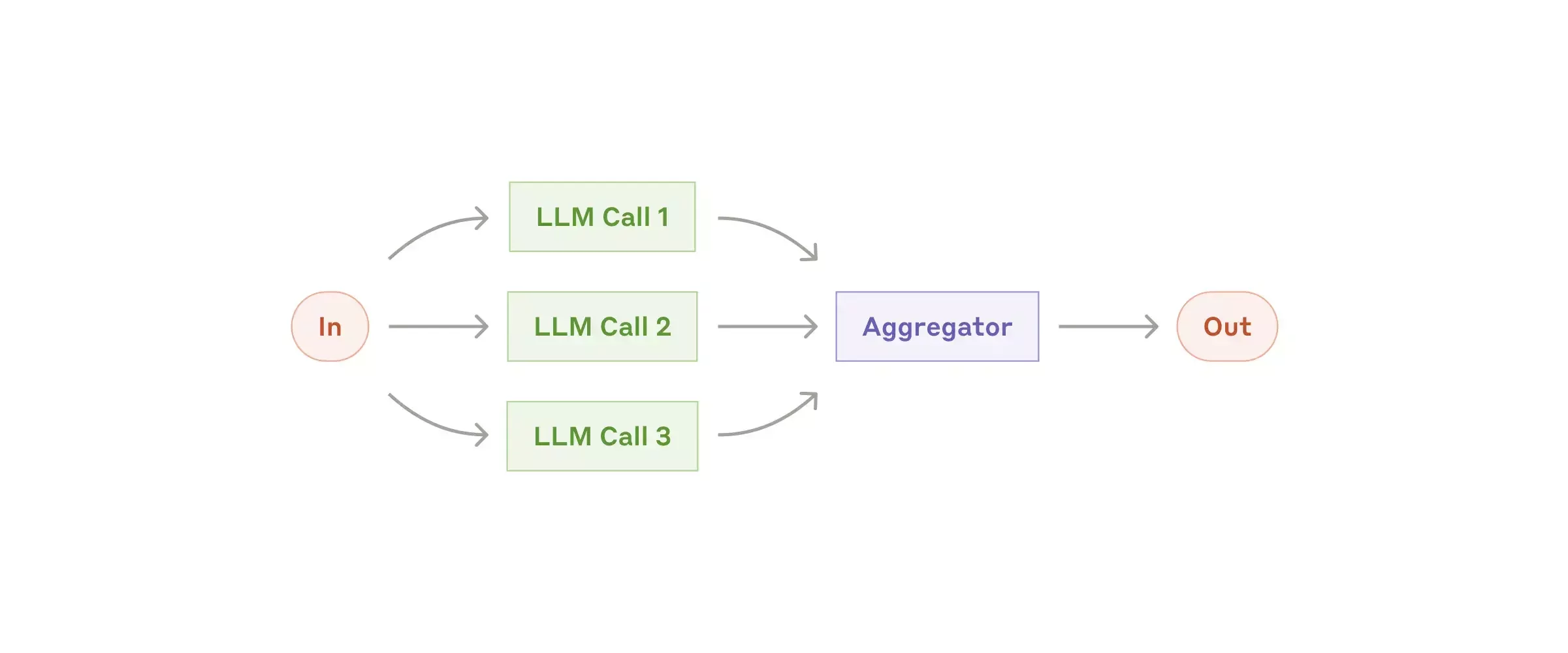

Parallelization: flavor 1

Voting

Parallelization: flavor 2

Concurrency

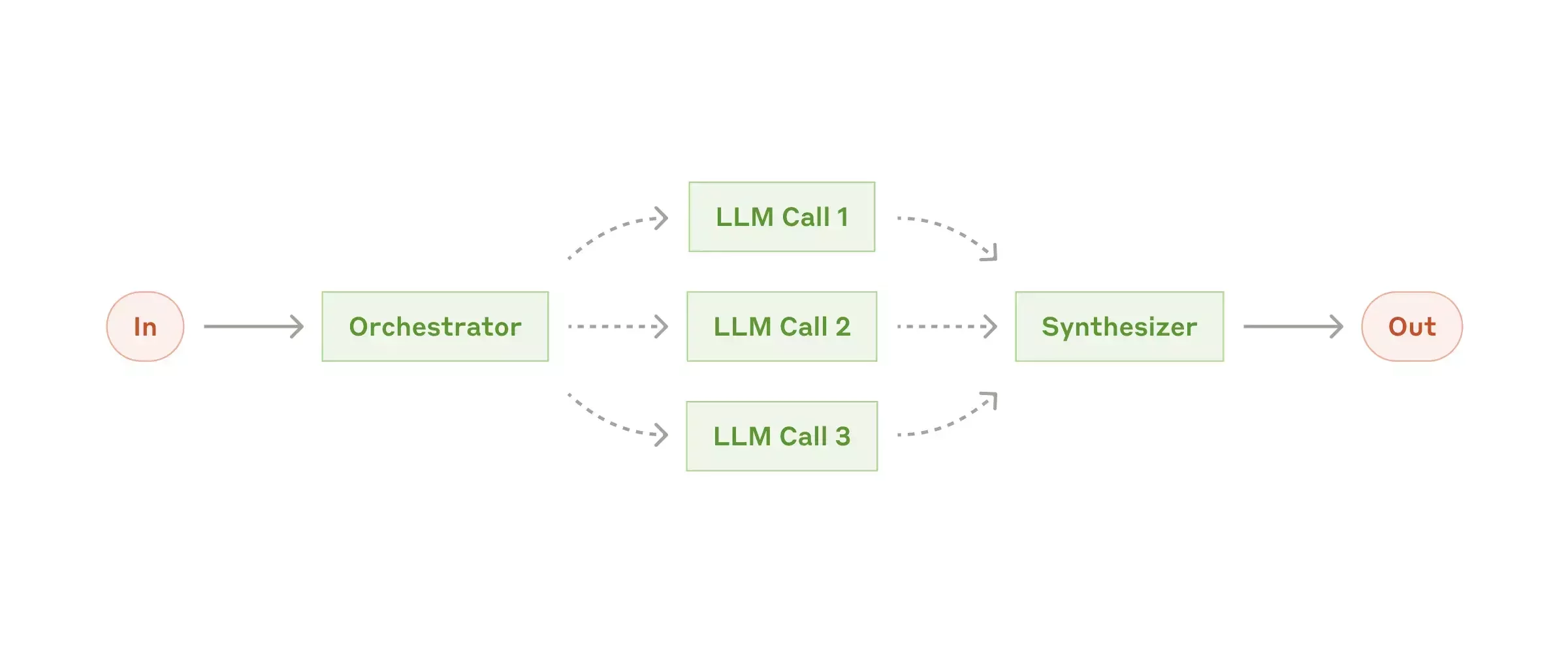
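
A minimal sketch of both parallelization flavors, using plain `asyncio` rather than the LlamaIndex Workflow API. Sectioning splits a task into independent pieces and runs them concurrently; voting runs the same question several times and keeps the majority answer. The `summarize` function and the voter functions are hypothetical stand-ins for LLM calls.

```python
from __future__ import annotations

import asyncio
from collections import Counter
from typing import Awaitable, Callable


async def summarize(section: str) -> str:
    """Hypothetical LLM call: 'summarize' by keeping the first sentence."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    return section.split(".")[0]


async def run_sections(sections: list[str]) -> list[str]:
    # Sectioning: independent subtasks run concurrently; results keep input order.
    return list(await asyncio.gather(*(summarize(s) for s in sections)))


async def vote(question: str, voters: list[Callable[[str], Awaitable[str]]]) -> str:
    # Voting: ask every voter the same question and return the most common answer.
    answers = await asyncio.gather(*(voter(question) for voter in voters))
    return Counter(answers).most_common(1)[0][0]


async def says_yes(question: str) -> str:
    return "yes"


async def says_no(question: str) -> str:
    return "no"


if __name__ == "__main__":
    print(asyncio.run(run_sections(["Intro text. More detail.", "Results. Caveats."])))
    print(asyncio.run(vote("Is this safe?", [says_yes, says_yes, says_no])))
```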

Orchestrator-Workers

Concurrency (again)

Orchestration in LlamaIndex

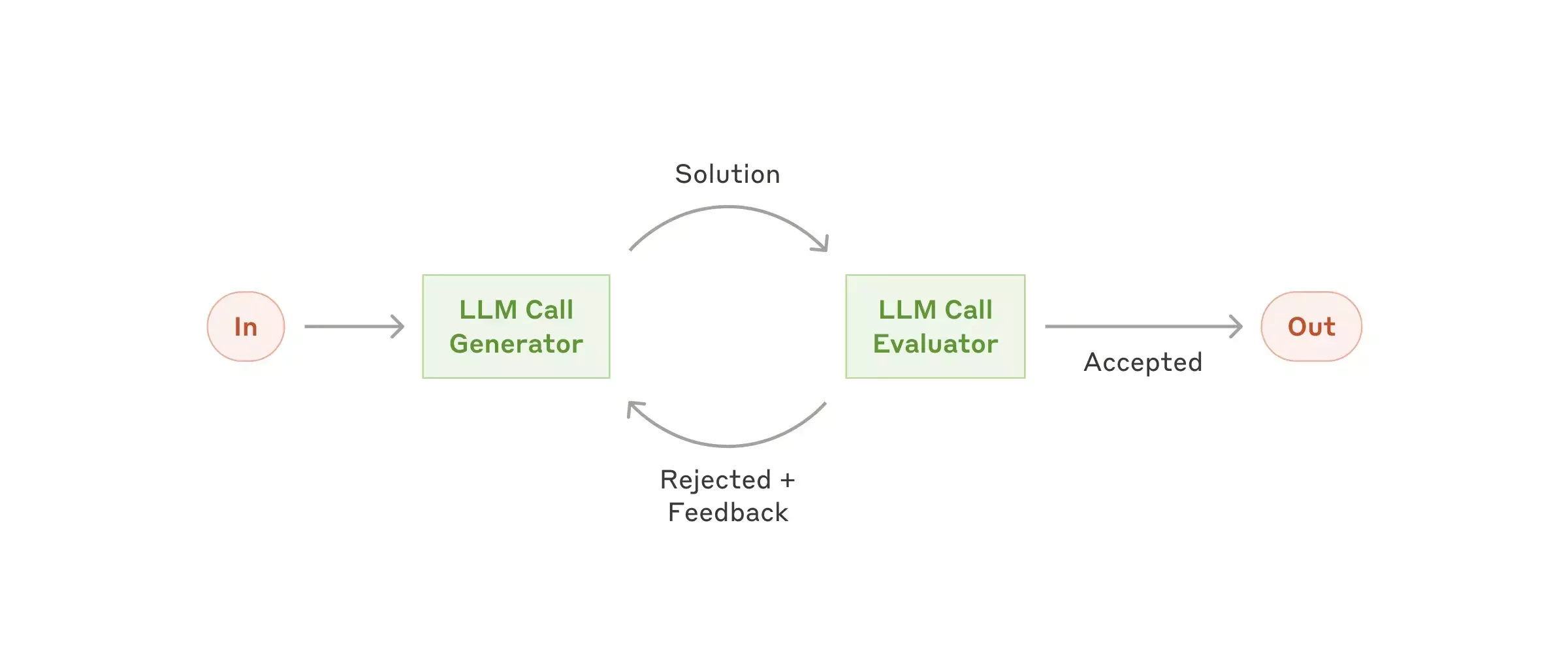
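
The difference from plain parallelization is that the orchestrator decides the subtasks at run time. A rough sketch in plain `asyncio` (not the LlamaIndex API): `plan`, `worker`, and `orchestrate` are hypothetical names, and splitting on " and " stands in for an LLM that breaks a request into parts.

```python
from __future__ import annotations

import asyncio


async def plan(task: str) -> list[str]:
    """Hypothetical planner: an LLM would decide the subtasks; here we split on ' and '."""
    return [part.strip() for part in task.split(" and ")]


async def worker(subtask: str) -> str:
    """Hypothetical worker: a real one would call an LLM or a tool."""
    await asyncio.sleep(0)
    return f"done({subtask})"


async def orchestrate(task: str) -> str:
    subtasks = await plan(task)  # number of workers is not known in advance
    results = await asyncio.gather(*(worker(s) for s in subtasks))
    return "; ".join(results)  # synthesize worker output into one answer


if __name__ == "__main__":
    print(asyncio.run(orchestrate("research llamas and write summary")))
```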

Evaluator-Optimizer

aka Self-reflection

Looping
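
The loop can be sketched in plain Python as: evaluate a draft, stop if it passes, otherwise revise and go around again, with a cap on rounds so it always terminates. `evaluate`, `revise`, and `refine` are hypothetical names; in a real agent both the evaluator and the optimizer would be LLM calls.

```python
from __future__ import annotations


def evaluate(draft: str, max_words: int) -> str | None:
    """Hypothetical evaluator: return feedback, or None when the draft is accepted."""
    extra = len(draft.split()) - max_words
    return None if extra <= 0 else f"too long by {extra} word(s)"


def revise(draft: str, feedback: str) -> str:
    """Hypothetical optimizer: drop the last word. A real one would use the feedback."""
    return " ".join(draft.split()[:-1])


def refine(draft: str, max_words: int, max_rounds: int = 10) -> tuple[str, int]:
    """Loop evaluate -> revise until accepted or max_rounds revisions were made."""
    for revisions in range(max_rounds):
        feedback = evaluate(draft, max_words)
        if feedback is None:
            return draft, revisions
        draft = revise(draft, feedback)
    return draft, max_rounds


if __name__ == "__main__":
    print(refine("the quick brown fox jumps", max_words=3))
```

The `max_rounds` cap matters: without it, an evaluator that is never satisfied would loop forever.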

Arbitrary complexity

Tool use

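    from llama_index.core.tools import FunctionTool


    def multiply(a: int, b: int) -> int:
        """Multiply two integers and return the result."""
        return a * b


    # Wrap the function as a tool; its name, signature, and docstring describe it to the LLM.
    multiply_tool = FunctionTool.from_defaults(fn=multiply)

FunctionCallingAgent

    from llama_index.core.agent import FunctionCallingAgent

    # `llm` is assumed to be an already-configured LLM that supports function calling.
    agent = FunctionCallingAgent.from_tools(
        [multiply_tool],
        llm=llm,
    )
    response = agent.chat("What is 3 times 4?")

Multi-agent systems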

AgentWorkflow

    from llama_index.core.agent.workflow import FunctionAgent

    # `llm`, `search_web`, and `record_notes` are assumed to be defined elsewhere.
    research_agent = FunctionAgent(
        name="ResearchAgent",
        description="Useful for searching the web for information on a given topic and recording notes on the topic.",
        system_prompt=(
            "You are the ResearchAgent that can search the web for information on a given topic and record notes on the topic. "
            "Once notes are recorded and you are satisfied, you should hand off control to the WriteAgent to write a report on the topic."
        ),
        llm=llm,
        tools=[search_web, record_notes],
        can_handoff_to=["WriteAgent"],
    )

Multi-agent system as a one-liner

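    from llama_index.core.agent.workflow import AgentWorkflow

    # `write_agent` and `review_agent` are assumed to be defined the same way as `research_agent`.
    agent_workflow = AgentWorkflow(
        agents=[research_agent, write_agent, review_agent],
        root_agent=research_agent.name,
        initial_state={
            "research_notes": {},
            "report_content": "Not written yet.",
            "review": "Review required.",
        },
    )

Full AgentWorkflow and Workflows tutorial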

Giving agents access to data

RAG provides infinite context

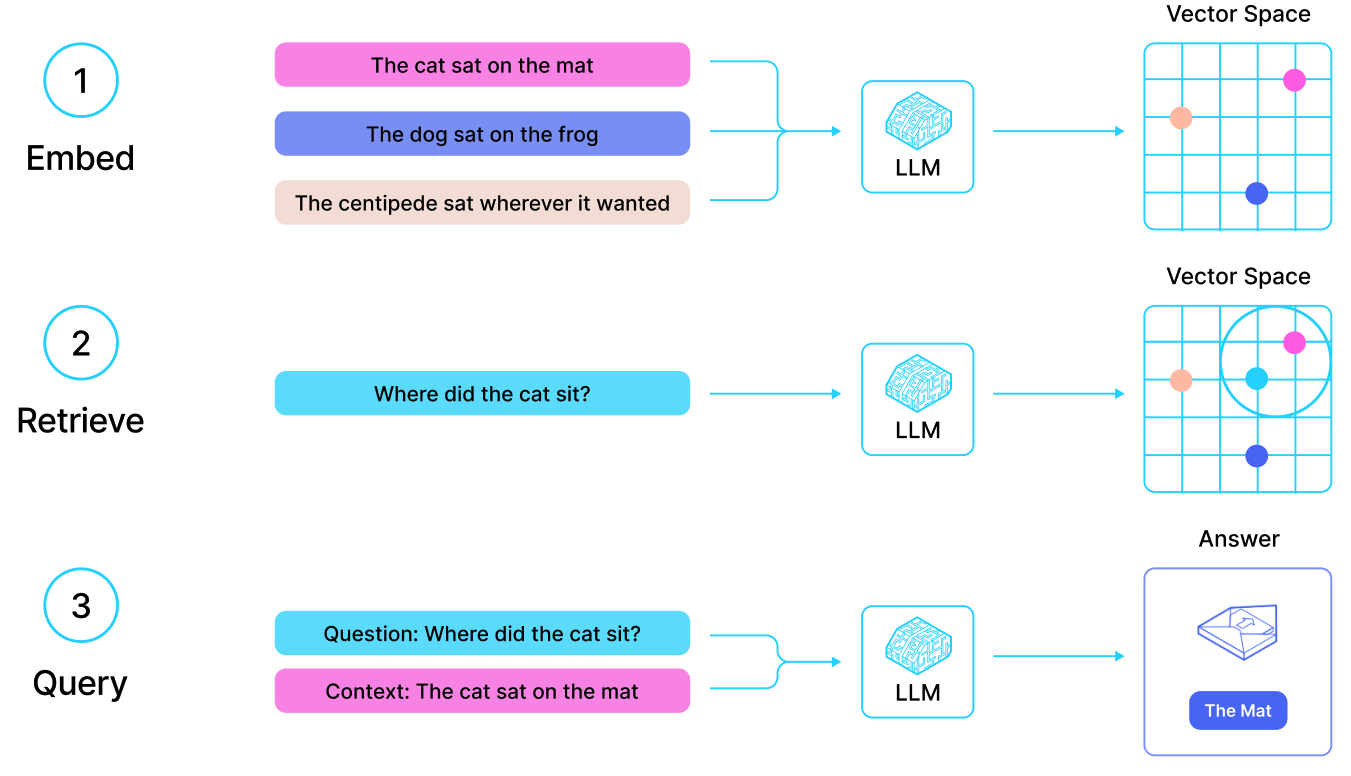

How RAG works

Basic RAG pipeline
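
A toy version of the pipeline, to make the steps concrete: embed the chunks, embed the query, retrieve the closest chunks, and put them in the prompt. This is a sketch under loud assumptions: word counts stand in for a real embedding model, a sorted list stands in for a vector store, and `embed`, `cosine`, `retrieve`, and `build_prompt` are hypothetical names, not LlamaIndex functions.

```python
from __future__ import annotations

import math
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in embedding: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Put the retrieved chunks in front of the question for the LLM."""
    return "Context:\n" + "\n".join(context) + f"\n\nQuestion: {query}"


if __name__ == "__main__":
    chunks = [
        "llamas live in the andes",
        "python is a programming language",
        "paris is the capital of france",
    ]
    question = "where do llamas live"
    print(build_prompt(question, retrieve(question, chunks)))
```

Only the retrieved chunks reach the LLM, which is how a fixed context window can serve a much larger document set.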

RAG in 5 lines

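    # Imports first, then the five lines.
    from llama_index.core import VectorStoreIndex
    from llama_parse import LlamaParse

    documents = LlamaParse().load_data("./myfile.pdf")
    index = VectorStoreIndex.from_documents(documents)
    query_engine = index.as_query_engine()
    response = query_engine.query("What did the author do growing up?")
    print(response)

RAG limitations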

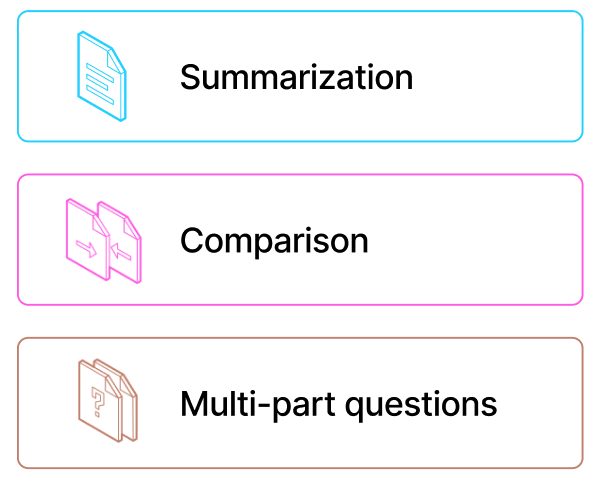

Summarization

Solve it with: routing, parallelization

Comparison

Solve it with: parallelization

Multi-part questions

Solve it with: chaining, parallelization

What's next?

Thanks!

Follow me on BlueSky:

@seldo.com

Agentic Workflows in LlamaIndex (CMU AI Agents Hackathon)

By Laurie Voss
