RAG in 2024: advancing to agents

2024-06-06 YouTube

What are we talking about?

- What is LlamaIndex

- Limitations of RAG

- Building an agent in 2024

- Components of an agent

  - Routing

  - Memory

  - Planning

  - Tool use

- Agentic reasoning

  - Sequential

  - DAG-based

  - Tree-based

What is LlamaIndex?

Python: docs.llamaindex.ai

TypeScript: ts.llamaindex.ai

LlamaCloud

LlamaParse

Free for 1000 pages/day!

The RAG pipeline

5 line starter

from llama_index.core import SimpleDirectoryReader, VectorStoreIndex

# load and parse
documents = SimpleDirectoryReader("data").load_data()

# embed and index
index = VectorStoreIndex.from_documents(documents)

# generate query engine
query_engine = index.as_query_engine()

# run queries
response = query_engine.query("What did the author do growing up?")
print(response)

Naive RAG is limited

Naive RAG failure modes:

- Summarization

- Comparison

- Implicit data

- Multi-part questions
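These failure modes share a root cause: a naive pipeline answers from only the top-k chunks most similar to the query, in a single shot. The stdlib-only sketch below makes that concrete. It uses bag-of-words cosine similarity as a stand-in for embedding similarity (an assumption; real pipelines use vector embeddings), and the chunk texts are hypothetical. A summarization question, which needs the whole document, still only gets k chunks.

```python
import math
from collections import Counter

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def top_k(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Naive single-shot retrieval: keep only the k chunks most similar to the query."""
    q = Counter(query.lower().split())
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(c.lower().split())), reverse=True)
    return ranked[:k]

# Hypothetical chunks of one long document.
chunks = [
    "part one covers childhood and writing stories",
    "part two covers learning to program",
    "part three covers studying painting",
    "part four covers starting a company",
    "part five covers funding startups",
]

context = top_k("summarize the whole essay", chunks, k=2)
print(len(context), "of", len(chunks), "chunks reach the LLM")  # prints: 2 of 5 chunks reach the LLM
```

Raising k only moves the limit; comparison, implicit-data and multi-part questions hit the same ceiling because retrieval happens once, before any reasoning about the question.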

RAG is necessary but not sufficient

Two ways to improve RAG:

- Improve your data

- Improve your querying

Improving data quality with LlamaParse

Quality through quantity: LlamaHub.ai

RAG pipeline

⚠️ Single-shot

⚠️ No query understanding/planning

⚠️ No tool use

⚠️ No reflection, error correction

⚠️ No memory (stateless)

Agentic RAG

✅ Multi-turn

✅ Query / task planning layer

✅ Tool interface for external environment

✅ Reflection

✅ Memory for personalization

From simple to advanced agents

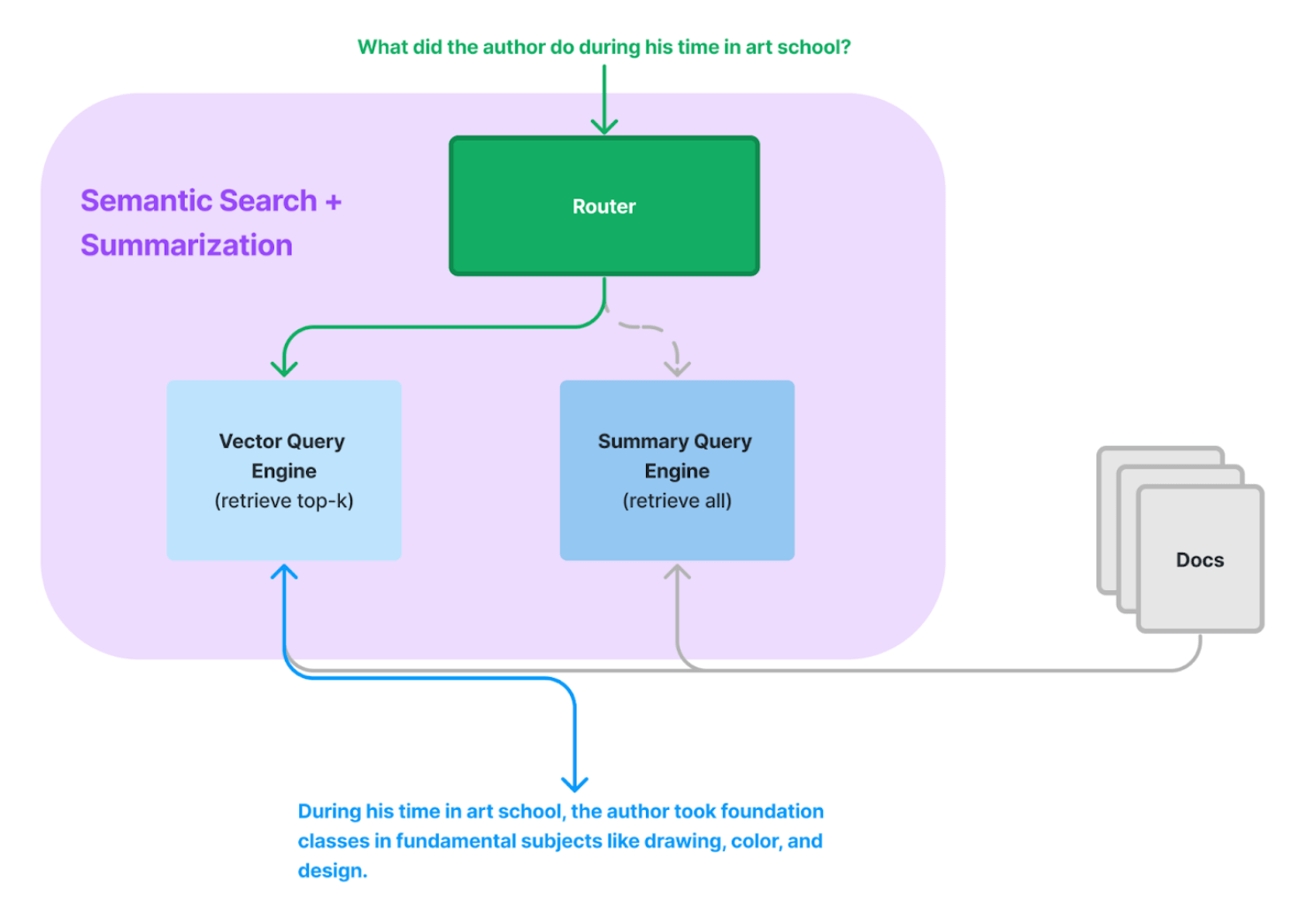

Routing

RouterQueryEngine
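A router sends each query to exactly one of several query engines, choosing based on the tools' descriptions. In the LlamaIndex code for this slide, that choice is made by an LLM (`LLMSingleSelector`). The stdlib sketch here swaps in a naive keyword-overlap score purely to show the control flow; the `Tool` class, `select` and `route` are hypothetical, not LlamaIndex APIs.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Tool:
    # Hypothetical stand-in for a query engine tool: a description plus a callable.
    name: str
    description: str
    run: Callable[[str], str]

def select(query: str, tools: list[Tool]) -> Tool:
    """Pick the single tool whose description shares the most words with the query.

    A real router asks an LLM to choose; word overlap is only a placeholder.
    """
    q = set(query.lower().split())
    return max(tools, key=lambda t: len(q & set(t.description.lower().split())))

def route(query: str, tools: list[Tool]) -> str:
    # Only the selected engine runs; the others are never called.
    return select(query, tools).run(query)

tools = [
    Tool("summary", "useful for summarization questions about the essay",
         lambda q: "summary engine: reads every chunk"),
    Tool("vector", "useful for retrieving specific context from the essay",
         lambda q: "vector engine: reads top-k chunks"),
]

print(route("give me a summarization of the essay", tools))
```

The design point carries over to the real thing: the descriptions are the routing signal, so they should say clearly what kind of question each engine is good at.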

list_tool = QueryEngineTool.from_defaults(

query_engine=list_query_engine,

description=(

"Useful for summarization questions related to Paul Graham eassy on"

" What I Worked On."

),

)

vector_tool = QueryEngineTool.from_defaults(

query_engine=vector_query_engine,

description=(

"Useful for retrieving specific context from Paul Graham essay on What"

" I Worked On."

),

)

query_engine = RouterQueryEngine(

selector=LLMSingleSelector.from_defaults(),

query_engine_tools=[

list_tool,

vector_tool,

],

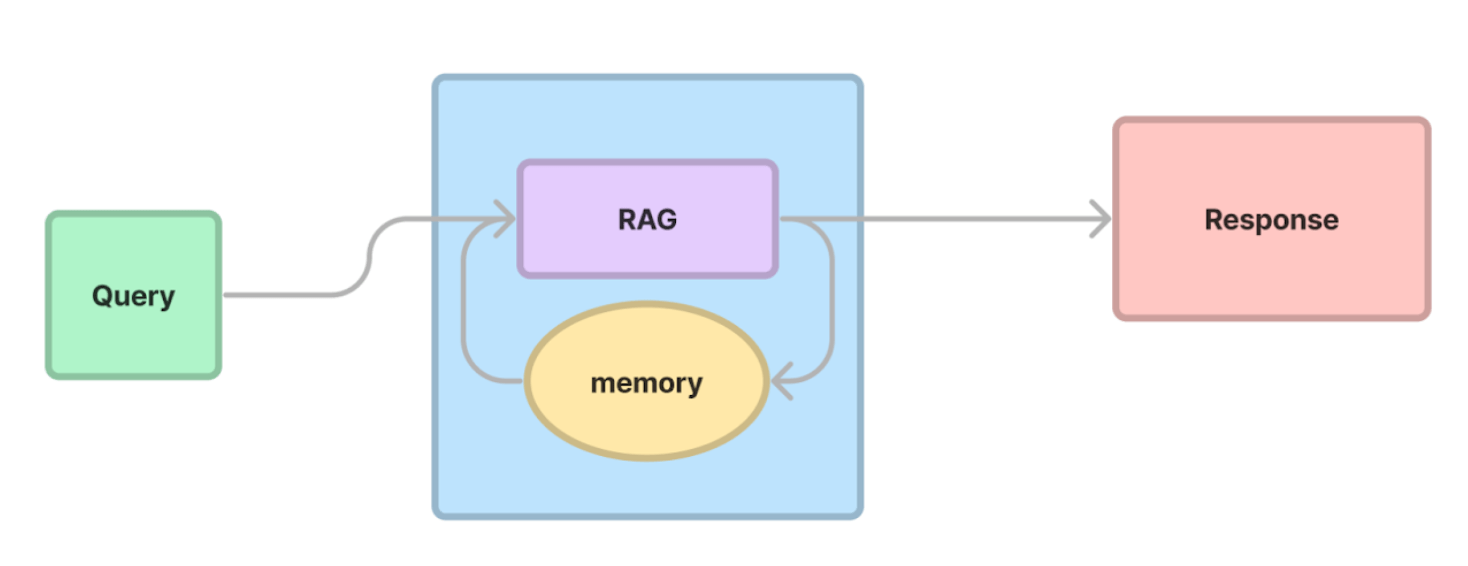

)Conversation memory

Chat Engine
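What separates a chat engine from a query engine is state: previous turns are kept so a follow-up question can be read in context. A minimal sketch of that idea, assuming a rolling buffer capped by message count (LlamaIndex's own memory classes cap by tokens instead); the `ChatBuffer` class and its `build_prompt` method are hypothetical.

```python
from collections import deque

class ChatBuffer:
    """Hypothetical rolling chat memory that keeps only the last `max_messages` messages."""

    def __init__(self, max_messages: int = 4):
        # deque with maxlen silently drops the oldest message when full
        self.messages = deque(maxlen=max_messages)

    def add(self, role: str, content: str) -> None:
        self.messages.append((role, content))

    def build_prompt(self, new_message: str) -> str:
        # Prepend remembered turns so the model can resolve references like "he" or "that".
        history = "\n".join(f"{role}: {content}" for role, content in self.messages)
        return f"{history}\nuser: {new_message}" if history else f"user: {new_message}"

memory = ChatBuffer(max_messages=2)
memory.add("user", "What did the author do growing up?")
memory.add("assistant", "(answer about the author's childhood)")
memory.add("user", "Which language did he use?")
print(memory.build_prompt("And after college?"))
```

With `max_messages=2` the first question has already been evicted, which is the trade-off any bounded memory makes between context and prompt size.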

from llama_index.core import SimpleDirectoryReader, VectorStoreIndex

# load and parse
documents = SimpleDirectoryReader("data").load_data()

# embed and index
index = VectorStoreIndex.from_documents(documents)

# generate chat engine
chat_engine = index.as_chat_engine()

# start chatting
response = chat_engine.chat("What did the author do growing up?")
print(response)

Query planning

Sub Question Query Engine
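The Sub Question Query Engine breaks a multi-part question into sub-questions aimed at individual tools, answers them, then synthesizes one response. The sketch below mirrors that flow with `asyncio.gather` (echoing `use_async=True`), but the decomposition and synthesis are hard-coded stand-ins for LLM calls, and the tool names and engine functions are hypothetical.

```python
import asyncio

# Hypothetical per-source "query engines": each answers questions about one document.
async def lyft_engine(question: str) -> str:
    await asyncio.sleep(0)  # placeholder for a real retrieval + LLM call
    return f"[lyft] answer to: {question}"

async def uber_engine(question: str) -> str:
    await asyncio.sleep(0)
    return f"[uber] answer to: {question}"

TOOLS = {"lyft_10k": lyft_engine, "uber_10k": uber_engine}

def decompose(question: str) -> list[tuple[str, str]]:
    """Stand-in planner: send the question to every tool.

    A real engine has an LLM write a tailored sub-question for each relevant tool.
    """
    return [(name, f"{question} (source: {name})") for name in TOOLS]

async def answer(question: str) -> str:
    sub_questions = decompose(question)
    # Run all sub-questions concurrently; gather keeps results in input order.
    results = await asyncio.gather(*(TOOLS[name](q) for name, q in sub_questions))
    # Stand-in synthesis: a real engine asks the LLM to combine the partial answers.
    return "\n".join(results)

print(asyncio.run(answer("What were the main risk factors in 2021?")))
```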

from llama_index.core.query_engine import SubQuestionQueryEngine
from llama_index.core.tools import QueryEngineTool, ToolMetadata

# set up list of tools (vector_query_engine is assumed to exist already)
query_engine_tools = [
    QueryEngineTool(
        query_engine=vector_query_engine,
        metadata=ToolMetadata(
            name="pg_essay",
            description="Paul Graham essay on What I Worked On",
        ),
    ),
    # more query engine tools here
]

# create engine from tools
query_engine = SubQuestionQueryEngine.from_defaults(
    query_engine_tools=query_engine_tools,
    use_async=True,
)

Tool use

Basic ReAct agent
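`FunctionTool.from_defaults(fn=multiply)` builds the tool's name and description from the function itself, so the docstring and type hints are what the LLM reads when deciding whether and how to call it. A rough stdlib sketch of that idea with `inspect`; the `describe_tool` and `call_tool` helpers and their output format are assumptions, not LlamaIndex's actual schema.

```python
import inspect

def multiply(a: int, b: int) -> int:
    """Multiply two integers and return the result integer"""
    return a * b

def describe_tool(fn) -> dict:
    """Build a simple tool description from a function's name, docstring and type hints."""
    sig = inspect.signature(fn)
    params = {
        name: getattr(p.annotation, "__name__", str(p.annotation))
        for name, p in sig.parameters.items()
    }
    return {"name": fn.__name__, "description": inspect.getdoc(fn), "parameters": params}

def call_tool(fn, arguments: dict):
    """Invoke the tool with keyword arguments, as an LLM would supply them (a JSON-style dict)."""
    return fn(**arguments)

spec = describe_tool(multiply)
print(spec)
print(call_tool(multiply, {"a": 2, "b": 4}))  # prints: 8
```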

from llama_index.core.agent import ReActAgent
from llama_index.core.tools import FunctionTool

# define sample Tool
def multiply(a: int, b: int) -> int:
    """Multiply two integers and return the result integer"""
    return a * b

multiply_tool = FunctionTool.from_defaults(fn=multiply)

# initialize ReAct agent
agent = ReActAgent.from_tools(
    [
        multiply_tool,
        # other tools here
    ],
    verbose=True,
)

Combine agentic strategies and then go further

- Routing

- Memory

- Planning

- Tool use

Agentic strategies

- Multi-turn

- Reasoning

- Reflection
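Reflection adds a critique step: the agent checks its own output and retries with the critique as feedback. A minimal sketch of that generate-critique-retry loop, where both the generator and the critic are hypothetical deterministic functions standing in for LLM calls:

```python
from typing import Callable, Optional

def reflect_loop(
    generate: Callable[[Optional[str]], str],
    critique: Callable[[str], Optional[str]],
    max_attempts: int = 3,
) -> str:
    """Generate an answer, ask a critic for feedback, and retry until the critic is satisfied."""
    feedback = None
    answer = ""
    for _ in range(max_attempts):
        answer = generate(feedback)
        feedback = critique(answer)
        if feedback is None:  # critic found no problems
            return answer
    return answer  # out of attempts: return the last answer

# Hypothetical stand-ins for LLM calls.
def generate(feedback: Optional[str]) -> str:
    return "The total is large." if feedback is None else "The total is 28."

def critique(answer: str) -> Optional[str]:
    return None if any(ch.isdigit() for ch in answer) else "Please use concrete numbers."

print(reflect_loop(generate, critique))  # prints: The total is 28.
```

Capping `max_attempts` matters in practice: an LLM critic is not guaranteed to ever approve, so the loop needs a budget.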

Full agent

3 agent reasoning loops

- Sequential

- DAG-based

- Tree-based

Sequential reasoning

ReAct in action
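This trace follows the ReAct pattern: the model emits a Thought and an Action, the runtime executes the tool and feeds back an Observation, and the loop repeats until the model gives an Answer. A stdlib sketch of that loop, in which a scripted policy stands in for the LLM; the script simply replays the two tool calls from this trace and is not how `ReActAgent` decides.

```python
import json

TOOLS = {
    "multiply": lambda a, b: a * b,
    "add": lambda a, b: a + b,
}

def scripted_policy(observations: list) -> dict:
    """Stand-in for the LLM: returns a tool call or a final answer.

    A real agent generates this from the prompt plus all previous observations.
    """
    if not observations:
        return {"action": "multiply", "input": '{"a": 2, "b": 4}'}
    if len(observations) == 1:
        return {"action": "add", "input": json.dumps({"a": 20, "b": observations[-1]})}
    return {"answer": observations[-1]}

def react_loop(policy, max_steps: int = 5):
    observations = []
    for _ in range(max_steps):
        step = policy(observations)
        if "answer" in step:
            print(f"Answer: {step['answer']}")
            return step["answer"]
        args = json.loads(step["input"])  # Action Input arrives as JSON text
        result = TOOLS[step["action"]](**args)
        print(f"Action: {step['action']}  Action Input: {args}  Observation: {result}")
        observations.append(result)
    raise RuntimeError("no answer within max_steps")

react_loop(scripted_policy)
```

Each step depends on the previous observation, which is what makes this loop sequential.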

Thought: I need to use a tool to help me answer the question.

Action: multiply

Action Input: {"a": 2, "b": 4}

Observation: 8

Thought: I need to use a tool to help me answer the question.

Action: add

Action Input: {"a": 20, "b": 8}

Observation: 28

Thought: I can answer without using any more tools.

Answer: 28

DAG-based reasoning

Self reflection
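DAG-based reasoning plans sub-tasks and their dependencies up front, then runs each sub-task as soon as everything it depends on has finished, so independent steps can run in parallel. The sketch below executes the three sub-tasks from the deck's Lyft/Uber plan in dependency order using `graphlib.TopologicalSorter` (Python 3.9+); `run_task` is a hypothetical stand-in for handing a sub-task to an agent worker, and re-planning after results come back is not shown.

```python
from graphlib import TopologicalSorter

# Sub-task -> list of sub-tasks it depends on (names and deps from the Lyft/Uber plan).
plan = {
    "Extract Lyft Risk Factors": [],
    "Extract Uber Risk Factors": [],
    "Combine Risk Factors Summaries": ["Extract Lyft Risk Factors", "Extract Uber Risk Factors"],
}

def run_task(name: str, inputs: dict) -> str:
    # Hypothetical worker call; a real planner delegates each sub-task to an agent.
    return f"result of {name!r} using {sorted(inputs)}"

def execute(plan: dict) -> dict:
    sorter = TopologicalSorter(plan)
    sorter.prepare()  # also raises CycleError if the plan is not a DAG
    results = {}
    while sorter.is_active():
        # Every task returned here has all dependencies done, so they could run in parallel.
        for name in sorter.get_ready():
            inputs = {dep: results[dep] for dep in plan[name]}
            results[name] = run_task(name, inputs)
            sorter.done(name)
    return results

results = execute(plan)
print(list(results))
```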

Structured Planning Agent

from llama_index.core.agent import FunctionCallingAgentWorker, StructuredPlannerAgent

# lyft_tool and uber_tool are assumed to be query engine tools over each company's 10-K

# create the function calling worker for reasoning
worker = FunctionCallingAgentWorker.from_tools(
    [lyft_tool, uber_tool], verbose=True
)

# wrap the worker in the top-level planner
agent = StructuredPlannerAgent(
    worker, tools=[lyft_tool, uber_tool], verbose=True
)

response = agent.chat(
    "Summarize the key risk factors for Lyft and Uber in their 2021 10-K filings."
)

The Plan

=== Initial plan ===

Extract Lyft Risk Factors:

Summarize the key risk factors from Lyft's 2021 10-K filing. -> A summary of the key risk factors for Lyft as outlined in their 2021 10-K filing.

deps: []

Extract Uber Risk Factors:

Summarize the key risk factors from Uber's 2021 10-K filing. -> A summary of the key risk factors for Uber as outlined in their 2021 10-K filing.

deps: []

Combine Risk Factors Summaries:

Combine the summaries of key risk factors for Lyft and Uber from their 2021 10-K filings into a comprehensive overview. -> A comprehensive summary of the key risk factors for both Lyft and Uber as outlined in their respective 2021 10-K filings.

deps: ['Extract Lyft Risk Factors', 'Extract Uber Risk Factors']

Tree-based reasoning

Exploration vs exploitation
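Tree-based agents such as Language Agent Tree Search adapt Monte Carlo Tree Search: when choosing which branch to expand next, they trade a branch's average reward (exploitation) against how rarely it has been tried (exploration). The standard UCT rule sketched below is one common way to score that trade-off; the visit counts and rewards are made up, and the exact scoring inside LlamaIndex's LATS worker may differ.

```python
import math
from dataclasses import dataclass

@dataclass
class Node:
    name: str
    visits: int
    total_reward: float

def uct(node: Node, parent_visits: int, c: float = 1.0) -> float:
    """UCT score: mean reward (exploit) plus a bonus for rarely visited nodes (explore)."""
    if node.visits == 0:
        return math.inf  # always try an unvisited branch first
    exploit = node.total_reward / node.visits
    explore = c * math.sqrt(math.log(parent_visits) / node.visits)
    return exploit + explore

def select_child(children: list[Node], c: float = 1.0) -> Node:
    # Approximates the parent's visit count as the sum of its children's visits.
    parent_visits = sum(ch.visits for ch in children)
    return max(children, key=lambda ch: uct(ch, parent_visits, c))

# Made-up statistics: "a" has the higher average reward, "b" has been tried less.
children = [Node("a", visits=8, total_reward=6.0), Node("b", visits=2, total_reward=1.0)]
print(select_child(children, c=0.1).name)  # → a (low c favors exploitation)
print(select_child(children, c=2.0).name)  # → b (high c favors exploration)
```

The constant `c` is the knob: small values keep refining the best branch found so far, large values spend more rollouts on under-explored alternatives.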

Language Agent Tree Search

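from llama_index.agent.lats import LATSAgentWorker

# query_engine_tools and llm are assumed to be defined already
agent_worker = LATSAgentWorker.from_tools(
    query_engine_tools,
    llm=llm,
    num_expansions=2,
    max_rollouts=3,
    verbose=True,
)

# wrap the worker in an agent so tasks can be created and stepped through
agent = agent_worker.as_agent()

task = agent.create_task(
    "Given the risk factors of Uber and Lyft described in their 10K files, "
    "which company is performing better? Please use concrete numbers to inform your decision."
)

Taking stock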

Still to come:

- Controllability

- Observability

- Customizability

- Multi-agents

Observability

Controllability

Customizability

What's next?

Multi-agent interactions

Recap

All resources:

- Basic RAG

- Agent components

  - Routing

  - Memory

  - Planning

  - Tool use

- Agentic reasoning

  - Sequential

  - DAG-based

  - Tree-based

- Observability

- Controllability

- Customizability

Thanks!

All resources:

Follow me on Twitter:

@seldo

Please don't add me on LinkedIn.

RAG in 2024: advancing to agents (YouTube edition)

By Laurie Voss
