AIACE Project

Data Preparation

Who am I?

Shoichi Yip

Physics BSc @ UniTrento

Currently a research assistant for Prof. Luca Tubiana

Interested in Stat Mech, Complex Systems, Data Analysis, ML

What am I doing

The goal of this project is the development and application of statistical physics and information theory methods to optimize data collection and forecast epidemics.

In particular, I take care of data retrieval, cleaning, preparation and exploratory data analysis.

What data do we need

Epidemics on complex networks can be simulated given data-informed models and parameters.

In our case, we mainly want to account for human mobility: how people move, whom they spend their time with, and what the purpose of their movements is.

We also need data about the epidemic itself: cases, recoveries and deaths.


Data quality is also crucial. We aim to build metapopulation models and to evaluate different coarse-graining scales with proper metrics, so we need data at a wide range of scales to make meaningful comparisons.
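To compare coarse-graining scales, fine-grained counts have to be aggregated into coarser units, e.g. from LAU to NUTS3. The sketch below shows one way to do this with pandas. It assumes additive counts, a LAU-level table with hypothetical `date`, `lau_code` and `cases` columns, and a hypothetical lookup table that maps each LAU code to exactly one `nuts3_code`. All codes are made up.

```python
import pandas as pd


def coarse_grain(df, lookup, fine_key, coarse_key, value_cols, time_key="date"):
    """Sum additive counts from a fine admin level up to a coarser one.

    Assumes `lookup` (a hypothetical table) maps every fine unit to exactly one coarse unit.
    """
    merged = df.merge(lookup[[fine_key, coarse_key]], on=fine_key,
                      how="left", validate="many_to_one")
    # groupby would silently drop unmapped units, so fail loudly instead.
    n_missing = merged[coarse_key].isna().sum()
    if n_missing:
        raise ValueError(f"{n_missing} rows have no {coarse_key} mapping")
    return merged.groupby([time_key, coarse_key], as_index=False)[value_cols].sum()


# Toy data with made-up codes.
lau = pd.DataFrame({
    "date": ["2020-03-01", "2020-03-01", "2020-03-01"],
    "lau_code": ["lau_1", "lau_2", "lau_3"],
    "cases": [5, 2, 7],
})
lookup = pd.DataFrame({
    "lau_code": ["lau_1", "lau_2", "lau_3"],
    "nuts3_code": ["nuts_A", "nuts_A", "nuts_B"],
})
nuts3 = coarse_grain(lau, lookup, "lau_code", "nuts3_code", ["cases"])
print(nuts3)  # nuts_A: 7 cases, nuts_B: 7 cases
```

Only additive quantities can be summed this way. Rates or indices would need weighted averages instead, for example weighted by population.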

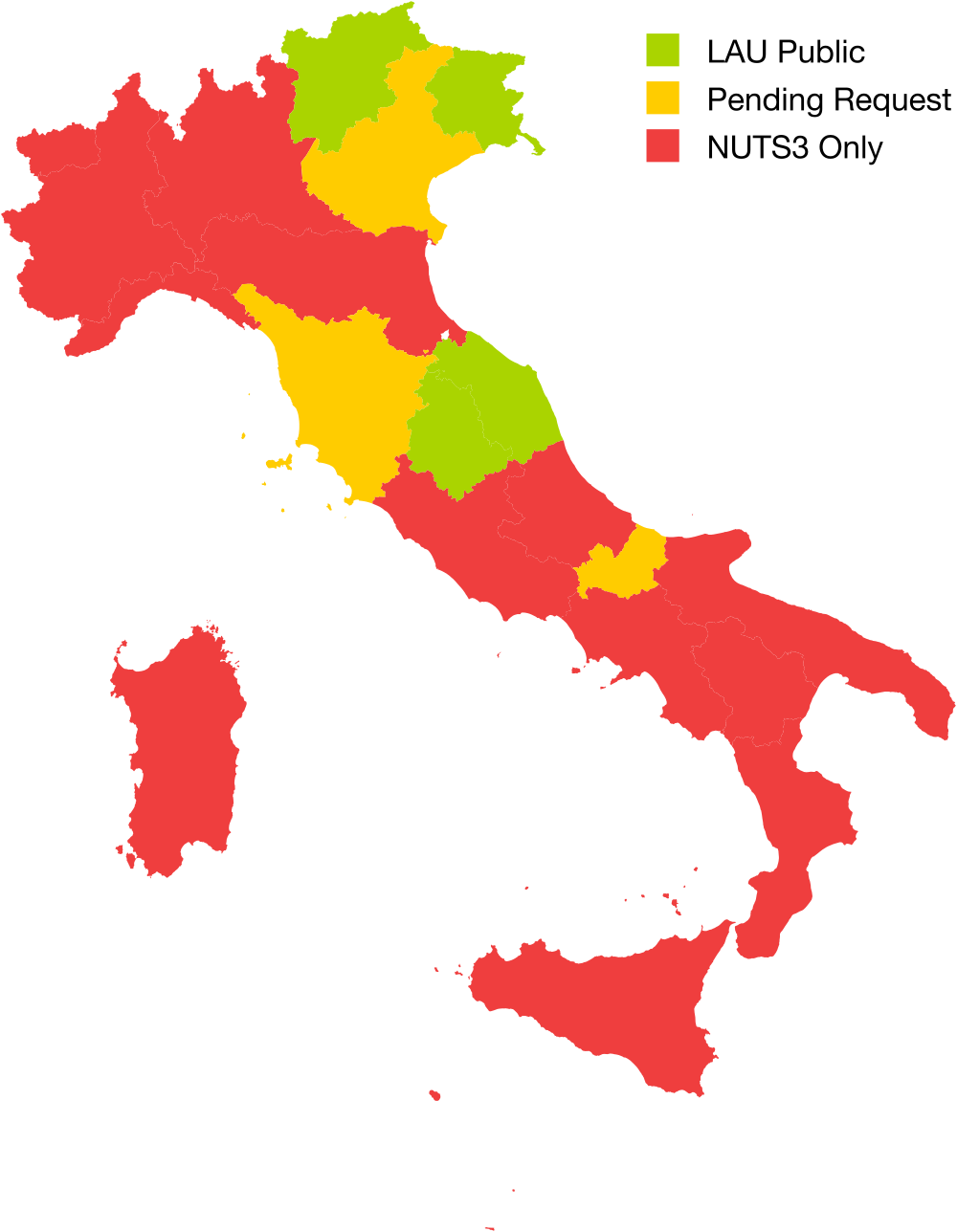

What data do we have

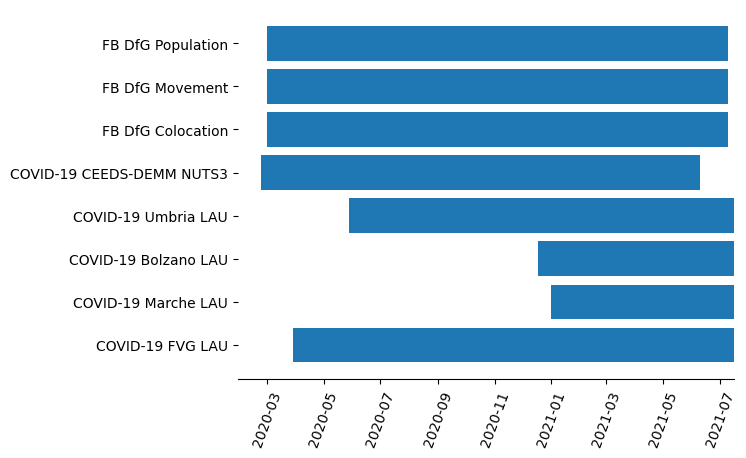

Public data we have (Italy):

- COVID-19 cases, recoveries and deaths at NUTS3 level (CEEDS-DEMM);

- daily deaths baseline and crisis counts at LAU level (ISTAT);

- touristicity index at LAU level (ISTAT);

- NUTS3 and LAU unit shapefiles (OpenPolis);

- origin-destination (OD) matrix, radius of gyration and average degree distribution at NUTS3 level (ISI / Cuebiq);

- COVID-19 cases, recoveries and deaths at LAU level:

  - Bolzano/Bozen;

  - Umbria;

  - Marche;

  - Friuli-Venezia Giulia.
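The radius of gyration is commonly defined as the root-mean-square distance of a user's visited positions from their centre of mass: r_g = sqrt((1/n) * sum_i |r_i - r_cm|^2). The ISI / Cuebiq values are already aggregated at NUTS3 level. Their exact definition may differ, for example by weighting positions by time spent or by using geodesic distances. The sketch below assumes equally weighted positions in planar coordinates (e.g. km).

```python
import math


def radius_of_gyration(points):
    """Root-mean-square distance of visited points from their centre of mass.

    points: list of (x, y) planar coordinates (assumed already projected, e.g. in km).
    Every visit has equal weight here; weighting by time spent is a common variant.
    """
    n = len(points)
    if n == 0:
        raise ValueError("need at least one point")
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    return math.sqrt(sum((x - cx) ** 2 + (y - cy) ** 2 for x, y in points) / n)


# Two visits 10 km apart: the centre of mass is halfway, so r_g = 5 km.
print(radius_of_gyration([(0.0, 0.0), (10.0, 0.0)]))  # 5.0
```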


The restricted access data (Italy) we have:

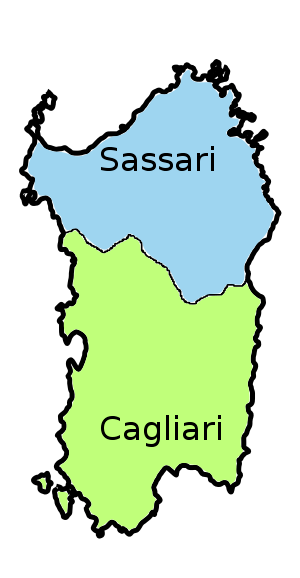

- Colocation data at NUTS3 level (Facebook DfG);

- Movement data at NUTS3 level (Facebook DfG);

- Population data at NUTS3 level (Facebook DfG);

- Movement data at Bing Tiles 16 level (Facebook DfG);

- Population data at Bing Tiles 16 level (Facebook DfG);

- COVID-19 cases, recovers and deaths at LAU level:

- Toscana;

- Molise;

- Veneto.
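Tile-level data follow the Bing Maps tile system. In that system a tile is usually identified by a quadkey: a string with one base-4 digit per zoom level, so 16 digits at level 16. To join tile-level files with point or polygon data, each coordinate can be mapped to its tile. The sketch below follows the standard Bing Maps conversion (Web Mercator, WGS84 degrees). Check the headers of each Facebook DfG export to see whether it stores quadkeys or tile X/Y indices.

```python
import math

MAX_LAT = 85.05112878  # Web Mercator latitude limit used by Bing Maps


def latlon_to_tile(lat: float, lon: float, level: int) -> tuple:
    """Return the (tile_x, tile_y) Bing tile containing a WGS84 point at a given level."""
    lat = min(max(lat, -MAX_LAT), MAX_LAT)
    n = 2 ** level  # number of tiles per side at this level
    sin_lat = math.sin(math.radians(lat))
    x = (lon + 180.0) / 360.0
    y = 0.5 - math.log((1 + sin_lat) / (1 - sin_lat)) / (4 * math.pi)
    # Clamp so that lon = 180 or the latitude limit still falls inside the grid.
    tile_x = min(max(int(math.floor(x * n)), 0), n - 1)
    tile_y = min(max(int(math.floor(y * n)), 0), n - 1)
    return tile_x, tile_y


def tile_to_quadkey(tile_x: int, tile_y: int, level: int) -> str:
    """Interleave the bits of tile_x and tile_y into a base-4 quadkey."""
    digits = []
    for i in range(level, 0, -1):
        mask = 1 << (i - 1)
        digit = 0
        if tile_x & mask:
            digit += 1
        if tile_y & mask:
            digit += 2
        digits.append(str(digit))
    return "".join(digits)


def latlon_to_quadkey(lat: float, lon: float, level: int = 16) -> str:
    return tile_to_quadkey(*latlon_to_tile(lat, lon, level), level)


print(tile_to_quadkey(3, 5, 3))            # 213
print(latlon_to_quadkey(46.07, 11.12, 1))  # 1 (north-east quadrant)
```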

🌍 Data Availability

📆 Data Availability

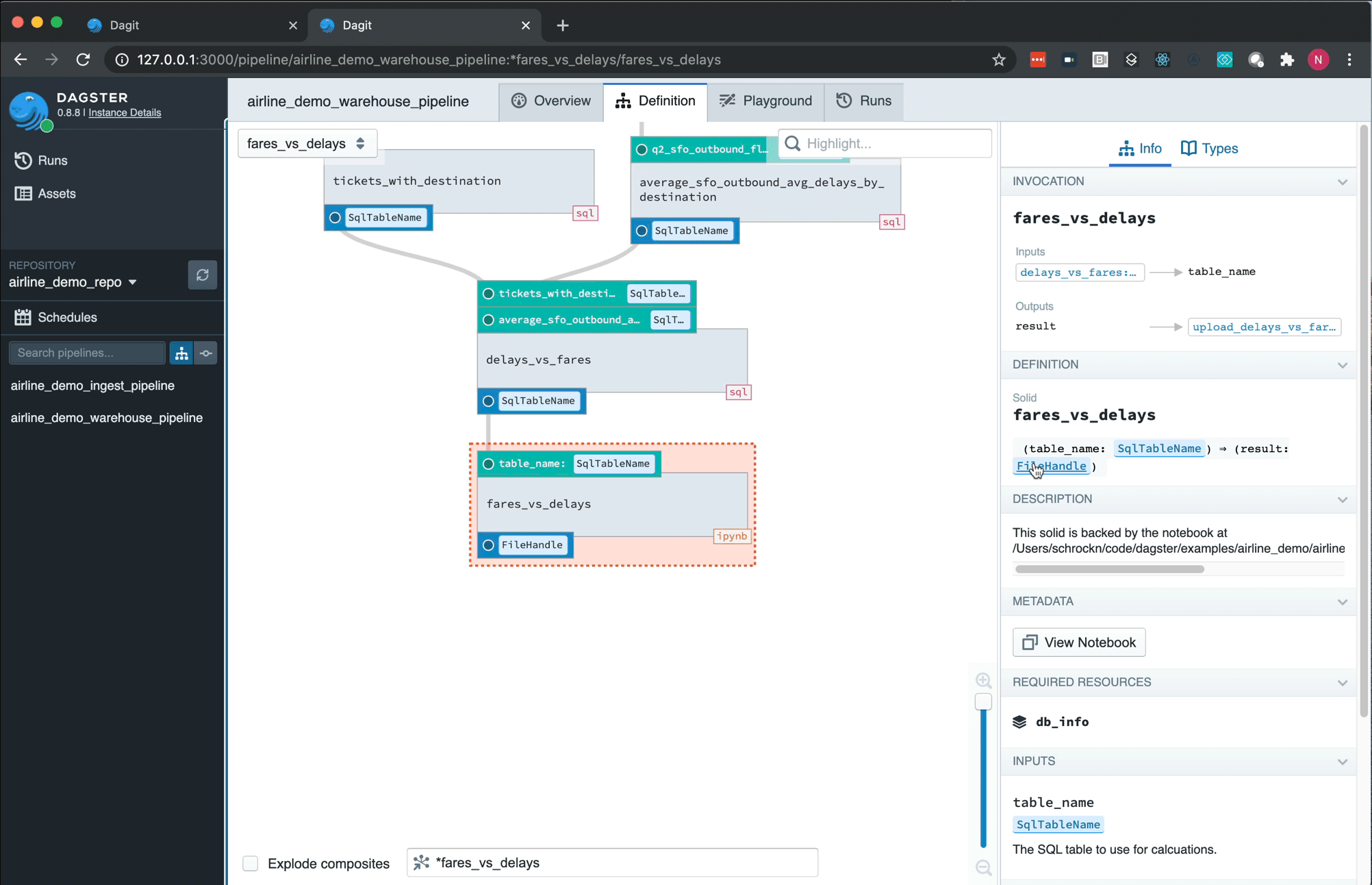

Retrieval

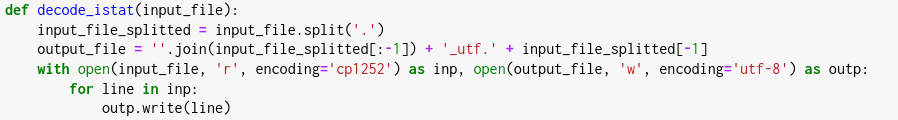

ISTAT datasets

.csv files

ISI Cuebiq dataset

CEEDS-DEMM dataset

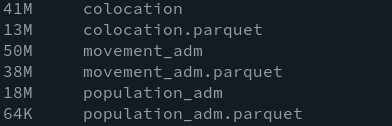

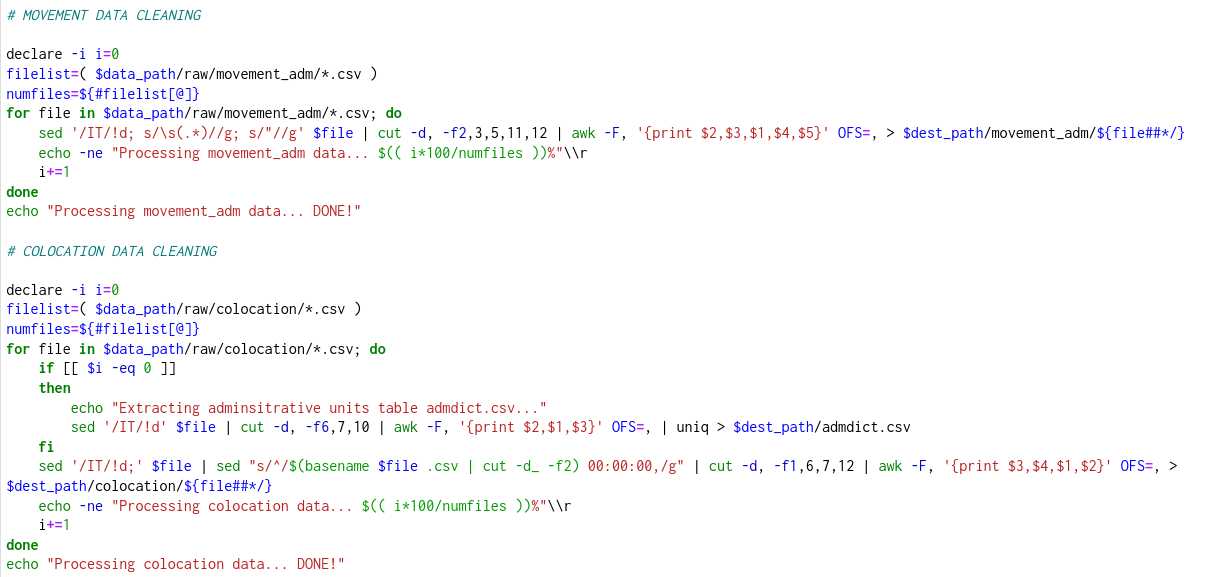

Facebook datasets

UNZIP

folders of zips

UNZIP

OpenPolis shapefiles

topojson file

Folders of csvs

BASH

BASH

clean csvs

dictionary

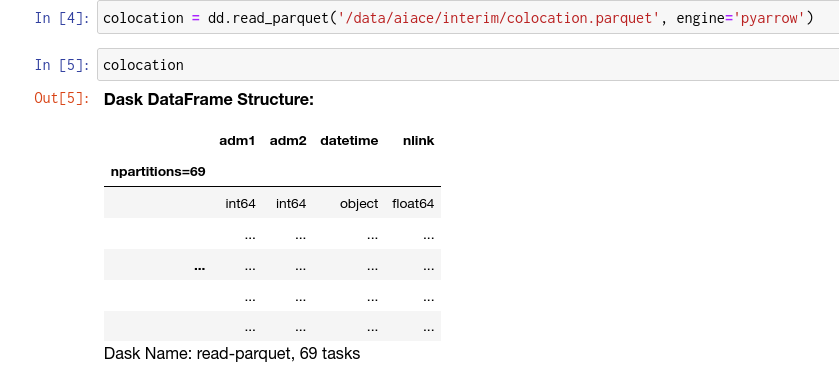

DASK

parquet files

Why parquet files?

- Column-oriented data storage

- Fast reads for column-wise operations: only the columns needed are loaded

- Smaller storage footprint, thanks to per-column compression and encoding

Preprocessing

- Ensure UTF-8 encoding

- Reduce file size by selecting only the useful data (WIP)

- Ensure that columns are read correctly

- Ensure consistency of common features across datasets (i.e. administrative units)
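For the size-reduction step, a common pandas pattern is to parse only the columns that are needed and to choose compact types. Labels that repeat across rows can be stored as `category`, and counts as narrower integers. The sketch below applies this to a toy CSV with hypothetical column names. On real files, measure the gain, e.g. with `memory_usage(deep=True)`.

```python
import io

import pandas as pd

# Toy file with one column we do not need (hypothetical layout).
csv_text = (
    "date,region,n_people,free_text\n"
    "2020-03-01,R1,1200,lorem\n"
    "2020-03-01,R2,900,ipsum\n"
    "2020-03-02,R1,1100,dolor\n"
)

slim = pd.read_csv(
    io.StringIO(csv_text),
    usecols=["date", "region", "n_people"],  # unused columns are never materialised
    dtype={"region": "category", "n_people": "int32"},  # repeated labels, smaller ints
    parse_dates=["date"],
)
print(slim.dtypes)
print(slim.memory_usage(deep=True).sum(), "bytes")
```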

Encoding
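The sketch below re-encodes a text file to UTF-8 using only the standard library. It assumes that any file which is not valid UTF-8 is in cp1252, a Windows Latin encoding. This is a guess that must be checked for each source. The file names and contents are made up.

```python
import tempfile
from pathlib import Path


def to_utf8(src: Path, dst: Path, fallback: str = "cp1252") -> str:
    """Write the text of `src` to `dst` as UTF-8 and return the encoding used to decode it.

    UTF-8 is tried first ("utf-8-sig" also strips a byte-order mark); otherwise the file is
    assumed to be in `fallback` (cp1252 here, an assumption to verify per source).
    """
    raw = src.read_bytes()
    for encoding in ("utf-8-sig", fallback):
        try:
            text = raw.decode(encoding)
        except UnicodeDecodeError:
            continue
        # Encode explicitly so line endings are copied unchanged (no newline translation).
        dst.write_bytes(text.encode("utf-8"))
        return encoding
    raise ValueError(f"{src} is neither UTF-8 nor {fallback}")


with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp, "deaths.csv")
    src.write_bytes("name;deaths\nForlì;3\n".encode("cp1252"))  # toy non-UTF-8 file
    dst = Path(tmp, "deaths_utf8.csv")
    used = to_utf8(src, dst)
    converted = dst.read_text(encoding="utf-8")

print(used)  # cp1252
print(converted)
```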

Text Wrangling
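Reading columns correctly often comes down to a few `read_csv` arguments. The sketch below assumes a hypothetical layout: a semicolon-separated export with decimal commas and zero-padded municipality codes. Reading the code column as a string preserves the leading zeros that an integer parse would drop.

```python
import io

import pandas as pd

# Toy export: ';' separator, ',' as decimal mark, zero-padded codes (hypothetical layout).
csv_text = (
    "code;name;touristicity\n"
    "001001;Town A;0,35\n"
    "022205; Town B ;1,2\n"
)

df = pd.read_csv(
    io.StringIO(csv_text),
    sep=";",
    decimal=",",          # "0,35" -> 0.35
    dtype={"code": str},  # keep leading zeros: "001001", not 1001
)
df["name"] = df["name"].str.strip()  # drop stray spaces around labels
print(df.dtypes)
print(df)
```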

Consistency

Admin units
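Names of administrative units differ across sources in accents, case, apostrophes and bilingual forms such as "Bolzano/Bozen". As a result, joins on raw names can silently drop rows. The sketch below builds a normalised key to match names against a reference table that carries codes. The normalisation rules and the toy tables (with made-up codes) are assumptions. When every source provides official codes, joining on those is more robust.

```python
import re
import unicodedata

import pandas as pd


def name_key(name: str) -> str:
    """Normalise an admin-unit name into a join key (illustrative rules, not exhaustive)."""
    name = name.split("/")[0]  # bilingual names: keep the first part ("Bolzano/Bozen" -> "Bolzano")
    name = unicodedata.normalize("NFKD", name)
    name = "".join(ch for ch in name if not unicodedata.combining(ch))  # "Forlì" -> "Forli"
    name = re.sub(r"[^a-z0-9]+", " ", name.lower())  # apostrophes, hyphens, spaces -> one space
    return name.strip()


# Reference table with made-up codes, and a second source spelling names differently.
reference = pd.DataFrame({
    "code": ["N1", "N2", "N3"],
    "name": ["Bolzano/Bozen", "Forlì-Cesena", "Reggio nell'Emilia"],
})
cases = pd.DataFrame({
    "name": ["BOLZANO", "Forli' - Cesena", "Reggio nell Emilia"],
    "cases": [10, 4, 6],
})

reference["key"] = reference["name"].map(name_key)
cases["key"] = cases["name"].map(name_key)
merged = cases.merge(reference[["key", "code"]], on="key", how="left", validate="many_to_one")
unmatched = merged.loc[merged["code"].isna(), "name"].tolist()  # inspect these by hand
print(merged[["name", "code", "cases"]])
print("unmatched:", unmatched)
```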


Preparation

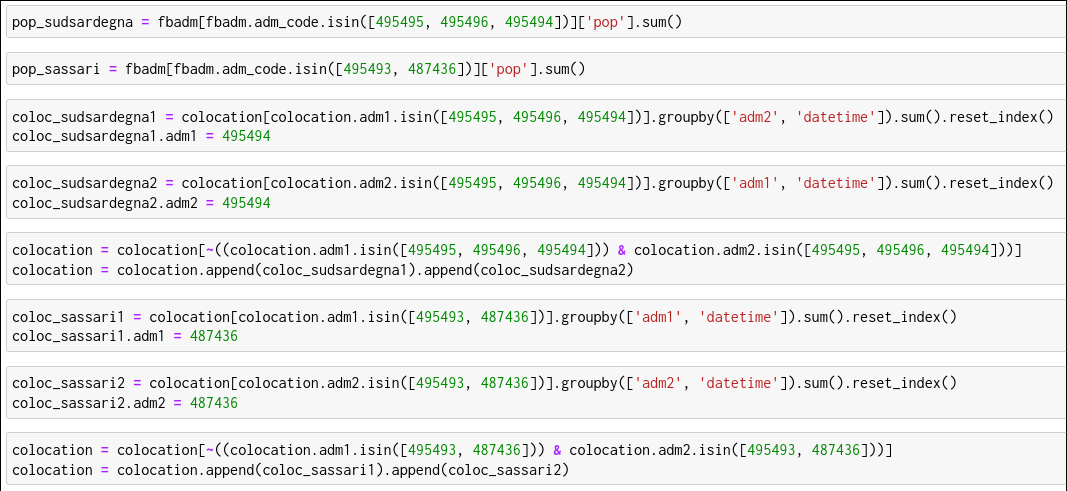

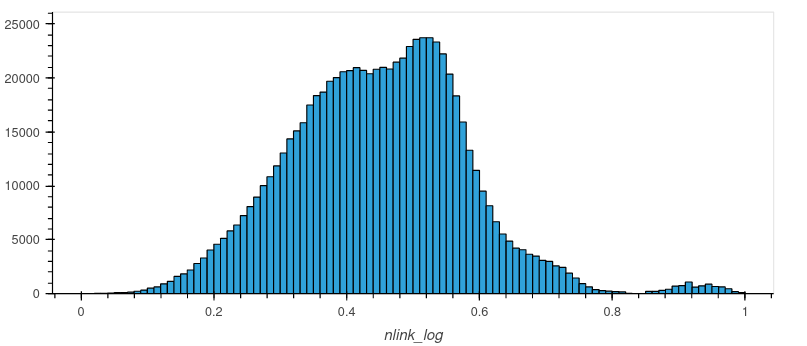

- Transformations and scaling

- Tabular data preparation

- Writing functions for graph preparation

- Preparing dataviz applications (WIP)

- Preparing a pipeline for automation (WIP)

- Documenting datasets and scripts (WIP)
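One example of a transformation is the log transform. Link counts in colocation and movement data can span several orders of magnitude, so taking their log is a natural step before thresholding or plotting. The sketch below adds a log column and a min-max scaled version of it. The input column name `nlink`, the base-10 log and the `+1` offset are assumptions. They are not necessarily how the `nlink_log` column used by `create_network` was computed.

```python
import numpy as np
import pandas as pd


def add_log_and_minmax(df: pd.DataFrame, col: str) -> pd.DataFrame:
    """Return a copy of df with `<col>_log` = log10(1 + x) and `<col>_scaled` = min-max of the log."""
    out = df.copy()
    out[f"{col}_log"] = np.log10(1 + out[col])  # +1 keeps zero counts finite
    lo, hi = out[f"{col}_log"].min(), out[f"{col}_log"].max()
    span = hi - lo
    # Min-max scaling to [0, 1]; a constant column is mapped to 0 to avoid dividing by zero.
    out[f"{col}_scaled"] = (out[f"{col}_log"] - lo) / span if span > 0 else 0.0
    return out


links = pd.DataFrame({"adm1": ["A", "A", "B"], "adm2": ["B", "C", "C"], "nlink": [9, 99, 0]})
res = add_log_and_minmax(links, "nlink")
print(res)
```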

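```python
import networkx as nx
import pandas as pd


def create_network(coloc_df, nodecolor, datetime, cutoff):
    """
    Create a colocation network for one timestamp from (Dask) mobility data.

    Edges: (adm1, adm2) pairs at `datetime` with nlink_log > cutoff.
    Node attribute 'self_nlink': nlink_log of each adm1 in `nodecolor` at that date.
    """
    dt_datetime = pd.to_datetime(datetime)
    # Filter lazily in Dask, then materialise the (small) edge list as pandas.
    df = coloc_df[(coloc_df.datetime == dt_datetime) & (coloc_df.nlink_log > cutoff)].compute()
    print(f"Dataframe is {len(df)} elements long")
    G = nx.from_pandas_edgelist(df, 'adm1', 'adm2', edge_attr='nlink_log')
    # Compute before set_index: indexing in pandas avoids a Dask shuffle.
    nodecolordict_fordate = nodecolor.loc[dt_datetime].compute().set_index('adm1')['nlink_log'].to_dict()
    nx.set_node_attributes(G, nodecolordict_fordate, 'self_nlink')
    return G
```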

Stack

Thanks!

Aiace Data Prep

By Shoichi Yip
