Going Serverless with Azure Functions

Agenda

- What is serverless

- What is Azure Functions

- What can we build with Azure Functions

- How do we build Azure Functions

- How do we deploy Azure Functions

- Tips and tricks

Just ask questions along the way

About me

- 15+ years as a web developer (started with classic ASP)

- 8+ years as a professional developer/architect (.NET, SharePoint and Azure; first Azure certification in 2013)

- Working exclusively with Azure for the last 2.5 years

- Managing Consultant @ Delegate A/S (MS Gold Partner)

- Lives in Malmö, Sweden

- Author of the Let's Encrypt site extension for Azure Web Apps

- CTO at tiimo ApS

mail@sjkp.dk

@simped

http://wp.sjkp.dk

What is Serverless

- Nothing is serverless, obviously: the servers are still there, you just don't manage them

- Has gained significant momentum lately

- Also known as Backend as a Service (BaaS) or Function as a Service (FaaS)

- Popularized by AWS Lambda

- Typical use case: event processing

- Dynamic scale

- Dynamic pricing

Serverless compared

| | Virtual Machines | Containers | Serverless |
|---|---|---|---|
| Unit of scale | Machine | Application | Function |
| Abstraction | Hardware | OS | Language runtime |
| Configure | Machine, storage, networking, OS | Run server, configure applications, scaling | Run code on demand |
| Execution | Multi-threaded, multi-task | Multi-threaded, single task | Single-threaded, single task |
| Execution time | Hours to months | Minutes to days | Microseconds to seconds |
| Unit of cost | Per VM per hour | Per VM per hour | Per memory-second and per request |
| Amazon | EC2 | ECS | AWS Lambda |
| Azure | Azure Virtual Machines | ACS (Azure Container Service) | Azure Functions |

What is Azure Functions

- Microsoft's answer to AWS Lambda

- The natural evolution of Azure WebJobs

- The platform to write code for Logic Apps / Microsoft Flow

- A site extension on Azure Web Apps, plus more

- The easiest way to get started with code in Azure

DEMO

Getting started with Azure Functions

History of Azure Web Apps

Azure Functions vs Web Jobs vs Microsoft Flow vs Logic Apps

| | Functions | Web Jobs | Flow | Logic Apps |
|---|---|---|---|---|
| Audience | Developers | Developers | End users | IT Pros |
| Design tools | VS, Browser | VS | Browser | VS, Browser |
| Scale | Dynamic/App plan | App plan | ? | App plan/Dynamic |

What can we build with Azure Functions

- Triggered execution

- Code in

  - C#, F#, JavaScript (experimental: Bash, PHP, Python, PowerShell)

Typical Azure Function Scenarios

- Simple backends

  - Web backends, mobile backends, bot backends

- Data processing

  - IoT, image processing, data pipelines

- Maintenance tasks

  - Index rebuilds, scheduled jobs

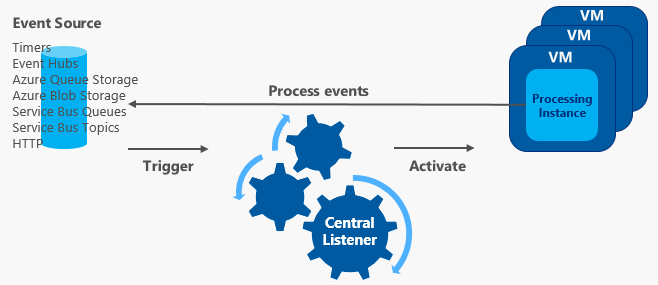
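Scheduled jobs like these usually run on a timer trigger, whose `schedule` is a six-field NCRONTAB expression: `{second} {minute} {hour} {day} {month} {day-of-week}`. To make a schedule such as `0 0 */5 * * *` concrete, here is a small Python sketch that expands the time-of-day fields and lists when it would fire during one day. It only illustrates the field syntax: `expand_field` and `daily_fire_times` are hypothetical helpers, not part of any Azure SDK, and the sketch deliberately rejects anything but `*` in the day, month and day-of-week fields.

```python
def expand_field(field, low, high):
    """Expand one NCRONTAB field ('*', '*/5', '1-3', '5/15', '0,30') into sorted values."""
    values = set()
    for part in field.split(","):
        base, _, step_text = part.partition("/")
        step = int(step_text) if step_text else 1
        if base == "*":
            start, end = low, high
        elif "-" in base:
            first, last = base.split("-")
            start, end = int(first), int(last)
        else:
            start = int(base)
            # "5/15" means "from 5 onwards, every 15"; a bare "5" is a single value
            end = high if step_text else start
        values.update(range(start, end + 1, step))
    return sorted(values)


def daily_fire_times(schedule):
    """List the hh:mm:ss times a six-field schedule fires on any given day."""
    second, minute, hour, day, month, day_of_week = schedule.split()
    if (day, month, day_of_week) != ("*", "*", "*"):
        raise ValueError("sketch only handles '*' for day, month and day-of-week")
    return [
        f"{h:02d}:{m:02d}:{s:02d}"
        for h in expand_field(hour, 0, 23)
        for m in expand_field(minute, 0, 59)
        for s in expand_field(second, 0, 59)
    ]
```

`daily_fire_times("0 0 */5 * * *")` yields 00:00:00, 05:00:00, 10:00:00, 15:00:00 and 20:00:00: "every fifth hour" means hours divisible by 5, not "five hours after the previous run".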

Azure Functions Architecture

Service Plan

Web App

Func App 1

Func App 2

Azure Blob Storage

Central Listener

Function Runtime

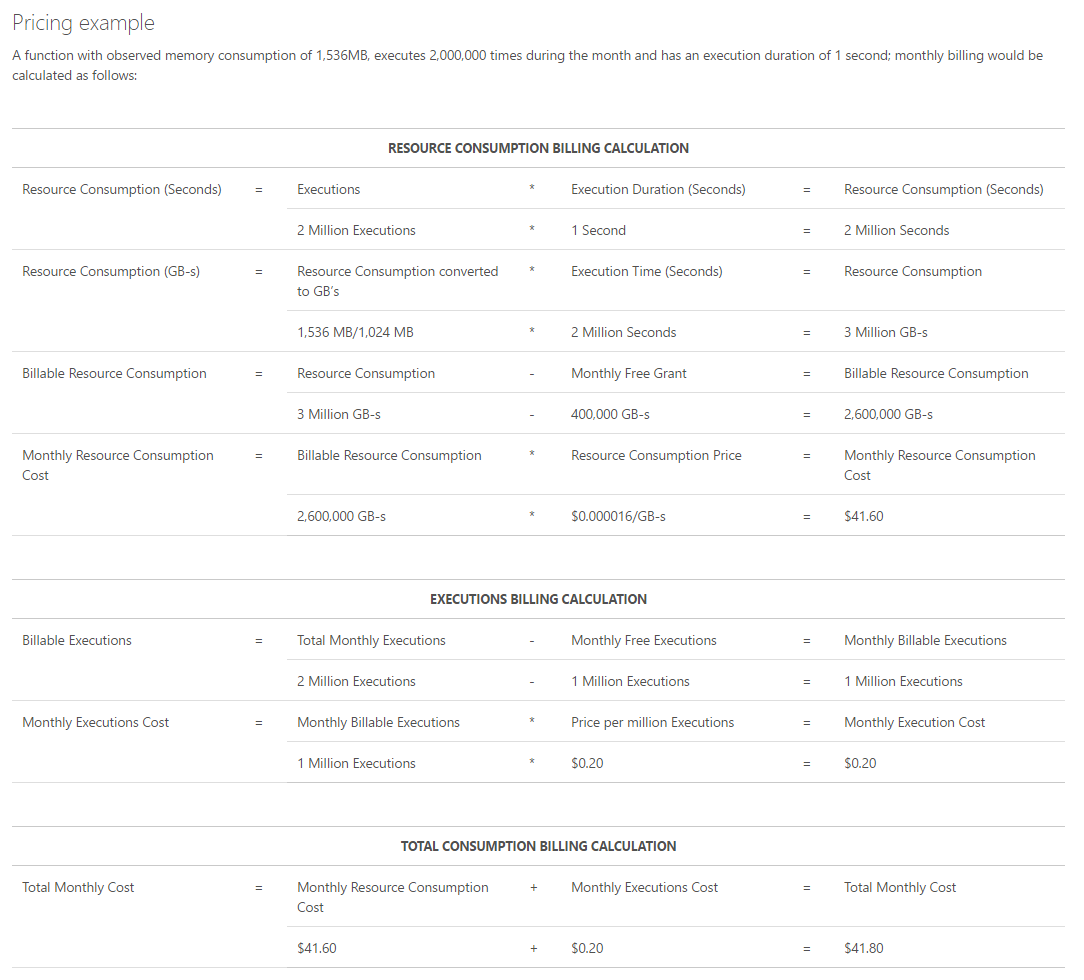

Azure Function Pricing

- Consumption Plan

  - Resource consumption: memory size × execution time (GB-seconds), summed over all functions running in the function app

  - Number of executions

- App Service Plan

  - Tiered billing (Free, Shared, Basic, Standard, Premium)

  - Flat rate
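To see how the two Consumption plan meters combine, here is a rough Python estimator. The rounding rules (memory rounded up to the next 128 MB with a 128 MB minimum, duration rounded up to the next millisecond with a 100 ms minimum) and the default prices and monthly free grant are assumptions taken from Microsoft's published Consumption plan pricing; they change over time, so pass the current values from the Azure Functions pricing page rather than trusting the defaults. The function names are hypothetical.

```python
import math


def execution_gb_seconds(memory_mb, duration_ms):
    """GB-seconds billed for one execution, under the assumed rounding rules."""
    # Assumption: memory is billed in 128 MB steps, at least 128 MB
    billed_mb = max(128, math.ceil(memory_mb / 128) * 128)
    # Assumption: duration is billed per ms, at least 100 ms
    billed_ms = max(100, math.ceil(duration_ms))
    return (billed_mb / 1024) * (billed_ms / 1000)


def monthly_cost(executions, memory_mb, duration_ms,
                 price_per_gb_s=0.000016, price_per_million=0.20,
                 free_gb_s=400_000, free_executions=1_000_000):
    """Estimated monthly bill in USD: resource meter plus execution meter, minus free grants."""
    gb_s = executions * execution_gb_seconds(memory_mb, duration_ms)
    resource_cost = max(0.0, gb_s - free_gb_s) * price_per_gb_s
    execution_cost = max(0, executions - free_executions) / 1_000_000 * price_per_million
    return round(resource_cost + execution_cost, 2)
```

For example, 3 million executions a month at 512 MB and one second each come to 1.5 million GB-s; after the assumed free grant that is about $17.60 for resources plus $0.40 for executions, so `monthly_cost(3_000_000, 512, 1000)` returns `18.0`. An App Service plan, by contrast, costs the same flat rate whether or not functions run.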

DEMO

Create a Function using the portal

Function structure

```
|---data
| |---Functions
| |---sampledata
| | QueueTriggerCSharp1.dat
| |
| |---secrets
| host.json
| queuetriggercsharp1.json
|
|---LogFiles
| |---Application
| | |---Functions
| | |---function
| | | ----QueueTriggerCSharp1
| | |---Host
| | debug_sentinel
| |
| |---kudu
| |---deployment
| |---trace
| 2017-03-05T09-50-51_e92a00_001_Startup_GET__0s.xml
| RD000D3AB10CF5-fa094f48-f993-47b4-8b65-8a435c854163.txt
|
|---site
|---deployments
| ----tools
|---diagnostics
| settings.json
|
|---locks
|---tools
|---wwwroot
| host.json
| hostingstart.html
|
|---QueueTriggerCSharp1
function.json
readme.md
run.csx
```

Important files

- function.json

- project.json

- run.csx

- host.json

function.json

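```json
{
  "bindings": [
    {
      "type": "queueTrigger",
      "name": "myQueueItem",
      "direction": "in",
      "queueName": "image-resize"
    },
    {
      "type": "blob",
      "name": "original",
      "direction": "in",
      "path": "images-original/{name}"
    },
    {
      "type": "blob",
      "name": "resized",
      "direction": "out",
      "path": "images-resized/{name}"
    }
  ]
}
```

Here `{name}` is resolved from a `name` property of the JSON queue message. A second example, a disabled timer-triggered JavaScript function (`test.js`) that also runs on startup:

```json
{
  "disabled": true,
  "scriptFile": "test.js",
  "bindings": [
    {
      "type": "timerTrigger",
      "name": "myTimer",
      "direction": "in",
      "schedule": "0 0 */5 * * *",
      "runOnStartup": true
    }
  ]
}
```

project.json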

```json
{
  "frameworks": {
    "net46": {
      "dependencies": {
        "Microsoft.ProjectOxford.Face": "1.1.0"
      }
    }
  }
}
```

- Reference third-party NuGet packages

```csharp
using System;
using Microsoft.ProjectOxford.Face; // Just reference the NuGet package with a using directive

public static void Run(string myQueueItem, TraceWriter log)
{
    log.Info($"C# Queue trigger function processed: {myQueueItem}");
}
```

run.csx

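```csharp
#r "Microsoft.WindowsAzure.Storage"
#r "Newtonsoft.Json"
// Private assemblies uploaded to the function's bin folder are referenced the same way:
//#r "MyCustomDll.dll"
// Share code between functions by loading another .csx file
#load "../shared/models.csx"

using System;
using System.Net.Http;
using Microsoft.ProjectOxford.Face;
using Newtonsoft.Json;
using Microsoft.WindowsAzure.Storage;

// Reuse one HttpClient across invocations instead of creating one per call
private static readonly HttpClient client = new HttpClient();

public static void Run(string myQueueItem, TraceWriter log)
{
    log.Info($"C# Queue trigger function processed: {myQueueItem}");
    // MyTestClass is defined in the loaded models.csx
    var obj = JsonConvert.SerializeObject(new MyTestClass { Name = "hello world" });
    log.Info(obj);
}
```

```csharp
public class MyTestClass
{
    public int Id { get; set; }
    public string Name { get; set; }
}
```

models.csx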

host.json

```json
{
  // The unique ID for this job host. Can be a lower case GUID
  // with dashes removed (Required)
  "id": "9f4ea53c5136457d883d685e57164f08",
  // Value indicating the timeout duration for all functions.
  // In Dynamic SKUs, the valid range is from 1 second to 5 minutes and the default value is 5 minutes.
  // In Paid SKUs there is no limit and the default value is null (indicating no timeout).
  "functionTimeout": "00:05:00",
  // Configuration settings for 'http' triggers. (Optional)
  "http": {
    // Defines the default route prefix that applies to all routes. Default is 'api'.
    // Use an empty string to remove the prefix.
    "routePrefix": "api"
  },
  // Set of shared code directories that should be monitored for changes to ensure that
  // when code in these directories is changed, it is picked up by your functions
  "watchDirectories": [ "Shared" ],
  // Array of functions to load. Only functions in this list will be enabled.
  "functions": [ "QueueProcessor", "GitHubWebHook" ],
  // Configuration settings for 'queue' triggers. (Optional)
  "queues": {
    // The maximum interval in milliseconds between
    // queue polls. The default is 1 minute.
    "maxPollingInterval": 2000,
    // The number of queue messages to retrieve and process in
    // parallel (per job function). The default is 16 and the maximum is 32.
    "batchSize": 16,
    // The number of times to try processing a message before
    // moving it to the poison queue. The default is 5.
    "maxDequeueCount": 5,
    // The threshold at which a new batch of messages will be fetched.
    // The default is batchSize/2.
    "newBatchThreshold": 8
  },
  // Configuration settings for 'serviceBus' triggers. (Optional)
  "serviceBus": {
    // The maximum number of concurrent calls to the callback the message
    // pump should initiate. The default is 16.
    "maxConcurrentCalls": 16,
    // The default PrefetchCount that will be used by the underlying MessageReceiver.
    "prefetchCount": 100,
    // the maximum duration within which the message lock will be renewed automatically.
    "autoRenewTimeout": "00:05:00"
  },
  // Configuration settings for 'eventHub' triggers. (Optional)
  "eventHub": {
    // The maximum event count received per receive loop. The default is 64.
    "maxBatchSize": 64,
    // The default PrefetchCount that will be used by the underlying EventProcessorHost.
    "prefetchCount": 256
  },
  // Configuration settings for logging/tracing behavior. (Optional)
  "tracing": {
    // The tracing level used for console logging.
    // The default is 'info'. Options are: { off, error, warning, info, verbose }
    "consoleLevel": "verbose",
    // Value determining what level of file logging is enabled.
    // The default is 'debugOnly'. Options are: { never, always, debugOnly }
    "fileLoggingMode": "debugOnly"
  },
  // Configuration settings for Singleton lock behavior. (Optional)
  "singleton": {
    // The period that function level locks are taken for (they will auto renew)
    "lockPeriod": "00:00:15",
    // The period that listener locks are taken for
    "listenerLockPeriod": "00:01:00",
    // The time interval used for listener lock recovery if a listener lock
    // couldn't be acquired on startup
    "listenerLockRecoveryPollingInterval": "00:01:00",
    // The maximum amount of time the runtime will try to acquire a lock
    "lockAcquisitionTimeout": "00:01:00",
    // The interval between lock acquisition attempts
    "lockAcquisitionPollingInterval": "00:00:03"
  }
}
```

Consumption Plan scaling

- Startup delay of up to 10 minutes

- Max execution time per function job 5 min

- Scales up to 60 concurrent function job hosts

- Each function job host can run multiple function jobs
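The 5-minute cap is the `functionTimeout` value in host.json, written as an `hh:mm:ss` TimeSpan. A hypothetical pre-deployment check in Python could parse it and reject values outside the 1 second to 5 minute range that the host.json comments give for Dynamic SKUs. The strict `hh:mm:ss` pattern is a simplification (TimeSpan also accepts forms such as `d.hh:mm:ss`), and both helper names are made up for this sketch.

```python
import re

# Range quoted in the host.json comments for Dynamic (Consumption) SKUs
CONSUMPTION_MIN_SECONDS = 1
CONSUMPTION_MAX_SECONDS = 5 * 60


def parse_timespan(value):
    """Convert an 'hh:mm:ss' string into a number of seconds."""
    match = re.fullmatch(r"(\d{2}):([0-5]\d):([0-5]\d)", value)
    if match is None:
        raise ValueError(f"expected hh:mm:ss, got {value!r}")
    hours, minutes, seconds = (int(group) for group in match.groups())
    return hours * 3600 + minutes * 60 + seconds


def check_consumption_timeout(value):
    """Return functionTimeout in seconds, or raise if it is outside the Dynamic range."""
    total = parse_timespan(value)
    if not CONSUMPTION_MIN_SECONDS <= total <= CONSUMPTION_MAX_SECONDS:
        raise ValueError(f"functionTimeout {value} is outside 00:00:01..00:05:00")
    return total
```

`check_consumption_timeout("00:05:00")` returns 300, while `"00:10:00"` raises, which is the kind of value that only makes sense on an App Service plan.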

How to Develop with Visual Studio

- Azure SDK 2.9.3

- Visual Studio Tools for Azure Functions

- Uses the Azure Functions CLI (Node.js tools) for local debugging

DEMO

Develop an Azure Function blob trigger with Visual Studio

Continuous Integration

- Azure Functions run on Azure Web Apps, so the usual Web App deployment options apply:

  - Git (makes the function read-only in the portal)

  - Web Deploy (as used by Visual Studio)

  - FTP

  - Kudu API

DEMO

Publish using GitHub

Is that good ALM?

- Pushing source code to production: I don't like it

- Might be OK for simple solutions

- We could push to different environments using branches (which I also don't like)

- To improve the ALM story:

  - We need compiled code

  - We need a build step

  - We need a test step

  - Preferably, we need multiple environments

Precompiled Functions

- Problems using the web IDE (.csx)

  - No IntelliSense

  - Hard to maintain large code bases

  - Debugging means Console.WriteLine (not cool)

- Precompiled functions

  - Fix all of the above

  - But we lose some simplicity/agility

- Conclusion

  - The portal is okay for prototypes

  - The portal is okay for simple solutions

  - If you want a traditional .NET experience, precompiled functions are your friend

Precompiled functions, how to

- Move the code to a .NET class library

- Add the NuGet packages (Microsoft.Azure.WebJobs.Extensions / Microsoft.Azure.WebJobs)

- Update function.json to point at the compiled assembly
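Compared with a script function, the third step only touches two properties: `scriptFile` points at the compiled assembly and `entryPoint` names the static method as `Namespace.Class.Method`. If you generate function.json as part of a build, a minimal Python sketch could look like this; `precompiled_function_json` is a hypothetical helper, and the `bin/<assembly>.dll` location simply mirrors the path used on this slide (adjust it if the DLL lives elsewhere).

```python
import json


def precompiled_function_json(assembly, entry_point, bindings, disabled=False):
    """Return a function.json document for a method compiled into `assembly`."""
    parts = entry_point.split(".")
    # Expect at least Class.Method; empty segments such as "A..Run" are rejected
    if len(parts) < 2 or not all(parts):
        raise ValueError(f"entryPoint must look like 'Namespace.Class.Method', got {entry_point!r}")
    return {
        "disabled": disabled,
        # Path relative to the function's folder, mirroring the slide's example
        "scriptFile": f"bin/{assembly}.dll",
        "entryPoint": entry_point,
        "bindings": bindings,
    }


if __name__ == "__main__":
    http_bindings = [
        {"authLevel": "function", "name": "req", "type": "httpTrigger", "direction": "in"},
        {"name": "res", "type": "http", "direction": "out"},
    ]
    document = precompiled_function_json(
        "SJKP.PrecompiledFunctions", "SJKP.PrecompiledFunctions.Functions.Run", http_bindings)
    print(json.dumps(document, indent=2))
```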

{

"disabled": false,

"scriptFile": "bin/SJKP.PrecompiledFunctions.dll",

"entryPoint": "SJKP.PrecompiledFunctions.Functions.Run",

"bindings": [

{

"authLevel": "function",

"name": "req",

"type": "httpTrigger",

"direction": "in"

},

{

"name": "res",

"type": "http",

"direction": "out"

}

]

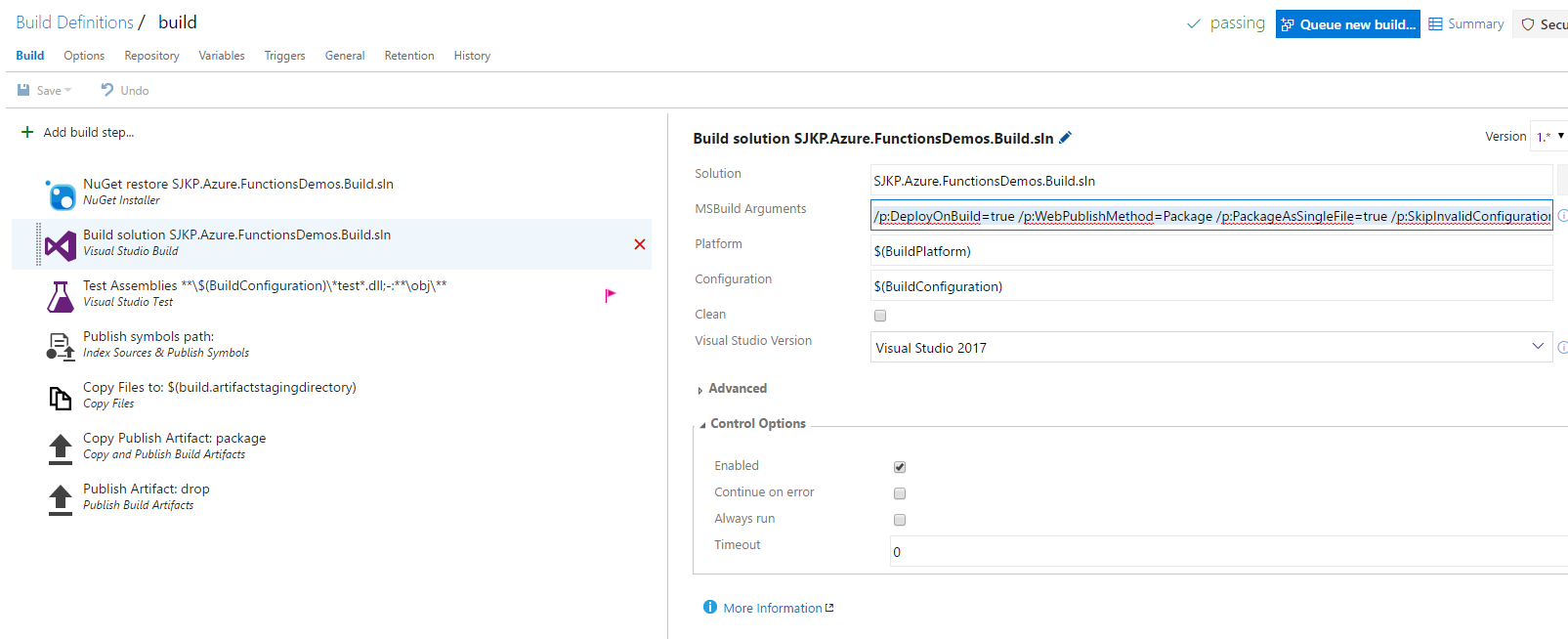

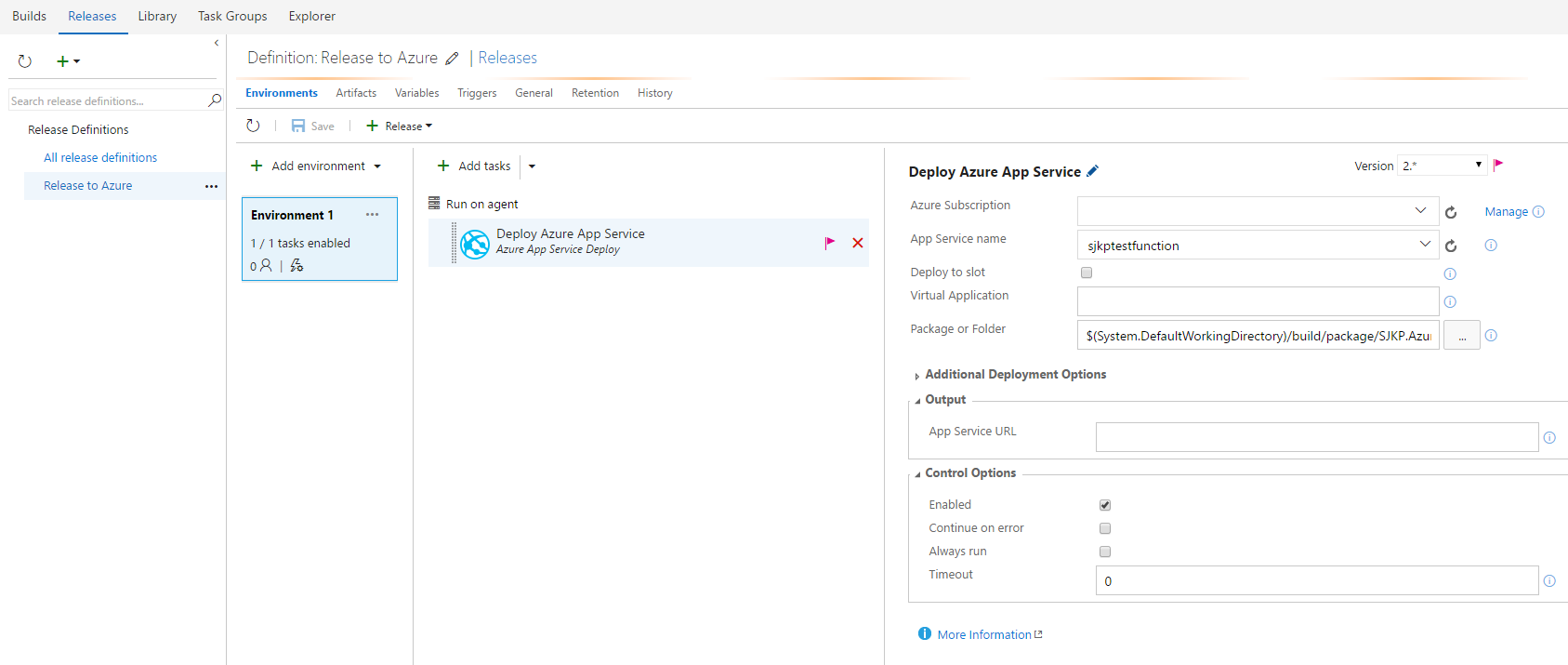

}Build and Deploy

- Building the project is now like building any .NET solution

- To deploy, we need to copy the function app folders to the web app

- Normally we would use a Web Deploy package (but that is broken in the function app project template)

- So we have to copy the files around ourselves
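One way to script that copy is to zip the function app folder (host.json, one folder per function with its function.json, and the compiled bin output) and push it through the Kudu REST API, whose `PUT /api/zip/{path}/` endpoint extracts an uploaded zip into the given folder. This Python sketch only builds the archive and the target URL; the upload itself (an authenticated HTTP PUT using the app's deployment credentials) is left out, and `collect_folder`, `build_zip` and `kudu_zip_url` are hypothetical helper names.

```python
import io
import os
import zipfile


def kudu_zip_url(app_name, target="site/wwwroot"):
    """URL of Kudu's zip endpoint for a function app (upload the archive with HTTP PUT)."""
    return f"https://{app_name}.scm.azurewebsites.net/api/zip/{target.strip('/')}/"


def collect_folder(root):
    """Read every file under `root` into a {relative_path: bytes} mapping."""
    files = {}
    for folder, _dirs, names in os.walk(root):
        for name in names:
            full_path = os.path.join(folder, name)
            # Forward slashes so entries extract correctly under site/wwwroot
            relative = os.path.relpath(full_path, root).replace(os.sep, "/")
            with open(full_path, "rb") as handle:
                files[relative] = handle.read()
    return files


def build_zip(files):
    """Zip a {relative_path: bytes} mapping in memory and return the archive bytes."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for relative_path, content in sorted(files.items()):
            archive.writestr(relative_path, content)
    return buffer.getvalue()
```

Typical use would be `build_zip(collect_folder("path/to/build/output"))`, followed by a PUT of the bytes to `kudu_zip_url("<your-app>")`. Splitting collection from zipping keeps the archive logic testable without touching the file system.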

DEMO

Setting up Web Deploy continuous integration for Azure Functions

Building with VSTS

MSBuild arguments:

```
/p:DeployOnBuild=true /p:WebPublishMethod=Package /p:PackageAsSingleFile=true /p:SkipInvalidConfigurations=true /p:PackageLocation="$(build.stagingDirectory)"
```

Save the Web Deploy package

Set up a release definition

Creating an Azure Function Environment Using ARM Templates

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "storageAccountType": {
      "type": "string",
      "defaultValue": "Standard_LRS",
      "allowedValues": [
        "Standard_LRS",
        "Standard_GRS",
        "Standard_ZRS",
        "Premium_LRS"
      ],
      "metadata": {
        "description": "Storage Account type"
      }
    },
    "functionAppName": {
      "type": "string",
      "defaultValue": "sjkptestfunction"
    },
    "functionAppDomainName": {
      "type": "string",
      "defaultValue": "myfunc.sjkp.dk"
    }
  },
  "variables": {
    "storageAccountName": "[concat('storage', uniqueString(resourceGroup().id))]",
    "dynamicAppServicePlanName": "[concat('dynamic', uniqueString(resourceGroup().id))]"
  },
  "resources": [
    {
      "type": "Microsoft.Storage/storageAccounts",
      "name": "[variables('storageAccountName')]",
      "apiVersion": "2015-06-15",
      "location": "[resourceGroup().location]",
      "properties": {
        "accountType": "[parameters('storageAccountType')]"
      }
    },
    {
      "type": "Microsoft.Web/serverfarms",
      "apiVersion": "2015-04-01",
      "name": "[variables('dynamicAppServicePlanName')]",
      "location": "[resourceGroup().location]",
      "properties": {
        "name": "[variables('dynamicAppServicePlanName')]",
        "computeMode": "Dynamic",
        "sku": "Dynamic"
      }
    },
    {
      "apiVersion": "2015-08-01",
      "type": "Microsoft.Web/sites",
      "name": "[parameters('functionAppName')]",
      "location": "[resourceGroup().location]",
      "kind": "functionapp",
      "properties": {
        "name": "[parameters('functionAppName')]",
        "serverFarmId": "[resourceId('Microsoft.Web/serverfarms', variables('dynamicAppServicePlanName'))]"
      },
      "dependsOn": [
        "[resourceId('Microsoft.Web/serverfarms', variables('dynamicAppServicePlanName'))]",
        "[resourceId('Microsoft.Storage/storageAccounts', variables('storageAccountName'))]"
      ],
      "resources": [
        {
          "apiVersion": "2015-08-01",
          "type": "hostNameBindings",
          "name": "[parameters('functionAppDomainName')]",
          "dependsOn": [
            "[concat('Microsoft.Web/sites/', parameters('functionAppName'))]"
          ],
          "tags": {
            "displayName": "hostNameBinding"
          },
          "properties": {
            "domainId": null,
            "hostNameType": "Verified",
            "siteName": "[parameters('functionAppName')]"
          }
        },
        {
          "apiVersion": "2016-03-01",
          "name": "appsettings",
          "type": "config",
          "dependsOn": [
            "[resourceId('Microsoft.Web/sites', parameters('functionAppName'))]",
            "[resourceId('Microsoft.Storage/storageAccounts', variables('storageAccountName'))]"
          ],
          "properties": {
            "AzureWebJobsStorage": "[concat('DefaultEndpointsProtocol=https;AccountName=',variables('storageAccountName'),';AccountKey=',listkeys(resourceId('Microsoft.Storage/storageAccounts', variables('storageAccountName')), '2015-05-01-preview').key1,';')]",
            "AzureWebJobsDashboard": "[concat('DefaultEndpointsProtocol=https;AccountName=',variables('storageAccountName'),';AccountKey=',listkeys(resourceId('Microsoft.Storage/storageAccounts', variables('storageAccountName')), '2015-05-01-preview').key1,';')]",
            "FUNCTIONS_EXTENSION_VERSION": "latest"
          }
        },
        {
          "apiVersion": "2015-08-01",
          "name": "TestFunction",
          "type": "functions",
          "dependsOn": [
            "[resourceId('Microsoft.Web/sites', parameters('functionAppName'))]"
          ],
          "properties": {
            "config": {
              "bindings": [
                {
                  "authLevel": "anonymous",
                  "name": "req",
                  "type": "httpTrigger",
                  "direction": "in"
                },
                {
                  "name": "res",
                  "type": "http",
                  "direction": "out"
                }
              ]
            },
            "files": {
              "run.csx": "using System.Net;\r\npublic static async Task<HttpResponseMessage> Run(HttpRequestMessage req, TraceWriter log)\r\n{\r\n log.Info($\"C# HTTP trigger function processed a request. RequestUri={req.RequestUri}\");\r\n\r\n // parse query parameter\r\n string name = req.GetQueryNameValuePairs()\r\n .FirstOrDefault(q => string.Compare(q.Key, \"name\", true) == 0)\r\n .Value;\r\n\r\n // Get request body\r\n dynamic data = await req.Content.ReadAsAsync<object>();\r\n\r\n // Set name to query string or body data\r\n name = name ?? data?.name;\r\n\r\n return name == null\r\n ? req.CreateResponse(HttpStatusCode.BadRequest, \"Please pass a name on the query string or in the request body\")\r\n : req.CreateResponse(HttpStatusCode.OK, \"Hello \" + name);\r\n}"
            }
          }
        }
      ]
    }
  ],
  "outputs": {}
}
```

ARM template more info

- Same as when deploying web apps

- Remember to set the app settings for the storage account

- Both Dynamic (Consumption) and Standard App Service plans can be used

- It is possible to deploy files into the function by embedding them in the template

- It is also possible to assign custom host names (requires DNS to be set up first)
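The `AzureWebJobsStorage` and `AzureWebJobsDashboard` settings in the template are plain storage connection strings assembled with `concat()`. If you set them from a script instead of the template, a small Python sketch can mirror that format and parse it back; the helper names are hypothetical, and the three settings returned are exactly the ones the template sets.

```python
def storage_connection_string(account_name, account_key, protocol="https"):
    """Mirror the template's concat(): 'DefaultEndpointsProtocol=...;AccountName=...;AccountKey=...;'."""
    return (f"DefaultEndpointsProtocol={protocol};"
            f"AccountName={account_name};AccountKey={account_key};")


def parse_connection_string(value):
    """Split a connection string into a dict, keeping '=' padding inside base64 keys."""
    settings = {}
    for pair in value.split(";"):
        if pair:
            # Split on the first '=' only; account keys often end in '=='
            key, _, val = pair.partition("=")
            settings[key] = val
    return settings


def function_app_settings(account_name, account_key):
    """The app settings the ARM template configures for the function app."""
    connection = storage_connection_string(account_name, account_key)
    return {
        "AzureWebJobsStorage": connection,
        "AzureWebJobsDashboard": connection,
        "FUNCTIONS_EXTENSION_VERSION": "latest",
    }
```

Splitting on the first `=` only matters because storage account keys are base64 and usually end in `==`.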

Tips and Tricks

Nothing Runs Forever

- Max run time for a function on the Dynamic (Consumption) plan: 5 minutes

- Then 5 seconds to clean up before the process is terminated

- Use a CancellationToken if you need to do things on shutdown

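```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Manually triggered function that keeps working until the host signals shutdown
public static async Task Run(string input, TraceWriter log, CancellationToken cancel)
{
    log.Info($"C# manually triggered function called with input: {input}");
    do
    {
        log.Info($"Output {DateTime.Now}");
        await Task.Delay(2500);
    } while (!cancel.IsCancellationRequested);

    // Shutdown requested: save state here, within the short clean-up window
    log.Info($"stopping: {cancel.IsCancellationRequested}");
}
```

Queues and Host.json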

- Use queues in your function design

- When you use queues, ensure that you have configured host.json

- Check the poison queue
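The retry rules behind these bullets can be modelled in a few lines. This is a simplified, single-threaded Python sketch, not the WebJobs SDK's implementation: each delivery bumps the message's dequeue count, a failing message is retried until the count reaches `maxDequeueCount`, and it is then moved to a queue named `<queue>-poison` (so failures from `binfiles` end up in `binfiles-poison`). The `batchSize + newBatchThreshold` concurrency bound follows our reading of the host.json documentation; all helper names are hypothetical.

```python
from collections import deque


def poison_queue_name(queue_name):
    """Failed messages end up in a queue named '<queue>-poison'."""
    return f"{queue_name}-poison"


def max_concurrent_messages(batch_size=16, new_batch_threshold=None):
    """Per-function upper bound on messages in flight: batchSize + newBatchThreshold."""
    if new_batch_threshold is None:
        new_batch_threshold = batch_size // 2  # host.json default: batchSize/2
    return batch_size + new_batch_threshold


def simulate_queue(messages, handler, max_dequeue_count=5):
    """Simplified model of queue-trigger retries; returns (processed, poisoned)."""
    pending = deque((message, 0) for message in messages)
    processed, poisoned = [], []
    while pending:
        message, dequeue_count = pending.popleft()
        dequeue_count += 1  # every delivery increments the dequeue count
        try:
            handler(message)
            processed.append(message)
        except Exception:
            if dequeue_count >= max_dequeue_count:
                poisoned.append(message)  # would be written to poison_queue_name(...)
            else:
                # In Azure the message becomes visible again after a delay; here it just requeues
                pending.append((message, dequeue_count))
    return processed, poisoned
```

With the defaults, `simulate_queue(["1", "bad", "2"], int)` returns `(["1", "2"], ["bad"])`: the unparsable message is attempted five times before it is poisoned, which is why checking the poison queue belongs in your routine.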

```json
"queues": {
  // The maximum interval in milliseconds between
  // queue polls. The default is 1 minute.
  "maxPollingInterval": 2000,
  // The number of queue messages to retrieve and process in
  // parallel (per job function). The default is 16 and the maximum is 32.
  "batchSize": 16,
  // The number of times to try processing a message before
  // moving it to the poison queue. The default is 5.
  "maxDequeueCount": 5,
  // The threshold at which a new batch of messages will be fetched.
  // The default is batchSize/2.
  "newBatchThreshold": 8
},
```

Reprocess Poison Queue

#r "Microsoft.WindowsAzure.Storage"

using System;

using System.Configuration;

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.Blob;

using Microsoft.WindowsAzure.Storage.Queue;

public static void Run(string input, TraceWriter log)

{

CloudStorageAccount storageAccount = CloudStorageAccount.Parse(ConfigurationManager.AppSettings["AzureWebJobsStorage"]);

var queueClient = storageAccount.CreateCloudQueueClient();

var queue = queueClient.GetQueueReference("binfiles-poison");

var outqueue = queueClient.GetQueueReference("binfiles");

CloudQueueMessage msg = null;

int i = 0;

do

{

msg = queue.GetMessage();

if (msg != null)

{

log.Info($"Processing queue item {i++}");

outqueue.AddMessage(new CloudQueueMessage(msg.AsString));

queue.DeleteMessage(msg);

}

} while (msg != null);

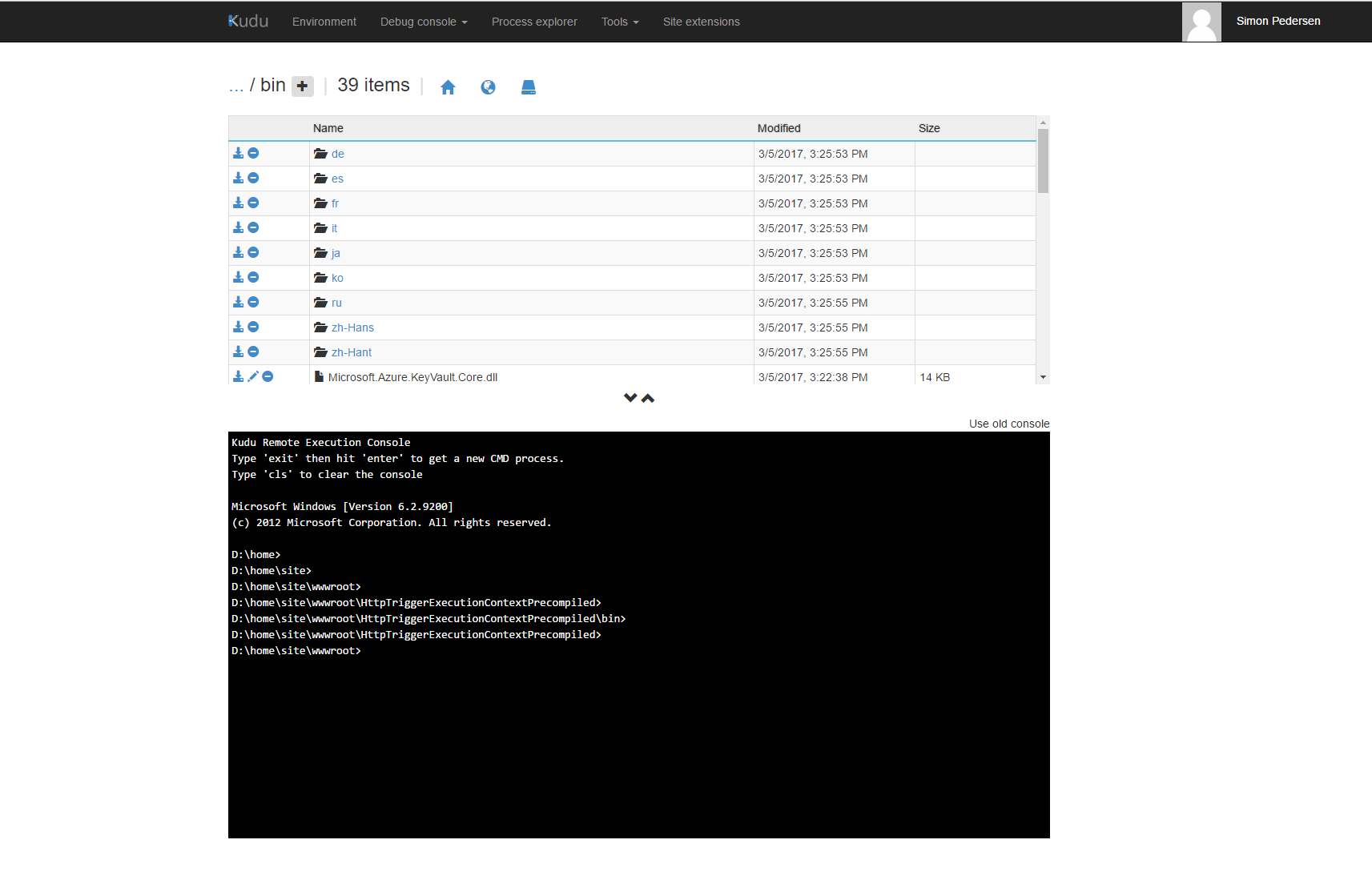

}The KUDU portal

https://[function-app-name].scm.azurewebsites.net

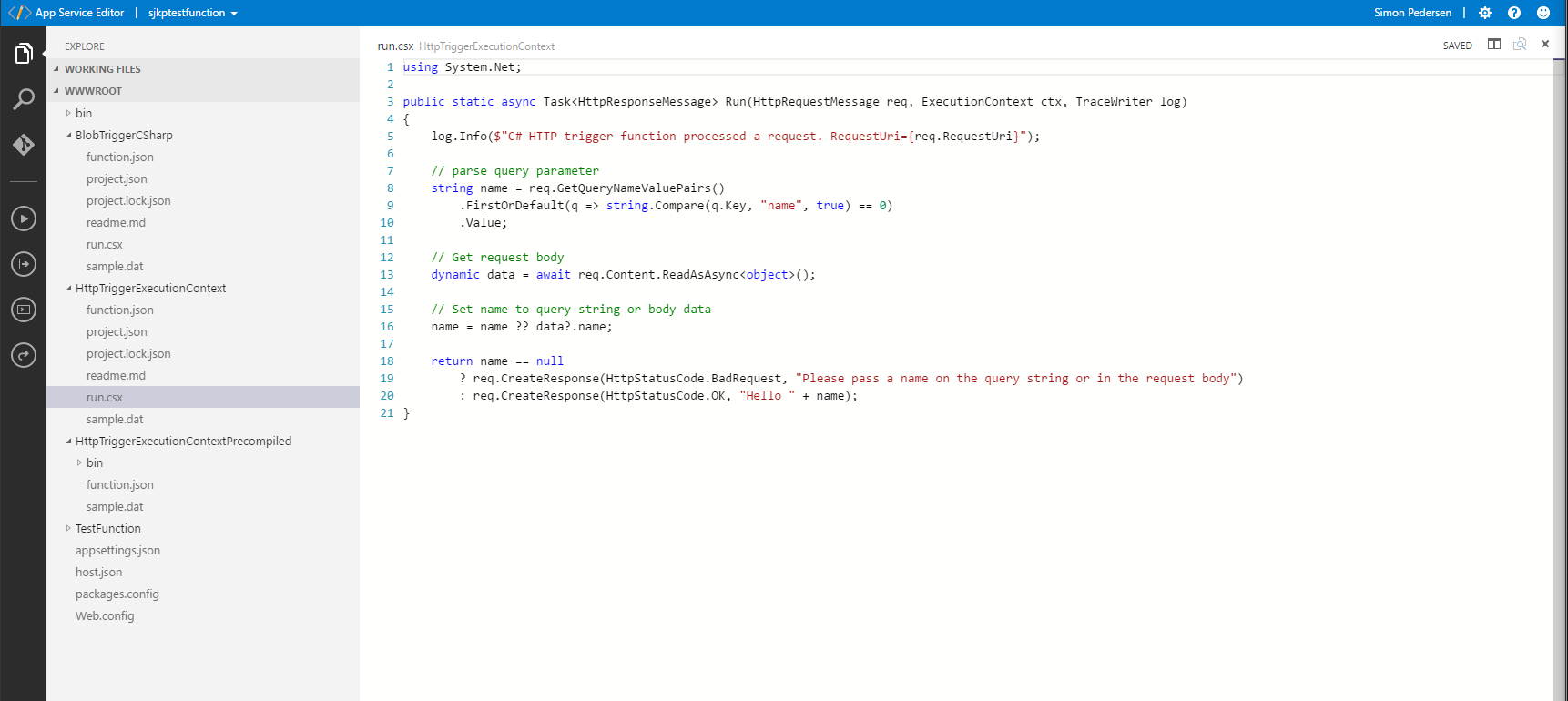

App Service Editor aka Monaco

Execution Context

- Get the unique ID of the current function invocation

```csharp
using System.Net;

public static HttpResponseMessage Run(HttpRequestMessage req, ExecutionContext ctx, TraceWriter log)
{
    // InvocationId uniquely identifies this execution of the function
    log.Info($"C# HTTP trigger invocationId: {ctx.InvocationId}");
    return req.CreateResponse(HttpStatusCode.OK, ctx.InvocationId.ToString());
}
```

Application Insights

- No first-class support for Application Insights (yet)

- Standard .NET diagnostics logging can be used (same as for web apps)

- TraceWriter for debugging

- The Monitoring tab shows only the last 20 executions

- To use Application Insights, treat the function like a console app:

  - Configure Application Insights in code

  - Make sure to flush the telemetry to Application Insights on termination

Where to get help

Thank you for your time!


By Simon J.K. Pedersen
