Journal Club

Stephen M

4/21/21

The Hope

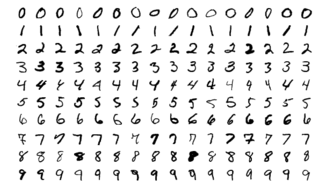

Demonstrate the feasibility of decoding human thought by translating brain activity into an image

The Plan

- Train an end-to-end mental image generation framework

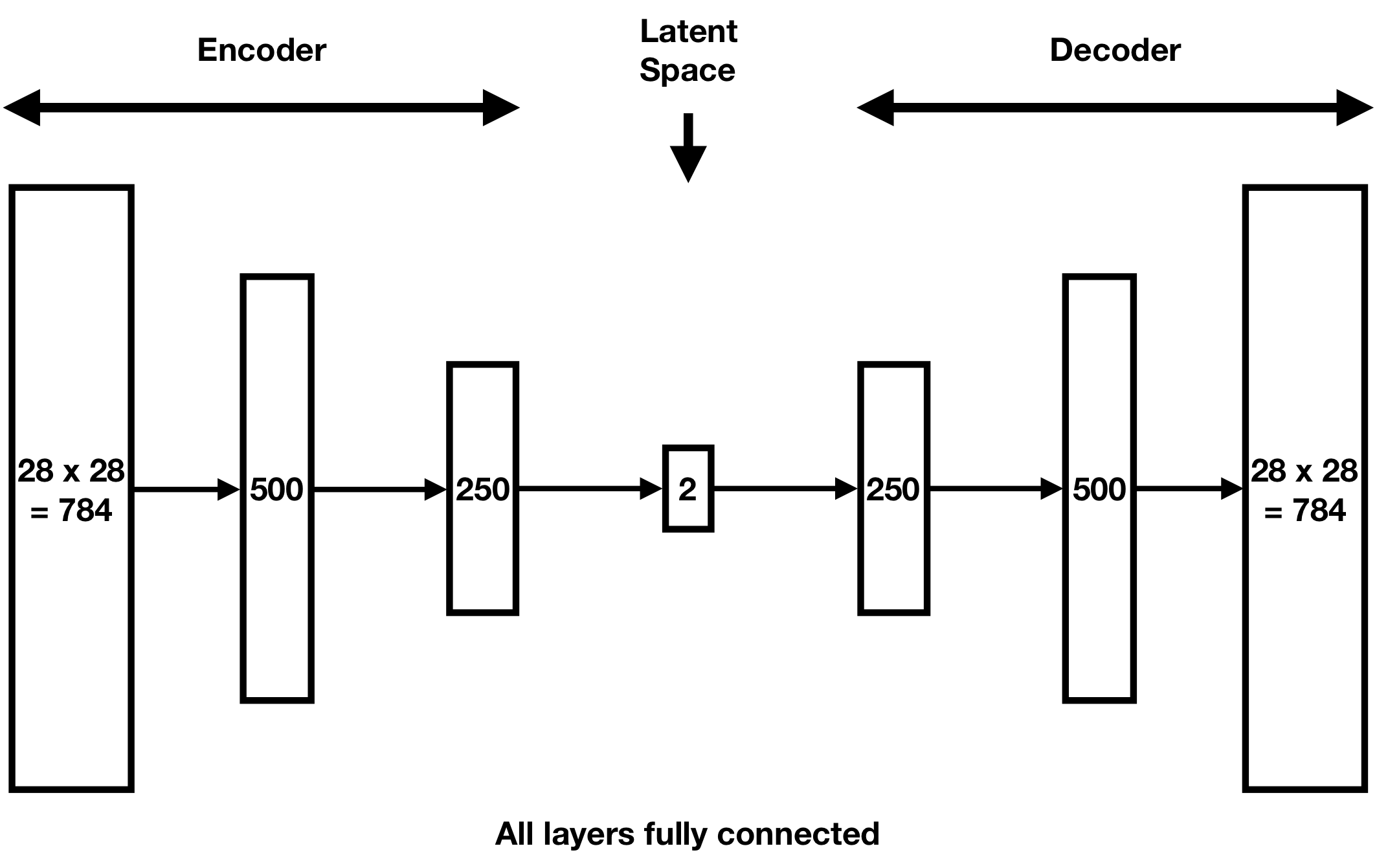

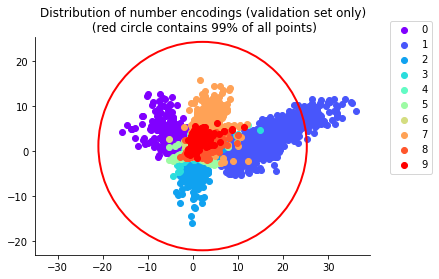

- General architecture is to use a VAE to map brain signals and images to a latent space, and then "align" these latent spaces

The Data

- Two sessions: image presentation session and a mental imagery session

Encoder Overview

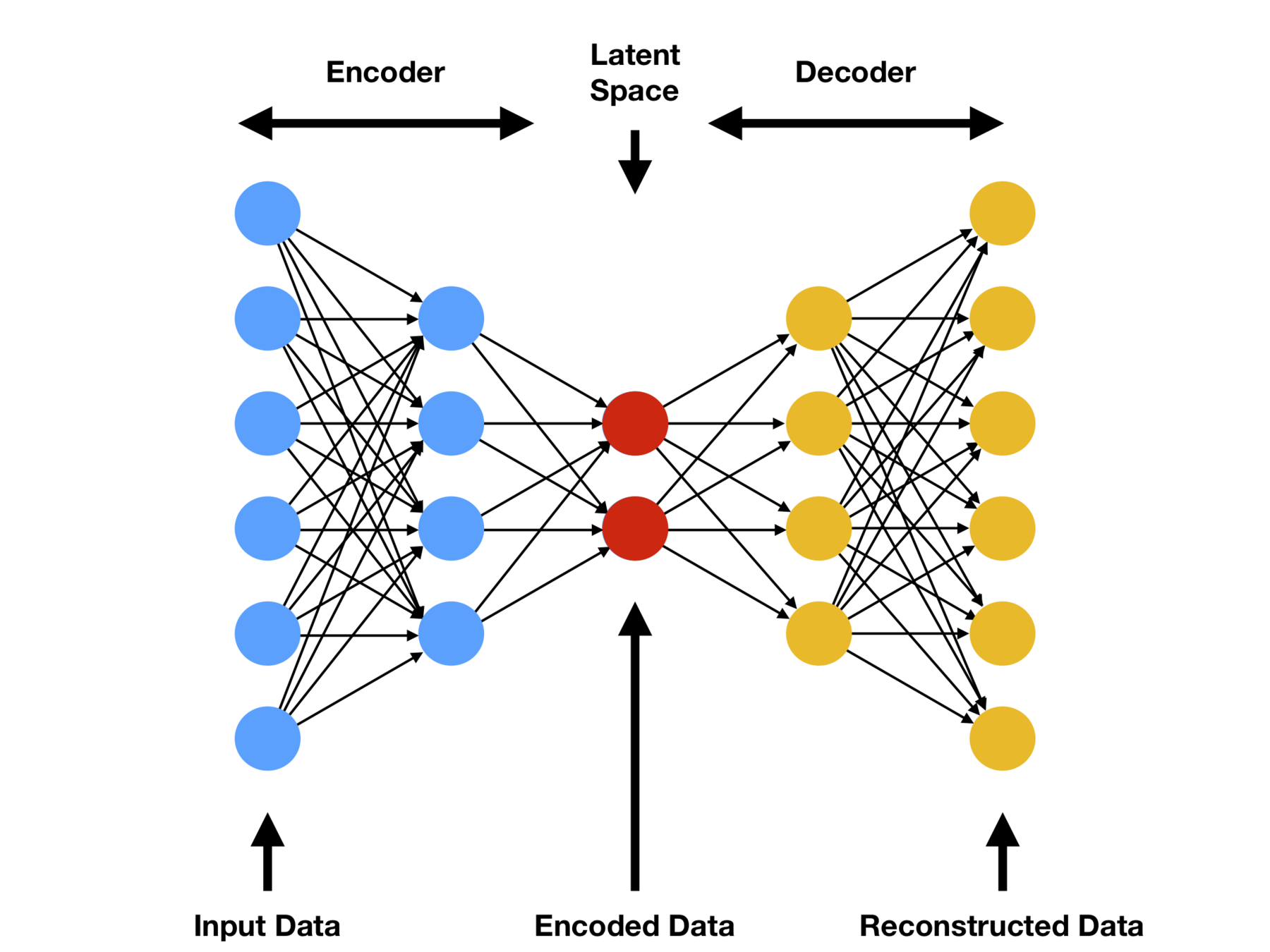

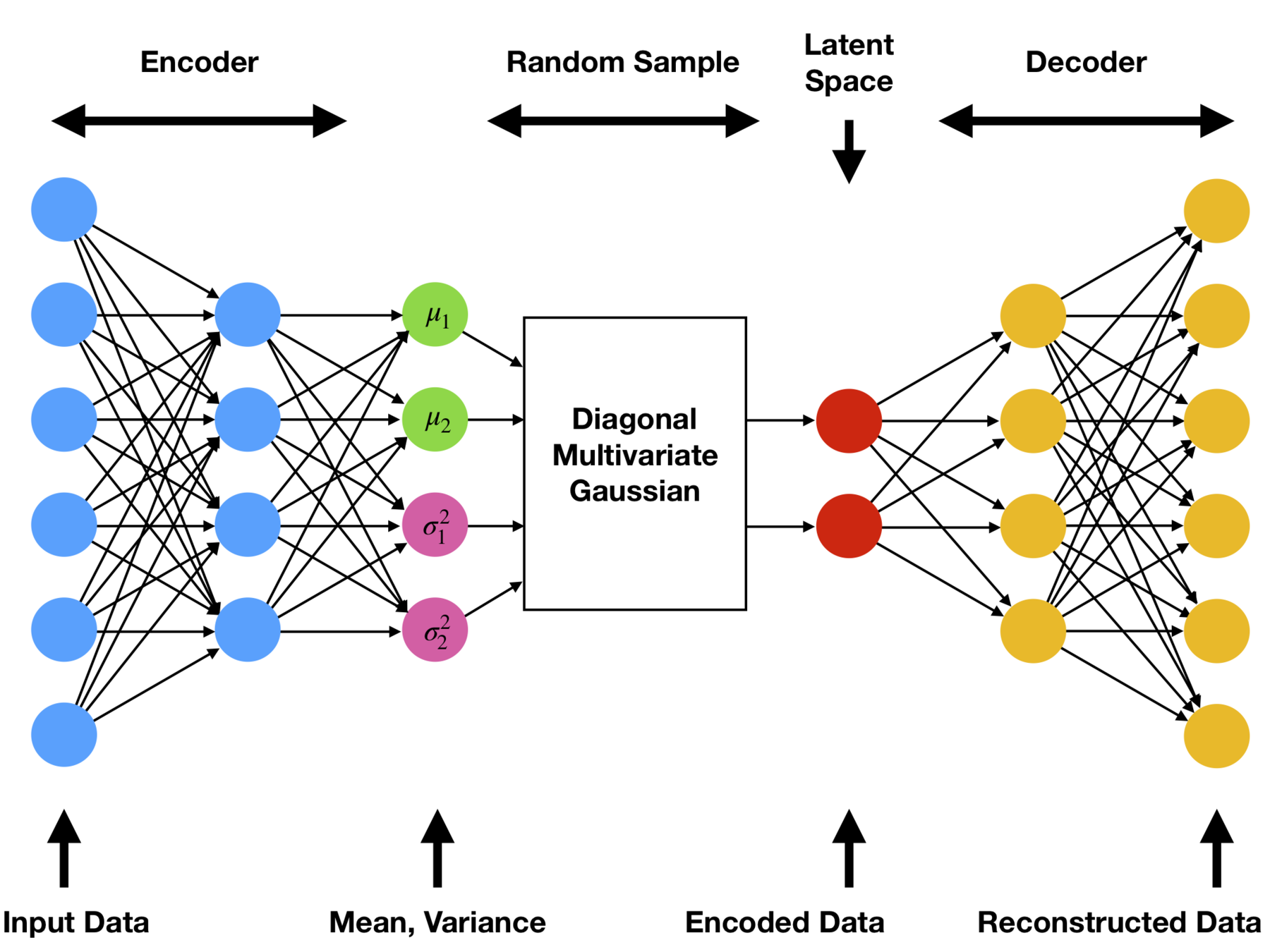

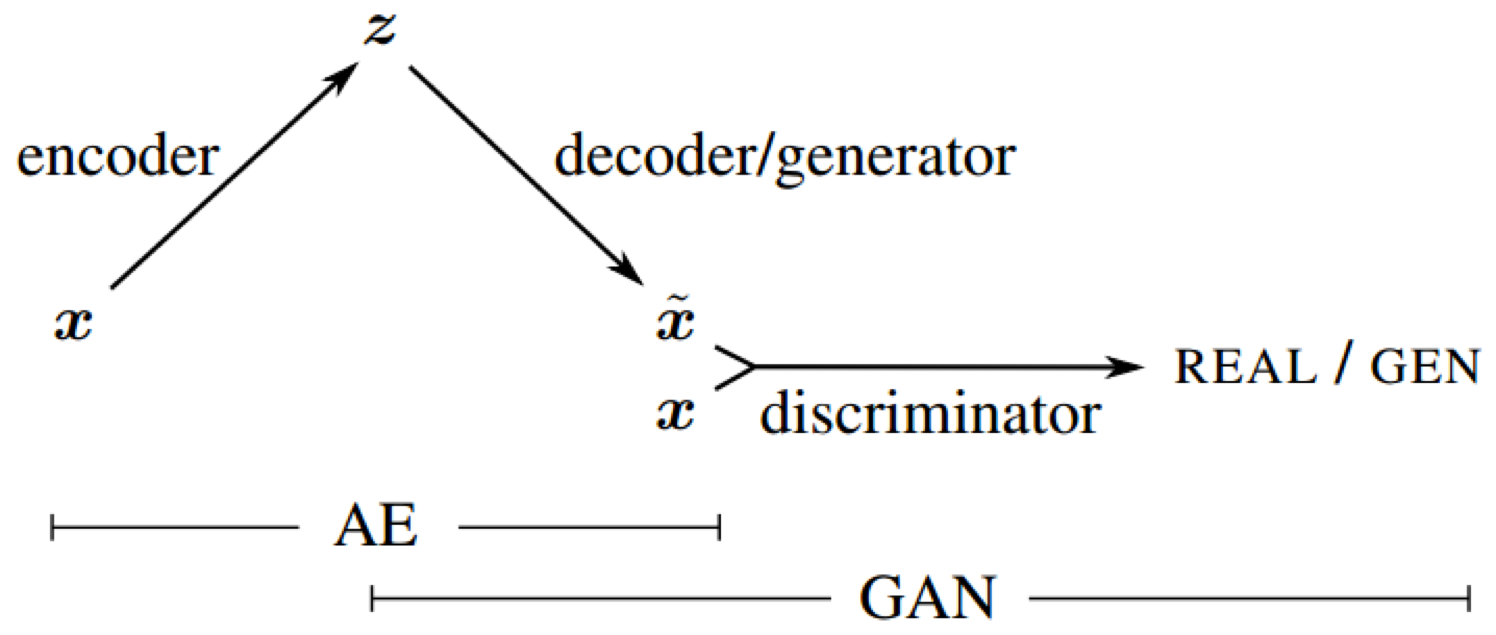

Auto Encoder

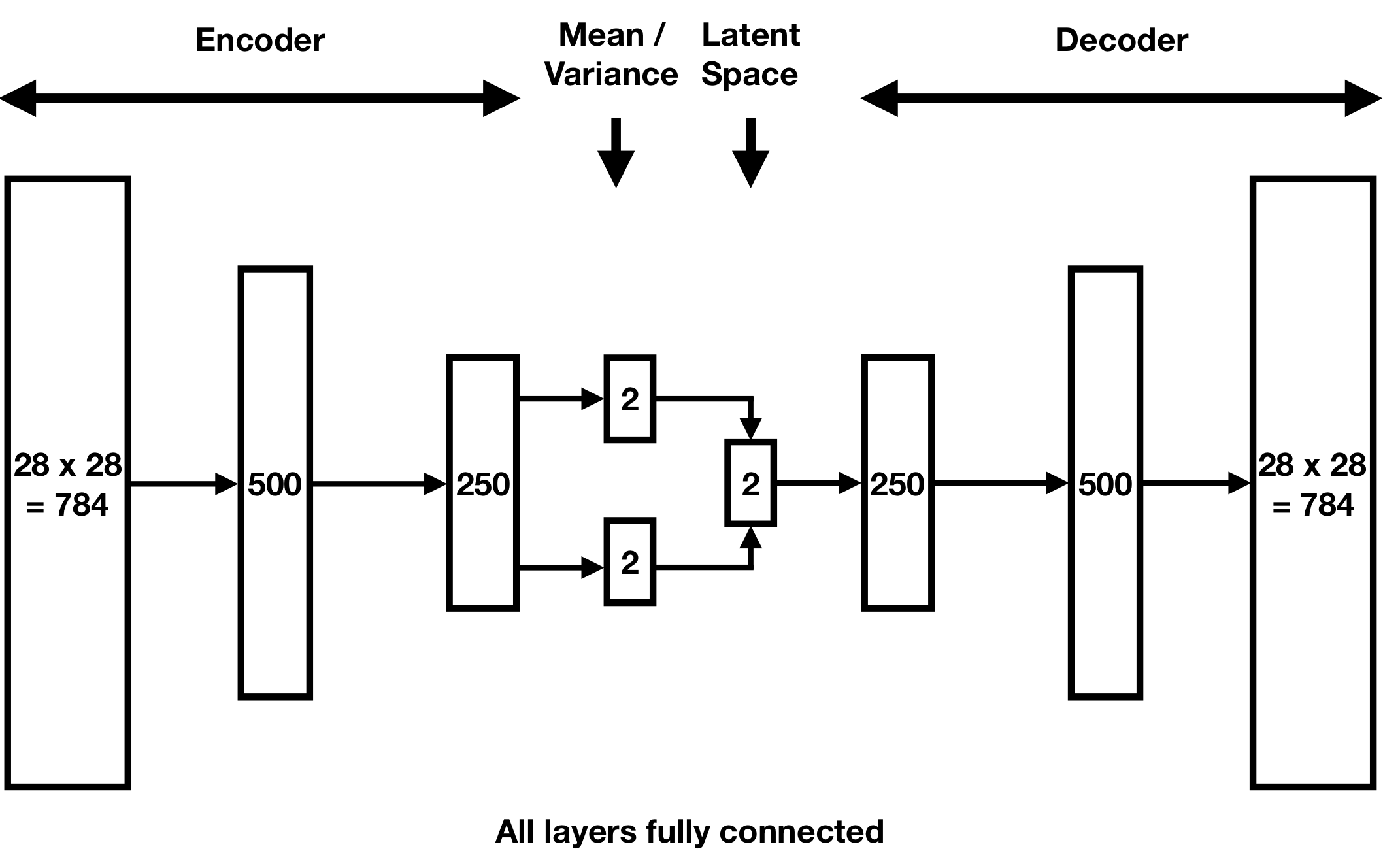

Variational AE

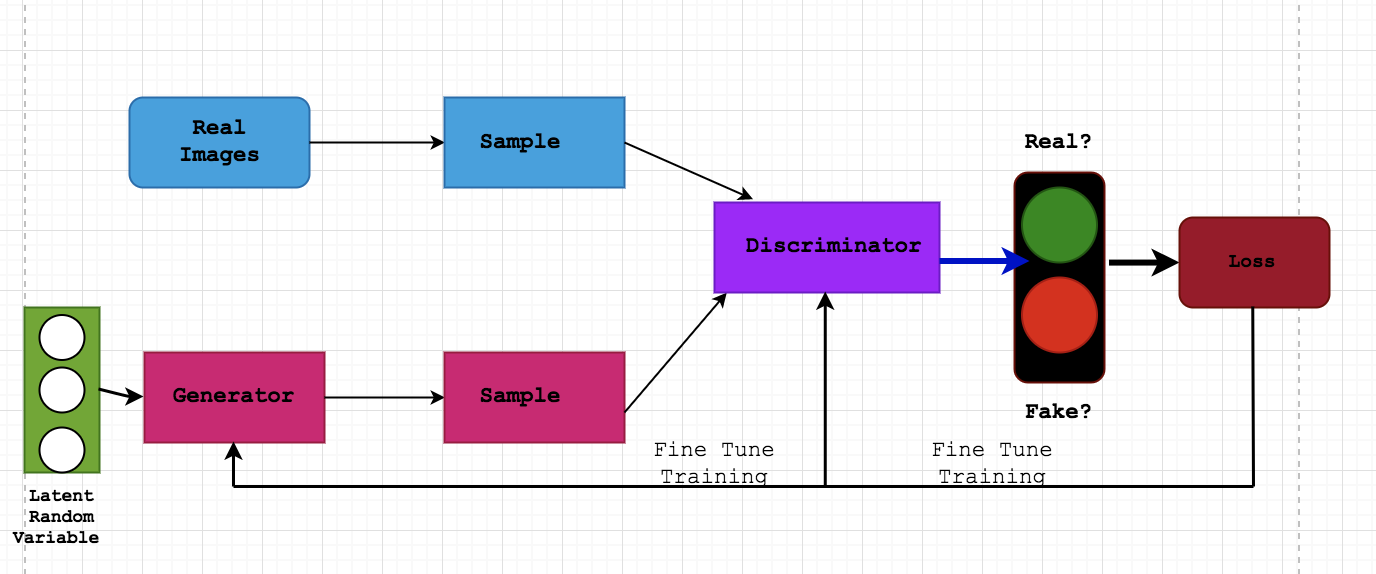

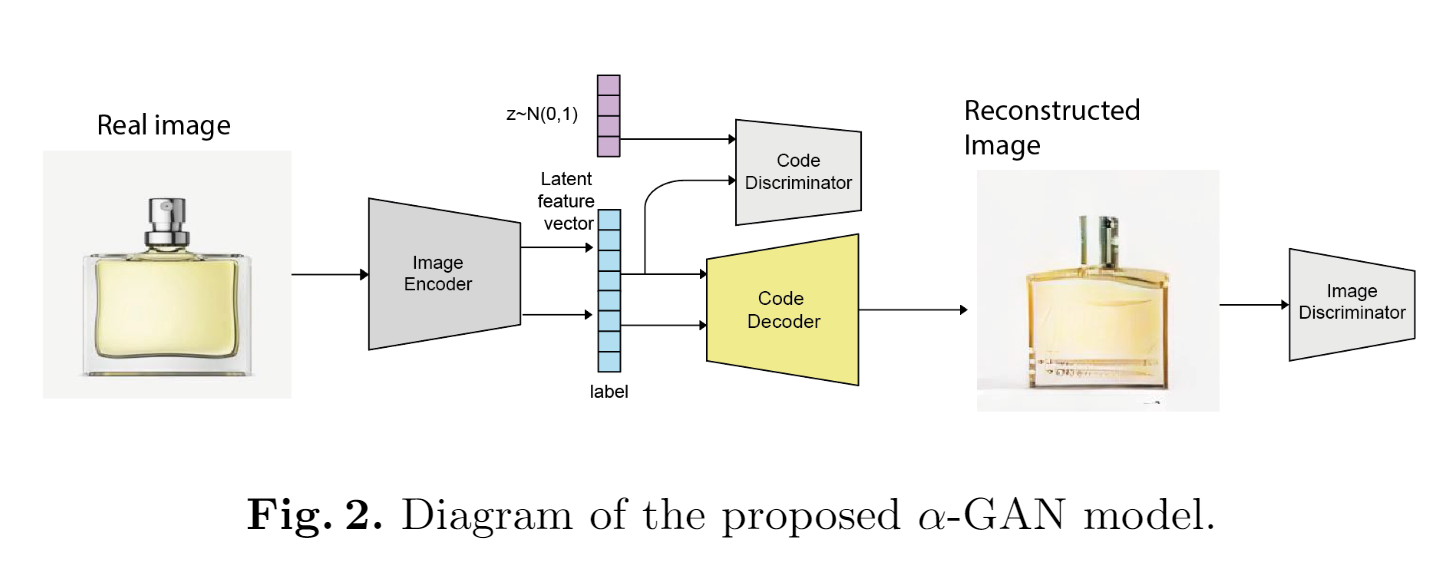
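For reference, the usual textbook form of the VAE objective (standard notation, not necessarily the notation used in the paper) maximizes the evidence lower bound, with the encoder \(q_\phi(z \mid x)\), decoder \(p_\theta(x \mid z)\), and prior \(p(z)\):

\[
\mathcal{L}(\theta, \phi; x) = \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] - D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big)
\]

The first term rewards good reconstructions; the second keeps the encoded distribution close to the prior. Sampling is typically done with the reparameterization \(z = \mu_\phi(x) + \sigma_\phi(x) \odot \epsilon\), \(\epsilon \sim \mathcal{N}(0, I)\), so gradients can flow through the encoder.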

\(\alpha\)-GAN

- Combines VAE with GAN

Combining The Approaches

Framework

EEG Encoder

fMRI Encoder

Image Reconstructor

Overall, a pretty simple idea. I don't think the architecture is too convoluted

Now that we have a "latent space" that is created from the image features, we can look at the other components

Encoders

- Literature on EEG signal extraction

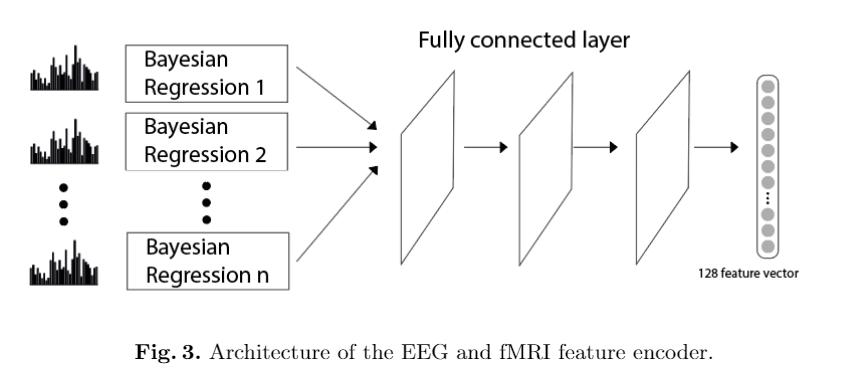

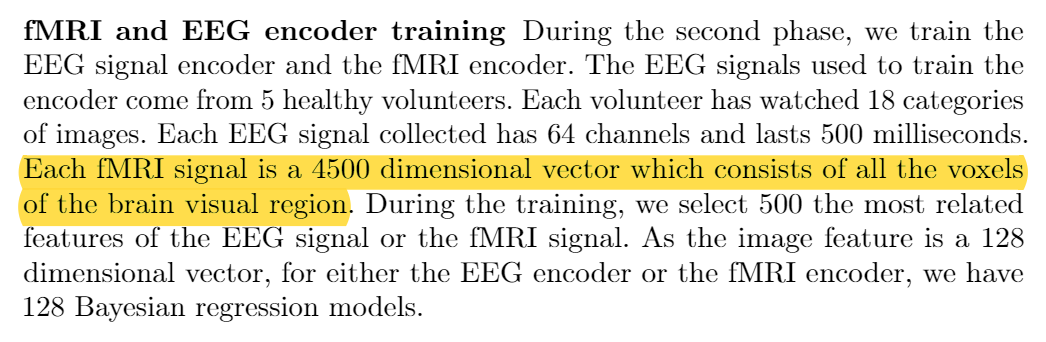

Unlike the EEG signal which can be directly used to train the learning model [...]

Encoders use the same architectures

Encoders

We firstly employ Pearson Correlation Coefficient to select k most related features of the EEG signal or the fMRI signal according to each dimension of the m dimension image feature representation vector

Then, we construct m parallel Bayesian regression sub-models. Each Bayesian regression predicts the value of one corresponding dimension of the image feature representation vector based on k most related features of the EEG signal or the fMRI signal
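A minimal sketch of how I read the two steps quoted above, not the authors' implementation. The function names, the fixed prior precision `alpha`, the fixed noise precision `beta`, and the absence of an intercept are all my assumptions; the paper does not specify which Bayesian regression variant is used.

```python
import numpy as np


def select_top_k(X, y, k):
    """Indices of the k columns of X with the largest |Pearson r| against y."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    denom = np.sqrt((Xc ** 2).sum(axis=0) * (yc ** 2).sum())
    # Constant columns get a correlation of 0 instead of a division by zero.
    r = (Xc.T @ yc) / np.where(denom == 0, np.inf, denom)
    return np.argsort(-np.abs(r))[:k]


def fit_bayes_linear(X, y, alpha=1.0, beta=25.0):
    """Posterior mean of the weights for Bayesian linear regression.

    Assumes a prior w ~ N(0, I / alpha) and Gaussian noise with fixed
    precision beta (hypothetical values), and no intercept term.
    """
    A = alpha * np.eye(X.shape[1]) + beta * X.T @ X
    return beta * np.linalg.solve(A, X.T @ y)


def fit_submodels(signal, latent, k):
    """Fit one sub-model per latent dimension (m sub-models in total)."""
    models = []
    for j in range(latent.shape[1]):
        idx = select_top_k(signal, latent[:, j], k)
        w = fit_bayes_linear(signal[:, idx], latent[:, j])
        models.append((idx, w))
    return models


def predict(models, signal):
    """Predict the full m-dimensional latent vector for each row of signal."""
    return np.column_stack([signal[:, idx] @ w for idx, w in models])
```

Here `signal` would be an (n_trials, n_features) array of EEG or fMRI features and `latent` an (n_trials, m) array of image feature vectors. Each latent dimension gets its own feature subset, which is why the sub-models can be fit in parallel.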

Data

- fMRI - EEG

- 5 subjects. Pretty low-resolution imaging

- Visual Stimuli

- 20 categories with 50 exemplars per category

- Data collection

- 5 sessions for image experiment

Training

- \( \alpha\)-GAN architecture is given in text

- Training doesn't make sense to me

- What atlas do they use?

- Bad assumptions

- Bad model

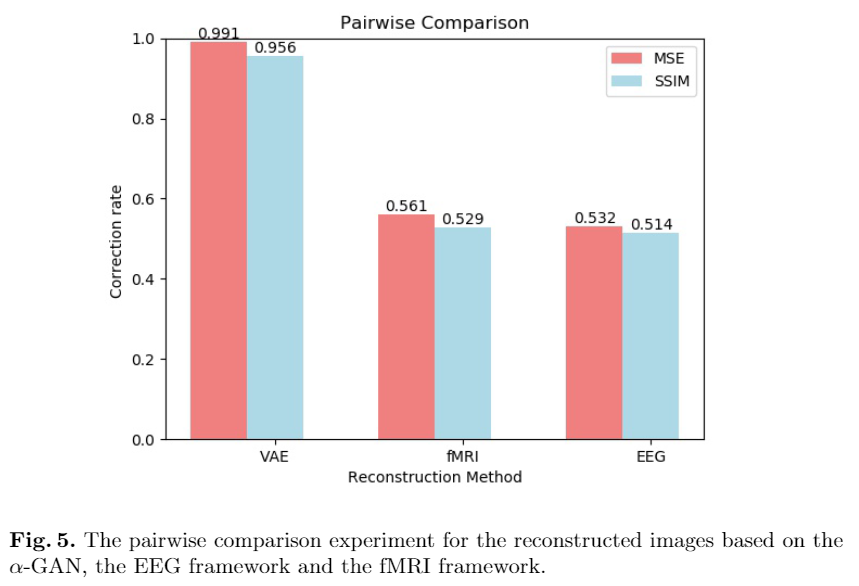

- Assess model using SSIM and MSE
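To make the two metrics concrete, here is a small sketch. The MSE is standard. The SSIM here is a simplified single-window ("global") version computed over the whole image, whereas the usual SSIM averages the same formula over local windows; I don't know which variant or settings the paper uses, so `global_ssim` and `data_range` are my own assumptions.

```python
import numpy as np


def mse(a, b):
    """Mean squared error between two images of the same shape."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    return float(np.mean((a - b) ** 2))


def global_ssim(a, b, data_range=1.0):
    """SSIM formula evaluated once over the whole image (no sliding window).

    data_range is the span of valid pixel values (1.0 for images in [0, 1]).
    """
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    c1 = (0.01 * data_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilizes the contrast/structure term
    mu_a, mu_b = a.mean(), b.mean()
    var_a, var_b = a.var(), b.var()
    cov = ((a - mu_a) * (b - mu_b)).mean()
    num = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    den = (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    return float(num / den)
```

Lower MSE is better, while SSIM is at most 1 and reaches 1 only for identical images. The two can disagree: a blurry reconstruction can have a decent MSE but poor structural similarity.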

Results

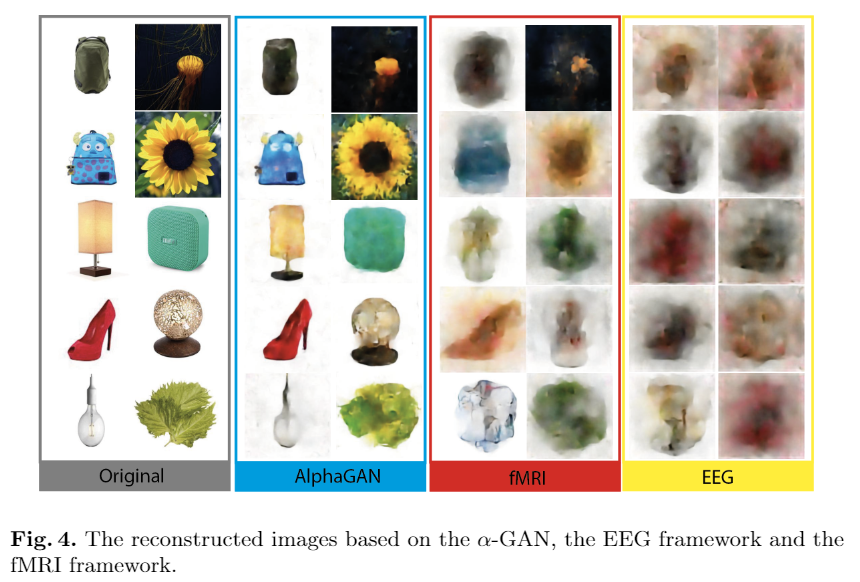

Fig 4

Summary and Discussion

- The paper mentions splitting the data into training and test sets, but is there no validation set?

- What about the mental imagery dataset? The paper never discusses it

Thoughts

- I would NOT accept this paper

- Methods needs more detail

- Really coarse approach

I think that there is a better way:

HCP (along with Haxby) has publicly available datasets of people watching movies

VAE-GAN Brain Decoder Paper

By smazurchuk

This is a slide deck I made for a journal club presentation. It presents an arXiv preprint that tries to "decode human imagination".