Stephen Merity, Bryan McCann, Richard Socher

Motivation: Better baselines

Fast and open baselines are the

building blocks of future work

... yet rarely get any continued love.

Given the speed of progress within our field, and the many places progress arises, revisiting these baselines is important

Baseline numbers are frequently copy + pasted from other papers, rarely being re-run or improved

Revisiting RNN regularization

Standard activation regularization is easy,

even part of the Keras API ("activity_regularizer")

Activation regularization has nearly no impact on train time and is compatible with fast black box RNN implementations (i.e. NVIDIA cuDNN)

... yet they haven't been used recently?

Activation Regularization (AR)

Encourage small activations, penalizing any activations far from zero

For RNNs, simply add an additional loss,

where m is dropout mask and α is a scaler.

We found it's more effective when applied to the dropped output of the final RNN layer

\alpha L_2(m \cdot h_t)

αL2(m⋅ht)

Temporal Activation Regularization (TAR)

Loss penalizes changes between hidden states,

where h is the RNN output and β is a scaler.

Our work only penalizes the hidden output h of the RNN, leaving internal memory c unchanged.

\beta L_2(h_t - h_{t-1})

βL2(ht−ht−1)

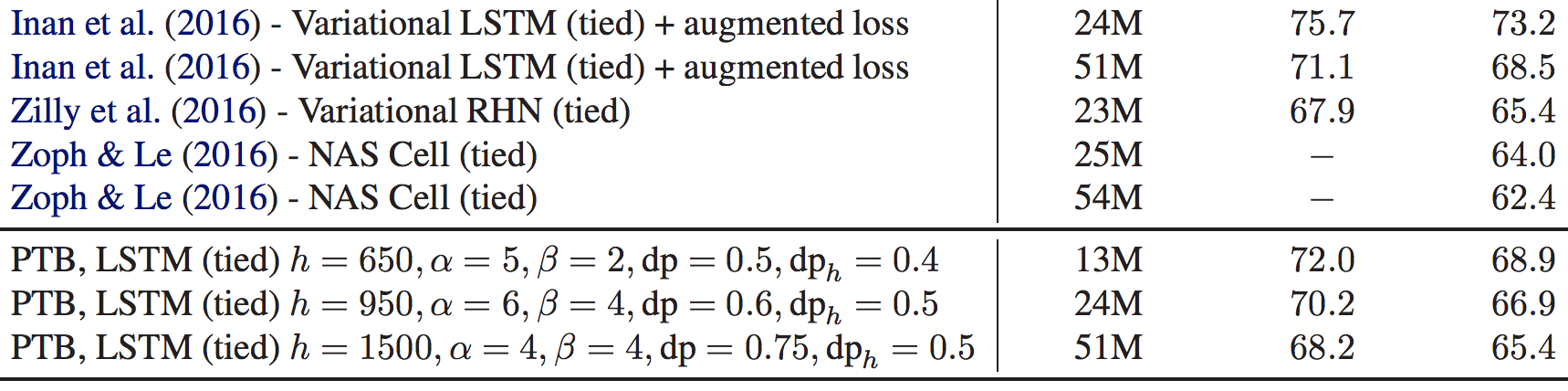

Penn Treebank Results

Note: these LSTMs have no recurrent regularization,

all other results here used variational recurrent dropout

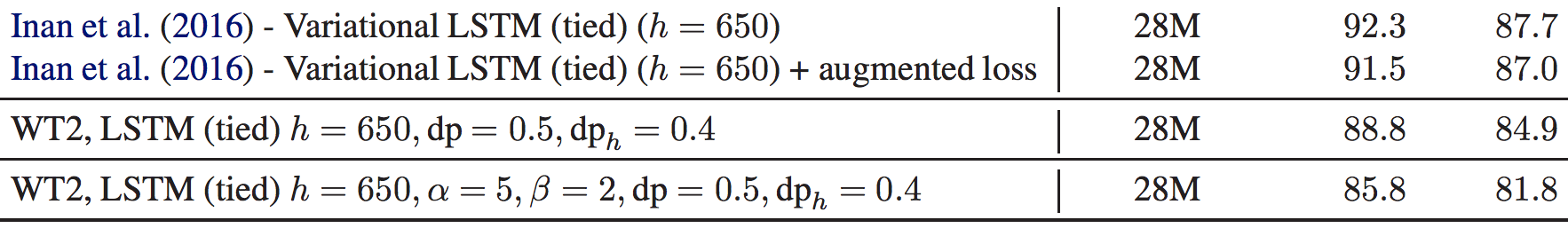

WikiText-2 Results

Note: these LSTMs have no recurrent regularization,

all other results here used variational recurrent dropout

Related work

Independently LSTM LM baselines are revisited in Melis et al. (2017) and Merity et al. (2017)

Both, with negligible modifications to the LSTM, achieve strong gains over far more complex models on both Penn Treebank and WikiText-2

Melis et al. 2017 also investigate "hyper-parameter noise" / experimental setup of recent SotA models

Summary

Baselines should not be set in stone

As we improve the underlying fundamentals of our field, we should revisit them and improve them

Fast, open, and easy to extend baselines should be first class citizens for our field

We'll be releasing our PyTorch codebase for this and follow-up SotA (PTB/WT-2) numbers soon

Contact me online at @smerity / smerity.com

Check out

Revisiting Activation Regularization for Language RNNs

and our follow up work

Regularizing and Optimizing LSTM Language Models

Revisiting Activation Regularization for Language RNNs

By smerity

Revisiting Activation Regularization for Language RNNs

- 2,767