Neural QA

Suriyadeepan Ramamoorthy

Who Am I?

- AI Research Engineer, SAAMA Tech.

- I work in NLU

- I am a Free Software Evangelist

- I'm interested in

- AGI

- Community Networks

- Data Visualization

- Generative Art

- Creative Coding

- And I have a blog

Natural Language Understanding

- Disassembling and Parsing natural language text

- Complexity of understanding

- AI-complete / AI-hard: a problem whose difficulty is equivalent to that of solving the central AI problem

Natural Language Understanding

- Natural Language Inference

- Semantic Parsing

- Relation Extraction

- Sentiment Analysis

- Summarization

- Dialog Systems

- Question Answering

Question Answering

- Question Answering as a test for NLU

- Natural Language Model

- Understanding Context

- Reasoning

What has changed?

- Availability of labelled data

- (document, question, answer)

- 100,000+ QA pairs on 500+ Wikipedia articles

- Neural-network-based models

- Representation Power

- Specialized Networks

Problem Definition

Given \((d, q)\), find \(a \in A\)

- \(d\): document

- \(q\): query

- \(a\): answer

- \(A\): answer vocabulary

- Global Vocabulary

Standard Tasks

Cloze Test

- Context: a document or passage

- Select a sentence

- Pose it as a query by removing a word from it
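The three steps above can be sketched in a few lines. This is a minimal illustration, assuming naive sentence splitting on `.`, `!` or `?` followed by whitespace; `make_cloze` is a hypothetical helper, not part of any dataset's tooling. It blanks the first whole-word occurrence of the answer so that, for example, removing "in" does not touch "Spinoza".

```python
import re

def make_cloze(passage, sentence_idx, answer, blank="_______"):
    """Turn one sentence of `passage` into a cloze query (hypothetical helper).

    Sentences are split naively on ., ! or ? followed by whitespace, and the
    first whole-word occurrence of `answer` in the chosen sentence is blanked.
    """
    sentences = re.split(r"(?<=[.!?])\s+", passage.strip())
    pattern = r"\b" + re.escape(answer) + r"\b"
    query, n = re.subn(pattern, lambda _: blank, sentences[sentence_idx], count=1)
    if n == 0:
        raise ValueError("answer does not occur in the selected sentence")
    # Context: the passage with the selected sentence replaced by the query
    context = " ".join(sentences[:sentence_idx] + [query] + sentences[sentence_idx + 1:])
    return context, query, answer
```

Choosing a named entity or a preposition as `answer` yields the two kinds of query shown in the examples that follow.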

Cloze Test

"Spinoza argued that God exists and is abstract and impersonal. Spinoza's view of God is what Charles Hartshorne describes as Classical Pantheism. Spinoza has also been described as an "Epicurean materialist," specifically in reference to his opposition to Cartesian mind-body dualism. _______ , however, deviated significantly from Epicureans by adhering to strict determinism .... "

Spinoza

Cloze Test

- Complexity of QA depends on the nature of the word removed

- Answer Type

- Named Entity

- Common Noun

- Preposition

- Verb

Cloze Test

"Spinoza argued that God exists and is abstract and impersonal. Spinoza's view of God is what Charles Hartshorne describes as Classical Pantheism. Spinoza has also been described as an "Epicurean materialist," specifically __ reference to his opposition to Cartesian mind-body dualism. Spinoza, however, deviated significantly from Epicureans by adhering to strict determinism .... "

in

Children's Book Test

designed to measure directly how well language models can exploit wider linguistic context

- Cloze-style query generation mechanism

- Applied to Children's books from Project Gutenberg

- 669,343 questions from 77 passages

- (context, query, candidates, answer)
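A CBT instance uses 21 consecutive sentences: the first 20 form the context, and the 21st becomes the query with one word replaced by `XXXXX`. The answer is hidden among 10 candidates of the same word type drawn from the text. The sketch below assumes tokenized sentences and a ready-made `candidate_pool` (for example, named entities found in the context); both inputs and the function name are hypothetical.

```python
import random

def make_cbt_example(sentences, answer, candidate_pool, n_candidates=10, seed=0):
    """Sketch of a CBT-style (context, query, candidates, answer) instance.

    `sentences`: 21 tokenized sentences; `candidate_pool`: words of the same
    type as `answer` taken from the text (hypothetical inputs).
    """
    context, last = sentences[:20], sentences[20]
    query = ["XXXXX" if tok == answer else tok for tok in last]
    distractors = sorted(set(candidate_pool) - {answer})
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    picked = rng.sample(distractors, min(n_candidates - 1, len(distractors)))
    candidates = sorted(picked + [answer])
    return context, query, candidates, answer
```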

Children's Book Test

(1) So they had to fall a long way . (2) So they got their tails fast in their mouths . (3) So they could n't get them out again . (4) That 's all . (5) `` Thank you , " said Alice , `` it 's very interesting . (6) I never knew so much about a whiting before . " (7) `` I can tell you more than that , if you like , " said the Gryphon . (8) `` Do you know why it 's called a whiting ? " (9) `` I never thought about it , " said Alice . (10) `` Why ? " (11) `` IT DOES THE BOOTS AND SHOES . ' (12) the Gryphon replied very solemnly . (13) Alice was thoroughly puzzled . (14) `` Does the boots and shoes ! " (15) she repeated in a wondering tone . (16) `` Why , what are YOUR shoes done with ? " (17) said the Gryphon . (18) `` I mean , what makes them so shiny ? " (19) Alice looked down at them , and considered a little before she gave her answer . (20) `` They 're done with blacking , I believe . "

(21) `` Boots and shoes under the sea , " the XXXXX went on in a deep voice , `` are done with a whiting ".

gryphon

CNN/Daily Mail

- (2015) DeepMind

- ~1M news stories and summaries

- (context, query, answer) triples

- CNN: 90K documents, 380K queries

- Daily Mail: ~200K documents, 880K queries

- Anonymized named entities
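Anonymization replaces every mention of an entity with an abstract marker. The same marker is used in the document and the query, so a model has to read the context instead of relying on world knowledge about the names. The sketch assumes the entity strings are already known (in practice they come from NER and coreference). It matches multi-word entities greedily, longest first. The original dataset also randomly permutes the markers for each example; that step is left out here.

```python
def anonymize(tokens, entities, entity_ids=None):
    """Replace entity strings with @entityN markers (a minimal sketch).

    `entities` is a hypothetical list of entity strings; pass the returned
    `entity_ids` back in to anonymize the query consistently with its document.
    """
    entity_ids = {} if entity_ids is None else entity_ids
    by_len = sorted(entities, key=lambda e: -len(e.split()))  # longest first
    out, i = [], 0
    while i < len(tokens):
        for ent in by_len:
            words = ent.split()
            if tokens[i:i + len(words)] == words:
                marker = entity_ids.setdefault(ent, "@entity%d" % len(entity_ids))
                out.append(marker)
                i += len(words)
                break
        else:  # no entity starts at position i
            out.append(tokens[i])
            i += 1
    return out, entity_ids
```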

CNN/Daily Mail

"(@entity0) don't listen to the haters , @entity2 : you made your famous mother proud . the "@entity6" actor received mixed reviews for her appearance this weekend on " @entity9. "despite a few crowd - pleasing moments , a controversial sketch starring @entity2 as an @entity12 recruit dominated conversation of the episode . amid the furor , @entity2 's mother , @entity17 , chimed in sunday with a totally unbiased view . " she killed it ! ! ! wow ! i loved her poise , her comic timing , her grace , loved everything she did ! ! " @entity26 said on @entity27 .

but some reacted negatively to @placeholder 's @entity12 - related sketch

@entity2

@entity17: Melanie Griffith, @entity2: Johnson, @entity0: CNN, @entity26: Griffith, @entity6: Fifty Shades, @entity9: Saturday Night Live, @entity12: ISIS, @entity27: Twitter

Stanford QA Dataset (SQuAD)

- ~100K QA pairs from 536 Wikipedia articles

- High Quality Questions

- Human-annotated data

- Requires complex reasoning to solve

While asking questions, avoid using the same words/phrases as in the paragraph. Also, you are encouraged to pose hard questions.

Stanford QA Dataset (SQuAD)

In meteorology, precipitation is any product of the condensation of atmospheric water vapor that falls under gravity. The main forms of precipitation include drizzle, rain, sleet, snow, graupel and hail... Precipitation forms as smaller droplets coalesce via collision with other rain drops or ice crystals within a cloud. Short, intense periods of rain in scattered locations are called “showers”.

- What causes precipitation to fall?

- What is another main form of precipitation besides drizzle, rain, snow, sleet and hail?

- Where do water droplets collide with ice crystals to form precipitation?
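Each SQuAD answer is a span of the passage, and the dataset stores it as answer text plus a character offset (`answer_start`). The answers to the three questions above are "gravity", "graupel" and "within a cloud". The sketch recovers offsets with a first-occurrence search. This is only an approximation: SQuAD records the offset the annotator actually selected, which can differ when the text appears more than once.

```python
def char_span(context, answer_text):
    """Return (start, end) character offsets of the first occurrence of
    `answer_text` in `context`; `end` is exclusive."""
    start = context.find(answer_text)
    if start < 0:
        raise ValueError("answer is not a span of the context")
    return start, start + len(answer_text)
```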

bAbI

- Synthetic data

- Simulated Natural Language

- Simple Rule based Generation

- (2015) Facebook Research

- 20 Tasks based on type and complexity of reasoning

- (supporting facts, query, answer)

bAbI

(1) Lily is a swan

(2) Bernhard is a lion

(3) Greg is a swan

(4) Bernhard is white

(5) Brian is a lion

What color is Brian? white
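The example above is a Basic Induction task: Brian is a lion, the only lion with a known colour (Bernhard) is white, so Brian is white. The hand-written rule below makes that inference explicit. It is only meant to show what a model has to learn, and it assumes the fixed sentence templates of this example ("X is a Y", "X is C").

```python
def induce_color(facts, who):
    """Toy rule for the bAbI 'Basic Induction' example: infer an entity's
    colour from another member of the same species."""
    species, colour = {}, {}
    for fact in facts:
        name, _, rest = fact.split(" ", 2)   # "Lily is a swan" -> "Lily", "is", "a swan"
        if rest.startswith("a "):
            species[name] = rest[2:]
        else:
            colour[name] = rest
    if who in colour:
        return colour[who]
    for other, kind in species.items():      # induce from a same-species entity
        if kind == species.get(who) and other in colour:
            return colour[other]
    return None
```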

bAbI

- Size Reasoning

- Agent's Motivations

- Basic Induction

- Compound Coreference

- Two Supporting Facts

- Three Supporting Facts

- Conjunction

- Basic Deduction

- Simple Negation

- Positional Reasoning

- Time Reasoning

- Basic Coreference

- Yes/No Questions

- Three Argument Relations

- Two Argument Relations

- Single Supporting Fact

- Lists/Sets

- Path Finding

- Counting

- Indefinite Knowledge

Visual QA

- Open-ended questions about images

- Requires understanding of vision, language and common-sense knowledge

CLEVR

- Compositional Language and Elementary Visual Reasoning

- Synthetic Images

CLEVR

- Are there an equal number of large things and metal spheres?

- What size is the cylinder that is left of the brown metal thing that is left of the big sphere?

- There is a sphere with the same size as the metal cube; is it made of the same material as the small red sphere?

- How many objects are either small cylinders or red things?

Architectures

End-to-End Memory Networks

- Set of inputs (facts), \(\{x_i\}\)

- Memory vectors, \(\{m_i\}\)

- Output vectors, \(\{c_i\}\)

- Query \(q\) embedded into the internal state \(u\)

- Match, \(p_i = \mathrm{softmax}(u^\top m_i)\)

- Blended representation, \(o = \sum_i p_i c_i\)

- New internal state, \(u_{k+1} = o_k + u_k\)

- Prediction, \(\hat{a} = \mathrm{softmax}(W(o + u))\)
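A NumPy sketch of the multi-hop read described above. It assumes the facts and the query are already embedded into `m`, `c` and `u`, and that the same memory vectors are reused at every hop. That is a simplification; the paper discusses several weight-tying schemes. After the last hop, `u` already equals \(o + u\), so the final softmax is exactly the prediction step.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def memn2n_forward(m, c, u, W, hops=3):
    """m, c: [n_facts, d] memory / output vectors; u: [d] query state;
    W: [n_answers, d] answer projection. Returns (answer probs, last p)."""
    for _ in range(hops):
        p = softmax(m @ u)   # p_i = softmax(u^T m_i)
        o = p @ c            # o = sum_i p_i c_i
        u = o + u            # u_{k+1} = o_k + u_k
    return softmax(W @ u), p  # a_hat = softmax(W(o + u))
```

The returned `p` holds the last hop's attention over facts, which is what gets visualized to find supporting facts.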


End-to-End Memory Networks

- 16/20 bAbI tasks

- Children's Book Test

- 66.6% on named entities

- 63.0% on common nouns

- 69.4% on CNN QA

Attention Sum Reader

- (document, query, candidates, answer)

- Contextual Embedding, \(f_i(d)\)

- Query Embedding, \(g(q)\)

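- Dot product (match), \(s_i \propto \exp(f_i(d) \cdot g(q))\), normalized over all document positions \(i\)

- Pointer Sum Attention

- \(P(w \mid q, d) = \sum_{i \in I(w, d)} s_i\), where \(I(w, d)\) is the set of positions at which \(w\) occurs in \(d\)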
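Pointer sum attention in NumPy. The sketch assumes the contextual embeddings \(f_i(d)\) and the query embedding \(g(q)\) are already computed; in the model they come from bidirectional RNNs. A candidate that appears several times in the document gathers attention from every occurrence.

```python
import numpy as np

def attention_sum(doc_tokens, doc_vecs, query_vec, candidates):
    """doc_vecs: [n_tokens, d] = f_i(d); query_vec: [d] = g(q)."""
    scores = doc_vecs @ query_vec            # f_i(d) . g(q)
    s = np.exp(scores - scores.max())
    s /= s.sum()                             # s_i: softmax over positions
    # P(w | q, d): sum the attention over every position where w occurs
    return {w: float(sum(s[i] for i, tok in enumerate(doc_tokens) if tok == w))
            for w in candidates}
```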


Attention Sum Reader

- Children's Book Test

- 71.0% on named entities

- 68.9% on common nouns

- 75.4% on CNN QA

- 77.7% on Daily Mail

Intuitions

Partial Reduction

- Context contains more information than necessary

- Represent context at the right level of abstraction

- What is the right level of abstraction?

Partial Reduction

How many objects are either small cylinders or metal things?

Multi-hop Reasoning

- Facilitates Partial Reduction

- Context conditioned on the query

Variable Depth Reasoning

- Variable number of hops

- Diversity of questions in SQuAD

- Variation in complexity of reasoning

Reasoning over Multiple Facts

- Mary moved to the bathroom.

- Sandra journeyed to the bedroom.

- Mary got the football there.

- John went back to the bedroom.

- Mary journeyed to the office.

- John travelled to the kitchen.

- Mary journeyed to the bathroom.

Where is the football?

Reasoning over Multiple Facts

- Context as a list of facts

- Object of interest: football

- Associate Mary with football

- Follow the trail of clues relevant to the query

- Arrive at the answer
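The two hops above can be written as a hand-coded rule, which is what a multi-hop model has to discover on its own. First find who got the football, then find that person's latest location. The verb lists are assumptions that cover only the facts in this example.

```python
MOVES = {"moved", "journeyed", "went", "travelled"}   # assumed movement verbs
TAKES = {"got", "picked", "grabbed"}                  # assumed pick-up verbs

def where_is(facts, obj):
    """Rule-based two-hop lookup for the football example (not a neural model)."""
    holder = None
    for fact in facts:                    # hop 1: associate a person with the object
        words = fact.rstrip(".").split()
        if obj in words and words[1] in TAKES:
            holder = words[0]
    location = None
    for fact in facts:                    # hop 2: that person's latest location
        words = fact.rstrip(".").split()
        if words[0] == holder and words[1] in MOVES:
            location = words[-1]
    return location
```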


Episodic Memory

- Memory Slots to represent each fact

- Attention Mechanism to select memories relevant to the query

- By visualizing the attention scores/weights, we can observe the supporting facts

Episodic Memory

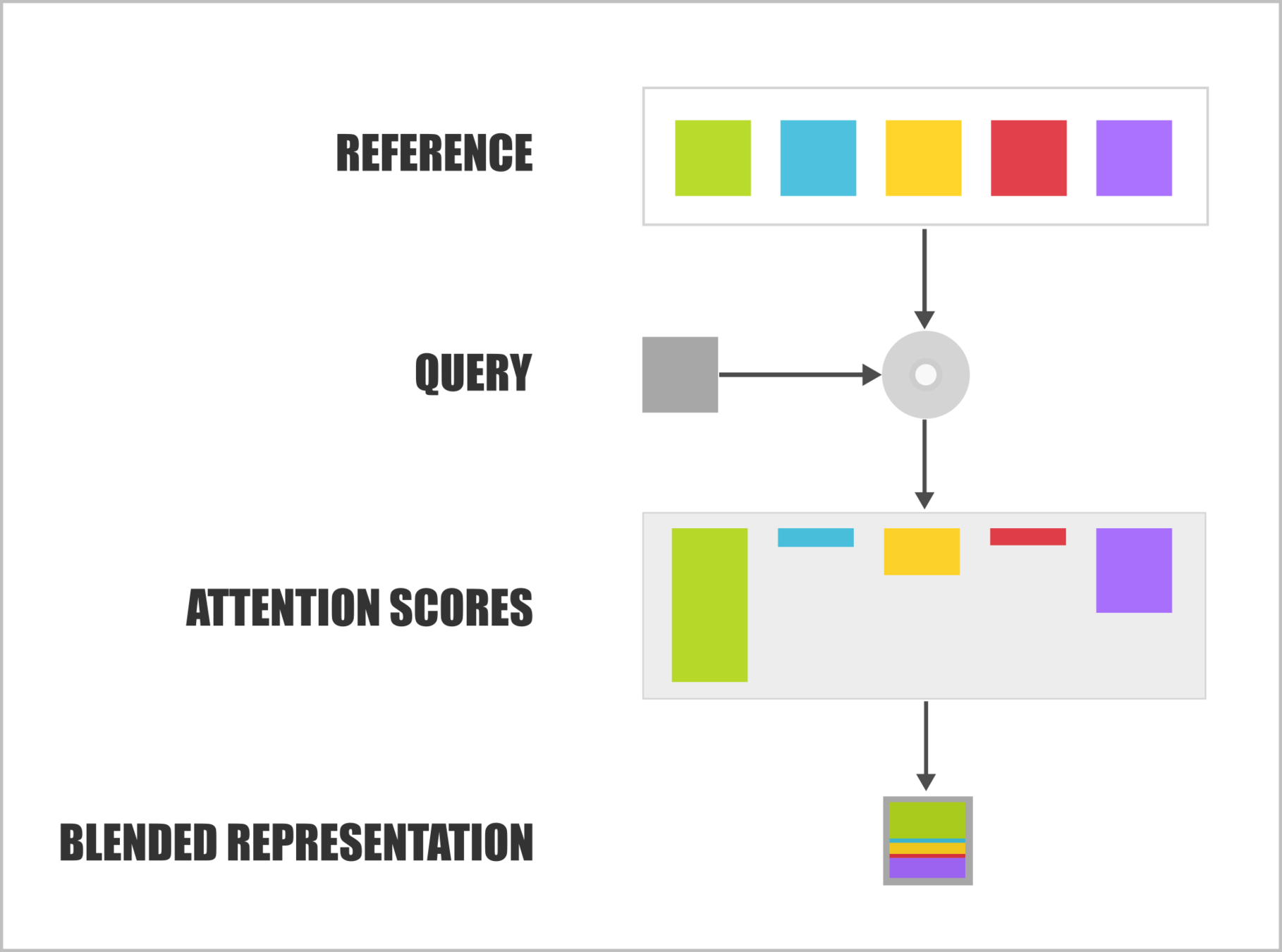

Attention Mechanism

- Reference, an array of units

- Query

- Attention weights

- Signify which parts of the reference are relevant to the query

- Which parts of the context are relevant to the query?

- Weighted or Blended Representation

Attention Mechanism

- Multiplicative attention

- \(a_{ij} = h_i^\top W_a s_j\)

- \(a_{ij} = h_i^\top s_j\) (dot product)

- Additive attention

- \(a_{ij} = v_a^\top \tanh(W_1 h_i + W_2 s_j)\)

- \(a_{ij} = v_a^\top \tanh(W_a [h_i ; s_j])\)

- Blended Representation

- \(\alpha_{ij} = \mathrm{softmax}_j(a_{ij})\)

- \(c_i = \sum_j \alpha_{ij} s_j\)
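The scoring functions and the blending step side by side in NumPy. `H` holds the query-side vectors \(h_i\) and `S` holds the reference vectors \(s_j\), one per row. The weight names mirror the formulas; their shapes are assumptions.

```python
import numpy as np

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def dot_scores(H, S):                  # a_ij = h_i^T s_j
    return H @ S.T

def general_scores(H, S, W_a):         # a_ij = h_i^T W_a s_j
    return H @ W_a @ S.T

def additive_scores(H, S, W1, W2, v):  # a_ij = v^T tanh(W1 h_i + W2 s_j)
    # [n, 1, k] + [1, m, k] -> [n, m, k], then project onto v -> [n, m]
    return np.tanh((H @ W1.T)[:, None, :] + (S @ W2.T)[None, :, :]) @ v

def blend(scores, S):                  # c_i = sum_j softmax_j(a_ij) s_j
    return softmax(scores, axis=1) @ S
```

The concatenation form \(W_a [h_i ; s_j]\) is the same as the \(W_1, W_2\) form with \(W_a = [W_1, W_2]\). The dot product is the multiplicative form with \(W_a = I\).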


Recurring Patterns

Layered Architecture

- Embedding Layer

- Encoder

- Query Encoder

- Passage Encoder

- Interaction Layer

- Decoder

- Answer Pointer Layer

- Chunking and Ranking

Embedding Layer

- Tokenized Passage and Query

- Project to vector space

- word2vec, GloVe

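```python
import tensorflow as tf  # TensorFlow 1.x API (tf.get_variable)

# `embedding`: pre-trained word vectors (e.g. GloVe), numpy array [vocab_size, emb_dim]
vocab_size, emb_dim = embedding.shape
w_embedding = tf.get_variable(
    name="w_embedding",
    shape=[vocab_size, emb_dim],
    dtype=tf.float32,
    initializer=tf.constant_initializer(embedding),
    trainable=False)  # keep the pre-trained vectors frozen
```

Embedding Layer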

- OOV (out-of-vocabulary) tokens

- Average word vectors of neighbours

- Character-level Word Representation

- vocabulary of unique characters

- fixed and small

- morphological features

- word as a sequence (RNN)

- word as a 2D [num_chars x dim] image (CNN)

- Trained jointly with the model objective

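A sketch of the CNN variant of character-level word representation. Each word becomes a [num_chars x c_dim] matrix of character embeddings. A bank of width-3 filters slides over it, and max-over-time pooling produces a fixed-size vector. Because the vocabulary is characters, an out-of-vocabulary word still gets a vector. The embedding table and filters here are hypothetical inputs; in a real model they are trained jointly with the QA objective.

```python
import numpy as np

def char_cnn_word_vector(word, char_to_id, char_emb, filters, width=3):
    """char_emb: [n_chars, c_dim]; filters: [n_filters, width, c_dim].
    Returns a [n_filters] word vector."""
    x = char_emb[[char_to_id[ch] for ch in word]]        # [len(word), c_dim]
    if len(x) < width:                                   # pad short words
        x = np.vstack([x, np.zeros((width - len(x), x.shape[1]))])
    windows = np.stack([x[i:i + width] for i in range(len(x) - width + 1)])
    feats = np.tanh(np.einsum("lwc,fwc->lf", windows, filters))  # [positions, n_filters]
    return feats.max(axis=0)                             # max-over-time pooling
```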

Embedding Layer

- Hybrid embedding

- Combines character-level and word-level representations

- Semantic information from word-level representation

- Morphological information from character-level representation

- Combination method

- Concatenation: \(\mathrm{embedding}(w_i) = [W_{w_i} ; C_{w_i}]\)

- Gated mixing

- \(cg_{w_i} = f(W_{w_i}, C_{w_i})\)

- \(wg_{w_i} = g(W_{w_i}, C_{w_i})\)

- \(\mathrm{embedding}(w_i) = cg_{w_i} \odot C_{w_i} + wg_{w_i} \odot W_{w_i}\)
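The slide leaves the gate functions \(f\) and \(g\) open. The sketch below makes one common but hypothetical choice: a sigmoid gate computed from the concatenated word and character vectors, with \(wg = 1 - cg\) so the two gates sum to one per dimension. It also assumes both vectors have the same size.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gated_embedding(w_vec, c_vec, W_g, b_g):
    """w_vec, c_vec: [d] word-/character-level vectors; W_g: [d, 2d]; b_g: [d].
    Assumed gates: cg = sigmoid(W_g [w; c] + b_g), wg = 1 - cg."""
    cg = sigmoid(W_g @ np.concatenate([w_vec, c_vec]) + b_g)  # character gate
    wg = 1.0 - cg                                              # word gate
    return cg * c_vec + wg * w_vec                             # elementwise mix
```

With zero gate weights both gates equal 0.5, so the result is the plain average of the two vectors.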

Encoder

- Primary Representation of Context and Query

- Bi-directional LSTM

- Parameter sharing between the query and passage encoders

- Context loosely conditioned on Query


Interaction Layer

- Also called the Inference Layer

- Facilitates interaction between Context and Query

- Query-aware Context Representation

- strongly conditioned on query

- enrich the context representation with information from query

- The answer layer depends entirely on this representation

- Most MRC (machine reading comprehension) research has focused on this layer


Interaction Layer

- Encoded Context, \( \{ h_i^p \} \)

- Encoded Query, \(H^q = \{ h^q_1, h^q_2 , ...\}\)

- Attention weights, \(a_i\): a distribution over query words for passage word \(i\)

- Weighted Representation, \(\overline{H}^q = \{ \overline{h}^q_1, \overline{h}^q_2, \dots \}\), with \(\overline{h}^q_i = H^q a_i\)

- \(z_i = [\overline{h}^q_i ; h^p_i]\)

- Conditional Representation, \(h^r_i = \mathrm{LSTM}(z_i, h^r_{i-1})\)
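A NumPy sketch that builds \(z_i\) for every passage position: attend over the query, take the weighted query vector, and concatenate it with the passage vector. The bilinear score \(h^p_i W h^q_j\) is an assumption. Some models, such as Match-LSTM, also feed the previous state \(h^r_{i-1}\) into the attention; that is omitted here. The rows of the result are the inputs to the LSTM that produces \(h^r_i\).

```python
import numpy as np

def query_aware_inputs(Hp, Hq, W):
    """Hp: [n_p, d] encoded passage; Hq: [n_q, d] encoded query; W: [d, d].
    Returns Z: [n_p, 2d] with rows z_i = [hbar^q_i ; h^p_i]."""
    scores = Hp @ W @ Hq.T                        # [n_p, n_q] (assumed bilinear score)
    a = np.exp(scores - scores.max(axis=1, keepdims=True))
    a /= a.sum(axis=1, keepdims=True)             # a_i: distribution over query words
    Hq_bar = a @ Hq                               # hbar^q_i = sum_j a_ij h^q_j
    return np.concatenate([Hq_bar, Hp], axis=1)
```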

Iterative Inference

Answer Layer

- Single word answers

- \(a \in C\), list of candidates

- \(a \in V_d\), document vocabulary

- Probability distribution over \(A\)

- Span of text

- number of candidate spans grows quadratically with passage length (\(n(n+1)/2\) spans for \(n\) tokens)

Answer Layer

In meteorology, precipitation is any product of the condensation of atmospheric water vapor that falls under gravity. The main forms of precipitation include drizzle, rain, sleet, snow, graupel and hail... Precipitation forms as smaller droplets coalesce via collision with other rain drops or ice crystals within a cloud. Short, intense periods of rain in scattered locations are called “showers”.

Where do water droplets collide with ice crystals to form precipitation?

Answer Layer

- Span answers require a more sophisticated answer layer

- Mechanisms

- Pointer Network

- 2 probability distributions [ START, END ]

- boundaries of answer span

- Chunking and Ranking

- Rank possible candidate answer spans

- Pointer Network

Answer Pointer Layer

- Select a subset of elements given a constraint

- Pointer Network

- RNN encodes input sequence

- Decoder, during each step

- creates a probability distribution across the input sequence

- attention mechanism

- Sequence vs. Boundary Model

- Boundary model: two probability distributions ([START, END]) across the context
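With a boundary model, taking the two argmaxes independently can produce an invalid span (end before start). A common decoding step searches for the pair that maximizes \(p_{start}(s) \cdot p_{end}(e)\) subject to \(s \le e\). It also caps the span length, so only \(O(n \cdot \text{max\_len})\) of the quadratically many spans are scored. `max_len` is a hypothetical setting.

```python
import numpy as np

def best_span(p_start, p_end, max_len=15):
    """Return ((s, e), score) maximizing p_start[s] * p_end[e]
    subject to s <= e < s + max_len."""
    best, best_score = (0, 0), -1.0
    for s in range(len(p_start)):
        for e in range(s, min(s + max_len, len(p_end))):
            score = p_start[s] * p_end[e]
            if score > best_score:
                best, best_score = (s, e), score
    return best, best_score
```

For `p_start = [0.1, 0.6, 0.2, 0.1]` and `p_end = [0.5, 0.1, 0.3, 0.1]`, independent argmaxes give the invalid pair (1, 0), while `best_span` returns (1, 2).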


Chunking and Ranking

- Stanford CoreNLP: constituency parser

- Answer candidates: separate the passage into phrases

- List of POS patterns to select phrases

- Ranker scores chunks by cosine similarity to the question
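The ranking step alone, in NumPy. It assumes each candidate chunk and the question have already been encoded as fixed-size vectors; how those vectors are produced (for example, pooled RNN states) is left open here. Chunks are sorted by cosine similarity to the question.

```python
import numpy as np

def rank_chunks(chunk_vecs, query_vec):
    """chunk_vecs: [n_chunks, d]; query_vec: [d].
    Returns (indices from best to worst, cosine similarities)."""
    sims = chunk_vecs @ query_vec / (
        np.linalg.norm(chunk_vecs, axis=1) * np.linalg.norm(query_vec) + 1e-8)
    return np.argsort(-sims), sims
```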

Case Study

Dynamic Coattention Network

Architecture - Encoder

Architecture - Dynamic Decoder

Architecture - Highway Maxout Network

Encoder

- Document, \(\{x_1^D, x_2^D, \dots, x_m^D\}\)

- Query, \(\{x_1^Q, x_2^Q, \dots, x_n^Q\}\)

- Document Encoding

- \(d_t = \mathrm{LSTM}_{enc}(d_{t-1}, x_t^D)\)

- Query Encoding

- \(q_t = \mathrm{LSTM}_{enc}(q_{t-1}, x_t^Q)\)

- Query Projection

- \(Q = \tanh(W^{(Q)} Q' + b^{(Q)})\), where \(Q'\) stacks the query encodings \(q_t\)

Attention Weights

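- Affinity Matrix, \(L = D^\top Q\)

```python
import tensorflow as tf  # TensorFlow 1.x

# document: [B, Ld, d], query: [B, Lq, d] (batch-major, one row per word)
affinity = tf.matmul(document, query, transpose_b=True)  # [B,Ld,d] x [B,d,Lq] = [B,Ld,Lq]
```

- Row-wise normalization, \(A^Q\)

```python
# [B, Lq, Ld]: for each query word, a distribution over document words
Aq = tf.nn.softmax(tf.transpose(affinity, [0, 2, 1]))
```

- Column-wise normalization, \(A^D\)

```python
# [B, Ld, Lq]: for each document word, a distribution over query words
Ad = tf.nn.softmax(affinity)
```

Attention Contexts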

- Query Attention Context, \(C^Q = DA^Q\)

```python
Cq = tf.matmul(Aq, document)  # [B,Lq,Ld] x [B,Ld,d] = [B,Lq,d]
```

- Coattention Context, \(C^D = [Q;C^Q]A^D\)

```python
# [B,Ld,Lq] x [B,Lq,2d] = [B,Ld,2d]; the batch-major form of [Q; C^Q] A^D
Cd = tf.matmul(Ad, tf.concat([query, Cq], axis=-1))
```

Temporal Fusion

- Fusion of temporal information

- \(u_t = \mathrm{BiLSTM}(u_{t-1}, u_{t+1}, [d_t; c_t^D])\)

```python
# _document: [B, Ld] token ids with 0 as padding, so counting
# non-zero ids gives each document's true length
context_states, _ = tf.nn.bidirectional_dynamic_rnn(
    tf.nn.rnn_cell.LSTMCell(hdim),           # forward cell
    tf.nn.rnn_cell.LSTMCell(hdim),           # backward cell
    tf.concat([document, Cd], axis=-1),      # [B, Ld, d + 2*d]
    sequence_length=tf.count_nonzero(_document, axis=1),
    dtype=tf.float32)
context = tf.concat(context_states, axis=-1)  # final representation U: [B, Ld, 2*hdim]
```

Dynamic Pointing Decoder

- \(h_i = \mathrm{LSTM}_{dec}(h_{i-1}, [u_{s_{i-1}}; u_{e_{i-1}}])\)

- \(u_t\): coattention representation of the \(t^{th}\) word

- \(u_{s_{i-1}}\): representation at the previous estimate of the start position

- \(u_{e_{i-1}}\): representation at the previous estimate of the end position

- Current estimate

- \(s_i = \arg\max_t (\alpha_1, \dots, \alpha_m)\)

- \(e_i = \arg\max_t (\beta_1, \dots, \beta_m)\)

- Scores

- \(\alpha_t\): start score of the \(t^{th}\) word in the document

- \(\beta_t\): end score of the \(t^{th}\) word in the document

Highway Maxout Network

- Start Score

- \(\alpha_t = \mathrm{HMN}_{start}(u_t, h_i, u_{s_{i-1}}, u_{e_{i-1}})\)

- End Score

- \(\beta_t = \mathrm{HMN}_{end}(u_t, h_i, u_{s_{i-1}}, u_{e_{i-1}})\)

Highway Maxout Network

- \(r = \tanh(W^{(D)} [h_i; u_{s_{i-1}}; u_{e_{i-1}}])\)

- \(m_t^{(1)} = \max(W^{(1)} [u_t; r] + b^{(1)})\)

- \(m_t^{(2)} = \max(W^{(2)} m_t^{(1)} + b^{(2)})\)

- \(\mathrm{HMN}(u_t, h_i, u_{s_{i-1}}, u_{e_{i-1}}) = \max(W^{(3)} [m_t^{(1)}; m_t^{(2)}] + b^{(3)})\)

- \(\max\) is taken over the first dimension of the tensor (maxout over \(p\) pieces)
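A NumPy sketch of one HMN score, following the four equations above. The shapes are assumptions consistent with them: hidden size \(l\), maxout pool size \(p\), \(u_t\) of size \(2l\) (bi-LSTM output) and \(h_i\) of size \(l\). The "highway" part is that the last maxout layer sees both \(m_t^{(1)}\) and \(m_t^{(2)}\).

```python
import numpy as np

def maxout(W, b, x):
    """Maxout layer: W is [p, out, in], b is [p, out]; max over the p pieces."""
    return (np.einsum("poi,i->po", W, x) + b).max(axis=0)

def hmn_score(u_t, h_i, u_s, u_e, params):
    """params: W_D [l, 5l], W1 [p, l, 3l], b1 [p, l], W2 [p, l, l], b2 [p, l],
    W3 [p, 1, 2l], b3 [p, 1] (assumed shapes). Returns a scalar score."""
    r = np.tanh(params["W_D"] @ np.concatenate([h_i, u_s, u_e]))       # r
    m1 = maxout(params["W1"], params["b1"], np.concatenate([u_t, r]))  # m_t^(1)
    m2 = maxout(params["W2"], params["b2"], m1)                        # m_t^(2)
    # highway connection: the final maxout sees both m_t^(1) and m_t^(2)
    return float(maxout(params["W3"], params["b3"], np.concatenate([m1, m2]))[0])
```

Running `hmn_score` at every position \(t\) gives the \(\alpha_t\) (or, with a second parameter set, \(\beta_t\)) scores used by the decoder.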

Highway Network

- Non-Linear Transformation at layer \(l\)

- Affine transformation followed by a non-linearity

- \(y = H(x, W_H)\)

- Highway Connection

- \(y = H(x, W_H) \odot T(x, W_T) + x \odot C(x, W_C)\), typically with the carry gate \(C = 1 - T\)
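One highway layer in NumPy, using the common carry-gate choice \(C = 1 - T\) and tanh as the non-linearity in \(H\). The non-linearity is an assumption. When the transform gate \(T\) is near 0, the layer passes \(x\) through almost unchanged. When \(T\) is near 1, it behaves like an ordinary non-linear layer.

```python
import numpy as np

def highway(x, W_H, b_H, W_T, b_T):
    """y = H(x) * T(x) + x * (1 - T(x)); x: [d], W_H, W_T: [d, d]."""
    H = np.tanh(W_H @ x + b_H)                   # transform H(x, W_H)
    T = 1.0 / (1.0 + np.exp(-(W_T @ x + b_T)))   # transform gate T(x, W_T)
    return H * T + x * (1.0 - T)                 # carry gate C = 1 - T
```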

Dynamic Pointer Decoder

The iterative procedure halts when both the estimate of the start position and the estimate of the end position no longer change, or when a maximum number of iterations is reached.
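The halting rule can be sketched as a plain loop. `score_fn` is a hypothetical stand-in for one decoder step (LSTM update plus the two HMNs). Given the previous start and end estimates, it returns start and end scores over the document positions. The default `max_iters` value is an assumption.

```python
def dynamic_decode(score_fn, n_tokens, max_iters=4, init=(0, 0)):
    """Refine (start, end) until both stop changing or max_iters is reached.
    Returns ((start, end), iterations_used)."""
    s, e = init
    for it in range(1, max_iters + 1):
        alpha, beta = score_fn(s, e)
        new_s = max(range(n_tokens), key=lambda t: alpha[t])  # s_i = argmax_t alpha_t
        new_e = max(range(n_tokens), key=lambda t: beta[t])   # e_i = argmax_t beta_t
        if (new_s, new_e) == (s, e):                          # estimates unchanged: halt
            return (s, e), it
        s, e = new_s, new_e
    return (s, e), max_iters                                  # iteration cap reached
```

The iteration cap is what terminates decoding when the estimates keep oscillating between two spans.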

Thank You!
