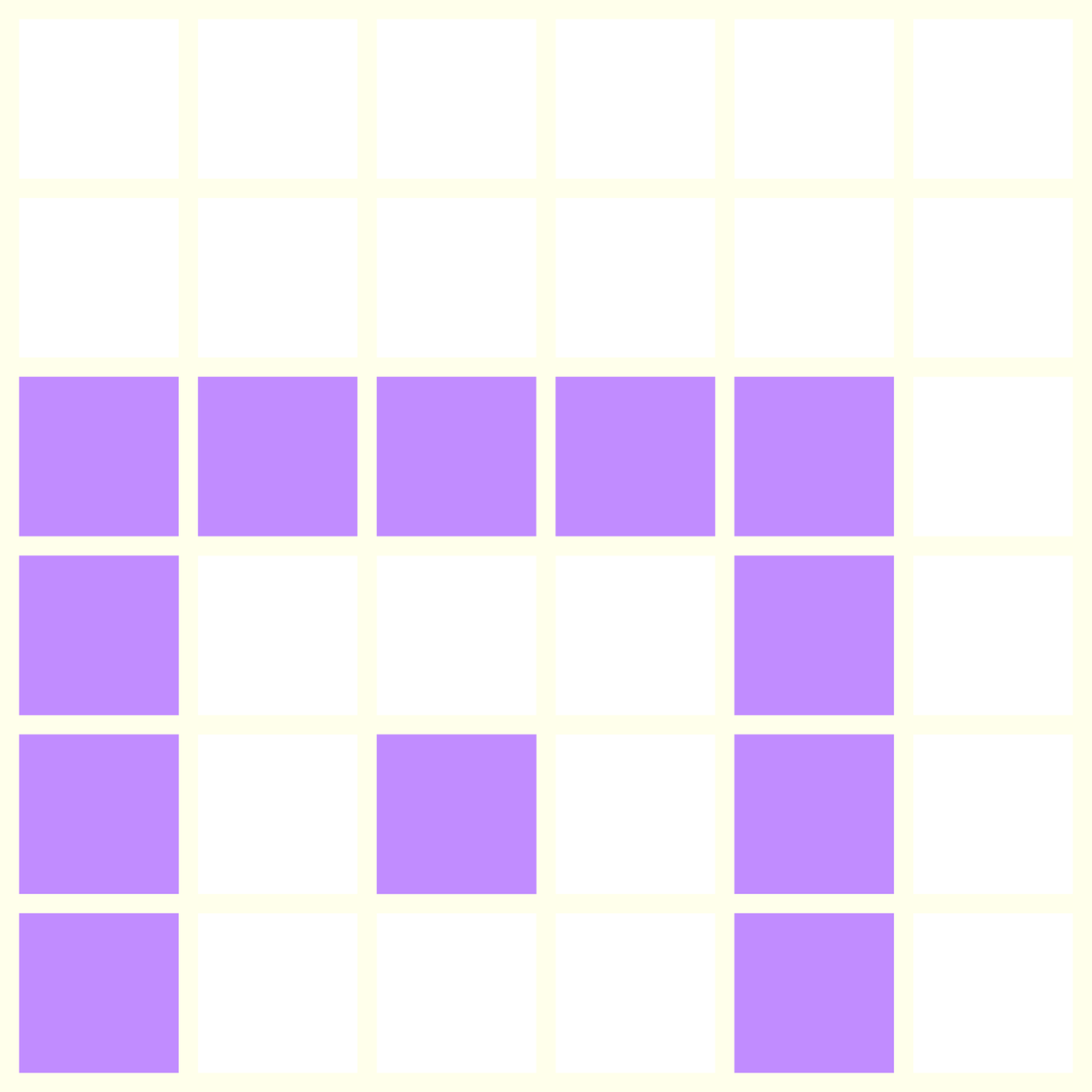

Continuous Prioritized Sweeping with Gaussian Processes

GOAL

- Sample-efficiency!

- Learning with fewer interactions.

- Data is in tuple form: <s,a,s',r>

MODEL FREE

- Prioritize experience replay based on past TD errors

- Experience replay (randomly feed collected data to the learning algorithm)
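The model-free side can be sketched as a proportional prioritized replay buffer: each stored <s,a,s',r> transition is replayed with probability proportional to (|TD error| + ε)^α. This is a minimal sketch; the class name and the α and ε hyperparameters are assumptions for illustration, not part of the slides.

```python
import random
from typing import List, Tuple

# A transition in the <s, a, s', r> tuple form used in the slides.
Transition = Tuple[float, int, float, float]


class ProportionalReplay:
    """Minimal prioritized replay buffer (proportional variant).

    Priorities are (|TD error| + eps) ** alpha; alpha and eps are
    assumed hyperparameters, not values taken from the slides.
    """

    def __init__(self, alpha: float = 0.6, eps: float = 1e-3):
        self.alpha = alpha
        self.eps = eps
        self.data: List[Transition] = []
        self.priorities: List[float] = []

    def add(self, transition: Transition, td_error: float) -> None:
        self.data.append(transition)
        self.priorities.append((abs(td_error) + self.eps) ** self.alpha)

    def update(self, index: int, td_error: float) -> None:
        # After replaying a transition, refresh its priority with the new TD error.
        self.priorities[index] = (abs(td_error) + self.eps) ** self.alpha

    def sample(self, k: int) -> List[int]:
        # Indices drawn with replacement, with probability proportional to priority.
        return random.choices(range(len(self.data)), weights=self.priorities, k=k)
```

Plain experience replay is the special case where every priority is equal, so sampling is uniform.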

MODEL BASED

- Use the model to generate new data / back up the value function

- Prioritize based on past TD errors AND the learned dynamics

PRIORITIZED SWEEPING

Q(s,a) \mathrel{+}= \alpha\left [ R(s,a) + \gamma\max_{a'}Q(s',a') - Q(s,a) \right ]
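A minimal tabular sketch of this backup, assuming a dictionary-backed Q table and a sampled reward r standing in for R(s,a). The function name and the default α and γ are illustrative. Returning the TD error is convenient because the prioritization schemes in these slides reuse it.

```python
from collections import defaultdict


def q_update(Q, s, a, r, s_next, actions, alpha=0.1, gamma=0.99):
    """One Q-learning backup:
    Q(s,a) += alpha * [r + gamma * max_a' Q(s',a') - Q(s,a)].

    Q is a defaultdict(float) keyed by (state, action); returns the TD error.
    """
    target = r + gamma * max(Q[(s_next, a2)] for a2 in actions)
    td_error = target - Q[(s, a)]
    Q[(s, a)] += alpha * td_error
    return td_error
```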


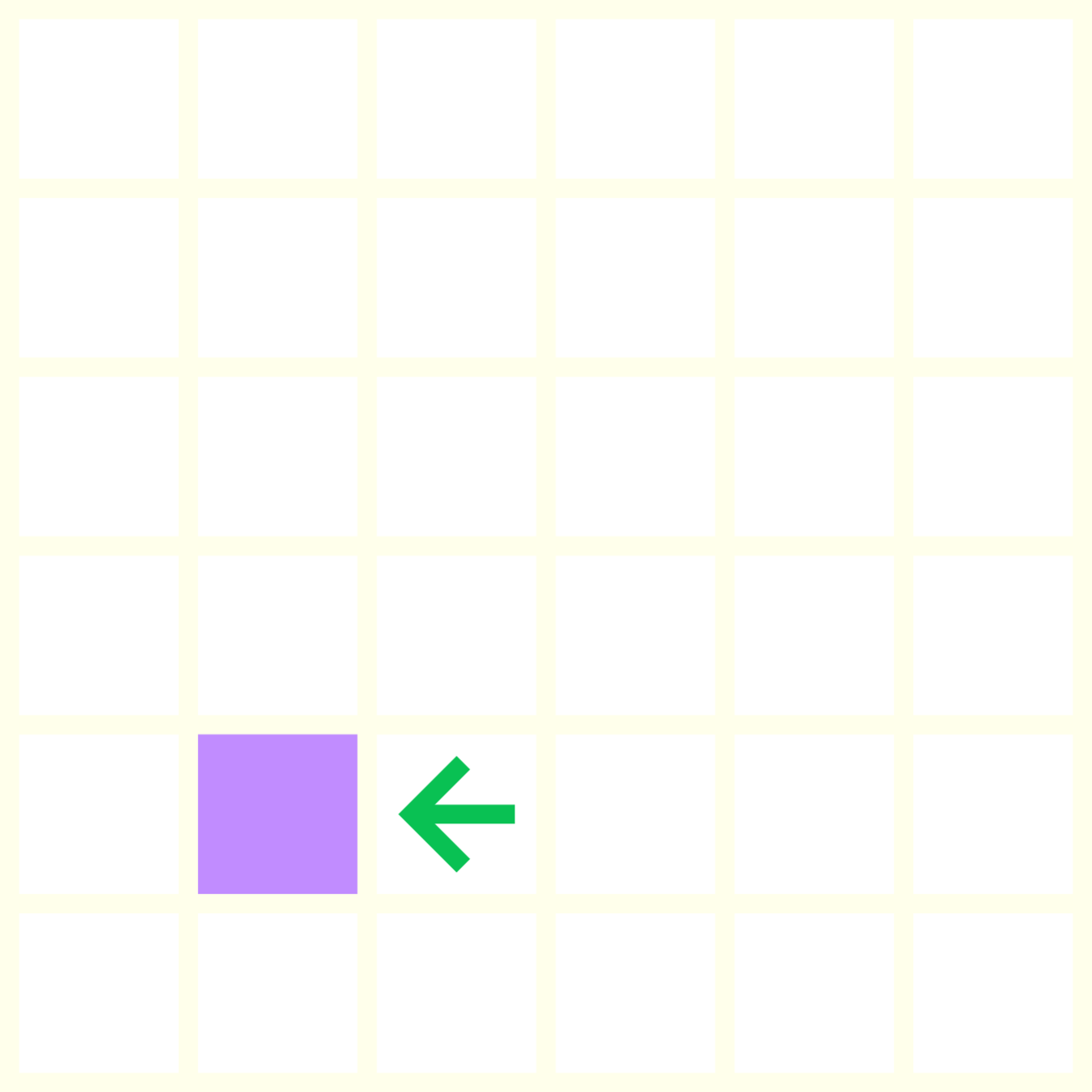

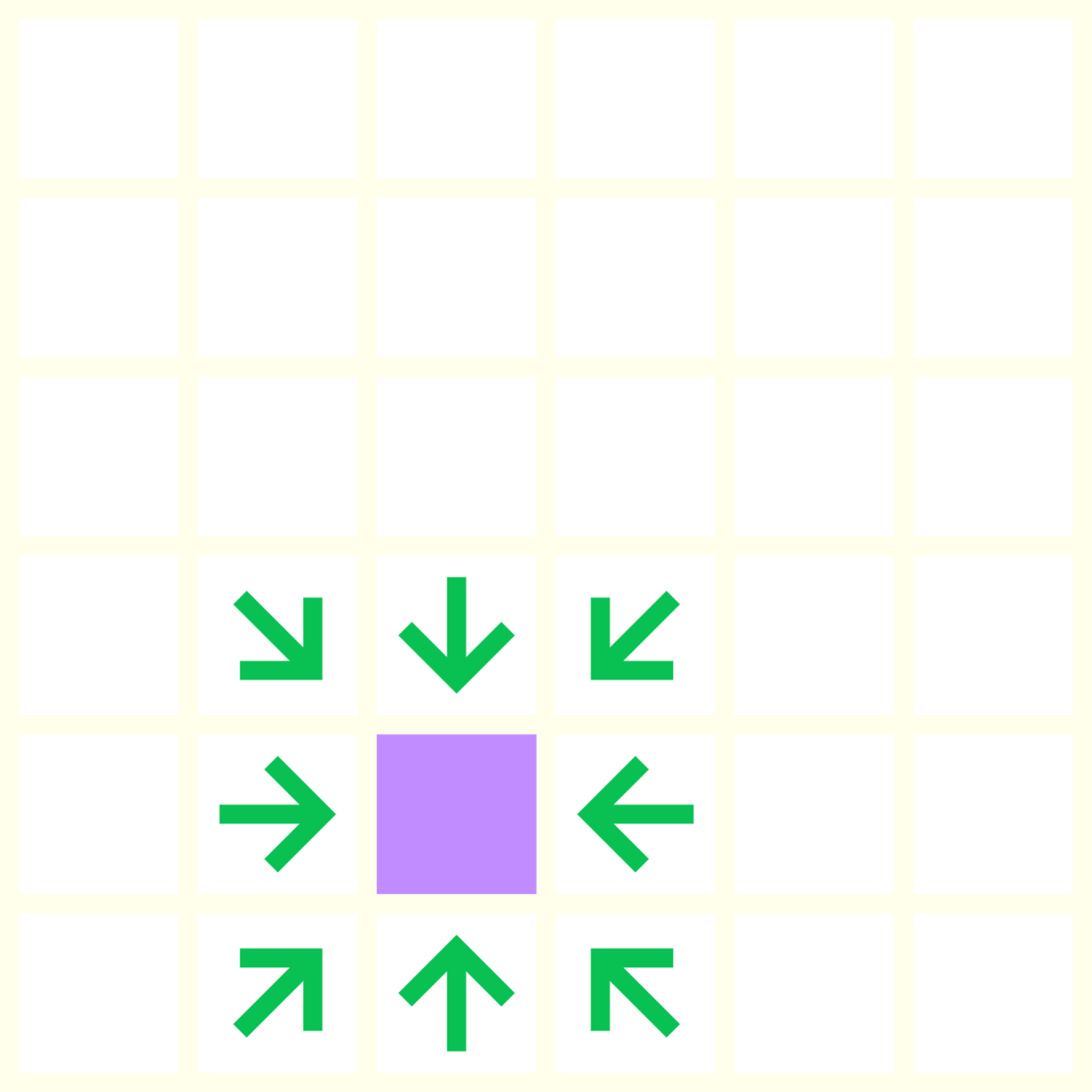

PRIORITIZED SWEEPING

How to prioritize?

p_{s,a} = \left| \Delta_{s'} \right| \cdot T(s' \mid s, a)

where \Delta_{s'} is the TD error and T(s' \mid s, a) is the model.

- Update V(s'), which gives \Delta_{s'}

- Iterate over all (s,a) pairs (the parents of s')

- Compute all priorities

- Pick the highest and repeat
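The loop above can be sketched for a tabular learned model. This is a minimal sketch under stated assumptions: T[(s, a)] maps next states to estimated probabilities T(s'|s,a) and R[(s, a)] holds estimated expected rewards, both assumed to be learned from <s,a,s',r> tuples elsewhere. The function name, θ threshold and backup budget are hypothetical.

```python
import heapq
import itertools
from typing import Dict, Hashable, List, Tuple

State = Hashable
Action = Hashable


def prioritized_sweeping(
    V: Dict[State, float],
    T: Dict[Tuple[State, Action], Dict[State, float]],
    R: Dict[Tuple[State, Action], float],
    start: State,
    gamma: float = 0.95,
    n_backups: int = 100,
    theta: float = 1e-6,
) -> Dict[State, float]:
    # Reverse model: for every s', the parent pairs (s, a) with T(s'|s,a) > 0.
    parents: Dict[State, List[Tuple[State, Action]]] = {}
    actions: Dict[State, List[Action]] = {}
    for (s, a), nexts in T.items():
        actions.setdefault(s, []).append(a)
        for s_next, p in nexts.items():
            if p > 0:
                parents.setdefault(s_next, []).append((s, a))

    def backup(s: State) -> float:
        # Bellman optimality backup of V(s) through the learned model.
        return max(
            R[(s, a)] + gamma * sum(p * V.get(s2, 0.0) for s2, p in T[(s, a)].items())
            for a in actions[s]
        )

    tick = itertools.count()  # tie-breaker so states never need to be compared
    queue = [(0.0, next(tick), start)]  # heapq is a min-heap: store -priority
    for _ in range(n_backups):
        if not queue:
            break
        _, _, s_prime = heapq.heappop(queue)
        if s_prime not in actions:  # no outgoing model (e.g. a terminal state)
            continue
        new_value = backup(s_prime)
        delta = new_value - V.get(s_prime, 0.0)  # Delta_{s'}
        V[s_prime] = new_value
        # p_{s,a} = |Delta_{s'}| * T(s'|s,a) for every parent of s'.
        for s, a in parents.get(s_prime, []):
            priority = abs(delta) * T[(s, a)][s_prime]
            if priority > theta:
                heapq.heappush(queue, (-priority, next(tick), s))
    return V
```

For a two-step chain 0 → 1 → 2 with reward 1 on the last step, starting at state 1 gives V[1] = 1.0, then the change propagates back to give V[0] = 0.95.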

CONTINUOUS SETTING

PROBLEMS

- Learn a continuous T

- Iterate over (s,a) pairs to update priorities, but there are infinitely many of them

SOLUTION

- Gaussian Process!

GAUSSIAN PROCESS

- An infinite-dimensional generalization of the multivariate Gaussian

- Sample-efficient & Bayesian function approximator

GAUSSIAN PROCESS

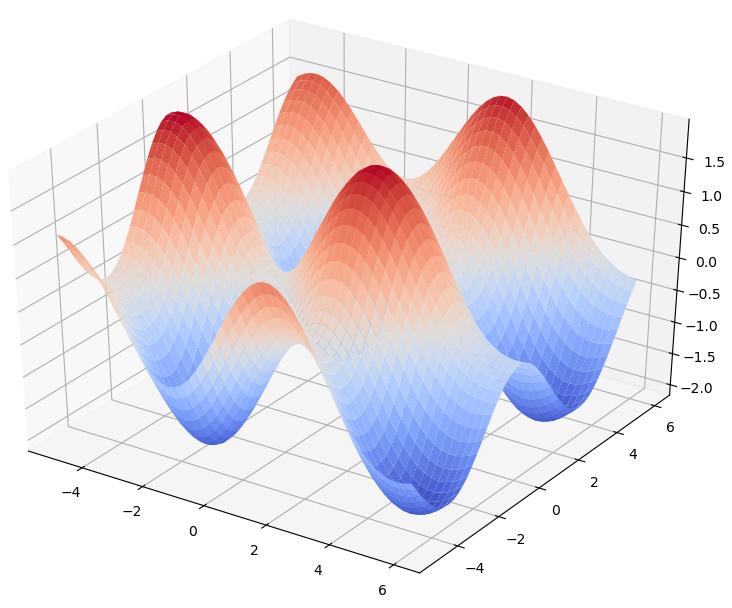

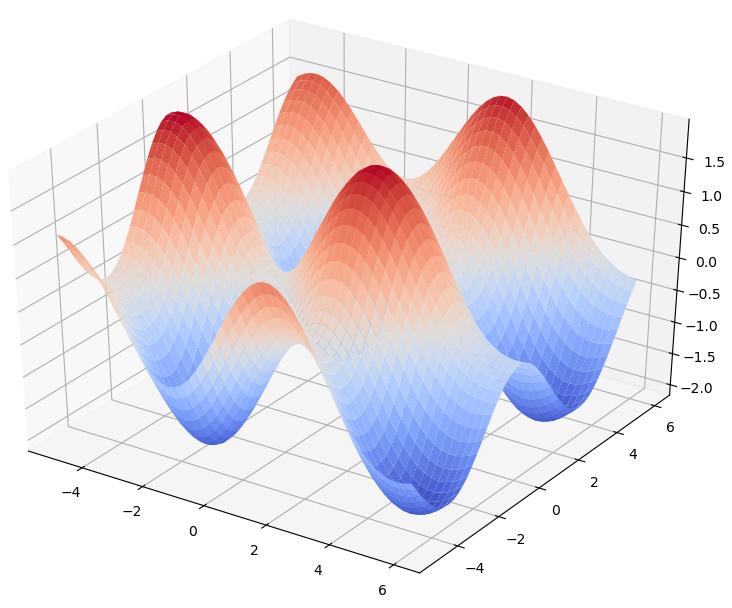

GAUSSIAN PROCESS

- It's a DISTRIBUTION over functions!

- It can be learned easily and sample-efficiently (there are some gotchas)

- It keeps track of uncertainty!
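To make "distribution over functions" and "keeps track of uncertainty" concrete, here is a minimal NumPy sketch of exact GP regression with a squared-exponential kernel. The kernel choice, function names and fixed hyperparameters are assumptions; in practice the hyperparameters usually have to be tuned.

```python
import numpy as np


def rbf(A, B, lengthscale=1.0, variance=1.0):
    # Squared-exponential kernel: variance * exp(-||a - b||^2 / (2 * lengthscale^2)).
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(-1)
    return variance * np.exp(-0.5 * d2 / lengthscale**2)


def gp_posterior(X, y, Xq, noise=1e-2, lengthscale=1.0, variance=1.0):
    """Posterior mean and variance of a zero-mean GP at the query points Xq."""
    K = rbf(X, X, lengthscale, variance) + noise * np.eye(len(X))
    L = np.linalg.cholesky(K)  # K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    Ks = rbf(X, Xq, lengthscale, variance)  # cross-covariances, shape (n, m)
    mean = Ks.T @ alpha
    v = np.linalg.solve(L, Ks)
    var = variance - (v**2).sum(0)  # prior variance minus what the data explains
    return mean, var
```

Near the training data the posterior variance shrinks; far away it returns to the prior variance, which is exactly the uncertainty signal a sample-efficient learner can exploit.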

CONTINUOUS PS

This is what we need:

p_{s,a} = \left| \Delta_{s'} \right| \cdot T(s' \mid s, a)

where T(s' \mid s, a) is the model and \Delta_{s'} is the TD error.

CONTINUOUS PS

Model T(s' \mid s, a) with a GP

(Plot axes: s, a, s')

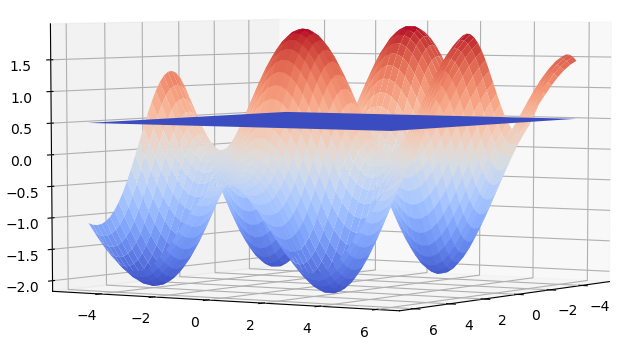

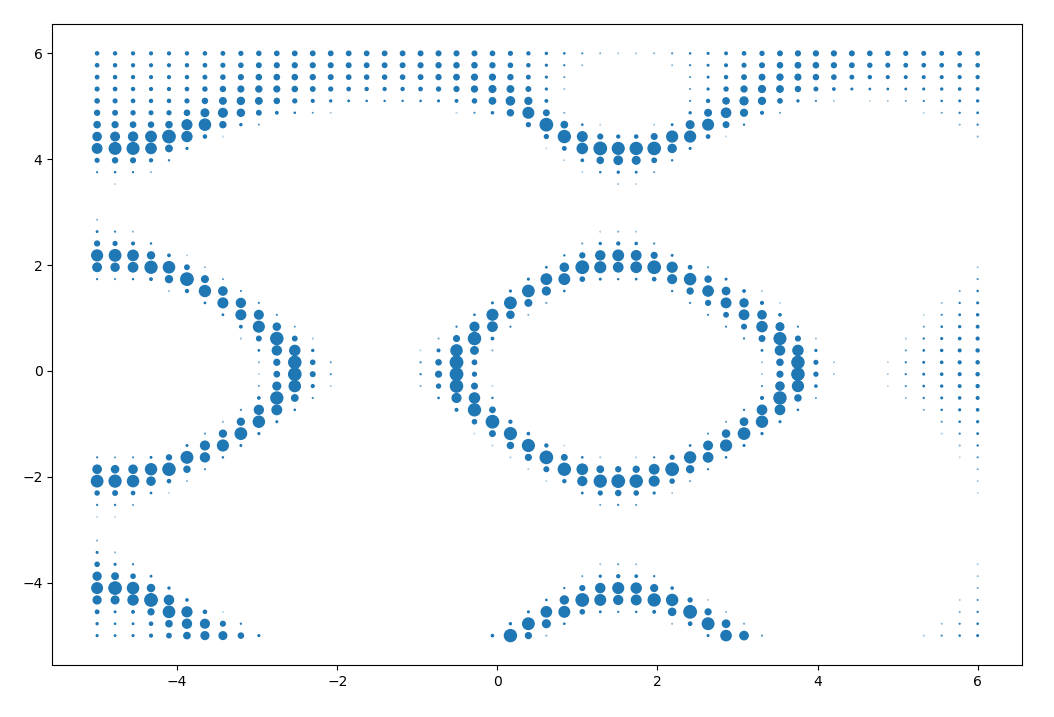
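A minimal sketch of T(s' | s, a) as a GP, assuming a 1-D state and a 1-D action: inputs are (s, a) pairs, targets are observed next states s', and the Gaussian predictive distribution gives a density whose width combines transition noise and model uncertainty. The class name, zero prior mean and fixed hyperparameters are assumptions, not the author's implementation.

```python
import numpy as np


def rbf(A, B, lengthscale=1.0):
    # Unit-variance squared-exponential kernel over (s, a) inputs.
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(-1)
    return np.exp(-0.5 * d2 / lengthscale**2)


class GPTransitionModel:
    """Hypothetical GP model of T(s'|s,a) with a zero prior mean on s'."""

    def __init__(self, SA, S_next, noise=1e-2, lengthscale=1.0):
        self.SA = np.asarray(SA, float)
        self.noise, self.ls = noise, lengthscale
        K = rbf(self.SA, self.SA, self.ls) + noise * np.eye(len(self.SA))
        self.L = np.linalg.cholesky(K)
        y = np.asarray(S_next, float)
        self.alpha = np.linalg.solve(self.L.T, np.linalg.solve(self.L, y))

    def predict(self, SA):
        Ks = rbf(self.SA, np.asarray(SA, float), self.ls)
        mean = Ks.T @ self.alpha
        v = np.linalg.solve(self.L, Ks)
        # Latent variance plus observation noise = predictive variance of s'.
        var = 1.0 - (v**2).sum(0) + self.noise
        return mean, var

    def pdf(self, SA, s_next):
        # Gaussian density T(s'|s,a) evaluated at s_next for each row of SA.
        mean, var = self.predict(SA)
        return np.exp(-0.5 * (s_next - mean) ** 2 / var) / np.sqrt(2 * np.pi * var)
```

With such a model, the priority of a candidate parent is `abs(delta) * model.pdf(SA, s_next)`, matching p_{s,a} above.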

CONTINUOUS PS

The plot shows the deterministic mean, but the GP also handles stochastic transitions!

(Plot axes: s, a, s')

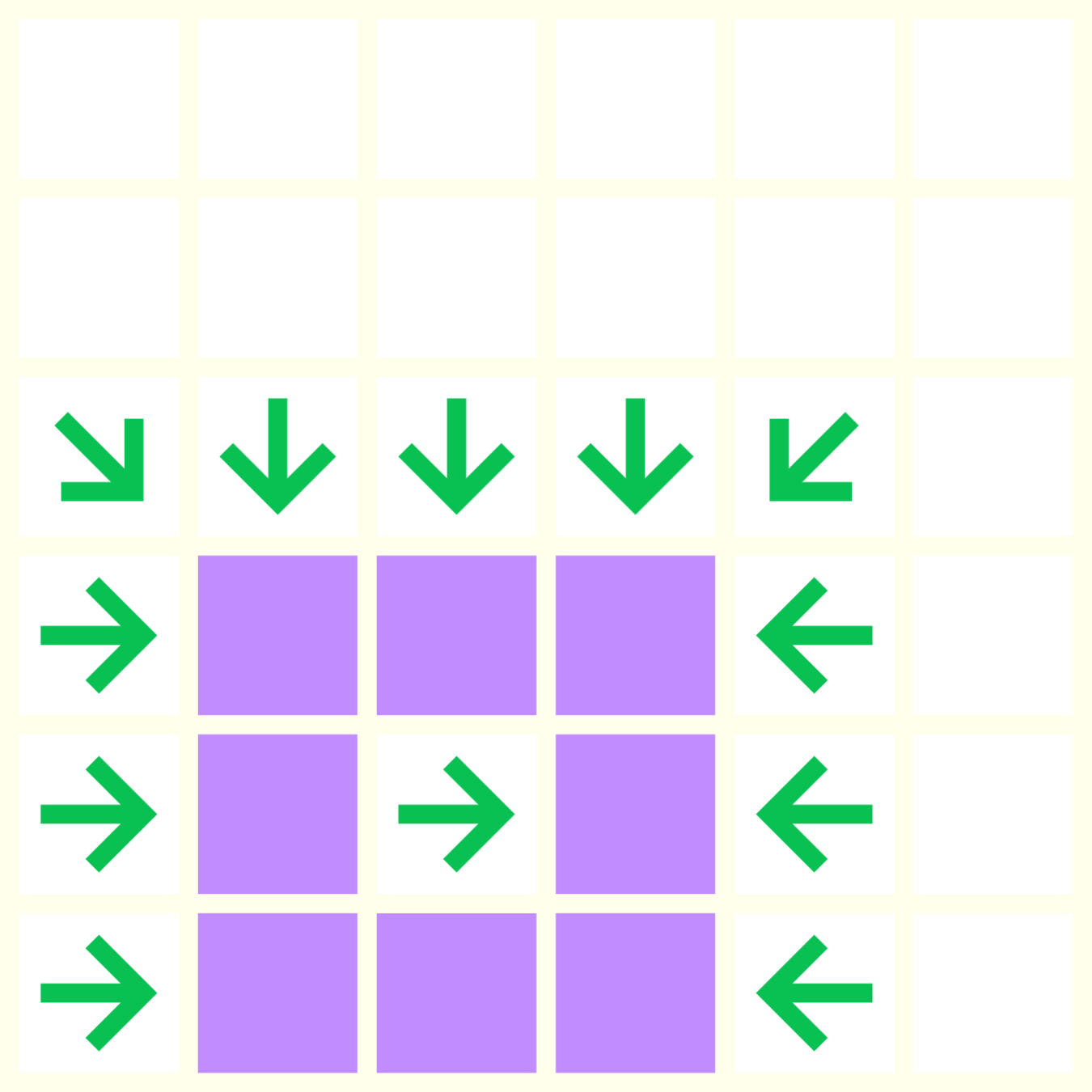

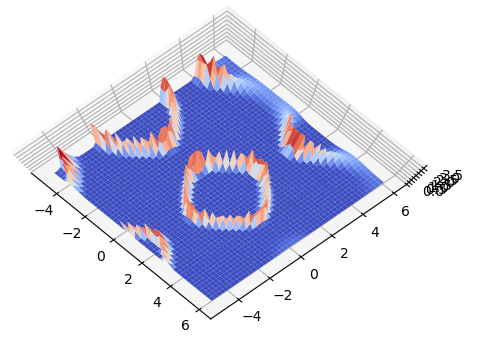

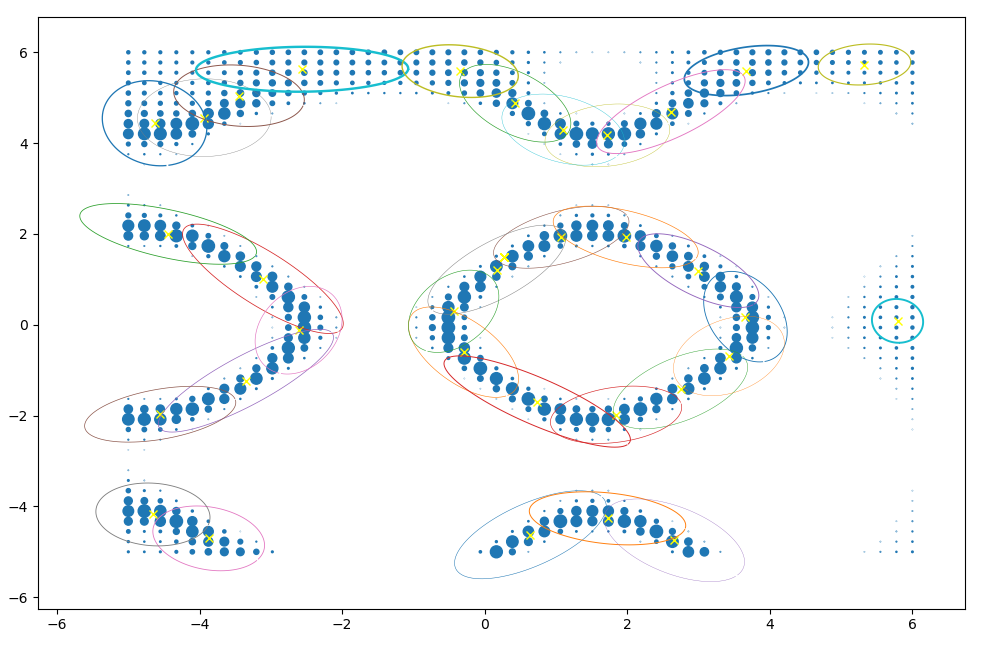

CONTINUOUS PS

Iterating over the parents of s' is equivalent to slicing the GP at a given height (the value of s')

(matplotlib gets the projection of overlapping surfaces wrong, sorry!)

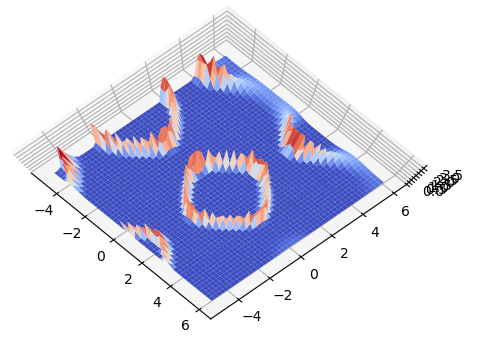

CONTINUOUS PS

Taking uncertainty into account, it would look like this:

CONTINUOUS PS

- Now we only need to go over all parents to compute the priorities

- But wait... this is still a continuous function!

- Approximate it using sampling!

CONTINUOUS PS

- Sample arbitrary (s,a) pairs. Some ways to optimize this:
  - Latin Hypercube sampling
  - Gradient descent (depending on the GP kernel)

- Compute the PDF T(s' \mid s, a) using the GP, and add the resulting priority to a queue
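The sampling step can be sketched as follows, under stated assumptions: a box of (s, a) bounds, a hand-written Latin Hypercube sampler, and a `transition_pdf(SA, s_next)` callable returning T(s'|s,a) for each row of SA (for instance one built from a GP's predictive distribution). The function names and the θ threshold are hypothetical, and the gradient-based refinement is not shown.

```python
import heapq

import numpy as np


def latin_hypercube(n, bounds, rng):
    """n points in a box, exactly one per stratum along every dimension."""
    bounds = np.asarray(bounds, float)  # shape (d, 2): [low, high] per dimension
    d = len(bounds)
    # Jitter inside each of n equal strata, then shuffle strata per dimension.
    u = (np.arange(n)[:, None] + rng.random((n, d))) / n
    for j in range(d):
        u[:, j] = rng.permutation(u[:, j])
    return bounds[:, 0] + u * (bounds[:, 1] - bounds[:, 0])


def push_parent_priorities(queue, s_next, delta, transition_pdf, bounds,
                           n=64, rng=None, theta=1e-3):
    """Approximate the slice of parents of s_next by sampling (s, a) pairs."""
    if rng is None:
        rng = np.random.default_rng()
    SA = latin_hypercube(n, bounds, rng)
    # p_{s,a} = |Delta_{s'}| * T(s'|s,a) at every sampled parent.
    priorities = abs(delta) * transition_pdf(SA, s_next)
    for (s, a), p in zip(SA, priorities):
        if p > theta:
            heapq.heappush(queue, (-float(p), float(s), float(a)))  # max-heap via -p
    return queue
```

Popping the queue then yields the sampled parent most likely to lead to s', which is the one whose value should be backed up next.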

CONTINUOUS PS

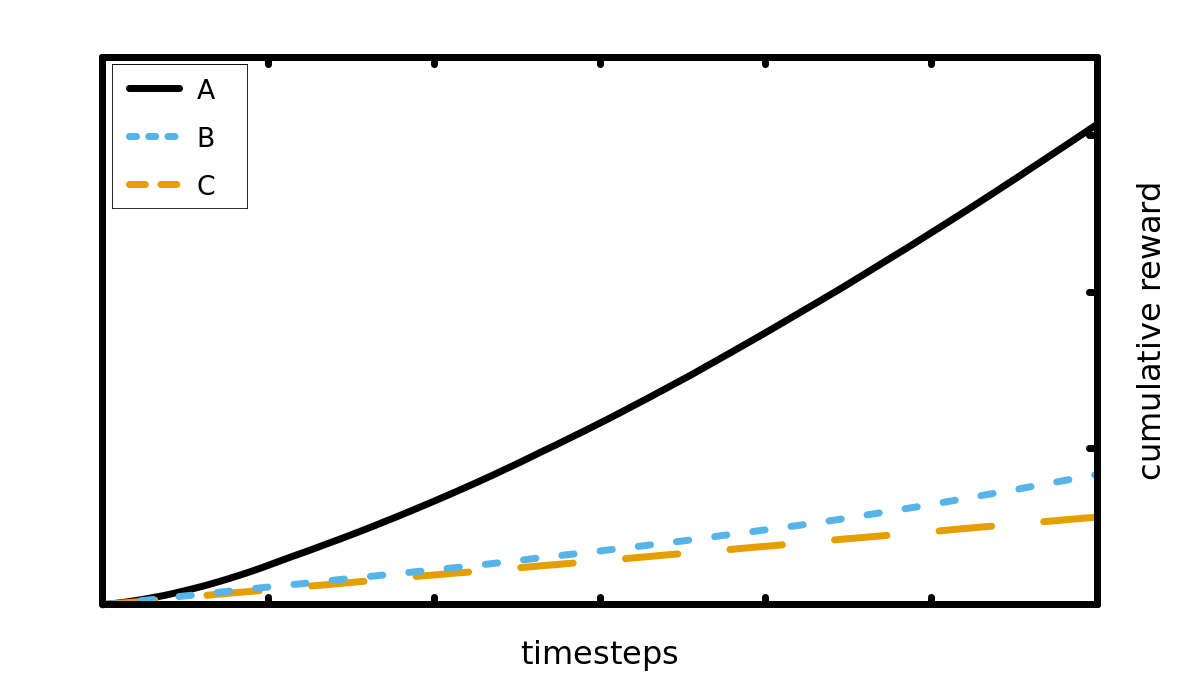

EXPERIMENTAL SETTING

- Test DQN in the following variants:
  - vanilla
  - experience replay
  - prioritized experience replay
  - using the GP to prioritize experience replay
  - using the GP to sample entirely new experience

Multiagent Extension

- Already works for discrete settings (under domain knowledge assumptions)

- The main idea is that priorities factor as a sum of smaller functions

- Each function depends only on some state features and agents
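A hypothetical sketch of what "priorities factored as a sum of smaller functions" means computationally: each term reads only its own scope of state features or agents. The `Factor` type and both helper names are assumptions for illustration, not the author's formulation.

```python
from typing import Callable, List, Sequence, Tuple

# A local term: (indices of the state features / agents it reads, function of those values).
Factor = Tuple[Sequence[int], Callable[..., float]]


def factored_priority(x: Sequence[float], factors: Sequence[Factor]) -> float:
    """Priority as a sum of local functions: p(x) = sum_k f_k(x[scope_k])."""
    return sum(f(*(x[i] for i in scope)) for scope, f in factors)


def changed_terms(factors: Sequence[Factor], changed: int) -> List[int]:
    """Indices of the terms to recompute when feature `changed` moves."""
    return [k for k, (scope, _) in enumerate(factors) if changed in scope]
```

The practical payoff of the factorization is locality: when one feature or agent changes, only the terms whose scope contains it need to be recomputed.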

Multiagent Extension

- It is important to be able to represent priorities as functions

- Since we are already there, use mixtures of Gaussians!

Multiagent Extension

- Cluster samples using variational Expectation Maximization (weighted)
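The slides use weighted variational EM. As a simpler stand-in, this sketch uses plain maximum-likelihood EM for a 1-D Gaussian mixture in which each sampled point carries a weight (for instance its priority), so the mixture becomes a smooth function approximating the priorities. The function name, the 1-D setting and the quantile initialization are assumptions.

```python
import numpy as np


def weighted_gmm_em(x, w, k=2, iters=200, min_var=1e-6):
    """Fit a 1-D Gaussian mixture to points x, each counted with weight w_i.

    Plain (non-variational) EM; a stand-in for the weighted variational EM
    mentioned in the slides.
    """
    x, w = np.asarray(x, float), np.asarray(w, float)
    mu = np.quantile(x, (np.arange(k) + 0.5) / k)  # spread initial means over the data
    var = np.full(k, x.var() + min_var)
    pi = np.full(k, 1.0 / k)
    for _ in range(iters):
        # E-step: log of pi_j * N(x_i | mu_j, var_j), normalized per sample.
        log_p = (np.log(pi) - 0.5 * np.log(2 * np.pi * var)
                 - 0.5 * (x[:, None] - mu) ** 2 / var)
        log_p -= log_p.max(axis=1, keepdims=True)
        r = np.exp(log_p)
        r /= r.sum(axis=1, keepdims=True)
        # M-step: sample i contributes w_i * r[i, j] to component j.
        wr = w[:, None] * r
        nk = wr.sum(axis=0) + 1e-12
        pi = nk / nk.sum()
        mu = (wr * x[:, None]).sum(axis=0) / nk
        var = (wr * (x[:, None] - mu) ** 2).sum(axis=0) / nk + min_var
    return pi, mu, var
```

Weighting a cluster three times as heavily raises its mixing weight accordingly, so high-priority regions dominate the fitted function.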

Multiagent Extension

- Now we can represent priorities as actual functions, and can do fancy things with them!

- Enhanced buzzword level

- As long as everything works...

Questions?

Continuous PS with GPs

By svalorzen
