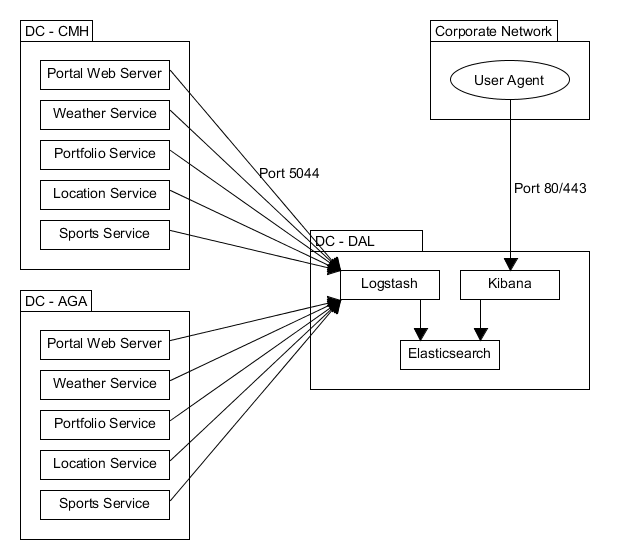

Portal Webserver ELK Logging Architecture

Central place for portal-based logs

- ELK stack implementation to ship logs from all of our portal webservers to one central location

- No more searching each individual server in a cluster for a needle in a haystack!

- See the actual input to Phoenix APIs!

ELK Stack

- Stands for:

- Elasticsearch

- Logstash

- Kibana

Currently:

- We have the E and L implemented. K is a goal of ours

- Logstash and Elasticsearch organize and store the data for retrieval

- Kibana is just a frontend (a web UI) for querying Elasticsearch

Logging and Filebeat

- Filebeat is another service in the ELK stack that doesn't get a letter in the acronym

- It helps, but it isn't strictly necessary for the stack

- Still, it's the easiest solution

- Filebeat watches the log files it's configured to monitor and sends any newly written lines to Logstash
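To make "sends any newly written lines" concrete, here is a minimal sketch of the core idea behind a log shipper: remember a byte offset per file, read only what was appended since then, and ship complete lines. This is an illustration in Python, not Filebeat's actual implementation (Filebeat is written in Go and persists its offsets in a registry); `read_new_lines` is a hypothetical helper.

```python
import os
import tempfile


def read_new_lines(path, offset):
    """Return (lines, new_offset): complete lines appended to `path` since `offset`.

    Conceptual sketch of what a log shipper does; NOT Filebeat's real code.
    """
    if os.path.getsize(path) < offset:
        offset = 0  # file shrank (truncated or rotated): start over from the top
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    # Ship only complete lines; a partial last line waits for the next poll.
    end = data.rfind(b"\n") + 1
    lines = [raw.decode("utf-8", errors="replace") for raw in data[:end].splitlines()]
    return lines, offset + end


if __name__ == "__main__":
    with tempfile.NamedTemporaryFile("w", suffix=".log", delete=False) as tmp:
        tmp.write("first\n")
    lines, pos = read_new_lines(tmp.name, 0)
    print(lines)  # ['first']
    with open(tmp.name, "a") as f:
        f.write("second\nthird")  # 'third' is still being written
    lines, pos = read_new_lines(tmp.name, pos)
    print(lines)  # ['second']
    os.remove(tmp.name)
```

Holding back the partial last line avoids shipping half-written log entries; the next poll picks it up once its newline arrives.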

In summary

- Our software (e.g. the Phoenix API) writes log entries to a plaintext file on disk, where Filebeat can read them

- Filebeat monitors these logs and sends updates to Logstash

- Logstash transforms each entry into an Elasticsearch-friendly format and sends it to Elasticsearch

- Logs are then accessible via a REST API on the Elasticsearch endpoint
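The Logstash step can be sketched as "parse a plaintext line into a structured document, then serialize it for Elasticsearch." The log line format below is hypothetical (the real Phoenix log layout isn't shown in this deck), and in practice Logstash does this with its own filter plugins; the sketch only shows the shape of the transformation, ending in the newline-delimited body that Elasticsearch's `_bulk` API accepts.

```python
import json
import re

# Hypothetical plaintext format (an assumption, not the real Phoenix log layout):
#   2017-03-01T12:00:00Z deleteUser username=jdoe reason=requested
LINE_RE = re.compile(r"^(?P<timestamp>\S+) (?P<method>\S+)(?P<params>(?: \w+=\S+)*)$")


def to_document(line):
    """Turn one plaintext log line into a dict, roughly what a Logstash filter would emit."""
    m = LINE_RE.match(line.strip())
    if m is None:
        # Keep the raw text so nothing is silently lost.
        return {"message": line.strip(), "parse_failed": True}
    params = dict(pair.split("=", 1) for pair in m.group("params").split())
    return {"@timestamp": m.group("timestamp"), "method": m.group("method"), "params": params}


def to_bulk_ndjson(docs, index):
    """Serialize documents into the newline-delimited body of Elasticsearch's _bulk API."""
    out = []
    for doc in docs:
        out.append(json.dumps({"index": {"_index": index}}))  # action line
        out.append(json.dumps(doc))                            # document line
    return "\n".join(out) + "\n"  # _bulk requires a trailing newline
```

Each document is preceded by an action line naming its target index; the index name passed in is up to the caller and is not taken from our actual setup.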

More info

- Many portal webservers

- web01-web08.[cluster].[datacenter].synacor.com

- Iterate through each number (01 to 08) and each cluster

- Each of them running Filebeat locally

- Shipping logs to one central Logstash location

- Which then sends logs to one central Elasticsearch location
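The host naming scheme above is regular enough to generate. A small sketch, with made-up cluster and datacenter names, that expands the full host list (handy, for example, when checking that Filebeat is running on every box):

```python
def portal_hosts(clusters, count=8):
    """Expand web01..webNN.[cluster].[datacenter].synacor.com for each (cluster, datacenter) pair."""
    return [
        f"web{n:02d}.{cluster}.{datacenter}.synacor.com"
        for cluster, datacenter in clusters
        for n in range(1, count + 1)
    ]


if __name__ == "__main__":
    # Cluster and datacenter names here are hypothetical, for illustration only.
    for host in portal_hosts([("clusterA", "dc1"), ("clusterB", "dc1")]):
        print(host)
```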

So what can you do?

- Currently, we log all Phoenix calls this way

- Instead of grepping through 8 servers to find the exact log entry you're looking for, search in one spot on Elasticsearch!

- Currently only accessible from the command line, and only if you have the API credentials
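Searching "in one spot" boils down to a single HTTP request against the Elasticsearch `_search` endpoint. A minimal sketch using only the Python standard library follows; the endpoint, the index pattern, and the `ES_USER`/`ES_PASSWORD` environment variable names are assumptions. Reading credentials from the environment keeps them out of scripts, email, and chat.

```python
import base64
import json
import os
import urllib.request


def build_search_request(endpoint, index_pattern, query_text, size=20):
    """Build (but do not send) an Elasticsearch _search request, newest hits first.

    ES_USER / ES_PASSWORD are hypothetical environment variable names.
    """
    body = {
        "query": {"query_string": {"query": query_text}},
        "size": size,
        "sort": [{"@timestamp": {"order": "desc"}}],
    }
    creds = f"{os.environ['ES_USER']}:{os.environ['ES_PASSWORD']}"
    token = base64.b64encode(creds.encode()).decode()
    return urllib.request.Request(
        url=f"{endpoint.rstrip('/')}/{index_pattern}/_search",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )
```

To actually send it: `with urllib.request.urlopen(req) as resp: hits = json.load(resp)["hits"]["hits"]`. Keeping request construction separate from sending makes the query easy to inspect before it goes out.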

In the future:

- Log other portal-based output

- Log other services adjacent to the portal

- Improve logging format

- Actually stand up an instance of Kibana so we don't need to use command line queries anymore

Elasticsearch API Credentials

- The DBA team wants us to keep them secret and secure

- Do not transmit them over email or any on-the-record chat service (like HipChat)

- A few people have the username/password for PS's Elasticsearch (ES) user

- This is why I'd rather get Kibana stood up sooner rather than later; it's more secure that way

Limitation:

- Hard disk space

- Currently we keep 14 days' worth of logs; a cron job runs nightly to delete anything older

- If we add more services, we need to be mindful of how much disk space each one consumes

- We might just be able to ask for more storage as we need it, though
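The nightly cleanup amounts to "find the daily indices older than the retention window and delete them." A sketch of the selection logic, assuming one index per day named in the classic Logstash style (`logstash-YYYY.MM.DD`); the real index names and the actual cron script may differ. Each returned name could then be removed with Elasticsearch's delete-index API (`DELETE /<index>`).

```python
from datetime import date, datetime, timedelta


def indices_to_delete(index_names, today: date, keep_days=14, prefix="logstash-"):
    """Return daily indices outside a `keep_days` window (today counts as day 1).

    Assumes names like logstash-2017.03.01; anything that doesn't parse is kept.
    """
    oldest_kept = today - timedelta(days=keep_days - 1)
    stale = []
    for name in index_names:
        if not name.startswith(prefix):
            continue  # not one of our daily log indices
        try:
            day = datetime.strptime(name[len(prefix):], "%Y.%m.%d").date()
        except ValueError:
            continue  # can't date it, so never delete it
        if day < oldest_kept:
            stale.append(name)
    return stale
```

Skipping anything that doesn't match the expected name is a deliberate safety choice: a cleanup job should fail toward keeping data, not deleting it.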

Practical example:

- TSS ticket for the CI on-call rotation

- They ask when a user was deleted and why

- Now we have the ability to look back in time and extract what the parameters to the API call were

- Given the username to search for, we can find the call that deleted it and the parameters it was called with

- TODO: insert command line query to do this here
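A hedged sketch of what that query might look like: it builds an Elasticsearch query body that filters for deletion calls on a given username within the retention window, newest first, and prints it as JSON for piping into curl. The field names (`method`, `params.username`), the method name `deleteUser`, and the `<endpoint>`/`<index>` placeholders are all hypothetical and would need to match what the Phoenix log entries actually contain.

```python
import json


def user_deletion_query(username, since="now-14d", size=10):
    """Elasticsearch query body: calls that deleted `username`, newest first.

    Field names and the deleteUser method name are hypothetical placeholders.
    """
    return {
        "query": {
            "bool": {
                "filter": [
                    {"match": {"method": "deleteUser"}},
                    {"match": {"params.username": username}},
                    {"range": {"@timestamp": {"gte": since}}},
                ]
            }
        },
        "sort": [{"@timestamp": {"order": "desc"}}],
        "size": size,
    }


if __name__ == "__main__":
    # e.g. python query.py | curl -u "$ES_USER" -H 'Content-Type: application/json' \
    #        -d @- 'https://<endpoint>/<index>/_search'
    # (curl prompts for the password, so it never lands in shell history)
    print(json.dumps(user_deletion_query("jdoe"), indent=2))
```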

Documentation:

deck

By tdhoward
