AWS Lambda Practice

Andrii Chykharivskyi

Solution

Experience

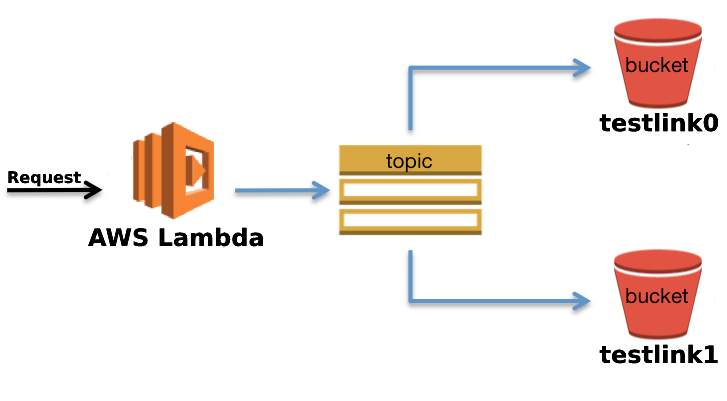

Initially, both for local development and for the CI pipeline, we used a function hosted on AWS.


Over time, we ran into major problems with this approach:

- dependence on the Internet connection

- a limited number of invocations on the free tier


What do we need?

- it should work locally

- it should work with AWS S3 (MinIO locally)

Creating


import os

from src import s3_client

from PyPDF3 import PdfFileReader


def handler(event, context):
    # Parameters passed in the invocation payload
    input_s3_bucket_name = event['input_s3_bucket_name']
    input_file_token = event['input_file_token']
    input_file_path = event['input_file_path']

    aws_bucket = s3_client.get_client().Bucket(input_s3_bucket_name)

    # /tmp is the only writable directory inside the Lambda environment
    local_directory = os.path.join('/tmp', input_file_token)
    os.makedirs(local_directory, exist_ok=True)
    local_path = os.path.join(local_directory, 'input.pdf')

    aws_bucket.download_file(input_file_path, local_path)

    # Close the file as soon as the page count is read
    with open(local_path, 'rb') as pdf_file:
        pages_count = PdfFileReader(pdf_file).getNumPages()

    return {
        "statusCode": 200,
        "pagesCount": pages_count,
    }

Get PDF file pages count
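The handler reads the three payload keys directly, so a missing key ends in a bare `KeyError`. The sketch below is a hypothetical helper (the names `REQUIRED_KEYS`, `missing_keys` and `bad_request` are not part of the original code) showing one way to reject an incomplete payload with a 400-style response before touching S3.

```python
# Hypothetical validation helper; the original handler reads the keys directly.
REQUIRED_KEYS = ("input_s3_bucket_name", "input_file_token", "input_file_path")


def missing_keys(event):
    """Return the required payload keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not event.get(key)]


def bad_request(event):
    """Return a 400-style response for an incomplete payload, otherwise None."""
    missing = missing_keys(event)
    if missing:
        return {"statusCode": 400, "error": "missing keys: " + ", ".join(missing)}
    return None
```

Inside `handler`, this would be called first: `error = bad_request(event)` and `if error: return error`.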


FROM public.ecr.aws/lambda/python:3.8

# Copy function code
COPY src/app.py ${LAMBDA_TASK_ROOT}

# Install the function's dependencies using file requirements.txt
# from your project folder.
COPY requirements.txt .
RUN pip3 install -r requirements.txt --target "${LAMBDA_TASK_ROOT}"

ADD . .

ARG AWS_ACCESS_KEY_ID
ARG AWS_SECRET_ACCESS_KEY
ARG AWS_DEFAULT_REGION
ARG AWS_S3_ENDPOINT_URL

ENV AWS_ACCESS_KEY_ID=${AWS_ACCESS_KEY_ID}
ENV AWS_SECRET_ACCESS_KEY=${AWS_SECRET_ACCESS_KEY}
ENV AWS_DEFAULT_REGION=${AWS_DEFAULT_REGION}
ENV AWS_S3_ENDPOINT_URL=${AWS_S3_ENDPOINT_URL}

# Set the CMD to your handler (could also be done as a parameter override outside of the Dockerfile)
CMD [ "app.handler" ]

File Dockerfile


version: '3.7'

services:
  pdf-pages:
    build:
      context: ./
      dockerfile: Dockerfile
    restart: unless-stopped
    environment:
      AWS_ACCESS_KEY_ID: ${AWS_ACCESS_KEY_ID:-tenantcloud}
      AWS_SECRET_ACCESS_KEY: ${AWS_SECRET_ACCESS_KEY:-tenantcloud}
      AWS_DEFAULT_REGION: ${AWS_DEFAULT_REGION:-us-east-1}
      AWS_S3_ENDPOINT_URL: ${AWS_S3_ENDPOINT_URL}
    volumes:
      - ./src:/var/task/src
    ports:
      - "${DOCKER_PDF_PAGES_PORT:-3000}:8080"
    networks:
      home:

networks:
  home:
    name: "${COMPOSE_PROJECT_NAME:-tc-lambda}_network"

File docker-compose.yml


networks:
  home:
    name: "${COMPOSE_PROJECT_NAME:-tc-lambda}_network"

File docker-compose.yml

If MinIO is deployed in this same network, it is reachable from inside our function's container.


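import os

import boto3
from botocore.config import Config

s3_endpoint_url = os.getenv('AWS_S3_ENDPOINT_URL')


def get_client():
    # Use a custom endpoint (e.g. local MinIO) only when AWS_S3_ENDPOINT_URL is set
    if s3_endpoint_url:
        return boto3.resource(
            's3',
            endpoint_url=s3_endpoint_url,
            config=Config(signature_version='s3v4'),
            # Skip TLS verification: the local MinIO certificate is not publicly trusted
            verify=False,
        )
    # Otherwise fall back to the default AWS S3 endpoint
    return boto3.resource('s3')

Updated S3 client with MinIO support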


version: '3.7'

services:
  minio:
    image: ${REGISTRY:-registry.tenants.co}/minio:RELEASE.2020-10-28T08-16-50Z-48-ge773e06e5
    restart: unless-stopped
    entrypoint: sh
    command: -c "minio server --address :443 --certs-dir /root/.minio /export"
    environment:
      MINIO_ACCESS_KEY: ${AWS_ACCESS_KEY_ID:-tenantcloud}
      MINIO_SECRET_KEY: ${AWS_SECRET_ACCESS_KEY:-tenantcloud}
    volumes:
      - ${DOCKER_MINIO_KEY:-./docker/ssl/tc.loc.key}:/root/.minio/private.key
      - ${DOCKER_MINIO_CRT:-./docker/ssl/tc.loc.crt}:/root/.minio/public.crt
    networks:
      home:
        aliases:
          - minio.${HOST}

networks:
  home:
    name: "${COMPOSE_PROJECT_NAME:-tc-lambda}_network"

File docker-compose.minio.yml


Invoking the function in the Docker container after the build:

curl -XPOST "http://localhost:3000/2015-03-31/functions/function/invocations" -d '{
  "input_s3_bucket_name": "bucketname",
  "input_file_token": "randomstring",
  "input_file_path": "tmp/original.pdf"
}'

Port 3000 comes from DOCKER_PDF_PAGES_PORT in docker-compose.yml (mapped to port 8080 inside the container).
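The same local invocation can be scripted without curl, for example from integration tests. This is a minimal sketch using only the Python standard library; it assumes `DOCKER_PDF_PAGES_PORT` keeps its default of 3000 and that the container answers on the Runtime Interface Emulator path used above. `INVOKE_URL`, `build_invoke_request` and `invoke` are illustrative names.

```python
import json
import urllib.request

# Assumption: DOCKER_PDF_PAGES_PORT keeps its default value of 3000.
INVOKE_URL = "http://localhost:3000/2015-03-31/functions/function/invocations"


def build_invoke_request(payload, url=INVOKE_URL):
    """Build (but do not send) a POST request carrying the JSON payload."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def invoke(payload, url=INVOKE_URL, timeout=30):
    """Send the request and decode the JSON returned by the handler."""
    request = build_invoke_request(payload, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the container running, `invoke({"input_s3_bucket_name": "bucketname", "input_file_token": "randomstring", "input_file_path": "tmp/original.pdf"})` should return the handler's dictionary, including `pagesCount`.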


S3 dependency

Previously, we used a separate bucket for each function.

Disadvantages of this approach:

- a new function is needed for every application that uses its own bucket


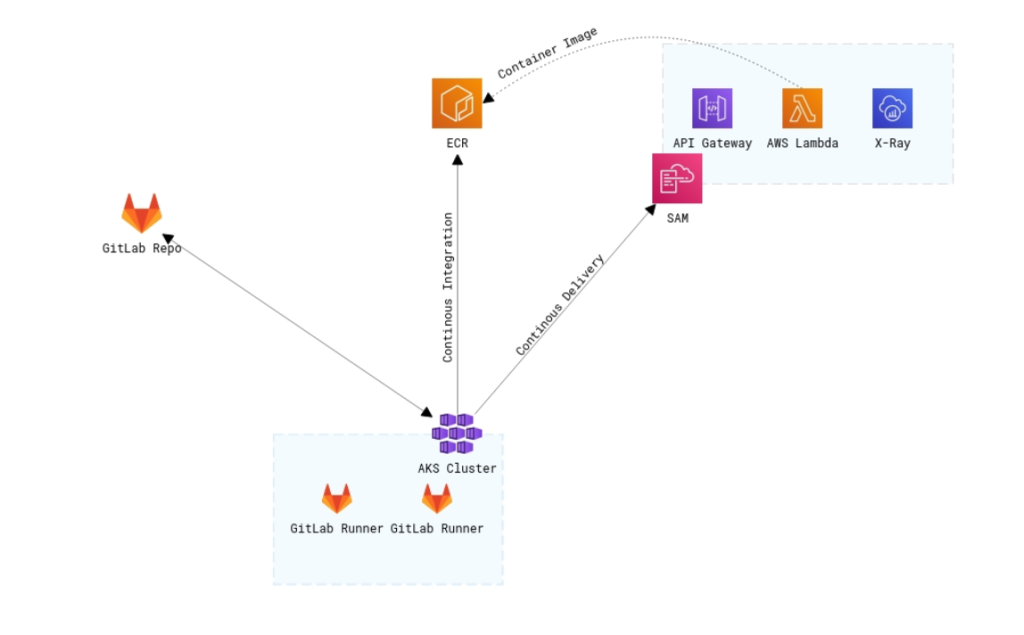

CI/CD

Build. File .gitlab-ci.yml

image: ${REGISTRY}/docker-pipeline:19.03.15-dind

variables:
  FF_USE_FASTZIP: 1
  FF_SCRIPT_SECTIONS: 1
  COMPOSE_DOCKER_CLI_BUILD: 1
  DOCKER_BUILDKIT: 1
  DOCKER_CLIENT_TIMEOUT: 300
  COMPOSE_HTTP_TIMEOUT: 300
  COMPOSE_FILE: "docker-compose.yml:docker-compose.minio.yml"
  REPOSITORY_NAME: $CI_PROJECT_NAME
  SOURCE_BRANCH_NAME: $CI_COMMIT_BRANCH
  SLACK_TOKEN: $DASHBOARD_SLACK_TOKEN
  COMPOSE_PROJECT_NAME: ${CI_JOB_ID}
  GIT_CLONE_PATH: $CI_BUILDS_DIR/$CI_PROJECT_NAME/$CI_JOB_ID
  SLACK_CHANNEL: "#updates"

stages:
  - build
  - deploy

Build. File .gitlab-ci.yml

Build container:
  <<: *stage_build
  tags: ["dind_pipeline1"]
  extends:
    - .dind_service
  script:
    - sh/build.sh
  rules:
    - if: $CI_PIPELINE_SOURCE == 'push' && ($CI_COMMIT_BRANCH == 'master')
      when: on_success

Build. File sh/build.sh

function getLatestTag() {
    # Get your latest version and increment it into NEW_VERSION
    # (implementation omitted in the slides; ":" keeps the function valid bash)
    :
}

function ecrLogin() {
    # Login to AWS ECR (implementation omitted in the slides)
    :
}

# Login to Production account
ecrLogin "$AWS_ACCESS_KEY_ID" \
    "$AWS_SECRET_ACCESS_KEY" \
    "$AWS_DEFAULT_REGION" \
    "$AWS_ACCOUNT_ID"

function pushContainer() {
    local account_id=$1
    # Use the same region as the build tag below
    docker push "${account_id}.dkr.ecr.${AWS_DEFAULT_REGION}.amazonaws.com/${REPOSITORY_NAME}:${NEW_VERSION}"
}

# NEW_VERSION must be set before tagging the image
getLatestTag

echo "Building containers..."
docker build \
    --tag "${AWS_ACCOUNT_ID}.dkr.ecr.${AWS_DEFAULT_REGION}.amazonaws.com/${REPOSITORY_NAME}:${NEW_VERSION}" .

echo "Pushing container to ECR..."
pushContainer "$AWS_ACCOUNT_ID"
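The body of `getLatestTag` is not shown in the slides. As a hypothetical illustration only, assuming tags of the form `v1`, `v2`, … (the form the deploy step's `sed 's/^.//'` expects), the "take the latest version and increment it" rule can be expressed like this; the list of existing tags could come from `git tag` output, for example. `TAG_PATTERN` and `next_tag` are illustrative names.

```python
import re

# Assumption: release tags look like "v<number>"; other tags are ignored.
TAG_PATTERN = re.compile(r"v(\d+)")


def next_tag(existing_tags):
    """Return the tag following the highest vN tag, or "v1" if there is none."""
    numbers = []
    for tag in existing_tags:
        match = TAG_PATTERN.fullmatch(tag)
        if match:
            numbers.append(int(match.group(1)))
    return "v{}".format(max(numbers, default=0) + 1)
```

Comparing numbers instead of strings matters here: sorted as text, `v10` would come before `v9`.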

Deploy. File .gitlab-ci.yml

Deploy to production:
  <<: *stage_deploy
  tags: ["dind_pipeline1"]
  needs:
    - job: Build container
  extends:
    - .dind_service
  script:
    - sh/deploy.sh
  after_script:
    - |
      if [ "$CI_JOB_STATUS" = 'success' ]; then
        echo "$SLACK_TOKEN" > /usr/local/bin/.slack
        slack chat send --color good ":bell: $REPOSITORY_NAME function updated" "$SLACK_CHANNEL"
      fi
  rules:
    - if: $CI_PIPELINE_SOURCE == 'push' && ($CI_COMMIT_BRANCH == 'master')
      when: manual


Deploy. File sh/deploy.sh

#!/bin/bash
# shellcheck disable=SC2034,SC2001
set -e

export AWS_ACCESS_KEY_ID="$AWS_ACCESS_KEY_ID"
export AWS_SECRET_ACCESS_KEY="$AWS_SECRET_ACCESS_KEY"
export AWS_DEFAULT_REGION="$AWS_DEFAULT_REGION"
export AWS_ACCOUNT_ID="$AWS_ACCOUNT_ID"

# Update lambda function
echo "Update lambda function..."
aws lambda update-function-code \
    --function-name "$REPOSITORY_NAME" \
    --image-uri "${AWS_ACCOUNT_ID}.dkr.ecr.${AWS_DEFAULT_REGION}.amazonaws.com/${REPOSITORY_NAME}"


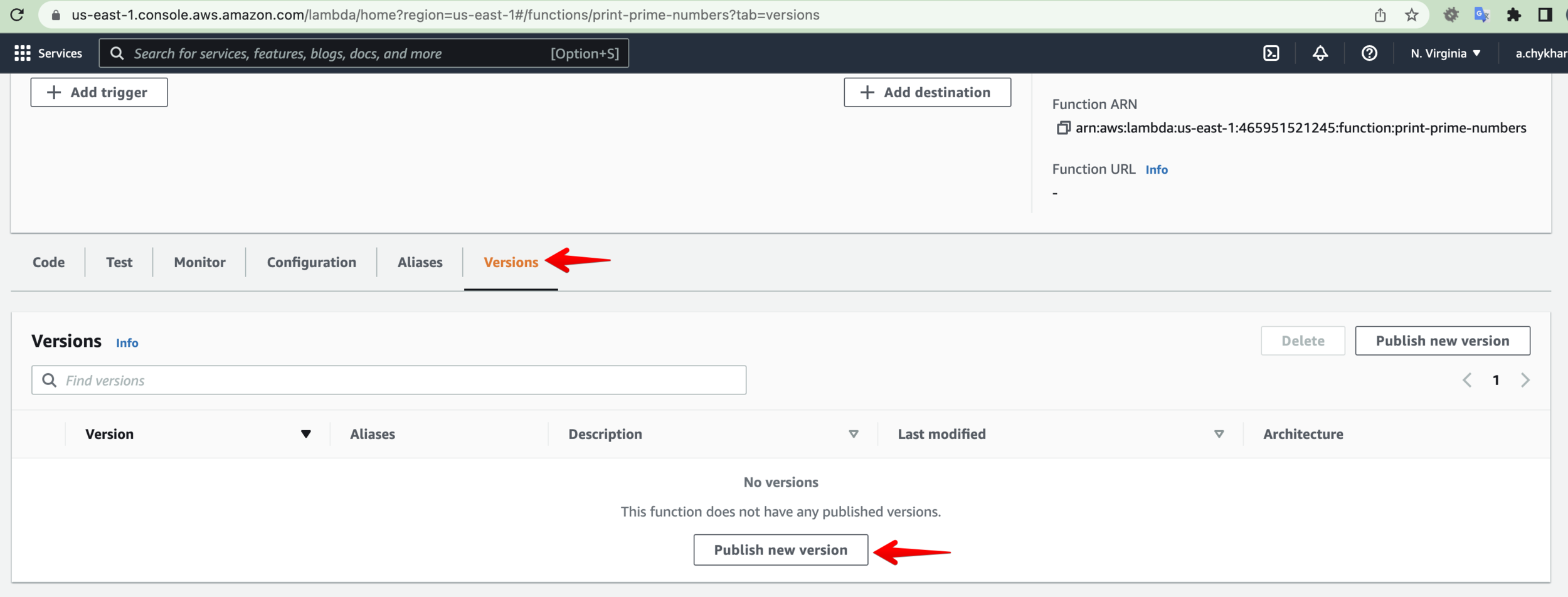

Versioning

Lambda lets you publish one or more immutable versions of a function. Each function version has its own unique ARN.


Where to find?


Advantages:

- Lambda lets you attach a description to each published version.

- Function consumers can keep using a previous version without any disruption.


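ARN

ARN (Amazon Resource Name) is a unique identifier of a resource in the AWS Cloud. For example:

arn:aws:lambda:us-east-1:465951521245:function:print-prime-numbers:1

Here us-east-1 is the region, 465951521245 is the account ID, function:print-prime-numbers is the resource (its type and the function name), and the trailing 1 is the version.

When the version is included, the ARN is called qualified. When the version is omitted, it is called unqualified.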
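To make the qualified/unqualified distinction concrete, here is a small sketch that splits a Lambda function ARN into its parts. It assumes the standard `aws` partition and the `arn:aws:lambda:<region>:<account-id>:function:<name>[:<qualifier>]` layout; per the Lambda documentation the optional qualifier can be a version number or an alias name. `parse_lambda_arn` and `is_qualified` are illustrative names.

```python
def parse_lambda_arn(arn):
    """Split a Lambda function ARN into region, account ID, name and qualifier.

    Assumes the standard "aws" partition:
    arn:aws:lambda:<region>:<account-id>:function:<name>[:<qualifier>]
    """
    parts = arn.split(":")
    if len(parts) not in (7, 8) or parts[:3] != ["arn", "aws", "lambda"] or parts[5] != "function":
        raise ValueError("not a Lambda function ARN: " + arn)
    return {
        "region": parts[3],
        "account_id": parts[4],
        "name": parts[6],
        # Version number or alias name; None means the ARN is unqualified
        "qualifier": parts[7] if len(parts) == 8 else None,
    }


def is_qualified(arn):
    """True when the ARN ends with a version (or alias) qualifier."""
    return parse_lambda_arn(arn)["qualifier"] is not None
```

For the example above, `parse_lambda_arn("arn:aws:lambda:us-east-1:465951521245:function:print-prime-numbers:1")["qualifier"]` is `"1"`; dropping the trailing `:1` gives `None`.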


Disadvantages:

- version numbers can only be incremented (inconvenient for hotfixes)

- versions alone can't split traffic between different versions


Reminder.

Don't forget that a published version is fully immutable (including its configuration).


Aliases.

- each alias points to a specific function version

- an alias name can be any string of letters, digits, hyphens and underscores, but not digits only

- an alias cannot point to another alias, only to a function version

- aliases are useful for routing traffic to new versions after proper testing
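Because the deploy script below uses the release tag itself as the alias name, it helps to check the tag against Lambda's alias naming rule up front. This sketch encodes the rule as we understand it from the Lambda API reference for `CreateAlias` (1 to 128 characters from `a-z`, `A-Z`, `0-9`, `-`, `_`, and not purely numeric, so an alias can't be confused with a version); treat the exact limits as an assumption to verify against the current docs.

```python
import re

# Assumed rule (Lambda CreateAlias "Name"): 1-128 chars of [a-zA-Z0-9_-], not digits only
ALIAS_CHARS = re.compile(r"[a-zA-Z0-9_-]{1,128}")


def is_valid_alias_name(name):
    """Return True if `name` can be used as a Lambda alias name."""
    return ALIAS_CHARS.fullmatch(name) is not None and not name.isdigit()
```

A tag like `v3` passes, while `3` (looks like a version) and `v1.2.3` (contains dots) are rejected.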


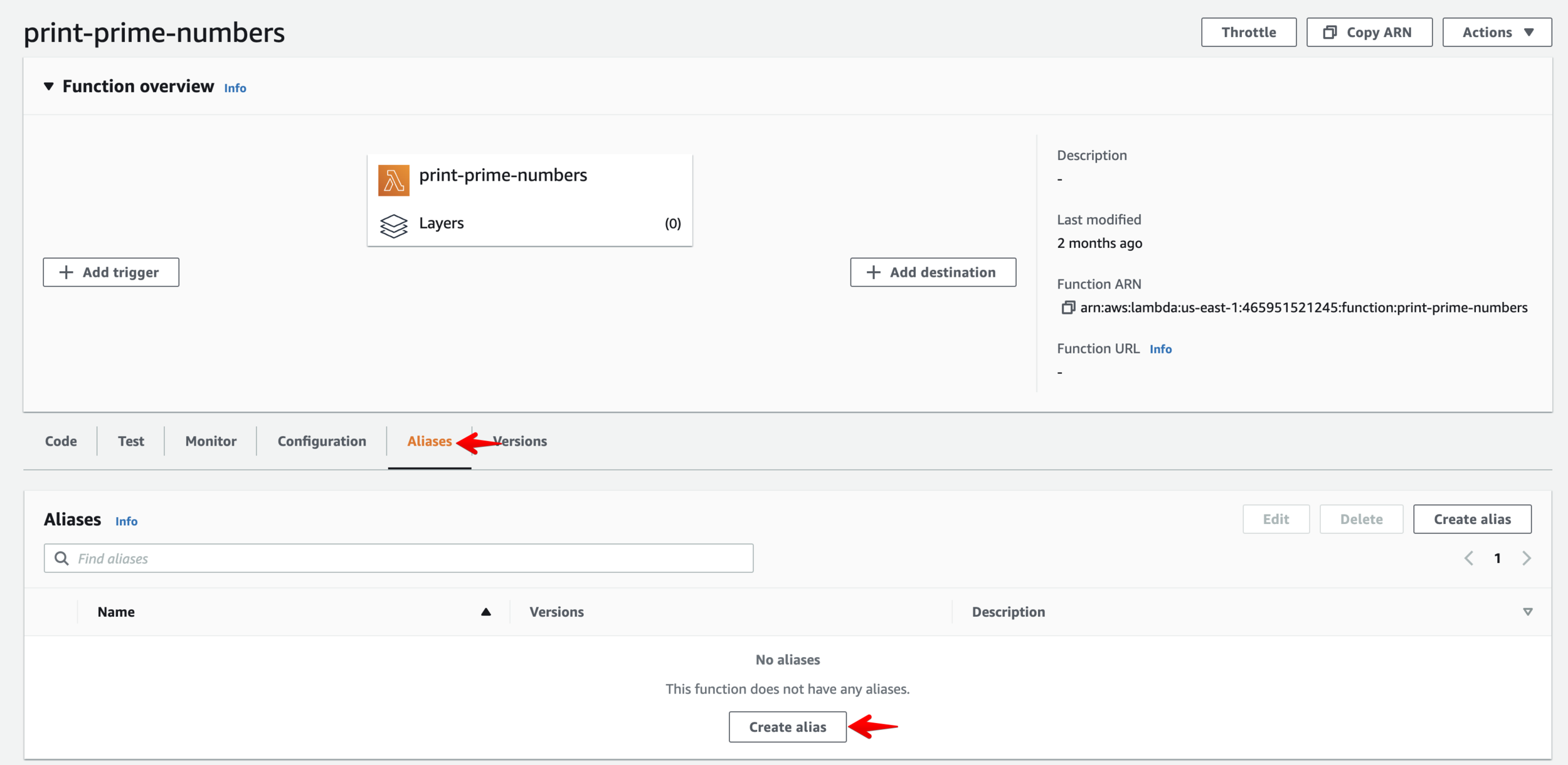


Implementation.

# Update lambda function
echo "Update lambda function..."
aws lambda update-function-code \
    --function-name "$REPOSITORY_NAME" \
    --image-uri "${AWS_ACCOUNT_ID}.dkr.ecr.${AWS_DEFAULT_REGION}.amazonaws.com/${REPOSITORY_NAME}:${CURRENT_VERSION}"


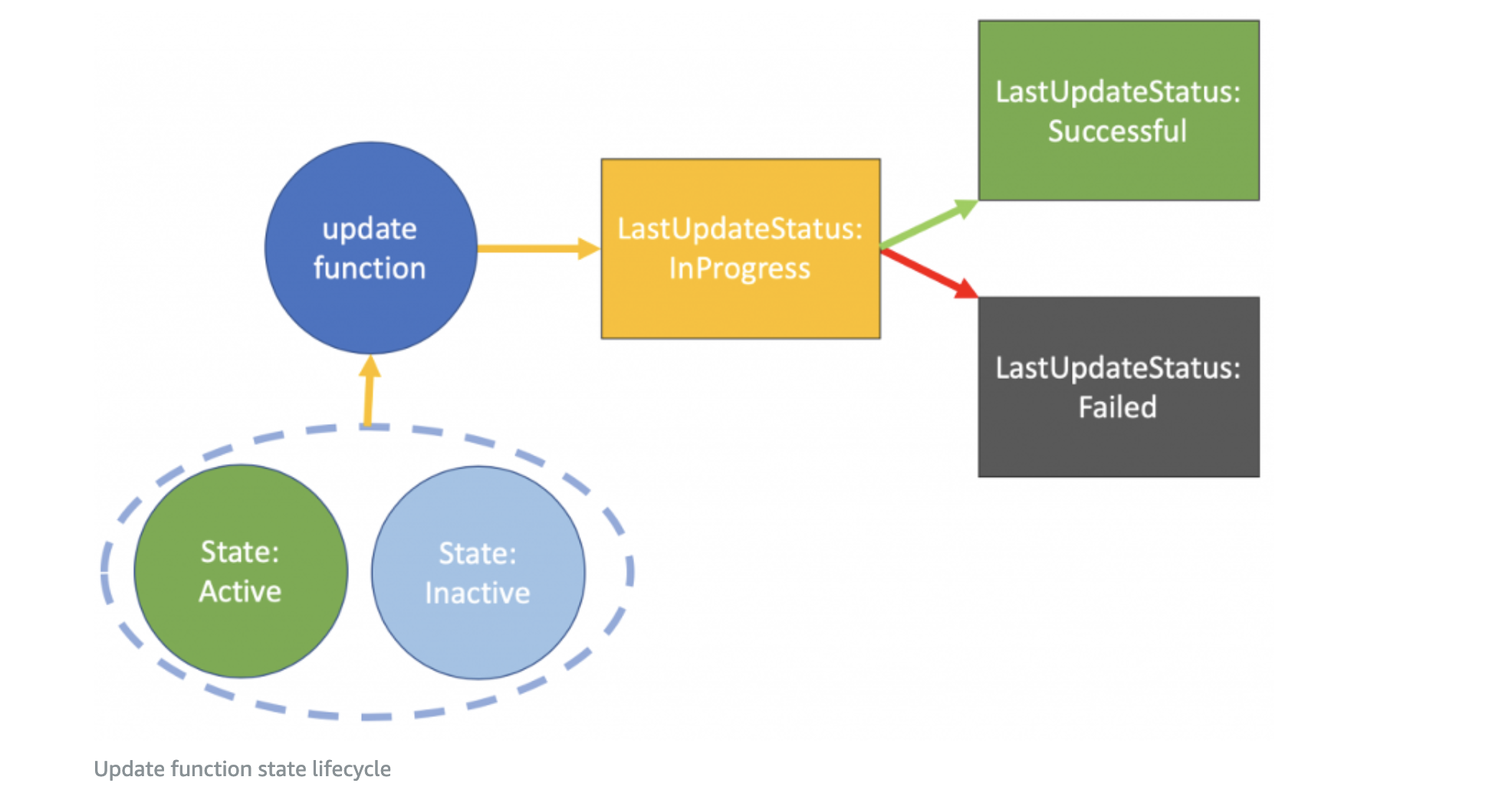

Implementation. Blocking.

# Wait for the deployment to finish successfully
echo "Waiting for the lambda function update to finish..."
aws lambda wait function-updated --function-name "$REPOSITORY_NAME"

You cannot publish a new version while the function's update status is "InProgress".
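What the blocking step does can be sketched as a simple polling loop: read the function's `LastUpdateStatus` until it leaves `InProgress`. This is an illustration, not the AWS CLI's implementation; the status reader is injected so the sketch runs without AWS. With boto3 one could pass `lambda: client.get_function_configuration(FunctionName=name)["LastUpdateStatus"]`, or use boto3's own `client.get_waiter("function_updated")`. The delay and attempt defaults below are arbitrary.

```python
import time


def wait_for_update(get_status, delay=5.0, max_attempts=60, sleep=time.sleep):
    """Poll until the function's last update is no longer "InProgress".

    get_status: callable returning LastUpdateStatus
    ("InProgress", "Successful" or "Failed").
    """
    for _ in range(max_attempts):
        status = get_status()
        if status == "Successful":
            return status
        if status == "Failed":
            raise RuntimeError("function update failed")
        # Still "InProgress": publishing now would be rejected, so wait and retry
        sleep(delay)
    raise TimeoutError("update still in progress after {} attempts".format(max_attempts))
```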


Implementation. Publishing.

# Publish new lambda function version
echo "Publish new lambda function version..."
aws lambda publish-version \
    --function-name "$REPOSITORY_NAME" \
    --description "${CURRENT_VERSION}"

# Create new alias
echo "Create new lambda function alias..."
# Strip the first character of the tag (e.g. v3 -> 3) to get the function version
FUNCTION_VERSION=$(echo "$CURRENT_VERSION" | sed 's/^.//')
aws lambda create-alias \
    --function-name "$REPOSITORY_NAME" \
    --description "$CURRENT_VERSION" \
    --function-version "$FUNCTION_VERSION" \
    --name "$CURRENT_VERSION"
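The `sed 's/^.//'` step only works if release tags look like `vN` and `N` matches the number Lambda assigns on publish. The sketch below mirrors that step in Python and fails loudly otherwise; `function_version_from_tag` is an illustrative name. An alternative that avoids the assumption is to read the number from the publish call itself, e.g. `aws lambda publish-version ... --query Version --output text`.

```python
def function_version_from_tag(tag):
    """Mirror `sed 's/^.//'`: drop the first character of the tag (v3 -> 3)."""
    version = tag[1:]
    # Lambda version numbers are plain integers, so anything else cannot be used
    if not version.isdigit():
        raise ValueError("tag {!r} does not map to a Lambda version number".format(tag))
    return version
```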

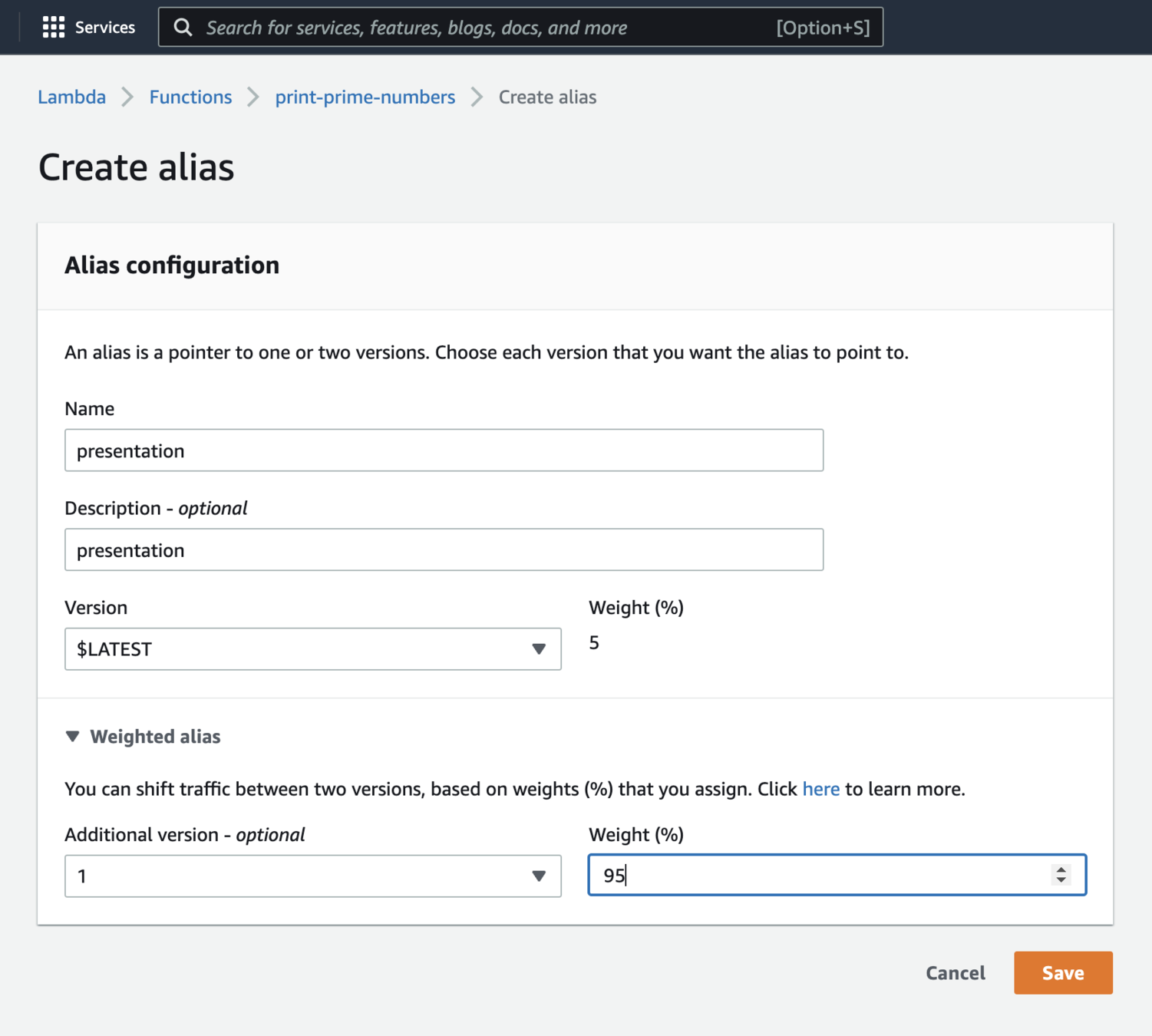

Traffic shifting


Advantages:

- makes it easy to implement a rolling or canary deployment strategy (the current and new versions coexist, each receiving a share of traffic)

- the development team can catch possible issues before the new version receives all of the traffic
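A Lambda alias can send a weighted share of traffic to one additional version, for example with `aws lambda update-alias --function-name my-function --name live --routing-config AdditionalVersionWeights={"2"=0.1}` (names here are placeholders). The sketch below models that split as a weighted coin flip per invocation; it illustrates the idea of a canary share, not how AWS implements routing internally. `pick_version` is an illustrative name.

```python
import random


def pick_version(primary, additional, weight, rnd=random.random):
    """Choose the version serving one invocation.

    `additional` receives roughly `weight` (0..1) of the traffic and
    `primary` receives the rest, as in a canary deployment.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0 and 1")
    return additional if rnd() < weight else primary
```

Raising `weight` step by step (0.1, then 0.5, then 1.0) while watching errors is the canary/rolling pattern described above; once the new version takes all traffic, the alias can simply point at it.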

Credits to Maksym Bilozub and Viktor Fedkiv


By TenantCloud


AWS Lambda, serverless architecture. Function-as-a-Service.
