Kubernetes Dynamic Log Tailing Utility

Mahmut Bulut

- Rust & Haskell Contributor

- Tokamak Author

- Engineer at Delivery Hero

- Topics: Embedded Systems, ML, Network Intelligence, Data Eng., DSP

@vertexclique

vertexclique

What's KØRQ?

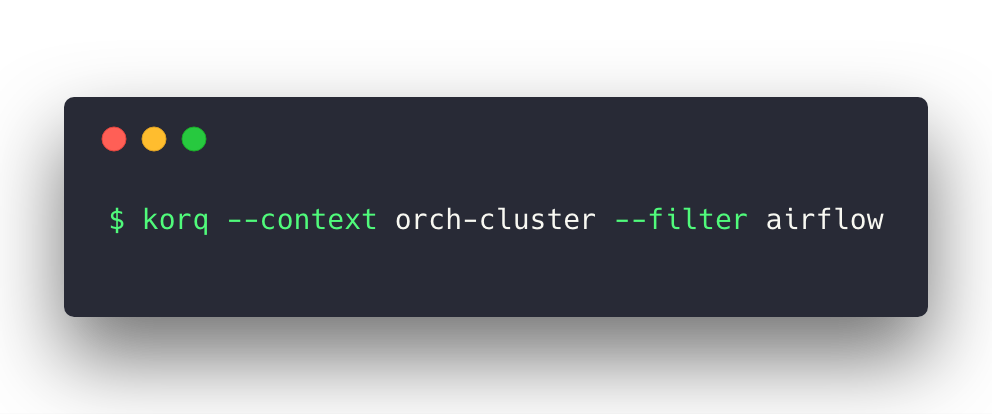

A Kubernetes utility for tailing pod logs across the cluster, with filtering down to individual containers. It will be extended to do more.

What was the motivation?

- The tool we used at work was not efficient enough for watching deployments.

- Some of our pods were composed of multiple containers, which needed to be watched, sometimes individually.

- Tail all pods concurrently when needed, without fighting the Kubernetes API or launching a bunch of commands.

- I missed writing Rust at work (I wrote a machine learning model server and tooling for the company, and nothing after that).

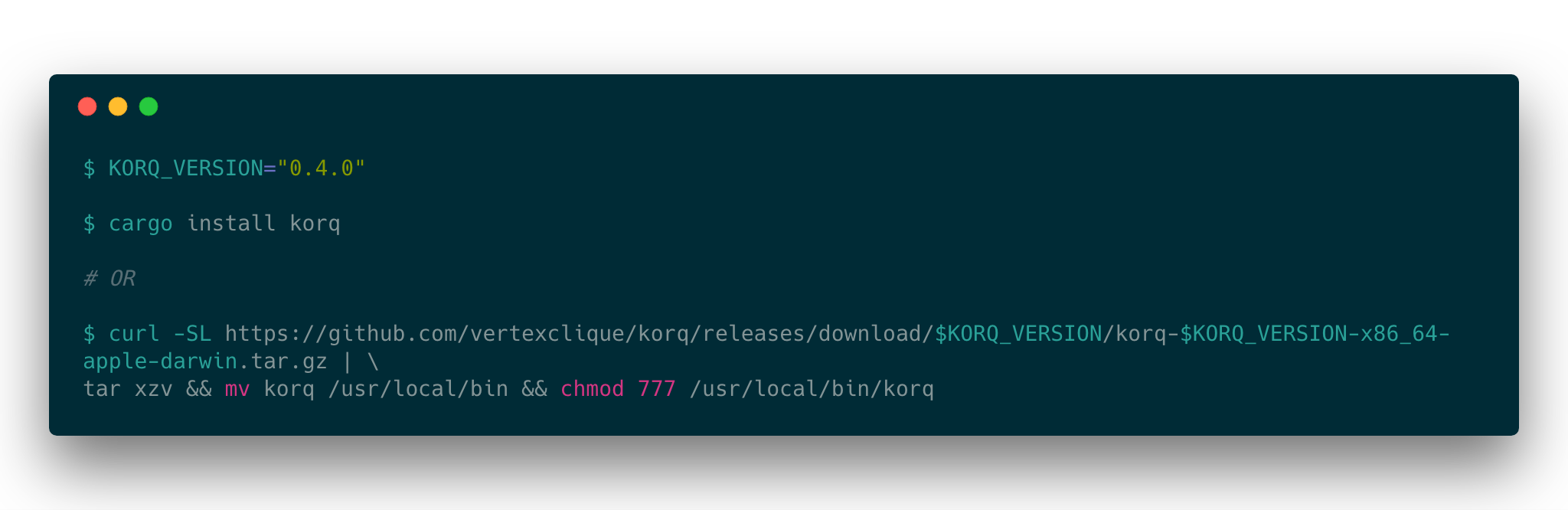

Requirements:

- Latest stable Rust (or download a binary for your platform from the project page; currently macOS and Linux)

- Kubernetes installation (>= 1.9)

What do I need for KØRQ?

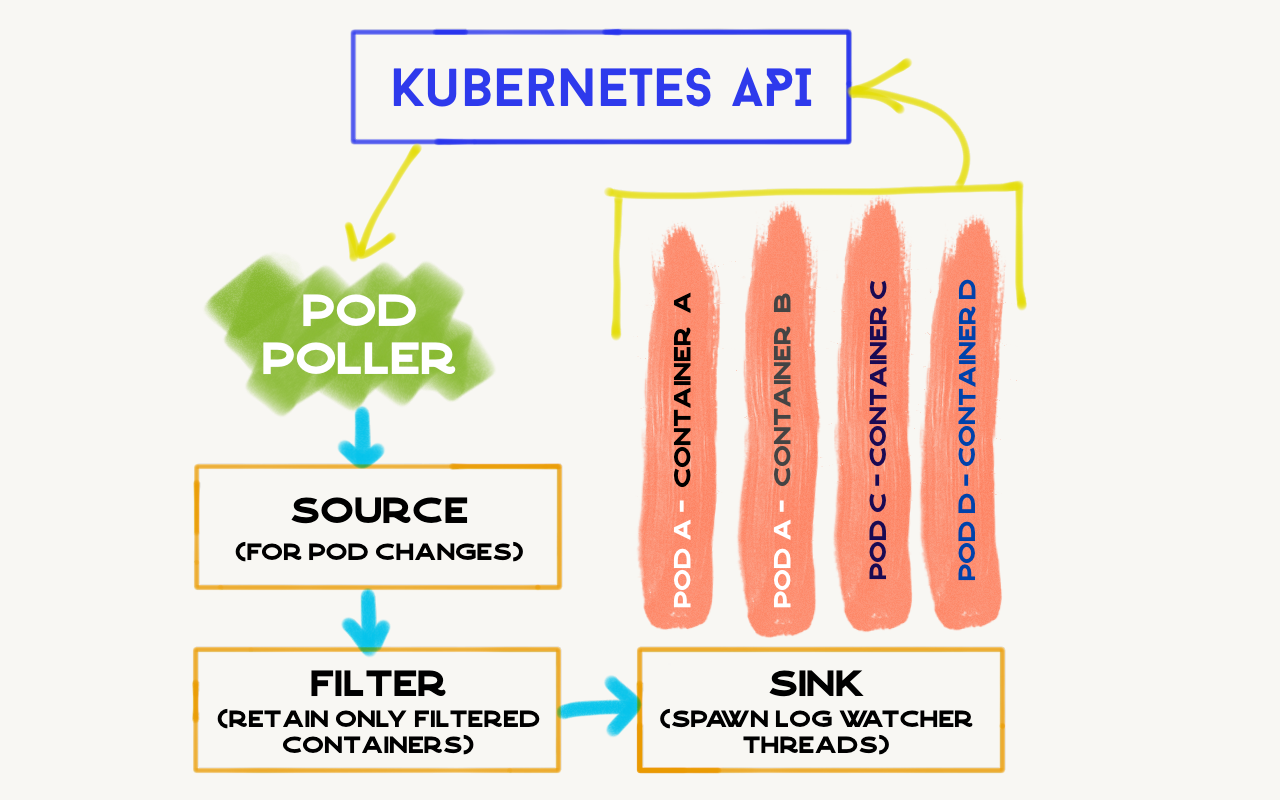

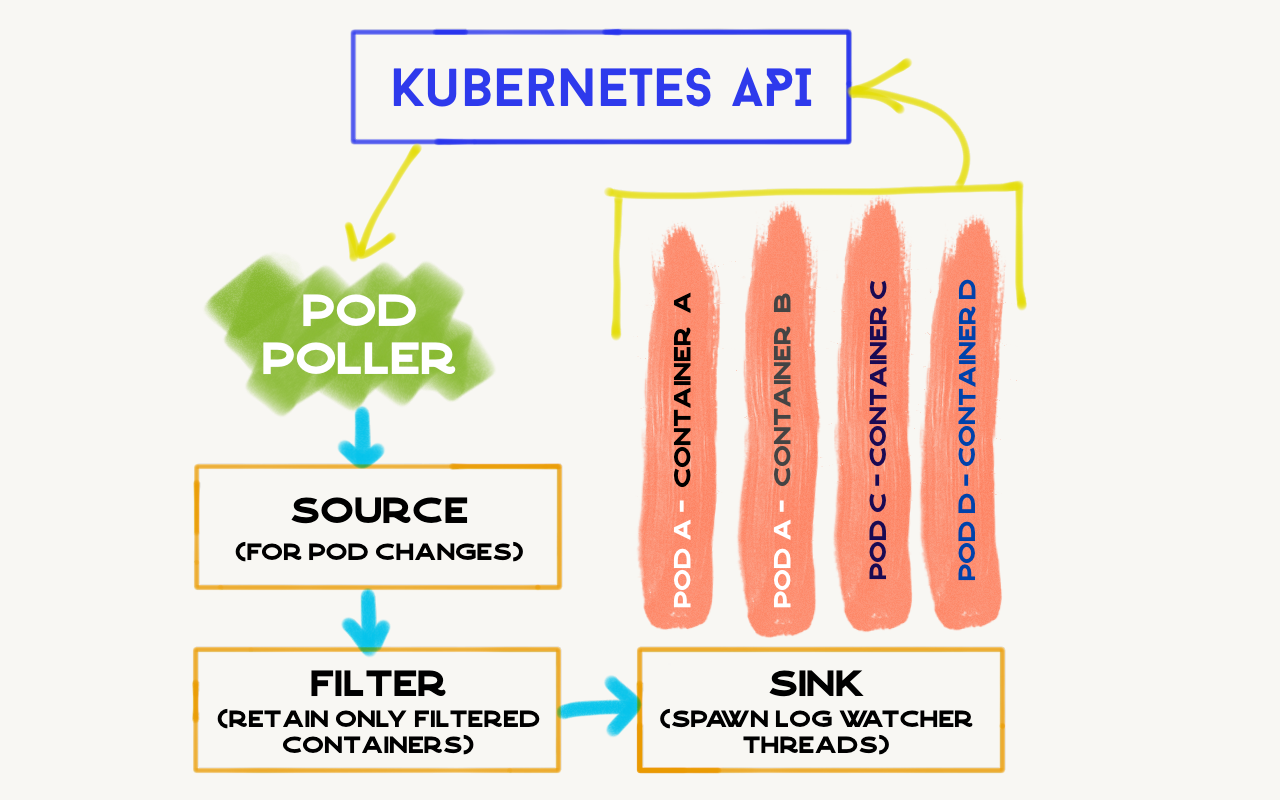

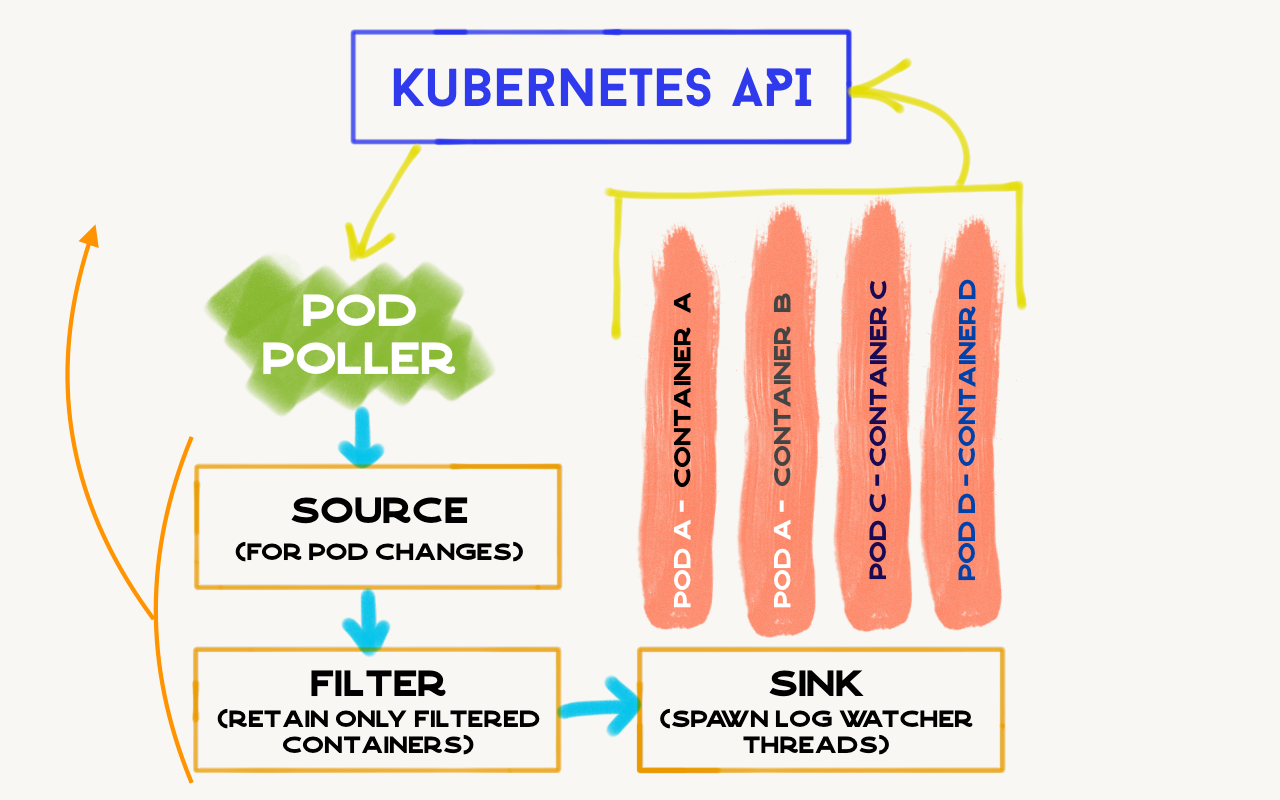

How does this work?

- Polls periodically

- Consumes HTTP streams continuously

- The Carboxyl crate supplies the reactive application elements (internally it uses `std::sync::mpsc::*`)
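To make the streaming half of this concrete, here is a minimal sketch of the fan-in idea using plain `std::sync::mpsc` rather than Carboxyl, so it is not KØRQ's actual code. One thread per pod reads its log stream line by line and pushes tagged lines into a single channel, which the main thread drains. `tail_all` and `LogLine` are hypothetical names, and in-memory `Cursor`s stand in for the HTTP response bodies a real client would read.

```rust
use std::io::{BufRead, BufReader, Cursor, Read};
use std::sync::mpsc::{self, Receiver};
use std::thread;

/// A single log line tagged with the pod it came from (hypothetical type).
#[derive(Debug, Clone, PartialEq)]
pub struct LogLine {
    pub pod: String,
    pub line: String,
}

/// Spawns one reader thread per stream and fans every line into one channel.
/// Each reader stands in for a streaming HTTP body (assumption: in a real
/// client this would be the response of a follow-mode log request).
pub fn tail_all<R>(streams: Vec<(String, R)>) -> Receiver<LogLine>
where
    R: Read + Send + 'static,
{
    let (tx, rx) = mpsc::channel();
    for (pod, reader) in streams {
        let tx = tx.clone();
        thread::spawn(move || {
            // `lines()` hands back each line as soon as its newline is read.
            for line in BufReader::new(reader).lines() {
                let Ok(line) = line else { break };
                // Stop reading if the consumer has gone away.
                if tx.send(LogLine { pod: pod.clone(), line }).is_err() {
                    break;
                }
            }
        });
    }
    // Drop our own sender so the receiver ends once every reader finishes.
    drop(tx);
    rx
}

fn main() {
    // In-memory stand-ins for two pods' log streams.
    let streams = vec![
        ("web-1".to_string(), Cursor::new("started\nlistening on :8080\n")),
        ("worker-1".to_string(), Cursor::new("connected to queue\n")),
    ];
    for log in tail_all(streams) {
        println!("[{}] {}", log.pod, log.line);
    }
}
```

Lines from different pods interleave in arrival order, while lines from the same pod keep their original order because each pod has exactly one reader thread.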

Initial Development

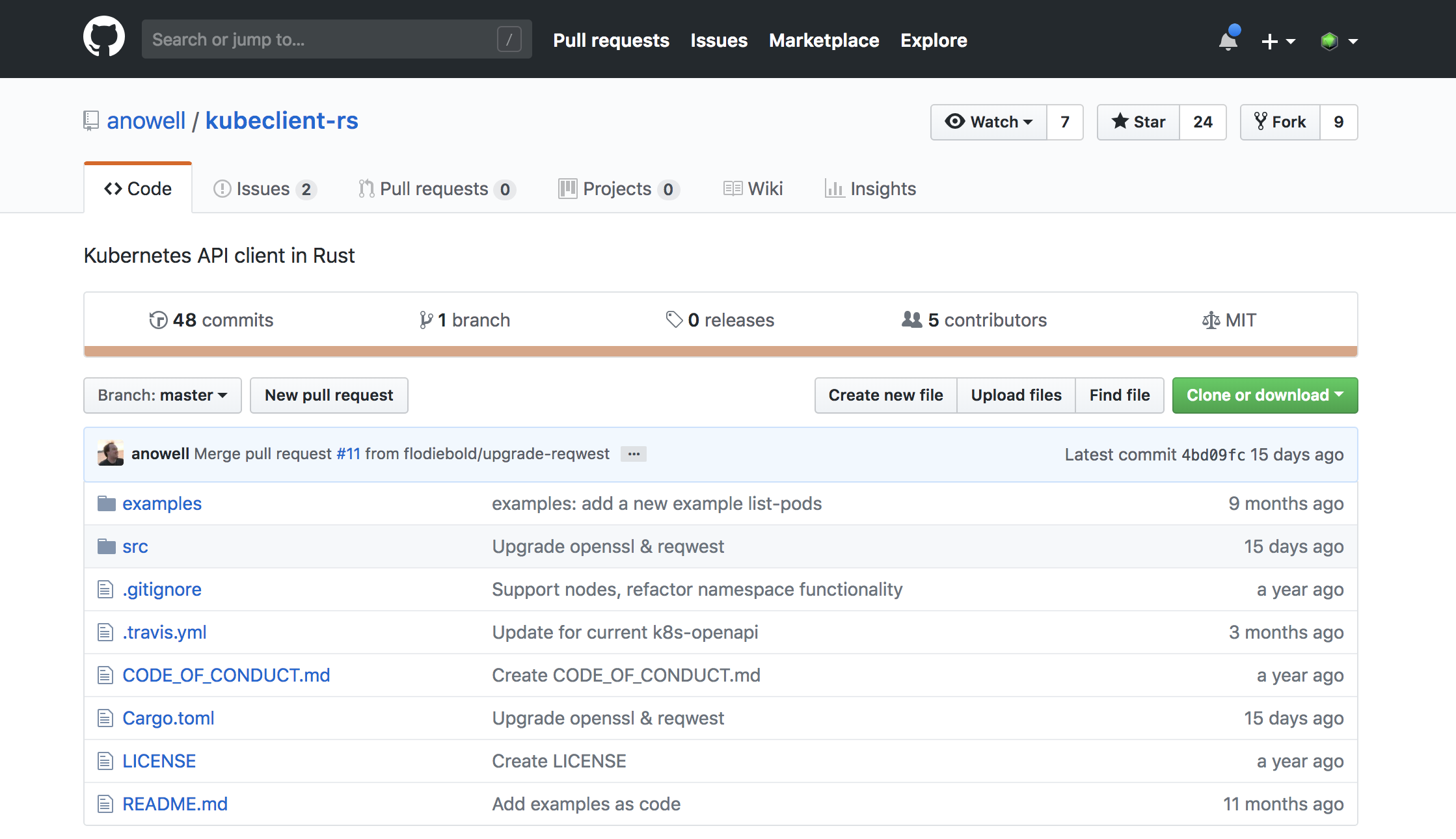

- There is no official K8S client written in Rust.

- People generated client "impl"s from the K8S OpenAPI spec.

- They were still incomplete.

Client Selection

THE ONLY ONE THAT HAS A GOOD API!

"Oh Snap!" Moments

- I forked kubeclient; it didn't seem very active.

- Looking through the code, its dependencies were out of date.

- To use HTTP streaming, I needed to write a lot of code and update the dependencies.

- Then I realized it also lacked some authentication methods (Kubernetes OIDC (OpenID Connect) auth, which is what GKE uses by default).

Then came QUBE.

QUBE

When it was done…

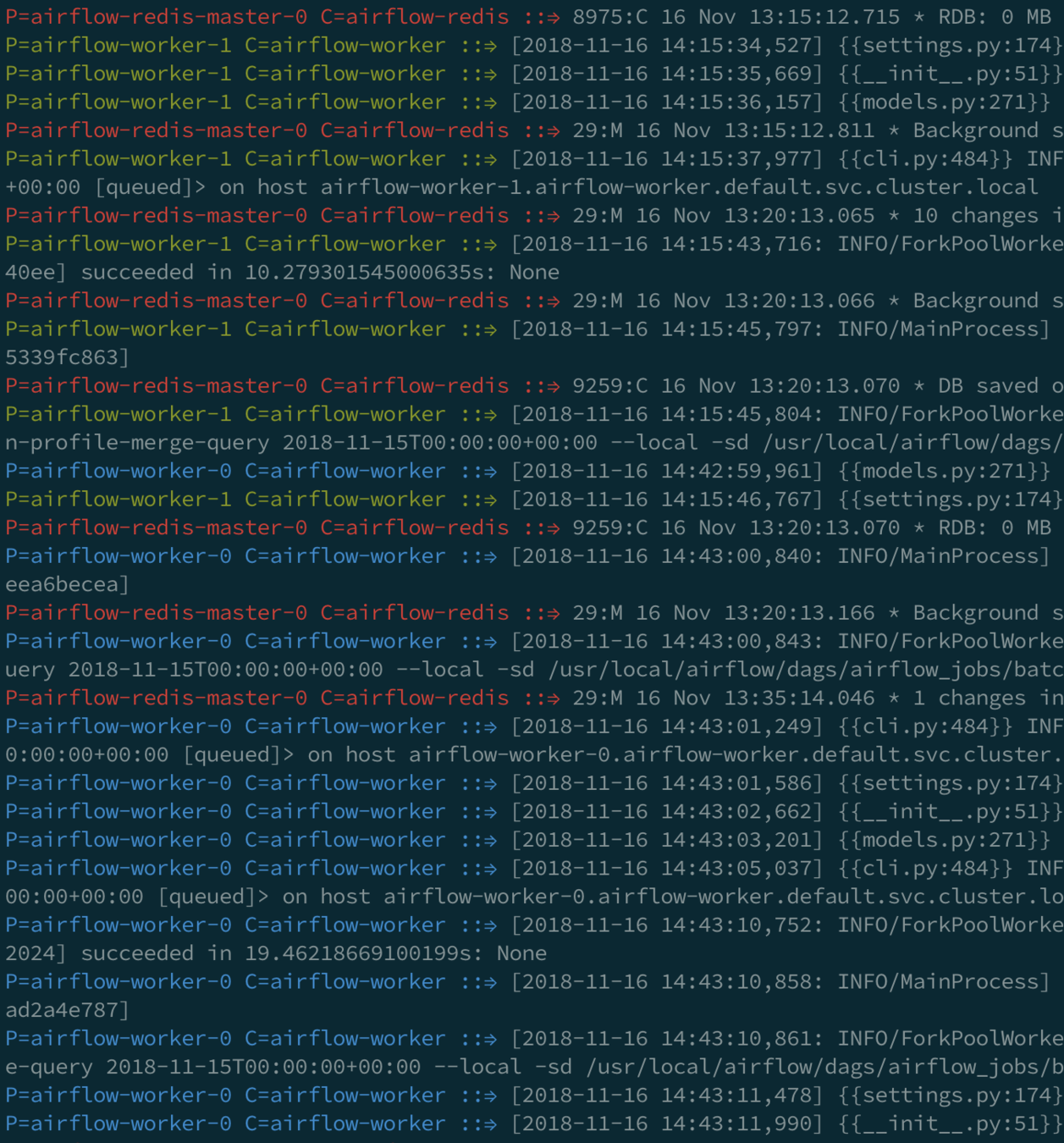

- For a simple tool, it was very performant.

- No need to deal with kubectl!

- Watch the whole cluster and get insights into applications.

- See in-flight pod updates and identify service startup problems immediately.

- Two days of development, and it worked.

- It has room to improve and to grow into a resource watcher.

- Works out of the box with GKE (Google Kubernetes Engine).

$ export POD_NAME=`kubectl get pods -l "app=a_pod_name_may_or_may_not_exist" -o json | jq -r ".items[0].metadata.name"`

$ kubectl logs $POD_NAME -f

Error from server (NotFound): pods "null" not found

$ korq -f a_pod_name_ma

Looking at the whole group! 😱

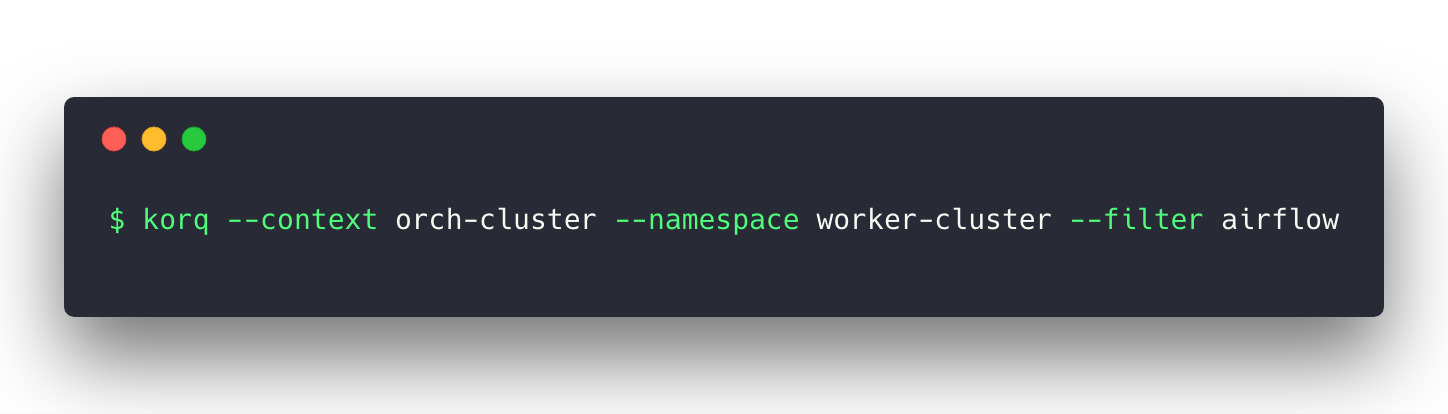
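The demo suggests that `korq -f a_pod_name_ma` picks up every pod whose name matches the partial string, and the architecture slide says the pod list is polled periodically. A minimal sketch of the per-poll bookkeeping, assuming a plain substring match (KØRQ may match differently) and using hypothetical names `diff_poll` and `PollDiff`: compare the pods currently being tailed with the latest poll result, start streams for new matches, and stop streams for pods that vanished.

```rust
use std::collections::BTreeSet;

/// What to do after one poll of the pod list (hypothetical type).
#[derive(Debug, PartialEq)]
pub struct PollDiff {
    /// Pods that match the filter and are not yet being tailed.
    pub start: Vec<String>,
    /// Pods being tailed that disappeared or no longer match.
    pub stop: Vec<String>,
}

/// Compares the tailed set with a fresh poll, keeping only pod names that
/// contain `filter` (assumption: substring matching).
pub fn diff_poll(tailing: &BTreeSet<String>, polled: &[String], filter: &str) -> PollDiff {
    let wanted: BTreeSet<String> = polled
        .iter()
        .filter(|name| name.contains(filter))
        .cloned()
        .collect();
    PollDiff {
        // Both lists come out sorted because BTreeSet iterates in order.
        start: wanted.difference(tailing).cloned().collect(),
        stop: tailing.difference(&wanted).cloned().collect(),
    }
}

fn main() {
    let tailing = BTreeSet::from(["api-7d9f-abc".to_string()]);
    let polled = vec![
        "api-7d9f-abc".to_string(),
        "api-7d9f-def".to_string(), // new replica during a rollout
        "db-0".to_string(),         // does not match the filter
    ];
    let diff = diff_poll(&tailing, &polled, "api-7d9f");
    println!("start: {:?}, stop: {:?}", diff.start, diff.stop);
}
```

Diffing whole sets keeps each poll idempotent: a pod that is already being tailed appears in neither list, so polling more often never opens duplicate streams.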

Multiple namespaces?

😎 No problem! 😎

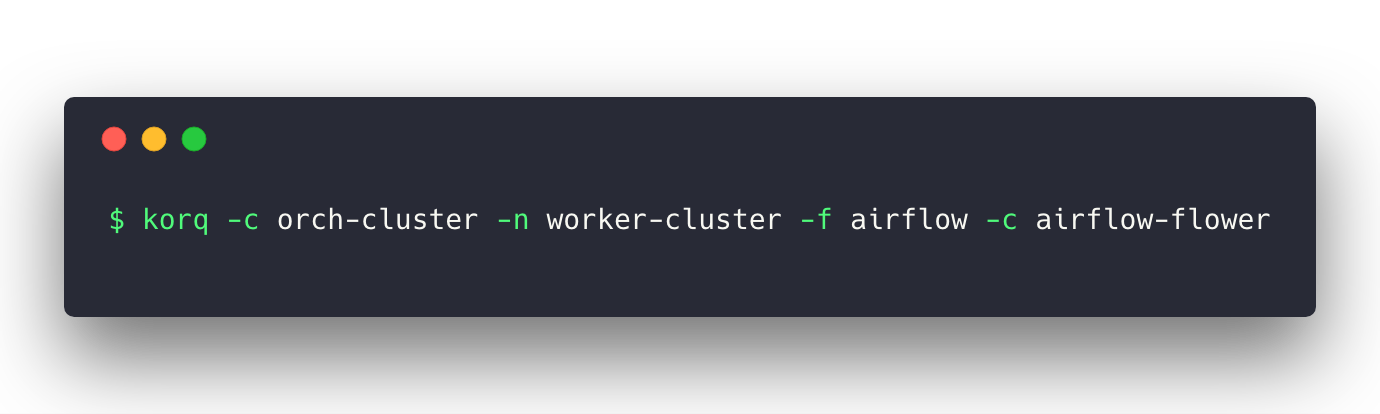

I want to see only that specific container…

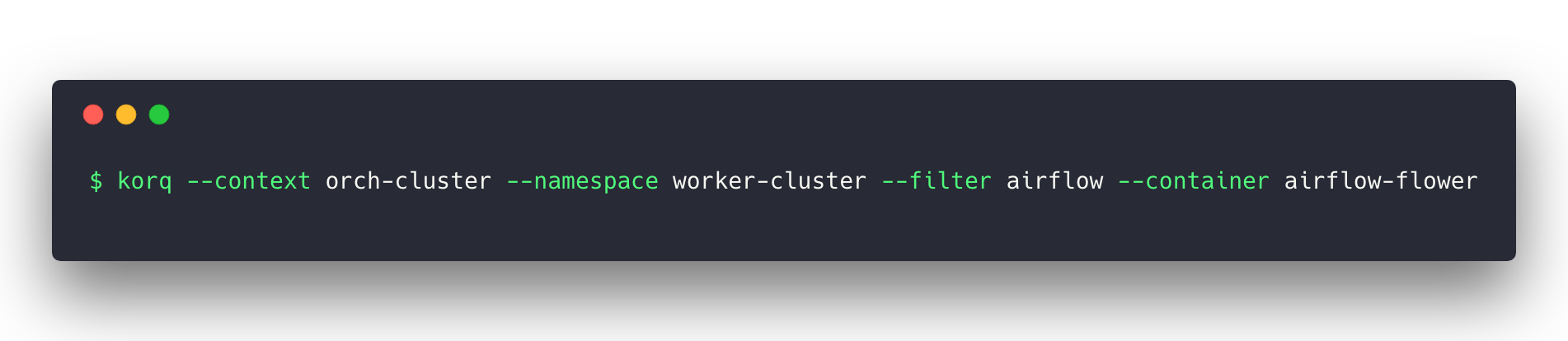
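Spreading across namespaces and narrowing to one container both map onto the Kubernetes pod log endpoint, `GET /api/v1/namespaces/{namespace}/pods/{name}/log`, whose `follow` query parameter keeps the stream open and whose `container` parameter selects a single container. A small sketch of building that request path; `log_path` is a hypothetical helper, not part of QUBE or KØRQ, and authentication and the HTTP call itself are left out.

```rust
/// Builds the path of a follow-mode log request for one pod, optionally
/// narrowed to a single container via the `container` query parameter.
/// Namespace, pod, and container names are DNS-style names, so no
/// percent-encoding is applied here.
pub fn log_path(namespace: &str, pod: &str, container: Option<&str>) -> String {
    let mut path = format!("/api/v1/namespaces/{namespace}/pods/{pod}/log?follow=true");
    if let Some(c) = container {
        path.push_str("&container=");
        path.push_str(c);
    }
    path
}

fn main() {
    // Multiple namespaces are just one more loop level: one stream per (namespace, pod).
    let targets = [("default", "api-7d9f-abc"), ("staging", "api-5c2b-xyz")];
    for (ns, pod) in targets {
        println!("{}", log_path(ns, pod, Some("sidecar")));
    }
}
```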

Got short?

What's next?

For KØRQ:

- Head in the direction of a "Resource Tracking Tool Suite"

- Add status change tracking

- Unavailability and surge tracking, served via a web API

- Add other tracking tools and make the project multi-binary

- Feed the polling mechanism from the K8S Watch API

- Add cross-compilation
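For the Watch API item: a list request with `watch=true` returns a stream of newline-delimited JSON events, each with a `type` such as `ADDED`, `MODIFIED`, or `DELETED` plus the changed object. A hedged sketch of how those events could update the set of tailed pods instead of re-polling; `EventType`, `parse_event_type`, and `apply_event` are hypothetical names, and JSON decoding is assumed to have happened already (for example with a crate like serde_json).

```rust
use std::collections::BTreeSet;

/// The pod-change event types a Kubernetes watch stream reports.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum EventType {
    Added,
    Modified,
    Deleted,
}

/// Maps the `type` field of a decoded watch event to the enum.
pub fn parse_event_type(s: &str) -> Option<EventType> {
    match s {
        "ADDED" => Some(EventType::Added),
        "MODIFIED" => Some(EventType::Modified),
        "DELETED" => Some(EventType::Deleted),
        _ => None, // e.g. ERROR or BOOKMARK: not a pod change
    }
}

/// Updates the set of tailed pods from one event. ADDED and MODIFIED both
/// ensure the pod is tracked; DELETED drops it. Returns true if the set changed.
pub fn apply_event(tailing: &mut BTreeSet<String>, kind: EventType, pod: &str) -> bool {
    match kind {
        EventType::Added | EventType::Modified => tailing.insert(pod.to_string()),
        EventType::Deleted => tailing.remove(pod),
    }
}

fn main() {
    let mut tailing = BTreeSet::new();
    // (type, pod name) pairs as they might arrive, already decoded.
    let events = [("ADDED", "api-1"), ("MODIFIED", "api-1"), ("ADDED", "api-2"), ("DELETED", "api-1")];
    for (kind, pod) in events {
        if let Some(kind) = parse_event_type(kind) {
            apply_event(&mut tailing, kind, pod);
        }
    }
    println!("{:?}", tailing);
}
```

Treating `MODIFIED` like `ADDED` keeps the update idempotent, which helps if the watch starts after a pod already exists.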

Got some ideas? Become a contributor.

What's next?

For QUBE:

- Add HTTP streaming support for the K8S watch API

- Rework all of the crate's public exports

- Add a resource status API

- Add token renewal support for OIDC
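OIDC token renewal mostly comes down to caching the current ID token and renewing it shortly before it expires. The sketch below shows only that caching logic, under stated assumptions: `TokenCache`, `CachedToken`, and the `skew` margin are hypothetical names, not QUBE's API, and the actual OIDC refresh (for example exchanging the kubeconfig's `refresh-token` at the issuer's token endpoint) is left to a caller-supplied closure.

```rust
use std::time::{Duration, Instant};

/// A cached bearer token plus the moment it stops being valid.
pub struct CachedToken {
    pub value: String,
    pub expires_at: Instant,
}

/// Holds a token and renews it through `refresh`, a hypothetical stand-in
/// for the OIDC refresh exchange, which receives the current time.
pub struct TokenCache<F: FnMut(Instant) -> CachedToken> {
    current: Option<CachedToken>,
    refresh: F,
    skew: Duration,
}

impl<F: FnMut(Instant) -> CachedToken> TokenCache<F> {
    pub fn new(refresh: F, skew: Duration) -> Self {
        Self { current: None, refresh, skew }
    }

    /// Returns the cached token, refreshing it first if there is none yet
    /// or if it expires within `skew` of `now`.
    pub fn token(&mut self, now: Instant) -> &str {
        let stale = match &self.current {
            Some(t) => now + self.skew >= t.expires_at,
            None => true,
        };
        if stale {
            self.current = Some((self.refresh)(now));
        }
        &self.current.as_ref().unwrap().value
    }
}

fn main() {
    let start = Instant::now();
    let mut issued = 0;
    // Hypothetical refresh: each call mints a token valid for 60 s from `now`.
    let mut cache = TokenCache::new(
        |now| {
            issued += 1;
            CachedToken { value: format!("token-{issued}"), expires_at: now + Duration::from_secs(60) }
        },
        Duration::from_secs(10),
    );
    println!("{}", cache.token(start)); // first call fetches token-1
    println!("{}", cache.token(start + Duration::from_secs(30))); // still fresh, reused
    println!("{}", cache.token(start + Duration::from_secs(55))); // within skew, renewed to token-2
}
```

Passing `now` in explicitly, rather than reading the clock inside the cache, keeps the renewal rule easy to test.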

Got some ideas? Become a contributor.

KØRQ: Kubernetes Dynamic Log Tailing Utility

By vertexclique
