Distributed Node #5

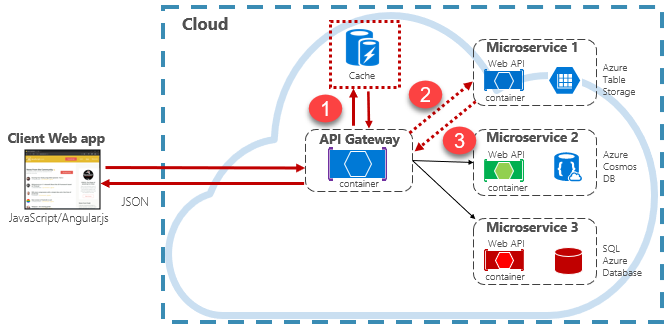

Caching

Two Sum problem

Given an array of integers nums and an integer target, return indices of the two numbers such that they add up to target.

Input: nums = [2,7,11,15], target = 9

Output: [0,1]

Explanation: Because nums[0] + nums[1] == 9, we return [0, 1].

There are only two hard things in Computer Science: cache invalidation and naming things.

Phil Karlton (Netscape developer)
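Returning to the Two Sum problem above: one common way to solve it in a single pass is to remember every value already seen in a hash map, which is a small in-memory cache of earlier work. The sketch below is one possible solution, not necessarily the one intended on the slide; the function name `twoSum` is chosen for illustration.

```typescript
// Single pass: cache each value's index, then look up the complement.
function twoSum(nums: number[], target: number): number[] {
  const seenIndexByValue = new Map<number, number>();
  for (let i = 0; i < nums.length; i++) {
    const complement = target - nums[i];
    const j = seenIndexByValue.get(complement);
    if (j !== undefined) {
      return [j, i];
    }
    seenIndexByValue.set(nums[i], i);
  }
  return []; // no pair adds up to target
}

console.log(twoSum([2, 7, 11, 15], 9)); // indices 0 and 1
```

The map lookup replaces the inner loop of the brute-force approach, trading memory for time.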

Twitter feed problem

How would you design Twitter?

Naive solution

GET feed

SELECT all tweets WHERE

userId IN (SELECT all friends of the user)

ORDER BY ...

LIMIT ...


Response time

Single point of failure

Max queries per second

Availability

Performance

Consistency

A better solution

LB

Tweets services

Feeds cache cluster

Sharded SQL cluster

Gizzard

POST new post

Recompute all friends feeds

Save to redis cluster

GET feed

Has cache?
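The write and read paths above can be sketched in memory. This is a minimal illustration, assuming a plain array in place of the sharded SQL cluster and a Map in place of the feeds cache cluster; `FeedService`, `Tweet` and the user names are hypothetical and not part of the original design.

```typescript
type Tweet = { authorId: string; text: string; postedAt: number };

class FeedService {
  // Stands in for the sharded SQL cluster.
  private tweetStore: Tweet[] = [];
  // Stands in for the feeds cache cluster.
  private feedCache = new Map<string, Tweet[]>();
  // Maps a user id to the ids of the users they follow.
  private friends: Map<string, string[]>;

  constructor(friends: Map<string, string[]>) {
    this.friends = friends;
  }

  // Slow path: the equivalent of the naive SELECT over all friends' tweets.
  private buildFeed(userId: string): Tweet[] {
    const followed = this.friends.get(userId) ?? [];
    return this.tweetStore
      .filter((t) => followed.indexOf(t.authorId) !== -1)
      .sort((a, b) => b.postedAt - a.postedAt);
  }

  // POST new post: store the tweet, then recompute every follower's cached feed.
  post(tweet: Tweet): void {
    this.tweetStore.push(tweet);
    this.friends.forEach((followedIds, userId) => {
      if (followedIds.indexOf(tweet.authorId) !== -1) {
        this.feedCache.set(userId, this.buildFeed(userId));
      }
    });
  }

  // GET feed: serve from the cache when possible, otherwise build and cache.
  getFeed(userId: string): { feed: Tweet[]; cacheHit: boolean } {
    const cached = this.feedCache.get(userId);
    if (cached !== undefined) {
      return { feed: cached, cacheHit: true };
    }
    const feed = this.buildFeed(userId);
    this.feedCache.set(userId, feed);
    return { feed, cacheHit: false };
  }
}

const feedService = new FeedService(
  new Map<string, string[]>([
    ['alice', ['bob']],
    ['carol', []],
  ]),
);
feedService.post({ authorId: 'bob', text: 'hello', postedAt: 1 });
console.log(feedService.getFeed('alice').cacheHit); // true: filled on write
console.log(feedService.getFeed('carol').cacheHit); // false: built on demand
```

Doing the expensive work at write time keeps reads cheap, at the cost of extra writes for authors with many followers.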


Written in ANSI C

About 110,000 SETs and about 81,000 GETs per second.

Supports rich data types

Multi-utility tool

Operations are atomic

A database

Features

Benchmark

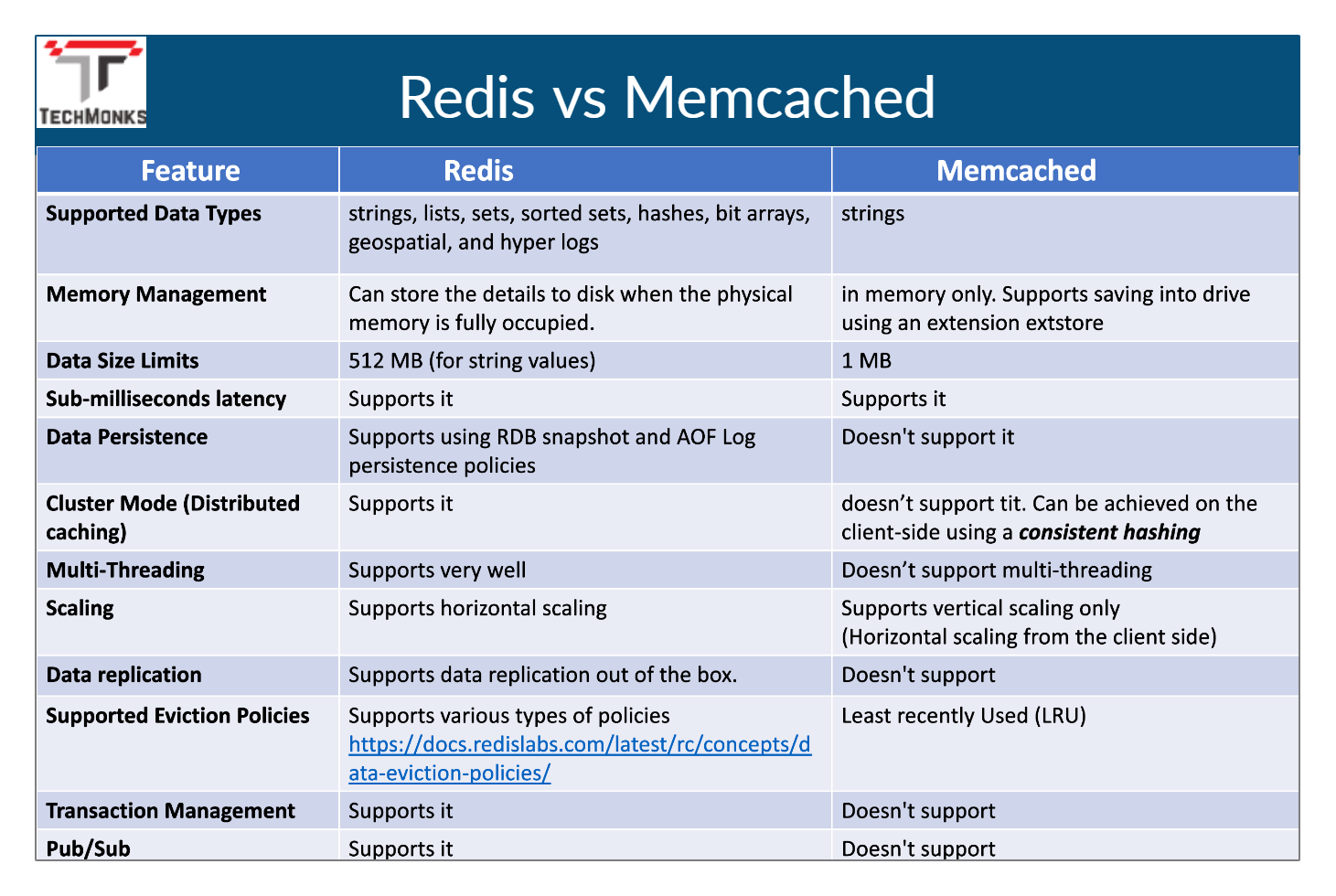

Redis vs Memcached

Play with Redis

$ docker run -d redis

$ docker exec -it <container id> /bin/bash

$ redis-cli

> ping

> CONFIG GET *

Redis has a configuration file (redis.conf) in the root directory of the Redis installation. You can also read and change configuration settings at runtime with the Redis CONFIG command.

Datatypes

Strings

Hashes

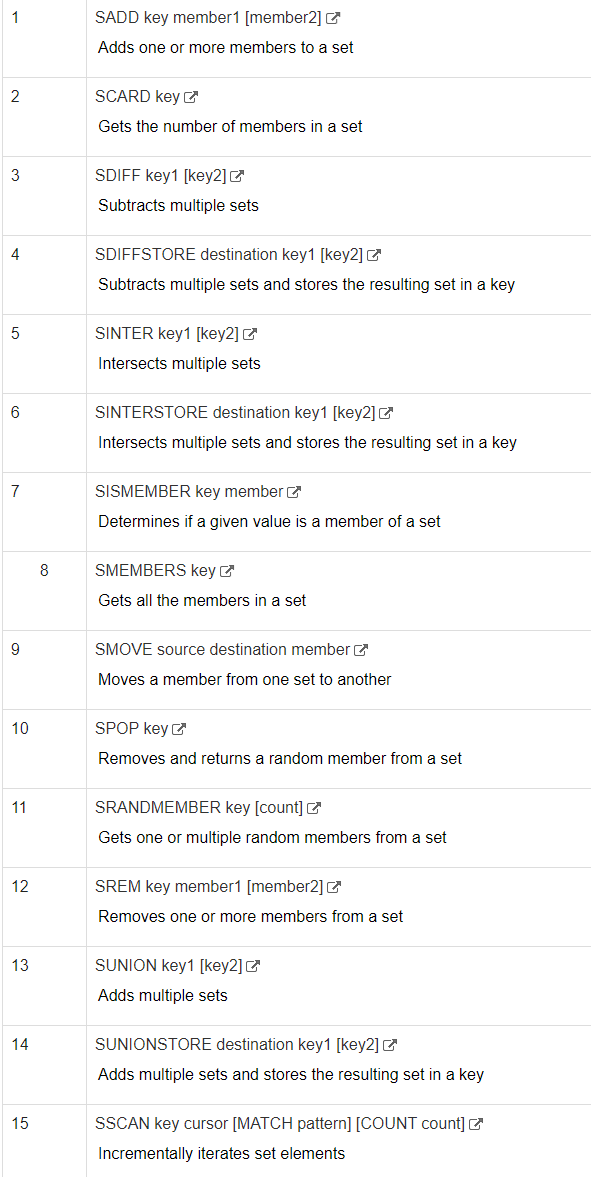

Sets

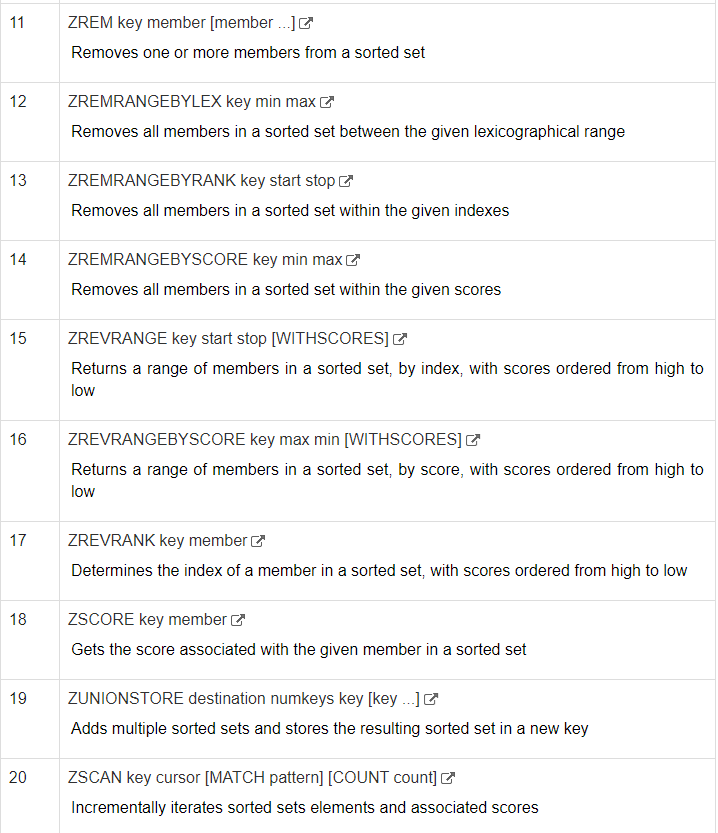

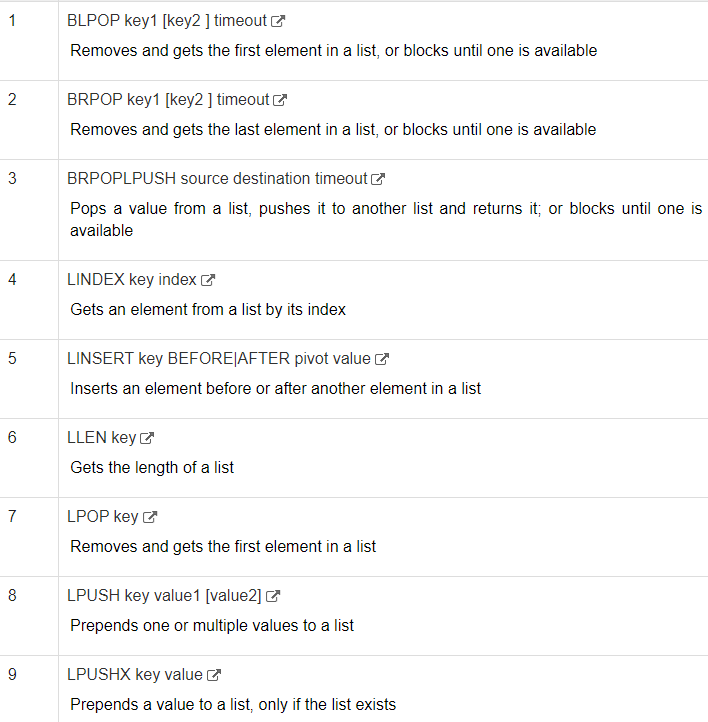

Lists

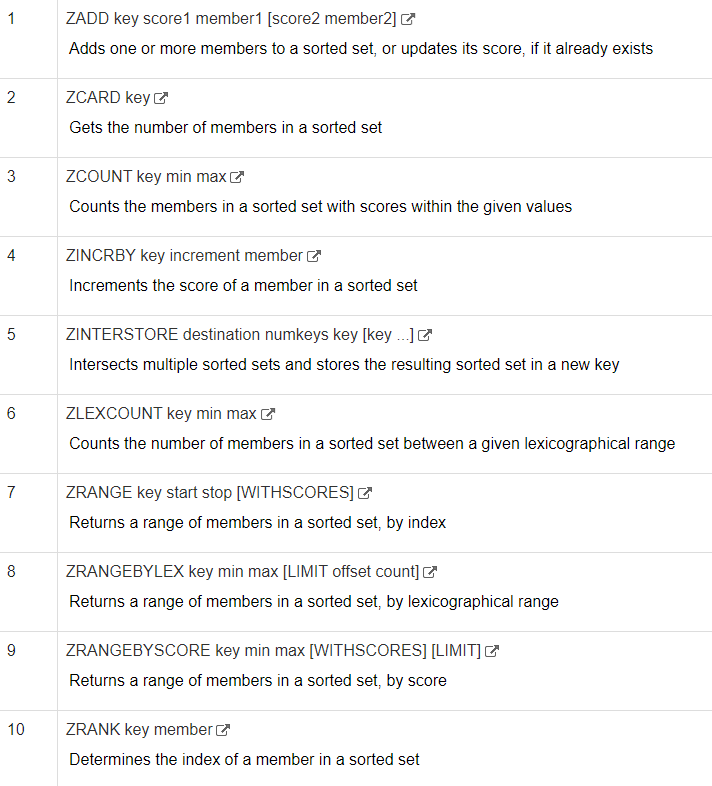

Sorted Sets

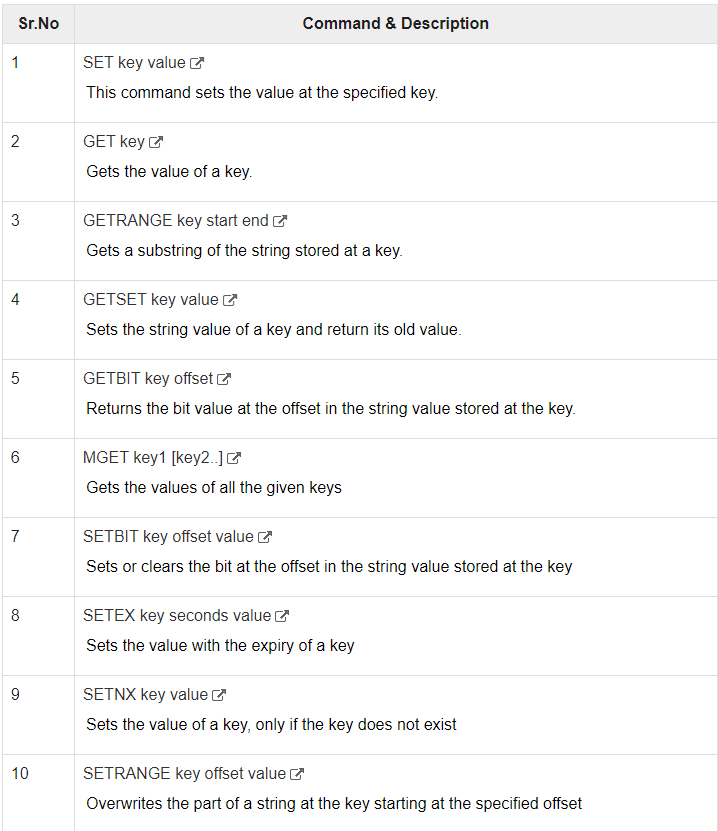

Strings

> set hello world

> get hello

> getrange hello 1 2

> strlen hello

> getset hello aaaaaa

> get hello

> set hello adasdasd ex 10

Play with strings
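The `ex 10` option in the last command makes the key expire after 10 seconds. The sketch below models that behavior in memory so it can be followed without a server; `TtlStore` and its injectable clock are hypothetical helpers, not a Redis client API.

```typescript
class TtlStore {
  private entries = new Map<string, { value: string; expiresAt: number | null }>();
  private now: () => number;

  // The clock is injectable so expiry can be demonstrated without waiting.
  constructor(now: () => number = Date.now) {
    this.now = now;
  }

  // Models `SET key value [EX seconds]`.
  set(key: string, value: string, exSeconds?: number): void {
    const expiresAt = exSeconds === undefined ? null : this.now() + exSeconds * 1000;
    this.entries.set(key, { value, expiresAt });
  }

  // Models `GET key`: an expired key behaves as if it was never set.
  get(key: string): string | null {
    const entry = this.entries.get(key);
    if (entry === undefined) {
      return null;
    }
    if (entry.expiresAt !== null && this.now() >= entry.expiresAt) {
      this.entries.delete(key);
      return null;
    }
    return entry.value;
  }
}

let fakeNowMs = 0;
const ttlStore = new TtlStore(() => fakeNowMs);
ttlStore.set('hello', 'adasdasd', 10);
console.log(ttlStore.get('hello')); // still present
fakeNowMs = 10000; // ten seconds later
console.log(ttlStore.get('hello')); // null, the key has expired
```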

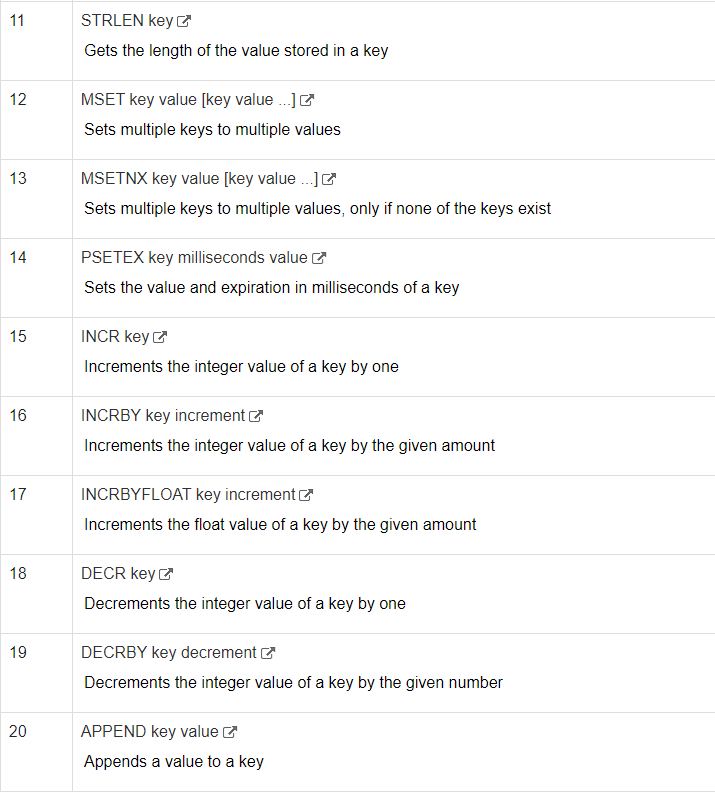

HashMaps

redis 127.0.0.1:6379> HMSET aaa name redis description "redis basic commands for caching" likes 20 visitors 23000

OK

redis 127.0.0.1:6379> HGETALL aaa

1) "name"

2) "redis"

3) "description"

4) "redis basic commands for caching"

5) "likes"

6) "20"

7) "visitors"

8) "23000"

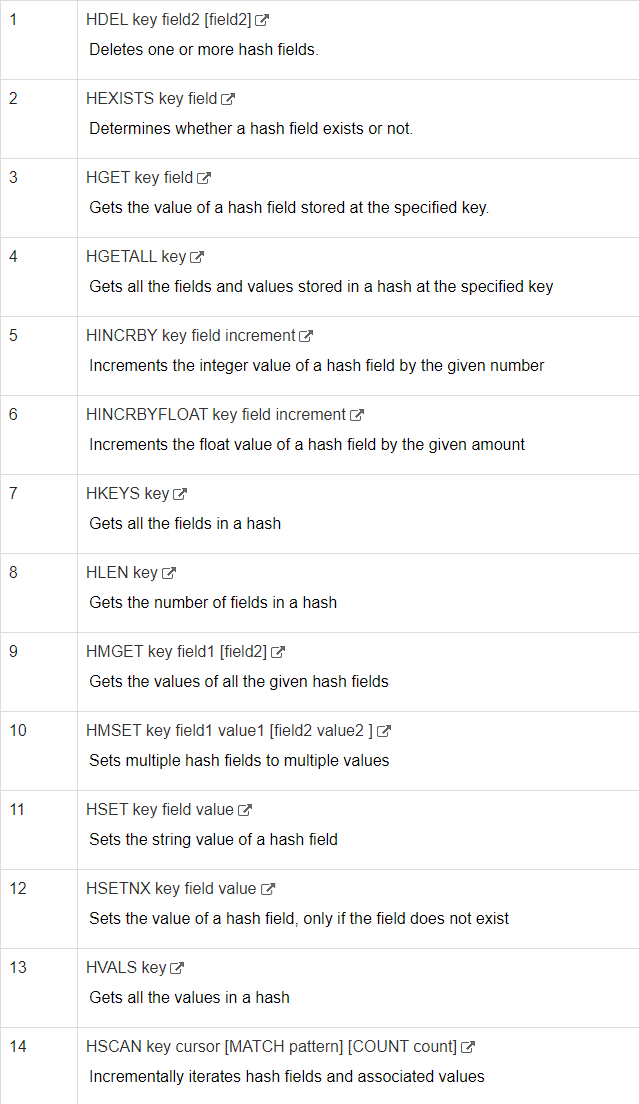

Sets

redis 127.0.0.1:6379> SADD tutorials redis

(integer) 1

redis 127.0.0.1:6379> SADD tutorials mongodb

(integer) 1

redis 127.0.0.1:6379> SADD tutorials mysql

(integer) 1

redis 127.0.0.1:6379> SADD tutorials mysql

(integer) 0

redis 127.0.0.1:6379> SMEMBERS tutorials

1) "mysql"

2) "mongodb"

3) "redis"

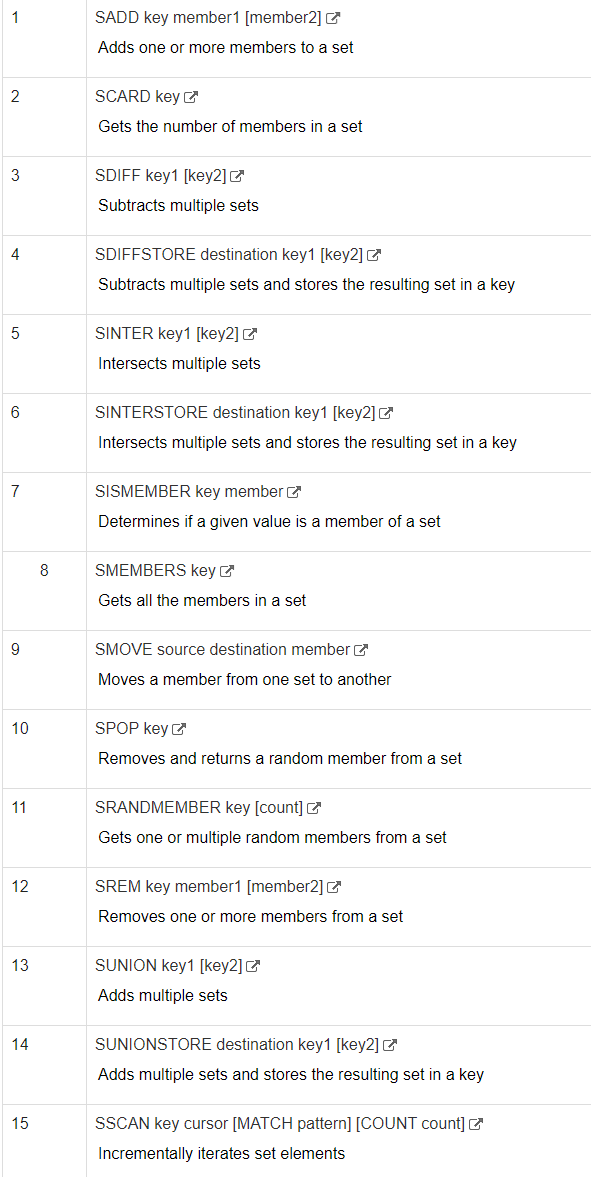

Sorted Sets

redis 127.0.0.1:6379> ZADD tutorials 1 redis

(integer) 1

redis 127.0.0.1:6379> ZADD tutorials 2 mongodb

(integer) 1

redis 127.0.0.1:6379> ZADD tutorials 3 mysql

(integer) 1

redis 127.0.0.1:6379> ZADD tutorials 3 mysql

(integer) 0

redis 127.0.0.1:6379> ZADD tutorials 4 mysql

(integer) 0

redis 127.0.0.1:6379> ZRANGE tutorials 0 10 WITHSCORES

1) "redis"

2) "1"

3) "mongodb"

4) "2"

5) "mysql"

6) "4"

Redis Sorted Sets are similar to Redis Sets: every member is unique. The difference is that every member of a Sorted Set is associated with a score, and members are kept ordered by that score.
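The session above shows two behaviors worth noting: adding an existing member returns 0 and only updates its score, and ZRANGE returns members ordered by score. A minimal in-memory model of those two rules follows; `SortedSetModel` is a hypothetical teaching aid, not how Redis implements sorted sets.

```typescript
class SortedSetModel {
  private scores = new Map<string, number>();

  // Models ZADD: returns 1 for a new member, 0 when only the score changes.
  zadd(score: number, member: string): number {
    const isNew = !this.scores.has(member);
    this.scores.set(member, score);
    return isNew ? 1 : 0;
  }

  // Models ZRANGE start stop WITHSCORES for non-negative indexes.
  zrangeWithScores(start: number, stop: number): Array<[string, number]> {
    const ordered: Array<[string, number]> = [];
    this.scores.forEach((score, member) => {
      ordered.push([member, score]);
    });
    // Order by score, then by member name to break ties.
    ordered.sort((a, b) => a[1] - b[1] || (a[0] < b[0] ? -1 : a[0] > b[0] ? 1 : 0));
    return ordered.slice(start, stop + 1);
  }
}

const tutorials = new SortedSetModel();
tutorials.zadd(1, 'redis');
tutorials.zadd(2, 'mongodb');
tutorials.zadd(3, 'mysql');
console.log(tutorials.zadd(4, 'mysql')); // 0: member exists, score updated
console.log(tutorials.zrangeWithScores(0, 10)); // redis 1, mongodb 2, mysql 4
```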

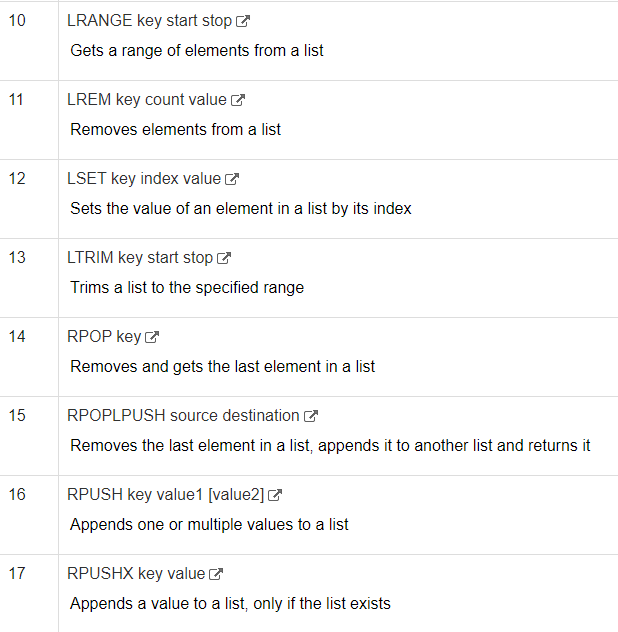

Lists

127.0.0.1:6379> lrange mylist 0 -1

1) "2"

2) "1"

127.0.0.1:6379> rpush mylist a

(integer) 3

127.0.0.1:6379> lrange mylist 0 -1

1) "2"

2) "1"

3) "a"

127.0.0.1:6379> rpop mylist

"a"

127.0.0.1:6379> lrange mylist 0 -1

1) "2"

2) "1"

127.0.0.1:6379> rpush mylist 1 2 3 4 5 "foo bar"

(integer) 8

127.0.0.1:6379> lrange mylist 0 -1

1) "2"

2) "1"

3) "1"

4) "2"

5) "3"

6) "4"

7) "5"

8) "foo bar"

Lists are useful for a number of tasks. Two very representative use cases are the following:

- Remember the latest updates posted by users into a social network.

- Communication between processes, using a consumer-producer pattern where the producer pushes items into a list, and a consumer (usually a worker) consumes those items and executes actions. Redis has special list commands to make this use case both more reliable and efficient.
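The consumer-producer pattern can be sketched with an in-memory list. This is only a model of the RPUSH and LPOP semantics, assuming a single process; `ListModel` and the job names are made up for illustration.

```typescript
class ListModel {
  private items: string[] = [];

  // Models RPUSH: append values at the tail and return the new length.
  rpush(...values: string[]): number {
    this.items.push(...values);
    return this.items.length;
  }

  // Models LPOP: remove and return the head, or null when the list is empty.
  lpop(): string | null {
    const head = this.items.shift();
    return head === undefined ? null : head;
  }
}

const jobQueue = new ListModel();

// Producer side: enqueue work items.
jobQueue.rpush('send-email:1', 'send-email:2', 'resize-image:7');

// Consumer side: a worker drains the queue in arrival order.
const handledJobs: string[] = [];
let nextJob = jobQueue.lpop();
while (nextJob !== null) {
  handledJobs.push(nextJob);
  nextJob = jobQueue.lpop();
}
console.log(handledJobs);
```

Pushing on one end and popping from the other gives first-in, first-out order, which is what a work queue usually needs.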


redis:

container_name: redis

image: redis

ports:

- '6379:6379'

Add Redis to a compose file

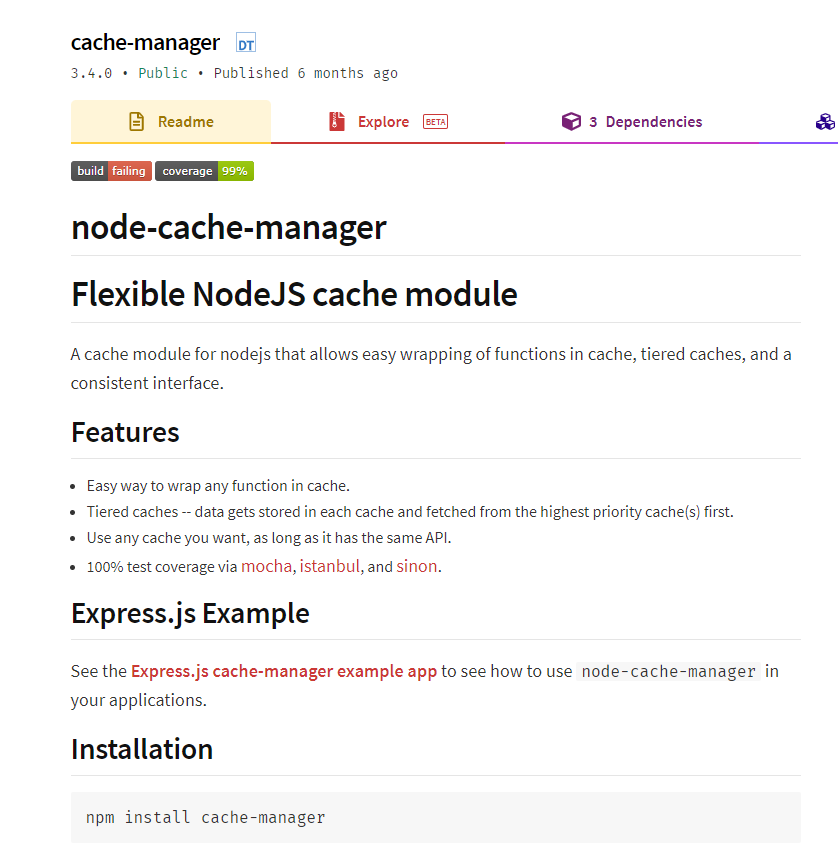

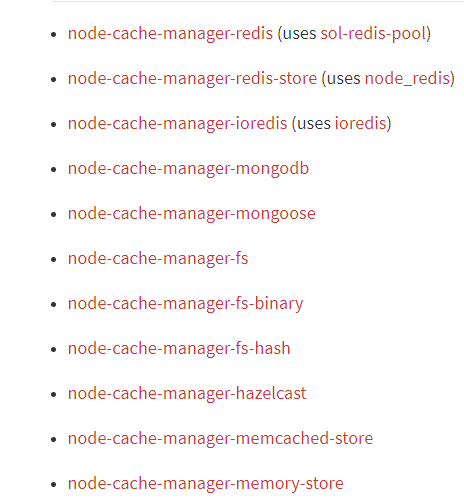

yarn add cache-manager cache-manager-ioredis

yarn add -D @types/ioredis @types/cache-manager

Install cache-manager

import * as redis from 'cache-manager-ioredis';

imports: [

ConfigModule.forRoot(),

MongooseModule.forFeature([{ name: User.name, schema: UserSchema }]),

CacheModule.register({

store: redis,

host: process.env.REDIS_HOST,

port: process.env.REDIS_PORT,

ttl: 0,

}),

],

Set up a users module

constructor(

@Inject(CACHE_MANAGER) private cacheManager: Cache,

@Inject('IUsersService') private readonly usersService: IUsersService,

) {

let i = 0;

setInterval(() => {

this.cacheManager

.set('asd', i++)

.then(() => console.log('Write', i - 1))

.then(() => this.cacheManager.get('asd'))

.then((r) => console.log('Read', r))

.catch((e) => console.log('!!!!!!!!', e));

}, 5000);

}

Inject a cache manager

@Get()

@UseInterceptors(CacheInterceptor)

@CacheTTL(1000)

async findAll() {

await new Promise((r) => setTimeout(r, 10000));

console.log('done');

return this.usersService.findAll();

}

Add cache interceptor

And experiment

@Module({

imports: [

ConfigModule.forRoot(),

MongooseModule.forFeature([{ name: User.name, schema: UserSchema }]),

CacheModule.register({

store: redis,

host: process.env.REDIS_HOST,

port: process.env.REDIS_PORT,

ttl: 0,

}),

],

controllers: [UsersController],

providers: [

{

provide: 'IUsersService',

useClass: UsersService,

},

{

provide: APP_INTERCEPTOR,

useClass: CacheInterceptor,

},

],

})

export class UsersModule {}

Add global interceptor

@Post()

@UseInterceptors(CacheClearInterceptor)

create(@Body() createUserDto: CreateUserDto) {

this.logger.log(

'Someone is creating a user' + JSON.stringify(createUserDto),

);

return this.usersService.create(createUserDto);

}

Add clear cache interceptor

import { Observable } from 'rxjs';

import {

Inject,

CACHE_MANAGER,

NestInterceptor,

ExecutionContext,

CallHandler,

Injectable,

} from '@nestjs/common';

import { Cache } from 'cache-manager';

import { tap } from 'rxjs/operators';

@Injectable()

export class CacheClearInterceptor implements NestInterceptor {

constructor(@Inject(CACHE_MANAGER) private cacheManager: Cache) {}

intercept(

context: ExecutionContext,

next: CallHandler<any>,

): Observable<any> | Promise<Observable<any>> {

return next.handle().pipe(

tap((a) => {

console.log(`After...`, a);

console.log(this.cacheManager.reset());

}),

);

}

}

Add clear cache interceptor
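The combined effect of the two interceptors (cache the GET response, reset the cache after a POST) can be illustrated without NestJS. The class below is a hypothetical stand-in for the controller plus its interceptors; it only models the behavior and uses none of the framework's APIs.

```typescript
class CachedUsers {
  private cache = new Map<string, string[]>();
  private users: string[] = [];
  // Counts how often the slow path runs.
  slowReads = 0;

  // Plays the role of the GET handler behind a cache interceptor.
  findAll(): string[] {
    const cached = this.cache.get('/users');
    if (cached !== undefined) {
      return cached;
    }
    this.slowReads++; // stands in for the slow database call
    const fresh = this.users.slice();
    this.cache.set('/users', fresh);
    return fresh;
  }

  // Plays the role of the POST handler: write first, then reset the cache.
  create(userName: string): void {
    this.users.push(userName);
    this.cache.clear();
  }
}

const cachedUsers = new CachedUsers();
cachedUsers.findAll();
cachedUsers.findAll();
console.log(cachedUsers.slowReads); // 1: the second read came from the cache
cachedUsers.create('vladimir');
console.log(cachedUsers.findAll()); // includes the new user
console.log(cachedUsers.slowReads); // 2: the cache was reset by the write
```

Without the reset in `create`, the second `findAll` after a write would keep returning the stale empty list.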

Access to all Redis commands?

import { Cache, Store } from 'cache-manager';

import Redis from 'ioredis';

import { CACHE_MANAGER, Inject, Injectable } from '@nestjs/common';

export interface RedisStore extends Store {

getClient(): Redis.Commands;

}

@Injectable()

export class RedisMediator {

private client: Redis.Commands;

constructor(@Inject(CACHE_MANAGER) private cacheManager: Cache) {

this.client = (<RedisStore>this.cacheManager.store).getClient();

}

getClient() {

return this.client;

}

}

Create new provider

providers: [

RedisMediator,

{

provide: 'IUsersService',

useClass: UsersService,

},

{

provide: APP_INTERCEPTOR,

useClass: CacheInterceptor,

},

],

Inject it into the controller

constructor(

private redis: RedisMediator,

@Inject(CACHE_MANAGER) private cacheManager: Cache,

@Inject('IUsersService') private readonly usersService: IUsersService,

) {

redis

.getClient()

.lrange('mylist', 0, -1)

.then((r) => console.log('>>>', r));

redis

.getClient()

.llen('mylist')

.then((r) => console.log('>>>', r));

redis

.getClient()

.hmset(

'mymap',

new Map([

['hello', '1'],

['world', '2'],

]),

)

.then((r) => console.log('>>>', r));

redis

.getClient()

.hgetall('mymap')

.then((r) => console.log('>>>', r));

...

import * as redis from 'cache-manager-ioredis';

import { APP_INTERCEPTOR } from '@nestjs/core';

import { RedisService } from 'nestjs-redis';

@Module({

imports: [

ConfigModule.forRoot(),

CacheModule.registerAsync({

useFactory: (redisService: RedisService) => {

return {

store: redis,

redisInstance: redisService.getClient(),

};

},

inject: [RedisService],

}),

MongooseModule.forFeature([{ name: User.name, schema: UserSchema }]),

],

controllers: [UsersController],

providers: [

{

provide: 'IUsersService',

useClass: UsersService,

},

{

provide: APP_INTERCEPTOR,

useClass: CacheInterceptor,

},

],

})

export class UsersModule {}

constructor(

@Inject('IUsersService') private readonly usersService: IUsersService,

redisService: RedisService,

) {

redisService.getClient().set('hello', 'world');

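}

import { Module } from '@nestjs/common';

import { ConfigModule } from '@nestjs/config';

import { MongooseModule } from '@nestjs/mongoose';

import { RedisModule } from 'nestjs-redis';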

import { AppController } from './app.controller';

import { AppService } from './app.service';

import { UsersModule } from './users/users.module';

@Module({

imports: [

UsersModule,

ConfigModule.forRoot(),

MongooseModule.forRoot(

process.env.DATABASE_URL,

process.env.MONGO_PASSWORD

? {

authSource: 'admin',

user: process.env.MONGO_USER,

pass: process.env.MONGO_PASSWORD,

}

: undefined,

),

RedisModule.register({

host: process.env.REDIS_HOST,

port: +process.env.REDIS_PORT,

password: process.env.REDIS_PASSWORD,

}),

],

controllers: [AppController],

providers: [AppService],

})

export class AppModule {}

It would be nice if someone watched over the cluster

Sentinel

Master

Svc 2

Svc 1

Svc 3

Slave 2

Slave 1

New master?

Sentinel 1

Sentinel 2

Sentinel 3

Vote for 1

Vote for 1

Vote for 2

Quorum has chosen Slave 1 as a new Master
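The vote on the slide can be sketched as a simple tally. This is a deliberate simplification that follows the slide, where each sentinel votes for a replica; in the Sentinel logs shown later, the sentinels first elect a leader, which then selects the replica to promote. The function `electNewMaster` and its parameters are hypothetical.

```typescript
// Returns the candidate with the most votes if it reaches the quorum, else null.
function electNewMaster(votes: string[], quorum: number): string | null {
  const tally: Record<string, number> = {};
  for (const candidate of votes) {
    tally[candidate] = (tally[candidate] ?? 0) + 1;
  }
  let winner: string | null = null;
  let winnerVotes = 0;
  for (const candidate of Object.keys(tally)) {
    if (tally[candidate] > winnerVotes) {
      winner = candidate;
      winnerVotes = tally[candidate];
    }
  }
  return winnerVotes >= quorum ? winner : null;
}

// Sentinel 1 and Sentinel 2 vote for Slave 1, Sentinel 3 votes for Slave 2.
console.log(electNewMaster(['Slave 1', 'Slave 1', 'Slave 2'], 2)); // Slave 1
```

With three sentinels and a quorum of two, a single unreachable sentinel does not block the failover.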

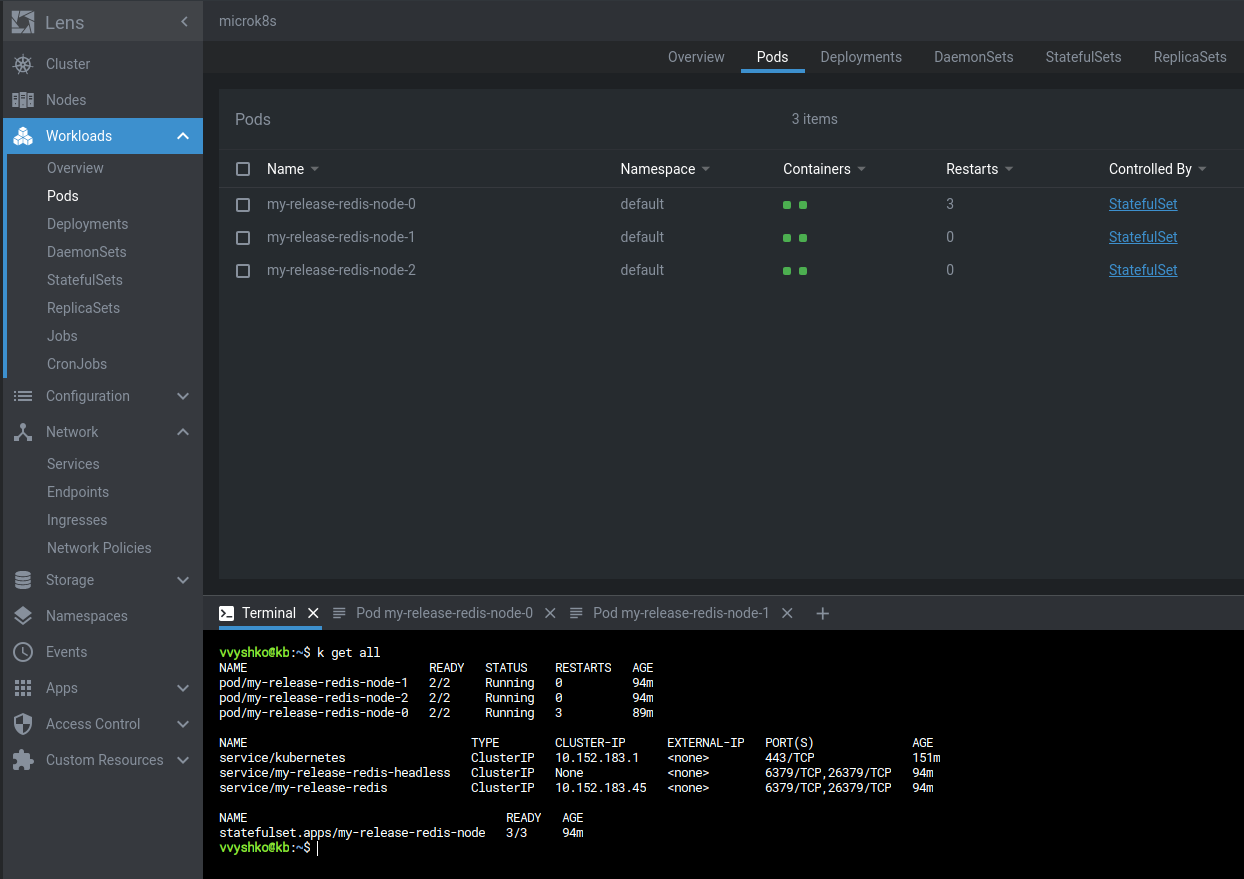

microk8s helm repo add bitnami https://charts.bitnami.com/bitnami

microk8s helm install my-release \

--set sentinel.enabled=true \

--set cluster.slaveCount=3 \

bitnami/redis

echo $(kubectl get secret --namespace default my-release-redis -o jsonpath="{.data.redis-password}" | base64 --decode)

Set up a Redis cluster

kubectl delete pods my-release-redis-node-0 --grace-period=0 --force

Simulate a problem on the first node

Kill the first pod

1:X 20 Feb 2021 17:29:31.802 # +sdown sentinel 17842c5dd5c0ce41063ddf37c45de74f9021022d 10.1.156.139 26379 @ mymaster 10.1.156.139 6379

1:X 20 Feb 2021 17:29:31.857 # +odown master mymaster 10.1.156.139 6379 #quorum 2/2

1:X 20 Feb 2021 17:29:31.857 # +new-epoch 1

1:X 20 Feb 2021 17:29:31.857 # +try-failover master mymaster 10.1.156.139 6379

1:X 20 Feb 2021 17:29:31.865 # +vote-for-leader 2436318487f97c202d62546d96fcb81c16122c4d 1

1:X 20 Feb 2021 17:29:31.880 # 0fe6c937f6043ba64d0cbca3d3c2b26bb78c39d8 voted for 2436318487f97c202d62546d96fcb81c16122c4d 1

1:X 20 Feb 2021 17:29:31.955 # +elected-leader master mymaster 10.1.156.139 6379

1:X 20 Feb 2021 17:29:31.955 # +failover-state-select-slave master mymaster 10.1.156.139 6379

1:X 20 Feb 2021 17:29:32.008 # +selected-slave slave 10.1.156.140:6379 10.1.156.140 6379 @ mymaster 10.1.156.139 6379

1:X 20 Feb 2021 17:29:32.008 * +failover-state-send-slaveof-noone slave 10.1.156.140:6379 10.1.156.140 6379 @ mymaster 10.1.156.139 6379

1:X 20 Feb 2021 17:29:32.108 * +failover-state-wait-promotion slave 10.1.156.140:6379 10.1.156.140 6379 @ mymaster 10.1.156.139 6379

1:X 20 Feb 2021 17:29:32.314 # +promoted-slave slave 10.1.156.140:6379 10.1.156.140 6379 @ mymaster 10.1.156.139 6379

1:X 20 Feb 2021 17:29:32.314 # +failover-state-reconf-slaves master mymaster 10.1.156.139 6379

1:X 20 Feb 2021 17:29:32.365 * +slave-reconf-sent slave 10.1.156.141:6379 10.1.156.141 6379 @ mymaster 10.1.156.139 6379

1:X 20 Feb 2021 17:29:32.955 # -odown master mymaster 10.1.156.139 6379

1:X 20 Feb 2021 17:29:33.350 * +slave-reconf-inprog slave 10.1.156.141:6379 10.1.156.141 6379 @ mymaster 10.1.156.139 6379

1:X 20 Feb 2021 17:29:33.350 * +slave-reconf-done slave 10.1.156.141:6379 10.1.156.141 6379 @ mymaster 10.1.156.139 6379

1:X 20 Feb 2021 17:29:33.441 # +failover-end master mymaster 10.1.156.139 6379

1:X 20 Feb 2021 17:29:33.441 # +switch-master mymaster 10.1.156.139 6379 10.1.156.140 6379

1:X 20 Feb 2021 17:29:33.441 * +slave slave 10.1.156.141:6379 10.1.156.141 6379 @ mymaster 10.1.156.140 6379

Node 2 sentinel logs

How Redis achieves persistence

RDB snapshot: a point-in-time snapshot of the entire dataset, stored in a file on disk and taken at specified intervals. The dataset can be restored from this file on startup.

AOF log: an append-only file that logs every write command performed on the Redis server. This file is also stored on disk, so the dataset can be restored on startup by re-running all the commands in order.
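The difference between the two approaches can be shown with a toy key-value store. This sketch keeps the snapshot and the log in memory rather than on disk, and the names (`WriteCommand`, `takeSnapshot`, `restoreFromLog`) are hypothetical; it says nothing about the actual RDB or AOF file formats.

```typescript
type WriteCommand =
  | { op: 'set'; key: string; value: string }
  | { op: 'del'; key: string };

function applyCommand(data: Map<string, string>, cmd: WriteCommand): void {
  if (cmd.op === 'set') {
    data.set(cmd.key, cmd.value);
  } else {
    data.delete(cmd.key);
  }
}

// RDB style: serialize the whole dataset at one point in time.
function takeSnapshot(data: Map<string, string>): string {
  const pairs: Array<[string, string]> = [];
  data.forEach((value, key) => {
    pairs.push([key, value]);
  });
  return JSON.stringify(pairs);
}

function restoreFromSnapshot(snapshot: string): Map<string, string> {
  return new Map<string, string>(JSON.parse(snapshot) as Array<[string, string]>);
}

// AOF style: rebuild the dataset by replaying every logged write in order.
function restoreFromLog(log: WriteCommand[]): Map<string, string> {
  const data = new Map<string, string>();
  for (const cmd of log) {
    applyCommand(data, cmd);
  }
  return data;
}

const liveData = new Map<string, string>();
const appendOnlyLog: WriteCommand[] = [];
function write(cmd: WriteCommand): void {
  applyCommand(liveData, cmd);
  appendOnlyLog.push(cmd); // every write is appended to the log
}

write({ op: 'set', key: 'hello', value: 'world' });
const snapshot = takeSnapshot(liveData); // snapshot taken here
write({ op: 'set', key: 'hello', value: 'again' }); // written after the snapshot

console.log(restoreFromSnapshot(snapshot).get('hello')); // world
console.log(restoreFromLog(appendOnlyLog).get('hello')); // again
```

The snapshot loses the write made after it was taken, while the log recovers it at the cost of replaying every command.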

Thank You!

Distributed Node #5

By Vladimir Vyshko
