Spectrum Dependent Learning Curves in Kernel Regression

Journal club

B. Bordelon, A. Canatar, C. Pehlevan

Overview

- Setting: kernel regression for a generic target function

- Decompose the generalization error over the kernel's spectral components

- Derive an approximate formula for the error

- Validated via numerical experiments (with focus on the NTK)

- Errors associated with larger eigenvalues decay faster with the training-set size: learning proceeds in successive stages

Kernel (ridge) regression

- \(p\) training points \(\{\mathbf x_i,f^\star(\mathbf x_i)\}_{i=1}^p\) generated by a target function \(f^\star:\mathbb R^d\to\mathbb R\), with inputs \(\mathbf x_i\sim p(\mathbf x)\)

- Kernel regression: \(\min_{f\in\mathcal H(K)} \sum_{i=1}^p \left[ f(\mathbf x_i) - f^\star(\mathbf x_i) \right]^2 + \lambda |\!|f|\!|_K^2\)

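- Estimator: \(f(\mathbf x) = \mathbf y^t (\mathbb K + \lambda\mathbb I)^{-1} \mathbf k(\mathbf x)\), where

\(y_i = f^\star(\mathbf x_i)\)

\(\mathbb K_{ij} = K(\mathbf x_i,\mathbf x_j)\)

\(k_i(\mathbf x) = K(\mathbf x,\mathbf x_i)\)

- Generalization error: \(E_g = \left\langle \int d\mathbf x\, p(\mathbf x) \left[ f(\mathbf x) - f^\star(\mathbf x) \right]^2 \right\rangle\), averaged over training sets (and over targets, when the teacher is random)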
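The estimator and a Monte Carlo estimate of the generalization error can be sketched in a few lines of NumPy. This is only an illustration: the Gaussian (RBF) kernel, the target `target`, the Gaussian inputs, and all sizes are assumptions made for the example, not choices taken from the paper.

```python
import numpy as np

def rbf_kernel(A, B, width=1.0):
    # Gaussian kernel K(x, x') = exp(-|x - x'|^2 / (2 width^2)); an illustrative choice.
    sq = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * width**2))

def krr_predict(X_train, y, X_test, ridge, kernel=rbf_kernel):
    # f(x) = y^t (K + ridge * I)^{-1} k(x), evaluated for every row x of X_test.
    K = kernel(X_train, X_train)
    alpha = np.linalg.solve(K + ridge * np.eye(len(y)), y)
    return kernel(X_test, X_train) @ alpha

def target(X):
    # Hypothetical target function f*, chosen only for this example.
    return np.sin(X[:, 0]) + 0.5 * X[:, 1]

rng = np.random.default_rng(0)
d, p = 3, 200
X_train = rng.standard_normal((p, d))      # x_i ~ p(x), here a standard Gaussian
X_test = rng.standard_normal((2000, d))    # fresh samples from the same p(x)
y = target(X_train)

pred = krr_predict(X_train, y, X_test, ridge=1e-3)
E_g = np.mean((pred - target(X_test))**2)  # Monte Carlo estimate of E_g for one training set
E_null = np.mean(target(X_test)**2)        # error of the trivial predictor f = 0
```

Averaging `E_g` over several independent training sets would approximate the average over data sets in the definition.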

Mercer decomposition

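- \(\{\lambda_\rho,\phi_\rho\}\) are the kernel's eigenvalues and eigenfunctions: \(\int d\mathbf x^\prime\, p(\mathbf x^\prime)\, K(\mathbf x,\mathbf x^\prime)\, \phi_\rho(\mathbf x^\prime) = \lambda_\rho \phi_\rho(\mathbf x)\), so that \(K(\mathbf x,\mathbf x^\prime) = \sum_\rho \lambda_\rho \phi_\rho(\mathbf x) \phi_\rho(\mathbf x^\prime)\)

- \(\{\phi_\rho\}\) are chosen to form an orthonormal basis: \(\int d\mathbf x\, p(\mathbf x)\, \phi_\rho(\mathbf x) \phi_\gamma(\mathbf x) = \delta_{\rho\gamma}\)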

Kernel regression in feature space

- Expand the target and estimator functions in the kernel's basis, with features \(\psi_\rho(\mathbf x) = \sqrt{\lambda_\rho}\,\phi_\rho(\mathbf x)\):

\(f^\star(\mathbf x) = \sum_\rho \bar w_\rho \psi_\rho(\mathbf x)\)

\(f(\mathbf x) = \sum_\rho w_\rho \psi_\rho(\mathbf x)\)

- Then kernel regression can be written as

\(\min_{\mathbf w,\ |\!|\mathbf w|\!|<\infty} |\!|\Psi^t \mathbf w - \mathbf y|\!|^2 + \lambda |\!|\mathbf w|\!|^2\)

- And its solution is \(\mathbf w = \left(\Psi\Psi^t + \lambda\mathbb I\right)^{-1} \Psi \mathbf y\)

design matrix \(\Psi_{\rho,i}=\psi_\rho(\mathbf x_i)\)
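That the feature-space solution reproduces the kernel estimator \(\mathbf y^t (\mathbb K + \lambda\mathbb I)^{-1} \mathbf k(\mathbf x)\) can be checked numerically. The sketch below assumes a finite number `M` of features and replaces the actual \(\psi_\rho(\mathbf x_i)\) by random numbers, since the identity is purely algebraic; sizes and seed are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(1)
M, p, ridge = 50, 20, 0.1            # illustrative sizes: M features, p training points
Psi = rng.standard_normal((M, p))    # design matrix, Psi[rho, i] = psi_rho(x_i)
psi_x = rng.standard_normal(M)       # features psi_rho(x) of a single test point x
y = rng.standard_normal(p)           # labels y_i

# Feature space: w = (Psi Psi^t + ridge I)^{-1} Psi y, then f(x) = sum_rho w_rho psi_rho(x)
w = np.linalg.solve(Psi @ Psi.T + ridge * np.eye(M), Psi @ y)
f_feature = w @ psi_x

# Kernel form: K_ij = sum_rho psi_rho(x_i) psi_rho(x_j), k_i(x) = sum_rho psi_rho(x) psi_rho(x_i)
K = Psi.T @ Psi
k_x = Psi.T @ psi_x
f_kernel = y @ np.linalg.solve(K + ridge * np.eye(p), k_x)
```

The two predictions agree up to floating-point error, because \((\Psi\Psi^t + \lambda\mathbb I)^{-1}\Psi = \Psi(\Psi^t\Psi + \lambda\mathbb I)^{-1}\).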

e.g. Teacher = Gaussian:

Generalization error and spectral components

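- We can then derive \(E_g = \sum_\rho E_\rho\), with

\(E_\rho = \sum_\gamma \mathbf D_{\rho\gamma} \left\langle \mathbf G^2_{\gamma\rho} \right\rangle\)

where

\(\mathbf D = \Lambda^{-1/2} \left\langle \bar{\mathbf w} \bar{\mathbf w}^t \right\rangle \Lambda^{-1/2}\) (the target function is only here!)

\(\mathbf G = \left( \frac1\lambda \Phi\Phi^t + \Lambda^{-1} \right)^{-1}\), with \(\Phi_{\rho,i} = \phi_\rho(\mathbf x_i)\) and \(\Lambda = \mathrm{diag}(\lambda_\rho)\) (the data points are only here!)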
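The decomposition can be sanity-checked for a single training set and a single teacher. With \(\Psi = \Lambda^{1/2}\Phi\), the feature-space solution gives \(\mathbf w - \bar{\mathbf w} = -\Lambda^{-1/2}\mathbf G\Lambda^{-1/2}\bar{\mathbf w}\), where \(\mathbf G = (\frac1\lambda\Phi\Phi^t + \Lambda^{-1})^{-1}\), so \(\sum_\rho \lambda_\rho (w_\rho - \bar w_\rho)^2 = \mathrm{Tr}(\mathbf D\mathbf G^2)\) with \(\mathbf D = \Lambda^{-1/2}\bar{\mathbf w}\bar{\mathbf w}^t\Lambda^{-1/2}\). The sketch assumes a finite number of modes, an arbitrary power-law spectrum, and Gaussian random numbers standing in for \(\phi_\rho(\mathbf x_i)\).

```python
import numpy as np

rng = np.random.default_rng(2)
M, p, ridge = 30, 15, 0.5
lam = 1.0 / np.arange(1, M + 1)**2       # illustrative spectrum lambda_rho
Phi = rng.standard_normal((M, p))        # Phi[rho, i] = phi_rho(x_i), Gaussian stand-in
Psi = np.sqrt(lam)[:, None] * Phi        # psi_rho = sqrt(lambda_rho) * phi_rho
w_bar = rng.standard_normal(M)           # teacher weights
y = Psi.T @ w_bar                        # noiseless labels y_i = f*(x_i)

# Direct route: solve the regression, then E_g = sum_rho lambda_rho (w_rho - w_bar_rho)^2
w = np.linalg.solve(Psi @ Psi.T + ridge * np.eye(M), Psi @ y)
E_direct = np.sum(lam * (w - w_bar)**2)

# Spectral route: D carries the target, G carries the data
G = np.linalg.inv(Phi @ Phi.T / ridge + np.diag(1.0 / lam))
D = np.outer(w_bar, w_bar) / np.sqrt(np.outer(lam, lam))
E_rho = np.sum(D * (G @ G), axis=1)      # sum_gamma D[rho, gamma] (G^2)[gamma, rho]; G^2 is symmetric
E_from_G = E_rho.sum()
```

Only the total is compared here: for a single realization the per-mode terms `E_rho` need not match \(\lambda_\rho (w_\rho - \bar w_\rho)^2\) mode by mode.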

Approximation for \(\left\langle G^2 \right\rangle\)

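- \(\tilde{\mathbf G}(p,v) \equiv \left( \frac1\lambda \Phi\Phi^t + \Lambda^{-1} + v\mathbb I \right)^{-1}\), so that \(\mathbf G^2 = -\partial_v \tilde{\mathbf G}(p,v)\big|_{v=0}\) with \(\mathbf G = \tilde{\mathbf G}(p,0)\)

- Derive a recurrence equation for the addition of a \((p+1)\)-th point \(\mathbf x_{p+1}\), with features \(\boldsymbol\phi = (\phi_\rho(\mathbf x_{p+1}))_\rho\): \(\tilde{\mathbf G}(p+1,v) = \left( \tilde{\mathbf G}(p,v)^{-1} + \frac1\lambda \boldsymbol\phi\boldsymbol\phi^t \right)^{-1}\)

- Use the Sherman-Morrison formula (the rank-one case of Woodbury inversion): \(\tilde{\mathbf G}(p+1,v) = \tilde{\mathbf G}(p,v) - \frac{\tilde{\mathbf G}(p,v)\, \boldsymbol\phi\boldsymbol\phi^t\, \tilde{\mathbf G}(p,v)}{\lambda + \boldsymbol\phi^t \tilde{\mathbf G}(p,v) \boldsymbol\phi}\)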
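The rank-one update can be verified numerically. Adding a point appends one column \(\boldsymbol\phi\) to \(\Phi\), so the inverse of \(\tilde{\mathbf G}\) gains a term \(\boldsymbol\phi\boldsymbol\phi^t/\lambda\), and Sherman-Morrison gives \(\tilde{\mathbf G}(p+1,v) = \tilde{\mathbf G} - \tilde{\mathbf G}\boldsymbol\phi\boldsymbol\phi^t\tilde{\mathbf G}/(\lambda + \boldsymbol\phi^t\tilde{\mathbf G}\boldsymbol\phi)\). The sketch below assumes a finite number of modes and random features; the helper name `G_tilde` and all sizes are made up for the example.

```python
import numpy as np

def G_tilde(Phi, lam, ridge, v):
    # (Phi Phi^t / ridge + Lambda^{-1} + v I)^{-1} for a finite number of modes
    M = len(lam)
    return np.linalg.inv(Phi @ Phi.T / ridge + np.diag(1.0 / lam) + v * np.eye(M))

rng = np.random.default_rng(3)
M, p, ridge, v = 20, 10, 0.3, 0.05
lam = 1.0 / np.arange(1, M + 1)          # illustrative spectrum
Phi = rng.standard_normal((M, p))        # features of the first p points
phi_new = rng.standard_normal(M)         # features of the (p+1)-th point

G_p = G_tilde(Phi, lam, ridge, v)

# Direct recomputation with p + 1 points
G_direct = G_tilde(np.column_stack([Phi, phi_new]), lam, ridge, v)

# Sherman-Morrison rank-one update of the p-point matrix
G_phi = G_p @ phi_new
G_update = G_p - np.outer(G_phi, G_phi) / (ridge + phi_new @ G_phi)
```

The update costs \(O(M^2)\) operations instead of a fresh \(O(M^3)\) inversion, but its role in the derivation is to isolate the dependence on the new point.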

Approximation for \(\left\langle G^2 \right\rangle\)

- First approximation: approximate the second term as

- Second approximation: treat \(p\) as continuous, which turns the recurrence into a PDE

PDE solution

- This linear PDE can be solved exactly (with the method of characteristics)

- Then the error component \(E_\rho\) is

Note: the same result is found with replica calculations!
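The resulting learning curves are easy to evaluate numerically. The sketch below assumes the closed form as it appears in the paper, transcribed here from memory and worth checking against the original: \(E_\rho = \frac{\langle\bar w_\rho^2\rangle}{\lambda_\rho} \left(\frac1{\lambda_\rho} + \frac{p}{\lambda + t}\right)^{-2} \left(1 - \frac{p\,\gamma}{(\lambda+t)^2}\right)^{-1}\), with \(t = \sum_\rho \left(\frac1{\lambda_\rho} + \frac{p}{\lambda+t}\right)^{-1}\) solved self-consistently and \(\gamma = \sum_\rho \left(\frac1{\lambda_\rho} + \frac{p}{\lambda+t}\right)^{-2}\). The function names, the power-law spectrum, the flat target power, and the use of bisection (with \(\lambda > 0\)) are choices made for this example.

```python
import numpy as np

def solve_t(p, lam, ridge, iters=200):
    # Bisection for t = sum_rho 1 / (1/lambda_rho + p / (ridge + t)) on [0, sum(lam)].
    lo, hi = 0.0, float(np.sum(lam))
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        g = np.sum(1.0 / (1.0 / lam + p / (ridge + mid)))
        if mid < g:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def mode_errors(p, lam, w2, ridge):
    # Assumed closed form for E_rho(p); w2[rho] stands for <w_bar_rho^2>, and ridge > 0.
    t = solve_t(p, lam, ridge)
    kappa = ridge + t
    inv = 1.0 / (1.0 / lam + p / kappa)
    gamma = np.sum(inv**2)
    return (w2 / lam) * inv**2 / (1.0 - p * gamma / kappa**2)

lam = 1.0 / np.arange(1, 201)**2   # illustrative power-law spectrum, decreasing in rho
w2 = np.ones_like(lam)             # flat target power (assumption)
ridge = 0.1

E0 = mode_errors(0, lam, w2, ridge)      # p = 0: E_rho = <w_bar_rho^2> lambda_rho
E100 = mode_errors(100, lam, w2, ridge)
ratio = E100 / E0                        # normalized error of each mode at p = 100
```

In this example `ratio` grows with the mode index, that is, modes with larger \(\lambda_\rho\) have lost a larger fraction of their initial error at the same \(p\).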

Comments on the result

- The effect of the target function is simply a (mode-dependent) prefactor \(\left\langle\bar w_\rho^2\right\rangle\)

- Ratio between two modes:

- The error is large if the target function puts a lot of weight on small \(\lambda_\rho\) modes

Small \(p\):

Large \(p\):

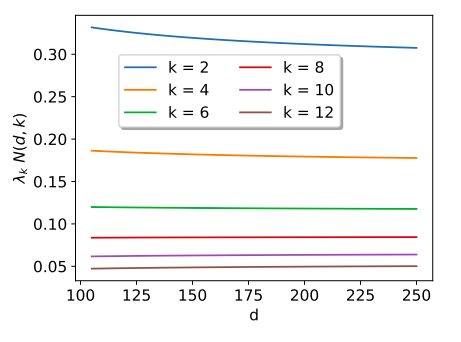

Dot-product kernels in \(d\to\infty\)

- We consider now \(K(\mathbf x,\mathbf x^\prime) = K(\mathbf x\cdot\mathbf x^\prime)\), \(\mathbf x\in\mathbb S^{d-1}\)

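- Eigenfunctions are spherical harmonics \(Y_{km}\), and each eigenvalue \(\lambda_k\) is \(N(d,k)\)-fold degenerate: \(K(\mathbf x\cdot\mathbf x^\prime) = \sum_k \lambda_k \sum_{m=1}^{N(d,k)} Y_{km}(\mathbf x) Y_{km}(\mathbf x^\prime)\)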

e.g. NTK

(everything I say next could be derived for translation-invariant kernels as well)

for \(d\to\infty\), \(N(d,k)\sim d^k\)

and \(\lambda_k \sim N(d,k)^{-1} \sim d^{-k}\)
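The scaling \(N(d,k)\sim d^k\) can be made concrete with the standard count of degree-\(k\) spherical harmonics on \(\mathbb S^{d-1}\), \(N(d,k) = \frac{2k+d-2}{k}\binom{k+d-3}{k-1}\) for \(k\ge1\) and \(N(d,0)=1\). The short sketch below (the helper name `degeneracy` is made up for the example) evaluates it exactly and compares it with \(d^k/k!\), the leading behaviour at large \(d\) and fixed \(k\).

```python
from math import comb, factorial

def degeneracy(d, k):
    # Number N(d, k) of linearly independent degree-k spherical harmonics on S^{d-1}.
    if k == 0:
        return 1
    return (2 * k + d - 2) * comb(k + d - 3, k - 1) // k

# Ratio N(d, k) * k! / d^k for k = 0..3; it approaches 1 as d grows at fixed k.
ratios = {d: [degeneracy(d, k) * factorial(k) / d**k for k in range(4)]
          for d in (10, 100, 1000)}
```

For \(d=3\) the function returns the familiar \(2k+1\), and for any \(d\) it gives \(N(d,1)=d\).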

NTK

Dot-product kernels in \(d\to\infty\)

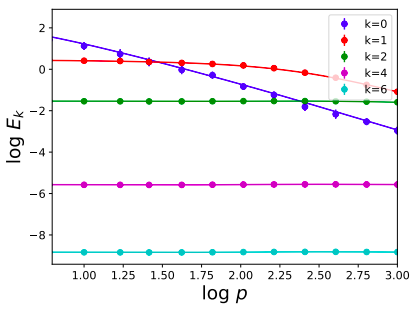

- Learning proceeds by stages. Take \(p = \alpha d^\ell\):

- Modes with larger \(\lambda_k\) are learned earlier!

Numerical experiments

Three settings are considered:

- Kernel Teacher-Student with 4-layer NTK kernels (for both)

- Finite-width NNs learning pure modes

- Finite-width Teacher-Student 2-layer NNs

kernel regression with \(K^\mathrm{NTK}\)

\(\to\) learn with NNs (4 layers h=500, 2 layers h=10000)

Note: this contains several spherical harmonics

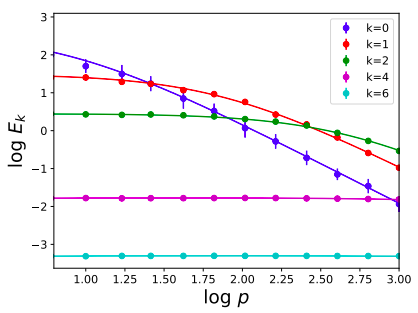

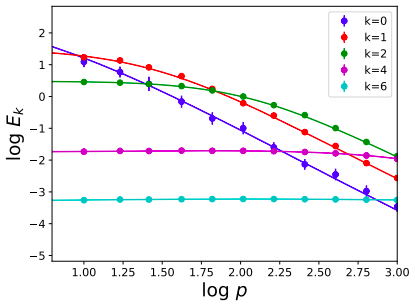

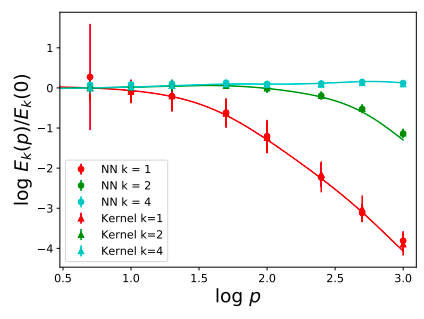

Kernel regression with 4-layer NTK kernel

\(d=10,\ \lambda=5\)

\(d=10,\ \lambda=0\) ridgeless

\(d=100,\ \lambda=0\) ridgeless

\(E_k = \sum_{m=1}^{N(d,k)} E_{km} = N(d,k) E_{k,1}\)

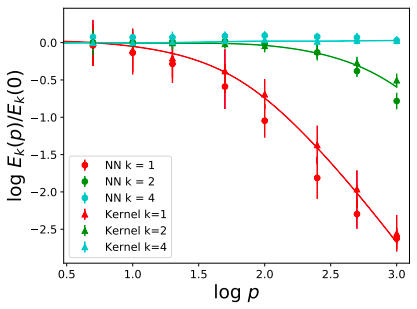

Pure \(\lambda_k\) modes with NNs

2 layers, width 10000

4 layers, width 500

\(f^\star\) has only the \(\textcolor{red}{k}\) mode

\(d=30\)

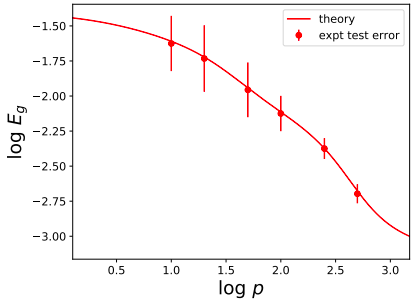

Teacher-Student 2-layer NNs

\(d=25\), width 8000

Spectrum Dependent Learning Curves in Kernel Regression and Wide Neural Networks

By Stefano Spigler
