In-browser AI apps with Gradio and Transformers

FEDAY 2024

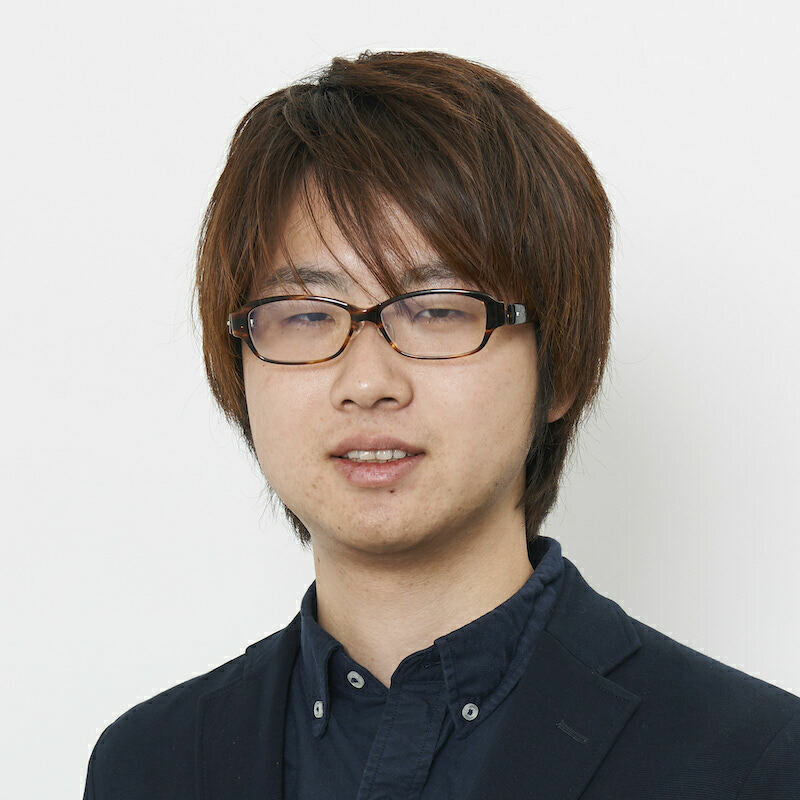

Yuichiro Tachibana, ML Developer Advocate @ 🤗


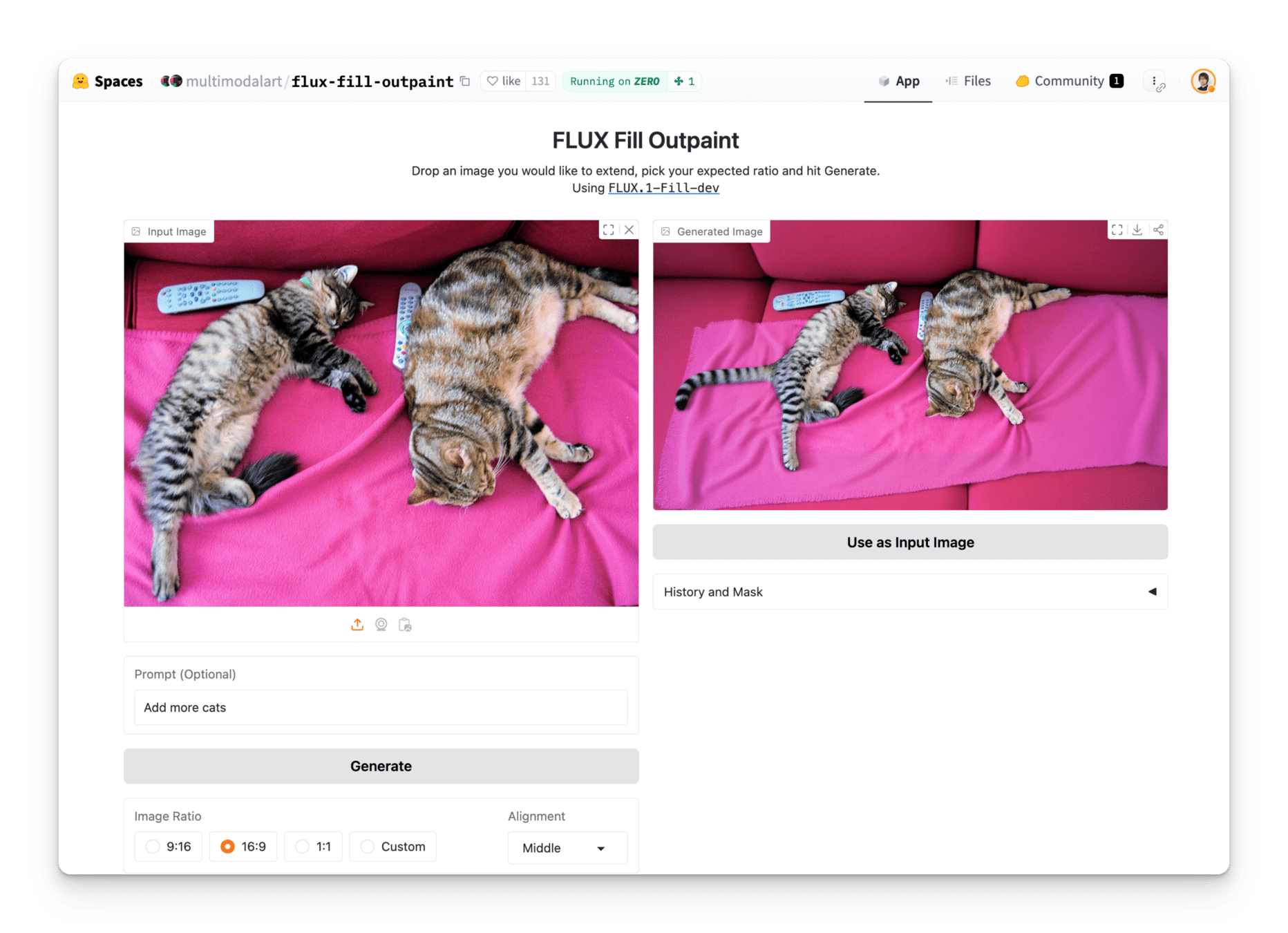

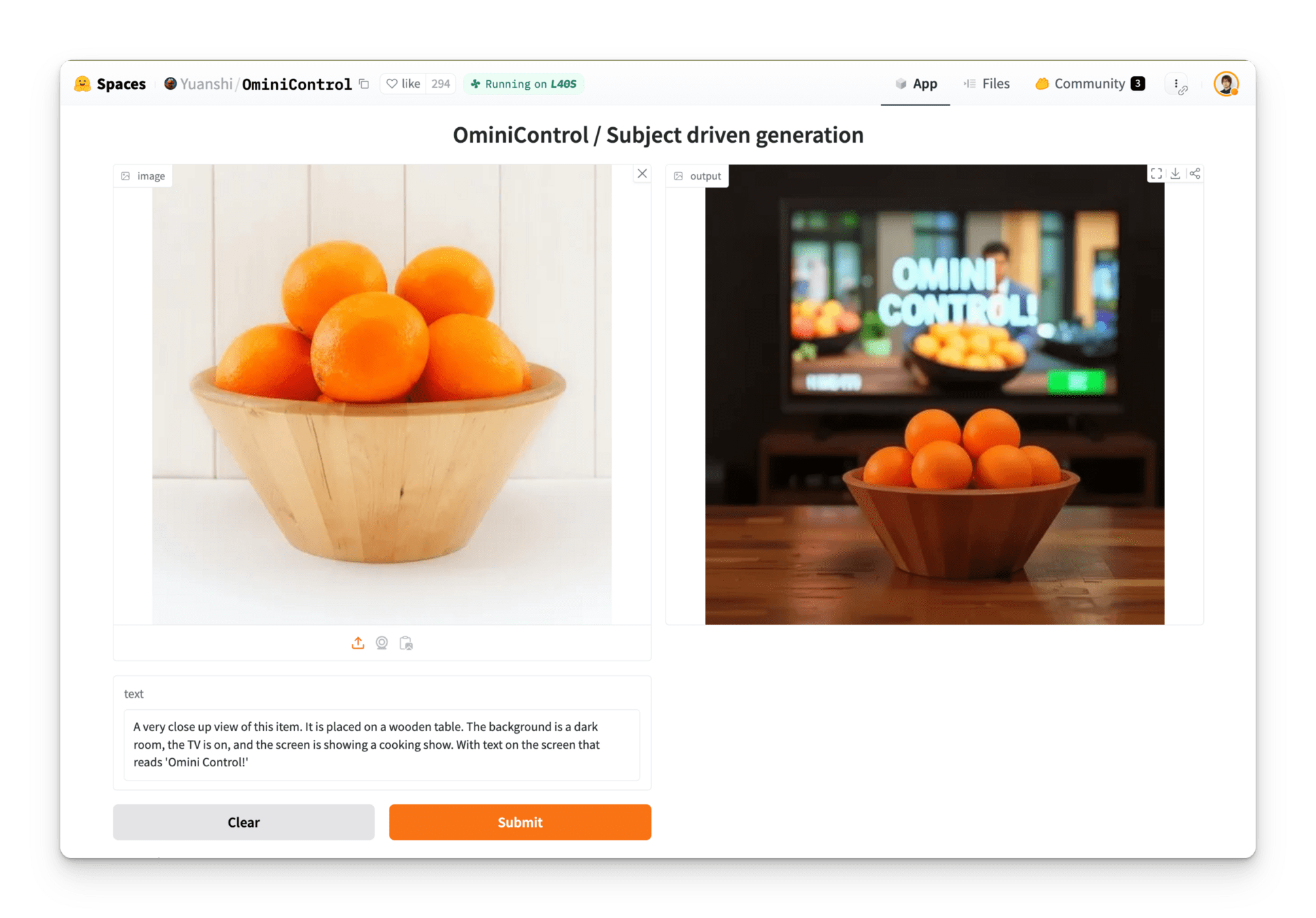

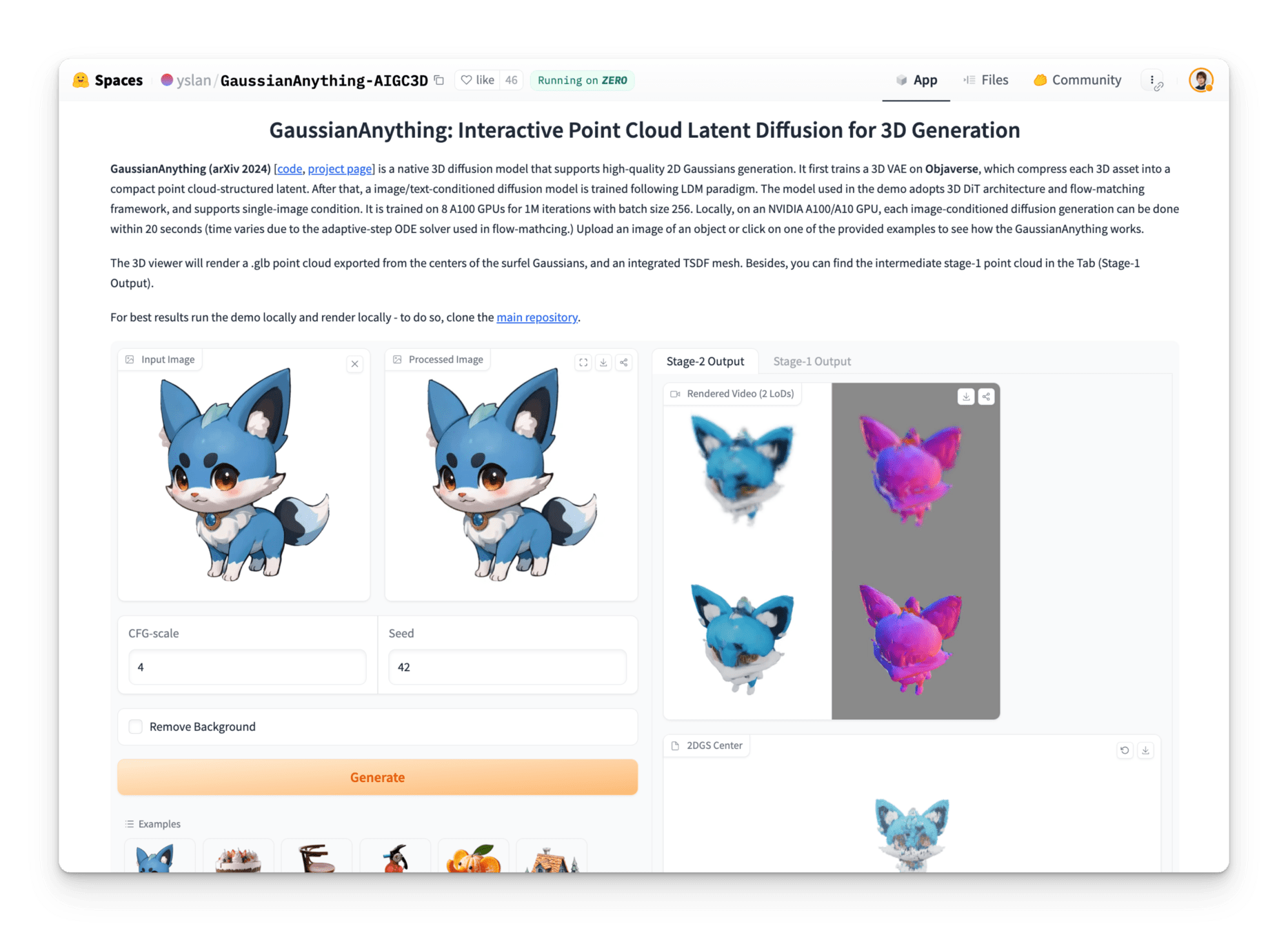

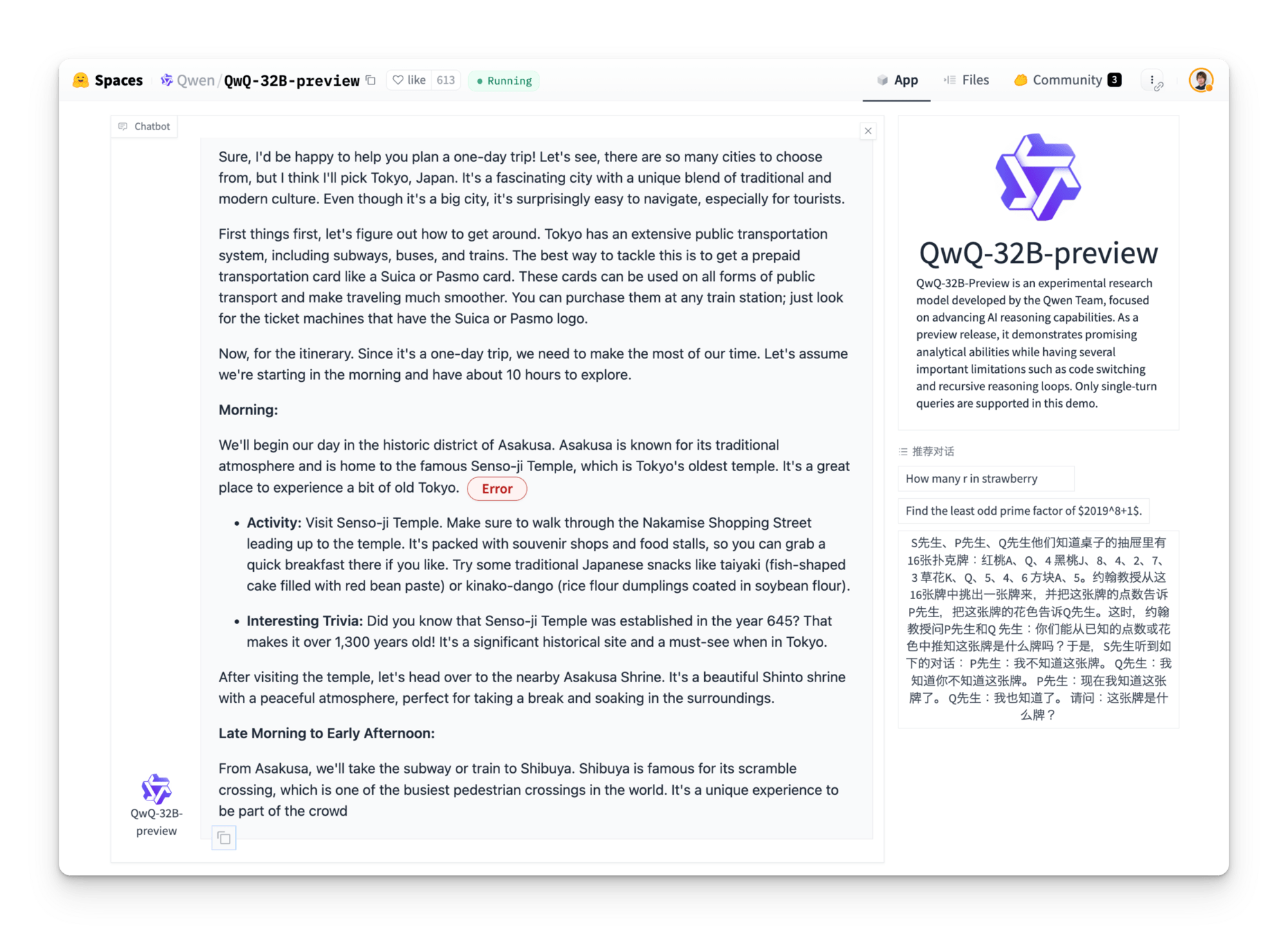

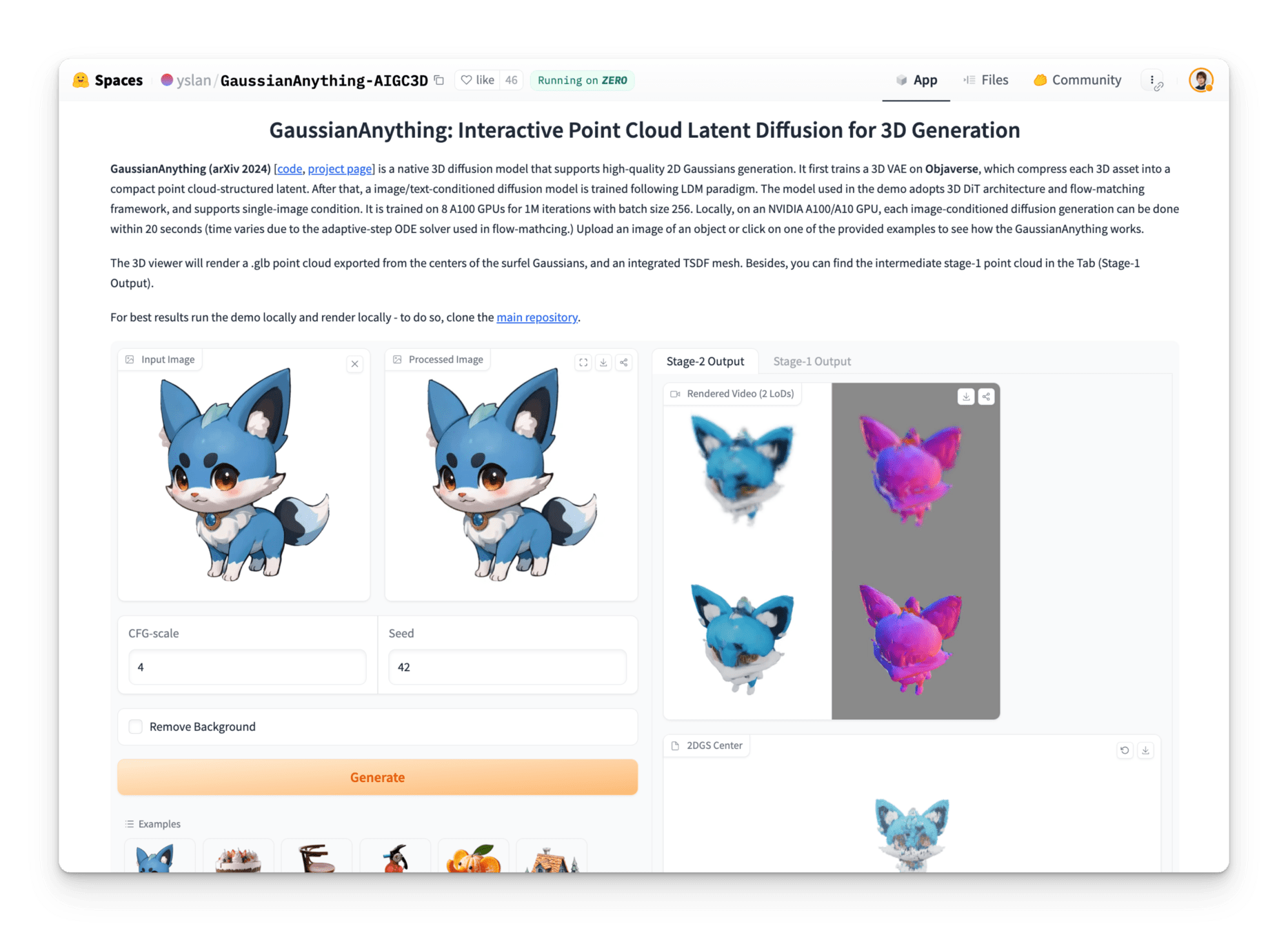

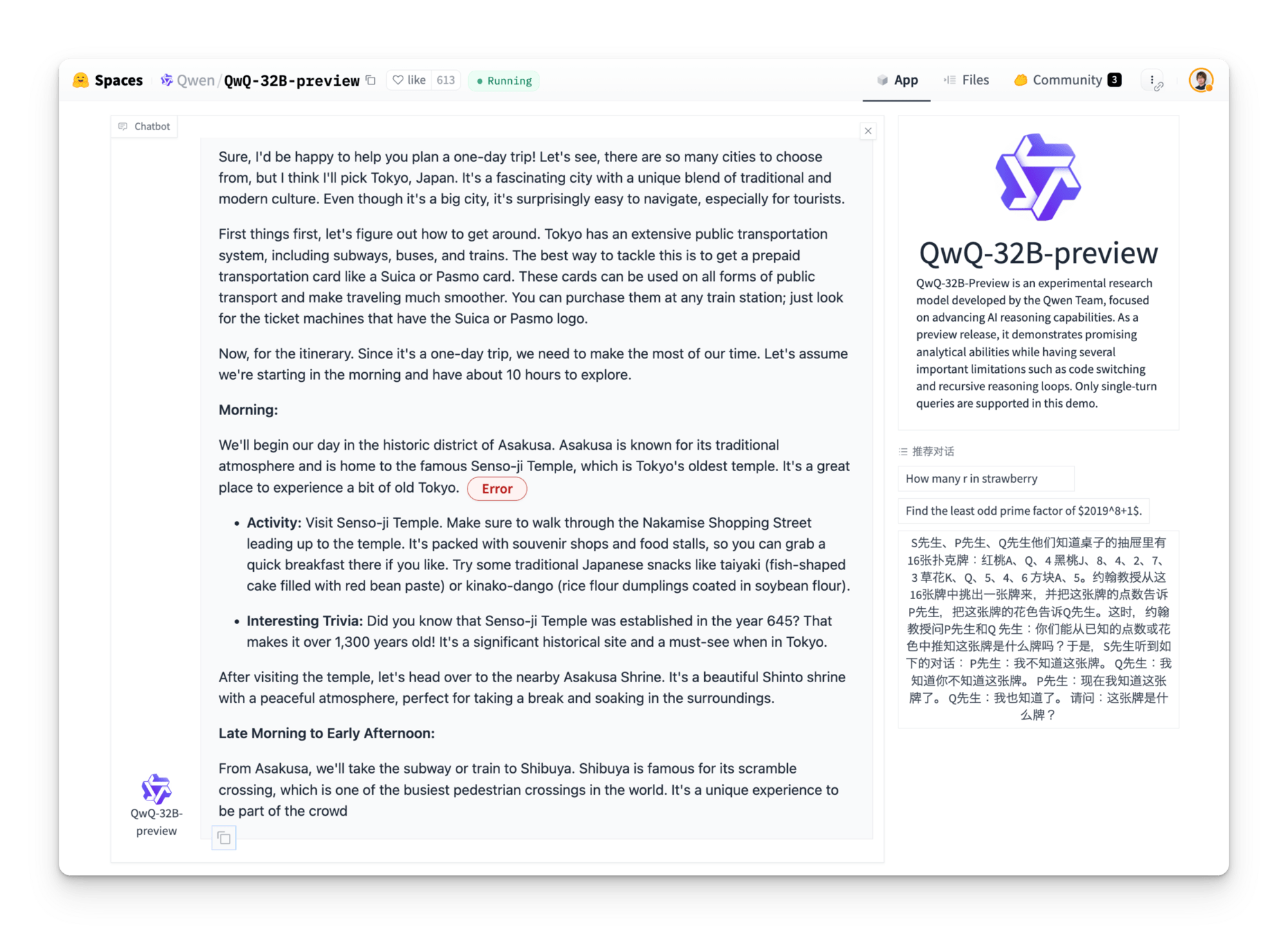

AI apps

AI devs

AI devs

AI devs

AI devs


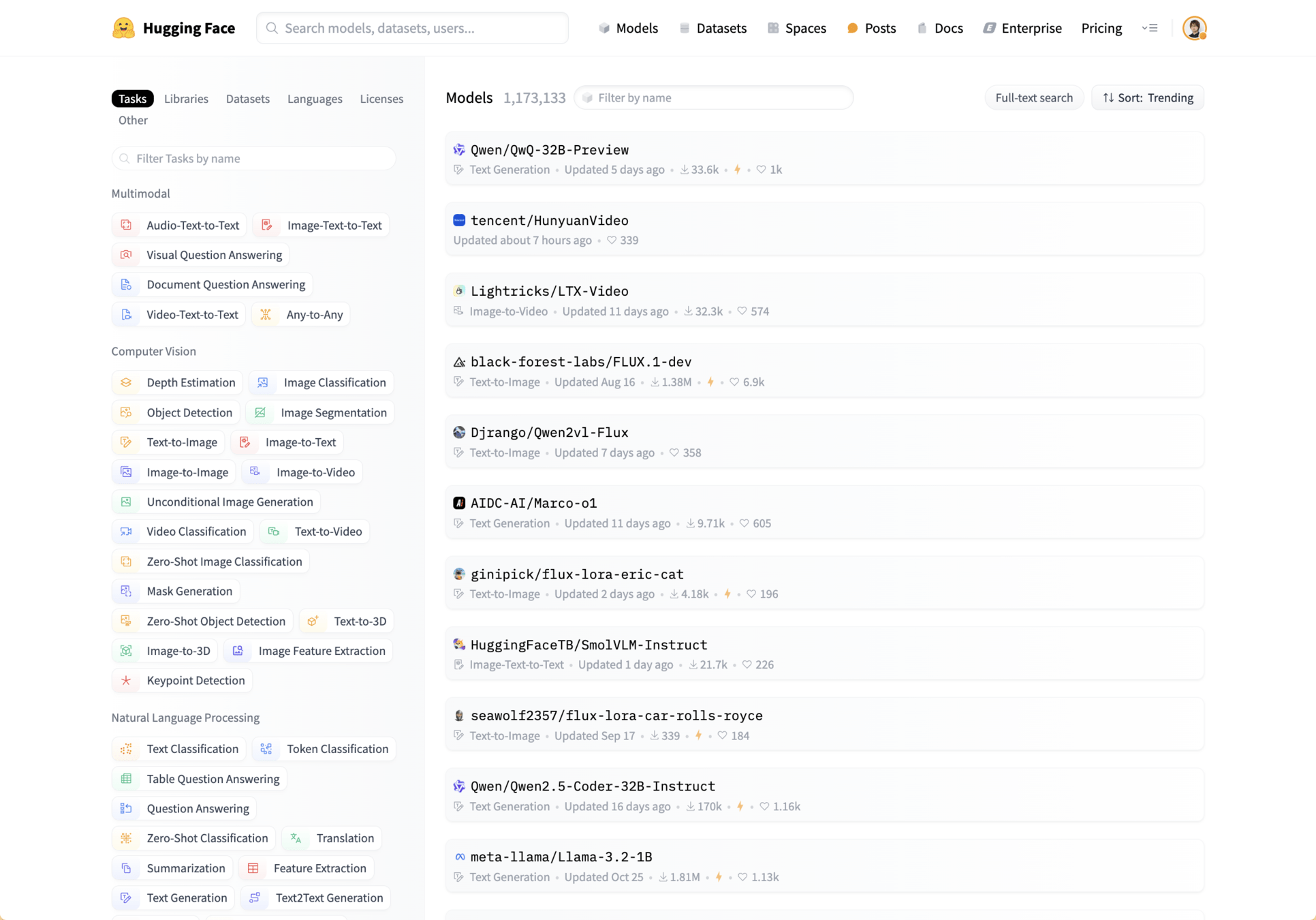

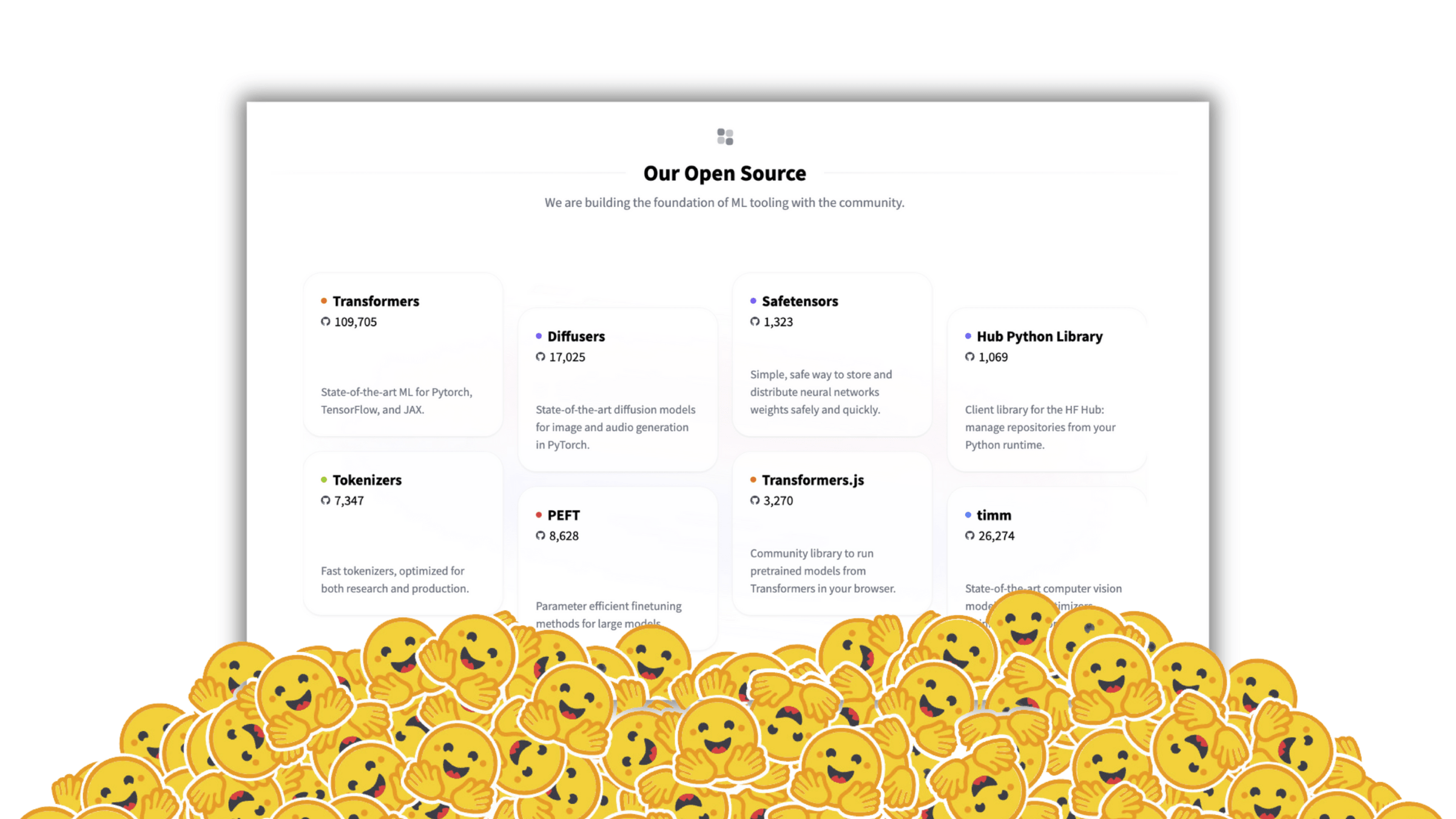

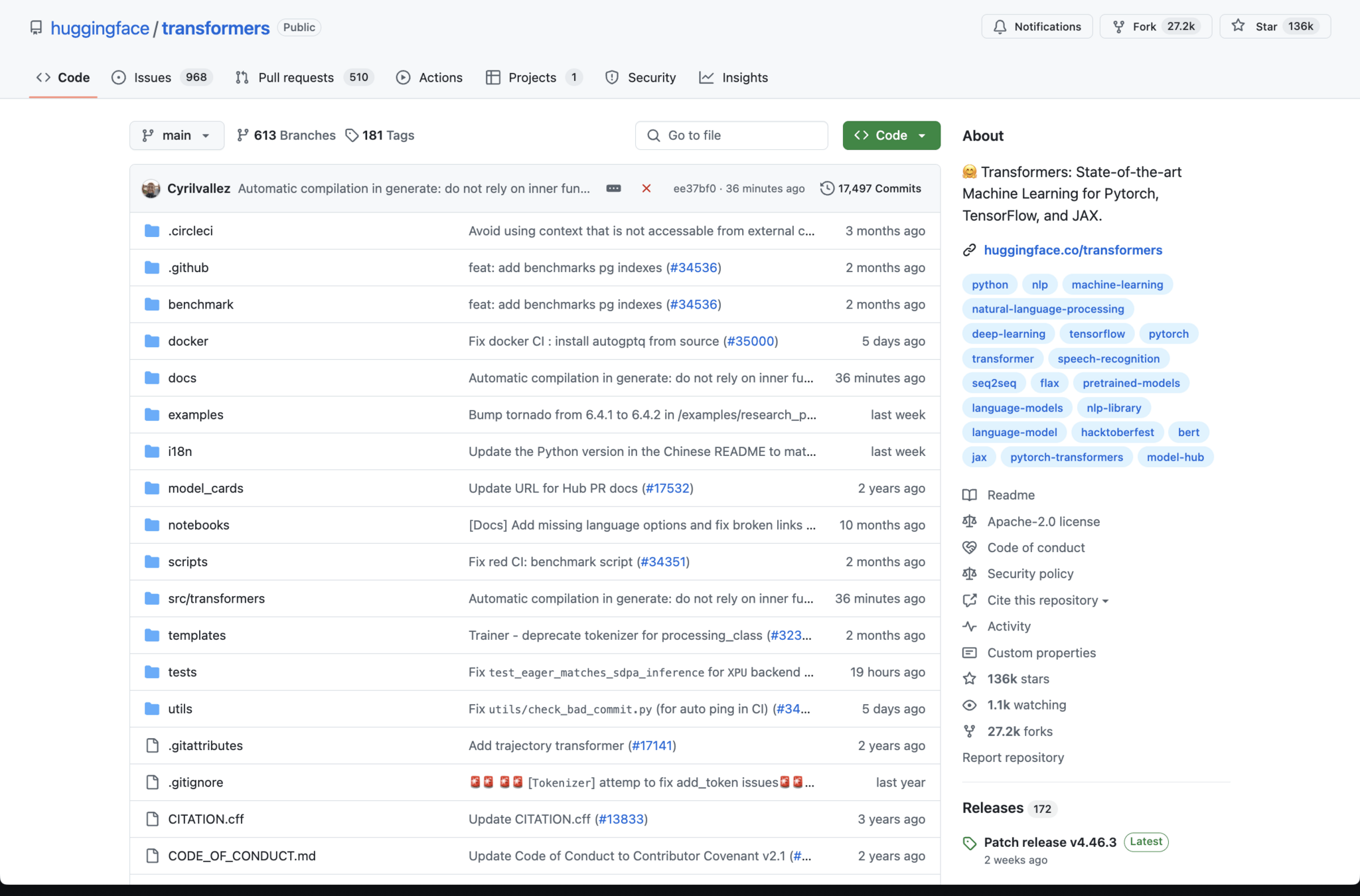

Transformers provides APIs and tools to easily download and train state-of-the-art pretrained models.

>>> from transformers import pipeline

>>> classifier = pipeline("sentiment-analysis")

>>> classifier("We are very happy to show you the 🤗 Transformers library.")

[{'label': 'POSITIVE', 'score': 0.9998}]

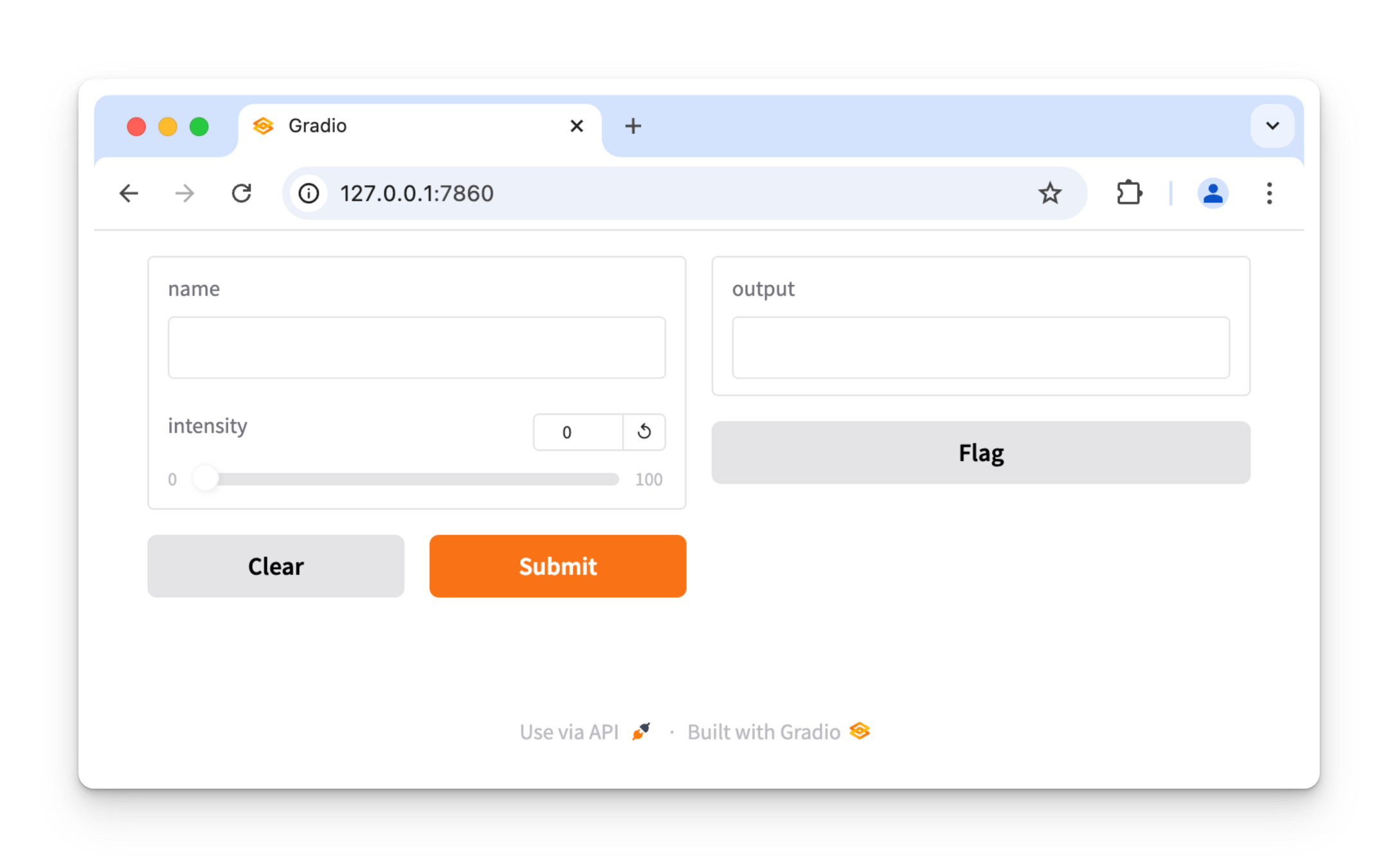

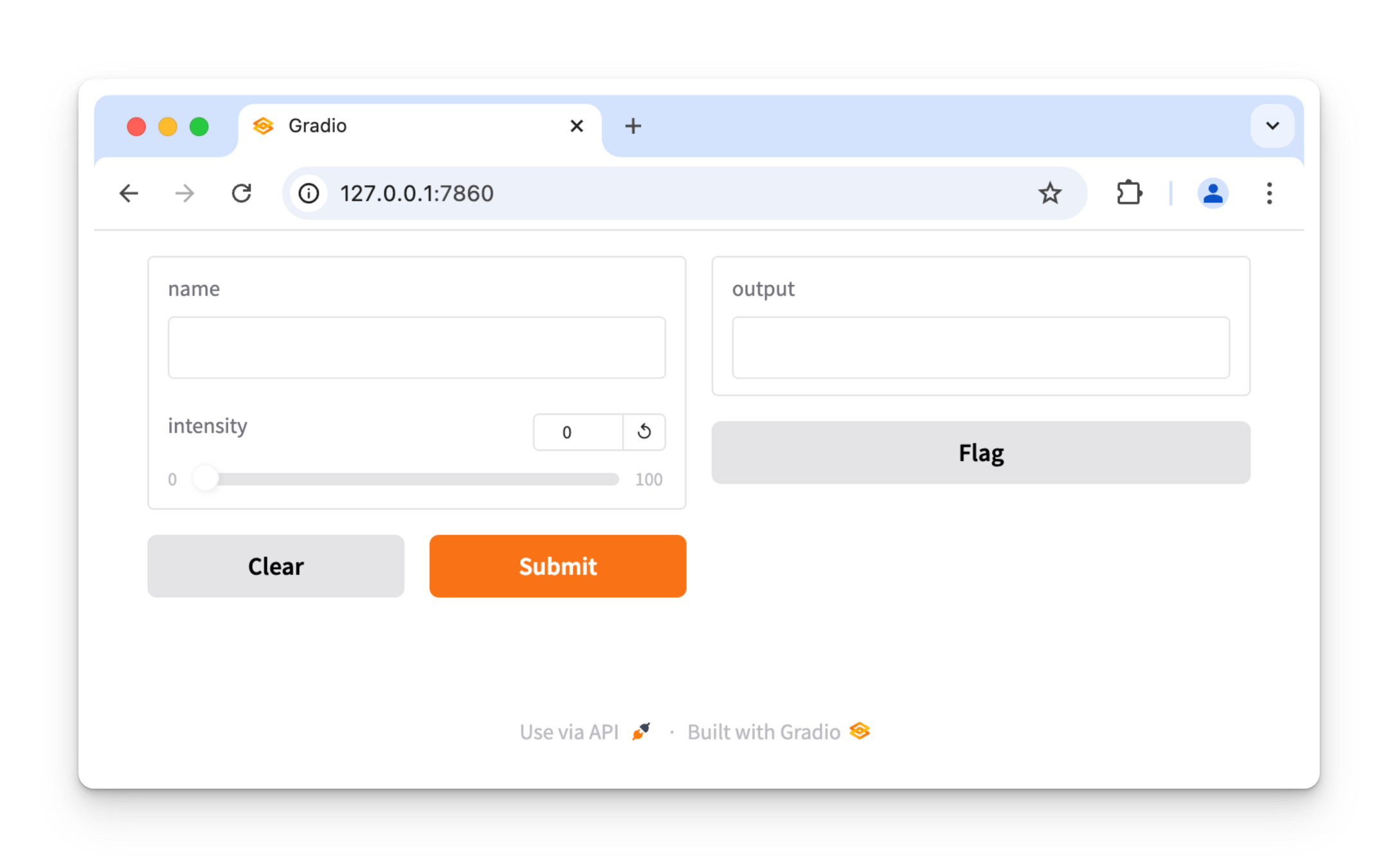

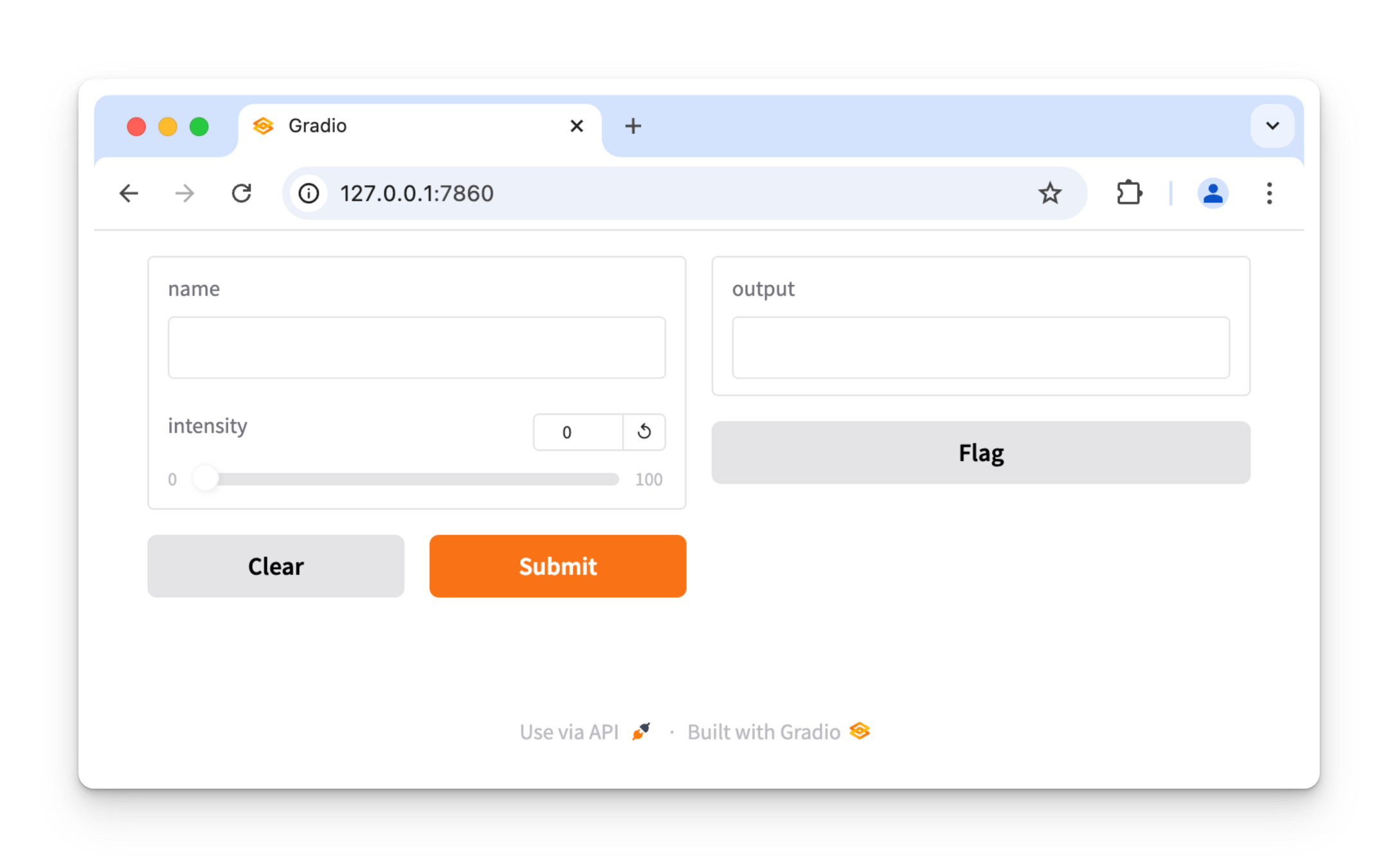

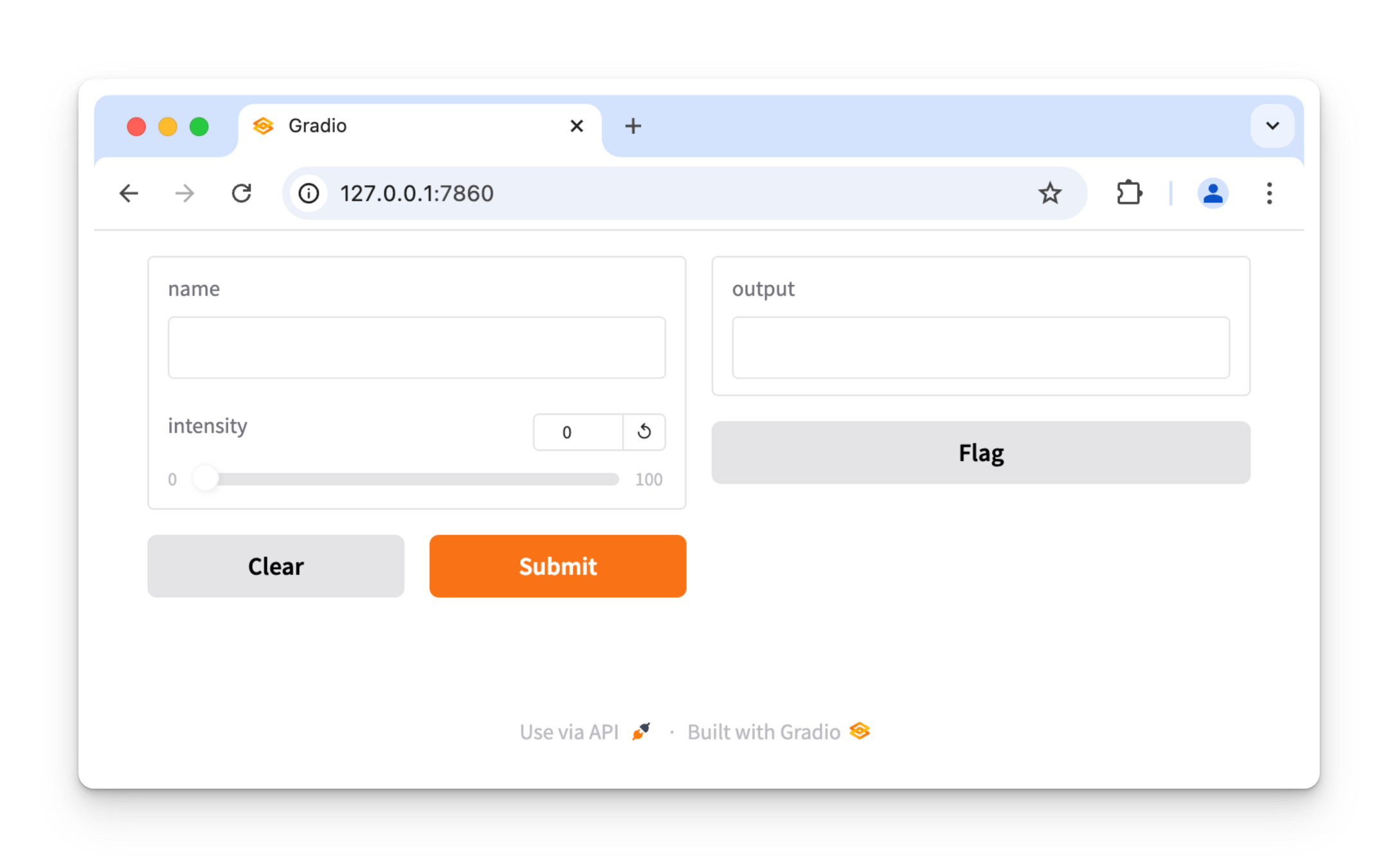

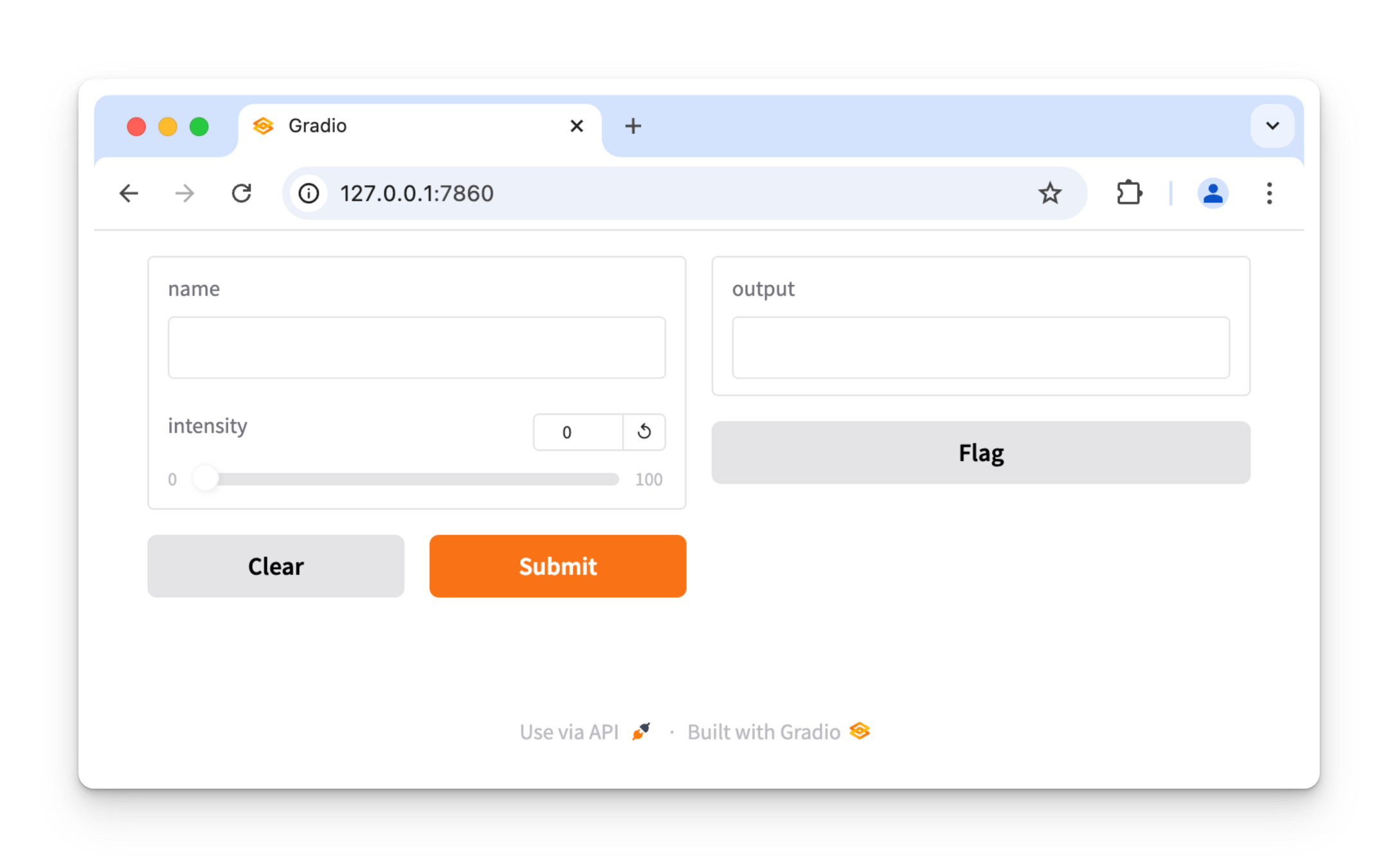

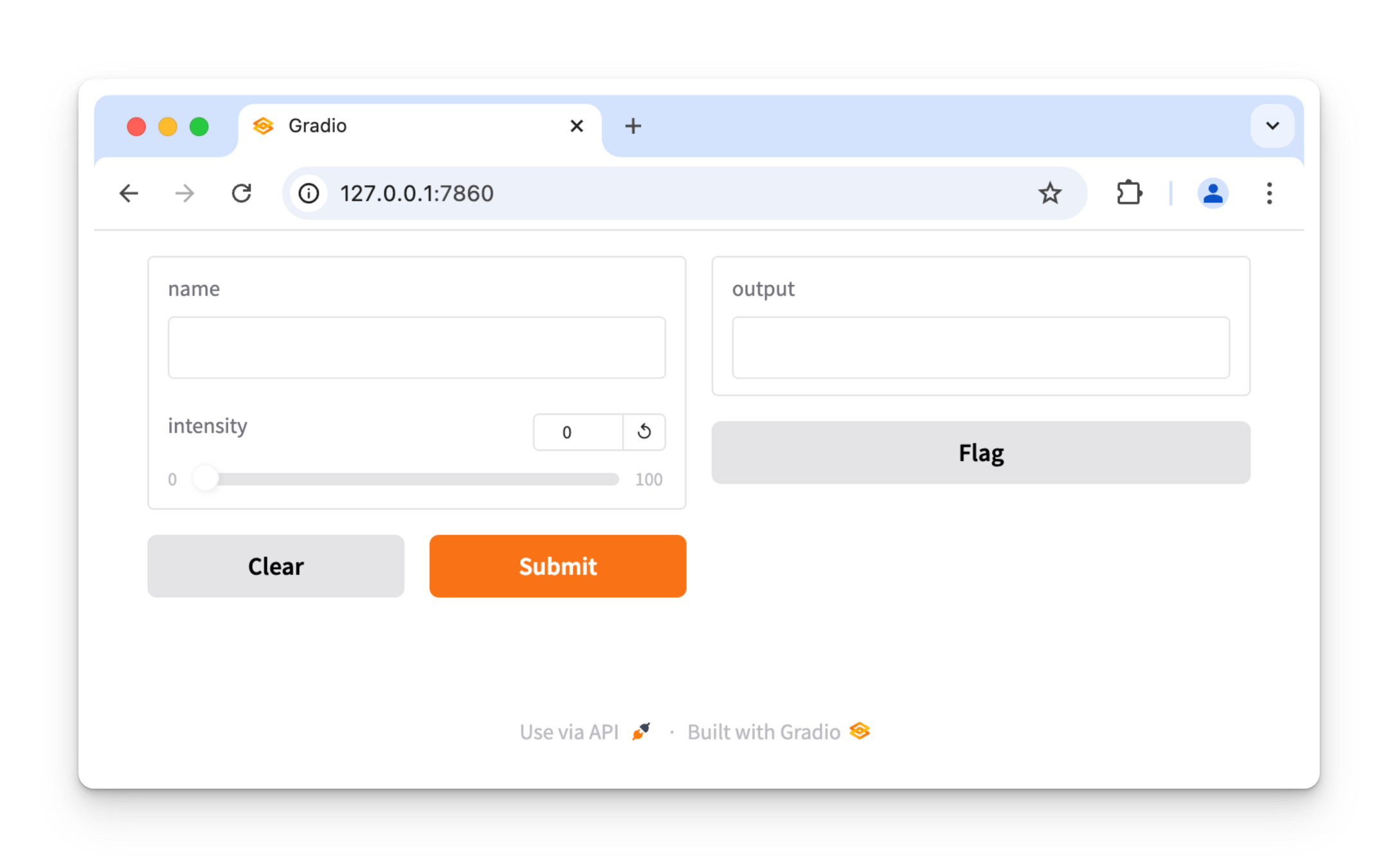

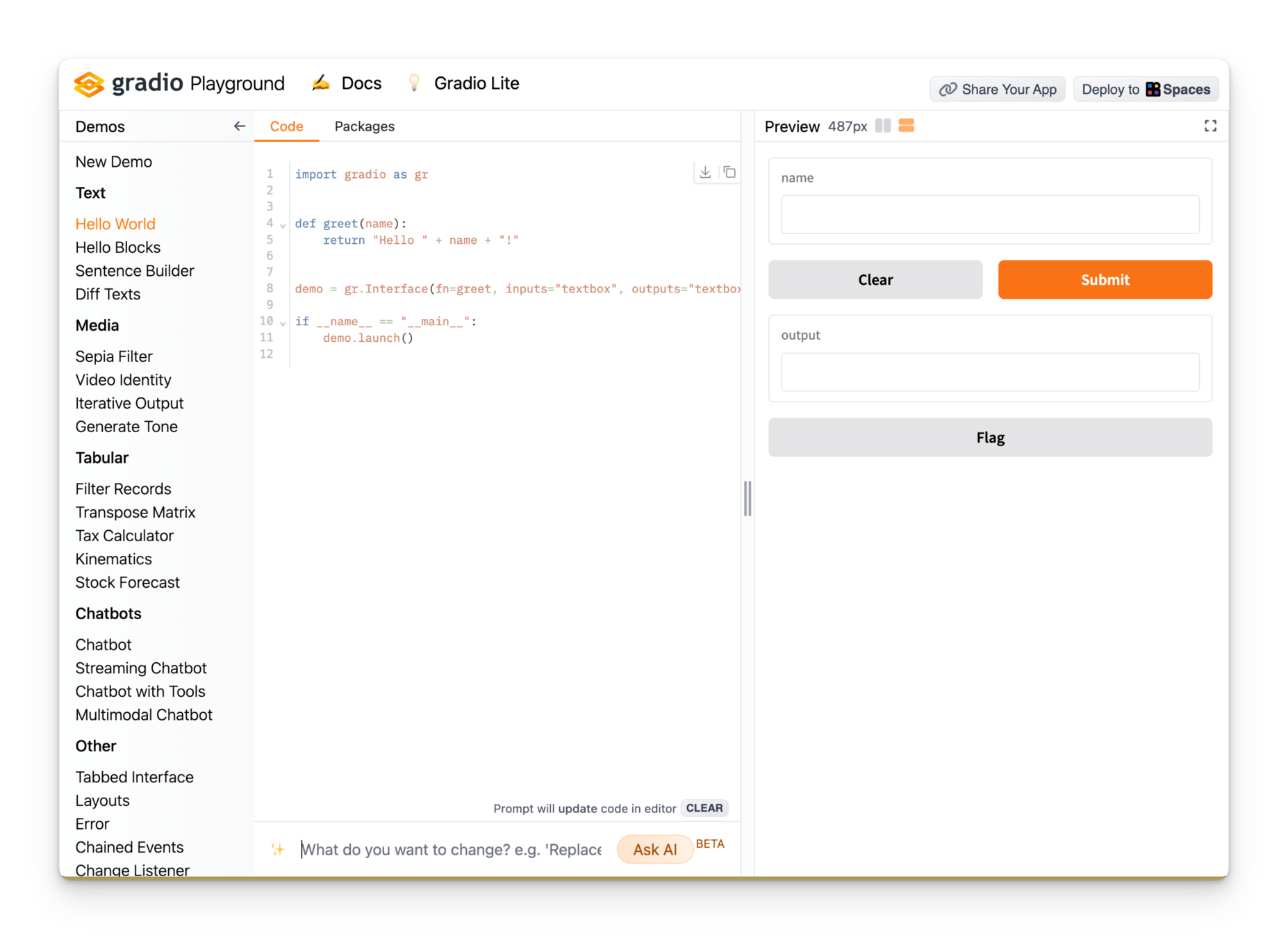

import gradio as gr

def greet(name, intensity):
    return "Hello, " + name + "!" * int(intensity)

demo = gr.Interface(
    fn=greet,
    inputs=["text", "slider"],
    outputs=["text"],
)

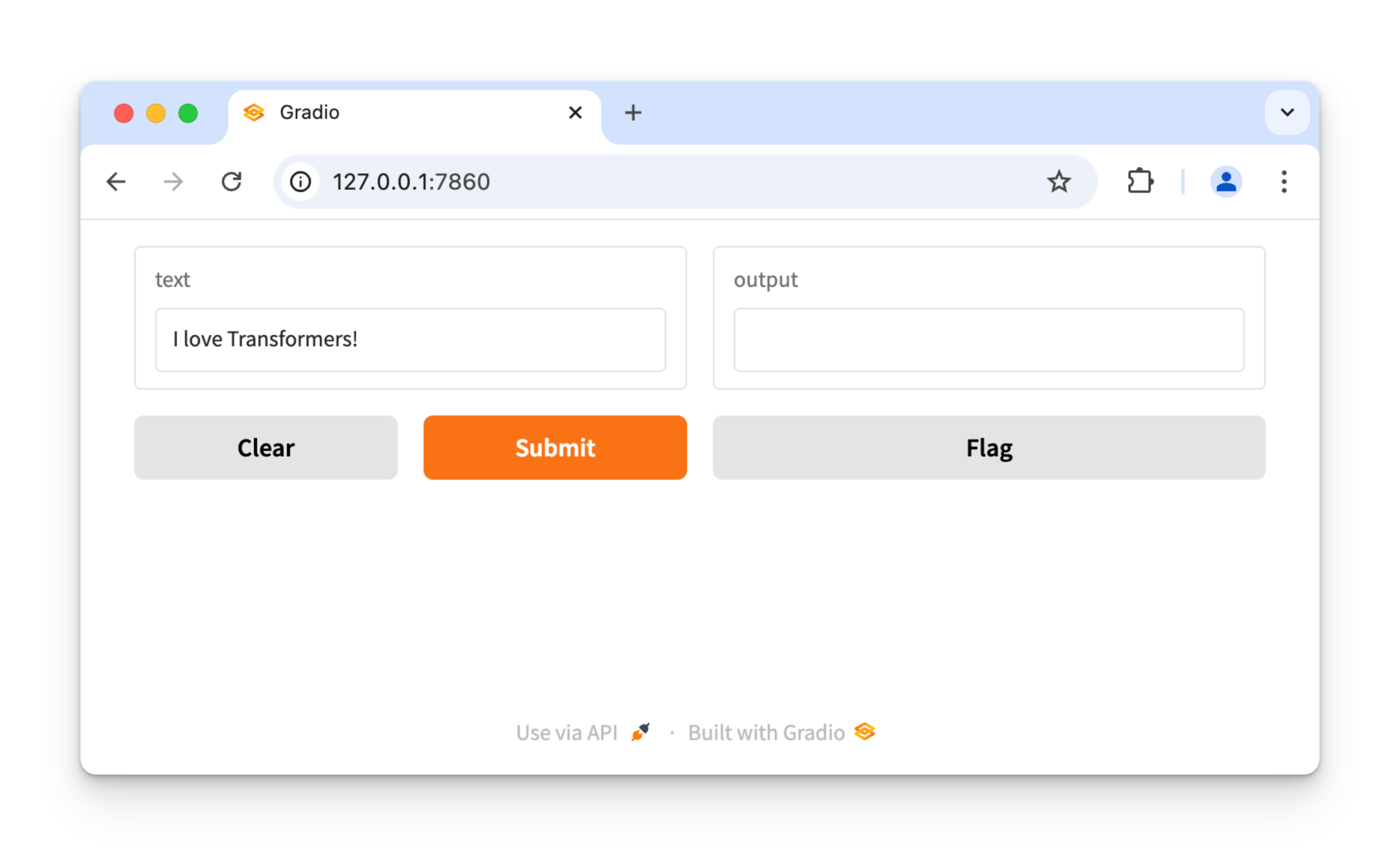

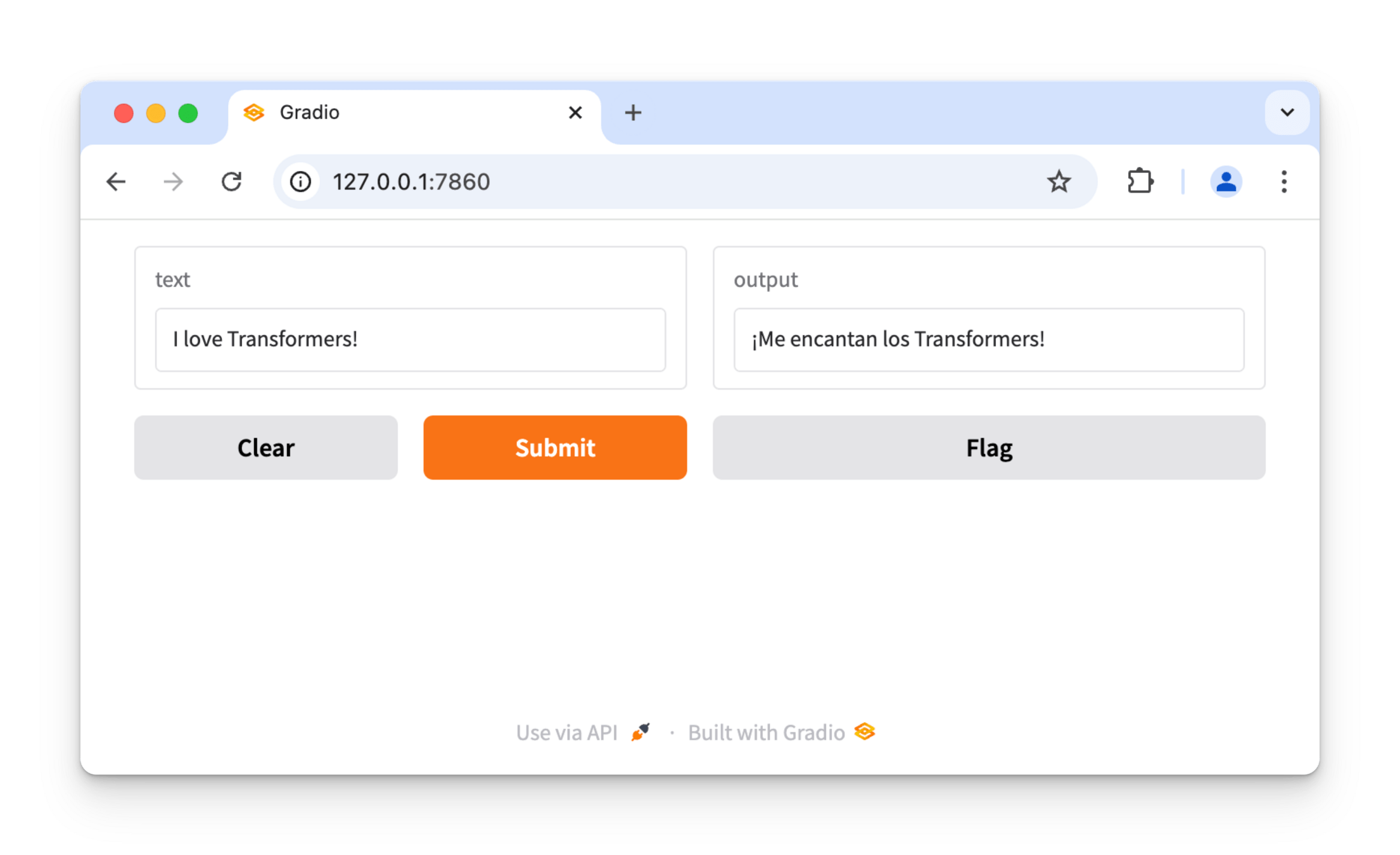

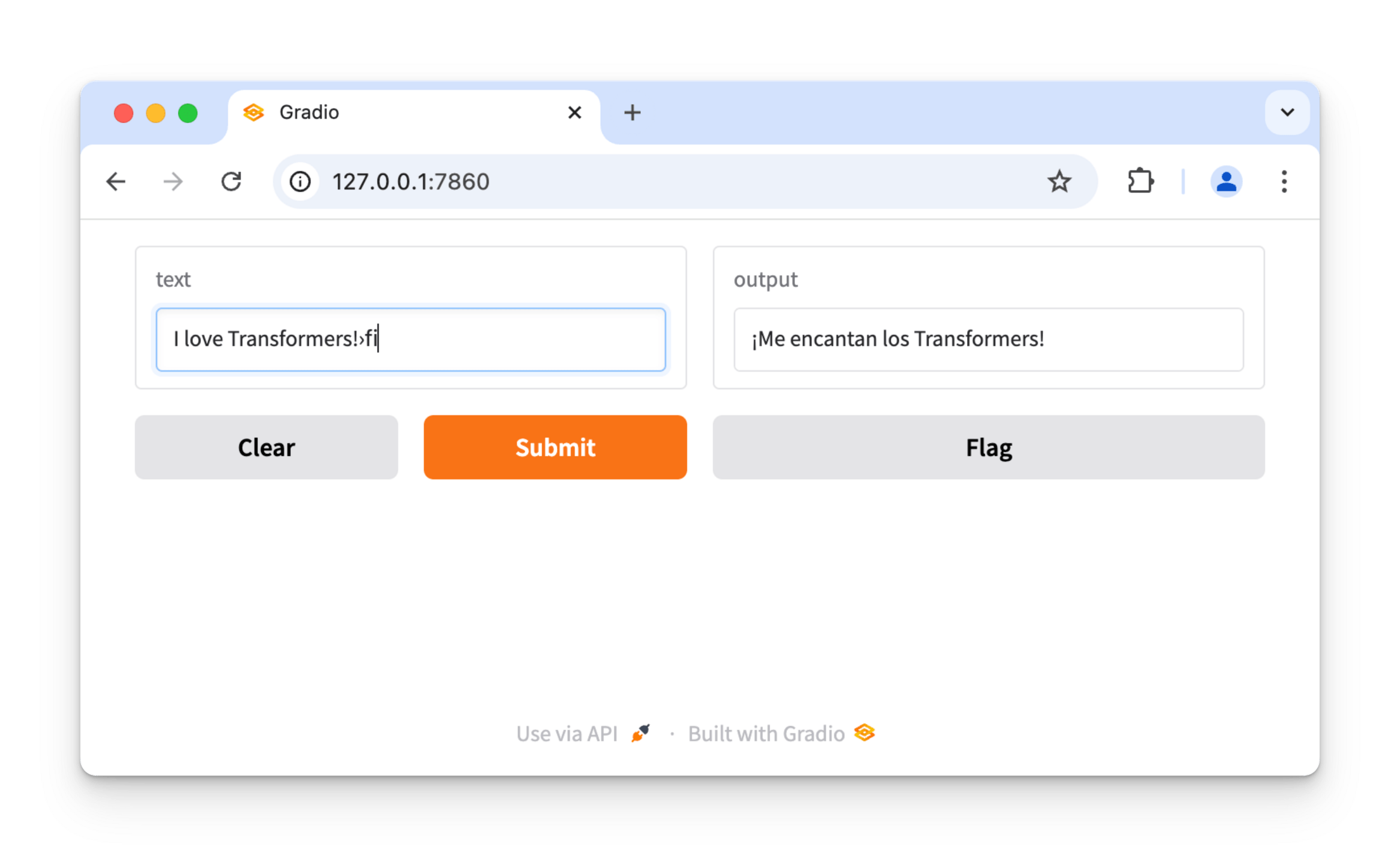

demo.launch()

Gradio is an open-source Python package that allows you to quickly build a demo or web application for your machine learning model, API, or any arbitrary Python function.
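As a quick check of what Gradio wraps here, the `greet` function from the slide can be called directly as plain Python. This is only a sketch: it assumes the slider value arrives as a float, which would explain the `int(intensity)` cast, and the sample arguments are made up.

```python
def greet(name, intensity):
    # "!" is repeated `intensity` times; int() handles a float slider value.
    return "Hello, " + name + "!" * int(intensity)

# Call the function the same way the UI would, with a text and a number.
message = greet("FEDAY", 3.0)
print(message)
```

Because the function has no dependency on Gradio, it can be tested on its own before being handed to `gr.Interface`.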

🤝

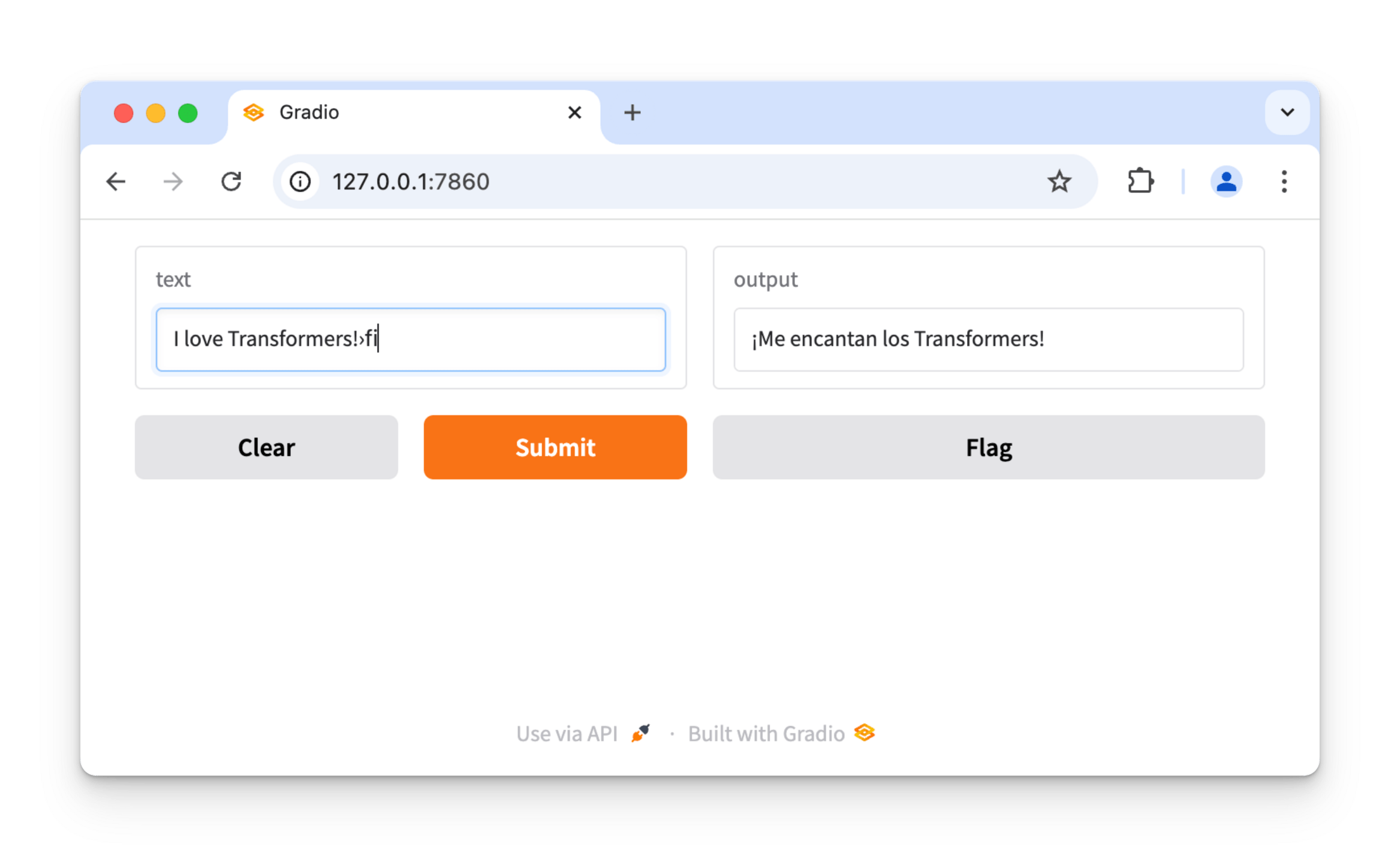

import gradio as gr
from transformers import pipeline

pipe = pipeline("translation", model="Helsinki-NLP/opus-mt-en-es")

def predict(text):
    return pipe(text)[0]["translation_text"]

demo = gr.Interface(
    fn=predict,
    inputs='text',
    outputs='text',
)

demo.launch()


[Diagram: Server, with Python runtime, Web server, and AI inference]

💡

🤝

Quick & Easy

Web-based AI apps

Python at FEDAY? ❓

Python is a central part of the AI/ML ecosystem.


In-browser AI apps

Web-based applications that use machine learning models to perform tasks like text analysis, image processing, or speech recognition entirely within the user's browser.

a.k.a. frontend-only AI apps

Why in-browser?

- Privacy

- Low latency

- Offline capability

- Scalability without servers

- Low cost

Light-weight models



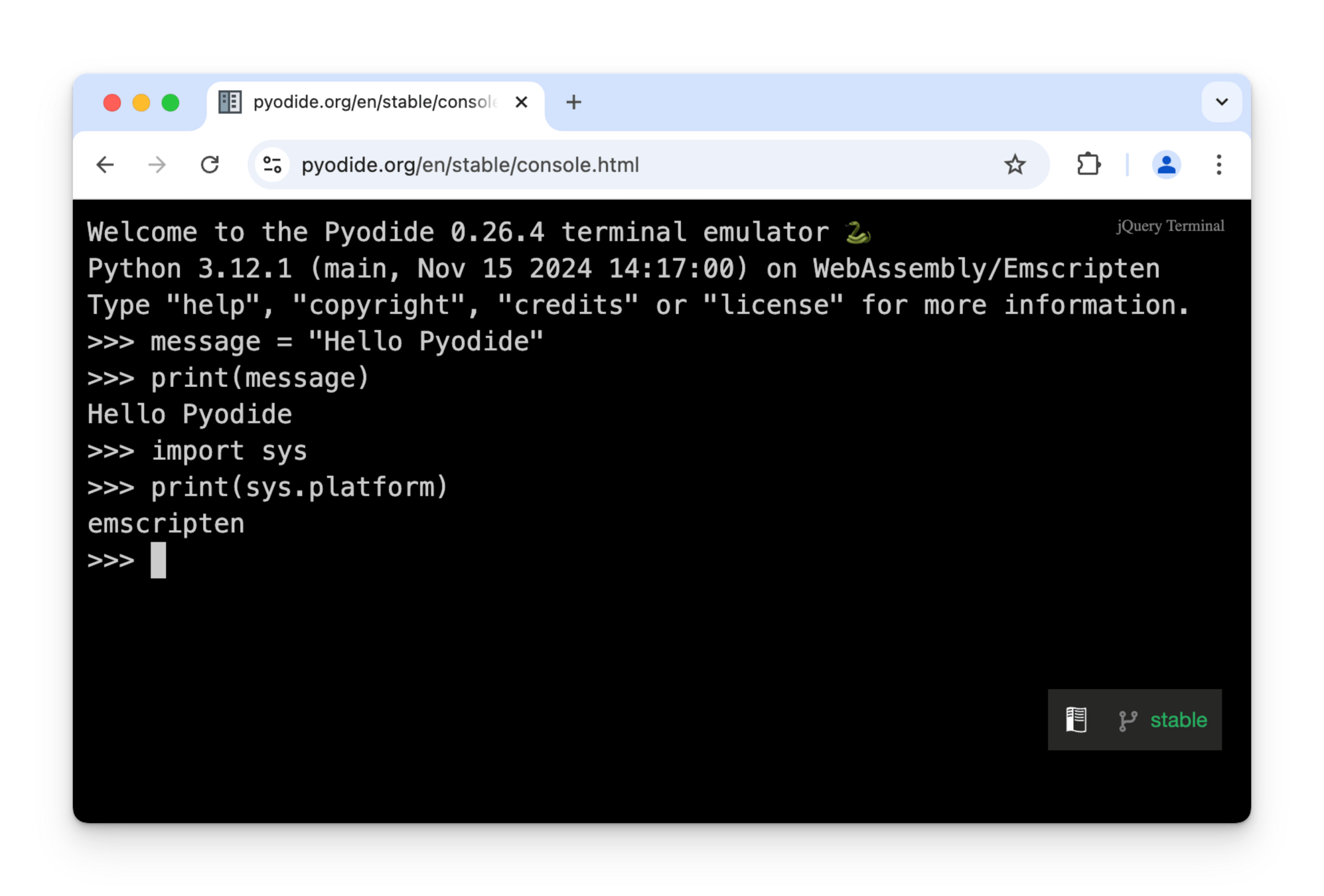

... Python on Frontend?

Pyodide is a Python distribution for the browser and Node.js based on WebAssembly.
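Because Pyodide is built with Emscripten, Python code can tell whether it is running in the browser by inspecting `sys.platform`. The sketch below is illustrative only; the helper name `running_in_pyodide` is made up for this example and is not part of Pyodide or Gradio.

```python
import sys

def running_in_pyodide() -> bool:
    # Under Pyodide, sys.platform is "emscripten"; on a desktop or server
    # it is a value such as "linux", "darwin", or "win32".
    return sys.platform == "emscripten"

runtime = "Pyodide (browser)" if running_in_pyodide() else "regular Python"
print(f"Running on: {runtime}")
```

A check like this can be handy when the same script should run both on a server and in the browser.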

Python interpreter in the browser

[Diagram: Web Browser, JS engine, Wasm Runtime]

Pyodide = Wasm-compiled Python interpreter

>>> import sys

>>> print(sys.platform)

emscripten


🤝

import gradio as gr
from transformers_js_py import pipeline

pipe = await pipeline("translation", 'Xenova/m2m100_418M')

async def predict(text):
    res = await pipe(text, {
        "src_lang": 'en',
        "tgt_lang": 'zh',
    })
    return res[0]["translation_text"]

demo = gr.Interface(
    fn=predict,
    inputs='text',
    outputs='text',
)

demo.launch()

[Diagram: Web Browser, with a worker process and a renderer process; labels: runtime, pseudo-web server, AI inference, JS-Py bridge, Gradio-Lite, Transformers.js]


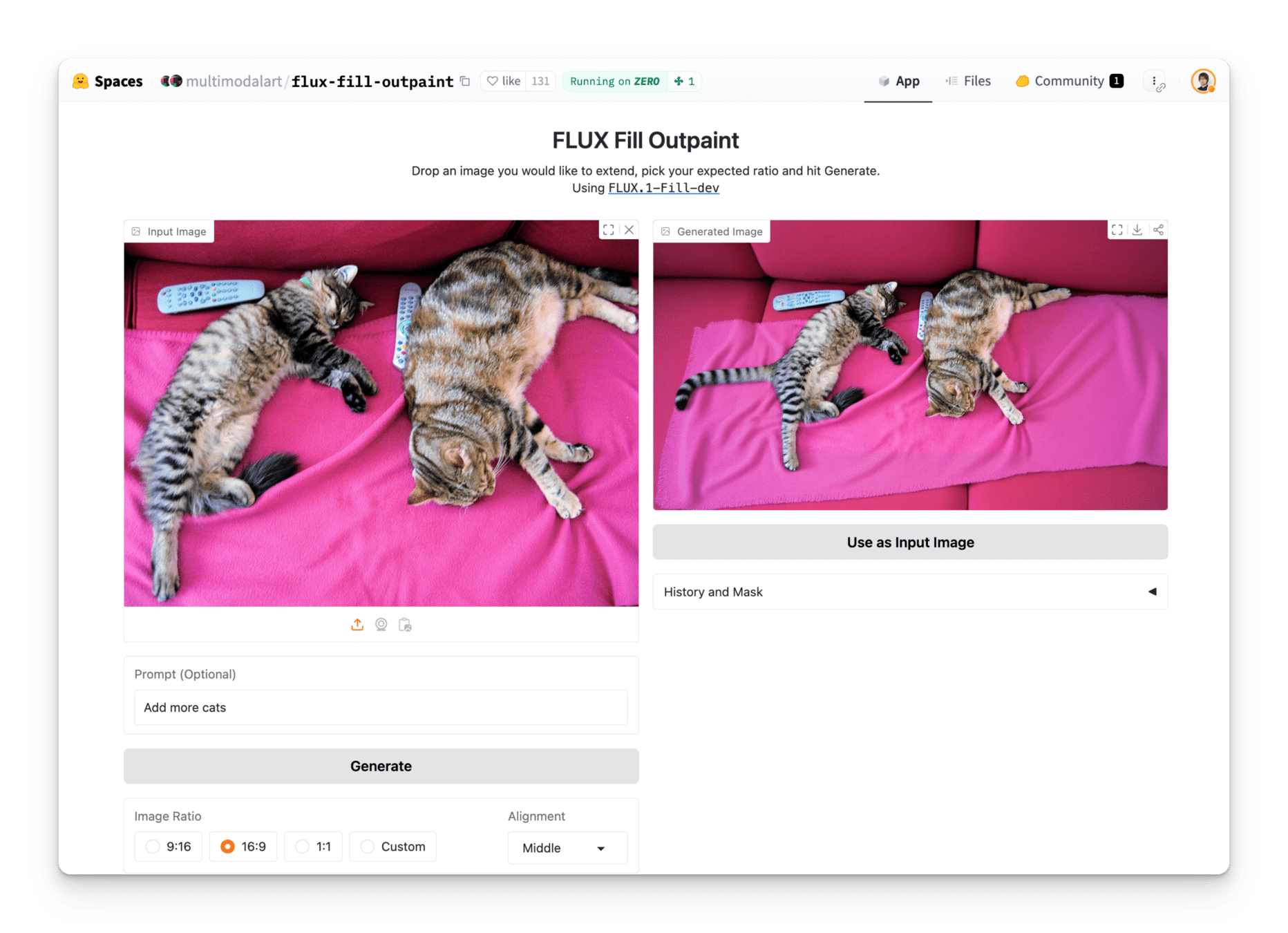

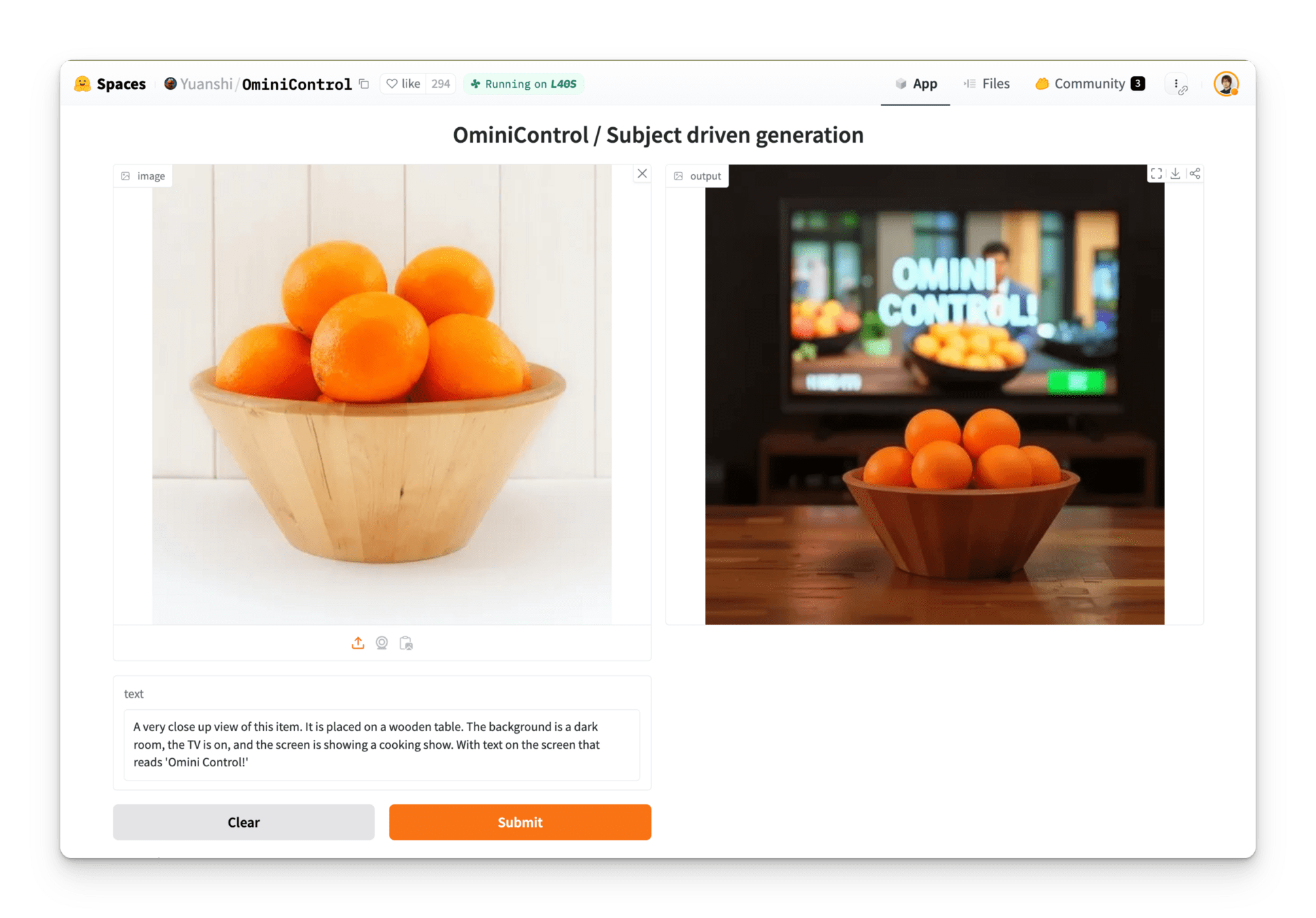

Gradio-Lite

Pyodide/Wasm-ported Gradio.

You write Python code, then get a web UI, 100% in the browser.

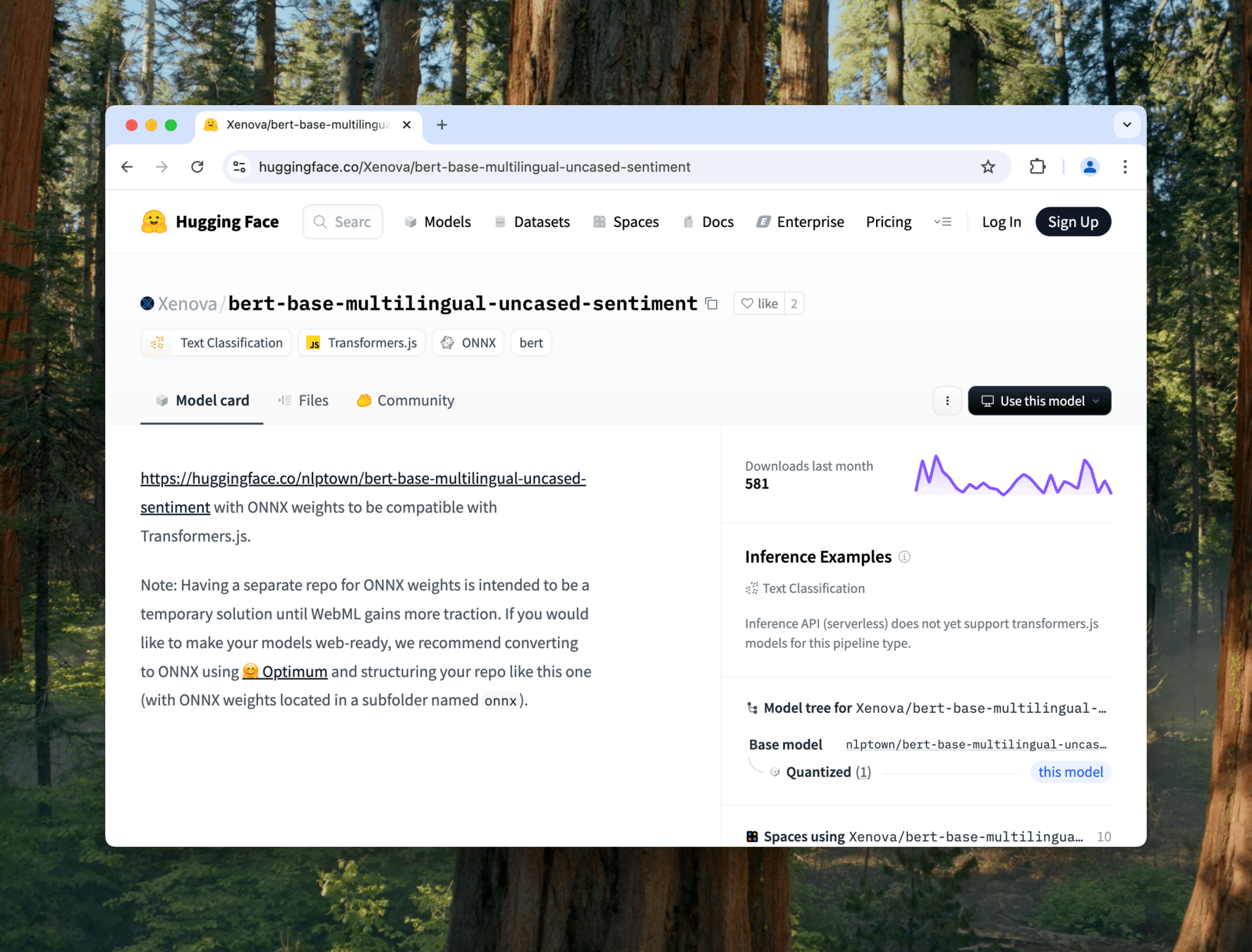

Transformers.js

JS version of Transformers.

You can use pretrained AI/ML models in the browser.

Check out the presentation 👉 https://www.bilibili.com/video/BV19c411B7QU/

Transformers.js.py

Python wrapper of Transformers.js 🤯


Transformers.js.py

Use Transformers.js from Pyodide.

🤯


How to use them

$ code index.html

<html>

<head>

<script type="module" crossorigin src="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.js"></script>

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.css" />

</head>

<body>

</body>

</html>

<html>

<head>

<script type="module" crossorigin src="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.js"></script>

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.css" />

</head>

<body>

<gradio-lite>

</gradio-lite>

</body>

</html>

<html>

<head>

<script type="module" crossorigin src="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.js"></script>

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.css" />

</head>

<body>

<gradio-lite>

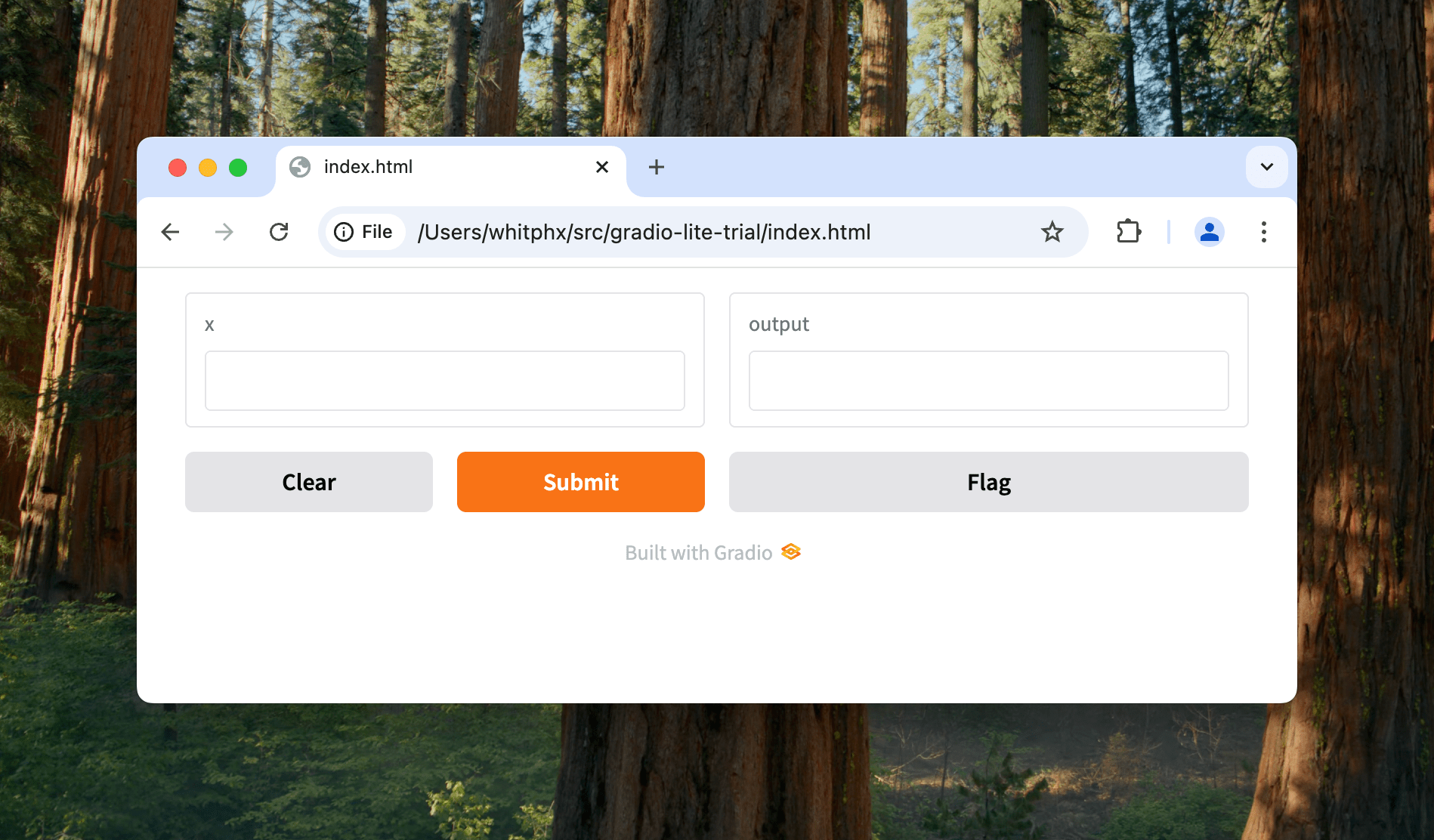

import gradio as gr

def greet(name):
    return "Hello, " + name + "!"

gr.Interface(greet, "textbox", "textbox").launch()

</gradio-lite>

</body>

</html>

<html>

<head>

<script type="module" crossorigin src="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.js"></script>

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.css" />

</head>

<body>

<gradio-lite>

import gradio as gr

from transformers_js_py import pipeline

pipe = await pipeline('sentiment-analysis')

demo = gr.Interface.from_pipeline(pipe)

demo.launch()

<gradio-requirements>

transformers-js-py

</gradio-requirements>

</gradio-lite>

</body>

</html>

<html>

<head>

<script type="module" crossorigin src="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.js"></script>

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.css" />

</head>

<body>

<gradio-lite>

import gradio as gr

from transformers_js_py import pipeline

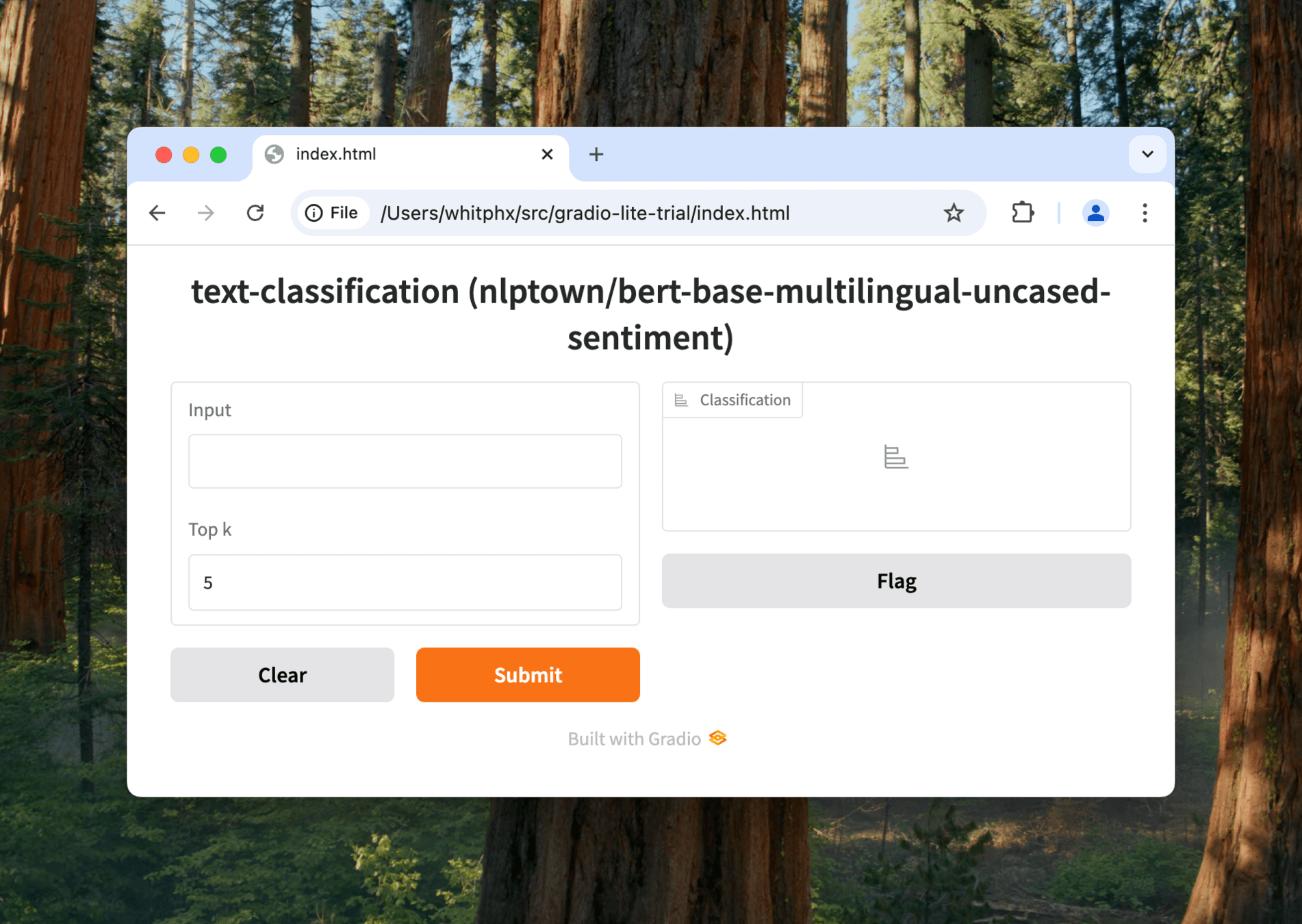

pipe = await pipeline(

'sentiment-analysis',

'Xenova/bert-base-multilingual-uncased-sentiment'

)

demo = gr.Interface.from_pipeline(pipe)

demo.launch()

<gradio-requirements>

transformers-js-py

</gradio-requirements>

</gradio-lite>

</body>

</html>


<html>

<head>

<script type="module" crossorigin src="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.js"></script>

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.css" />

</head>

<body>

<gradio-lite>

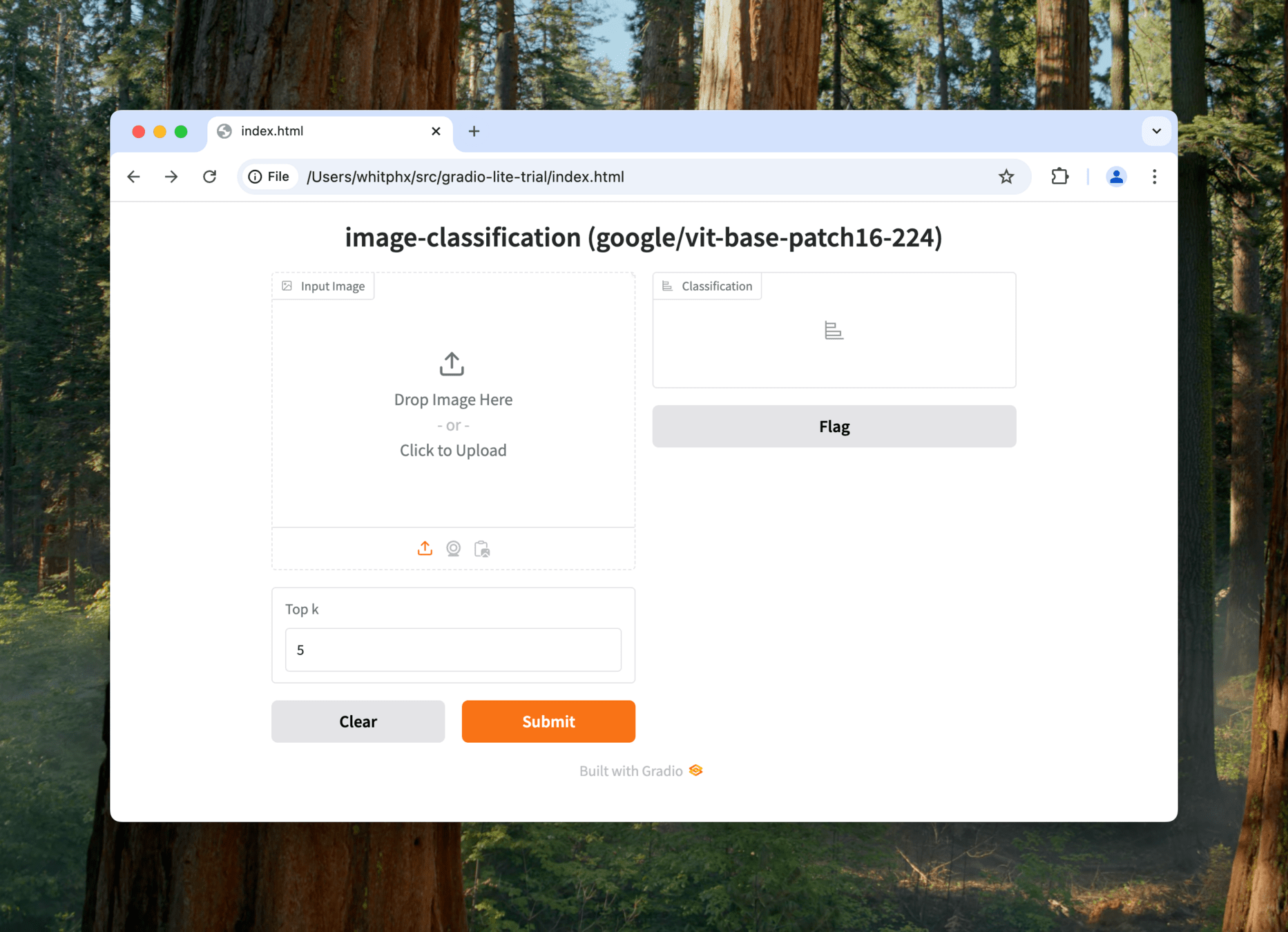

import gradio as gr

from transformers_js_py import pipeline

pipe = await pipeline('image-classification')

demo = gr.Interface.from_pipeline(pipe)

demo.launch()

<gradio-requirements>

transformers-js-py

</gradio-requirements>

</gradio-lite>

</body>

</html>

<html>

<head>

<script type="module" crossorigin src="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.js"></script>

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.css" />

</head>

<body>

<gradio-lite>

import gradio as gr

from transformers_js_py import pipeline

pipe = await pipeline('sentiment-analysis')

demo = gr.Interface.from_pipeline(pipe)

demo.launch()

<gradio-requirements>

transformers-js-py

</gradio-requirements>

</gradio-lite>

</body>

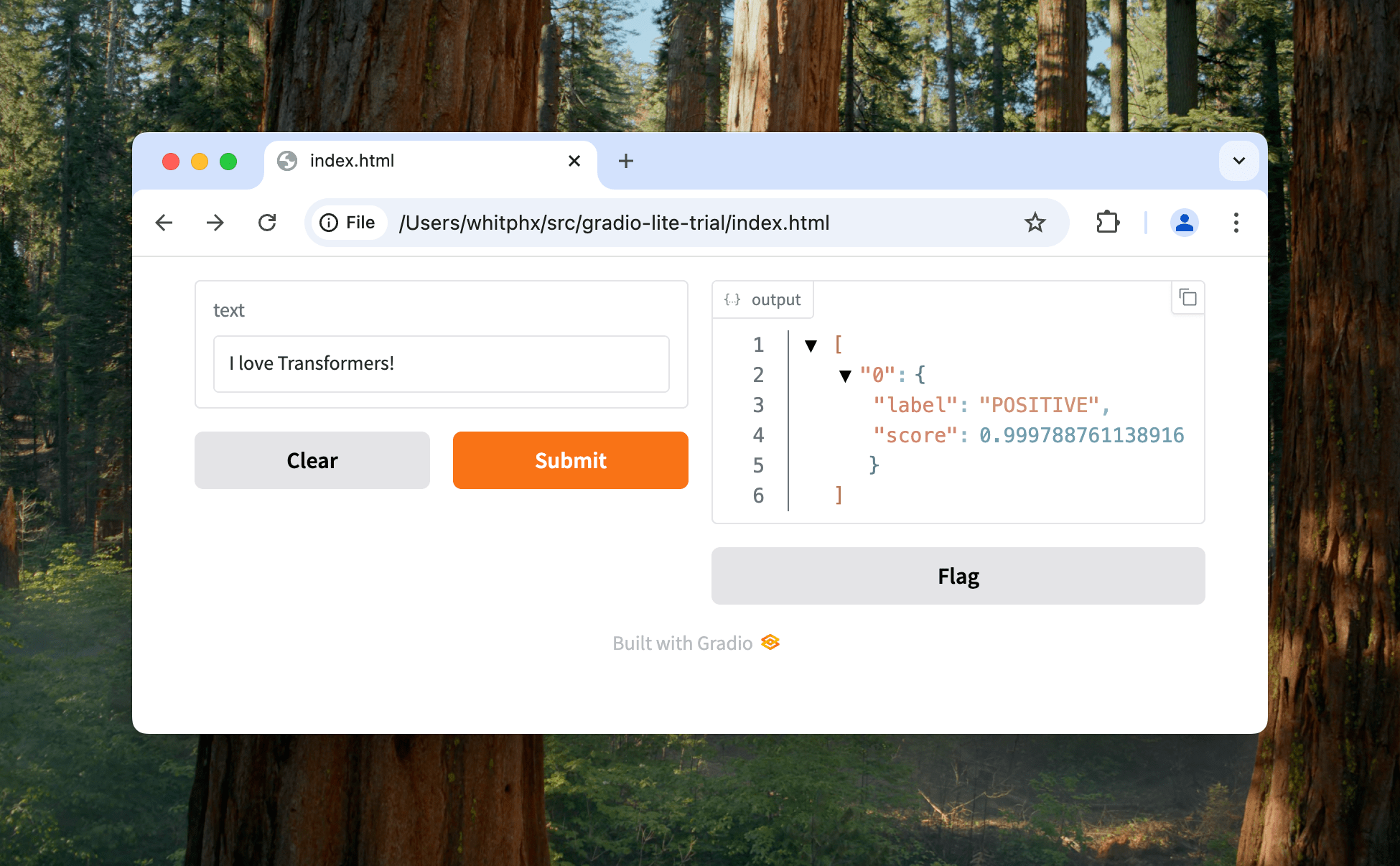

</html><html>

<head>

<script type="module" crossorigin src="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.js"></script>

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.css" />

</head>

<body>

<gradio-lite>

import gradio as gr

from transformers_js_py import pipeline

pipe = await pipeline('sentiment-analysis')

async def fn(text):
    result = await pipe(text)
    return result

demo = gr.Interface(

fn=fn,

inputs=gr.Textbox(),

outputs=gr.JSON(),

)

demo.launch()

<gradio-requirements>

transformers-js-py

</gradio-requirements>

</gradio-lite>

</body>

</html>


More examples...

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1">

<title>Gradio-Lite: Serverless Gradio Running Entirely in Your Browser</title>

<meta name="description" content="Gradio-Lite: Serverless Gradio Running Entirely in Your Browser">

<script type="module" crossorigin src="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.js"></script>

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.css" />

<style>

html, body {

margin: 0;

padding: 0;

height: 100%;

}

</style>

</head>

<body>

<gradio-lite>

<gradio-file name="app.py" entrypoint>

from transformers_js import import_transformers_js, as_url
import gradio as gr

transformers = await import_transformers_js()
pipeline = transformers.pipeline
pipe = await pipeline('object-detection', "Xenova/yolos-tiny")

async def detect(input_image):
    result = await pipe(as_url(input_image))
    gradio_labels = [
        # List[Tuple[numpy.ndarray | Tuple[int, int, int, int], str]]
        (
            (
                int(item["box"]["xmin"]),
                int(item["box"]["ymin"]),
                int(item["box"]["xmax"]),
                int(item["box"]["ymax"]),
            ),
            item["label"],
        )
        for item in result
    ]
    annotated_image_data = input_image, gradio_labels
    return annotated_image_data, result

demo = gr.Interface(
    detect,
    gr.Image(type="filepath"),
    [
        gr.AnnotatedImage(),
        gr.JSON(),
    ],
    examples=[
        ["cats.jpg"]
    ]
)

demo.launch()

</gradio-file>

<gradio-file name="cats.jpg" url="https://huggingface.co/datasets/Xenova/transformers.js-docs/resolve/main/cats.jpg" />

<gradio-requirements>

transformers_js_py

</gradio-requirements>

</gradio-lite>

</body>

</html>

Object Detection
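The conversion step in `detect` can be tried in isolation. The sketch below reshapes detection results into the `((xmin, ymin, xmax, ymax), label)` tuples that the demo passes to `gr.AnnotatedImage`. The helper name `to_gradio_annotations` and the sample data are hypothetical and only mimic the shape of the pipeline output used in the demo.

```python
def to_gradio_annotations(detections):
    """Convert detection dicts into ((xmin, ymin, xmax, ymax), label) tuples."""
    return [
        (
            (
                int(item["box"]["xmin"]),
                int(item["box"]["ymin"]),
                int(item["box"]["xmax"]),
                int(item["box"]["ymax"]),
            ),
            item["label"],
        )
        for item in detections
    ]

# Hypothetical detection result, made up for illustration only.
sample = [
    {
        "score": 0.99,
        "label": "cat",
        "box": {"xmin": 10.6, "ymin": 20.2, "xmax": 110.9, "ymax": 220.0},
    },
]
annotations = to_gradio_annotations(sample)
print(annotations)
```

Note that `int()` truncates toward zero, so fractional pixel coordinates are rounded down for positive values.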

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1">

<title>Gradio-Lite: Serverless Gradio Running Entirely in Your Browser</title>

<meta name="description" content="Gradio-Lite: Serverless Gradio Running Entirely in Your Browser">

<script type="module" crossorigin src="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.js"></script>

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.css" />

<style>

html, body {

margin: 0;

padding: 0;

height: 100%;

}

</style>

</head>

<body>

<gradio-lite>

<gradio-file name="app.py" entrypoint>

from transformers_js import import_transformers_js, as_url
import gradio as gr

# Reference: https://huggingface.co/spaces/Xenova/yolov9-web/blob/main/index.js

IMAGE_SIZE = 256

transformers = await import_transformers_js()
AutoProcessor = transformers.AutoProcessor
AutoModel = transformers.AutoModel
RawImage = transformers.RawImage

processor = await AutoProcessor.from_pretrained('Xenova/yolov9-c')

# For this demo, we resize the image to IMAGE_SIZE x IMAGE_SIZE
processor.feature_extractor.size = { "width": IMAGE_SIZE, "height": IMAGE_SIZE }

model = await AutoModel.from_pretrained('Xenova/yolov9-c')

async def detect(image_path):
    image = await RawImage.read(image_path)
    processed_input = await processor(image)
    result = await model(images=processed_input["pixel_values"])

    outputs = result["outputs"]  # Tensor
    np_outputs = outputs.to_numpy()  # [xmin, ymin, xmax, ymax, score, id][]
    gradio_labels = [
        # List[Tuple[numpy.ndarray | Tuple[int, int, int, int], str]]
        (
            (
                int(xmin * image.width / IMAGE_SIZE),
                int(ymin * image.height / IMAGE_SIZE),
                int(xmax * image.width / IMAGE_SIZE),
                int(ymax * image.height / IMAGE_SIZE),
            ),
            model.config.id2label[str(int(id))],
        )
        for xmin, ymin, xmax, ymax, score, id in np_outputs
    ]
    annotated_image_data = image_path, gradio_labels
    return annotated_image_data, np_outputs

demo = gr.Interface(
    detect,
    gr.Image(type="filepath"),
    [
        gr.AnnotatedImage(),
        gr.JSON(),
    ],
    examples=[
        ["cats.jpg"],
        ["city-streets.jpg"],
    ]
)

demo.launch()

</gradio-file>

<gradio-file name="cats.jpg" url="https://huggingface.co/datasets/Xenova/transformers.js-docs/resolve/main/cats.jpg" />

<gradio-file name="city-streets.jpg" url="https://huggingface.co/datasets/Xenova/transformers.js-docs/resolve/main/city-streets.jpg" />

<gradio-requirements>

transformers_js_py

</gradio-requirements>

</gradio-lite>

</body>

</html>

Image: Object Detection
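The demo runs the model on an image resized to `IMAGE_SIZE` x `IMAGE_SIZE`, so the predicted boxes have to be scaled back to the original image dimensions. A minimal sketch of that rescaling, with a hypothetical helper `scale_box` and made-up numbers, might look like this:

```python
IMAGE_SIZE = 256  # side length of the resized model input, as in the demo

def scale_box(box, image_width, image_height, model_size=IMAGE_SIZE):
    """Map a box from model-input coordinates back to original image pixels."""
    xmin, ymin, xmax, ymax = box
    return (
        int(xmin * image_width / model_size),
        int(ymin * image_height / model_size),
        int(xmax * image_width / model_size),
        int(ymax * image_height / model_size),
    )

# A box predicted on the 256x256 input, mapped onto a 512x1024 image.
scaled = scale_box((64, 32, 128, 256), image_width=512, image_height=1024)
print(scaled)
```

Width and height are scaled independently because the resize to a square does not preserve the aspect ratio.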

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1">

<title>Gradio-Lite: Serverless Gradio Running Entirely in Your Browser</title>

<meta name="description" content="Gradio-Lite: Serverless Gradio Running Entirely in Your Browser">

<script type="module" crossorigin src="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.js"></script>

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.css" />

<style>

html, body {

margin: 0;

padding: 0;

height: 100%;

}

</style>

</head>

<body>

<gradio-lite>

<gradio-file name="app.py" entrypoint>

import gradio as gr
import numpy as np
import PIL.Image
import trimesh
from transformers_js import import_transformers_js, as_url

transformers = await import_transformers_js()
pipeline = transformers.pipeline
depth_estimator = await pipeline('depth-estimation', 'Xenova/depth-anything-small-hf')

def depthmap_to_glb_trimesh(depth_map, rgb_image, file_path):
    assert depth_map.shape[:2] == rgb_image.shape[:2], "Depth map and RGB image must have the same dimensions"

    # Generate vertices and faces
    vertices = []
    colors = []
    faces = []

    height, width = depth_map.shape
    for y in range(height):
        for x in range(width):
            z = depth_map[y, x]
            vertices.append([x, y, z])
            colors.append(rgb_image[y, x])

    # Create faces (2 triangles per pixel, except for edges)
    for y in range(height - 1):
        for x in range(width - 1):
            top_left = y * width + x
            top_right = top_left + 1
            bottom_left = top_left + width
            bottom_right = bottom_left + 1

            faces.append([top_left, bottom_left, top_right])
            faces.append([top_right, bottom_left, bottom_right])

    # Convert to numpy arrays
    vertices = np.array(vertices, dtype=np.float64)
    colors = np.array(colors, dtype=np.uint8)
    faces = np.array(faces, dtype=np.int32)

    mesh = trimesh.Trimesh(vertices=vertices, faces=faces, vertex_colors=colors, process=False)

    # Export to GLB
    mesh.export(file_path, file_type='glb')

def invert_depth(depth_map):
    max_depth = np.max(depth_map)
    return max_depth - depth_map

def invert_xy(map):
    return map[::-1, ::-1]

async def estimate(image_path, depth_scale):
    image = PIL.Image.open(image_path)
    image.thumbnail((384, 384))  # Resize the image keeping the aspect ratio

    predictions = await depth_estimator(as_url(image_path))

    depth_image = predictions["depth"].to_pil()

    tensor = predictions["predicted_depth"]
    tensor_data = {
        "dims": tensor.dims,
        "type": tensor.type,
        "size": tensor.size,
    }

    # Construct the 3D model from the depth map and the RGB image
    depth = predictions["predicted_depth"].to_numpy()
    depth = invert_depth(depth)
    depth = invert_xy(depth)

    depth = depth * depth_scale

    # The model outputs the depth map in a different size than the input image.
    # So we resize the depth map to match the original image size.
    depth = np.array(PIL.Image.fromarray(depth).resize(image.size))

    image_array = np.asarray(image)
    image_array = invert_xy(image_array)

    glb_file_path = "output.glb"
    depthmap_to_glb_trimesh(depth, image_array, glb_file_path)

    return depth_image, glb_file_path, tensor_data

demo = gr.Interface(
    fn=estimate,
    inputs=[
        gr.Image(type="filepath"),
        gr.Slider(minimum=1, maximum=100, value=10, label="Depth Scale")
    ],
    outputs=[
        gr.Image(label="Depth Image"),
        gr.Model3D(label="3D Model"),
        gr.JSON(label="Tensor"),
    ],
    examples=[
        ["bread_small.png"],
        ["cats.jpg"],
    ]
)

demo.launch()

</gradio-file>

<gradio-file name="bread_small.png" url="https://huggingface.co/datasets/Xenova/transformers.js-docs/resolve/main/bread_small.png" />

<gradio-file name="cats.jpg" url="https://huggingface.co/datasets/Xenova/transformers.js-docs/resolve/main/cats.jpg" />

<gradio-requirements>

transformers_js_py

trimesh

</gradio-requirements>

</gradio-lite>

</body>

</html>

Image & 3D: Depth Estimation
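The mesh construction in `depthmap_to_glb_trimesh` relies on a simple indexing scheme: vertices are stored row by row, and every grid cell is split into two triangles. The dependency-free sketch below reproduces only that indexing, without NumPy or trimesh; the helper name `grid_faces` is made up for this illustration.

```python
def grid_faces(height, width):
    """Return triangle index triples for a height x width vertex grid (row-major)."""
    faces = []
    for y in range(height - 1):
        for x in range(width - 1):
            top_left = y * width + x
            top_right = top_left + 1
            bottom_left = top_left + width
            bottom_right = bottom_left + 1
            # Two triangles cover the cell between four neighbouring vertices.
            faces.append((top_left, bottom_left, top_right))
            faces.append((top_right, bottom_left, bottom_right))
    return faces

faces = grid_faces(2, 3)
print(len(faces), faces)
```

A grid of `height` x `width` vertices yields `2 * (height - 1) * (width - 1)` triangles, which is why the mesh grows quickly with image size and why the demo shrinks the input image first.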

<!DOCTYPE html>

<html>

<head>

<script type="module" crossorigin src="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.js"></script>

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.css" />

</head>

<body>

<gradio-lite>

<gradio-requirements>

transformers_js_py

</gradio-requirements>

<gradio-file name="app.py" entrypoint>

from transformers_js import import_transformers_js, as_url
import gradio as gr

transformers = await import_transformers_js()
pipeline = transformers.pipeline
pipe = await pipeline('zero-shot-image-classification')

async def classify(image, classes):
    if not image:
        return {}
    classes = [x for c in classes.split(",") if (x := c.strip())]
    if not classes:
        return {}

    data = await pipe(as_url(image), classes)
    result = {item['label']: round(item['score'], 2) for item in data}
    return result

demo = gr.Interface(
    classify,
    [
        gr.Image(label="Input image", sources=["webcam"], type="filepath", streaming=True),
        gr.Textbox(label="Classes separated by commas")
    ],
    gr.Label(),
    live=True
)

demo.launch()

</gradio-file>

</gradio-lite>

</body>

</html>

Zero-shot Image Classification
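The `classify` function turns the free-text "classes separated by commas" field into a clean list before calling the model. That parsing step can be checked on its own. The helper name `parse_classes` is hypothetical, and the walrus operator requires Python 3.8 or later.

```python
def parse_classes(raw):
    # Split on commas, strip surrounding whitespace, and drop empty entries.
    return [name for part in raw.split(",") if (name := part.strip())]

labels = parse_classes(" cat, dog ,, bird ,")
print(labels)
```

Dropping empty entries matters for a live interface, where the text box is often empty or ends with a trailing comma while the user is still typing.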

- Privacy

- Low latency

- Offline capability

- Scalability without servers

- Low cost

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1">

<title>Gradio-Lite: Serverless Gradio Running Entirely in Your Browser</title>

<meta name="description" content="Gradio-Lite: Serverless Gradio Running Entirely in Your Browser">

<script type="module" crossorigin src="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.js"></script>

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.css" />

<style>

html, body {

margin: 0;

padding: 0;

height: 100%;

}

</style>

</head>

<body>

<gradio-lite>

<gradio-file name="app.py" entrypoint>

from transformers_js_py import import_transformers_js, read_audio
import gradio as gr

transformers = await import_transformers_js()
pipeline = transformers.pipeline
pipe = await pipeline('automatic-speech-recognition', 'Xenova/whisper-tiny.en')

async def asr(audio_path):
    audio = read_audio(audio_path, 16000)
    result = await pipe(audio)
    return result["text"]

demo = gr.Interface(
    asr,
    gr.Audio(type="filepath"),
    gr.Text(),
    examples=[
        ["jfk.wav"],
    ]
)

demo.launch()

</gradio-file>

<gradio-file name="jfk.wav" url="https://huggingface.co/datasets/Xenova/transformers.js-docs/resolve/main/jfk.wav" />

<gradio-requirements>

transformers_js_py

numpy

scipy

</gradio-requirements>

</gradio-lite>

</body>

</html>

Speech-to-Text

<html>

<head>

<script type="module" crossorigin src="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.js"></script>

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.css" />

</head>

<body>

<gradio-lite>

<gradio-requirements>

transformers_js_py

</gradio-requirements>

<gradio-file name="app.py" entrypoint>

from transformers_js_py import import_transformers_js
import gradio as gr
import numpy as np

transformers_js = await import_transformers_js("3.0.2")
pipeline = transformers_js.pipeline

synthesizer = await pipeline(
    'text-to-speech',
    'Xenova/speecht5_tts',
    { "quantized": False }
)

speaker_embeddings = 'https://huggingface.co/datasets/Xenova/transformers.js-docs/resolve/main/speaker_embeddings.bin'

async def synthesize(text):
    out = await synthesizer(text, { "speaker_embeddings": speaker_embeddings })
    audio_data_memory_view = out["audio"]
    sampling_rate = out["sampling_rate"]

    audio_data = np.frombuffer(audio_data_memory_view, dtype=np.float32)
    audio_data_16bit = (audio_data * 32767).astype(np.int16)

    return sampling_rate, audio_data_16bit

demo = gr.Interface(synthesize, "textbox", "audio")
demo.launch()

</gradio-file>

</gradio-lite>

</body>

</html>

Text-to-Speech
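The last step of `synthesize` converts floating-point audio samples to 16-bit integers by scaling with 32767. The sketch below shows the same idea using only the standard library instead of NumPy. The helper name `float_to_int16` is made up, and the clamping to [-1.0, 1.0] is an addition for safety that the demo code itself does not perform.

```python
from array import array

def float_to_int16(samples):
    # Clamp to [-1.0, 1.0] (an extra safeguard, not in the demo), then scale
    # to the int16 range; int() truncates toward zero.
    return array("h", (int(max(-1.0, min(1.0, s)) * 32767) for s in samples))

pcm = float_to_int16([0.0, 0.5, -1.0, 1.5])
print(list(pcm))
```

Without the clamp, a sample slightly outside the expected range would not fit into 16 bits, so a guard like this may be worth considering when the model output is not guaranteed to be normalized.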

Supported tasks/models

- Text: Question Answering, Summarization, Text Classification, Text Generation, Text-to-text Generation, Token Classification, Translation, Zero-Shot Classification, Feature Extraction, etc.

- Image/Video: Depth Estimation, Image Classification, Image Segmentation, Image-to-Image, Mask Generation, Object Detection, Video Classification, Unconditional Image Generation, Image Feature Extraction

- Audio: Audio Classification, Audio-to-Audio, Automatic Speech Recognition, Text-to-Speech

- Multimodal: Document Question Answering, Image-to-Text, Text-to-Image, Visual Question Answering, Zero-Shot Audio Classification, Zero-Shot Image Classification, Zero-Shot Object Detection

Deployment/Delivery

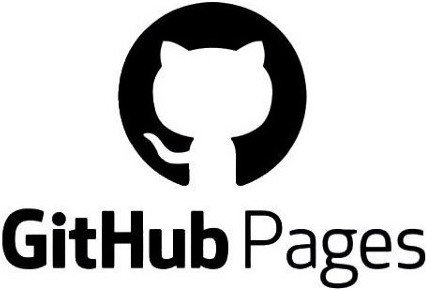

Hosting a static page

<html>

<head>

<script type="module" crossorigin src="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.js"></script>

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@gradio/lite/dist/lite.css" />

</head>

<body>

<gradio-lite>

import gradio as gr

from transformers_js_py import pipeline

pipe = await pipeline('sentiment-analysis')

async def fn(text):
    result = await pipe(text)
    return result

demo = gr.Interface(

fn=fn,

inputs=gr.Textbox(),

outputs=gr.JSON(),

)

demo.launch()

<gradio-requirements>

transformers-js-py

</gradio-requirements>

</gradio-lite>

</body>

</html>

Static HTML file

Or any static web server of your choice...

...or even distributing the HTML file to your colleagues 😅

Gradio Playground

Use cases

Does it replace JS?

No.

In-browser benefits

- Privacy

- Low latency

- Offline capability

- Scalability without servers

- Low cost

Python/Gradio benefits

- AI ecosystem

- Built-in components for AI apps

- Easy and quick development

Pros

- Privacy

- Low latency

- Offline capability

- Scalability without servers

- Low cost

- AI ecosystem

- Built-in components for AI apps

- Easy and quick development

Cons

- Performance: Initial payload size, loading time, memory usage, etc.

- Not flexible: pre-defined components and styles.

- Slight difference from the normal Python runtime, e.g. event-loop nature.
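The event-loop point can be illustrated with the top-level `await` used throughout the Gradio-Lite examples in this deck. In a regular Python script there is no running event loop at module level, so the same coroutine has to be driven explicitly. The sketch below assumes a standard CPython interpreter; `fake_pipe` is a hypothetical stand-in for a real model call.

```python
import asyncio

async def fake_pipe(text):
    # Hypothetical stand-in for an awaited model call; returns a fixed label.
    await asyncio.sleep(0)
    return [{"label": "POSITIVE", "text": text}]

async def predict(text):
    result = await fake_pipe(text)
    return result[0]["label"]

# The Gradio-Lite examples write `await ...` at the top level. In a regular
# script, the coroutine is run through asyncio.run() instead.
label = asyncio.run(predict("hello"))
print(label)
```

Code that is meant to run in both environments needs to account for this difference, for example by keeping the async functions shared and only varying how they are started.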

Fine-tuned UI/UX

Prototype/demo

Low-effort

High-effort

JS frontend +

Transformers.js

Gradio-Lite + Transformers.js.py


In-browser AI

Server-side AI

Gradio + Transformers

JS frontend +

Transformers

Server-side

Architecture

Client-side

Prototype

Researchers

Production

Production

Frontend-devs

Gradio

Transformers

()

Transformers

Gradio-Lite

Transformers.js.py

(React, ...)

Transformers.js

Phase

Gradio-Lite + Transformers.js are good for...

- Early-stage demos/prototypes

- Communication between researchers, developers, management, clients, etc.

- In-office/private tools

- and...

Please try them out!

Gradio-Lite: Serverless Gradio Running Entirely in Your Browser

Building Serverless Machine Learning Apps with Gradio-Lite and Transformers.js

https://www.gradio.app/guides/gradio-lite-and-transformers-js

Gradio-Lite Transformers.js

In-browser LLM app in pure Python:

Gemini Nano + Gradio-Lite

https://huggingface.co/blog/whitphx/in-browser-llm-gemini-nano-gradio-lite

Yuichiro Tachibana

@whitphx

- Pythonista

- OSS enthusiast

- ML Developer Advocate at Hugging Face

- Streamlit Creator

In-browser AI apps with Gradio and Transformers

By whitphx
