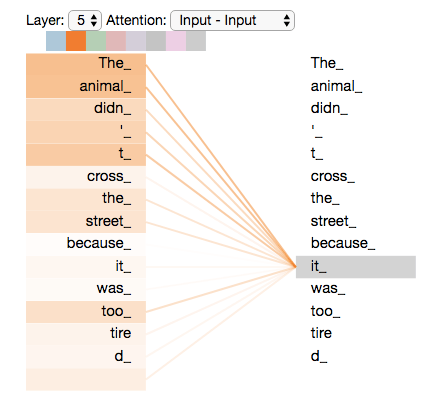

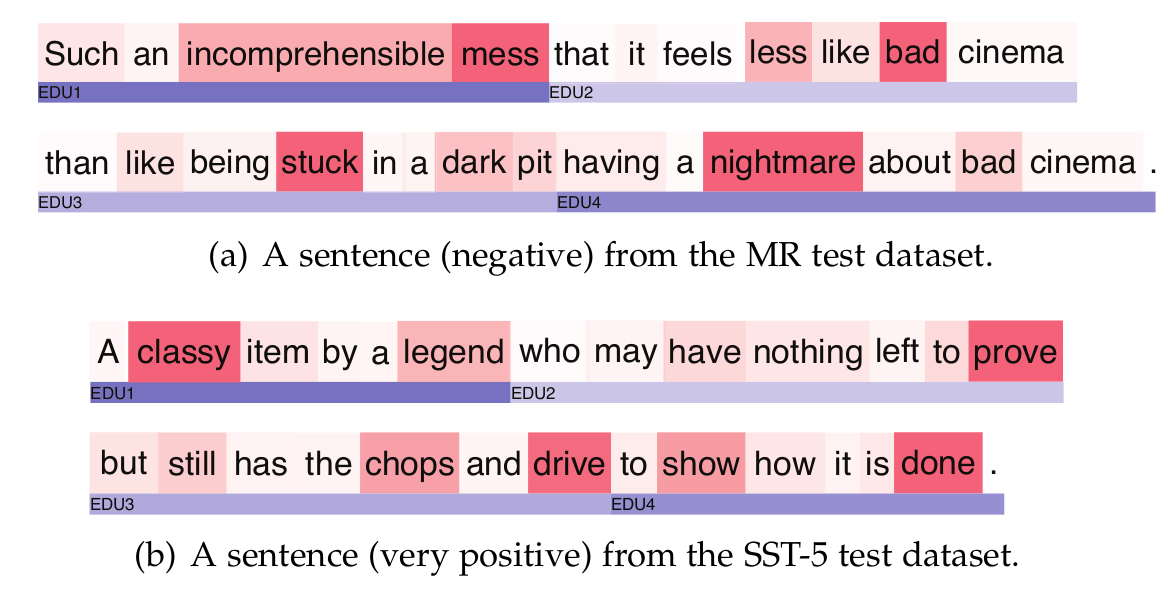

Attention

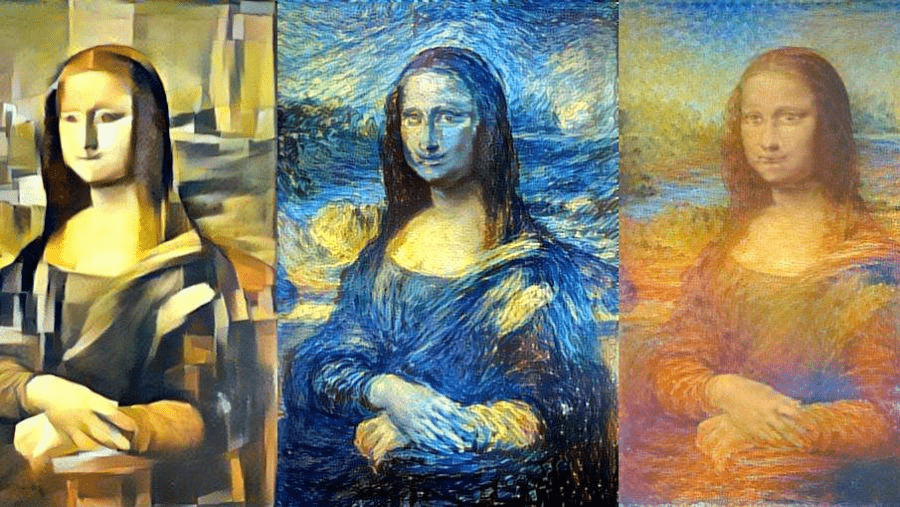

In Computer Vision

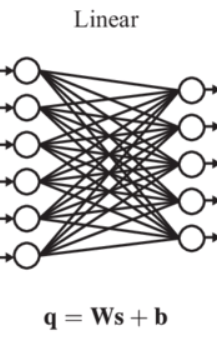

Older approaches

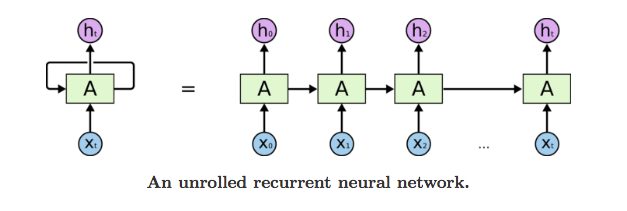

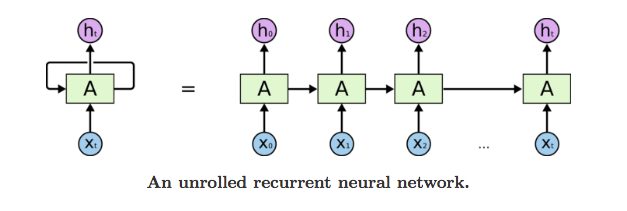

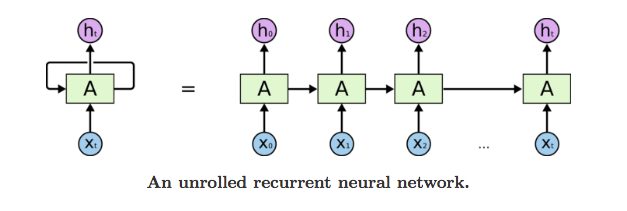

For language models: RNN

A single model runs over the words, one at a time
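A minimal sketch of that loop in plain Python. The toy weights are chosen for illustration and are not from the slides. One shared cell updates a hidden state word by word, so the last state has to carry information about the first word through every step in between:

```python
import math

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def rnn_forward(words, W_x, W_h, b):
    """Run one shared RNN cell over the word vectors, one word at a time.

    h_t = tanh(W_x x_t + W_h h_{t-1} + b); returns one hidden state per word.
    """
    h = [0.0] * len(b)  # initial hidden state
    states = []
    for x in words:  # the same weights are reused at every step
        pre = [a + c + d for a, c, d in zip(matvec(W_x, x), matvec(W_h, h), b)]
        h = [math.tanh(p) for p in pre]
        states.append(h)
    return states

# Toy sentence: 3 words embedded in 2 dimensions, hidden size 2.
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W_x = [[0.5, -0.5], [0.3, 0.8]]
W_h = [[0.1, 0.0], [0.0, 0.1]]
b = [0.0, 0.0]
states = rnn_forward(words, W_x, W_h, b)
print(len(states))  # 3
```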

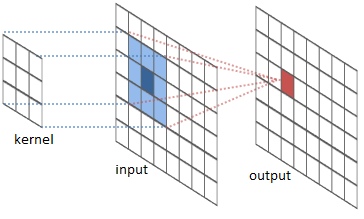

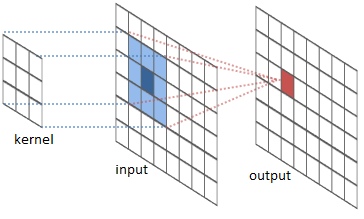

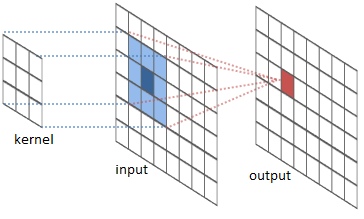

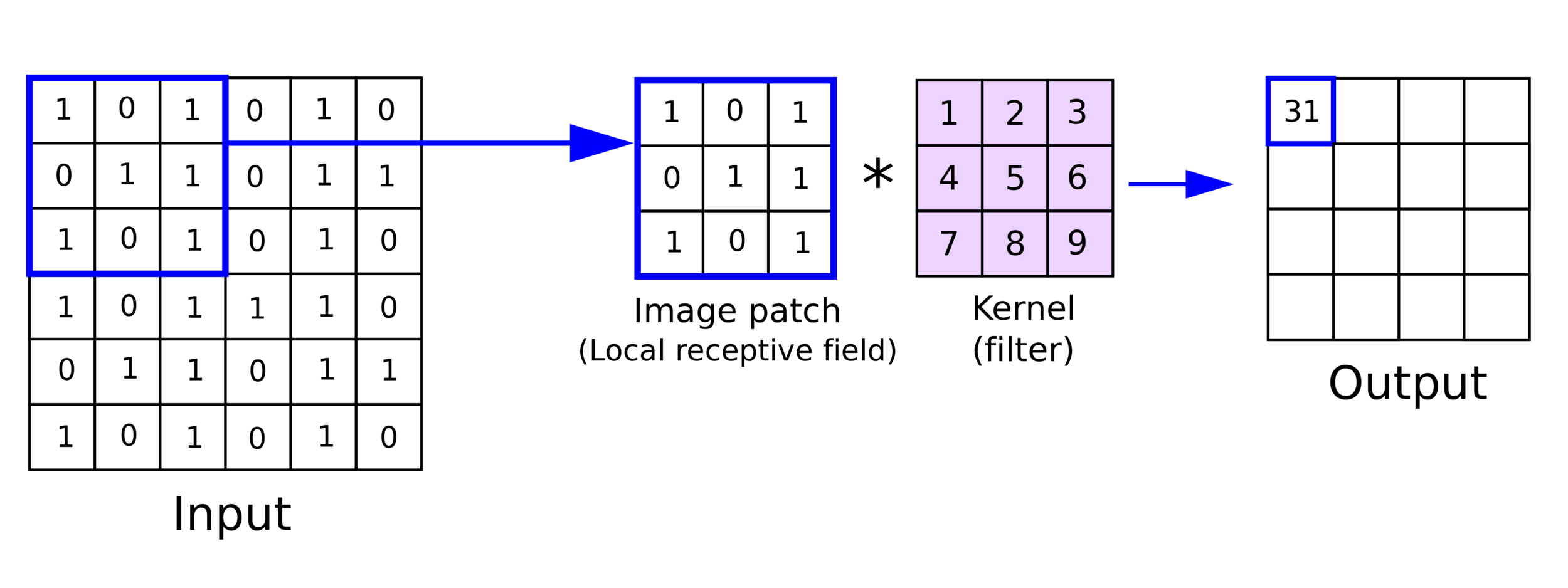

For computer vision: Convolution

Only a small block of pixels is processed at a time

Convolutional Layer

Recurrent Neural Network
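A sketch of the convolution's sliding window in plain Python. It assumes a toy "valid" convolution with stride 1 and no padding, chosen for illustration. As in deep-learning frameworks, the kernel is not flipped, so strictly speaking this is cross-correlation:

```python
def conv2d_valid(image, kernel):
    """Slide a k x k kernel over a 2D image (stride 1, no padding).

    Each output value only looks at one k x k block of pixels.
    """
    k = len(kernel)
    H, W = len(image), len(image[0])
    out = []
    for i in range(H - k + 1):
        row = []
        for j in range(W - k + 1):
            row.append(sum(kernel[a][b] * image[i + a][j + b]
                           for a in range(k) for b in range(k)))
        out.append(row)
    return out

image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
box = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]  # 3x3 sum filter
print(conv2d_valid(image, box))  # [[54, 63], [90, 99]]
```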

Ideally, we'd process everything at once

All words (or all image pixels) fed into a single layer

Motivation

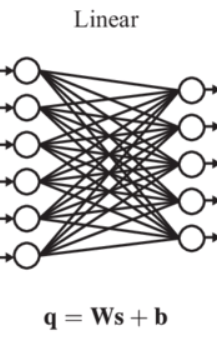

Linear layers need an input of fixed size; convolutional layers only see a fixed-size window at a time
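A toy illustration of the fixed-size problem, using hypothetical sizes and plain Python. If all tokens are flattened into one linear layer, the layer works only for the exact number of tokens its weight matrix was built for:

```python
def linear(x, W, b):
    """Linear layer y = W x + b; the shape of W fixes the input size."""
    if len(x) != len(W[0]):
        raise ValueError(f"expected {len(W[0])} inputs, got {len(x)}")
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def flatten(tokens):
    """Concatenate all token vectors so the layer sees everything at once."""
    return [v for tok in tokens for v in tok]

# Hypothetical layer sized for exactly 3 tokens of dimension 2 (6 inputs).
W = [[0.1, 0.2, 0.3, 0.4, 0.5, 0.6]]
b = [0.0]

three_words = [[1, 0], [0, 1], [1, 1]]
four_words = three_words + [[0, 0]]

print(linear(flatten(three_words), W, b))  # fits
try:
    linear(flatten(four_words), W, b)  # one extra word no longer fits
except ValueError as err:
    print(err)  # expected 6 inputs, got 8
```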

But some tasks have inputs of arbitrary size

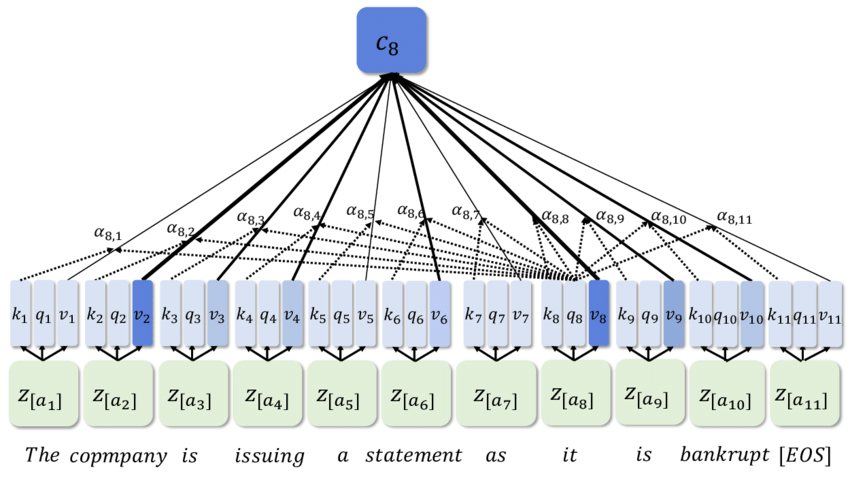

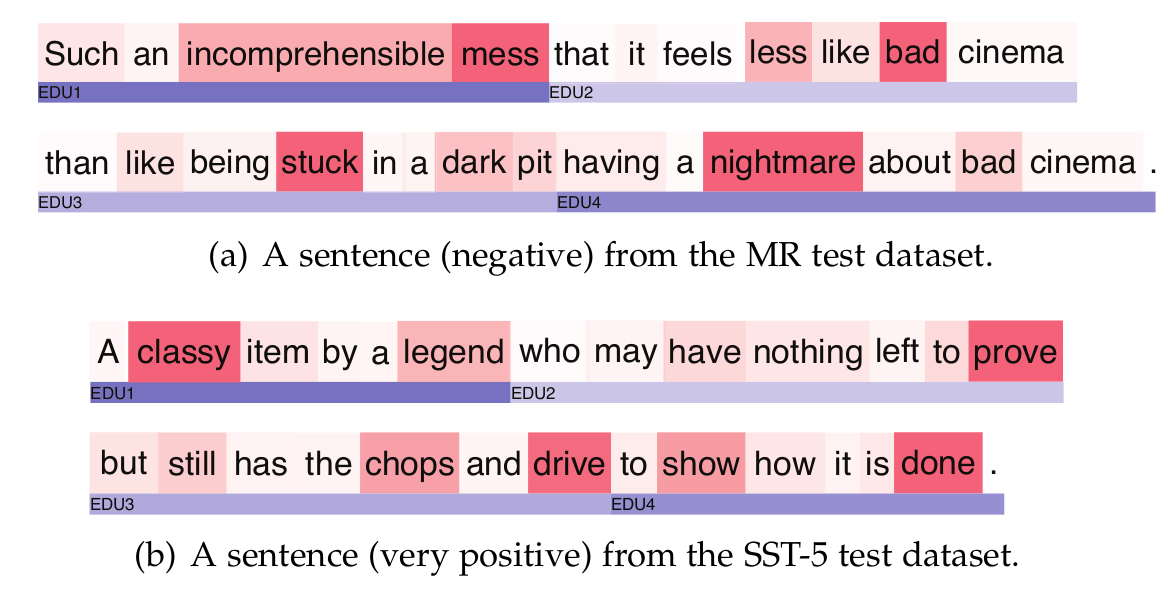

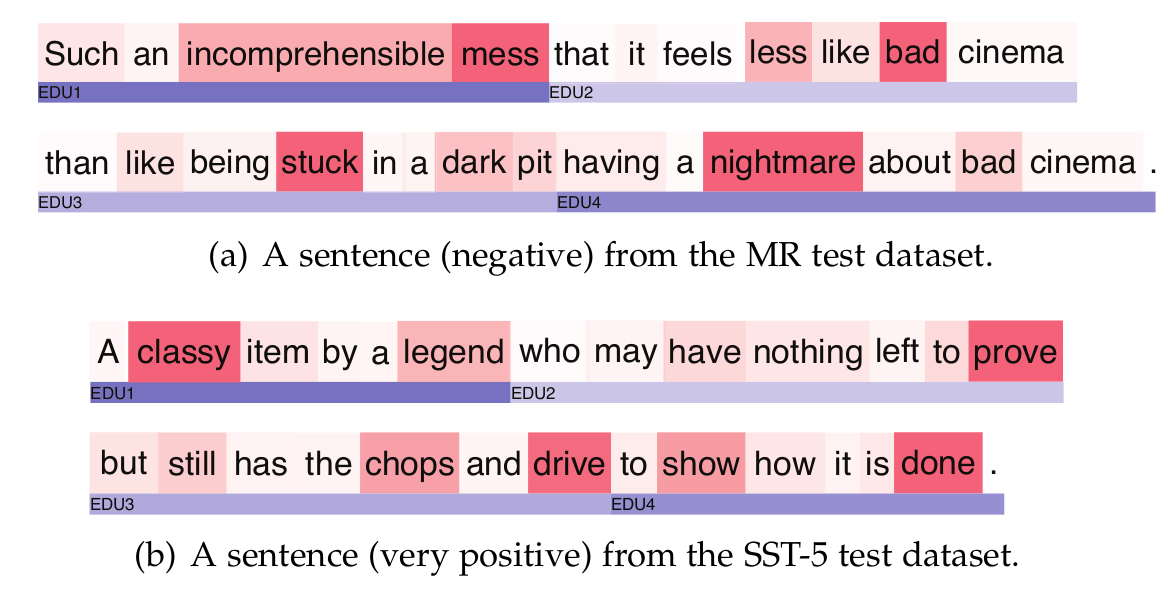

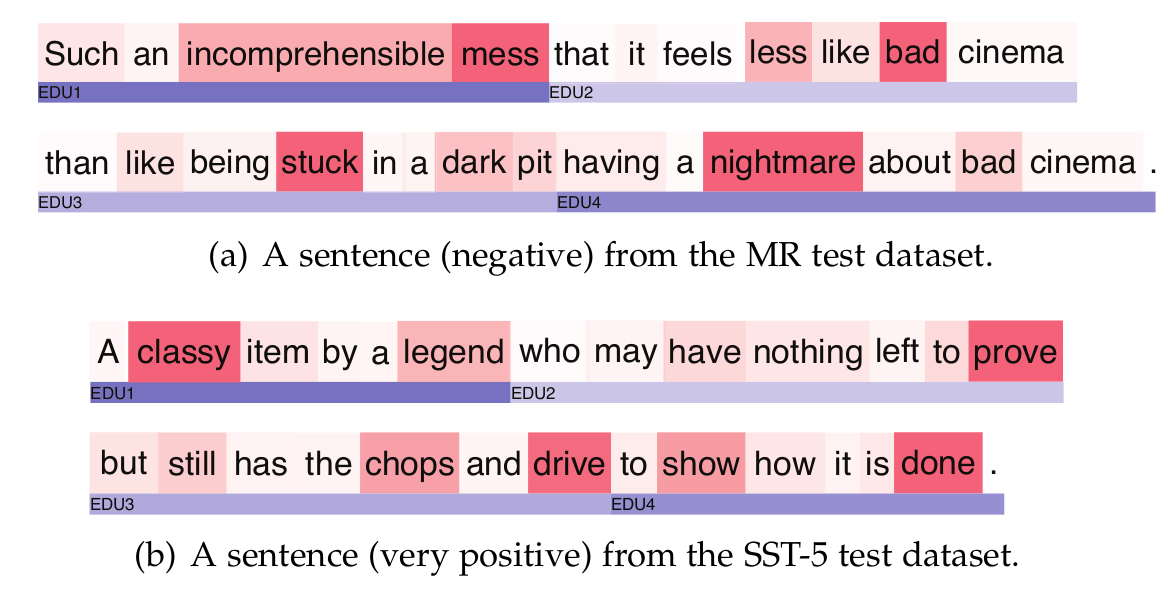

Intuition

Naive idea:

Keep the same "Linear Layer", but derive its weights on the fly

Linear layer: weights are stored in a matrix

Naive idea: each weight is derived from the two elements it connects

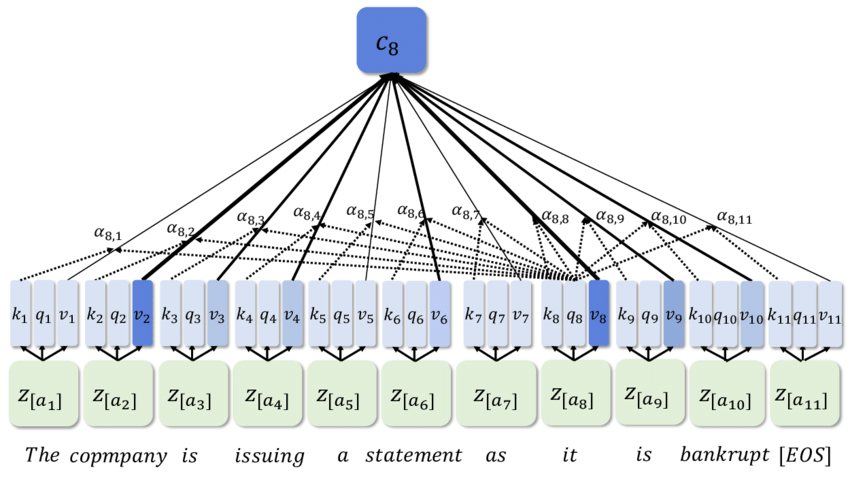

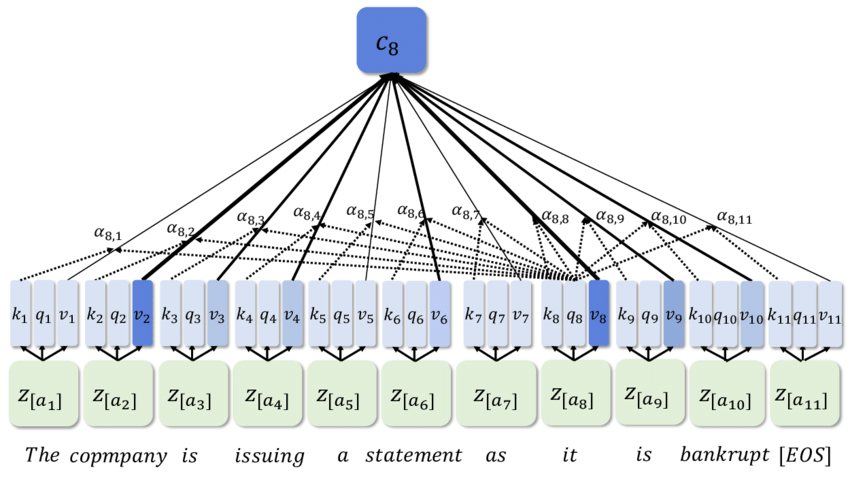

W(i, j): the significance of word i to word j
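A sketch of this naive idea. It assumes the output for word j is the W-weighted sum of all word vectors. `dynamic_layer` and `weight_fn` are illustrative names, and the dot product is only one possible choice of weight function. No weight matrix is stored, so any number of words fits:

```python
def dynamic_layer(tokens, weight_fn):
    """A 'linear layer' whose weights are computed from its inputs.

    W[i][j] = weight_fn(tokens[i], tokens[j]) is the significance of word i
    to word j; the output for word j is sum_i W[i][j] * tokens[i].
    """
    n, d = len(tokens), len(tokens[0])
    W = [[weight_fn(tokens[i], tokens[j]) for j in range(n)] for i in range(n)]
    outputs = []
    for j in range(n):
        out = [0.0] * d
        for i in range(n):
            for k in range(d):
                out[k] += W[i][j] * tokens[i][k]
        outputs.append(out)
    return outputs

def dot(u, v):
    """Hypothetical weight function: a plain dot product."""
    return sum(a * b for a, b in zip(u, v))

print(len(dynamic_layer([[1, 0], [0, 1]], dot)))                  # 2
print(len(dynamic_layer([[1, 0], [0, 1], [1, 1], [2, 0]], dot)))  # 4
```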

Simplest choice: W(i, j) = sim(w_i, w_j), the similarity between words i and j

Here w_i is the token vector of word i

Cosine similarity is convenient: it is bounded in [-1, 1] and does not depend on vector length
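For reference, cos(w_i, w_j) = (w_i · w_j) / (|w_i| |w_j|). The following small sketch builds the full weight matrix from it, using toy vectors and plain Python. The matrix is symmetric and has 1s on the diagonal:

```python
import math

def cosine_similarity(u, v):
    """cos(u, v) = (u . v) / (|u| |v|), always in [-1, 1]."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def similarity_weights(tokens):
    """W[i][j] = cos(w_i, w_j) for every pair of tokens."""
    return [[cosine_similarity(u, v) for v in tokens] for u in tokens]

tokens = [[1.0, 0.0], [0.0, 2.0], [3.0, 3.0]]
for row in similarity_weights(tokens):
    print([round(w, 3) for w in row])
```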

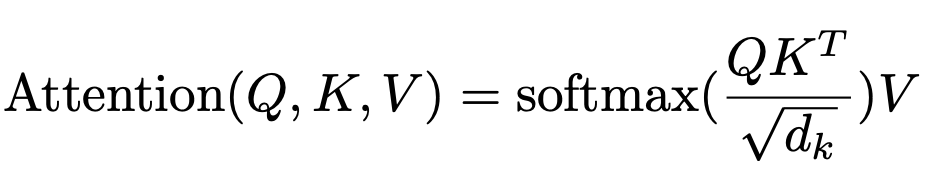

Give even more control to the NNs!
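This sketch assumes the formula on this slide is the standard scaled dot-product attention of the Transformer. Under that reading, "more control" means the network learns three projections (queries, keys, values) instead of comparing raw tokens. The code is plain Python, and the random matrices are stand-ins for learned weights:

```python
import math
import random

def matmul(A, B):
    """Multiply two matrices stored as lists of rows: (n x d) @ (d x m)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(X, W_q, W_k, W_v):
    """Scaled dot-product self-attention with learned projections.

    Q = X W_q, K = X W_k, V = X W_v
    out_i = sum_j softmax_j(q_i . k_j / sqrt(d_k)) * v_j
    """
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(K[0])
    out = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, V)) for c in range(len(V[0]))])
    return out

def random_matrix(rows, cols):
    return [[random.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]

random.seed(0)
d_model, d_head = 4, 3
# Stand-ins for learned parameters.
W_q, W_k, W_v = (random_matrix(d_model, d_head) for _ in range(3))
X = random_matrix(5, d_model)  # 5 tokens of dimension d_model
out = self_attention(X, W_q, W_k, W_v)
print(len(out), len(out[0]))  # 5 3
```

Each output is a convex combination of the value vectors, because the softmax weights are non-negative and sum to 1.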

Where does that formula come from?

sim is the similarity function
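Assuming the slide's formula is the usual softmax attention, here is one way to see where it comes from. Write each output as a similarity-weighted average of the values, normalized so that the weights sum to 1. Any non-negative sim works. Choosing an exponentiated, scaled dot product gives exactly the softmax:

```latex
\mathrm{out}_i = \frac{\sum_j \mathrm{sim}(q_i, k_j)\, v_j}{\sum_j \mathrm{sim}(q_i, k_j)},
\qquad
\mathrm{sim}(q, k) = \exp\!\left(\frac{q^\top k}{\sqrt{d_k}}\right)
\;\Longrightarrow\;
\mathrm{out}_i = \sum_j \mathrm{softmax}_j\!\left(\frac{q_i^\top k_j}{\sqrt{d_k}}\right) v_j

\text{In matrix form: } \mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^\top}{\sqrt{d_k}}\right) V
```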

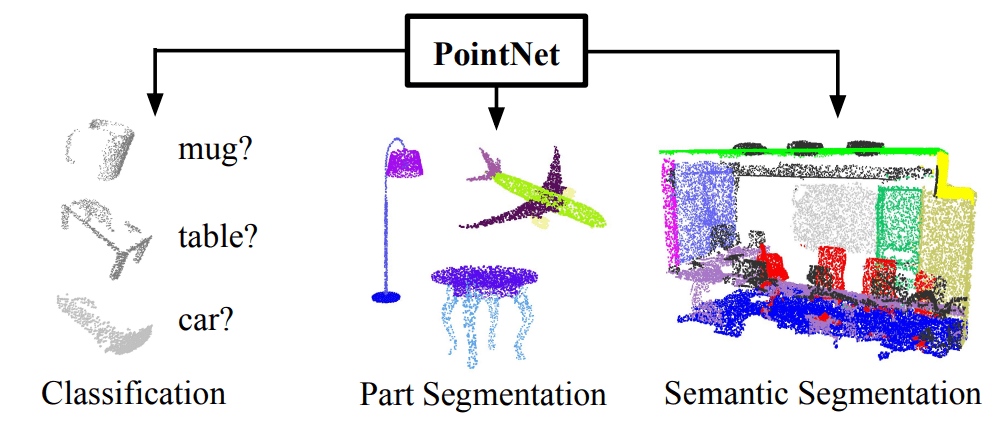

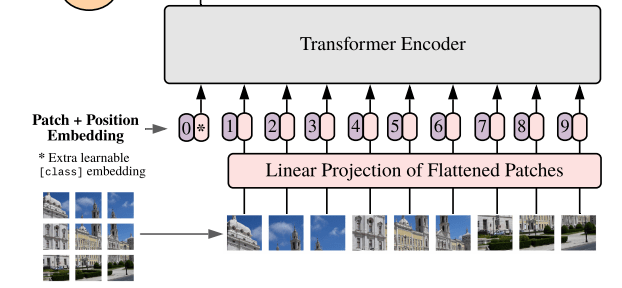

ViT

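ViT (Vision Transformer) splits the image into patches, treats each patch as a token, and adds a positional encoding to it

Attention alone ignores where tokens are, so the encoding is what accounts for the patches' 2D positions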
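A sketch of ViT-style tokenization in plain Python. The original ViT also applies a learned linear projection to each flattened patch and uses learned position embeddings. Here the projection is skipped, and a fixed 2D sinusoidal encoding stands in for the learned one as an assumption for illustration. Half of its dimensions encode the row and half encode the column:

```python
import math

def patchify(image, p):
    """Split an H x W single-channel image into non-overlapping p x p patches.

    Returns (row, col, flattened_patch) for each patch, in raster order.
    """
    H, W = len(image), len(image[0])
    patches = []
    for r in range(H // p):
        for c in range(W // p):
            flat = [image[r * p + a][c * p + b] for a in range(p) for b in range(p)]
            patches.append((r, c, flat))
    return patches

def pos_encoding_2d(row, col, dim):
    """Hypothetical fixed 2D sinusoidal encoding (dim must be divisible by 4)."""
    half = dim // 2
    enc = []
    for pos in (row, col):
        for k in range(half // 2):
            freq = 1.0 / (10000 ** (2 * k / half))
            enc += [math.sin(pos * freq), math.cos(pos * freq)]
    return enc

def vit_tokens(image, p):
    """Patch tokens with positional information added (no projection here)."""
    tokens = []
    for r, c, flat in patchify(image, p):
        pe = pos_encoding_2d(r, c, len(flat))
        tokens.append([x + e for x, e in zip(flat, pe)])
    return tokens

blank = [[0.0] * 4 for _ in range(4)]
tokens = vit_tokens(blank, 2)
print(len(tokens))  # 4
```

In a blank image all four patches are identical. They only become distinguishable tokens once the positional encoding is added.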

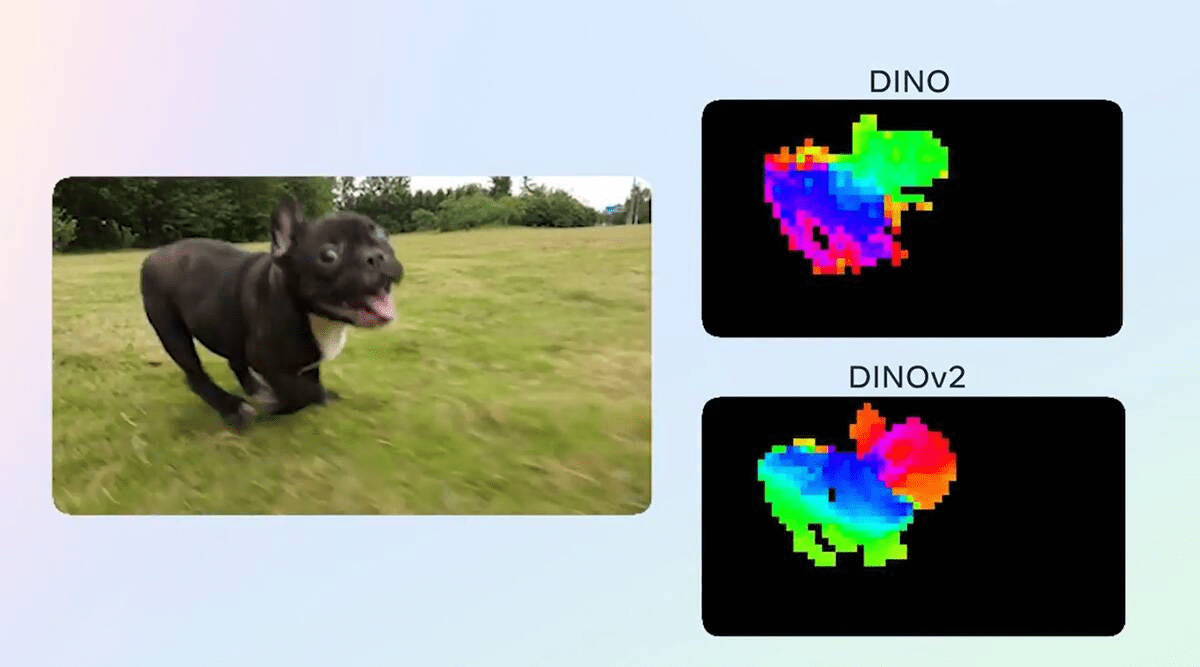

Segment Anything uses a large ViT as its image encoder

DINO uses large ViTs as backbones

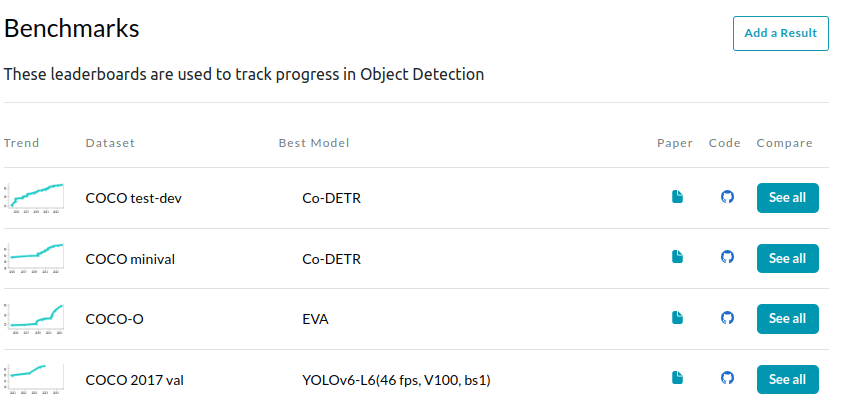

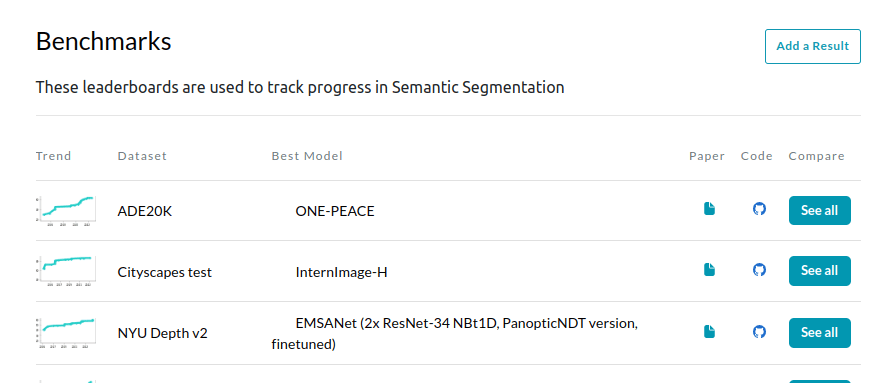

ViT-based models now top most CV benchmarks

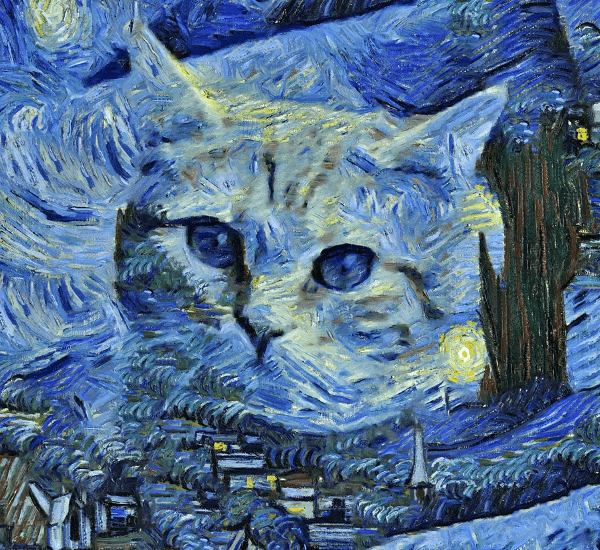

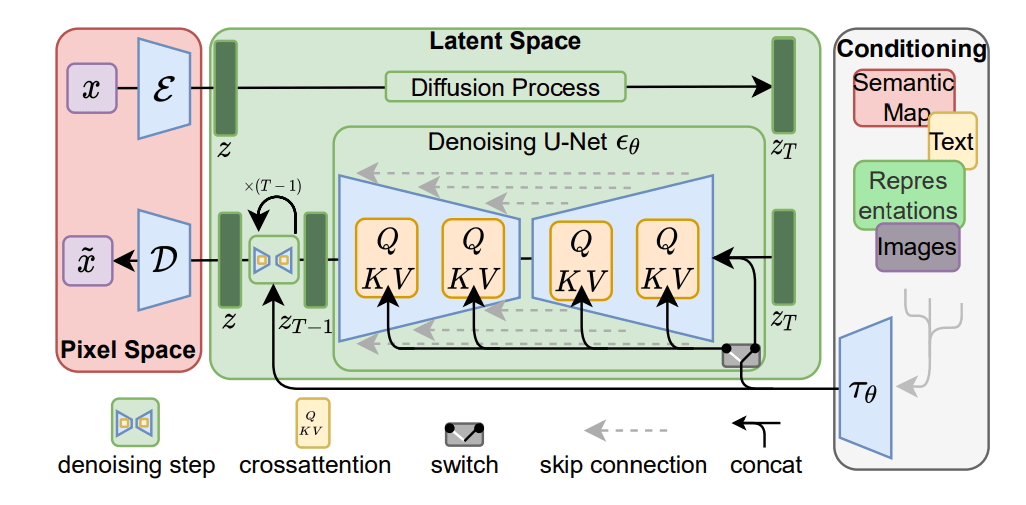

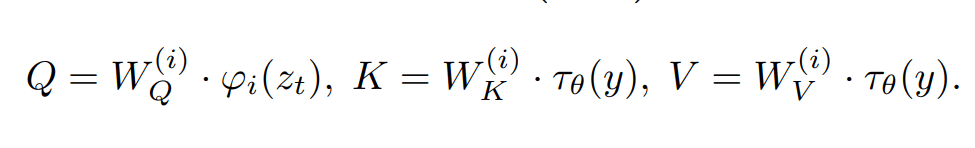

Stable Diffusion

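The denoising U-Net is not a pure ViT: it is a convolutional U-Net with transformer (attention) blocks inserted at several resolutions

The text prompt is integrated in those blocks via cross-attention: the image latents provide the queries, and the text embeddings provide the keys and values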
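A minimal cross-attention sketch using toy vectors. Learned projections are omitted, so the keys and values are the text tokens themselves and must share the image tokens' dimension. The output has one vector per image token, no matter how long the prompt is:

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(image_tokens, text_tokens):
    """Image tokens (queries) attend to text tokens (keys = values here).

    In a real model, Q comes from the image features and K, V from the text
    embeddings, each through its own learned linear layer.
    """
    d = len(text_tokens[0])
    out = []
    for q in image_tokens:
        scores = [sum(a * b for a, b in zip(q, t)) / math.sqrt(d) for t in text_tokens]
        w = softmax(scores)
        out.append([sum(wi * t[c] for wi, t in zip(w, text_tokens)) for c in range(d)])
    return out

image_tokens = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # e.g. 3 latent pixels
short_prompt = [[2.0, 0.0]]                            # 1 text token
long_prompt = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0], [0.0, 0.0]]
print(len(cross_attention(image_tokens, short_prompt)))  # 3
print(len(cross_attention(image_tokens, long_prompt)))   # 3
```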

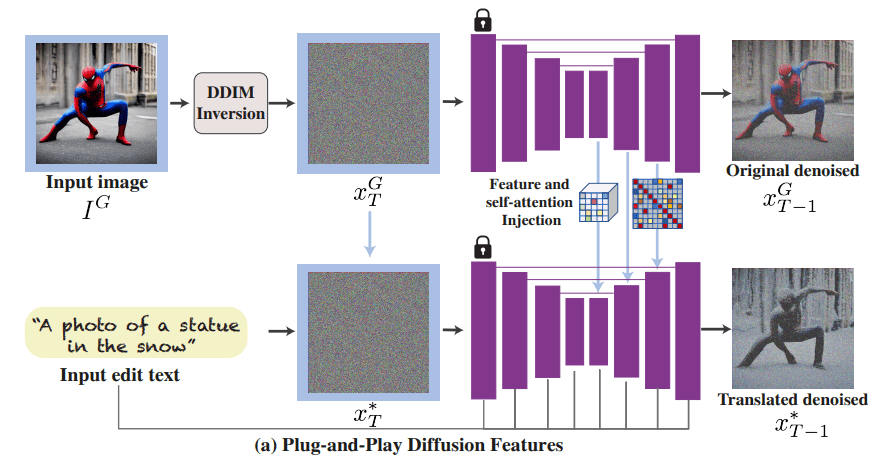

Image editing tricks

During diffusion, intermediate activations recorded while generating the original image are injected into the generation of the new image
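The slide does not say which activations are injected. One common variant, used for example by Prompt-to-Prompt, reuses the cross-attention maps. In this hypothetical sketch, the source pass records the attention weights and the edit pass applies them to the new prompt's values. `attention_layer`, `store` and `injected` are illustrative names, not a library API:

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(Q, K):
    """Attention map: one softmax row over the keys for every query."""
    d = len(K[0])
    return [softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in K]) for q in Q]

def apply_weights(weights, V):
    """Weighted sums of the value vectors."""
    return [[sum(w * v[c] for w, v in zip(row, V)) for c in range(len(V[0]))]
            for row in weights]

def attention_layer(Q, K, V, store=None, injected=None):
    """Hypothetical attention layer that can record or reuse its map.

    Source pass: pass a list as `store` to record the attention map.
    Edit pass: pass a recorded map as `injected`; Q and K are then ignored.
    """
    weights = injected if injected is not None else attention_weights(Q, K)
    if store is not None:
        store.append(weights)
    return apply_weights(weights, V)

# Source pass (original prompt): record the map.
recorded = []
Q = [[1.0, 0.0], [0.0, 1.0]]  # queries from image latents (toy)
K_src, V_src = [[1.0, 0.0], [0.0, 1.0]], [[1.0, 1.0], [2.0, 2.0]]
attention_layer(Q, K_src, V_src, store=recorded)

# Edit pass (new prompt): reuse the source map with the new values.
K_new, V_new = [[0.0, 1.0], [1.0, 0.0]], [[5.0, 0.0], [0.0, 5.0]]
edited = attention_layer(Q, K_new, V_new, injected=recorded[0])
```

Reusing the map keeps "which pixel attends to which word" from the original image, while the new prompt supplies the content.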

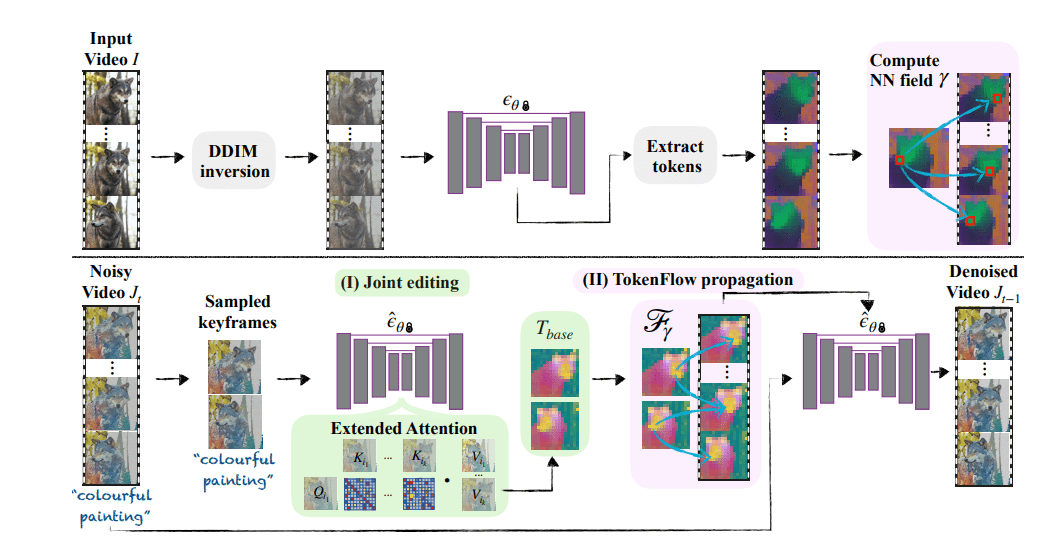

Video editing tricks

The same trick works for video, but the injected features are first warped with optical flow
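A sketch of the warping step. It assumes a backward flow field: for each target pixel, the flow gives the offset to where that pixel came from in the source frame. Nearest-neighbour sampling keeps the code short. Real pipelines usually sample bilinearly, for example with PyTorch's `torch.nn.functional.grid_sample`:

```python
def warp(features, flow):
    """Backward-warp a 2D feature map with optical flow (nearest neighbour).

    flow[y][x] = (dy, dx): pixel (y, x) of the target frame is read from
    (y + dy, x + dx) in the source frame; out-of-bounds reads give 0.
    """
    H, W = len(features), len(features[0])
    out = [[0.0] * W for _ in range(H)]
    for y in range(H):
        for x in range(W):
            dy, dx = flow[y][x]
            sy, sx = round(y + dy), round(x + dx)
            if 0 <= sy < H and 0 <= sx < W:
                out[y][x] = features[sy][sx]
    return out

feat = [[1, 2, 3], [4, 5, 6]]
shift = [[(0, 1)] * 3 for _ in range(2)]  # every pixel comes from its right neighbour
print(warp(feat, shift))  # [[2, 3, 0.0], [5, 6, 0.0]]
```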

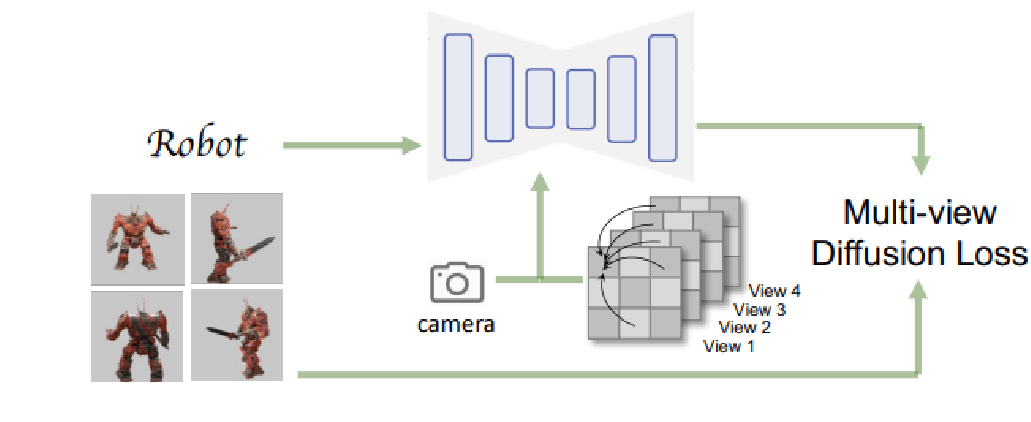

Text-to-3D

Attention is applied across images (e.g. across several views of the same object)
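The slide does not specify how attention is applied across images, so this sketch assumes one common approach. The tokens of all views are concatenated, so each view's queries attend to every view's keys and values. The code is plain Python with projections omitted:

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(q, keys, values):
    """One query attends over a set of keys/values (scaled dot product)."""
    d = len(keys[0])
    w = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys])
    return [sum(wi * v[c] for wi, v in zip(w, values)) for c in range(len(values[0]))]

def multiview_attention(views):
    """Each view's tokens attend to the tokens of all views together."""
    all_tokens = [t for view in views for t in view]
    return [[attend(q, all_tokens, all_tokens) for q in view] for view in views]

front = [[1.0, 0.0], [0.0, 1.0]]  # tokens of view 0 (toy)
side_a = [[1.0, 1.0]]             # tokens of view 1
side_b = [[3.0, -1.0]]            # a different view 1
out_a = multiview_attention([front, side_a])
out_b = multiview_attention([front, side_b])
print(out_a[0] != out_b[0])  # True: view 0's output depends on view 1
```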

Attention is used to inject image features into a 3D structure

Attention in CV

By xallt
