PostgreSQL at Scale

Writing reliable migrations

#WhoAmI

Previously:

zil.kucinskas@gmail.com

Ruby on Rails migrations

class RemoveSomeColumnFromUsers < ActiveRecord::Migration[6.0]

def change

remove_column :users, :some_column

end

endDeveloper writes a file:

rails db:migrateRun (for example at deployment time):

ALTER TABLE "users" DROP COLUMN "some_column";Rails will generate and execute the following DDL:

Postgres main job is to keep your data consistent

Schema changes are challenging. It's like changing a car tire while it barrels down the motorway.

Consistency problems

Case #1 - column is added in the middle of a SELECT statement's execution

Expectation - we don’t want some rows in the result set to have the new column and others not.

Case #2 - column is dropped between the issuance of an UPDATE that writes to it and the actual execution of that UPDATE

Expectation - we certainly don’t want to write that obsolete value to some undefined location in memory or on disk!

Solution: PostgreSQL locking

Generally in Postgres, we have 3 main mechanisms of locking:

* Table-level locks

* Row-level locks

* Advisory locks

For most DDL changes, an ACCESS EXCLUSIVE lock will be required, a very strict lock that prevents all reads and all writes for the duration of the query.

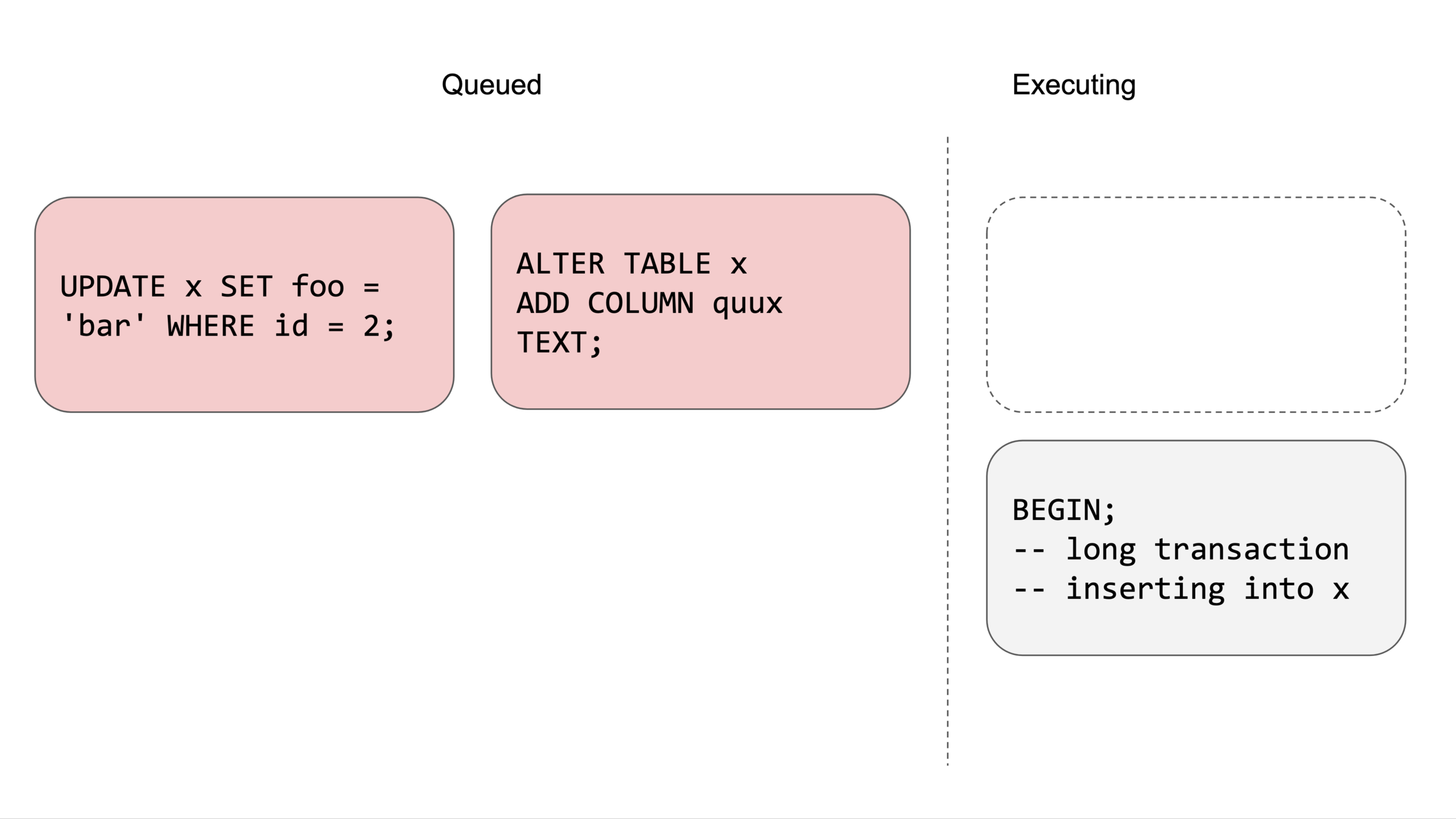

Locking problems

Queries blocked for a long time by slow transaction and migration

When developers learn about locks?Common Case #1 - Deadlocks

When developers learn about locks?Common Case #2 - Migration

When I realize that my simple migration caused a major production outage

Reliable Migrations

Pitfalls and solutions

🚫 Removing a column

class RemoveSomeColumnFromUsers < ActiveRecord::Migration[6.0]

def change

remove_column :users, :some_column

end

endProblems in production

PGError: ERROR: column "some_column" does not exist✅ Removing a column

class User < ApplicationRecord

self.ignored_columns = ["some_column"]

endclass RemoveSomeColumnFromUsers < ActiveRecord::Migration[6.0]

def change

remove_column :users, :some_column

end

end1. Ignore from the cache

2. Deploy the code

3. Write a migration to remove the column

4. Deploy and run migration

Note: Don't forget to clean up the code

🚫 Adding a column with a default value

class AddSomeColumnToUsers < ActiveRecord::Migration[6.0]

def change

add_column :users, :some_column, :text, default: "default_value"

end

endProblems

* Adding a column with a default value to an existing table causes the entire table to be rewritten.

Note: This operation is safe in Postgres 11+

✅ Adding a column with a default value

class AddSomeColumnToUsers < ActiveRecord::Migration[6.0]

def up

add_column :users, :some_column, :text

change_column_default :users, :some_column, "default_value"

end

def down

remove_column :users, :some_column

end

end🚫 Backfilling data

class AddSomeColumnToUsers < ActiveRecord::Migration[6.0]

def change

add_column :users, :some_column, :text

User.update_all some_column: "default_value"

end

endProblems:

* Backfilling in the same transaction that alters a table locks the table for the duration of the backfill.

* Running a single query to update data can cause issues for large tables

✅ Backfilling data

class BackfillSomeColumn < ActiveRecord::Migration[6.0]

disable_ddl_transaction!

def up

User.unscoped.in_batches do |relation|

relation.update_all some_column: "default_value"

sleep(0.01) # throttle

end

end

endThree key elements of backfilling data safely:

* Batching

* Throttling

* Running outside of a transaction (Rails console, background jobs or disable_ddl_transaction!)

🚫 Renaming or changing the type of a column

class RenameOrChangeSomeColumn < ActiveRecord::Migration[6.0]

def change

# Example A. Renaming column

rename_column :users, :some_column, :new_name

# Example B. Changing column type

change_column :users, :some_column, :new_type

end

endCaveats - safe changes:

- Changing between varchar and text columns [9.1+]

- Increasing the precision of a decimal or numeric column [9.2+]

- Making a decimal or numeric column unconstrained [9.1+]

- Changing between timestamp and timestamptz columns when session time zone is UTC in Postgres 12+

✅ Renaming or changing the type of a column

- Create a new column

- Write to both columns

- Backfill data from the old column to the new column

- Move reads from the old column to the new column

- Stop writing to the old column

- Drop the old column

🚫 Renaming a table

class RenameUsersToCustomers < ActiveRecord::Migration[6.0]

def change

rename_table :users, :customers

end

end✅ Renaming a table

- Create a new table

- Write to both tables

- Backfill data from the old table to new table

- Move reads from the old table to the new table

- Stop writing to the old table

- Drop the old table

🚫 Creating a table with the force option

class CreateUsers < ActiveRecord::Migration[6.0]

def change

create_table :users, force: true do |t|

# ...

end

end

endProblems in production:

* The force option can drop an existing table.

✅ Creating a table with the force option

class CreateUsers < ActiveRecord::Migration[6.0]

def change

create_table :users do |t|

# ...

end

end

end🚫 Using change_column_null with a default value

class ChangeSomeColumnNull < ActiveRecord::Migration[6.0]

def change

change_column_null :users, :some_column, false, "default_value"

end

endProblems in production:

* This generates a single UPDATE statement to set the default value.

✅ Using change_column_null with a default value

class ChangeSomeColumnNull < ActiveRecord::Migration[6.0]

def change

change_column_null :users, :some_column, false

end

end1. Backfill the column safely.

2. Execute migration:

Note: In Postgres, change_column_null is still not safe with this method.

🚫 Setting NOT NULL on an existing column

class SetSomeColumnNotNull < ActiveRecord::Migration[6.0]

def change

change_column_null :users, :some_column, false

end

endProblems in production:

In Postgres, setting NOT NULL on an existing column requires an AccessExclusiveLock, which is expensive on large tables.

✅ Setting NOT NULL on an existing column

class SetSomeColumnNotNull < ActiveRecord::Migration[6.0]

def change

execute 'ALTER TABLE "users" ADD CONSTRAINT "users_some_column_null"'\

'CHECK ("some_column" IS NOT NULL) NOT VALID'

end

endclass ValidateSomeColumnNotNull < ActiveRecord::Migration[6.0]

def change

execute 'ALTER TABLE "users" VALIDATE CONSTRAINT "users_some_column_null"'

end

endNote: This is not 100% the same as NOT NULL column constraint. Here’s a good explanation.

1. Add a constraint

2. Validate it in a separate transaction

🚫 Adding an index

class AddSomeIndexToUsers < ActiveRecord::Migration[6.0]

def change

add_index :users, :some_column

end

endProblems in production

* In Postgres, adding an index non-concurrently locks the table.

✅ Adding an index

class AddSomeIndexToUsers < ActiveRecord::Migration[6.0]

disable_ddl_transaction!

def change

add_index :users, :some_column, algorithm: :concurrently

end

endAdd indexes concurrently:

* If you forget disable_ddl_transaction!, the migration will fail.

* Also, note that indexes on new tables (those created in the same migration) don’t require this.

🚫 Removing an index

class RemoveSomeIndexFromUsers < ActiveRecord::Migration[6.0]

def change

remove_index :users, :some_column

end

endProblems in production

* In Postgres, removing an index non-concurrently locks the table for a brief period.

✅ Removing an index

class AddSomeIndexToUsers < ActiveRecord::Migration[6.0]

disable_ddl_transaction!

def change

remove_index :users, :some_column, algorithm: :concurrently

end

endRemove indexes concurrently:

🚫 Adding a reference

class AddReferenceToUsers < ActiveRecord::Migration[6.0]

def change

add_reference :users, :city

end

endProblems in production

* Rails adds an index non-concurrently to references by default, which is problematic for Postgres.

✅ Adding a reference

class AddReferenceToUsers < ActiveRecord::Migration[6.0]

disable_ddl_transaction!

def change

add_reference :users, :city, index: { algorithm: :concurrently }

end

endMake sure the index is added concurrently.

🚫 Adding a foreign key

class AddForeignKeyOnUsers < ActiveRecord::Migration[6.0]

def change

# Case A.

add_foreign_key :users, :orders

# Case B.

add_reference :users, :order, foreign_key: true

end

endProblems in production

* In Postgres, new foreign keys are validated by default, which acquires a ShareRowExclusiveLock that can be expensive on large tables.

✅ Adding a foreign key

class AddForeignKeyOnUsers < ActiveRecord::Migration[6.0]

def change

add_foreign_key :users, :orders, validate: false

end

endInstead, validate it in a separate migration with a more agreeable RowShareLock. This approach is documented by Postgres to have “the least impact on other work.”

class ValidateForeignKeyOnUsers < ActiveRecord::Migration[6.0]

def change

validate_foreign_key :users, :orders

end

endThen validate it in a separate migration.

🚫 Adding a json column

class AddPropertiesToUsers < ActiveRecord::Migration[6.0]

def change

add_column :users, :properties, :json

end

endProblems in production

* In Postgres, there’s no equality operator for the json column type, which can cause errors for existing SELECT DISTINCT queries.

✅ Adding a json column

class AddPropertiesToUsers < ActiveRecord::Migration[6.0]

def change

add_column :users, :properties, :jsonb

end

endUse jsonb instead:

Closing remarks

Timeouts

ALTER ROLE myuser SET statement_timeout = '1h';

ALTER ROLE myuser SET lock_timeout = '10s';A good practice is to set:

* long statement timeout

* short lock timeout

Impact:

* migrations can run for a while, and if a migration can’t acquire a lock in a timely manner, other statements won’t be stuck behind it.

Recap

| Operation | Safety | Exceptions / Solutions |

|---|---|---|

| Creating tables | ✅ Safe | |

| Removing tables | ✅ Safe | |

| Adding columns | ⚠️ Warning |

✅ No constraints on the column ⚠️ Prefer jsonb over json |

| Removing columns | ⚠️ Warning | ✅ When telling AR to ignore column first |

| Renaming columns or renaming tables | 🚫 Unsafe | ✅ Add new column/table, write to both, remove the old one |

Recap

| Operation | Safety | Exceptions / Solutions |

|---|---|---|

| Creating indexes | ⚠️ Warning | ✅ Create concurrently |

| Removing indexes | ⚠️ Warning | ✅ Create concurrently |

| Renaming index | 🚫 Unsafe | ⚠️ Postgres 12 lowers barrier |

| Adding a reference | ⚠️ Warning | ✅ Create concurrently |

| Removing reference | ✅ Safe | |

| Adding a foreign key | ⚠️ Warning | ✅ Add foreign key without validation and validate in a separate migration |

| Removing a foreign key | ✅ Safe |

Useful gems

* strong_migrations (ankane from Instacart) - Catch unsafe migrations in development

* nandi (GoCardless) - Fear free PostgreSQL migrations for Rails

* zero_downtime_migrations (LendingHome) - Zero downtime migrations with ActiveRecord 3+ and PostgreSQL

* pg_ha_migrations (Braintree) - Enforces DDL/migration safety in Ruby on Rails project with an emphasis on explicitly choosing trade-offs and avoiding unnecessary magic.

* safe-pg-migrations (Doctolib) - ActiveRecord migrations for Postgres made safe.

Useful resources

1. Gitlab - What requires no downtime?

2. Gitlab::Database::MigrationHelper code

3. Gitlab Migrations Style Guide

4. PostgreSQL at Scale: Database Schema Changes Without Downtime

5. Rollout - Rails Migrations with Zero Downtime

6. When Postgres blocks: 7 tips for dealing with locks

7. Safe Operations For High Volume PostgreSQL

Write reliable migrations

Learn from others' failures, use the right tools to make your life easier and don't take your database down

Thank you for your attention.

Any questions?

Scan me to get presentation link

PostgreSQL at Scale - Writing Reliable Migrations

By Žilvinas Kučinskas

PostgreSQL at Scale - Writing Reliable Migrations

- 1,079