celery is digestive

@stalwartcoder

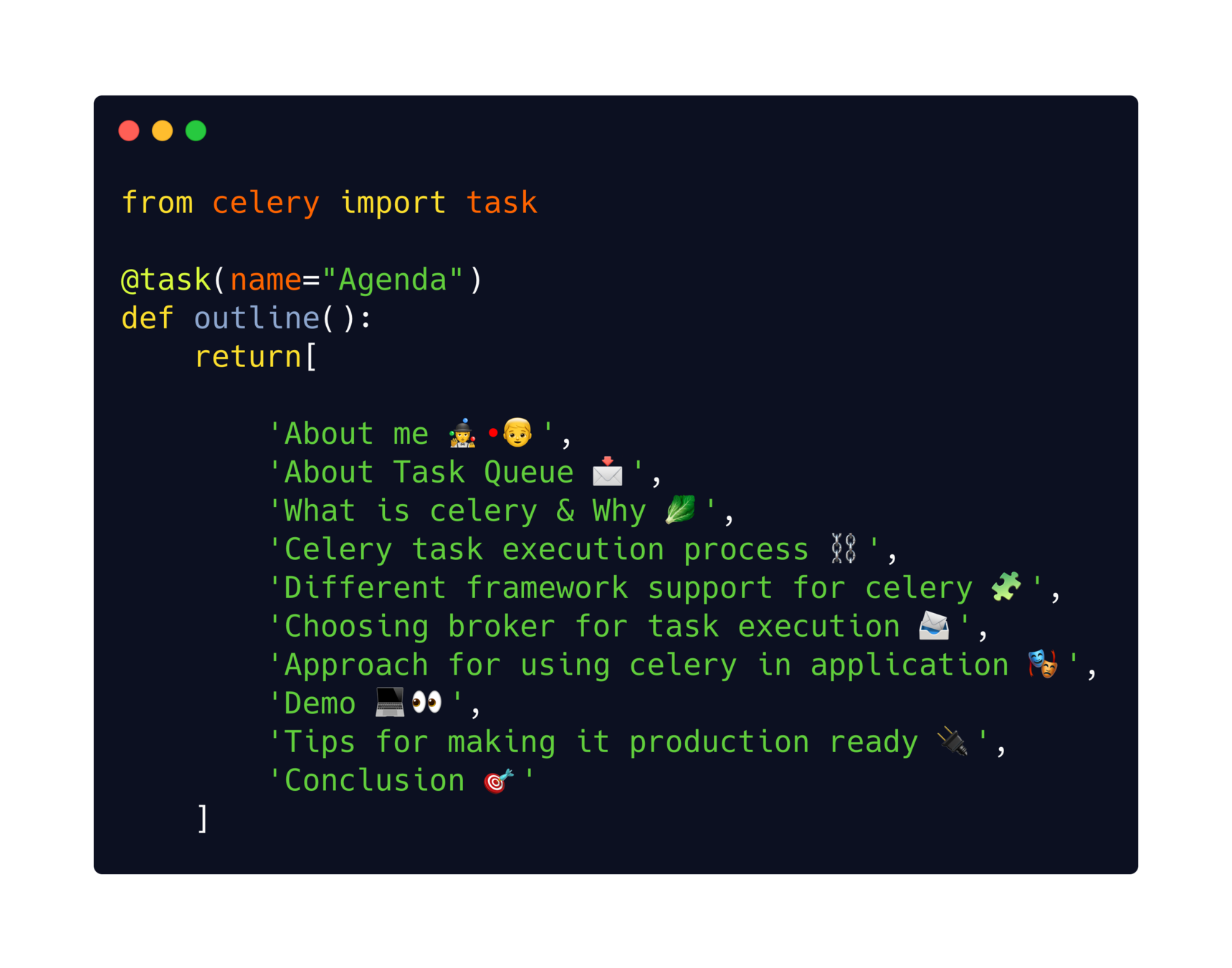

Agenda

Abhishek

👨💻 Software Eng. at Essentia SoftServ

🐍 Pythonista

👨👩👧👦 Community first person 💛

connect with me:

🚫 not "10x Engineer"

Task Queue


Manage background work - outside the usual HTTP request-response cycle.

For example, a web app could poll an API every 10 minutes to collect the names of the top cryptocurrencies.

A task queue would handle invoking the code that calls the API, processes the results and stores them in a persistent DB for later use.
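As a rough sketch of such a unit of work, the function below takes an already-fetched API payload, extracts the names and stores them in SQLite. The payload shape, the `top_coins` table and the function name are assumptions made for illustration; the actual API call is left out so the example stays self-contained.

```python
import sqlite3


def store_top_names(payload, conn):
    """Process an API payload and persist the coin names for later use.

    `payload` is assumed to be a list of dicts with a "name" key
    (a hypothetical response shape, not a real API contract).
    """
    names = [item["name"] for item in payload]
    conn.execute("CREATE TABLE IF NOT EXISTS top_coins (pos INTEGER, name TEXT)")
    conn.execute("DELETE FROM top_coins")  # keep only the latest snapshot
    conn.executemany(
        "INSERT INTO top_coins (pos, name) VALUES (?, ?)",
        list(enumerate(names, start=1)),
    )
    conn.commit()
    return names


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    sample = [{"name": "Bitcoin"}, {"name": "Ethereum"}]  # made-up sample data
    print(store_top_names(sample, conn))
```

A task queue would run a function like this in the background on a schedule, instead of inside a request handler.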

The input to a task queue is a unit of work: a separate piece of code.

📬

Task Queue is a system for parallel execution of tasks.

Need of Task Queue... 🕵️

✊ Useful in certain situations.

👉 General guidelines :

- Are you using a lot of cron jobs to process data in the background?

- Does your application take more than a few seconds to generate a response?

- Do you wish you could distribute the processing of the data generated by your application among many servers?

- Or do you need asynchronous DB updates?

[Diagram: the Client sends a task to the Broker, which distributes tasks to three Workers.]
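The client, broker and worker roles can be sketched with nothing but the standard library. This is a simplified, in-process illustration (a `queue.Queue` stands in for the broker and threads for the workers), not how a real distributed task queue is implemented; the function name `run_tasks` is made up for the example.

```python
import queue
import threading


def run_tasks(tasks, num_workers=3):
    """Send (func, args) tasks through a queue to a pool of worker threads."""
    broker = queue.Queue()  # stands in for the message broker
    results = {}
    lock = threading.Lock()

    def worker():
        while True:
            item = broker.get()
            if item is None:  # sentinel: no more work for this worker
                break
            task_id, func, args = item
            value = func(*args)
            with lock:
                results[task_id] = value

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()

    # The "client" sends tasks to the broker.
    for task_id, (func, args) in enumerate(tasks):
        broker.put((task_id, func, args))
    # One sentinel per worker so every thread shuts down.
    for _ in threads:
        broker.put(None)

    for t in threads:
        t.join()
    return [results[i] for i in range(len(tasks))]


if __name__ == "__main__":
    print(run_tasks([(pow, (2, 3)), (max, (1, 9)), (sum, ([1, 2, 3],))]))
```

Whichever worker is free picks up the next task, which is the "distributes task" arrow in the diagram.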

WAIT!!

I’ve heard Asynchronous before!

Yes, AJAX

Some of the response time issues can be solved :

- With AJAX responses that continually enhance the initial response.

- Only if the AJAX responses also complete within a reasonable amount of time.

You need a queue when:

- Long processing times can’t be avoided in generating responses.

- You want application data to be continuously processed in the background and readily available when requested.

Different Frameworks or Projects for TQ

🔷 Huey

Blah....blahh...blaahhh

Let's talk about *celery*

Do it....

Celery is an asynchronous task queue/job queue based on distributed message passing.

It is focused on real-time operation, and scheduling as well.

Tasks can execute asynchronously (in the background) or synchronously (wait until ready).
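The difference between the two modes can be illustrated without Celery, using the standard library's `concurrent.futures`. This is only an analogy: a `Future` plays a role similar to the handle a task queue gives back for a submitted task, and the helper name `run_both_ways` is made up.

```python
from concurrent.futures import ThreadPoolExecutor


def add(x, y):
    return x + y


def run_both_ways():
    with ThreadPoolExecutor(max_workers=1) as pool:
        # Asynchronous: submit() returns immediately with a handle (a Future).
        future = pool.submit(add, 4, 4)
        # ... the caller is free to do other work here ...
        background_value = future.result(timeout=5)  # fetch the value when needed

        # Synchronous: submit and wait right away until the result is ready.
        waited_value = pool.submit(add, 4, 4).result(timeout=5)
    return background_value, waited_value


if __name__ == "__main__":
    print(run_both_ways())
```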

Easy to integrate, with multi-broker support.

Key concept

- Message Broker

- Worker - Executors

De facto standard for Python

Why should I use it?

🤔

it's just simple!

Install celery

    $ pip install celery

Define task

    from celery import Celery

    app = Celery('tasks', broker='pyamqp://guest@localhost//')

    @app.task
    def add(x, y):
        return x + y

Run the celery worker

    $ celery -A tasks worker --loglevel=info

Execute task

    >>> from tasks import add
    >>> add.delay(4, 4)
    <AsyncResult: 889143a6-39a2-4e52-837b-d80d33efb22d>

- Minimize request/response cycle

- Smoother user experience

- Offload time/CPU-intensive processes

- Flexibility - many points of customization

- Supports webhooks and job routing

- Remote control of workers

- Logging, debugging & monitoring (Flower) support.

- Wide web framework support and actively developed.

- Amazing documentation and lots of tutorials!

🛠️ Framework Support

Celery Architecture

[Diagram: client 1 and client 2 send tasks to the Broker (Task Queue 1, Task Queue 2, ..., Task Queue N); the Broker distributes tasks to Celery Worker 1 and Celery Worker 2; the workers store task results in the Task Result Storage, from which clients get task results.]

Components: Celery, Broker, DB for task result

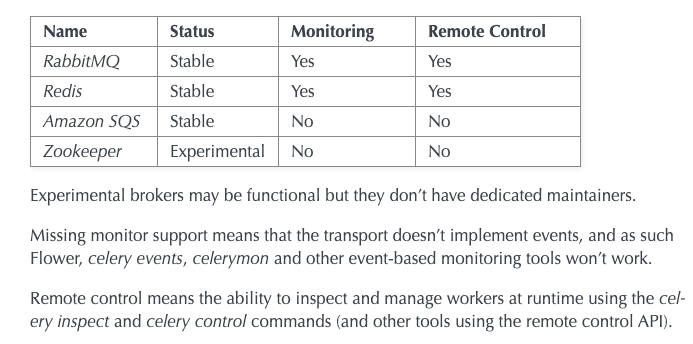

Selecting Broker

- RabbitMQ

- Redis

- AWS SQS

Finally !! 🤦♂️

Approach for using celery 👨💻

- Simple setup: single queue & single worker

- Large setup: multiple queues & dedicated workers with a concurrency pattern

- Key choices:

  - Broker

  - Result store
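To make these choices concrete, here is a minimal configuration sketch using Celery's lowercase setting names. The module name, the broker and result-store URLs, the queue names and the `tasks.generate_report` task are all assumptions for illustration, not recommendations from the talk.

```python
# celeryconfig.py (hypothetical module name)

# Key choice 1: the broker (assumed: RabbitMQ running on localhost).
broker_url = "pyamqp://guest@localhost//"

# Key choice 2: the result store (assumed: a local Redis instance).
result_backend = "redis://localhost:6379/0"

# Larger setup: route tasks to dedicated queues.
# The task names and queue names below are made up for illustration.
task_routes = {
    "tasks.add": {"queue": "default"},
    "tasks.generate_report": {"queue": "reports"},
}

# Number of concurrent worker processes per worker (assumed value).
worker_concurrency = 4
```

A module like this could be loaded with `app.config_from_object('celeryconfig')`, and a dedicated worker for one queue started with `celery -A tasks worker -Q reports --loglevel=info`.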

More detail :

Some Tips 😬

- Start simple!

- Watch for the results.

- Monitor it!

- Consume faster than you produce.

- Try concurrency over tasks.

- Keep your tasks clean.

- Replace your cron jobs!
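For the last tip, a cron entry can be expressed as a periodic task in the Celery configuration. The sketch below assumes a hypothetical task named `tasks.collect_top_names` and reuses the 10-minute polling interval from the earlier example.

```python
# Addition to a Celery configuration module (sketch).

# Run the hypothetical task "tasks.collect_top_names" every 10 minutes.
beat_schedule = {
    "collect-top-names-every-10-minutes": {
        "task": "tasks.collect_top_names",  # assumed task name
        "schedule": 600.0,                  # interval in seconds
    },
}
```

The schedule is driven by a separate scheduler process, started with `celery -A tasks beat`, while the workers execute the tasks it sends.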

More:

Resources 📚

Conclusion 💡

- Take a slow task / function

- Decouple it from system

- Run it asynchronously

- Don't use it for everything; task queues are not a magic wand!

celery is digestive

By Abhishek Mishra
