[Convolutional Neural Network :: Activation Functions Research]

Minor Project Presentation

Abhishek Kumar 2013ecs07

Department Of Computer Science and Engg.

Shri Mata Vaishno Devi University

Acknowledgement

- My Mentor: Dr Ajay Kaul

- My senior, alumnus & CTO Neuron: Mr. Rishabh Shukla

- My teachers at our college and Teammates

Outline

- Introduction of LMS, ML and Sentiment Analysis

- The technologies used to carry out the project

- Methodology and the working

- Findings and implementation

- Scope of the project

- Conclusion

Note: Each section is covered in detail in the submitted file.

Machine Learning Intro

Oh Yes, Machine Learning is here

Yes, A big Yes

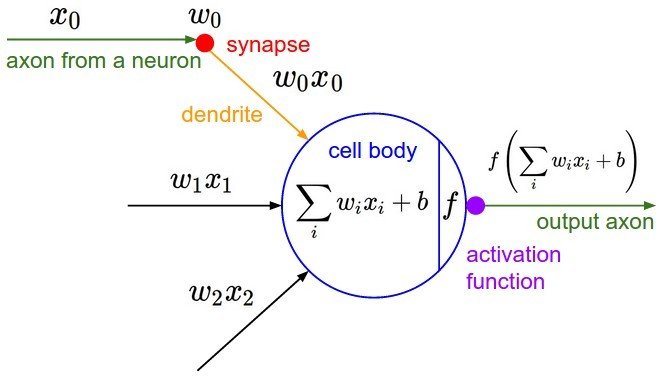

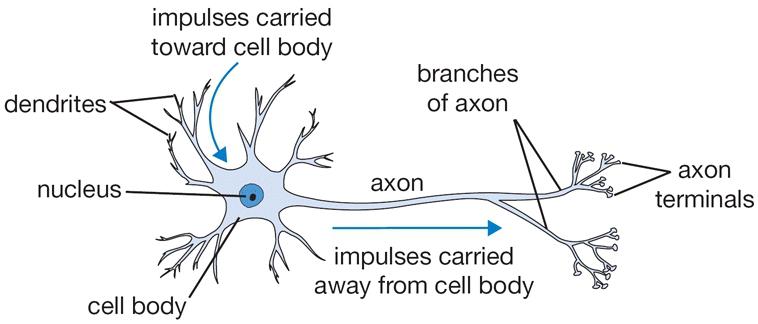

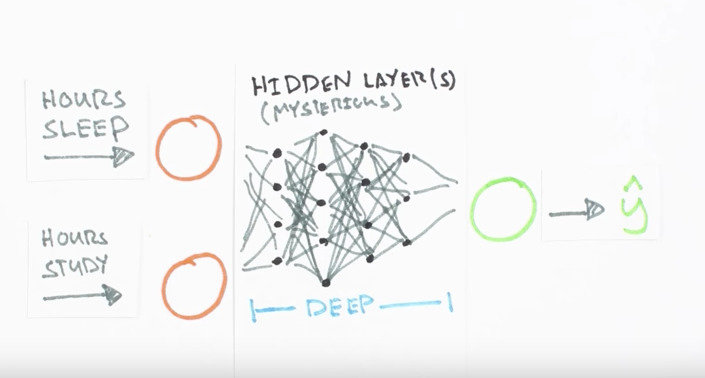

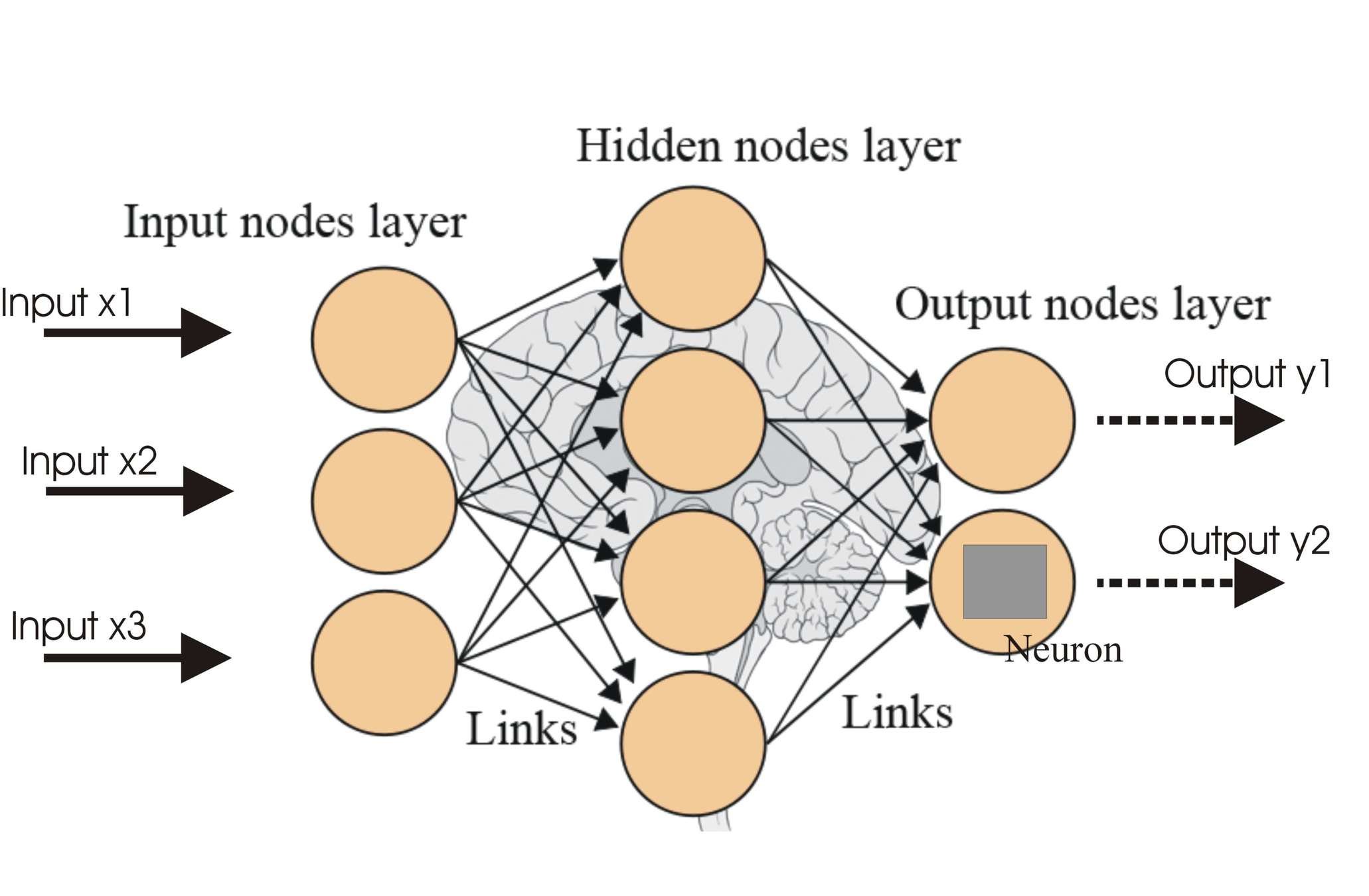

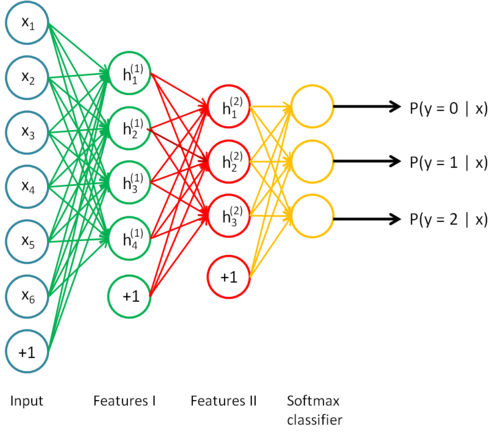

Neural Network

But much simpler

(simple neural network with 2 inputs and 5 hidden layers)

Input Layer

Each pixel in the image will be a feature (0 ~ 255)

Each image has three channels (R,G,B)

Each channel has 50*50 pixels

Total feature vector size = 50*50*3 = 7500
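The feature count above can be checked with a minimal NumPy sketch. The image here is random data standing in for a real 50x50 RGB picture, and the scaling to [0, 1] is an assumed preprocessing step that the slides do not mention.

```python
import numpy as np

# Hypothetical 50x50 RGB image with pixel values in 0..255 (random data for illustration).
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(50, 50, 3), dtype=np.uint8)

# Flatten every pixel of every channel into one feature vector.
features = image.reshape(-1)

# Assumed preprocessing step: scale pixel values to the range [0, 1].
features_scaled = features.astype(np.float32) / 255.0

print(features.shape)  # (7500,)
```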

Hidden Layer

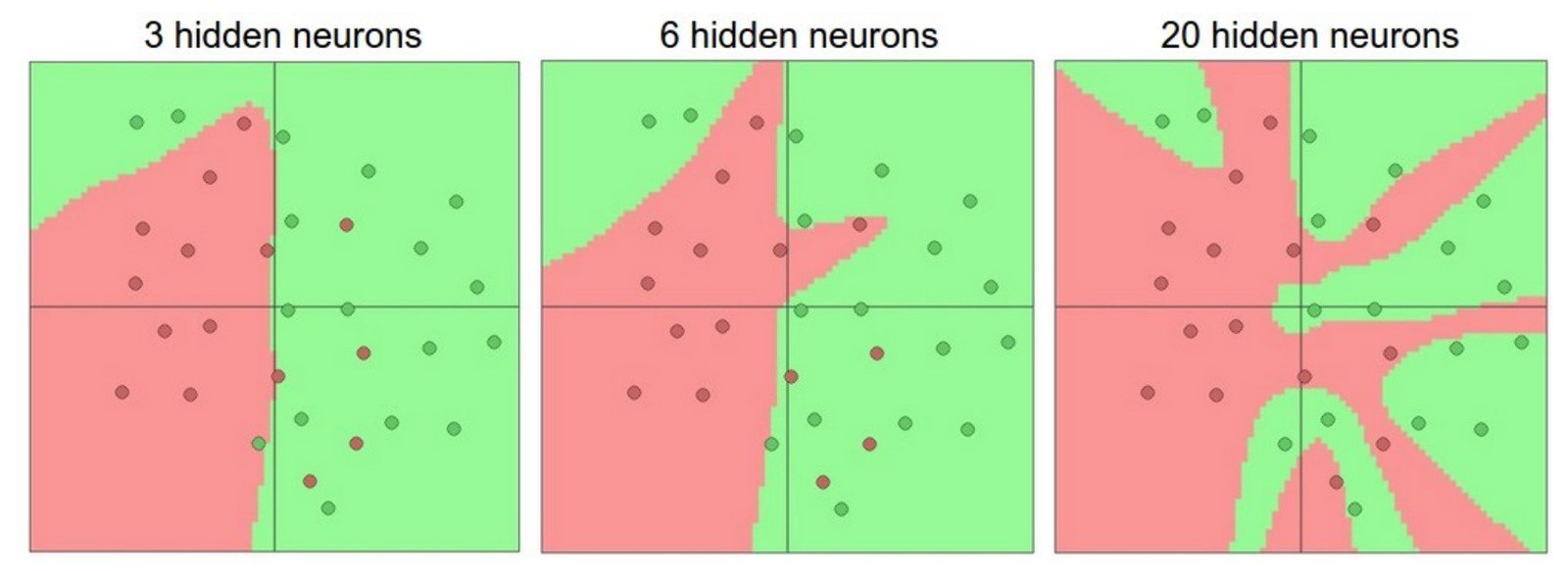

# of layers and # of neurons in each layer are hyperparameters

Need an activation function to learn non-linear boundaries

Activation Function

Choices for f: Sigmoid, Tanh, Rectified Linear Unit (f(x) = max(0,x)) ...
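As a rough illustration, the three activation functions named above can be written in a few lines of NumPy. The function names and the sample input are chosen only for this sketch.

```python
import numpy as np

def sigmoid(x):
    # Squashes any real value into (0, 1).
    return 1.0 / (1.0 + np.exp(-x))

def tanh(x):
    # Squashes any real value into (-1, 1).
    return np.tanh(x)

def relu(x):
    # Rectified Linear Unit: f(x) = max(0, x).
    return np.maximum(0.0, x)

x = np.array([-2.0, 0.0, 2.0])
print(sigmoid(x))
print(tanh(x))
print(relu(x))  # [0. 0. 2.]
```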

The research work

Output Layer

Basically a logistic regression for multiple classes

Inputs are the hidden neurons of previous layers

Cost function is based on the output layer

Output 9 probabilities corresponding to 9 classes

Use back-propagation to calculate gradient and then update weights
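A minimal sketch of such an output layer, assuming a softmax over 9 classes with a cross-entropy cost. The hidden layer size, the random weights, the target class, and the learning rate are all hypothetical values picked for illustration, not the project's settings.

```python
import numpy as np

def softmax(z):
    z = z - z.max()  # shift logits for numerical stability
    e = np.exp(z)
    return e / e.sum()

rng = np.random.default_rng(0)
n_hidden, n_classes = 16, 9                 # 16 is hypothetical; 9 classes as on the slide
h = rng.standard_normal(n_hidden)           # activations of the last hidden layer
W = rng.standard_normal((n_classes, n_hidden)) * 0.1
b = np.zeros(n_classes)
target = 3                                  # hypothetical true class index

# Forward pass: 9 probabilities and the cross-entropy cost.
probs = softmax(W @ h + b)
loss = -np.log(probs[target])

# Backward pass: gradient of the cost w.r.t. the logits is probs - one_hot(target).
grad_logits = probs.copy()
grad_logits[target] -= 1.0
grad_W = np.outer(grad_logits, h)
grad_b = grad_logits

# One gradient-descent update of the output layer weights.
lr = 0.01                                   # hypothetical learning rate
W_new = W - lr * grad_W
b_new = b - lr * grad_b
new_loss = -np.log(softmax(W_new @ h + b_new)[target])

print(probs.shape, loss, new_loss)
```

In a full network the same gradient would be propagated further back through the hidden and convolution layers.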

Convolution

Can be viewed as a feature extractor

inputs: 5x5 image and 3x3 filter

outputs: (5-3+1)x(5-3+1) = a 3x3 feature map

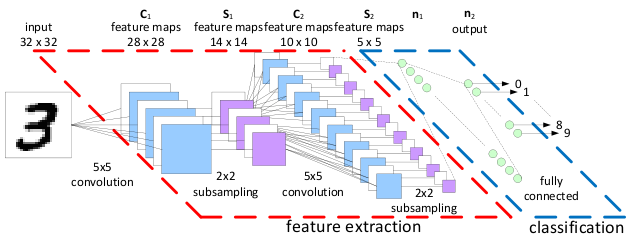
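The output size above can be reproduced with a small sketch of a "valid" convolution (no padding, stride 1). As is common in CNN code, this is technically cross-correlation because the filter is not flipped. The input values and the averaging filter are hypothetical.

```python
import numpy as np

def conv2d_valid(image, kernel):
    # Slide the kernel over every position where it fits entirely inside the image.
    ih, iw = image.shape
    kh, kw = kernel.shape
    out = np.zeros((ih - kh + 1, iw - kw + 1))
    for r in range(out.shape[0]):
        for c in range(out.shape[1]):
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

image = np.arange(25, dtype=float).reshape(5, 5)   # hypothetical 5x5 input
kernel = np.ones((3, 3)) / 9.0                     # hypothetical 3x3 averaging filter
feature_map = conv2d_valid(image, kernel)

print(feature_map.shape)  # (3, 3)
```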

ConvNet Example

Convolution

inputs: 3x50x50 image and k filters with size 5x5

outputs = ???

We will have 3*(50 - 5 + 1)^2*k = 6,348*k features

if k = 25, convolution generates around 160,000 features!

Need pooling to reduce the # of features
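The arithmetic can be verified with a short helper. It follows the counting used on this slide, where each filter is applied to each of the 3 channels separately; the function name is made up for this sketch.

```python
def conv_feature_count(channels, size, filter_size, k):
    # Side length of one feature map after a valid convolution with stride 1.
    out_side = size - filter_size + 1
    # Count one feature map per channel per filter, as on the slide.
    return channels * out_side ** 2 * k

print(conv_feature_count(3, 50, 5, 1))   # 6348
print(conv_feature_count(3, 50, 5, 25))  # 158700
```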

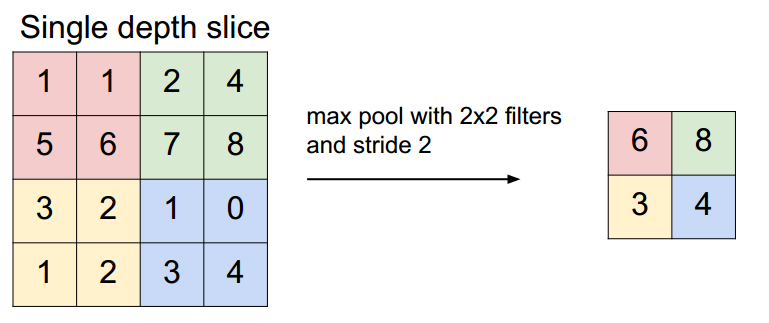

Max-Pooling

Also known as Sub-Sampling

For 2 x 2 pooling, suppose input = 4 x 4

Output = 2 x 2

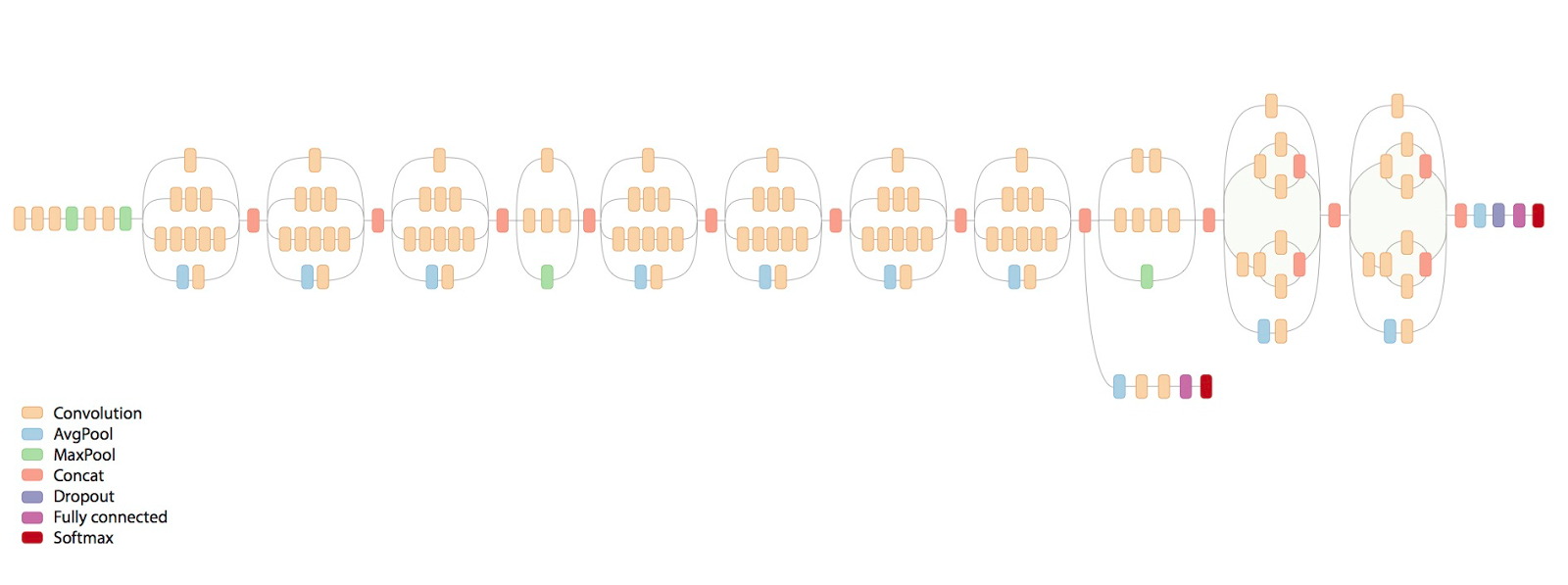
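A minimal sketch of 2 x 2 max-pooling with non-overlapping windows, assuming the input height and width are even. The 4 x 4 input values are hypothetical.

```python
import numpy as np

def max_pool_2x2(x):
    h, w = x.shape
    assert h % 2 == 0 and w % 2 == 0, "sketch assumes even height and width"
    # Split into 2x2 blocks, then keep the maximum of each block.
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

x = np.array([[1, 3, 2, 4],
              [5, 6, 7, 8],
              [3, 2, 1, 0],
              [1, 2, 3, 4]])
pooled = max_pool_2x2(x)
print(pooled)
# [[6 8]
#  [3 4]]
```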

Architecture

2 convolution layers with pooling

1 hidden layer

1 output layer (logistic regression)
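To see how the data shrinks through this architecture, here is a rough shape trace. It assumes 5x5 filters without padding, 2 x 2 pooling that drops any leftover row or column, 25 and 50 filters in the two convolution layers, and one feature map per filter. These are assumptions for the sketch, not confirmed settings of the project.

```python
def conv_pool_side(side, filter_size=5, pool=2):
    # Valid convolution shrinks the side by filter_size - 1; pooling then divides it (floor).
    return (side - filter_size + 1) // pool

side = 50                        # input images are 50x50
after_first = conv_pool_side(side)          # (50 - 5 + 1) // 2 = 23
after_second = conv_pool_side(after_first)  # (23 - 5 + 1) // 2 = 9

n_filters_second = 50            # assumed number of filters in the second convolution layer
flat_features = n_filters_second * after_second * after_second

print(after_first, after_second, flat_features)  # 23 9 4050
```

Under these assumptions the hidden layer would receive 4,050 inputs instead of the raw 7,500 pixel values.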

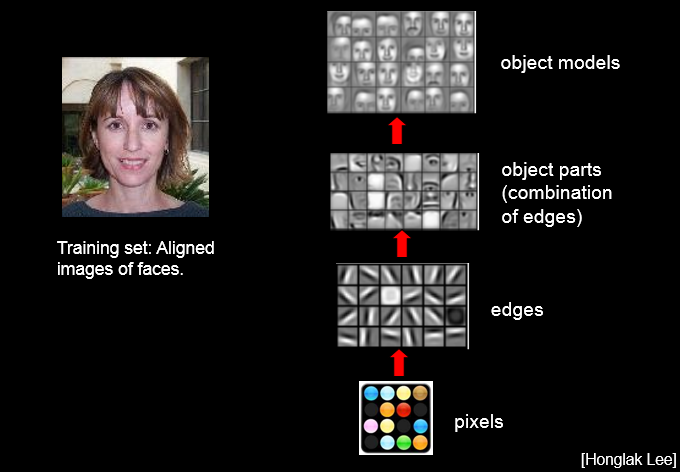

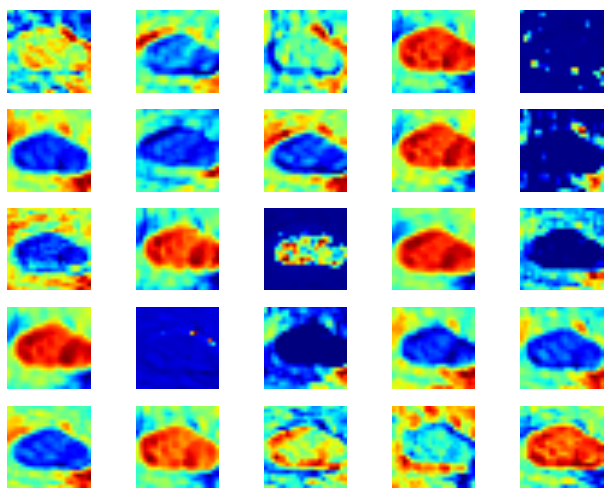

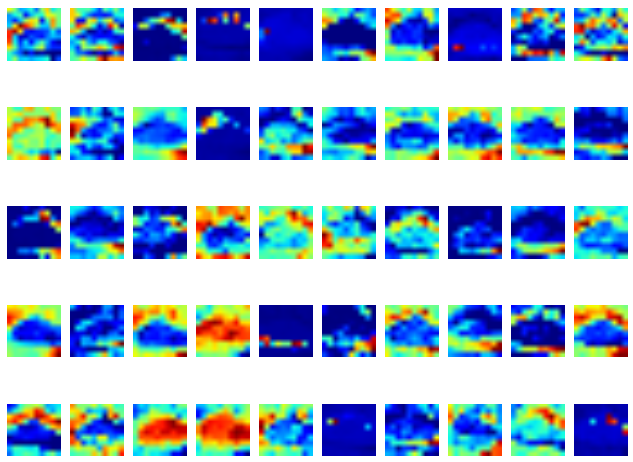

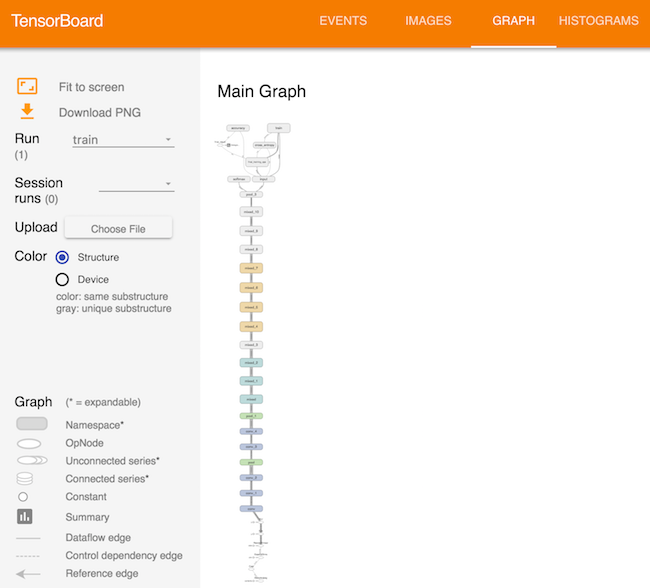

Visualize CNN

Input

First Convolution Layer with 25 filters

Second Convolution Layer with 50 filters

Technologies used

- Python (and its Libraries)

- TensorFlow

- Inception

- NumPy

- Pandas

- and more

Introduction to Inception and Neural network

Inception: Google's convolutional neural network architecture for image classification

Neural Network: The life line of Machine Learning

Note: In our case we used a Convolutional Neural Network

Inception and Neural network

Working of Inception

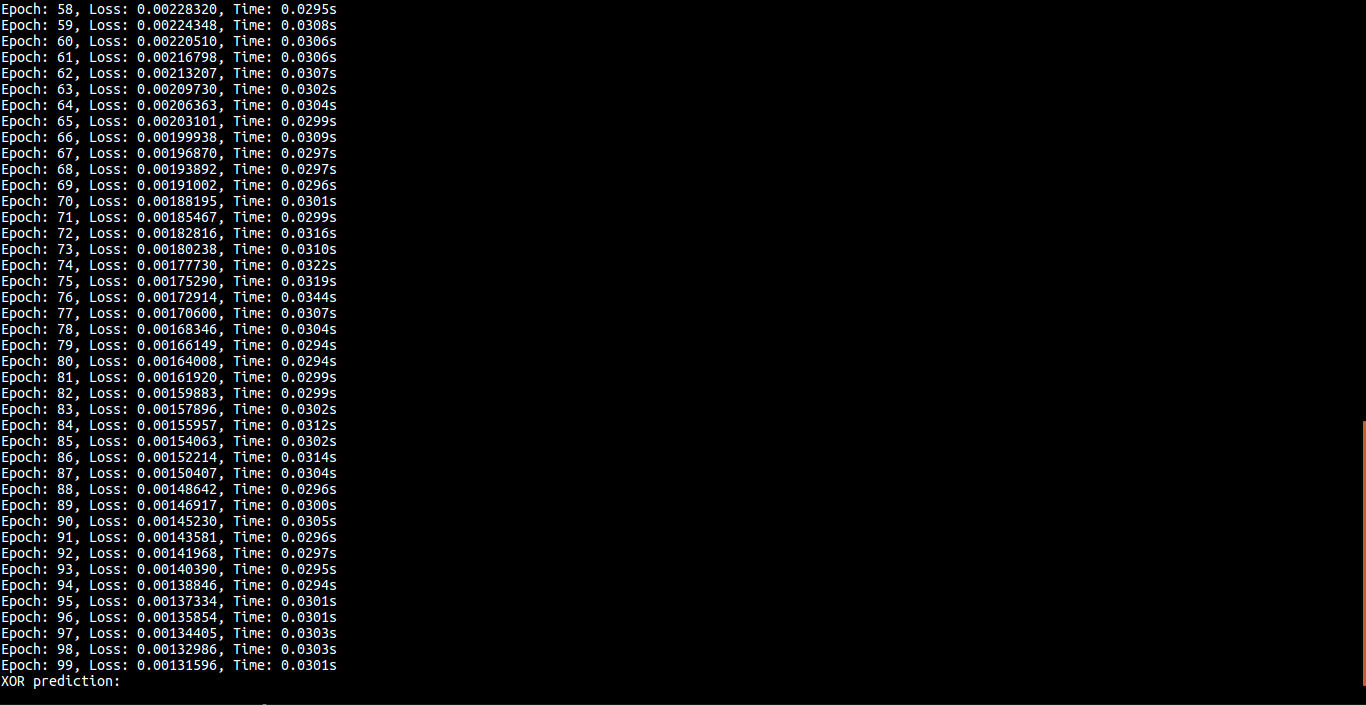

A neural network from Scratch

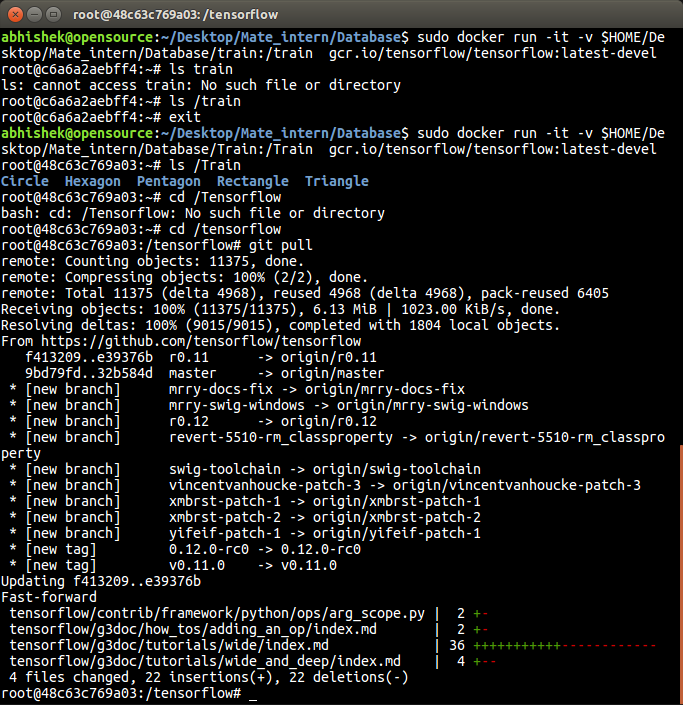

- Installing dependencies

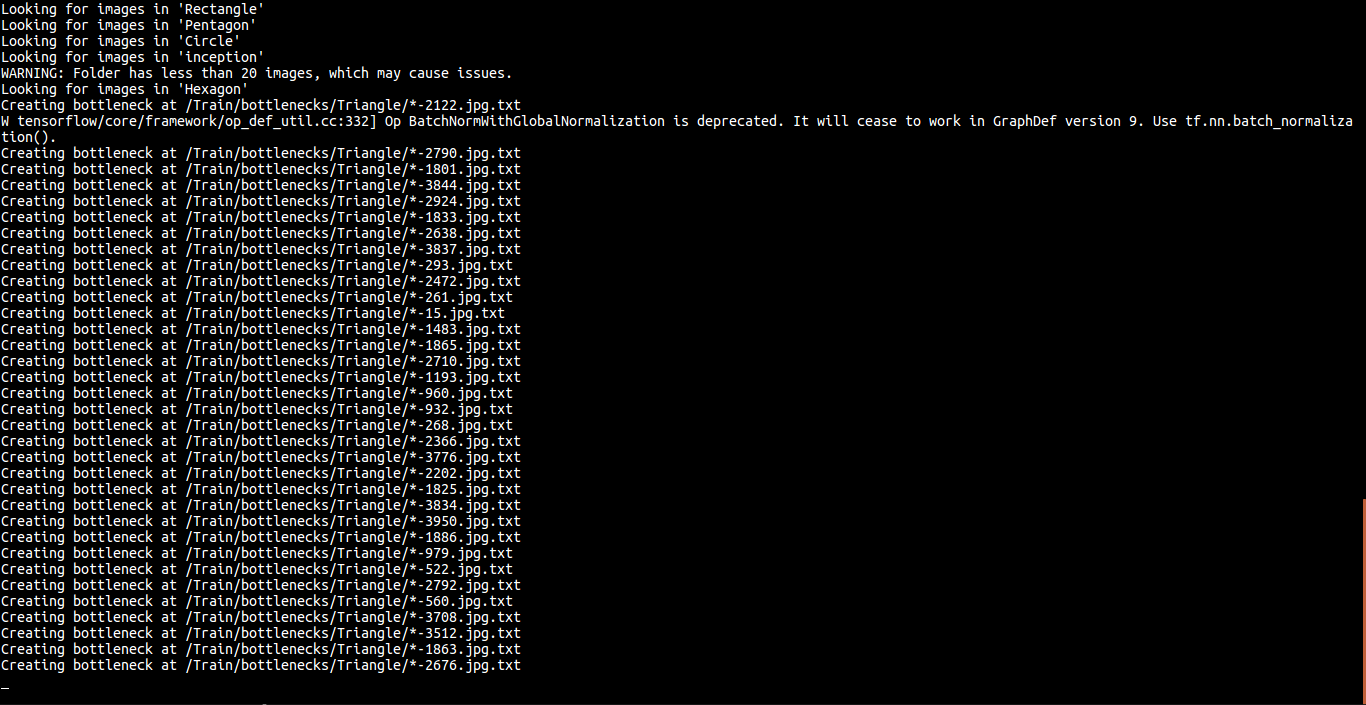

- Merging our Dataset

- Adding Dataset to Inception

- Pulling the latest changes from TensorFlow

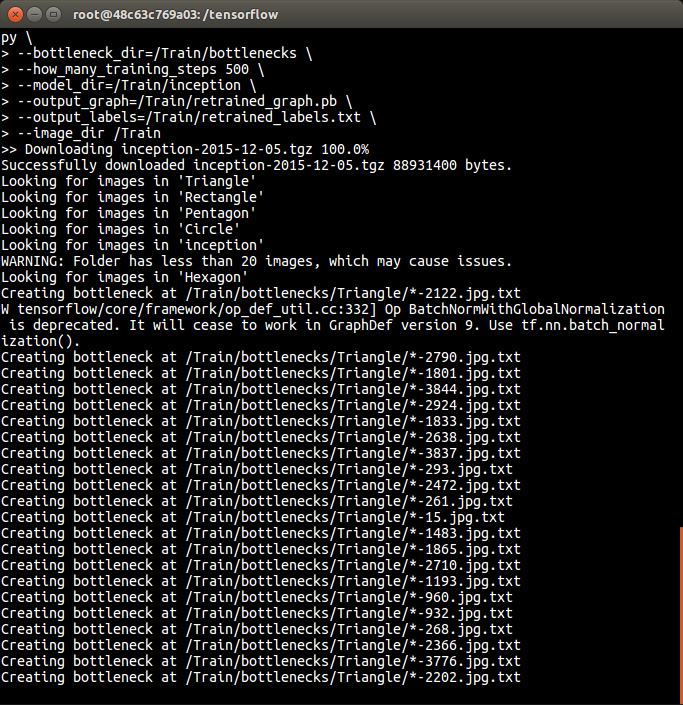

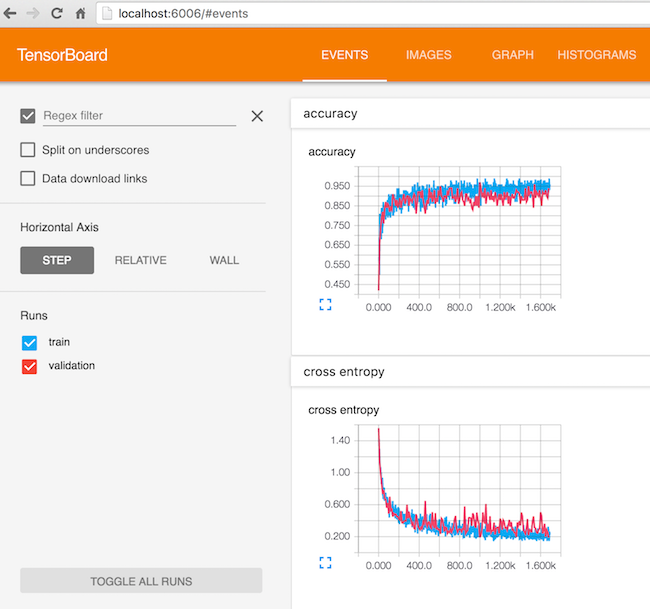

And this is how it looked

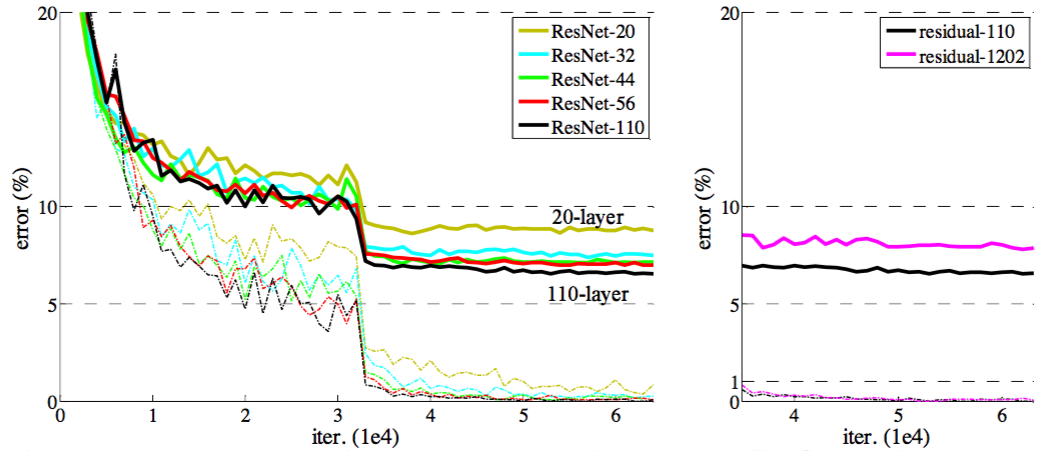

The Training and Testing Part

An Accuracy of 88%

Holla !!!!

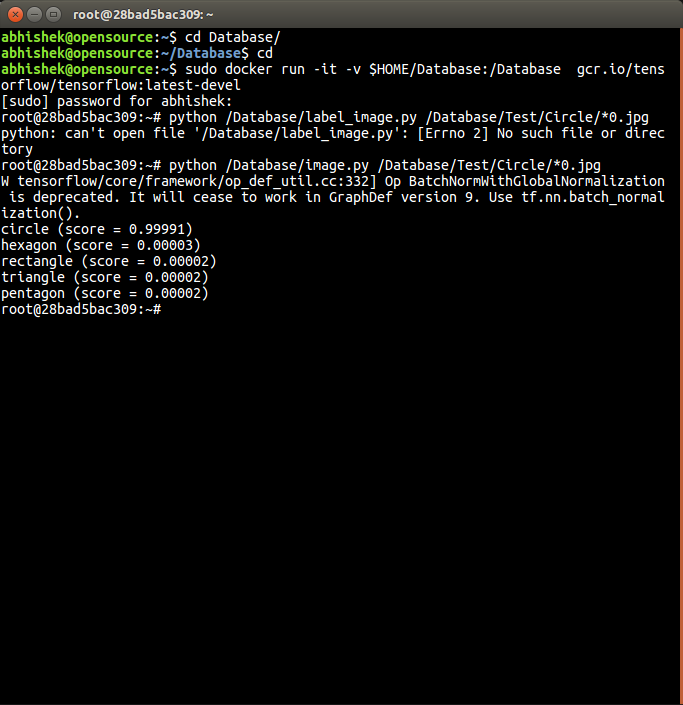

Result?

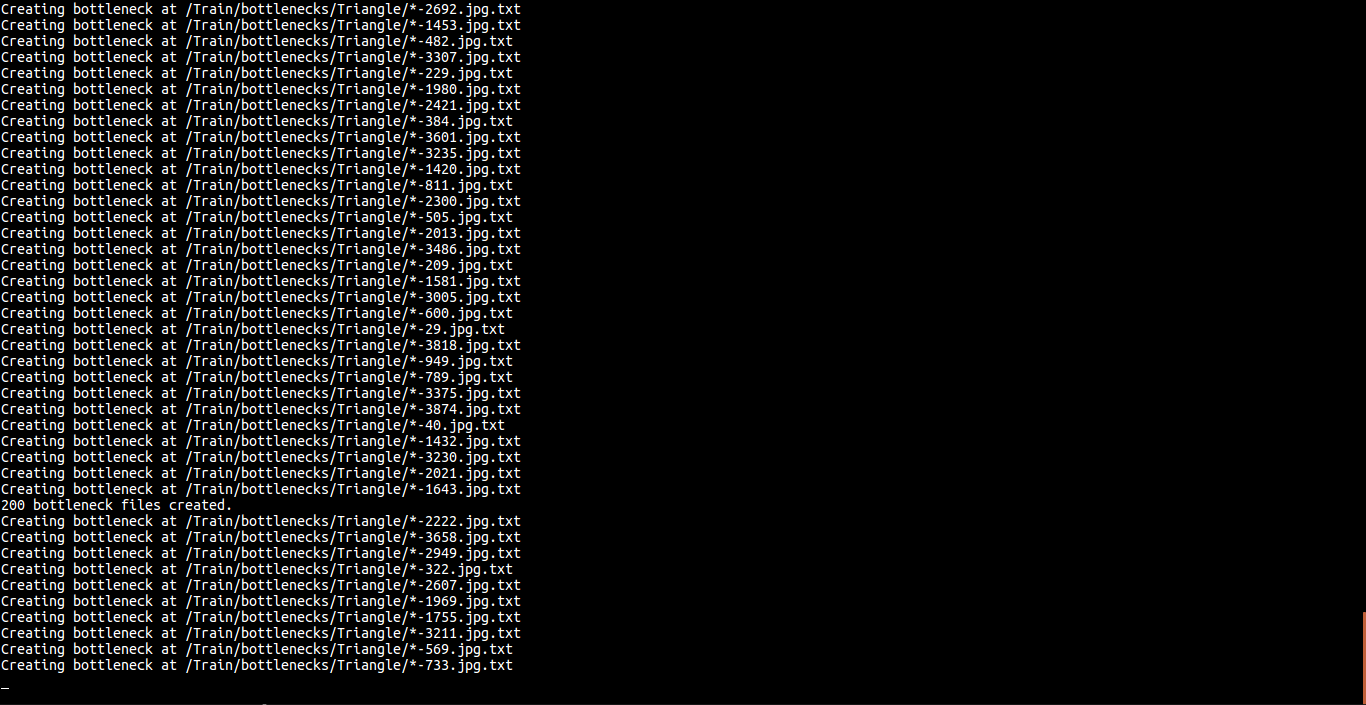

Some Graph and analysis

Let's try it out.

Here is a screenshot of our model classifying a Circle with a confidence of 99.999%

Hyperparameters

What is a Hyperparameter?

Semi-Supervised

- Unsupervised Pre-training

- Supervised Fine-tuning

- Uses a large amount of unlabeled data

- Works as an initialization process for the weights

Adjust the weights using the classification cost and back-propagation

Thank you and Have a Happy Day

Minor Project 2016

By Abhishek Kumar Tiwari
