Common primitives in Docker environments

Alex Giurgiu (alex@giurgiu.io)

Docker is great!

Until you want to deploy your new application in production...

on multiple machines

You thought you had this

When in fact you have this

We are trying to get here

This problem is intensely debated at the moment...

with many competing projects...

that approach it in one way or another...

Just look at

- Mesos

- Google's Omega

- Kubernetes

- CoreOS

- Centurion

- Helios

- Flynn

- Deis

- Dokku

- etc.

What do they have in common?

- they abstract a set of machines, making it look like one machine

- they provide a set of primitives that deal with resources on that set of machines

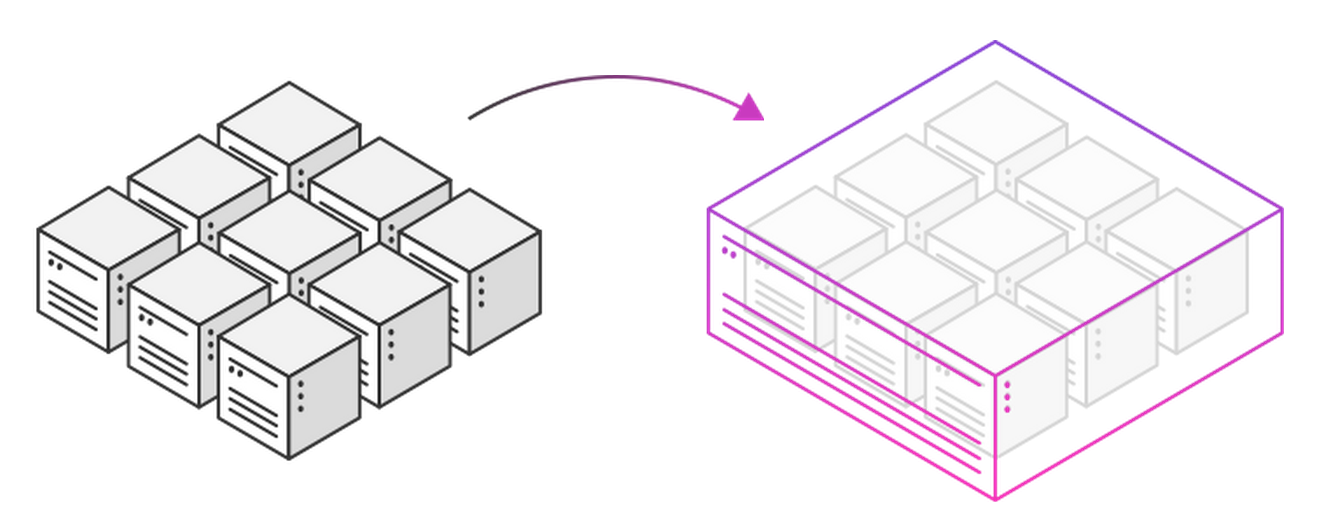

From this

To this

Why not use one of the mentioned solutions?

Most of them require you to write your application/workload in a custom way and to give in completely to their way of doing things.

But we (I) want to run old/legacy applications while gaining the same advantages

Our goals are similar

- standardize the way we interact with our infrastructure

- treat all machines in a similar way

- achieve reliability, through software and not through hardware

- achieve reproducible infrastructure

- reduce manual labor

Our building block

Container

Inputs

(binaries, code, packages, etc.)

External services

Build process

State

Common primitives

"common enough that a generalized solution can be devised"

"should be applicable to both in-house or external applications"

Common primitives

- persistence

- service discovery

- monitoring

- logging

- authentication and authorization

- image build service

- image registry

(state) Persistence

Persistence

- one of the hardest problems to solve in a clean and scalable way

- should be transparent for the application

- most people just avoid Docker-izing services that require persistence

Local

- bring the state locally, relative to where the container runs

- should be taken care of by your deployment/PaaS solution

- advantages: write/read speeds, reliability

- disadvantages: potentially slow deploys, complex orchestration

Remote

- keep state remotely and "mount" it where the application is deployed

- can be done by your PaaS solution or by the container itself

- advantages: simpler to orchestrate, fast deploys

- disadvantages: write/read speeds, (un)reliability
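
A minimal sketch of how the two options might look from the deployment side, assuming state reaches the container through Docker's `-v host_path:container_path` volume flag. The helper name, the paths and the image are hypothetical; in the remote case the host path is assumed to be a network filesystem that is already mounted on the Docker host.

```python
def docker_run_args(image, host_path, container_path, name=None):
    """Build the argv for `docker run` with a single bind-mounted volume."""
    args = ["docker", "run", "-d"]
    if name:
        args += ["--name", name]
    args += ["-v", f"{host_path}:{container_path}", image]
    return args


# Local: state sits on the Docker host's own disk (hypothetical path).
local = docker_run_args(
    "postgres:9.3", "/var/lib/appstate/pg", "/var/lib/postgresql/data"
)

# Remote: identical call, but the host path is assumed to be a network mount.
remote = docker_run_args(
    "postgres:9.3", "/mnt/nfs/appstate/pg", "/var/lib/postgresql/data"
)

print(" ".join(local))
print(" ".join(remote))
```

The container sees the same path either way, which is what keeps persistence transparent for the application; only the orchestration around the host path differs.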

Projects that try to solve persistence

Flocker - https://github.com/ClusterHQ/flocker

?

The Flocker way (local)

Service discovery and registration

Service discovery

- most worked on aspect of Docker orchestration

- quite a few different open source projects that tackle this problem

- multiple approaches: environment variables, configuration files, key/value stores, DNS, ambassador pattern, etc.

Open source projects

- Consul (my personal favorite)

- etcd (CoreOS's favorite)

- ZooKeeper (many people's favorite)

- Eureka (Netflix's favorite)

- Smartstack (Airbnb's favorite)

- ...

(service discovery)

- choose a solution that can accommodate both legacy and custom applications: discovery using DNS or HTTP

- choose a solution that can be manipulated using a common protocol: HTTP

- make sure to remove dead applications from your SD system

- ideally it should have no single point of failure

- Consul satisfies all the above requirements

How to do it

(service discovery)

Consul

(service discovery)

- can be queried over DNS and HTTP

- distributed key/value store

- consistent and fault tolerant (Raft)

- fast convergence (SWIM)

- Service checks
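
As a rough illustration of the DNS and HTTP interfaces, the sketch below builds the names and URLs a client would use and extracts endpoints from an already decoded health response. It assumes Consul's default `consul` DNS domain, an agent on `127.0.0.1:8500`, and the `/v1/health/service/<name>` response shape; the function names are hypothetical and nothing here talks to the network.

```python
from urllib.parse import quote


def consul_dns_name(service, tag=None, domain="consul"):
    """DNS name for a service: [tag.]<service>.service.<domain>."""
    labels = ([tag] if tag else []) + [service, "service", domain]
    return ".".join(labels)


def consul_health_url(service, agent="http://127.0.0.1:8500", passing_only=True):
    """HTTP URL listing instances of a service, optionally only healthy ones."""
    url = f"{agent}/v1/health/service/{quote(service)}"
    return url + "?passing" if passing_only else url


def healthy_endpoints(health_response):
    """Extract (address, port) pairs from a decoded health response."""
    endpoints = []
    for entry in health_response:
        service = entry["Service"]
        # Fall back to the node address when the service has none of its own.
        address = service.get("Address") or entry["Node"]["Address"]
        endpoints.append((address, service["Port"]))
    return endpoints


print(consul_dns_name("web"))
print(consul_health_url("web"))
```

A legacy application can simply resolve the DNS name, while a custom one can call the HTTP endpoint and pick from the returned list.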

Service registration

Can be done

- by your application - simple HTTP call to Consul

- a separate script/application inside your container

- another container that inspects running containers - progrium/registrator

Most importantly, each container should provide metadata about the service it's running.
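
The "simple HTTP call" could look roughly like this. The sketch only builds the request for the agent's `/v1/agent/service/register` endpoint and does not send it; the payload field names follow Consul's agent API as I understand it and should be checked against the version you run. The service name, tags and check URL are made-up examples.

```python
import json
import urllib.request


def registration_payload(name, port, tags=(), check_url=None, interval="10s"):
    """Metadata a container would publish about the service it runs."""
    payload = {"Name": name, "Port": port, "Tags": list(tags)}
    if check_url:
        # Assumed HTTP health check; the agent polls this URL periodically.
        payload["Check"] = {"HTTP": check_url, "Interval": interval}
    return payload


def registration_request(payload, agent="http://127.0.0.1:8500"):
    """Build (but do not send) the PUT request that registers the service."""
    return urllib.request.Request(
        f"{agent}/v1/agent/service/register",
        data=json.dumps(payload).encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


payload = registration_payload(
    "web", 8080, tags=["v1"], check_url="http://127.0.0.1:8080/health"
)
request = registration_request(payload)
print(request.get_method(), request.full_url)
```

Passing `request` to `urllib.request.urlopen` would perform the registration against a running agent.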

Monitoring


2 perspectives

- service monitoring - can be done as in pre-Docker times

- container monitoring

Service monitoring

(monitoring)

- can be done with tools like Nagios

- your monitoring system should react dynamically to services that start and stop

- containers should define what needs to be monitored

- services should register themselves in the monitoring system

- Consul supports service checks

Container monitoring

(monitoring)

- monitor container state (up/down) - the Docker events API provides this information

- gather performance and usage metrics about each container - Google's cAdvisor provides this

- cAdvisor provides an API to pull the data out, so you can feed it to your trending system
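
To make the up/down tracking concrete, here is a small sketch that folds a stream of Docker events into a state table. It assumes each event arrives as one JSON object per line carrying `status` and `id` fields, and that `start`, `die` and `destroy` are the statuses of interest; the sample events are invented.

```python
import json


def track_container_state(event_lines):
    """Reduce a stream of JSON event lines to {container_id: 'up' | 'down'}."""
    state = {}
    for line in event_lines:
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        status, container_id = event.get("status"), event.get("id")
        if container_id is None:
            continue
        if status == "start":
            state[container_id] = "up"
        elif status == "die":
            state[container_id] = "down"
        elif status == "destroy":
            # A destroyed container no longer needs to be tracked.
            state.pop(container_id, None)
    return state


sample_events = [
    '{"status": "start", "id": "aaa111", "from": "acme/web:1.0", "time": 1}',
    '{"status": "start", "id": "bbb222", "from": "acme/db:2.1", "time": 2}',
    '{"status": "die", "id": "bbb222", "from": "acme/db:2.1", "time": 3}',
]
print(track_container_state(sample_events))
```

In a real setup the lines would come from the events stream of the Docker daemon, and the resulting table would feed the monitoring system.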

Monitoring principles

(monitoring)

- have a layer of system monitoring - that trusts humans

- have a layer of behavior tests - that doesn't trust humans. Used to make sure that a certain environment is up

- reduces manual labor

Sysdig

(DTrace for Linux)

- enables detailed insights inside the kernel and applications

- they have a new "cloud" version

- the same thing can be achieved on your private Docker platform

Logging


- logs will be used by engineers to troubleshoot issues

- ... but now your application is a distributed moving target

- centralized log aggregation becomes a real need

How to do it

(logging)

Multiple approaches

- applications write logs to STDOUT and you pick up the logs using the Docker API or client. Logspout can be used to ship the logs remotely

- applications write logs inside the container and a logging daemon inside the container (rsyslog) ships the logs to a centralized location

- applications write logs in a volume which is shared with another container that runs a log shipping daemon
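
For the first approach, the application side can be as small as the sketch below: every record goes to STDOUT as one JSON line with a UTC timestamp, so whatever collects the container's output can forward it unchanged. The formatter, the field names and the `orders` logger are hypothetical choices, not a required format.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonLineFormatter(logging.Formatter):
    """Render each log record as a single JSON line."""

    def format(self, record):
        return json.dumps({
            "time": datetime.fromtimestamp(record.created, timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


def stdout_logger(name, stream=None):
    """Return a logger that writes JSON lines to STDOUT (or a given stream)."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    if not logger.handlers:
        handler = logging.StreamHandler(stream if stream is not None else sys.stdout)
        handler.setFormatter(JsonLineFormatter())
        logger.addHandler(handler)
    return logger


log = stdout_logger("orders")
log.info("order %s accepted", 42)
```

Keeping one event per line makes the output easy to ship with a tool like Logspout and easy to index later.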

How to do it

(logging)

- Choose an approach that fits your needs and send the logs to a centralized location

- logstash-forwarder is a great way to forward your logs (please don't choose python-beaver)

- Elasticsearch is a great way to store your logs

- Kibana is a great way to visualize your logs

What do we do about log ordering?

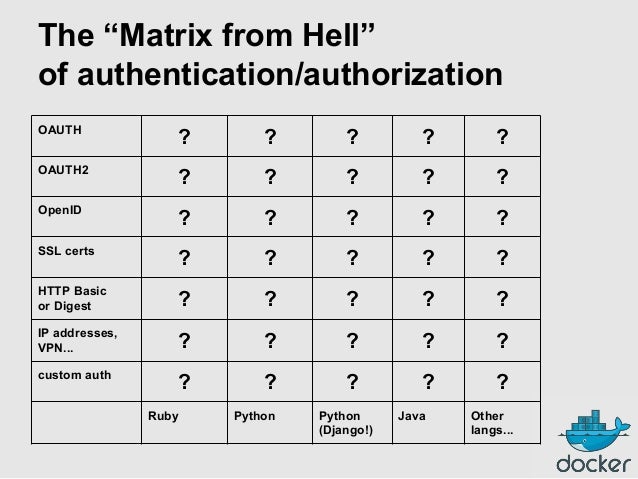

Authentication and authorization

Authentication

- how can you prove that a container/service is who it says it is?

- useful to have a generalized way of authenticating all your containers

- that way you can count on the reported identity when allowing access to certain resources

How to do it

(authentication)

- Largely unsolved

- Docker 1.3 tries to check image signatures if they come from the public registry and if they are marked as an "official repo"

- A PKI setup fits the problem, with a unique certificate for every container (not image)

- Docker promised some PKI based solution in future releases - I would wait for that

Authorization

- builds on top of authentication

- will keep track of what resources a container/service can access

- should hand over details like user/pass pairs, API tokens and ssh keys

How to do it

(authorization)

- Do NOT bake in credentials and ssh keys into images (security and coupling)

Easy way

- mount external volume that contains credentials, ssh keys or even ssh agent sockets

- doesn't require authentication

- increases the complexity of your deployment solution
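
A minimal sketch of the easy way, seen from inside the container: credentials are read from files in a directory that the deployment tooling is assumed to have mounted as a volume. The default path `/run/credentials` and the one-file-per-secret layout are assumptions made for illustration.

```python
from pathlib import Path


def load_credentials(directory="/run/credentials"):
    """Read every regular file in the mounted directory as one credential.

    The file name becomes the key, the stripped file content the value.
    """
    credentials = {}
    for path in sorted(Path(directory).iterdir()):
        if path.is_file():
            credentials[path.name] = path.read_text().strip()
    return credentials
```

The image stays free of secrets; the deployment solution takes on the extra job of mounting the right directory into the right container.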

Hard way

- store credentials in a centralized service

- requires some form of authentication

- decreases complexity in your deployment solution

How to do it

(authorization)

Crypt and Consul (or etcd)

- tries to solve the problem by using OpenPGP

- each container needs access to a private key. Can be made available through a volume

- credentials are stored encrypted in Consul

- credentials get retrieved and decrypted in container
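
The retrieve-and-decrypt step can be sketched as follows. This does not reproduce crypt's own storage format or its OpenPGP handling: it only assumes that Consul's KV endpoint returns a JSON list whose `Value` field is base64-encoded, and it takes the decryption routine as a caller-supplied function. All names and the sample data are hypothetical.

```python
import base64


def decode_kv_value(kv_response):
    """Return the raw bytes stored under a key in a decoded KV response."""
    if not kv_response:
        raise KeyError("key not found in the key/value store")
    return base64.b64decode(kv_response[0]["Value"])


def fetch_secret(kv_response, decrypt):
    """Decode the stored ciphertext and hand it to the given decrypt function.

    `decrypt` stands in for an OpenPGP decryption that uses the private key
    made available to the container.
    """
    return decrypt(decode_kv_value(kv_response))


# Stand-in data: the "ciphertext" is just reversed bytes, for illustration.
sample_response = [
    {"Key": "creds/db", "Value": base64.b64encode(b"drowssap").decode("ascii")}
]
print(fetch_secret(sample_response, decrypt=lambda blob: blob[::-1]))
```

The store only ever sees ciphertext; the private key on the volume is what turns it back into a usable credential.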

Image build service


- Build gets triggered when code gets changed and committed to your repository

- Can perform basic checks to make sure the image complies with some basic rules

- Commits image to image registry

- If other images depend on it, a build job should be triggered for those images

- Extra tip: more control over the input sources for your images will in turn improve the reliability of your builds
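
Triggering builds for dependent images comes down to following `FROM` lines. The sketch below assumes one `FROM` per Dockerfile and that image names in `FROM` match the keys of the mapping exactly; the image names are invented.

```python
import re
from collections import deque

FROM_RE = re.compile(r"^\s*FROM\s+(\S+)", re.IGNORECASE | re.MULTILINE)


def base_image(dockerfile_text):
    """Return the image named in the first FROM line, or None."""
    match = FROM_RE.search(dockerfile_text)
    return match.group(1) if match else None


def images_to_rebuild(changed, dockerfiles):
    """List images that depend on `changed`, parents before children."""
    children = {}
    for image, text in dockerfiles.items():
        children.setdefault(base_image(text), []).append(image)
    order, seen, queue = [], {changed}, deque([changed])
    while queue:
        current = queue.popleft()
        for child in sorted(children.get(current, [])):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


dockerfiles = {
    "acme/base": "FROM ubuntu:14.04\nRUN true\n",
    "acme/python": "FROM acme/base\n",
    "acme/api": "from acme/python\n",
    "acme/other": "FROM debian:7\n",
}
print(images_to_rebuild("acme/base", dockerfiles))
```

A CI job for `acme/base` could use the returned list to queue the downstream build jobs in order.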

How to do it

(image build service)

Git and Jenkins?

- probably any VCS and CI tool will work

- but Git and Jenkins work great

Simple workflow

commit code -> Git post-commit hook / GitHub webhook -> Jenkins test and build -> push to registry

Basic build process

inputs (binaries, code, packages, etc.) -> build process -> container

Image registry


- a central place to store your Docker images

- Docker Hub is the public one

- you can easily run a private registry

Open source projects

(image registry)

- Docker registry - https://github.com/docker/docker-registry

- Artifactory - http://www.jfrog.com/open-source/

How to do it

(image registry)

- USE a registry and don't rely on building images on every machine

- tag your images with specific versions

- make version requirements explicit
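
One way to enforce explicit versions is to refuse anything unpinned when composing image references. The helper below is a hypothetical sketch; the registry host and repository name are examples only.

```python
def image_reference(registry, repository, version):
    """Compose `registry/repository:version`, rejecting unpinned versions."""
    if not version or version == "latest":
        raise ValueError("pin an explicit version instead of 'latest'")
    return f"{registry}/{repository}:{version}"


print(image_reference("registry.example.com:5000", "acme/api", "1.4.2"))
```

Deployment descriptions that go through a helper like this always state which version they expect, which makes rollbacks and audits straightforward.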


Where are we now?

- a lot of hype, experience needs to follow

- the sheer number of projects and work put in the ecosystem is impressive

- this momentum feeds on itself and drives rapid development in the projects needed to fill the remaining gaps

- can you program?

Some conclusions

- reduce coupling between components

- think about your platform as a functional program with side effects - identify the logic and identify the state

- architect your system in a service oriented way - this way any required service can be placed inside a container

- avoid running services on your Docker host

- all container operations should be programmable, and ideally idempotent
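
Idempotent here means that repeating an operation leaves the platform in the same state. The sketch below shows the idea against an in-memory stand-in for a container runtime; `FakeRuntime` and `ensure_running` are hypothetical names, not part of Docker.

```python
class FakeRuntime:
    """In-memory stand-in for a container runtime, for illustration only."""

    def __init__(self):
        self.running = {}  # container name -> image

    def start(self, name, image):
        self.running[name] = image

    def stop(self, name):
        self.running.pop(name, None)


def ensure_running(runtime, name, image):
    """Converge on 'name runs image' and return the actions that were needed."""
    current = runtime.running.get(name)
    if current == image:
        return []  # already in the desired state, nothing to do
    actions = []
    if current is not None:
        runtime.stop(name)
        actions.append(("stop", name))
    runtime.start(name, image)
    actions.append(("start", name, image))
    return actions


runtime = FakeRuntime()
print(ensure_running(runtime, "web", "acme/web:1.0"))
print(ensure_running(runtime, "web", "acme/web:1.0"))
```

Describing the desired state instead of the steps is what makes the operation safe to retry from scripts and schedulers.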

The network is the last bastion of inflexibility.

- trade-off between flexibility and performance (throughput, latency)

- detailed analysis of performance?

Questions?
