How Cassandra Works

Agenda

- Overall overview (~10m)

- How writes work (~10-15m)

- How reads work (~10-15m)

- QA session (~5m)

- Bonus

Cassandra is highly tunable

- There is a configuration parameter for almost any option

- cassandra.yaml (global settings for the cluster)

- table or keyspace based

Architectural Overview

Cassandra was designed for fast writes

Cassandra was designed for fast writes

Writes in Cassandra aren’t free, but they’re awfully cheap. Cassandra is optimized for high write throughput, and almost all writes are equally efficient. If you can perform extra writes to improve the efficiency of your read queries, it’s almost always a good tradeoff. Reads tend to be more expensive and are much more difficult to tune.

Cassandra was designed for fast writes

Disk space is generally the cheapest resource (compared to CPU, memory, disk IOPs, or network), and Cassandra is architected around that fact. In order to get the most efficient reads, you often need to duplicate data.

Cassandra was designed for fast writes

These are the two high-level goals for your data model:

- Spread data evenly around the cluster

- Minimize the number of partitions read

Rows are spread around the cluster based on a hash of the partition key, which is the first element of the PRIMARY KEY. So, the key to spreading data evenly is this: pick a good primary key

Partitions are groups of rows that share the same partition key. When you issue a read query, you want to read rows from as few partitions as possible.

Cassandra was designed for fast writes

The way to minimize partition reads is to model your data to fit your queries. Don’t model around relations. Don’t model around objects. Model around your queries.

- Step 1: Determine What Queries to Support

- Step 2: Try to create a table where you can satisfy your query by reading (roughly) one partition

Cassandra was designed for fast writes

- Cassandra is designed/optimized for writes (it is significantly cheap)

- Data duplication is a new normal (disk is cheap comparing to CPU and RAM)

- Keep the data spread around the cluster evenly

- Reduce the number of partitions required to read to get the answer

- These 2 goals above conflicting with each other, balance between them

- To tune for performant reads, design the tables to fit the queries pattern

- Come with a list of queries in advance (roughly) application supports

- Design your table around these queries

- Usually one table per query pattern

- Make sure to take your read/update ratio into account when designing your schema

- Explore how collections, user-defined types, and static columns reduce the number of partitions need to read to satisfy a query

Cassandra was designed for fast writes

- Cassandra finds rows fast

- Cassandra scans columns fast

- Cassandra does not scan rows

Consistent Hashing

-2^63 (java.long.MIN_VALUE) +2^63-1 (java.long.MAX_VALUE)

Virtual Nodes

- num_tokens (256, in cassandra.yaml)

- If instead we have randomized vnodes spread throughout the entire cluster, we still need to transfer the same amount of data, but now it’s in a greater number of much smaller ranges distributed on all machines in the cluster. This allows us to rebuild the node faster than our single token per node scheme.

Nodes discovery

- Nodes use gossiping protocol to learn about cluster and topology (alive, running, down, load, where)

- every 1 second up to 3 other nodes

- system.peers

On each node

- Partitioner - hashes a partition key into token (partitioner in cassandra.yaml)

- A snitch determines which data centers and racks nodes belong to. Snitches inform Cassandra about the network topology so that requests are routed efficiently

Request Coordination

- Coordinator node manages replication process

- Nodes also referred as replicas

RF and CL

- Replication Factor RF (configured during keyspace creation) (3 - is the recommended minimum)

- Consistency Level CL (driver level, and could be changed per request; ANY, ONE (Netflix), ALL, QUORUM = RF / 2) (by default ONE)

- CL meaning varies by request (write - saved, read - return)

- Cassandra writes to all replicas of the partition key, even replicas in other data centers. The consistency level determines only the number of replicas that need to acknowledge the write/read success to the client application (eventual consistency)

Hinted Handoff

- Recover mechanism

- If coordinator fails to write the partition the hinted handoff is added* to the coordinator's system.hints

- The writes are replayed when the node is back online

- The config in cassandra.yaml:

hinted_handoff_enabled (default: true) could be configured separately per DC

max_hint_window_in_ms (3 hours) if more, nodetool repair must be used

* - only if CL is met, otherwise write operation is reported back as failed

Read repair

- During "each" read request

- The full data is only requested from one replica node

- All others receive digest query

- Digest query returns a hash to check the current data state

- Nodes with stale data are updated

occurs on a configurable percentage of reads (read_repair_chance on the table level) - To adjust: ALTER TABLE [table] WITH [property] = [value]

nodetool repair

When to run nodetool repair:

- when recover a failing node

- bring downed node back

- when the time the node down is more than configured hinted handoff

- periodically on the node with infrequently reads

- periodically on the node with many write or delete requests

- every gc_grace_seconds (table property controlling the tombstone garbage collection period, tombstone is a flag set on the deleted column with a partition)

system keyspace

- hints, peers, local

How writes work

How writes work

- There are 4 main "players" during writing the data:

1. CommitLog (append only file)

2. Memtables (one memtable per CQL table) (in-memory ds, write-back cache)

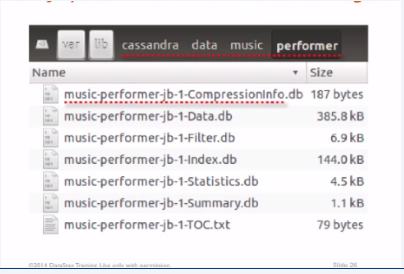

3. SSTables (immutable)

4. and Compaction (tunable, based on read/write heavy) - CommitLog is used to rebuild Memtables quickly in case of failure

How writes work

- Every node when it receives the data to write does the following:

1. Writes the data into CommitLog

2. Writes the data into the corresponding Memtable

3. Periodically Memtables are flushed to disk to immutable SSTables

4. And periodically Compaction runs merging SSTables into one new SSTable (clearing previous generation SSTables) - When data is written into Memtable and CommitLog only then the acknowledgment is sent back to coordinator and to the client as well

- When data is flushed to SSTable the memory heap and CommitLog are cleaned

How writes work

- The CommitLog data is synced with files system in 2 ways:

periodic (default) or batch (50 ms). In case of periodic the acknowledgment of write is sent immediately, in case of batch, the client needs to wait extra 50ms before ack is sent. - Memtable is flushed to SSTable when one of the following occurs:

- the memtable_total_space_in_mb threshold is reached (default: 25% of JVM heap)

- the commitlog_total_space_in_mb is reached

- or nodetool flush is run (nodetool flush [keyspace] [table(s)])

How writes work

NEVER leave the default settings for the data_file_directories and commitlog_directory

(benefit from SSD sequential writes)

How writes work

How reads work

Resources

Thank you!

QA

Cassandra

By Aliaksandr Kazlou

Cassandra

- 2,014