From Shapes to Actions

Andy Zeng

Workshop on 3D Vision and Robotics

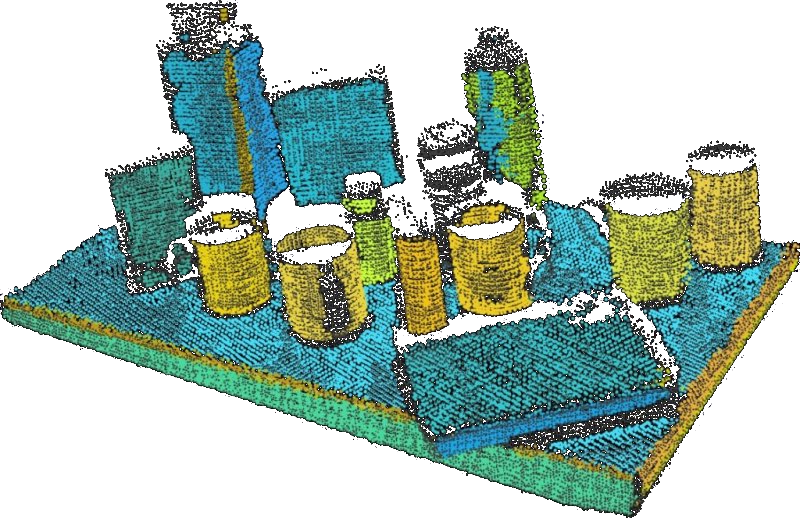

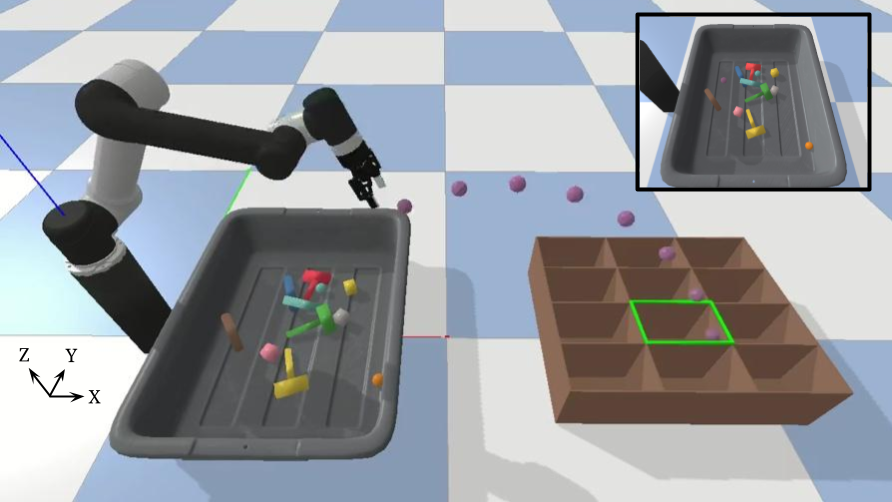

Learning Precise 3D Manipulation from Multiple Uncalibrated Cameras

Akinola et al., ICRA 2020

Image credit: Dmitry Kalashnikov

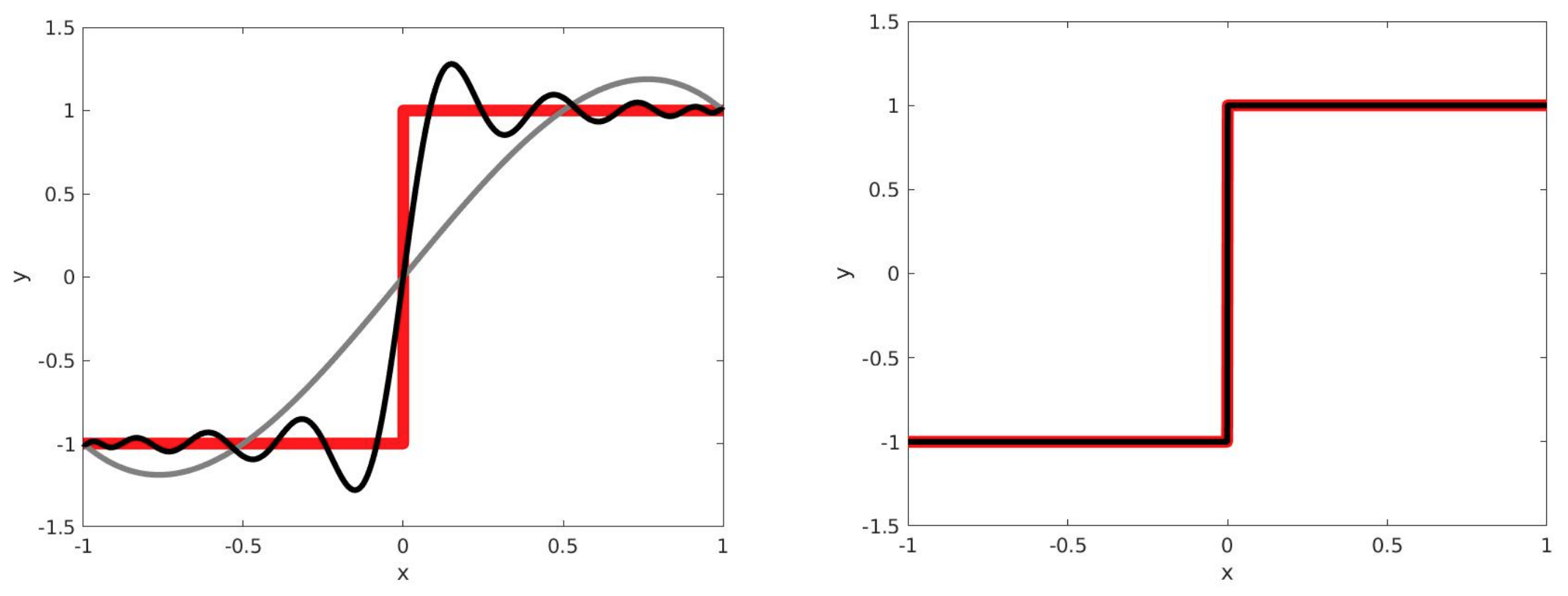

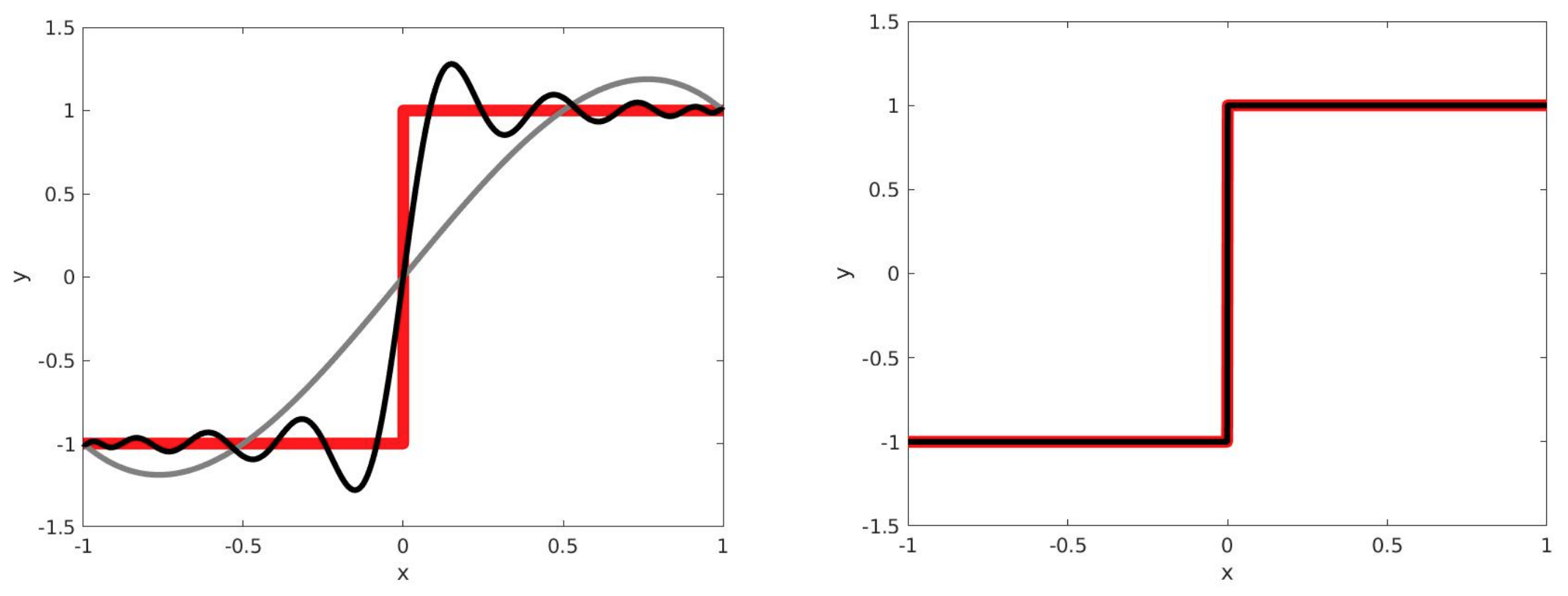

Semi-Algebraic Approximation using Christoffel-Darboux Kernel

Marx et al., Springer 2021

NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis

Mildenhall and Srinivasan et al., ECCV 2020

RealSense

Gahan Wilson

"BusyPhone" - a 2020 BusyBoard

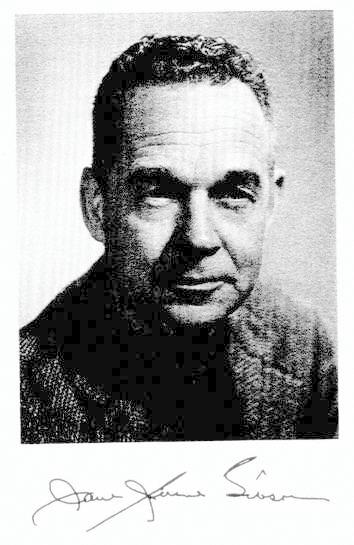

"The Perception of the Visual World"

James J. Gibson (1950)

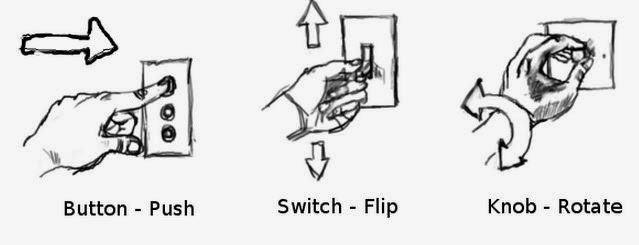

affordances

the opportunities for action provided by a particular object or environment

"Affordance in User Interface Design" by Avadh Dwivedi

"Role of Affordances in DT and IoT" by Anant Kadiyala

grasping

lifting

twisting

touching

grasping

grasping

pushing

Shape classification!

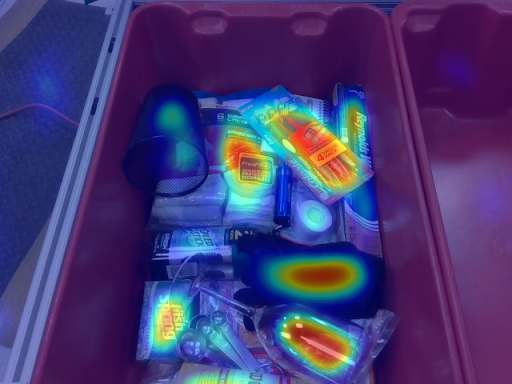

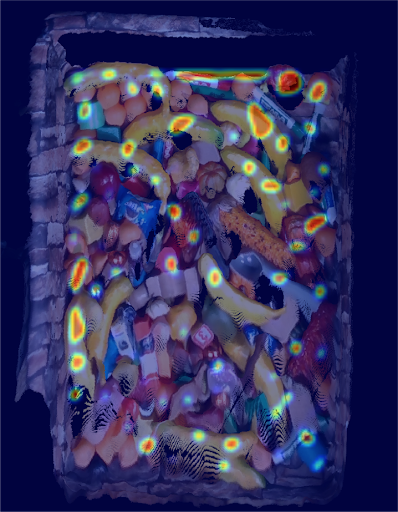

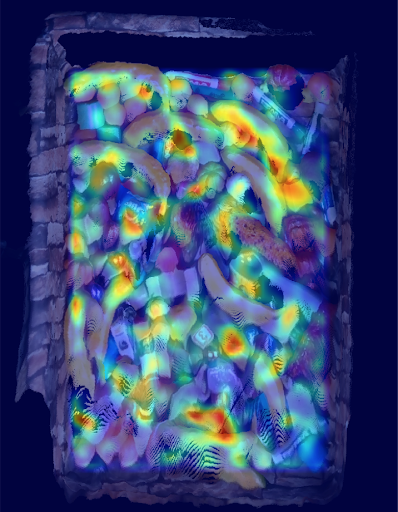

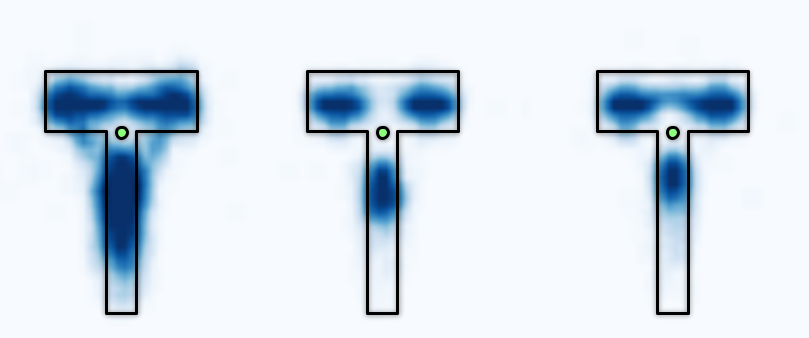

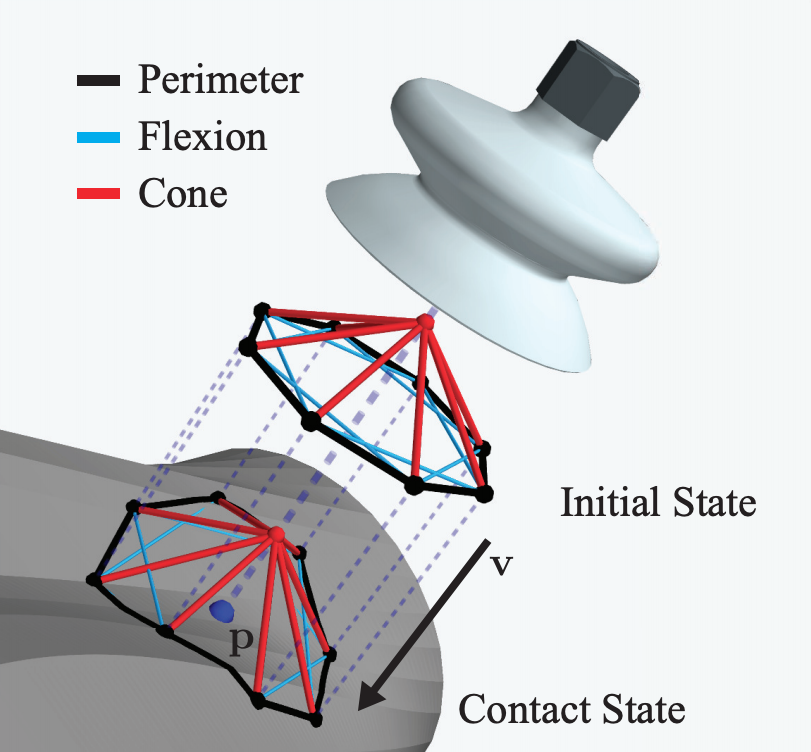

Suction

Grasping

RGB-D Data Collection

Fully Convolutional Networks

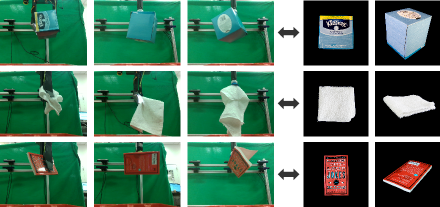

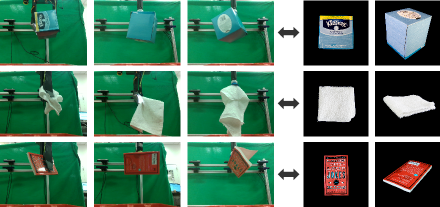

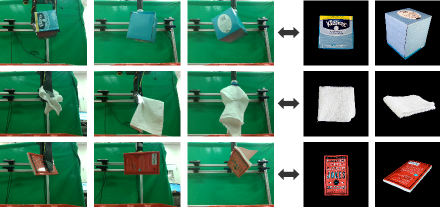

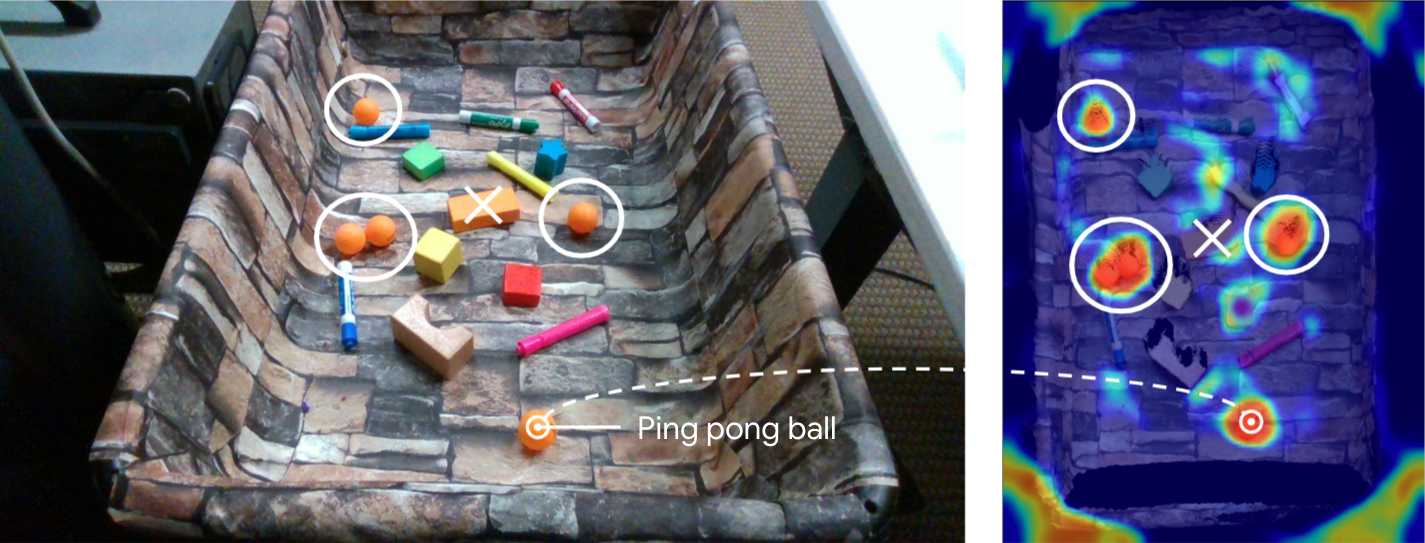

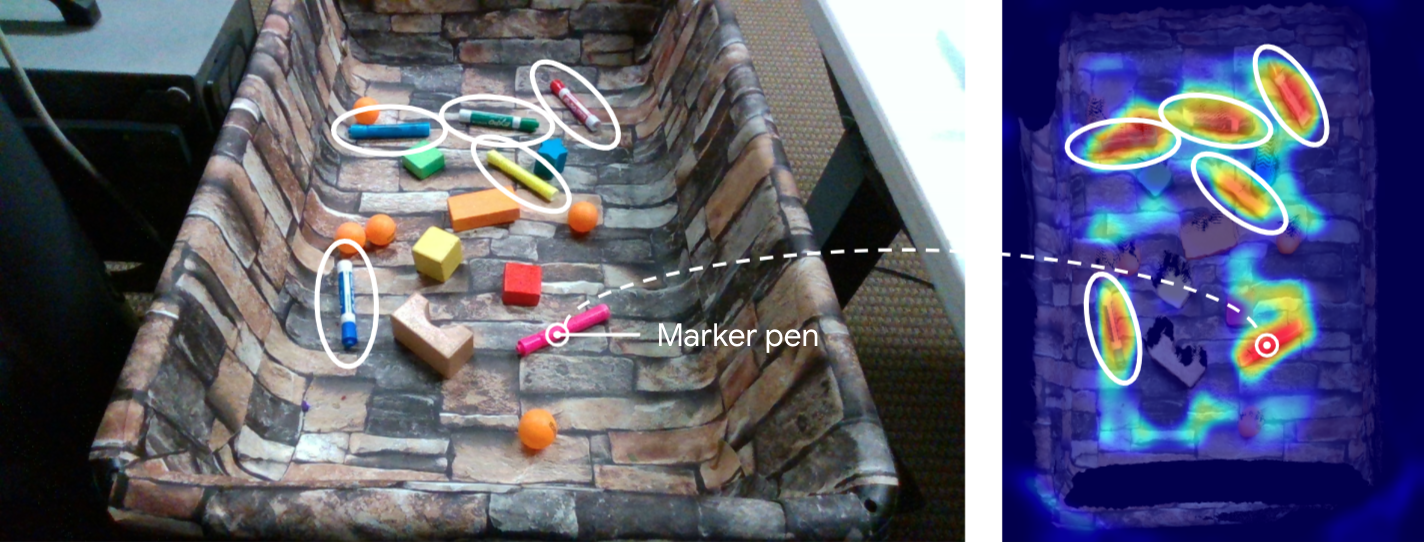

Robotic Pick-and-Place of Novel Objects in Clutter with Multi-Affordance Grasping and Cross-Domain Image Matching

Zeng et al., ICRA 2018; 1st Place, Stow Task, Amazon Robotics Challenge 2017
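The pipeline on these slides (RGB-D input, fully convolutional networks, suction and grasping outputs) can be read as predicting a dense per-pixel affordance map for each action type and then executing the highest-scoring pixel. The following is a minimal sketch of that final selection step only, assuming small hand-written score maps in place of network outputs. The function name `best_action` and the toy values are hypothetical and are not taken from the paper's code.

```python
def best_action(affordance_maps):
    """Return (action_name, row, col, score) for the highest-scoring pixel.

    affordance_maps: dict mapping an action name to a 2D list of per-pixel
    scores in [0, 1]. In a real system these would come from one fully
    convolutional network per action type; here they are toy values.
    """
    best = None
    for action, score_map in affordance_maps.items():
        for r, row in enumerate(score_map):
            for c, score in enumerate(row):
                # Strict comparison keeps the first pixel seen on ties.
                if best is None or score > best[3]:
                    best = (action, r, c, score)
    return best


# Toy 2x3 affordance maps (assumed values, for illustration only).
maps = {
    "suction": [[0.1, 0.2, 0.1],
                [0.3, 0.9, 0.2]],
    "grasping": [[0.4, 0.1, 0.0],
                 [0.2, 0.5, 0.7]],
}

choice = best_action(maps)
print(choice)
```

Because the selection is just an argmax over pixels and action types, no object identity or shape class is needed at this stage, which is the point the "Shape classification!" slide contrasts against.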

A3

Grasping confidence

Throwing velocity

Input RGB-D

TossingBot: Learning to Throw Arbitrary Objects with Residual Physics

Zeng et al., RSS 2019, T-RO 2020, Best Paper Award
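The "residual physics" idea in the title can be sketched as a physics-based estimate of the throwing velocity plus a learned correction. The example below is a rough illustration under stated assumptions: drag-free projectile motion, a fixed 45-degree release angle, and a residual passed in as a plain number rather than predicted by a network. The function names `ballistic_release_speed` and `throwing_speed` are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def ballistic_release_speed(distance, drop=0.0, angle_deg=45.0):
    """Drag-free release speed needed to land `distance` metres away.

    `drop` is how far (in metres) the landing point lies below the release
    point. Derived from d = v*cos(a)*t and -drop = v*sin(a)*t - G*t^2/2.
    """
    theta = math.radians(angle_deg)
    denom = 2.0 * math.cos(theta) ** 2 * (distance * math.tan(theta) + drop)
    if denom <= 0:
        raise ValueError("target is unreachable at this release angle")
    return math.sqrt(G * distance ** 2 / denom)


def throwing_speed(distance, residual, drop=0.0, angle_deg=45.0):
    """Physics estimate plus a correction term (learned in the real system)."""
    return ballistic_release_speed(distance, drop, angle_deg) + residual


v_hat = ballistic_release_speed(1.0)        # ideal-physics estimate
v = throwing_speed(1.0, residual=0.2)       # estimate with an assumed residual
print(round(v_hat, 3), round(v, 3))
```

The design intuition is that the physics term handles the bulk of the prediction and generalizes to new target locations, while the residual only has to absorb what ideal physics misses, such as object-dependent aerodynamics or grasp-dependent release dynamics.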

Grasps self-supervised by throws

Self-supervised grasping

Perception

Actions

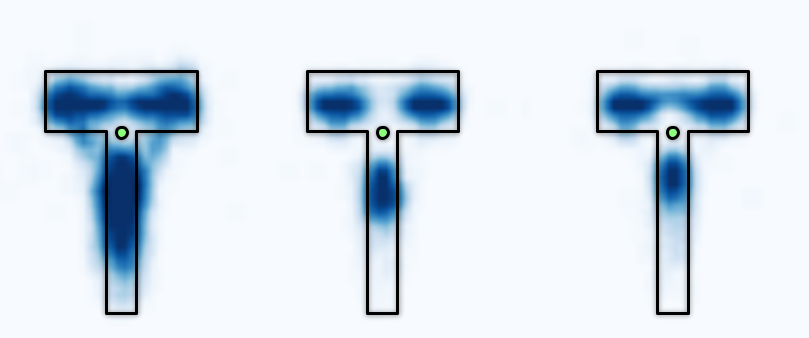

Throwing improves how we see grasps

object

end effector

Dex-Net 3.0: Computing Robust Vacuum Suction Grasp Targets in Point Clouds using a New Analytic Model and Deep Learning

Mahler et al., ICRA 2018

Rearrangement: A Challenge for Embodied AI

Batra et al., 2020

object ↔ object?

Manipulation → Rearranging Objects (3D Space)

Can we predict these displacements, without object assumptions?

✓

✗

✗

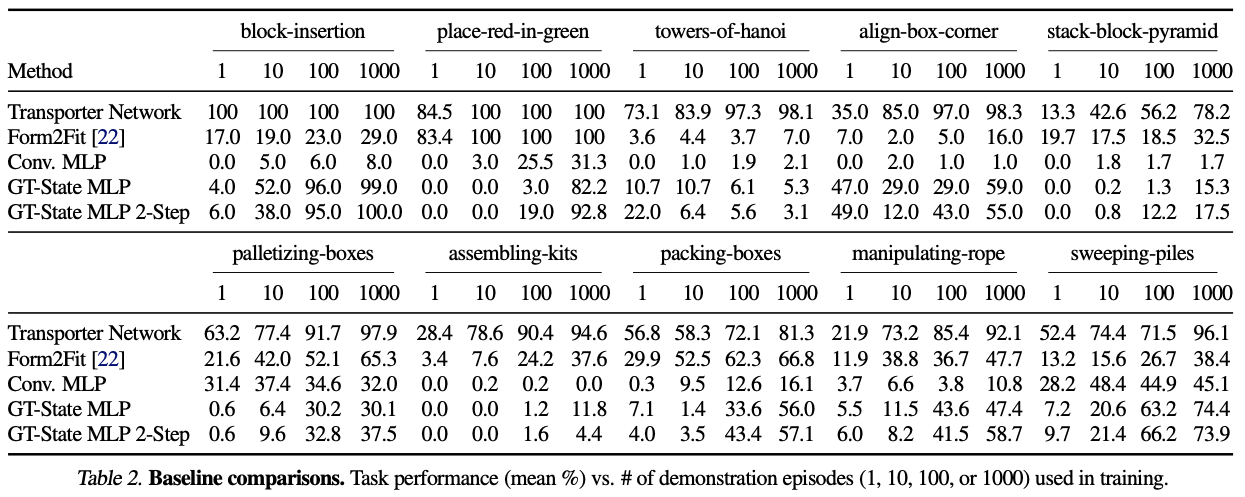

Transporter Networks: Rearranging the Visual World for Robotic Manipulation

Zeng et al., CoRL 2020
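One way to read "predicting displacements without object assumptions" is as template matching in feature space: take the features around the pick location and slide them over the scene features to score every candidate place location. The sketch below illustrates only that cross-correlation step, assuming tiny hand-written 2D feature maps in place of learned deep features and ignoring rotations. The helper names `cross_correlate` and `argmax_2d` are hypothetical and are not from the Transporter Networks code.

```python
def cross_correlate(scene, crop):
    """Valid-mode 2D cross-correlation of `scene` with `crop` (lists of lists)."""
    kh, kw = len(crop), len(crop[0])
    out_h = len(scene) - kh + 1
    out_w = len(scene[0]) - kw + 1
    return [
        [
            sum(scene[r + i][c + j] * crop[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def argmax_2d(grid):
    """Return the (row, col) of the largest entry; first one wins on ties."""
    cells = ((r, c) for r in range(len(grid)) for c in range(len(grid[0])))
    return max(cells, key=lambda rc: grid[rc[0]][rc[1]])


# Toy "query" features cropped around the pick location (assumed values).
crop = [[1, 0],
        [0, 1]]

# Toy "key" features of the scene; the matching pattern sits at row 2, col 1.
scene = [[0, 0, 0, 0],
         [0, 0, 0, 0],
         [0, 1, 0, 0],
         [0, 0, 1, 0]]

heat = cross_correlate(scene, crop)   # one score per candidate placement
place = argmax_2d(heat)               # top-left corner of the best match
print(place)
```

Nothing in this step refers to object poses, keypoints, or class labels; the match is computed directly between pixel-aligned features, which is why the same mechanism can apply to unseen objects.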

Transporter Nets

Form2Fit: Learning Shape Priors for Generalizable Assembly from Disassembly

Kevin Zakka et al., ICRA 2020

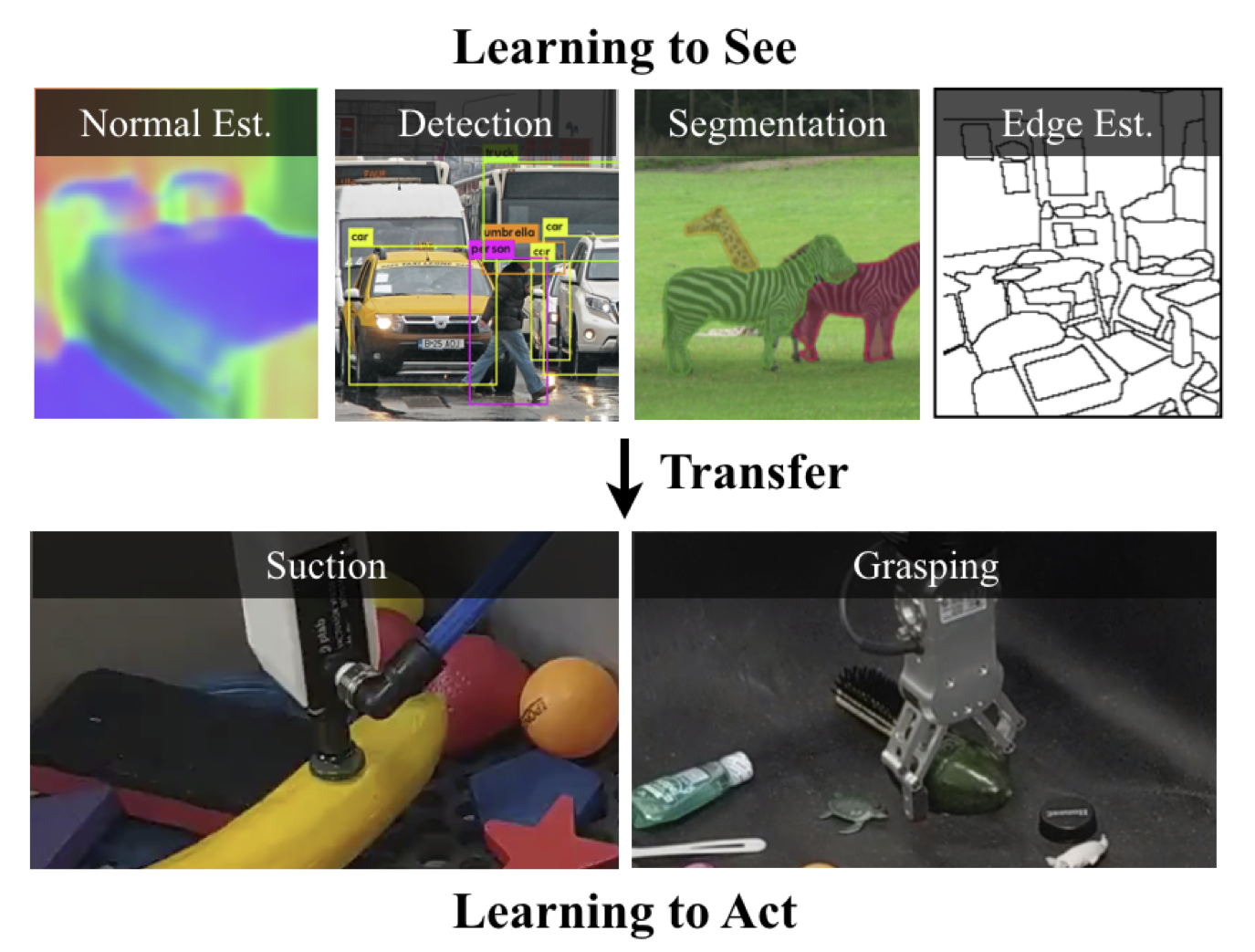

- Affordance perception: shapes → actions (e.g. grasping)

- Actions → affordance perception (e.g. TossingBot)

- Relationship between objects → actions (e.g. Transporter Nets)

From Shapes to Actions

Lin Yen-Chen et al., ICRA 2020

Jimmy Wu et al., ICRA 2021

Daniel Seita et al., ICRA 2021

Thank you!

Robotic Pick-and-Place of Novel Objects in Clutter with Multi-Affordance Grasping and Cross-Domain Image Matching

Andy Zeng, Shuran Song, Kuan-Ting Yu, Elliott Donlon, Francois R. Hogan, Maria Bauza, Daolin Ma, Orion Taylor, Melody Liu, Eudald Romo, Nima Fazeli, Ferran Alet, Nikhil Chavan Dafle, Rachel Holladay, Isabella Morona, Prem Qu Nair, Druck Green, Ian Taylor, Weber Liu, Thomas Funkhouser, Alberto Rodriguez

TossingBot: Learning to Throw Arbitrary Objects with Residual Physics

Andy Zeng, Shuran Song, Johnny Lee, Alberto Rodriguez, Thomas Funkhouser

Transporter Networks: Rearranging the Visual World for Robotic Manipulation

Andy Zeng, Pete Florence, Jonathan Tompson, Stefan Welker, Jonathan Chien, Maria Attarian, Travis Armstrong, Ivan Krasin, Dan Duong, Vikas Sindhwani, Johnny Lee

Learning to Rearrange Deformable Cables, Fabrics, and Bags with Goal-Conditioned Transporter Networks

Daniel Seita, Pete Florence, Jonathan Tompson, Erwin Coumans, Vikas Sindhwani, Ken Goldberg, Andy Zeng

Spatial Intention Maps for Multi-Agent Mobile Manipulation

Jimmy Wu, Xingyuan Sun, Andy Zeng, Shuran Song, Szymon Rusinkiewicz, Thomas Funkhouser

Form2Fit: Learning Shape Priors for Generalizable Assembly from Disassembly

Kevin Zakka, Andy Zeng, Johnny Lee, Shuran Song

Learning to See before Learning to Act: Visual Pre-training for Manipulation

Lin Yen-Chen, Andy Zeng, Shuran Song, Phillip Isola, Tsung-Yi Lin

2021-CVPR-3DVR

By Andy Zeng
