Demonstrating Large Language Models on Robots

On the Journey for Shared Priors with Machine Learning

Interact with the physical world to learn bottom-up commonsense, i.e., "how the world works"

Implicit Behavior Cloning

Learning Grasping from Data

RT-1: Robotics Transformer

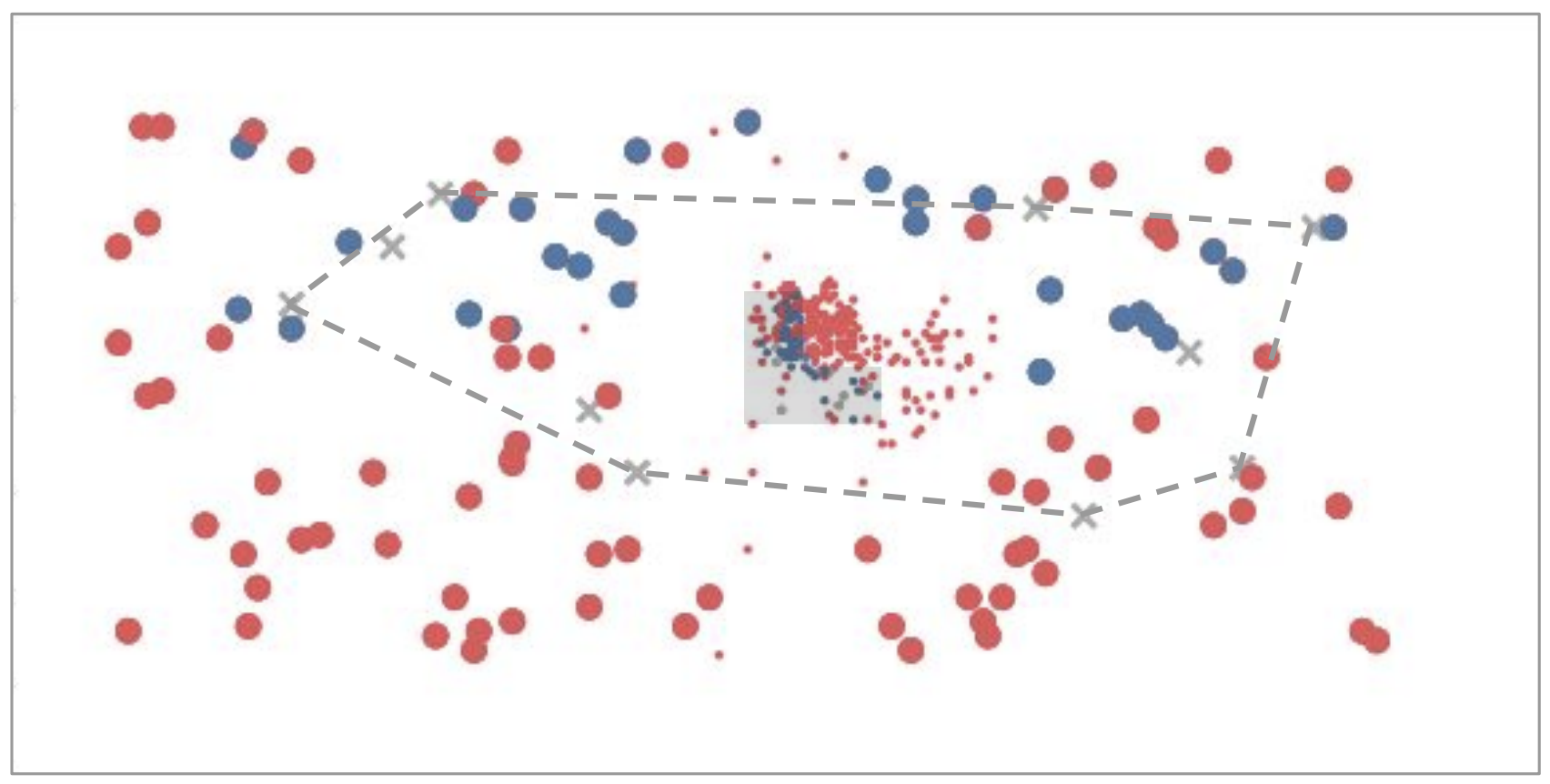

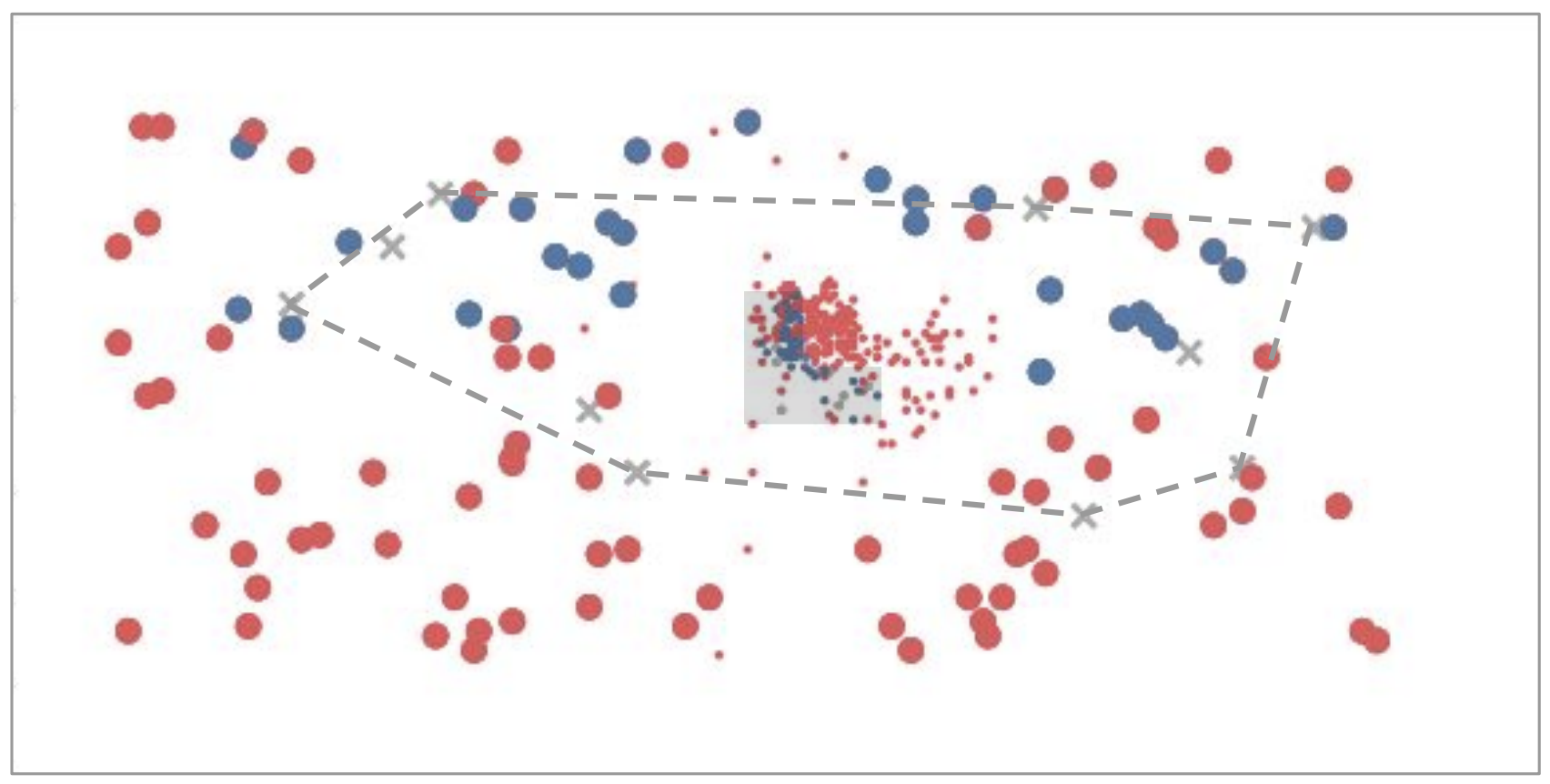

Machine Learning is a Box

Interpolation vs. Extrapolation

(adapted from Tomás Lozano-Pérez)

Meanwhile in NLP... Large Language Models are trained on the Internet: books, recipes, code, news, articles, dialogue

Large Language Models?

- Robot Planning: PaLM-SayCan
- Visual Commonsense: Socratic Models, Inner Monologue
- Robot Programming: Code as Policies

All of these live somewhere in the space of interpolation.

Demo: Robot Planning with PaLM-SayCan

Michael Ahn, Anthony Brohan, Noah Brown, Yevgen Chebotar, Omar Cortes, Byron David, Chelsea Finn, Chuyuan Fu, Keerthana Gopalakrishnan, Karol Hausman, Alex Herzog, Daniel Ho, Jasmine Hsu, Julian Ibarz, Brian Ichter, Alex Irpan, Eric Jang, Rosario Jauregui Ruano, Kyle Jeffrey, Sally Jesmonth, Nikhil J Joshi, Ryan Julian, Dmitry Kalashnikov, Yuheng Kuang, Kuang-Huei Lee, Sergey Levine, Yao Lu, Linda Luu, Carolina Parada, Peter Pastor, Jornell Quiambao, Kanishka Rao, Jarek Rettinghouse, Diego Reyes, Pierre Sermanet, Nicolas Sievers, Clayton Tan, Alexander Toshev, Vincent Vanhoucke, Fei Xia, Ted Xiao, Peng Xu, Sichun Xu, Mengyuan Yan, Andy Zeng

https://say-can.github.io

Do As I Can, Not As I Say: Grounding Language in Robotic Affordances

Presented at the Conference on Robot Learning (CoRL) 2022

Language models can plan, and their plans can be grounded using affordances
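SayCan picks each next skill by combining two scores: how useful the language model thinks a skill is for the instruction ("Say") and how likely a value function thinks the skill is to succeed from the current state ("Can"). The sketch below multiplies the two and takes the argmax at every step. Everything in it is hypothetical: the skill names, the hand-written probabilities in `toy_llm_score`, and the `ToyWorld` affordance numbers stand in for a real language model and learned value functions.

```python
from typing import Callable, Dict, List

# Hypothetical skill names for a toy "bring me a sponge" task.
SKILLS: List[str] = ["find a sponge", "pick up the sponge", "bring it to you", "done"]


def toy_llm_score(instruction: str, history: List[str], skill: str) -> float:
    """Hypothetical stand-in for p_LLM(skill | instruction, history).

    Hand-written numbers keyed on how many skills have already run; a real
    system would score each skill with a language model.
    """
    table: Dict[int, Dict[str, float]] = {
        0: {"find a sponge": 0.4, "pick up the sponge": 0.5, "bring it to you": 0.05, "done": 0.05},
        1: {"find a sponge": 0.1, "pick up the sponge": 0.7, "bring it to you": 0.1, "done": 0.1},
        2: {"find a sponge": 0.05, "pick up the sponge": 0.05, "bring it to you": 0.8, "done": 0.1},
    }
    return table.get(len(history), {"done": 1.0}).get(skill, 0.0)


class ToyWorld:
    """Hypothetical world state plus a hand-written affordance (value) function."""

    def __init__(self) -> None:
        self.sponge_visible = False
        self.holding = False

    def affordance(self, skill: str) -> float:
        # Estimated probability that the skill succeeds from the current state.
        if skill == "pick up the sponge":
            return 0.9 if self.sponge_visible and not self.holding else 0.1
        if skill == "bring it to you":
            return 0.9 if self.holding else 0.05
        return 0.9 if skill == "find a sponge" else 1.0

    def execute(self, skill: str) -> None:
        # Simulated effects of running a skill.
        if skill == "find a sponge":
            self.sponge_visible = True
        elif skill == "pick up the sponge":
            self.holding = True


def saycan_select(
    instruction: str,
    history: List[str],
    skills: List[str],
    llm_score: Callable[[str, List[str], str], float],
    affordance: Callable[[str], float],
) -> str:
    # "Say" (is the skill useful?) times "Can" (can it succeed from here?).
    return max(skills, key=lambda s: llm_score(instruction, history, s) * affordance(s))


def saycan_plan(instruction: str, world: ToyWorld, max_steps: int = 10) -> List[str]:
    history: List[str] = []
    for _ in range(max_steps):
        skill = saycan_select(instruction, history, SKILLS, toy_llm_score, world.affordance)
        if skill == "done":
            break
        world.execute(skill)
        history.append(skill)
    return history


plan = saycan_plan("bring me a sponge", ToyWorld())
print(plan)  # ['find a sponge', 'pick up the sponge', 'bring it to you']
```

Scoring with the language model alone would pick "pick up the sponge" first (0.5 vs. 0.4), even though no sponge is in view yet; the affordance term is what steers the plan toward "find a sponge".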

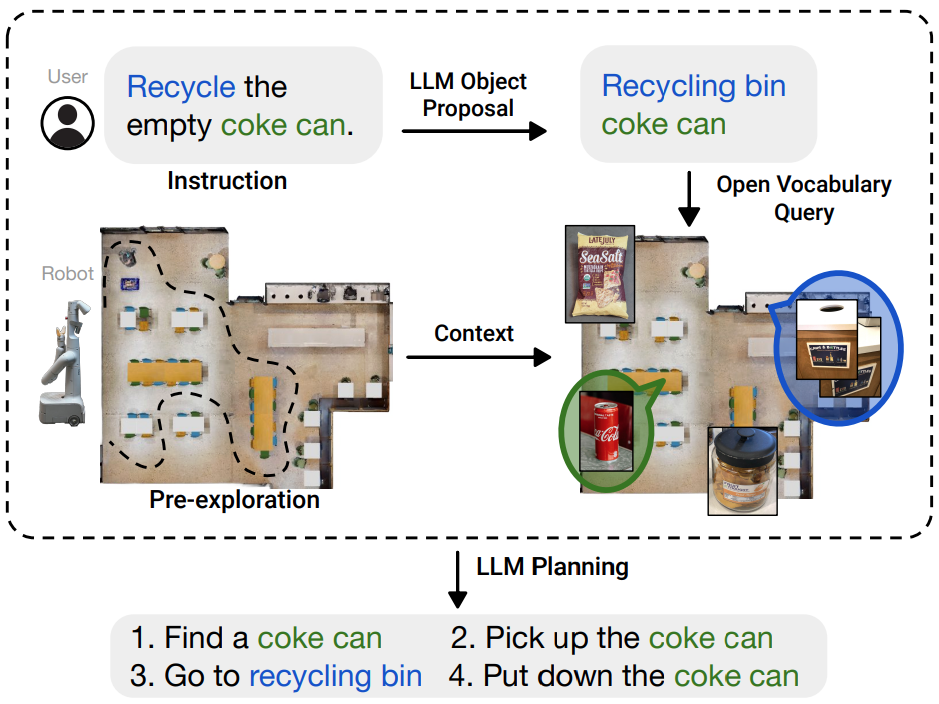

SayCan + NLMap or VLMaps

Demo: Robot Programming with Code as Policies

Language models can write robot code

Jacky Liang, Wenlong Huang, Fei Xia, Peng Xu, Karol Hausman, Brian Ichter, Pete Florence, Andy Zeng

code-as-policies.github.io

Code as Policies: Language Model Programs for Embodied Control

Presented at the IEEE International Conference on Robotics and Automation (ICRA) 2023
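Code as Policies prompts a language model with examples of instructions paired with code that calls perception and control APIs, then runs the code the model writes. A minimal sketch, assuming a hypothetical API (`detect_objects`, `pick_place`) and a hypothetical `fake_llm` stub that returns a canned completion in place of a real model call:

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical scene and action log for the toy robot.
SCENE: List[str] = ["red block", "blue block", "bowl"]
executed: List[Tuple[str, str]] = []


def detect_objects() -> List[str]:
    """Hypothetical perception API: names of objects currently in view."""
    return list(SCENE)


def pick_place(obj: str, target: str) -> None:
    """Hypothetical control API: here it only logs the requested motion."""
    executed.append((obj, target))


# Few-shot prompt: one example instruction with its code, then the new instruction.
PROMPT = '''# Available: detect_objects() -> list of names, pick_place(obj, target).
# instruction: put the red block in the bowl.
pick_place("red block", "bowl")
# instruction: put all the blocks in the bowl.
'''


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a code-writing language model."""
    return (
        "for obj in detect_objects():\n"
        "    if 'block' in obj:\n"
        "        pick_place(obj, 'bowl')\n"
    )


def run_policy(code: str, api: Dict[str, Callable]) -> None:
    # Run the generated code with the robot API as its globals.
    # Python still injects builtins, so this is NOT a sandbox.
    exec(code, dict(api))


code = fake_llm(PROMPT)
run_policy(code, {"detect_objects": detect_objects, "pick_place": pick_place})
print(executed)  # [('red block', 'bowl'), ('blue block', 'bowl')]
```

Because the policy is ordinary code, the model can use loops and conditionals over perception results instead of emitting one action at a time. A deployed system would need stronger isolation than a bare `exec`.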

Video chat with a robot!

Overall Trends

From PaLM-SayCan to Socratic Models, Inner Monologue, and Code as Policies:

- more closed-loop feedback
- more low-level reasoning
- more in-context learning

(Figure: each method maps a Task to a sequence of states and actions; with Code as Policies, these are expressed in robot code.)
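The "more closed-loop feedback" trend can be sketched as a loop in which each action's outcome is written back into the language model's context as text, in the spirit of Inner Monologue's success-detection feedback. The transcript format, `toy_planner` (standing in for a language model), and the simulated `flaky_execute` robot are all hypothetical:

```python
from typing import Callable, Dict, List

# Hypothetical two-step plan for "put the coke can in the bin".
PLAN: List[str] = ["pick up the coke can", "put it in the bin"]


def toy_planner(transcript: List[str]) -> str:
    """Hypothetical stand-in for a language model reading the transcript.

    It advances one step per successful action, so a failure leads to a retry.
    """
    done_steps = sum(1 for line in transcript if line == "Success detector: yes")
    return PLAN[done_steps] if done_steps < len(PLAN) else "done"


attempts: Dict[str, int] = {}


def flaky_execute(action: str) -> bool:
    """Simulated robot: the first grasp attempt slips, everything else succeeds."""
    attempts[action] = attempts.get(action, 0) + 1
    return not (action == "pick up the coke can" and attempts[action] == 1)


def closed_loop_episode(
    task: str,
    plan_next: Callable[[List[str]], str],
    execute: Callable[[str], bool],
    max_steps: int = 10,
) -> List[str]:
    # Feedback is appended as text, so the next planning call is
    # conditioned on what actually happened, not on what was intended.
    transcript = [f"Human: {task}"]
    for _ in range(max_steps):
        action = plan_next(transcript)
        if action == "done":
            break
        transcript.append(f"Robot: {action}")
        ok = execute(action)
        transcript.append(f"Success detector: {'yes' if ok else 'no'}")
    return transcript


log = closed_loop_episode("put the coke can in the bin", toy_planner, flaky_execute)
print("\n".join(log))
```

The first grasp fails, the "Success detector: no" line stays in the transcript, and the planner retries before moving on; an open-loop plan would have continued to "put it in the bin" with nothing in hand.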

Limitations

- Language models may hallucinate:
  - Can confidently produce incorrect outputs
  - Don't know when they don't know
- Language can be a lossy representation
- Expensive to run in terms of cost and latency
- Limited reasoning over "embodied" context

Understanding the limits of today's language models can point to directions for future work

Come visit our demo!

Thank you!

2023-RSS-demo

By Andy Zeng
