Language as Robot Middleware

Andy Zeng

Princeton Robotics Seminar

Manipulation

Amazing skill! Teppanyaki steak master

https://youtu.be/5qVMIKCn_Cs

Problem difficulty = #DoFs (robot) + #DoFs (environment)

Robotics as a platform to study intelligent machines


SHRDLU, Terry Winograd, MIT 1968

person: "will you please stack up both of the red blocks and either a green cube or a pyramid"

robot: "ok."

person: "what does the box contain?"

robot: "the blue pyramid and blue block."

person: "can the table pick up blocks?"

robot: "no."

person: "why did you do that?"

robot: "because you asked me to."

Simple things are surprisingly complex

"At an analytical level, pushing is a well understood problem... These are usually based on Coulomb's friction law..."

"The reality, however, is bitter... the sensitivity of the task to small changes in contact geometry, along with the variability of friction, hinders accurate predictions."

More than a Million Ways to Be Pushed: A High-Fidelity Experimental Dataset of Planar Pushing

Kuan-Ting Yu, Maria Bauza, Nima Fazeli, Alberto Rodriguez, IROS 2016
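To make the sensitivity concrete, here is a minimal sketch (not taken from the cited paper) of the Coulomb stick/slide test for a single point contact: the contact sticks while the tangential force stays within μ times the normal force. The 15° push angle and the two friction coefficients are illustrative assumptions.

```python
import math

def contact_mode(push_angle_deg: float, mu: float, force: float = 1.0) -> str:
    """Classify one point contact as 'stick' or 'slide' under Coulomb friction.

    push_angle_deg is the angle between the applied force and the contact normal.
    """
    theta = math.radians(push_angle_deg)
    f_normal = force * math.cos(theta)
    f_tangent = force * abs(math.sin(theta))
    # Coulomb's law: the contact sticks while |f_t| <= mu * f_n,
    # i.e. while the applied force stays inside the friction cone.
    return "stick" if f_tangent <= mu * f_normal else "slide"

# Same push, slightly different friction: the predicted contact mode flips.
for mu in (0.25, 0.30):
    print(mu, contact_mode(15.0, mu))
# 0.25 slide
# 0.3 stick
```

A change of 0.05 in μ flips the predicted mode, which is the kind of sensitivity the quote describes.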

Imitation Learning with Behavior Cloning

Supervised Learning

1. Collect dataset with human teleop

2. Train end-to-end deep networks

- High-rate (velocity) control

- Explicit (e.g. MSE) losses

Pixels → Transformers or ConvNets → Actions
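One way to see why explicit regression losses can struggle: with an MSE loss, the best single action for a given observation is the mean of the demonstrated actions. The minimal sketch below assumes a hypothetical toy case where, from the same observation, the expert goes around an obstacle to the left (-1) or to the right (+1) equally often.

```python
import numpy as np

# Hypothetical toy demos: from one observation, the expert steers left (-1.0)
# or right (+1.0) around an obstacle with equal frequency (bimodal actions).
demo_actions = np.array([-1.0, 1.0] * 50)

# Explicit BC with an MSE loss: for a fixed observation, the optimal output is the
# single action minimizing mean squared error to the demos. Search a fine grid.
candidates = np.linspace(-1.0, 1.0, 201)
mse = ((candidates[:, None] - demo_actions[None, :]) ** 2).mean(axis=1)
best = candidates[np.argmin(mse)]

print(f"MSE-optimal action: {best:.2f}")  # about 0: halfway between the two modes
print("distance to nearest demo:", np.abs(demo_actions - best).min())  # about 1.0
```

The regressed action drives straight into the obstacle, which no demonstration ever did.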


In Practice

- Data hungry

- Policy often gets "stuck"

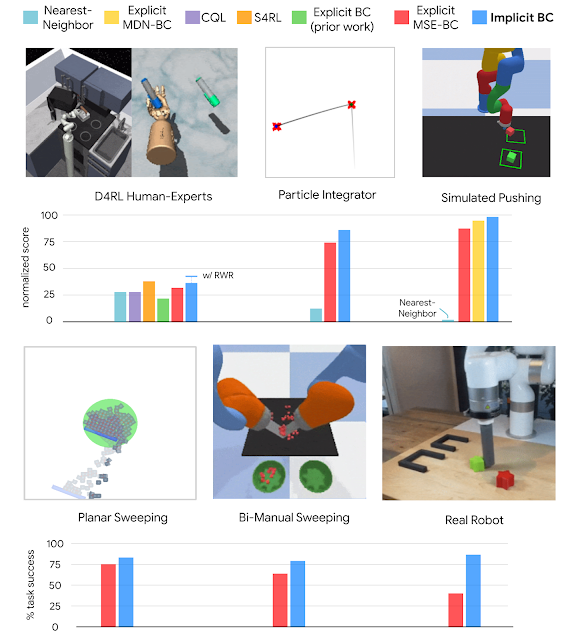

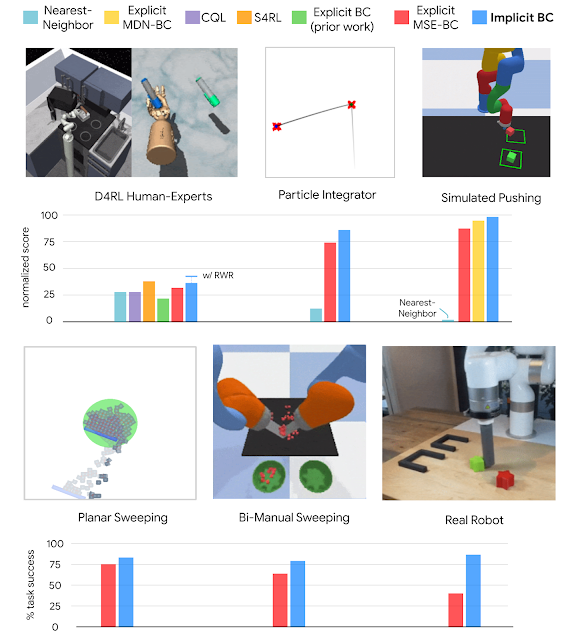

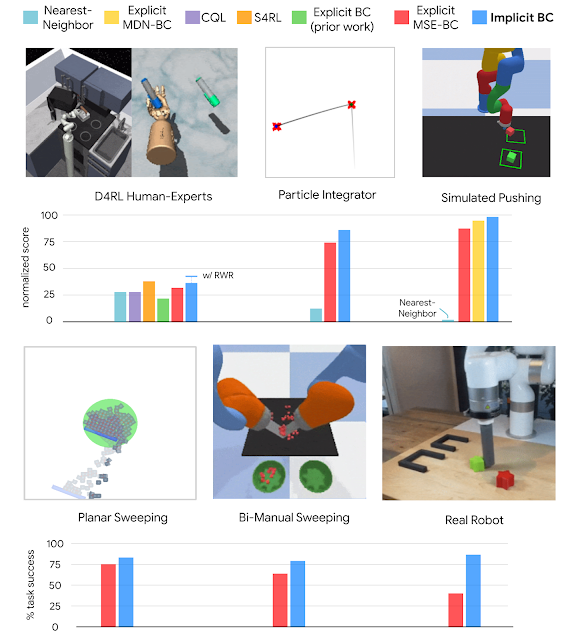

Implicit Behavior Cloning

Implicit Behavioral Cloning, CoRL 2021

Pete Florence, Corey Lynch, Andy Zeng, Oscar Ramirez, Ayzaan Wahid, Laura Downs, Adrian Wong, Johnny Lee, Igor Mordatch, Jonathan Tompson


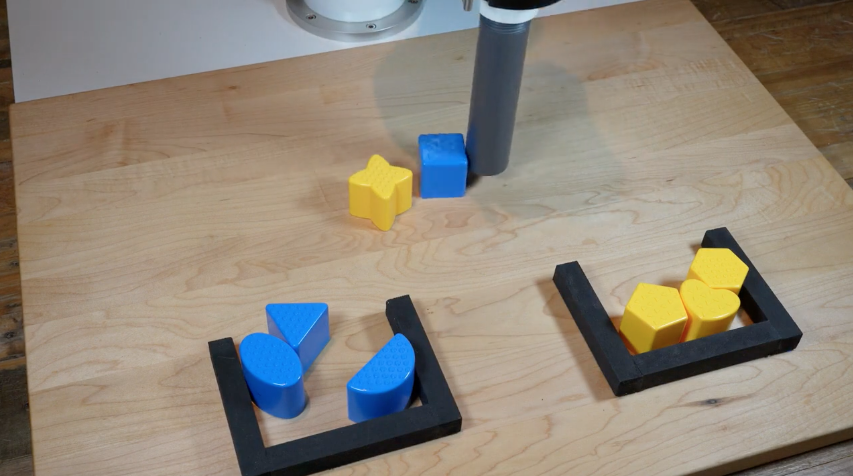

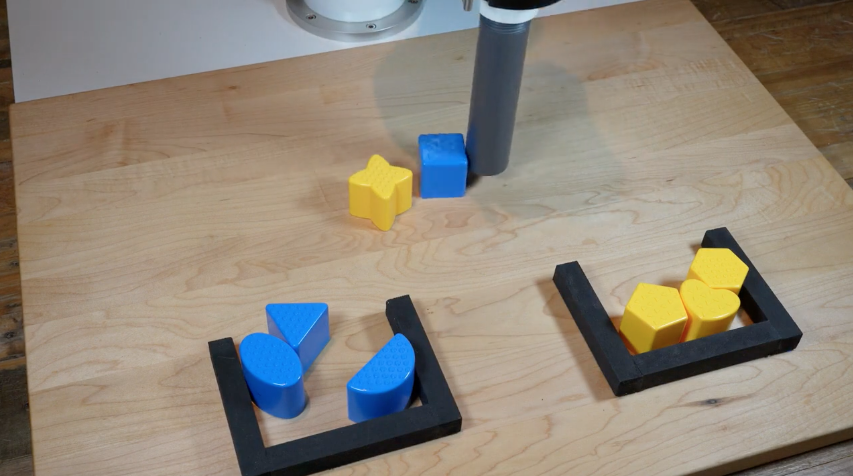
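The implicit formulation replaces a ≈ f(o) with a = argmin_a E(o, a) for a learned energy E, trained with an InfoNCE-style loss that contrasts the expert action against sampled counter-examples. The sketch below is a simplified, hypothetical version: `toy_energy` is hand-written (standing in for a trained network) with minima at the two demonstrated modes, and `infer_action` is a basic derivative-free optimizer in the spirit of the one the paper describes, not its exact algorithm.

```python
import numpy as np

def toy_energy(obs, actions):
    """Hypothetical stand-in for a trained energy model E(o, a).

    Low energy near a = -1 and a = +1, as if learned from bimodal demos.
    The observation is ignored because this toy has a single fixed observation.
    """
    del obs
    return np.minimum((actions + 1.0) ** 2, (actions - 1.0) ** 2)

def infer_action(energy_fn, obs, rng, n_samples=256, n_iters=3, sigma=0.3, temp=0.1):
    """Derivative-free inference: sample actions, resample toward low energy
    with shrinking noise, then return the lowest-energy sample."""
    samples = rng.uniform(-2.0, 2.0, size=n_samples)
    for _ in range(n_iters):
        energies = energy_fn(obs, samples)
        probs = np.exp(-(energies - energies.min()) / temp)
        probs /= probs.sum()
        idx = rng.choice(n_samples, size=n_samples, p=probs)
        samples = samples[idx] + rng.normal(0.0, sigma, size=n_samples)
        samples = np.clip(samples, -2.0, 2.0)
        sigma *= 0.5
    return samples[np.argmin(energy_fn(obs, samples))]

def info_nce_loss(energy_fn, obs, expert_action, negatives):
    """InfoNCE-style loss: -log softmax(-E) of the expert action among counter-examples."""
    logits = -energy_fn(obs, np.concatenate([[expert_action], negatives]))
    logits = logits - logits.max()  # numerical stability
    return -(logits[0] - np.log(np.exp(logits).sum()))

rng = np.random.default_rng(0)
a_hat = infer_action(toy_energy, None, rng)
print(f"inferred action: {a_hat:+.3f}")  # near one of the modes (-1 or +1), not 0

negatives = rng.uniform(-2.0, 2.0, size=64)
print(info_nce_loss(toy_energy, None, 1.0, negatives))  # expert at a mode: lower loss
print(info_nce_loss(toy_energy, None, 0.0, negatives))  # "expert" at 0: higher loss
```

Because inference is an argmin rather than a regression, the policy commits to one mode instead of averaging them.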

Implicit Behavior Cloning & Transporter Nets

Transporter Networks: Rearranging the Visual World for Robotic Manipulation, CoRL 2020

Andy Zeng, Pete Florence, Jonathan Tompson, Stefan Welker, Jonathan Chien, Maria Attarian, Travis Armstrong, Ivan Krasin, Dan Duong, Ayzaan Wahid, Vikas Sindhwani, Johnny Lee

Implicit BC generalizes the loss functions used by Transporter Nets (spatial action maps)
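In Transporter Nets, actions are read off dense spatial action maps: the pick is the argmax pixel of a per-pixel score map, and place scores come from cross-correlating features cropped around the pick with features of the whole scene. The numpy sketch below assumes hand-specified toy feature maps (the real model learns them with deep networks and also searches over crop rotations, omitted here) and includes a pixel-wise cross-entropy of the kind used to train such maps. All helper names are hypothetical.

```python
import numpy as np

def pick_location(pick_scores):
    """Spatial action map: the pick is the highest-scoring pixel."""
    r, c = np.unravel_index(np.argmax(pick_scores), pick_scores.shape)
    return int(r), int(c)

def place_scores(query_feat, key_feat, pick_rc, half=2):
    """Crop query features around the pick, then cross-correlate the crop with
    the key features; each output pixel scores one candidate place location."""
    size = 2 * half + 1
    r, c = pick_rc
    crop = np.pad(query_feat, half)[r:r + size, c:c + size]
    padded_key = np.pad(key_feat, half)
    h, w = key_feat.shape
    scores = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            scores[i, j] = np.sum(padded_key[i:i + size, j:j + size] * crop)
    return scores

def pixel_cross_entropy(scores, target_rc):
    """Cross-entropy of a softmax over all pixels against the demonstrated pixel."""
    logits = scores.ravel() - scores.max()
    log_probs = logits - np.log(np.exp(logits).sum())
    return -log_probs[np.ravel_multi_index(target_rc, scores.shape)]

def plus_pattern(shape, center):
    """Toy 'object' feature: a plus sign with a brighter center (hypothetical)."""
    img = np.zeros(shape)
    r, c = center
    img[r, c] = 2.0
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        img[r + dr, c + dc] = 1.0
    return img

obs = plus_pattern((9, 9), (2, 2))    # object currently at (2, 2)
goal = plus_pattern((9, 9), (6, 6))   # hypothetical key features marking where it fits
pick = pick_location(obs)
place = place_scores(obs, goal, pick)
print(pick, pick_location(place))     # (2, 2) (6, 6)
print(pixel_cross_entropy(place, (6, 6)))
```

Every pixel other than the demonstrated one acts as a negative in the softmax, which is one way to read the connection to the InfoNCE-style loss of Implicit BC.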


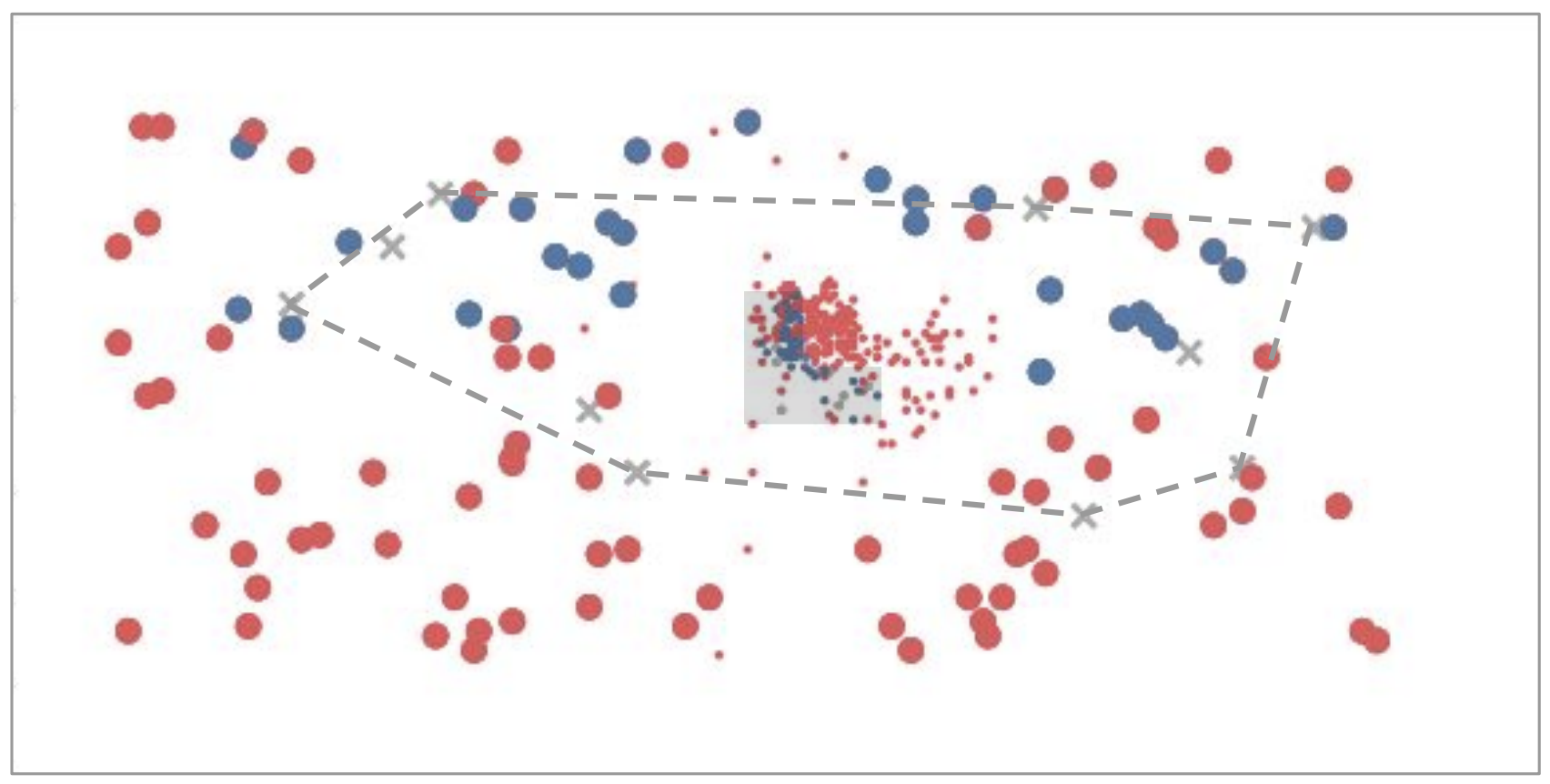

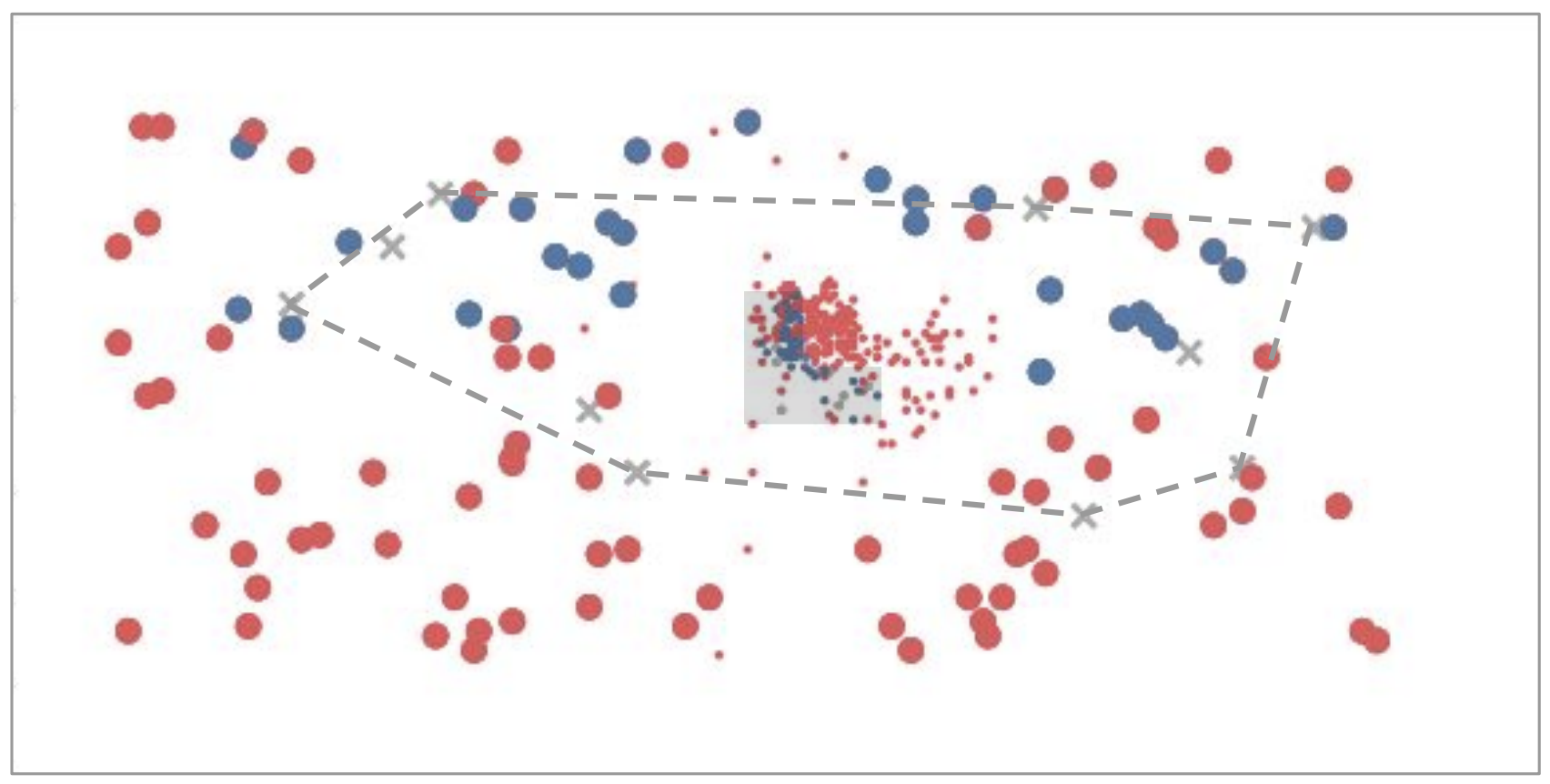

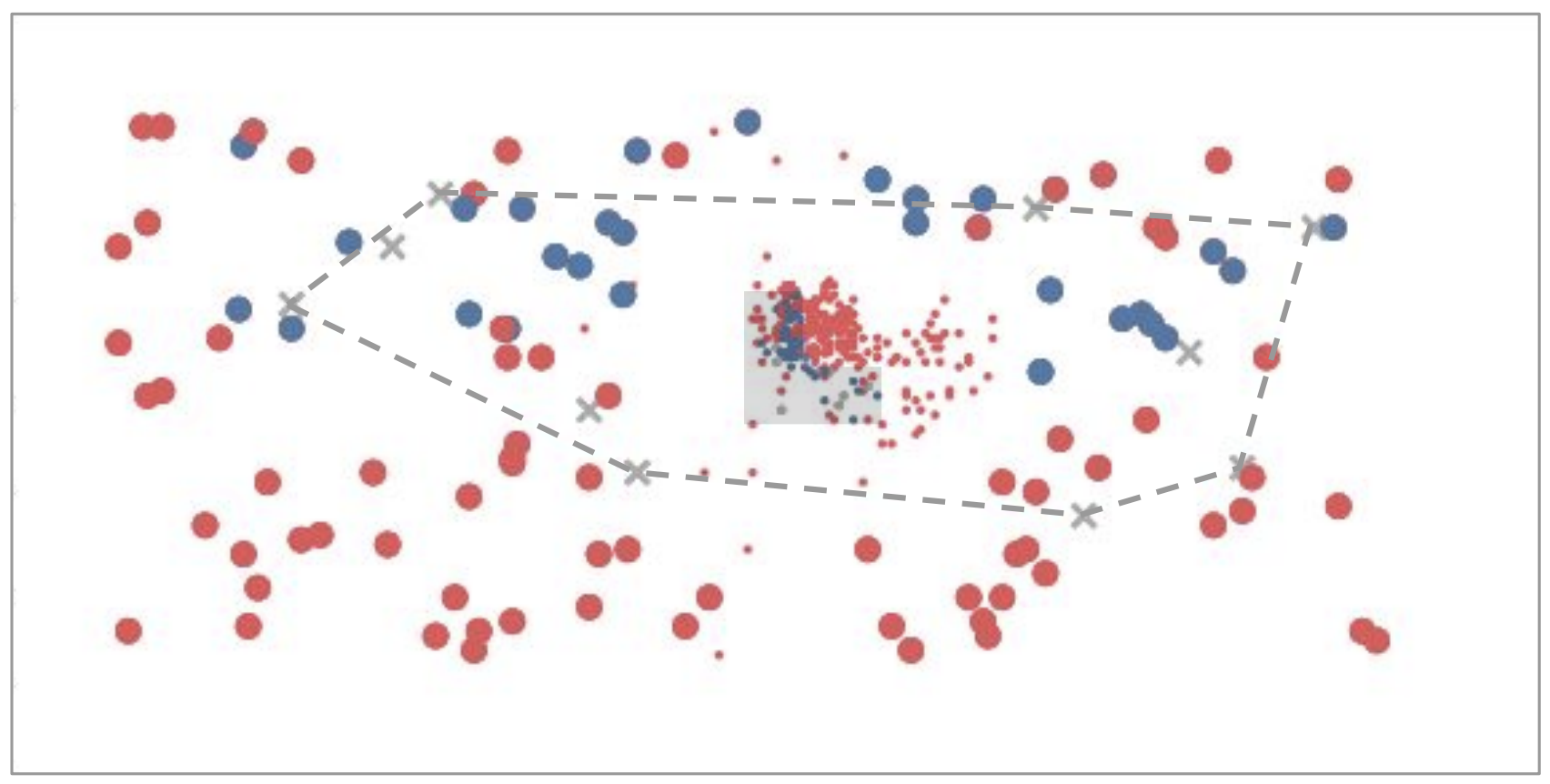

Interpolation vs Extrapolation*

- Sample efficiency improvements

Deep Learning is a Box

Interpolation

Extrapolation


Roboticist

Vision

NLP
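A minimal illustration of the interpolation-versus-extrapolation point, using a polynomial fit as a stand-in for any flexible learned model (an assumption for illustration, not a deep network): the fit is accurate inside the range it was trained on and diverges outside it.

```python
import numpy as np

def target(x):
    return np.sin(2 * np.pi * x)

# "Training data" only covers the box x in [0, 1].
x_train = np.linspace(0.0, 1.0, 50)
coeffs = np.polyfit(x_train, target(x_train), deg=5)

x_in = np.linspace(0.05, 0.95, 100)   # inside the training range
x_out = np.linspace(1.5, 2.0, 100)    # outside the training range
err_in = np.abs(np.polyval(coeffs, x_in) - target(x_in)).mean()
err_out = np.abs(np.polyval(coeffs, x_out) - target(x_out)).mean()

print(f"mean error inside the box:  {err_in:.3f}")
print(f"mean error outside the box: {err_out:.3f}")
```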


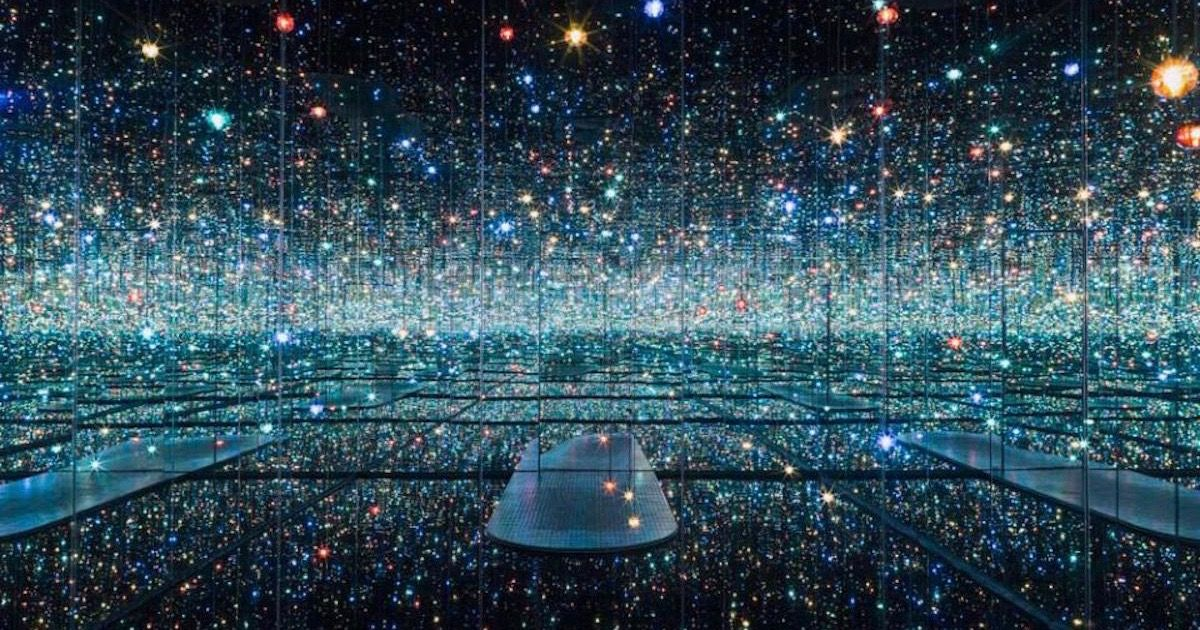

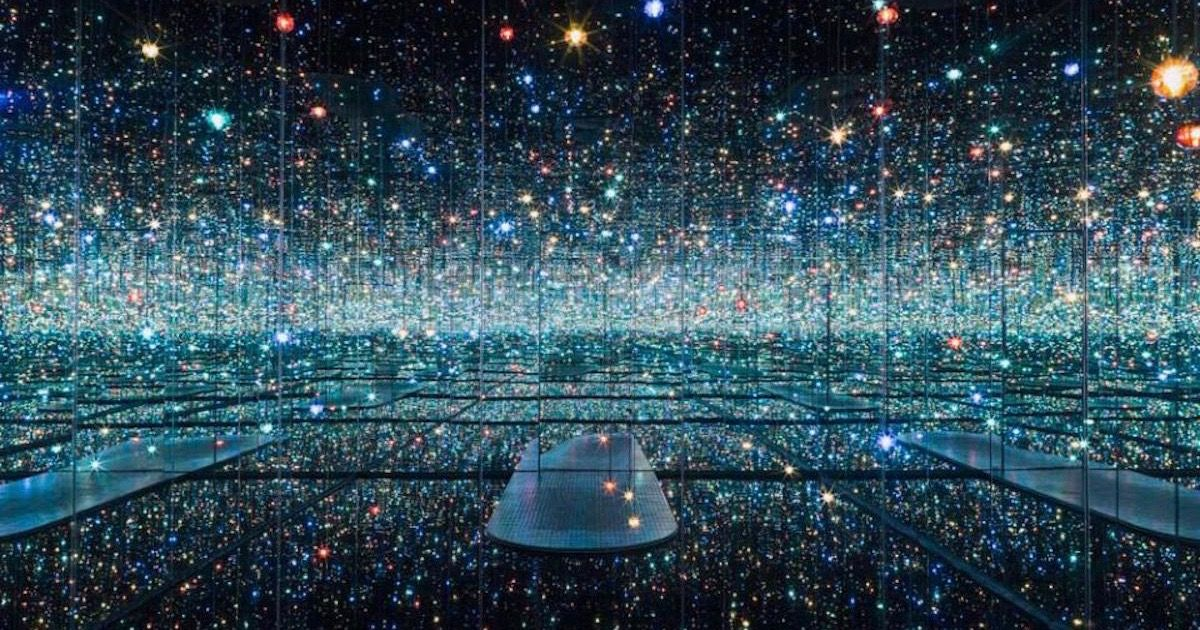

Internet

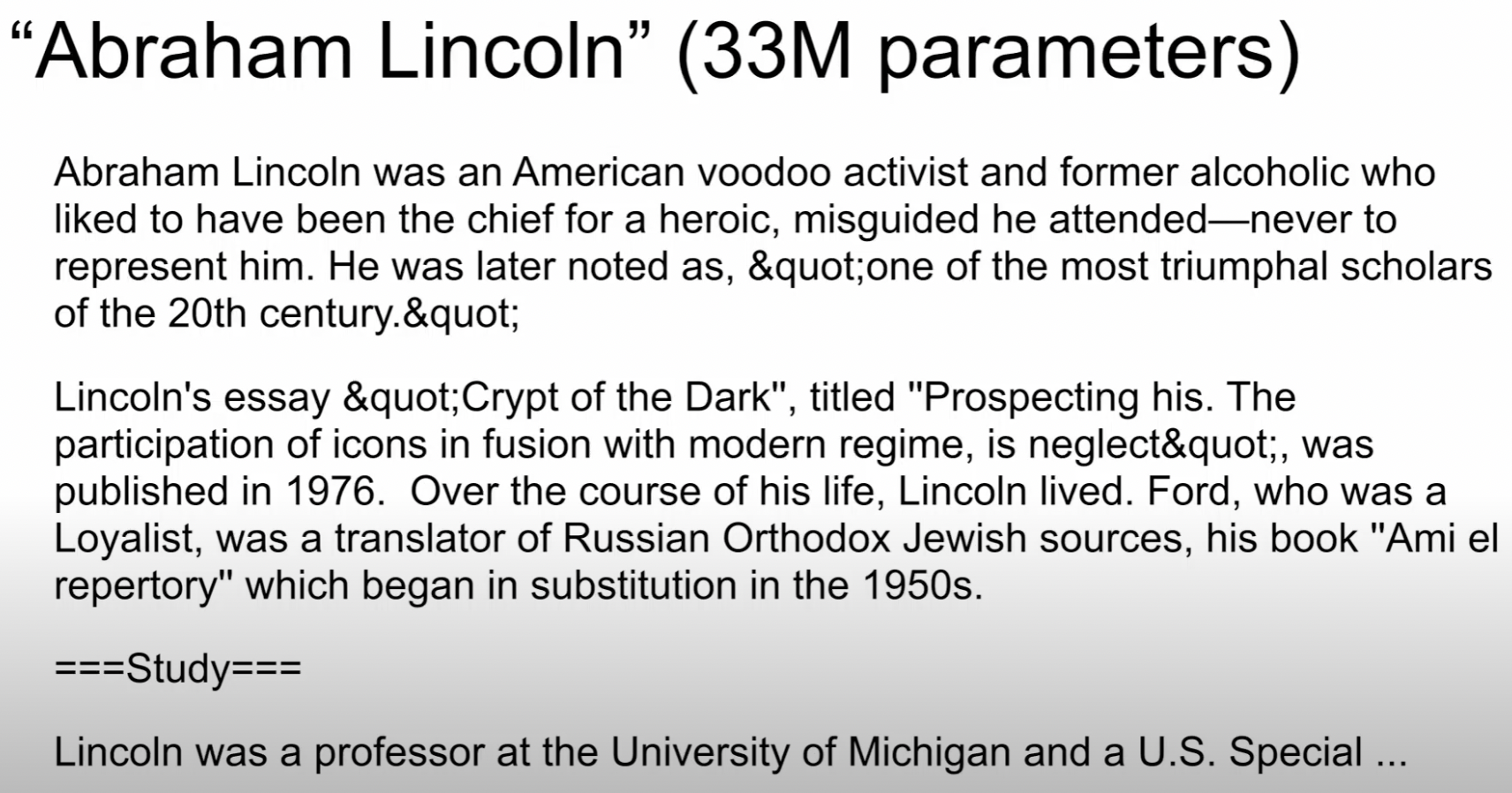

Meanwhile in NLP...

Large Language Models


Books, recipes, code, news, articles, dialogue

Demo

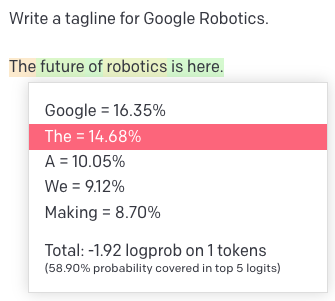

Quick Primer on Language Models

Tokens (inputs & outputs)

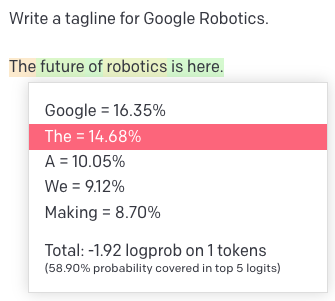

Transformers (models)

Attention Is All You Need, NeurIPS 2017

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, Illia Polosukhin


Pieces of words (BPE encoding)

per word: big, bigger, biggest, small, smaller, smallest

per token: big, er, est, small
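The merge procedure behind BPE can be sketched in a few lines: start from characters and repeatedly merge the most frequent adjacent pair. This is a simplified sketch (each word counted once, ties broken alphabetically for determinism, no end-of-word markers), not the exact tokenizer of any particular model.

```python
from collections import Counter

def pair_counts(corpus):
    """Count adjacent symbol pairs across all tokenized words."""
    counts = Counter()
    for symbols in corpus:
        for a, b in zip(symbols, symbols[1:]):
            counts[(a, b)] += 1
    return counts

def merge_pair(symbols, pair):
    """Replace every occurrence of `pair` in `symbols` with one merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return out

def learn_bpe(words, num_merges):
    """Learn an ordered list of merges, starting from single characters."""
    corpus = [list(w) for w in words]
    merges = []
    for _ in range(num_merges):
        counts = pair_counts(corpus)
        if not counts:
            break
        # Most frequent pair; ties broken by lexicographic order for determinism.
        best = min(counts, key=lambda p: (-counts[p], p))
        merges.append(best)
        corpus = [merge_pair(s, best) for s in corpus]
    return merges

def encode(word, merges):
    """Tokenize a word by replaying the learned merges in order."""
    symbols = list(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return symbols

words = ["big", "bigger", "biggest", "small", "smaller", "smallest"]
merges = learn_bpe(words, num_merges=6)
print(encode("smallest", merges))  # ['small', 'e', 's', 't']
print(encode("bigger", merges))    # ['big', 'g', 'e', 'r']
```

After six merges, `big` and `small` are single tokens while the suffixes are still split into characters; which further pieces emerge depends on the corpus and its word frequencies.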


Self-Attention
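Self-attention makes each token's new representation a weighted mix of all tokens' values, with weights softmax(QKᵀ/√d_k). A minimal single-head numpy sketch, with random projection matrices standing in for learned weights and an optional causal mask of the kind used in left-to-right language models:

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv, causal=False):
    """Single-head scaled dot-product self-attention over token embeddings X (n x d_model)."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    if causal:
        # Each token may attend only to itself and earlier tokens.
        future = np.triu(np.ones(scores.shape, dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
    weights = softmax(scores, axis=-1)
    return weights @ V, weights

rng = np.random.default_rng(0)
n_tokens, d_model, d_k = 4, 8, 8
X = rng.normal(size=(n_tokens, d_model))  # e.g. embeddings of 4 tokens
Wq, Wk, Wv = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(X, Wq, Wk, Wv, causal=True)
print(out.shape)              # (4, 8)
print(weights.sum(axis=-1))   # each row sums to 1
print(np.round(weights, 2))   # upper triangle is 0 under the causal mask
```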


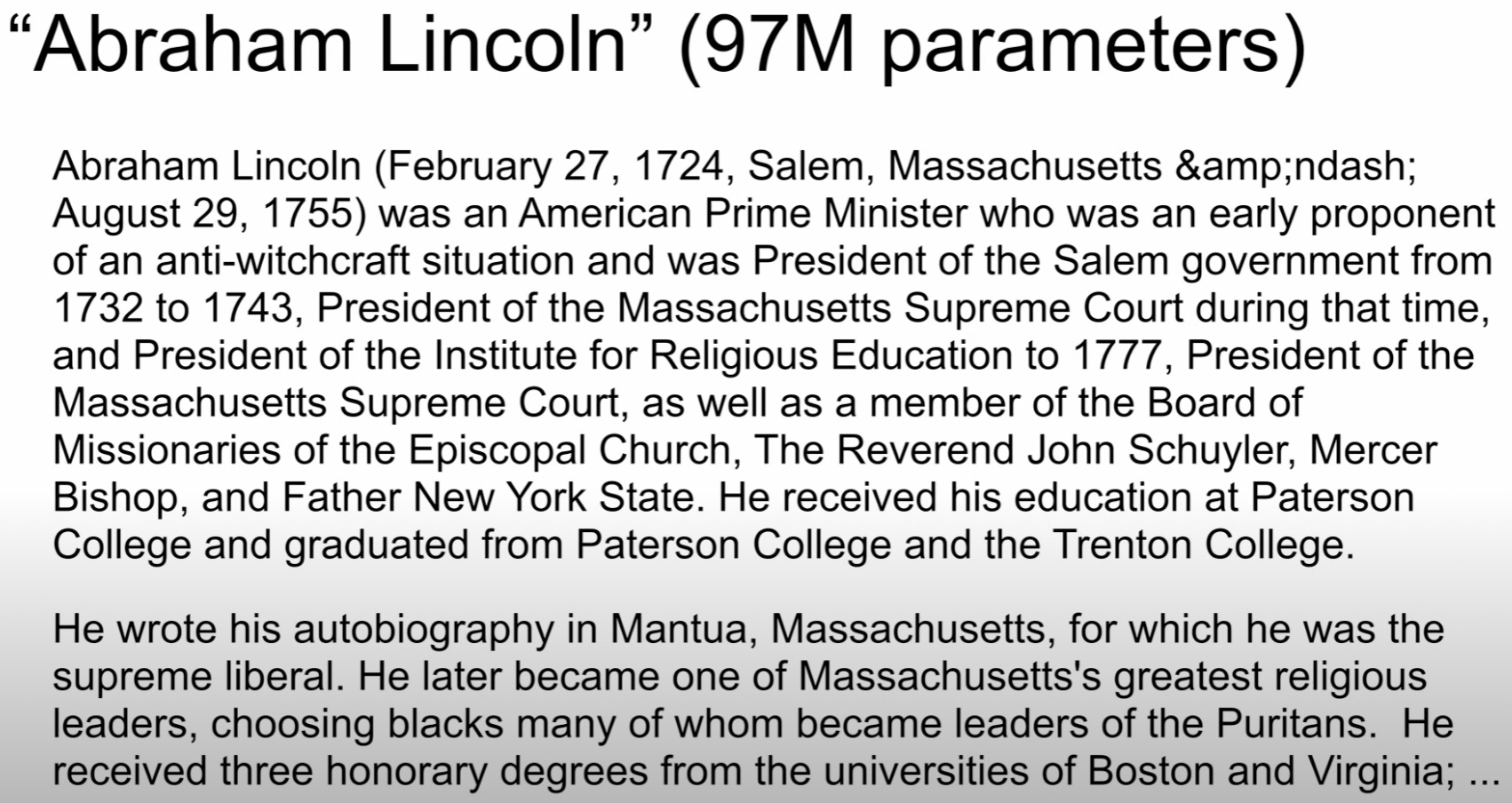

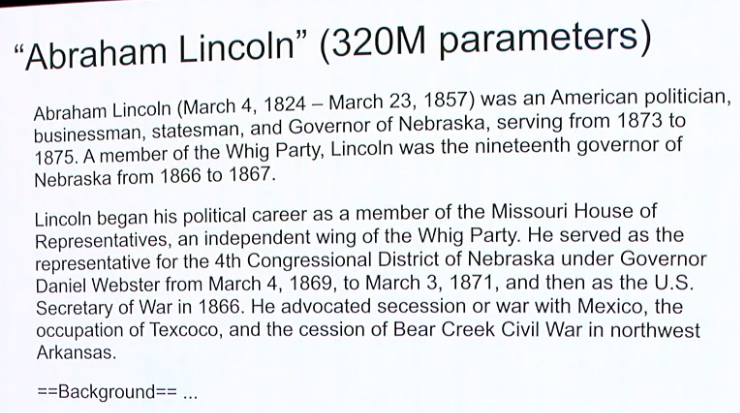

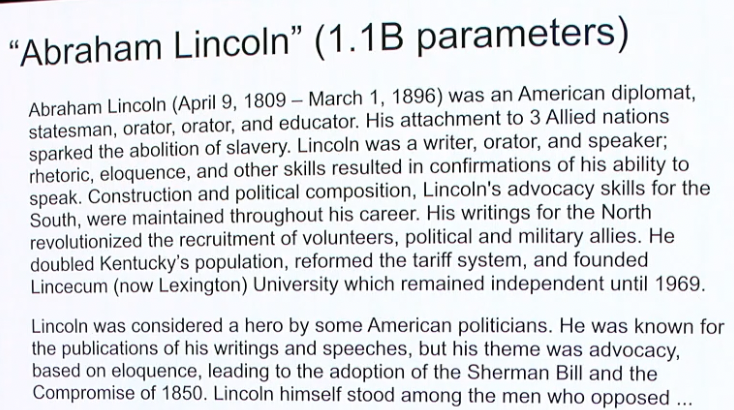

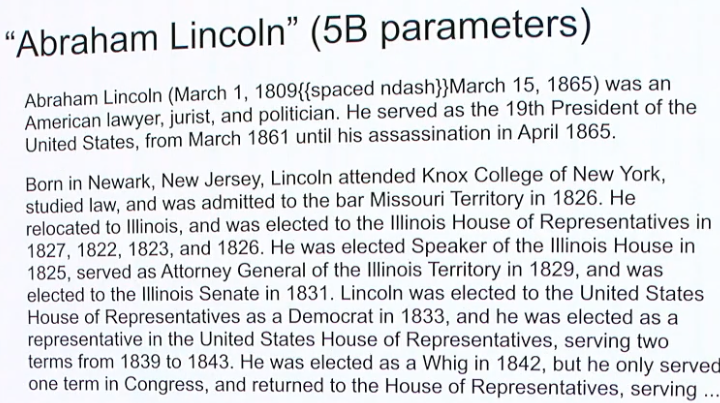

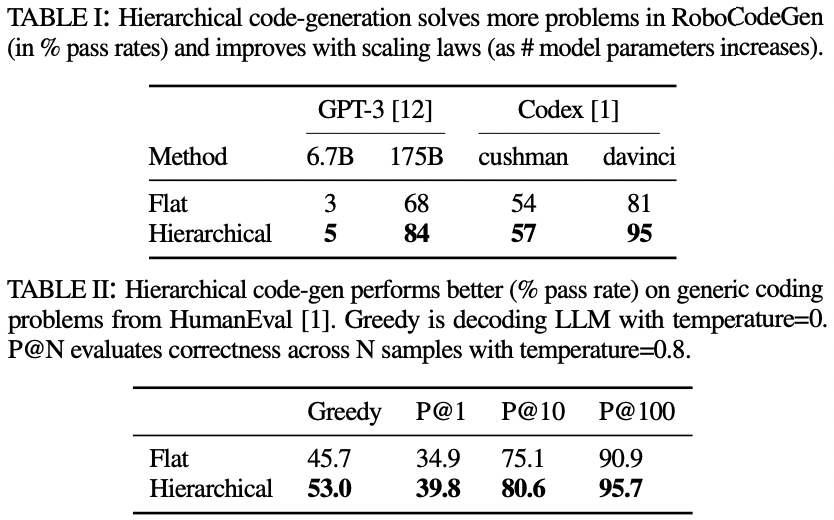

Bigger is Better

Neural Language Models: Bigger is Better, WeCNLP 2018

Noam Shazeer


Somewhere in the space of interpolation

Example?

Live robot planning

Large Language Models on Robots

Socratic Models: Composing Zero-Shot Multimodal Reasoning with Language

https://socraticmodels.github.io

Open research problem! But here's one way to do it...

Do As I Can, Not As I Say: Grounding Language in Robotic Affordances

https://say-can.github.io


Visual Language Model (CLIP, ALIGN, LiT, SimVLM, ViLD, MDETR)

Human input (task)


Large Language Model for Planning (e.g. SayCan)

Language-conditioned Policies
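SayCan's core selection step scores each available skill by combining how useful the language model judges it for the instruction with how likely it is to succeed from the current state (an affordance/value estimate), then executes the best one. A minimal sketch of that product rule; the skills and all scores below are made-up placeholders, not outputs of any real model.

```python
def select_skill(llm_scores: dict, affordances: dict) -> tuple:
    """Pick the skill maximizing p_LLM(skill | instruction) * p_afford(success | state)."""
    combined = {s: llm_scores[s] * affordances.get(s, 0.0) for s in llm_scores}
    best = max(combined, key=combined.get)
    return best, combined

# Hypothetical instruction: "I spilled my drink, can you help?"
llm_scores = {"pick up the sponge": 0.5, "find a sponge": 0.3, "go to the table": 0.2}
# Hypothetical affordances: no sponge is in view yet, so picking it up is unlikely to succeed.
affordances = {"pick up the sponge": 0.1, "find a sponge": 0.9, "go to the table": 0.8}

best, combined = select_skill(llm_scores, affordances)
print(best)  # find a sponge
```

The language model alone would prefer "pick up the sponge"; weighting by affordance grounds the choice in what the robot can actually do right now. In the full system this step is, roughly, repeated with the chosen skill appended to the plan.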


Live Demo

Language as a "state" representation

3D Reconstruction


scope of guarantees

safety & bias mitigation


Perception

Actions

Planning

semantic? ✓

compact? ✓

compositional? ✓

general? ✓

interpretable? ✓

What about language?

Ego4D

Limits of language as a "state" representation

- Loses spatial precision

- Highly multimodal (lots of different ways to say the same thing)

- Not as information-rich as in-domain representations (e.g. images)


- Only useful at the high level? What about control?
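A small illustration of the first limitation: rendering a scene as a sentence is compact and interpretable, but different geometric states can collapse to the same description. The scene format and the left/right rule below are hypothetical choices for the sketch.

```python
def describe(scene: dict) -> str:
    """Render object positions (x, y in meters) as a coarse language 'state'."""
    names = sorted(scene, key=lambda n: scene[n][0])  # order objects left to right by x
    parts = [f"the {a} is left of the {b}" for a, b in zip(names, names[1:])]
    return ", ".join(parts) + "."

scene_a = {"apple": (0.10, 0.30), "bowl": (0.25, 0.30)}
scene_b = {"apple": (0.02, 0.45), "bowl": (0.60, 0.10)}

print(describe(scene_a))                       # the apple is left of the bowl.
print(describe(scene_a) == describe(scene_b))  # True: the metric difference is lost
```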

Perception: Socratic Models (ALM + LLM + VLM)

Planning (LLM): SayCan, Inner Monologue (Wenlong Huang et al., 2022)

Control: Imitation? RL? Engineered?

Intuition and commonsense are not just high-level things


Applies to low-level behaviors too

Is the "dark matter" of robotics

- Spatial: "move a little bit to the left"

- Temporal: "move faster"

- Functional: "balance yourself"

Demo


Seems to be stored in the depths of language models... how do we extract it?


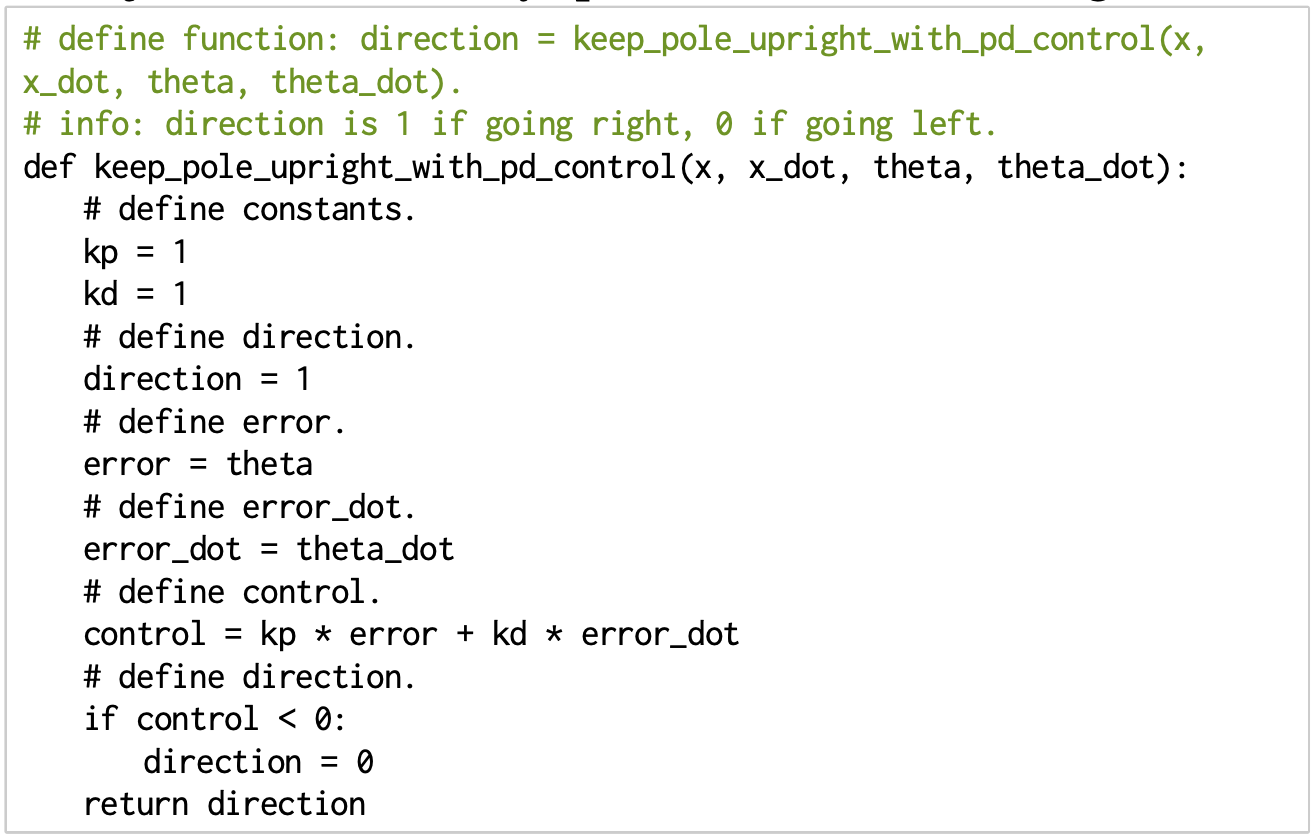

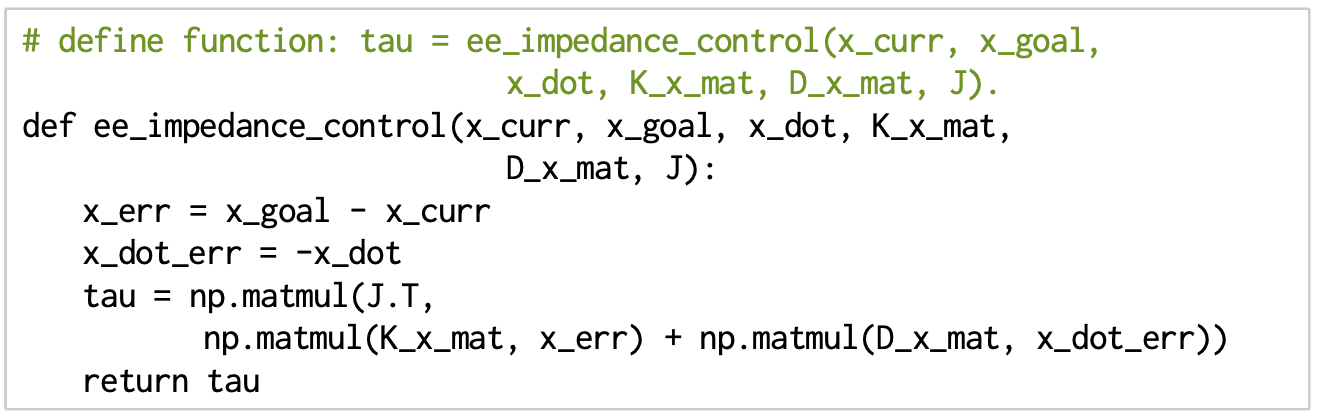

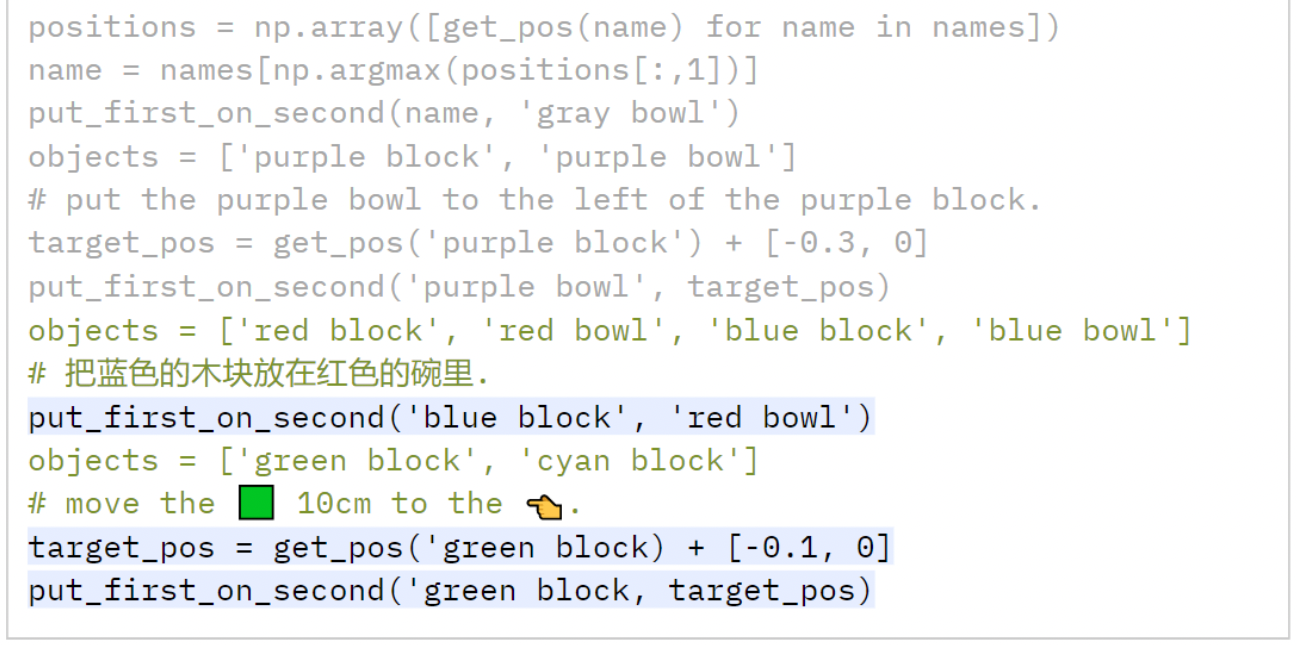

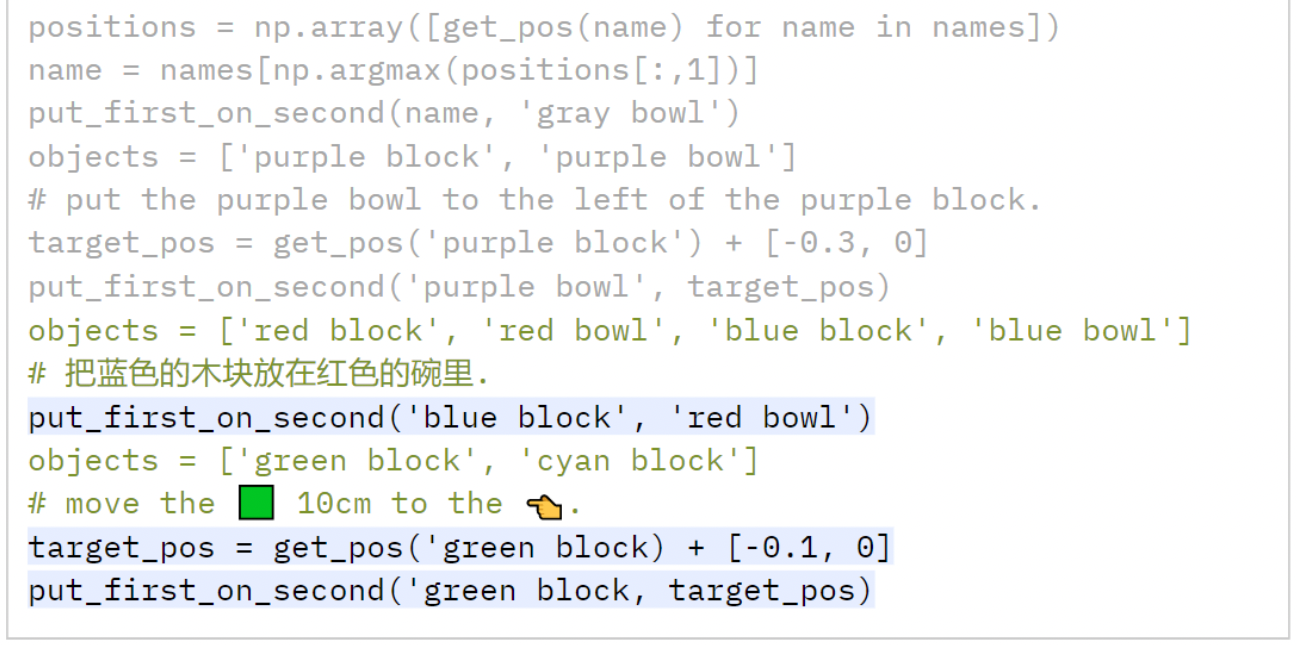

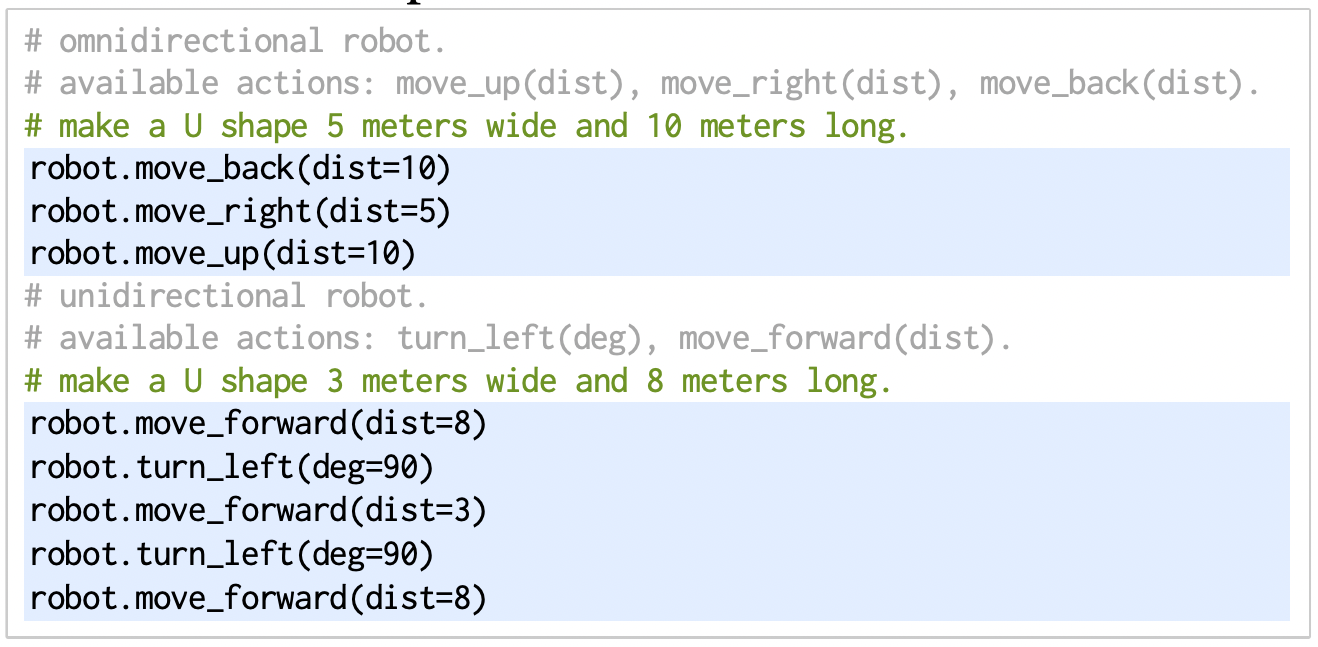

Language models can write code

Code as a medium to express low-level commonsense

Live Demo

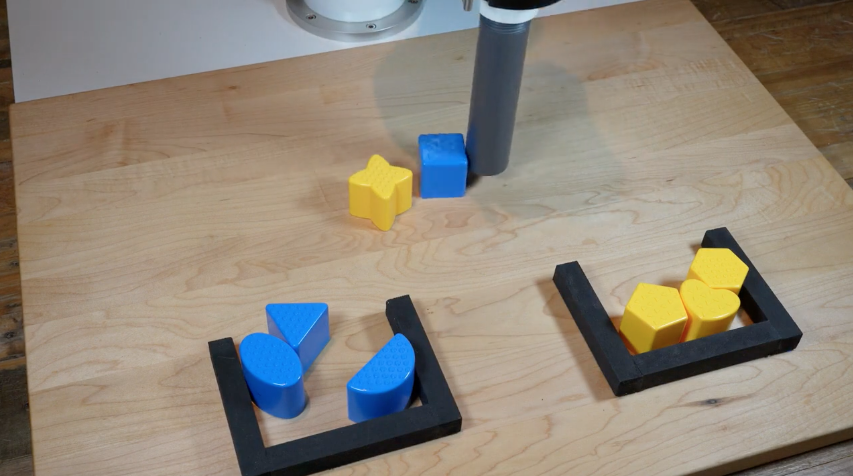

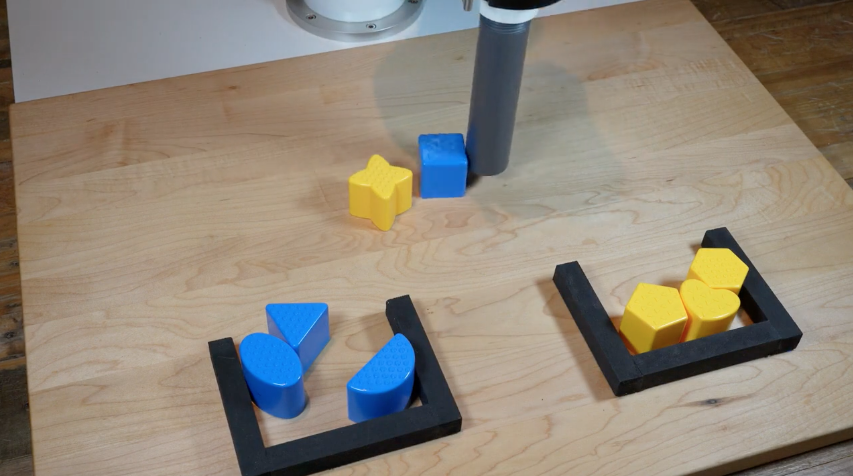


Jacky Liang, Wenlong Huang, Fei Xia, Peng Xu, Karol Hausman, Brian Ichter, Pete Florence, Andy Zeng

code-as-policies.github.io

Code as Policies: Language Model Programs for Embodied Control


use NumPy, SciPy code...
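To illustrate the idea, below is the kind of program a language model might write for "move a little bit to the left" and "move faster", using NumPy for the arithmetic. The robot interface (`FakeRobot`, `get_ee_pos`, `move_to`) and the specific numbers chosen for "a little bit" and "faster" are hypothetical, written here only so the snippet runs; they are not the Code as Policies API.

```python
import numpy as np

class FakeRobot:
    """Hypothetical stand-in for the robot API that generated code would call."""

    def __init__(self):
        self.ee_pos = np.array([0.40, 0.00, 0.20])  # end-effector position in meters
        self.speed = 0.10                           # commanded speed in m/s

    def get_ee_pos(self):
        return self.ee_pos.copy()

    def move_to(self, target, speed=None):
        # A real API would plan and execute a motion; here we just update the state.
        if speed is not None:
            self.speed = speed
        self.ee_pos = np.asarray(target, dtype=float)

robot = FakeRobot()

# move a little bit to the left.
# (assumes +y points to the robot's left and "a little bit" means 5 cm)
robot.move_to(robot.get_ee_pos() + np.array([0.0, 0.05, 0.0]))

# move faster.
# (assumes "faster" means 1.5x the current speed)
robot.move_to(robot.get_ee_pos(), speed=robot.speed * 1.5)

print(robot.get_ee_pos(), robot.speed)
```

The commonsense lives in the generated arithmetic (how far "a little bit" is, how much "faster" means), while the robot API stays fixed.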


- PD controllers

- impedance controllers
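PD controllers are the kind of low-level code a language model can write directly. A minimal sketch of a PD controller driving a 1-D point mass to a target; the gains, mass, and time step are illustrative assumptions.

```python
def simulate_pd(x0, target, kp=20.0, kd=8.0, mass=1.0, dt=0.01, steps=500):
    """Drive a 1-D point mass to `target` with u = kp * (target - x) - kd * v."""
    x, v = x0, 0.0
    for _ in range(steps):
        # Proportional term pulls toward the target; derivative term damps the motion.
        u = kp * (target - x) - kd * v
        a = u / mass
        v += a * dt  # semi-implicit Euler: update velocity first,
        x += v * dt  # then position with the new velocity
    return x, v

x_final, v_final = simulate_pd(x0=0.0, target=0.3)
print(round(x_final, 4), round(v_final, 4))
```

Read as a virtual spring (kp) and damper (kd) around a reference, the same law is roughly the 1-D core of an impedance controller.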


What is the foundation model for robotics?

How much data do we need?

Robot Learning

Language Models

Not a lot of robot data

Lots of Internet data

50 expert demos → 500 expert demos → 5000 expert demos

- Finding other sources of data (sim, YouTube)

- Improving data efficiency with prior knowledge

Scale alone might not be enough


adapted from Tomás Lozano-Pérez

Machine learning is a box

... but robotics is a line


Different embodiments, etc.

A possible middle ground


Embrace Compositionality


1. build boxes

2. compose them autonomously

Towards grounding everything in language

Language

Control

Vision

Tactile

Socratic Models: Composing Zero-Shot Multimodal Reasoning with Language

https://socraticmodels.github.io

"Language" as the glue for robots & AI

Language

Perception

Planning

Control

Socratic Models: Composing Zero-Shot Multimodal Reasoning with Language

https://socraticmodels.github.io
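A minimal sketch of language as the interface between models, in the style the Socratic Models work describes: a CLIP-style visual-language model scores candidate object names against an image embedding, the winners are written out as text, and that text becomes part of a language-model prompt. The embeddings are made-up toy vectors and the prompt format is hypothetical; no real model is called.

```python
import numpy as np

def zero_shot_labels(image_emb, text_embs, labels, temperature=0.01, threshold=0.2):
    """CLIP-style zero-shot scoring: softmax over cosine similarities / temperature."""
    img = image_emb / np.linalg.norm(image_emb)
    txt = text_embs / np.linalg.norm(text_embs, axis=1, keepdims=True)
    logits = txt @ img / temperature
    probs = np.exp(logits - logits.max())
    probs /= probs.sum()
    return [label for label, p in zip(labels, probs) if p > threshold]

# Hypothetical toy embeddings: one axis per object name.
labels = ["apple", "bowl", "sponge", "cup"]
text_embs = np.eye(4)
image_emb = np.array([1.0, 1.0, 0.0, 0.1])  # "image" containing an apple and a bowl

objects = zero_shot_labels(image_emb, text_embs, labels)
scene_text = "Objects on the table: " + ", ".join(objects) + "."
prompt = f"{scene_text}\nTask: put the apple in the bowl.\nPlan:"
print(prompt)
```

The only thing passed from the perception model to the planner is plain text, so either side can be swapped without retraining the other.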

We have some reason to believe that "the structure of language is the structure of generalization"

To understand language is to understand generalization

https://evjang.com/2021/12/17/lang-generalization.html

Sapir–Whorf hypothesis

Towards grounding everything in language

Language

Perception

Planning

Control

Humans


Not just for general robots, but for human-centered intelligent machines!

Things I wish my robot had...

- Grounding safety and risk in language

- Compositional guarantees on safety and generalization?

- Embed more LLM prior knowledge into control?

- PAC-Bayes on LLM planners?

Thank you!

Pete Florence

Adrian Wong

Johnny Lee

Vikas Sindhwani

Stefan Welker

Vincent Vanhoucke

Kevin Zakka

Michael Ryoo

Maria Attarian

Brian Ichter

Krzysztof Choromanski

Federico Tombari

Jacky Liang

Aveek Purohit

Wenlong Huang

Fei Xia

Peng Xu

Karol Hausman

and many others!

Amazon Picking Challenge

arc.cs.princeton.edu

Team MIT-Princeton

excel at simple things, adapt to hard things

How to endow robots with "intuition" and "commonsense"?

2022-Princeton-Talk

By Andy Zeng
