Asynchronous JavaScript

Synchronous Execution

A particular case of asynchronous execution, where the process waits for every single thing, even when the things are independent.

The synchronous case

- I want something

- I wait

- I get it

- I move to the next thing

- And it forces me to ask for one thing at a time

CPU Activity

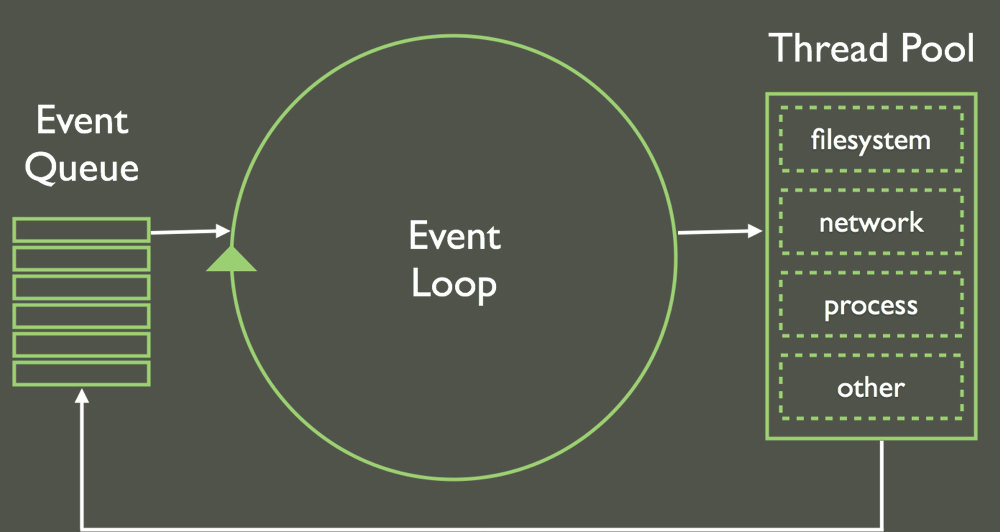

Asynchronous Execution

It's not magic.

It's a "simple" queue

What you basically do

- You provide callbacks

- The synchronous part is only about pushing the callback as a reference in memory

- The bridge between now and the future is the set of closure variables and context retained by the provided callback

- Your callback will be called, or not
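The points above can be sketched with `setTimeout` standing in for any asynchronous API. The names `order` and `schedule` are illustrative and do not come from the slides. The synchronous part only registers the callback; the closure keeps `label` alive until the queue eventually calls it.

```javascript
// Records what happens, in order.
const order = [];

function schedule(label) {
  // Synchronous part: hand a callback reference to the runtime.
  // The closure retains `label` until the callback is (maybe) called.
  setTimeout(() => {
    order.push('callback:' + label);
  }, 0);
  order.push('scheduled:' + label);
}

schedule('a');
order.push('sync code done');
// Here `order` is ['scheduled:a', 'sync code done'].
// 'callback:a' is appended only once the current call stack is empty.
```

Nothing in the callback runs until the synchronous code has finished, which is why the event loop can stay single-threaded.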

Is it single-threaded?

- One event loop is single-threaded.

- Clusters are not: each worker is a separate process with its own event loop

- But workers do not share memory natively

(nevertheless you can send data from process to process)

I - Basic use

- For now you don't chain anything

Event emitter

import {EventEmitter} from 'events';

let ee = new EventEmitter();

ee.on('data', ({prop}) => {
  // ... do something with `prop`
});

- Event emitters can emit things
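A minimal sketch of both sides of an emitter, assuming Node.js and its built-in `events` module. It uses `require` so it runs as a plain script; the `received` and `handled` names are illustrative.

```javascript
const { EventEmitter } = require('events');

const ee = new EventEmitter();
const received = [];

// Consumer: register a listener before anything is emitted.
ee.on('data', ({ prop }) => {
  received.push(prop);
});

// Producer: `emit` calls the registered listeners synchronously, in order.
ee.emit('data', { prop: 1 });
ee.emit('data', { prop: 2 });

// An event nobody listens to is simply dropped; `emit` then returns false.
const handled = ee.emit('unknown', { prop: 3 });

console.log(received, handled);
```

Note that the emitter itself is not asynchronous: the asynchrony comes from whoever decides when to call `emit`.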

Callback style

import fs from 'fs';

fs.readFile('path/to/file.ext', (err, data) => {
  // ... `err` not null means something went wrong
  // ... otherwise consider `data`
});

- Emitted things can make a whole, which eventually errors or completes

- The classical Node.js callback style can be used
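To make the error-first convention concrete, here is a sketch of a producer that follows it. `parseJsonLater` is a hypothetical function written for this example, not a Node.js API.

```javascript
// Hypothetical async API following the Node.js error-first convention.
function parseJsonLater(text, callback) {
  // setTimeout keeps the call asynchronous, on success and on failure.
  setTimeout(() => {
    let value;
    try {
      value = JSON.parse(text);
    } catch (err) {
      callback(err); // failure: error first, no data
      return;
    }
    callback(null, value); // success: `null` error, then the data
  }, 0);
}

const results = [];

parseJsonLater('{"ok": true}', (err, data) => {
  results.push(err ? 'error' : data.ok);
});

parseJsonLater('not json', (err, data) => {
  results.push(err ? 'error' : data.ok);
});
```

The callback is invoked outside the `try` block on purpose, so that an exception thrown by the consumer is not mistaken for a parsing failure.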


II - Advanced use

- You start chaining things and manipulating flows

- More generally, it's all about having

  - consumers

  - producers

  - consumers of producers

  - and things in between

Utilities for callback APIs

The async library provides a complete set of utilities for handling callback APIs

Promise

import fs from 'fs';

// wrap an error-first callback API into a function returning a promise
let promisify = (f) => (...args) => new Promise((resolve, reject) => {
  // pad or truncate `args` so that the callback lands in last position
  args.length = f.length - 1;
  f(...args, (err, data) => {
    err ? reject(err) : resolve(data);
  });
});

let readFile = promisify(fs.readFile);

readFile('path/to/file')
  .then(
    (data) => {
      // ... do something with `data`
    },
    (err) => {
      // ... do something with `err`
    }
  );

- You can chain handlers on resolution or rejection

- Those handlers can return other promises or values
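A short sketch of what chaining means in practice. `delay` is a hypothetical helper introduced for this example; each handler returns a value, returns a promise, or throws.

```javascript
// Hypothetical async step: resolves with `value` after `ms` milliseconds.
const delay = (ms, value) =>
  new Promise((resolve) => setTimeout(() => resolve(value), ms));

const chained = delay(10, 1)
  .then((n) => n + 1)             // returns a plain value: 2
  .then((n) => delay(10, n * 10)) // returns a promise: the chain waits for 20
  .then((n) => {
    throw new Error('failed at ' + n); // throwing rejects the chain
  })
  .then(
    () => 'skipped',                     // not called: the chain is rejected
    (err) => 'recovered: ' + err.message // a rejection handler can recover
  );

chained.then((result) => console.log(result)); // recovered: failed at 20
```

Because the rejection handler returns a value, the promise it produces is fulfilled again and the chain can carry on.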


- Common pitfalls with promises:

https://pouchdb.com/2015/05/18/we-have-a-problem-with-promises.html

Streams

import fs from 'fs';
import zlib from 'zlib';

// readable stream
let r = fs.createReadStream('file.txt');

// writable stream
let w = fs.createWriteStream('file.txt.gz');

// duplex (transform) stream
let z = zlib.createGzip();

r.pipe(z).pipe(w);

- Emitted things can be readable streams piped into writable streams


import {Transform} from 'stream';

class OddUpper extends Transform {
  _transform(chunk, enc, cb) {
    // visit every other byte, starting with the first one
    for (let i = 0, length = chunk.length; i < length; i += 2) {
      let code = chunk.readUInt8(i);
      // only ASCII lowercase letters (a-z) are uppercased by subtracting 32
      if (code >= 97 && code <= 122) {
        chunk.writeUInt8(code - 32, i);
      }
    }
    this.push(chunk);
    cb();
  }
}

let oddUpper = new OddUpper();
oddUpper.pipe(process.stdout);
oddUpper.write('hello world\n');
oddUpper.write('another line');
oddUpper.end();

// output:
// HeLlO WoRlD
// AnOtHeR LiNe

- You can operate at the chunk buffer level


- Implement your own stream.Transform:

http://codewinds.com/blog/2013-08-20-nodejs-transform-streams.html

- Implement your own stream.Duplex:

http://codewinds.com/blog/2013-08-31-nodejs-duplex-streams.html

Discrete FRP with observables

let observable = Rx.Observable.create((observer) => {

observer.next(1);

observer.next(2);

observer.next(3);

setTimeout(() => {

observer.next(4);

observer.complete();

}, 1000);

});

observable.subscribe({

next: (x) => { /* ... work with `x` */ },

error: (err) => { /* ... work with the error `err` */ },

complete: () => { /* ... it has finished */ }

});

- FRP stands for Functional Reactive Programming

- Emitting things is notifying an observer

- Subscribing to an observable lets the observer call your subscription methods

- Subscribing is active, subscription is passive
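The "subscribing is active" point can be illustrated with a hand-rolled stand-in for an observable. `createObservable` is an assumption made for this sketch and is not the RxJS API; it only stores the producer and runs it on subscription.

```javascript
// Hand-rolled stand-in for an observable (not the RxJS API):
// it only stores the producer function.
function createObservable(producer) {
  return {
    subscribe(observer) {
      // Subscribing is the active part: it runs the producer,
      // which then calls the observer's methods.
      producer(observer);
    },
  };
}

let producerRuns = 0;
const numbers = createObservable((observer) => {
  producerRuns += 1;
  observer.next(1);
  observer.next(2);
  observer.complete();
});

// Creating the observable has not run anything yet.
const runsBeforeSubscribe = producerRuns;

const log = [];
numbers.subscribe({
  next: (x) => log.push(x),
  error: (err) => log.push('error'),
  complete: () => log.push('done'),
});

console.log(runsBeforeSubscribe, producerRuns, log);
```

Real implementations add unsubscription, error handling, and operators on top of this idea.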


Rx.Observable.fromEvent(input, 'input')

.map(({target: { value }}) => value) // extract the value

.filter(({length}) => length > 2) // filter by length

.debounceTime(400) // debounce

.distinctUntilChanged() // produce only on change

.switchMap(asyncSearch) // switch producer on each consumption

.subscribe(

(results) => {

// ... work with `results`

},

(err) => {

// ... handle `err`

}

  );

- Implementations come with full sets of operators, which are powerful when dealing with async flows

- Promises are only a particular case of it


- Learn FRP with RxJS:

http://reactivex.io/documentation/operators.html

- Reference documentation for the latest API changes in the RxJS 5 beta versions:

http://reactivex.io/rxjs

- Play with interactive diagrams of Rx Observables

http://rxmarbles.com

- Other attempts:

http://jsbin.com/leziqoy/edit?html,js,output

- Netflix using RxJS:

http://fr.slideshare.net/RyanAnklam/rethink-async-with-rxjs


- Principles of reactive programming

https://github.com/iirvine/principles-of-reactive-programming

Synchronous Asynchronous JavaScript

- Synchronous syntax has the strength of being free of surprises

- But most of the time you get things sequentially when you could have gotten them in parallel

- With the combination of generators and wrappers, you get the ability to await asynchronous processes on demand (and those can run in parallel)

Generators

- Known as asymmetric coroutines

- Wrappers can control output to resume input with asynchronous results

// `yield` and `return` in a generator create the single async I/O

// sequence between the iterator and the wrapper

let getMergedData = co.wrap(function* (...urls) {

let data;

try {

// fetch in parallel

data = yield urls.map(asyncFetch);

} catch(err) {

// ... handle `err`

}

return asyncDataMerge(...data);

});

getMergedData(url1, url2, url3).then((mergedData) => {

// ... work with `mergedData`

});
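How a wrapper resumes a generator with asynchronous results can be sketched in a few lines. `run` below is a simplified stand-in written for this example, not the implementation of co, and `asyncDouble` is a hypothetical async operation.

```javascript
// Minimal stand-in for a wrapper like `co` (a sketch, not co's implementation).
// It drives the generator: each yielded promise is waited for, and its result
// is sent back in as the value of the `yield` expression.
function run(generatorFn, ...args) {
  return new Promise((resolve, reject) => {
    const iterator = generatorFn(...args);

    function step(method, input) {
      let result;
      try {
        result = iterator[method](input); // resume the generator
      } catch (err) {
        reject(err); // error not caught inside the generator
        return;
      }
      if (result.done) {
        resolve(result.value); // `return` value of the generator
        return;
      }
      Promise.resolve(result.value).then(
        (value) => step('next', value), // resume with the async result
        (err) => step('throw', err)     // or throw it at the `yield`
      );
    }

    step('next', undefined);
  });
}

// Hypothetical async operation used for the demonstration.
const asyncDouble = (n) =>
  n < 0 ? Promise.reject(new Error('negative')) : Promise.resolve(n * 2);

const total = run(function* (a, b) {
  const x = yield asyncDouble(a);
  let y;
  try {
    y = yield asyncDouble(b);
  } catch (err) {
    y = 0; // rejections surface as ordinary exceptions
  }
  return x + y;
}, 3, -1);

total.then((value) => console.log(value)); // 6
```

The generator only describes the sequence; the wrapper owns the waiting, which is why the body reads like synchronous code.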


- You can delegate generation natively

let sequentialFetch = function* (urls) {
  let results = [];
  for (let url of urls) {
    // the wrapper resumes each `yield` with the fetched data
    results.push(yield asyncFetch(url));
  }
  // the returned array becomes the value of `yield*` in the caller
  return results;
};

let getMergedData = co.wrap(function* (...urls) {
  let data;
  try {
    // delegate to a waterfall fetcher
    data = yield* sequentialFetch(urls);
  } catch(err) {
    // ... handle `err`
  }
  return asyncDataMerge(...data);
});

getMergedData(url1, url2, url3).then((mergedData) => {
  // ... work with `mergedData`
});

Async/await

- An async function awaits other async executions

- It basically replaces generators used with wrappers like co

// you get less control than with generators
// but it is native since ES2017 and needs no external lib
let getMergedData = async function(...urls) {
  let data;
  try {
    // fetch in parallel
    data = await Promise.all(urls.map(asyncFetch));
  } catch(err) {
    // ... handle `err`
  }
  return await asyncDataMerge(...data);
};

getMergedData(url1, url2, url3).then((mergedData) => {

// ... work with `mergedData`

});
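The difference between awaiting sequentially and awaiting in parallel can be measured with a small sketch. `delay`, `sequential`, and `parallel` are hypothetical names for this example, and the timings are approximate.

```javascript
// Hypothetical async operation: resolves with `value` after `ms` milliseconds.
const delay = (ms, value) =>
  new Promise((resolve) => setTimeout(() => resolve(value), ms));

async function sequential() {
  const started = Date.now();
  const a = await delay(50, 'a'); // waits about 50 ms
  const b = await delay(50, 'b'); // then waits about another 50 ms
  return { values: [a, b], elapsed: Date.now() - started };
}

async function parallel() {
  const started = Date.now();
  // Both timers are started before anything is awaited.
  const values = await Promise.all([delay(50, 'a'), delay(50, 'b')]);
  return { values, elapsed: Date.now() - started };
}

const timings = Promise.all([sequential(), parallel()]);
timings.then(([seq, par]) => {
  console.log('sequential:', seq.elapsed, 'ms, parallel:', par.elapsed, 'ms');
});
```

Both functions return the same values; only the moment at which the operations are started differs.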

CSP

- Stands for Communicating Sequential Processes

- Makes use of channels

- JS implementations try to mimic goroutines

function* player(name, table) {

while (true) {

var ball = yield csp.take(table);

if (ball === csp.CLOSED) {

console.log(name + ": table's gone");

return;

}

ball.hits += 1;

console.log(name + " " + ball.hits);

yield csp.timeout(100);

yield csp.put(table, ball);

}

}

// goroutine power but in the same thread

csp.go(function* () {

var table = csp.chan();

csp.go(player, ["ping", table]);

csp.go(player, ["pong", table]);

yield csp.put(table, {hits: 0});

yield csp.timeout(1000);

table.close();

});
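The put/take rendezvous behind channels can also be sketched without a library, using promises and async functions. `chan`, `producer`, and `consumer` below are hand-rolled for this illustration and are not the js-csp API; the channel is unbuffered and a `DONE` sentinel replaces closing.

```javascript
// Hand-rolled unbuffered channel (an illustration, not the js-csp API).
function chan() {
  const takers = [];  // consumers waiting for a value
  const putters = []; // producers waiting for a consumer
  return {
    put(value) {
      return new Promise((resolve) => {
        if (takers.length > 0) {
          takers.shift()(value); // hand over to a waiting consumer
          resolve();
        } else {
          putters.push({ value, resolve }); // park until someone takes
        }
      });
    },
    take() {
      return new Promise((resolve) => {
        if (putters.length > 0) {
          const putter = putters.shift();
          putter.resolve(); // unblock the producer
          resolve(putter.value);
        } else {
          takers.push(resolve); // park until someone puts
        }
      });
    },
  };
}

const DONE = Symbol('done'); // sentinel standing in for a closed channel

async function producer(ch) {
  for (const n of [1, 2, 3]) {
    await ch.put(n); // resumes only once a consumer took the value
  }
  await ch.put(DONE);
}

async function consumer(ch) {
  const received = [];
  while (true) {
    const value = await ch.take();
    if (value === DONE) return received;
    received.push(value);
  }
}

const ch = chan();
const finished = Promise.all([producer(ch), consumer(ch)]);
finished.then(([, received]) => console.log(received));
```

The two processes never reference each other: they only share the channel, which is the decoupling CSP is after.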

- Learn CSP with js-csp:

https://github.com/ubolonton/js-csp

http://jlongster.com/Taming-the-Asynchronous-Beast-with-CSP-in-JavaScript

- Learn CSP with golang:

http://talks.golang.org/2012/concurrency.slide

http://talks.golang.org/2013/advconc.slide

- Goroutine vs single-threaded CSP performance comparison:

https://www.npmjs.com/package/co-channel#differences-between-javascript-coroutine-and-goroutine

What should I use?

- You need to implement a lifecycle made of logical piping relations: learn FRP and promises

- Those pipes can be greatly optimized when you consider chunks: learn streams

- You need to implement independent processes living concurrently: learn CSP

- You need to implement procedural computations mixing sync and async steps: learn wrapped generators or async/await and promises

- You need to do simple things on simple events: learn event emitters

- You need to sleep: go to bed

FRP vs CSP?

- Both can separate

  - producing

  - consuming

  - the medium in between

- CSP has this explicit way of saying: "go do this somewhere else, and, yeah, take those channels with you"

- Pure functions passed to FRP operators are also jobs that can be done somewhere else, bringing an observable and an observer with them

- So again, prefer CSP for independent processes, FRP for logical pipes

- And why not combine them, with transducers and immutables (looks like the path to ClojureScript)

III - Optimizing and decoupling your async code

Ensure lazy execution

- A synchronous collection lets you write transformation chains executed immediately

- An asynchronous collection forces you to think of the transformation pipeline as a whole, which is executed lazily as data is consumed

- This lazy execution can also be used with synchronous collections to avoid looping over the elements multiple times (better performance)

- Basically, even for synchronous collections, it's better to think "lazy first"
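A sketch of "lazy first" on a synchronous collection, using generators. The helper names (`map`, `filter`, `take`) and the call counter are illustrative; the point is how many times the transformation functions run in each style.

```javascript
// Counts how many times a transformation function is called.
let calls = 0;
const double = (n) => { calls += 1; return n * 2; };
const isMultipleOfFour = (n) => { calls += 1; return n % 4 === 0; };

const input = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10];

// Eager: each step walks the whole array and builds an intermediate one.
const eager = input.map(double).filter(isMultipleOfFour).slice(0, 2);
const eagerCalls = calls; // 10 map calls + 10 filter calls

// Lazy: generators pull one element at a time through the whole pipeline.
function* map(fn, iterable) {
  for (const item of iterable) yield fn(item);
}
function* filter(fn, iterable) {
  for (const item of iterable) if (fn(item)) yield item;
}
function* take(count, iterable) {
  if (count <= 0) return;
  let taken = 0;
  for (const item of iterable) {
    yield item;
    taken += 1;
    if (taken >= count) return;
  }
}

calls = 0;
const lazy = [...take(2, filter(isMultipleOfFour, map(double, input)))];
const lazyCalls = calls; // stops as soon as two results are found

console.log(eager, eagerCalls, lazy, lazyCalls); // [ 4, 8 ] 20 [ 4, 8 ] 8
```

Both versions produce the same result, but the lazy pipeline stops after the fourth input element and allocates no intermediate arrays.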

Decouple processes with transducers

- A transform chain is made of reducers

- Map, filter, etc. can all be expressed as reducers

- A transducer transforms a reducer into another reducer, and transducers compose with each other

- It's decoupled from input and output so that it can be used with sync and async collections (arrays, streams, observables, channels)

- A transducer is described by its init, step, and result methods
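The idea can be sketched with plain functions. This is a simplification that keeps only the step function of each reducer; the init and result parts mentioned above are left out, and the names `mapping`, `filtering`, and `compose` are illustrative rather than taken from a specific library.

```javascript
// A reducer: (accumulator, item) => accumulator.
const append = (array, item) => { array.push(item); return array; };
const sum = (total, item) => total + item;

// A transducer takes a reducer and returns a new reducer.
const mapping = (fn) => (reducer) => (acc, item) => reducer(acc, fn(item));
const filtering = (predicate) => (reducer) => (acc, item) =>
  predicate(item) ? reducer(acc, item) : acc;

// Transducers compose like ordinary functions.
const compose = (...fns) => (x) => fns.reduceRight((value, fn) => fn(value), x);

// Keep even numbers, then square them. Nothing here knows about arrays.
const evenSquares = compose(
  filtering((n) => n % 2 === 0),
  mapping((n) => n * n)
);

const input = [1, 2, 3, 4, 5, 6];

// The same transformation, plugged into two different final reducers.
const asArray = input.reduce(evenSquares(append), []);
const asSum = input.reduce(evenSquares(sum), 0);

console.log(asArray, asSum); // [ 4, 16, 36 ] 56
```

Since `evenSquares` never touches the collection itself, the same definition could in principle drive a stream, an observable, or a channel, as long as something feeds items into the resulting reducer.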

Transducers

- Origins and how it works:

http://jlongster.com/Transducers.js--A-JavaScript-Library-for-Transformation-of-Data

- Benchmark:

http://jlongster.com/Transducers.js-Round-2-with-Benchmarks

- transducers-js + RxJS:

https://github.com/Reactive-Extensions/RxJS/blob/master/doc/gettingstarted/transducers.md

- RxJS transduce bridge:

https://github.com/Reactive-Extensions/RxJS/blob/master/src/core/linq/observable/transduce.js

- "Transducers" by Rich Hickey:

https://www.youtube.com/watch?v=6mTbuzafcII

Asynchronous JavaScript

By Alexis Tondelier
