Dynamo: Amazon’s Highly Available Key-value Store

Colin King

CMSC818E

November 7, 2017

Motivation

- Amazon was pushing the limits with Oracle's Enterprise Edition

- Unable to sustain availability, scalability, and performance needs

- 70% of Amazon's operations were K/V, with no need for an RDBMS

- Availability >> Strong consistency

- Reliability depends on persistent state

- Created Dynamo: a distributed, scalable, highly available, eventually-consistent K-V store

- Mixes together a variety of previously studied concepts

- Question: Can an eventually consistent database work in production under significant load?

Principles

- Highly available: 99.9% of requests complete in < 300 ms

- Incrementally scalable: Ability to add new nodes, one at a time

- Symmetry / Decentralization: No SPOF, all nodes share the same role

- Optimistic Replication: "always writeable" system, postpone conflict resolution (CR)

- Heterogeneity: Support nodes of varying capabilities

Data Model

- Intentionally barebones

- No transactions or relational schema

- K-V systems are "embarrassingly parallel"

- get(key)

- key: arbitrary data, MD5-hashed to a 128-bit id

- value: data blob, opaque to Dynamo

- may return multiple objects with conflicting versions

- rare: 99.94% of requests return a single version

- put(key, context, object)

- context: opaque to client

- Carries version metadata used for client-side conflict resolution (CR)
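To make the key mapping concrete, here is a minimal sketch of hashing a key to a 128-bit identifier with MD5. The function name `key_to_id` and the sample key are illustrative assumptions, not part of Dynamo's interface.

```python
import hashlib


def key_to_id(key: bytes) -> int:
    """Map an arbitrary byte-string key to a 128-bit integer identifier."""
    digest = hashlib.md5(key).digest()  # MD5 always yields 16 bytes (128 bits)
    return int.from_bytes(digest, "big")


key_id = key_to_id(b"cart:alice")  # hypothetical key
print(f"{key_id:032x}")  # every node computes the same id for the same key
```

Because the hash is deterministic, any node can compute where a key lives without consulting a central directory.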

Key Design Features

- P2P System

- Consistent Hashing

- Virtual Nodes

- Preference List Replication

- Failure Detection

- Temporary: Hinted Handoff

- Permanent: Gossip Protocol

- Conflict Resolution

- Vector Clocks

- Replica Synchronization

- Configurability

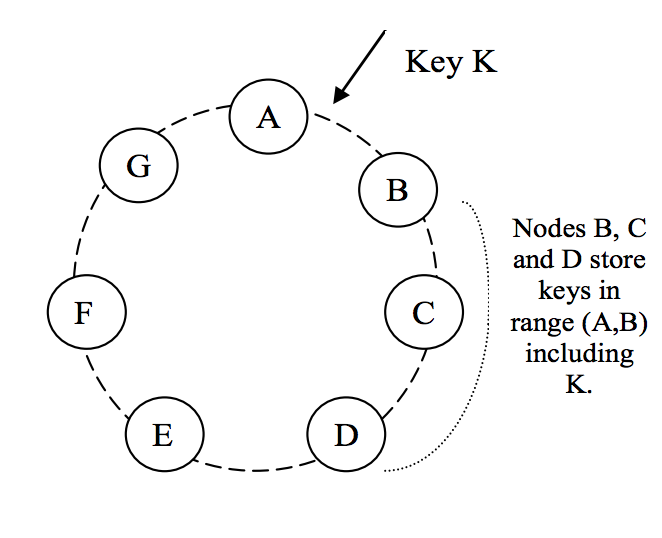

P2P: Consistent Hashing

- Need to be able to add and remove nodes without downtime

- Solution: Map each node to a range in the hash's output space

- Each node is assigned a random location on the ring

- Each node handles the keys that fall between its predecessor's position and its own
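A minimal sketch of the ring lookup, following the Dynamo paper's convention that a key belongs to the first node found clockwise from the key's position. The `Ring` class and its method names are hypothetical, and hashing the node name stands in for random placement.

```python
import bisect
import hashlib


def ring_hash(name: str) -> int:
    return int.from_bytes(hashlib.md5(name.encode()).digest(), "big")


class Ring:
    def __init__(self):
        self.positions = []  # sorted node positions on the ring
        self.node_at = {}    # position -> node name

    def add_node(self, node, position=None):
        # Assumption: position defaults to the hash of the node's name,
        # standing in for a randomly assigned location.
        position = ring_hash(node) if position is None else position
        bisect.insort(self.positions, position)
        self.node_at[position] = node

    def remove_node(self, node):
        for position in [p for p, n in self.node_at.items() if n == node]:
            self.positions.remove(position)
            del self.node_at[position]

    def owner_of_position(self, position):
        # First node clockwise with a position larger than the key's,
        # wrapping around to the start of the ring.
        index = bisect.bisect_right(self.positions, position) % len(self.positions)
        return self.node_at[self.positions[index]]

    def owner(self, key):
        return self.owner_of_position(ring_hash(key))


ring = Ring()
for name, position in [("A", 100), ("B", 200), ("C", 300)]:
    ring.add_node(name, position)
print(ring.owner_of_position(150))  # B
ring.remove_node("B")
print(ring.owner_of_position(150))  # C
```

Removing B only reassigns the keys B used to own; keys owned by A and C stay where they were, which is what allows membership changes without downtime.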

P2P: Virtual Nodes

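- Problems:

- Random placement rarely gives a uniform distribution unless there are enough nodes for the law of large numbers (LLN) to apply

- Fails to support heterogeneity

- Solution: Virtual Nodes

- Each physical machine is treated as a configurable number of virtual nodes

- Spreads the key space handled by a physical node across many small ranges on the ring

- Great for failures: a failed machine's load is dispersed across the remaining machines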
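The effect of virtual nodes can be sketched as follows. The machine names, virtual-node counts, and the `machine#vnodeN` naming scheme are assumptions chosen for illustration; the point is only that a machine with more virtual nodes ends up owning a proportionally larger share of keys.

```python
import bisect
import hashlib
from collections import Counter


def ring_hash(name: str) -> int:
    return int.from_bytes(hashlib.md5(name.encode()).digest(), "big")


def build_ring(vnodes_per_machine):
    """Return a sorted list of (position, machine), one entry per virtual node."""
    ring = []
    for machine, count in vnodes_per_machine.items():
        for i in range(count):
            ring.append((ring_hash(f"{machine}#vnode{i}"), machine))
    return sorted(ring)


def make_lookup(ring):
    positions = [position for position, _ in ring]

    def owner(key):
        index = bisect.bisect_right(positions, ring_hash(key)) % len(ring)
        return ring[index][1]

    return owner


# Hypothetical cluster: "big" gets four times as many virtual nodes as "small".
ring = build_ring({"big": 200, "small": 50})
owner = make_lookup(ring)
load = Counter(owner(f"key-{i}") for i in range(10_000))
print(load)  # "big" should own roughly four times as many keys as "small"
```

Tuning the number of virtual nodes per machine is how this sketch expresses heterogeneity: capacity is declared as a count rather than as a position on the ring.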

P2P: REPLICATION

- Data is replicated on N nodes: the key's coordinator and its N-1 clockwise successors

- This forms the "preference list"

- Effectively, each node is responsible for its own key range plus the ranges of its N-1 predecessors

- Problem: Replication needs to occur across physical, not virtual, nodes

- Preference list is constructed by skipping ring positions so that it contains only distinct physical nodes
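A minimal sketch of building a preference list by walking the ring clockwise and skipping virtual nodes whose physical machine has already been chosen. The ring layout and the function name `preference_list` are hypothetical.

```python
import bisect


def preference_list(ring, key_position, n):
    """Pick up to n distinct physical machines, walking clockwise from the key.

    `ring` is a sorted list of (position, physical_machine) virtual nodes.
    """
    positions = [position for position, _ in ring]
    start = bisect.bisect_right(positions, key_position)
    chosen = []
    for step in range(len(ring)):
        machine = ring[(start + step) % len(ring)][1]
        if machine in chosen:
            continue  # another virtual node of a machine we already picked
        chosen.append(machine)
        if len(chosen) == n:
            break
    return chosen


# Hypothetical ring: machine A owns two adjacent virtual nodes.
ring = [(10, "A"), (20, "A"), (30, "B"), (40, "C"), (50, "B")]
print(preference_list(ring, key_position=5, n=3))   # ['A', 'B', 'C']
print(preference_list(ring, key_position=45, n=2))  # ['B', 'A']
```

Without the skip, the first lookup would place two of the three replicas on machine A, so a single machine failure would take out two copies.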

Failures: TEMPORARY

- Nodes fail all the time in a cloud environment, for a variety of reasons

- Often they are only temporarily offline

- When a node is unavailable, another node can accept the write

- Enables "always-writeable" system

- (Assuming at least one node is reachable!)

- The write is stored in a local buffer, with a hint naming the intended node

- Handed off to the intended node once it is reachable again
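Hinted handoff can be sketched with two in-memory nodes. The `Node` class, the `write` and `hand_off` helpers, and the single-fallback setup are simplifying assumptions; a real system would pick the fallback from the ring and retry delivery periodically.

```python
class Node:
    def __init__(self, name):
        self.name = name
        self.up = True
        self.store = {}   # key -> value for replicas this node owns
        self.hinted = []  # (intended node name, key, value) held for others


def write(key, value, intended, fallback):
    """Write to the intended replica, or park the write on a fallback with a hint."""
    if intended.up:
        intended.store[key] = value
        return intended.name
    fallback.hinted.append((intended.name, key, value))
    return fallback.name


def hand_off(holder, nodes_by_name):
    """Deliver hinted writes whose intended node is reachable again."""
    still_waiting = []
    for intended_name, key, value in holder.hinted:
        target = nodes_by_name[intended_name]
        if target.up:
            target.store[key] = value
        else:
            still_waiting.append((intended_name, key, value))
    holder.hinted = still_waiting


a, d = Node("A"), Node("D")
nodes = {"A": a, "D": d}
a.up = False
write("cart", "v1", intended=a, fallback=d)  # D accepts the write on A's behalf
a.up = True
hand_off(d, nodes)
print(a.store)  # {'cart': 'v1'}
```

The hint is what lets the fallback return the data to its rightful owner instead of keeping it indefinitely.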

Failures: PERMANENT

- Problem: Need to know when a node goes offline

- All-to-all heartbeats are expensive (n^2 messages!)

- Gossip Protocol

- Every node tracks the overall cluster state

- At every tick, randomly choose another node in the ring

- Two nodes sync their cluster states

- Leads to eventual failure propagation
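The gossip steps above can be sketched as a small simulation. The `GossipNode` class, the `(version, status)` view entries, and the "higher version wins" merge rule are assumptions made for illustration, not Dynamo's actual membership format.

```python
import random


class GossipNode:
    def __init__(self, name, members):
        self.name = name
        # view: member name -> (version, status); the higher version wins on merge
        self.view = {member: (0, "up") for member in members}

    def mark(self, member, status):
        version, _ = self.view[member]
        self.view[member] = (version + 1, status)

    def sync_with(self, other):
        """Both nodes end up with the newest entry for every member."""
        for member in set(self.view) | set(other.view):
            mine = self.view.get(member, (-1, "unknown"))
            theirs = other.view.get(member, (-1, "unknown"))
            newest = max(mine, theirs, key=lambda entry: entry[0])
            self.view[member] = other.view[member] = newest


def tick(nodes, rng):
    """Each live node syncs its cluster state with one randomly chosen peer."""
    for node in nodes:
        peer = rng.choice([n for n in nodes if n is not node])
        node.sync_with(peer)


members = ["A", "B", "C", "D", "E"]
live = [GossipNode(name, members) for name in "ABCD"]  # E has failed
live[0].mark("E", "down")                              # only A has noticed so far
rng = random.Random(0)
for _ in range(50):
    tick(live, rng)
print(all(node.view["E"] == (1, "down") for node in live))
```

Each node sends one message per tick, so the per-tick cost grows linearly with cluster size instead of quadratically, at the price of a delay before every node learns of the failure.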

CONFLICT RESOLUTION

- For an "always-writeable" system, conflict resolution cannot be done at write-time.

- Dynamo uses read-time reconciliation instead

- Weak consistency guarantees -> divergent object versions

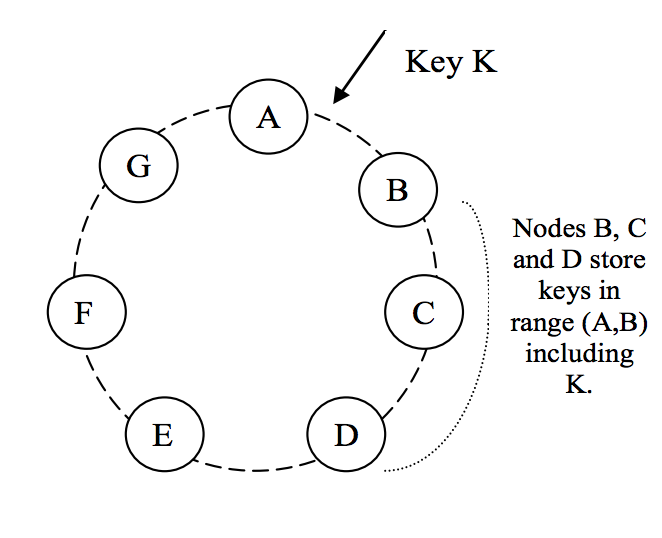

CONFLICT RESOLUTION: Vector Clocks

- Dynamo uses vector clocks

- Avoids wall-clock skew issues

- VC is a list of (node, counter) pairs

- Each object version is immutable

- "Application-assisted conflict resolution"

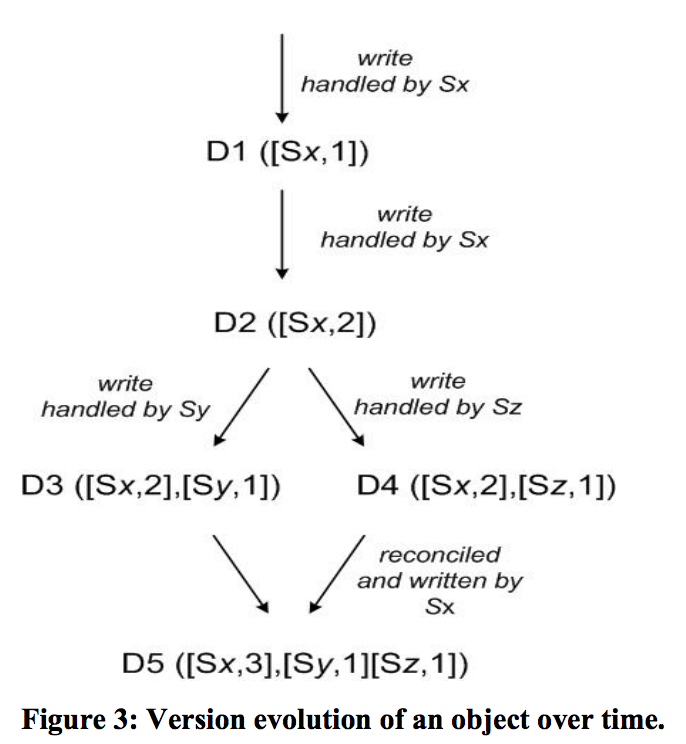

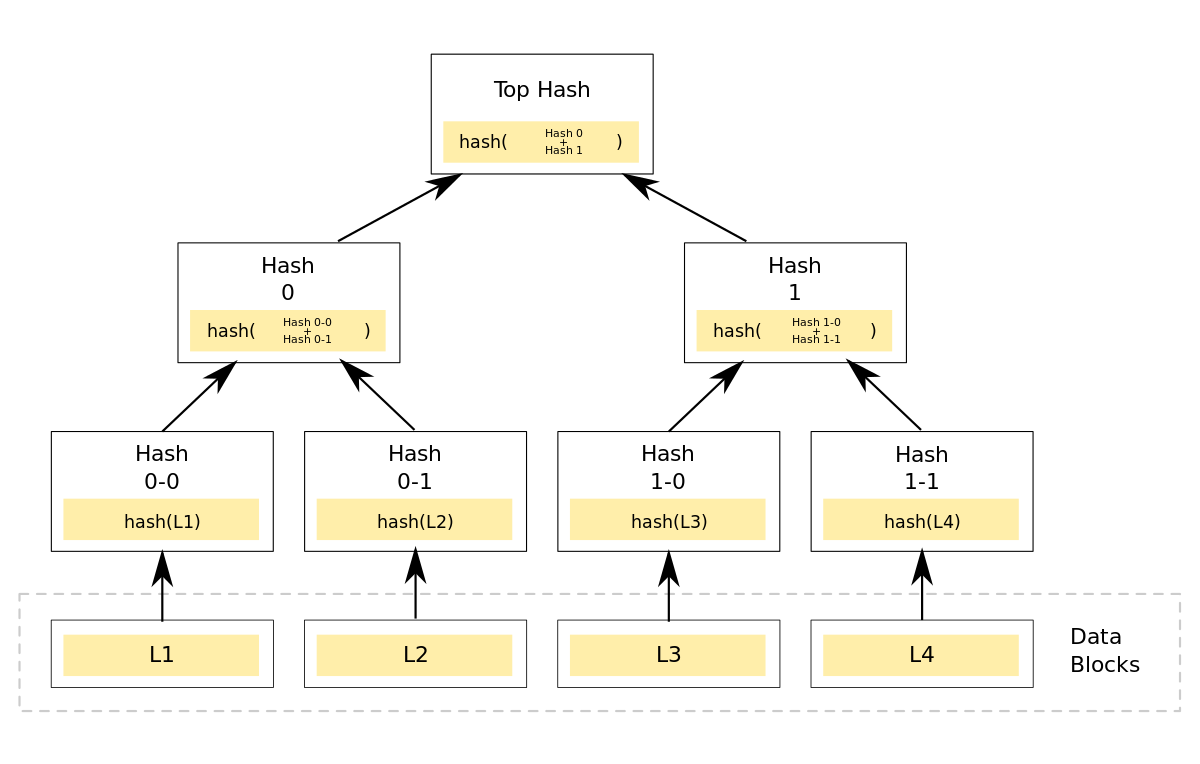
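A minimal sketch of vector-clock comparison, using plain dictionaries that map a node name to its counter. The helper names are hypothetical; the sequence of versions mirrors the D1 to D5 example in the Dynamo paper, with nodes Sx, Sy, and Sz.

```python
def increment(clock, node):
    """Return a new clock with this node's counter advanced (versions are immutable)."""
    updated = dict(clock)
    updated[node] = updated.get(node, 0) + 1
    return updated


def descends(a, b):
    """True if version `a` has seen every event that version `b` has."""
    return all(a.get(node, 0) >= counter for node, counter in b.items())


def compare(a, b):
    if descends(a, b) and descends(b, a):
        return "equal"
    if descends(a, b):
        return "a supersedes b"
    if descends(b, a):
        return "b supersedes a"
    return "concurrent"  # neither descends from the other: a conflict


def merge(a, b):
    """Clock for a reconciled version: the component-wise maximum."""
    return {node: max(a.get(node, 0), b.get(node, 0)) for node in a.keys() | b.keys()}


d1 = increment({}, "Sx")             # {Sx: 1}
d2 = increment(d1, "Sx")             # {Sx: 2}
d3 = increment(d2, "Sy")             # {Sx: 2, Sy: 1}
d4 = increment(d2, "Sz")             # {Sx: 2, Sz: 1}
print(compare(d3, d4))               # concurrent
d5 = increment(merge(d3, d4), "Sx")  # {Sx: 3, Sy: 1, Sz: 1}
print(compare(d5, d3))               # a supersedes b
```

When `compare` reports "concurrent", the store cannot pick a winner on its own, so both versions are returned to the application for reconciliation.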

CONFLICT RESOLUTION: Replica SYNCHRONIZATION

- Problem: Recovering from a permanent failure or a network partition

- Can be very expensive with significant divergence

- Merkle trees simplify the process of figuring out which parts of a key range are different.

- Each leaf is a hash of an individual key's value; each parent is a hash of its children

- Minimizes data exchange, too!

- Used to sync divergent replicas in the background.
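A minimal sketch of Merkle-tree comparison between two replicas of the same key range. It assumes both replicas hold the same non-empty, identically ordered set of keys, and it uses SHA-256 as the hash; both are choices made for this example rather than details taken from Dynamo.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_tree(store, keys):
    """Return tree levels: levels[0] holds leaf hashes, levels[-1] holds the root."""
    levels = [[h(store[key]) for key in keys]]  # each leaf hashes one key's value
    while len(levels[-1]) > 1:
        below = levels[-1]
        levels.append([h(b"".join(below[i:i + 2])) for i in range(0, len(below), 2)])
    return levels


def differing_leaves(a, b, level=None, index=0):
    """Walk down from the root, descending only into subtrees whose hashes differ."""
    if level is None:
        level = len(a) - 1
    if a[level][index] == b[level][index]:
        return []  # identical subtree: nothing to exchange
    if level == 0:
        return [index]
    found = []
    for child in (2 * index, 2 * index + 1):
        if child < len(a[level - 1]):
            found += differing_leaves(a, b, level - 1, child)
    return found


keys = ["k0", "k1", "k2", "k3", "k4"]
replica_a = {key: b"v1" for key in keys}
replica_b = dict(replica_a, k3=b"v2")  # one divergent key
tree_a, tree_b = build_tree(replica_a, keys), build_tree(replica_b, keys)
out_of_sync = [keys[i] for i in differing_leaves(tree_a, tree_b)]
print(out_of_sync)  # ['k3']
```

If the two roots match, the replicas are in sync after exchanging a single hash; otherwise only the hashes along the differing paths need to be compared.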

CONFIGURABILITY

- Some services need to be able to configure the trade-off between availability, consistency, cost-effectiveness, and performance.

- Three parameters:

- N: Number of nodes in the preference list that store each key

- W: # nodes that must acknowledge a write

- Must consider durability

- R: # nodes that must respond to a read

- Typical configuration: N=3, R=2, W=2

- Very small!
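One way to reason about these parameters is the quorum condition R + W > N, which the Dynamo paper describes as yielding a quorum-like system. The sketch below checks by brute force that this condition is exactly what guarantees every read set shares at least one replica with every write set; the function names are hypothetical.

```python
from itertools import combinations


def quorums_overlap(n, r, w):
    """The arithmetic condition: read and write sets must share a replica."""
    return r + w > n


def always_intersect(n, r, w):
    """Brute-force check over every possible read set and write set."""
    replicas = range(n)
    return all(
        set(read_set) & set(write_set)
        for read_set in combinations(replicas, r)
        for write_set in combinations(replicas, w)
    )


print(quorums_overlap(3, 2, 2), always_intersect(3, 2, 2))  # True True
print(quorums_overlap(3, 1, 1), always_intersect(3, 1, 1))  # False False
```

With N=3, R=2, W=2, a read always contacts at least one replica that took part in the latest successful write, while either operation can still proceed with one replica unavailable.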

TRADEOFFS

- AP system (in CAP)

- Hotspots can form on the Chord-style ring; Dynamo does not handle them

- No relational query capabilities (such as those of an RDBMS)

- Movement to NewSQL

- The full ring's metadata could become very large, and each node has to maintain this!

- A hierarchical system could resolve some of these issues

Resources

- Dynamo: Amazon’s Highly Available Key-value Store [DeCandia, et al.]

- A Decade of Dynamo: Powering the next wave of high-performance, internet-scale applications [Werner Vogels]
