20 years of Robotics

What changed, what didn't, what the future holds

What is this all about?

- How robotics has changed in recent years.

- Why robotic software is written the way it is.

- Effective design patterns in robotics.

Who am I?

- A passionate roboticist.

- A senior software developer.

- Project Manager, Business Developer, Mechanical Engineer, Startup Founder, Geek, etc.

A younger and thinner version of me (on the right side)

A weird email...

Dude, it is 2018, where is my robot?

Robotics is hard

- Requires complex Domain Specific knowledge.

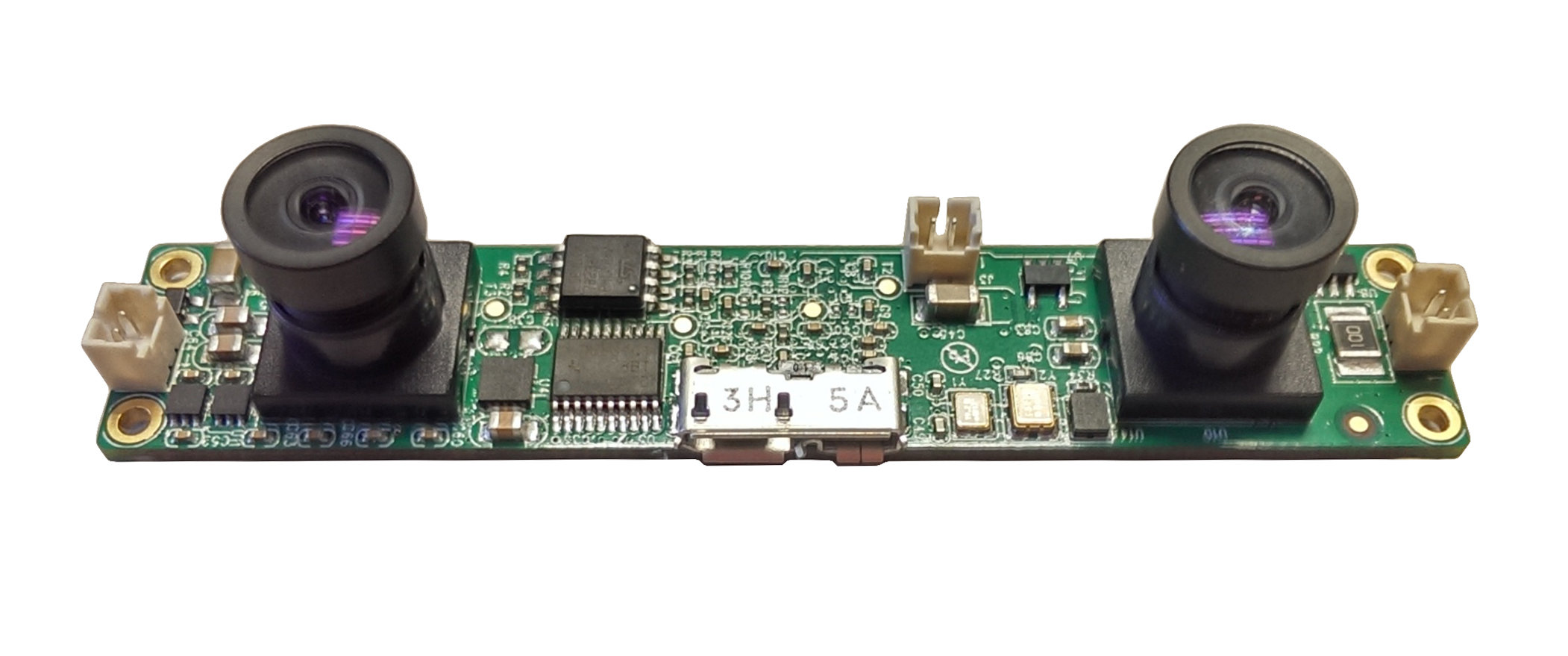

- Close to the hardware. A lot of work was required on the driver side.

- Difficult to model uncertainty and real-world environments.

- Depends on scientific progress in other domains.

Software-wise...

- Non-trivial problems require concurrency and parallelism.

- You never have enough CPU.

- Robotics engineers are bad software engineers.

- Complexity.

- Lots of sensors.

- Many actuators.

- On-board batteries.

- Brutal space and weight constraints.

- Biped locomotion was not a solved problem.

- Complex software architecture.

all the way down...

Why?

- Historically, Robotics was about embedded computing

- You want to squeeze the last CPU cycle

- Domino effect... the ecosystem is mostly C++, so no one changes

Consequence?

- Steep learning curve for newbies.

- A complex computing language used by inexperienced software developers.

It's 2005: Robotic Software Frameworks everywhere

Orocos

Marie

Miro

Opros

Aseba

Very ambitious but...

- Academic :-/

- Difficult to install.

- Cherry-picked use-cases.

- No documentation.

- No tools.

- Few drivers.

- "I can't get my PhD solving bugs".

- "Not invented here" syndrome

In other words:

no critical mass

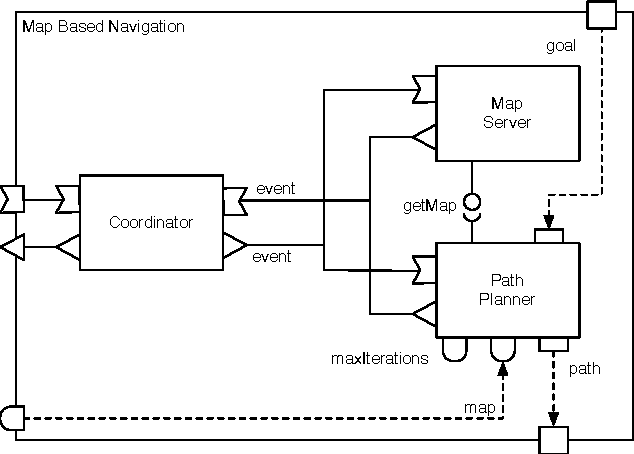

All these middlewares had many things in common

Component Based Design...

... but not well formalized.

Inter process communication...

... a SEGFAULT workaround?

Publish-Subscribe...

... because time constraints suck.

Concurrency / Parallelism...

... just spawn more processes.

Algorithms / Drivers separation...

... with my ad-hoc interface.

Component Based Software Engineering

Component-based software engineering (CBSE) is a branch of software engineering that emphasizes the separation of concerns with respect to the wide-ranging functionality available throughout a given software system.

It is a reuse-based approach to defining, implementing and composing loosely coupled independent components into systems.
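To make "loosely coupled independent components" concrete, here is a minimal sketch, assuming components that interact only through named input and output ports. The `Component` class, the `connect` helper, and the sensor and detector names are all hypothetical, invented for illustration; they do not come from any of the frameworks mentioned in this talk.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Component:
    """A unit that talks to the outside world only through named ports."""
    name: str
    # Input port name -> handler that processes an incoming value.
    inputs: Dict[str, Callable[[float], None]] = field(default_factory=dict)
    # Output port name -> handlers of the input ports wired to it.
    outputs: Dict[str, List[Callable[[float], None]]] = field(default_factory=dict)

    def emit(self, port: str, value: float) -> None:
        # Forward the value to every connected input; do nothing if unwired.
        for handler in self.outputs.get(port, []):
            handler(value)


def connect(src: Component, out_port: str, dst: Component, in_port: str) -> None:
    """Wire an output port to an input port from outside both components."""
    src.outputs.setdefault(out_port, []).append(dst.inputs[in_port])


# Hypothetical components: a range sensor driver and an obstacle detector.
detections: List[bool] = []
sensor = Component("range_sensor")
detector = Component("obstacle_detector")
detector.inputs["distance_m"] = lambda d: detections.append(d < 0.5)

connect(sensor, "distance_m", detector, "distance_m")
sensor.emit("distance_m", 1.2)
sensor.emit("distance_m", 0.3)
print(detections)  # [False, True]
```

Neither component references the other; the composition lives entirely in `connect`, so the driver could be swapped for a simulated one without touching the detector.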

Publish-Subscribe

In robotics we want:

- To distribute data to multiple clients.

- Often, we don't need synchronous communication.

- Robustness against unreliable data producers.

- Freedom to use multiple computational nodes.
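The wish list above can be sketched in a few lines, assuming a single-process, in-memory broker. The `Broker` class and the topic names are hypothetical and are not the API of ROS or any other middleware; a real system would add serialization and network transport.

```python
from collections import defaultdict, deque
from typing import Any, Deque, Dict, List


class Broker:
    """Hypothetical in-process message broker keyed by topic name."""

    def __init__(self) -> None:
        self._queues: Dict[str, List[Deque[Any]]] = defaultdict(list)

    def subscribe(self, topic: str, maxlen: int = 10) -> Deque[Any]:
        # Each subscriber owns a bounded queue; the oldest messages are dropped.
        queue: Deque[Any] = deque(maxlen=maxlen)
        self._queues[topic].append(queue)
        return queue

    def publish(self, topic: str, message: Any) -> int:
        # The publisher never blocks and never learns who is listening.
        subscribers = self._queues.get(topic, [])
        for queue in subscribers:
            queue.append(message)
        return len(subscribers)


broker = Broker()
planner_inbox = broker.subscribe("scan")
logger_inbox = broker.subscribe("scan", maxlen=2)

for reading in (1.0, 0.8, 0.6):
    broker.publish("scan", reading)

print(list(planner_inbox))  # [1.0, 0.8, 0.6]
print(list(logger_inbox))   # [0.8, 0.6]
print(broker.publish("odometry", 0.0))  # 0
```

One publisher feeds several clients, nobody waits for anybody, and a topic with no producer or no consumer is simply quiet rather than an error.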

2008: and then ROS came...

ROS was bound to win the "middlewares war"

Technically speaking:

- Easy to learn

- No-bullshit API

- Many Tools

- Many Drivers

- Easy to install

- Well documented

- C++ Package management

ROS was bound to win the "middlewares war"

They had:

- Many talented people

- Multi-year effort

- Smart marketing

- Uncompromising Vision

- Community building

- Focused on real-world

- In other words: "Lots of money"

I am not being cynical.

World domination requires money

The good:

- It accelerated the development of robotic systems by 3x-5x.

- It became the "standard" that we desperately needed (the winner takes it all).

- Open Source.

The bad:

- ROS is just a (very good) tool, but people rely too much on it.

- Resource hungry in some cases.

- Threw away many important lessons from CBSE.

- Open Source.

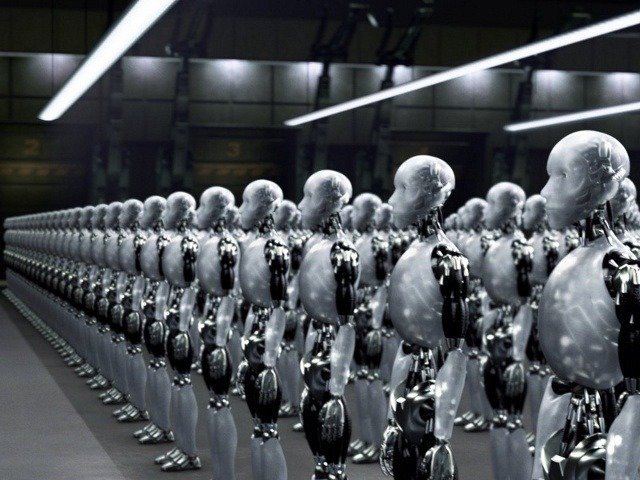

Biggest challenges in robotics (until 2014)

- It is a small market (*)

- Small investments and little capital

- Depends on innovation in other fields

- Technology is not mature

- Hard to industrialize

2014 - today

- It is a small market

- Small investments and little capital

- Depends on innovation in other fields (Autonomous cars?)

- Technology is not mature (?)

- Hard to industrialize

The most important bottleneck:

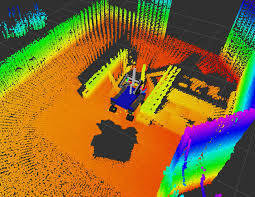

Computer Vision and Perception

Robotic Perception. How hard could it be?

Computer Vision... is hard!

"This AI and Machine Learning stuff is useless"

Me, 2001

The A.I. Winter

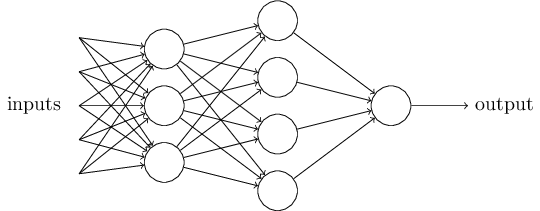

Deep Learning smashed the previous state-of-the-art benchmarks

ImageNet 2012: everything changes...

We had intuition, but not the mathematical foundation...

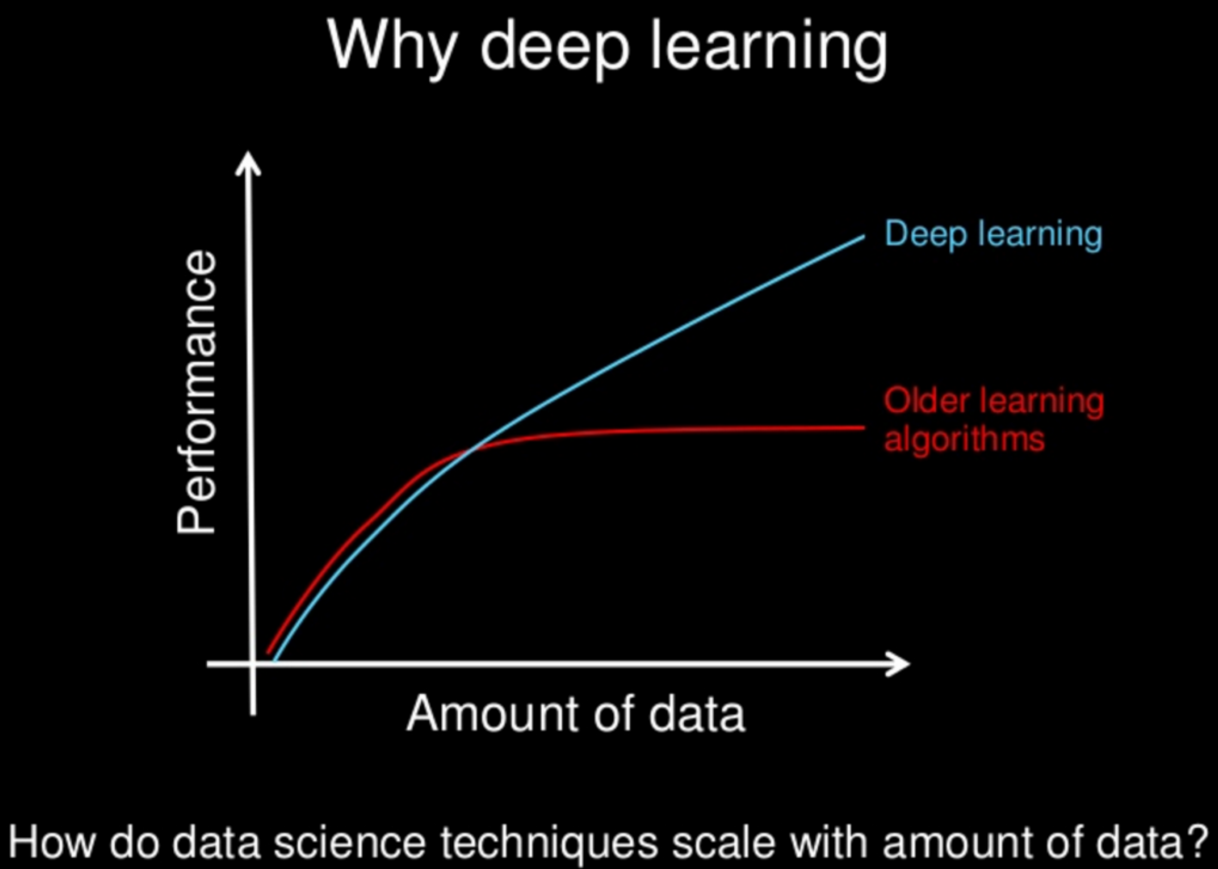

...We didn't know that we "just" needed:

- A bigger engine (Computational Power)

- Much more fuel (Data)

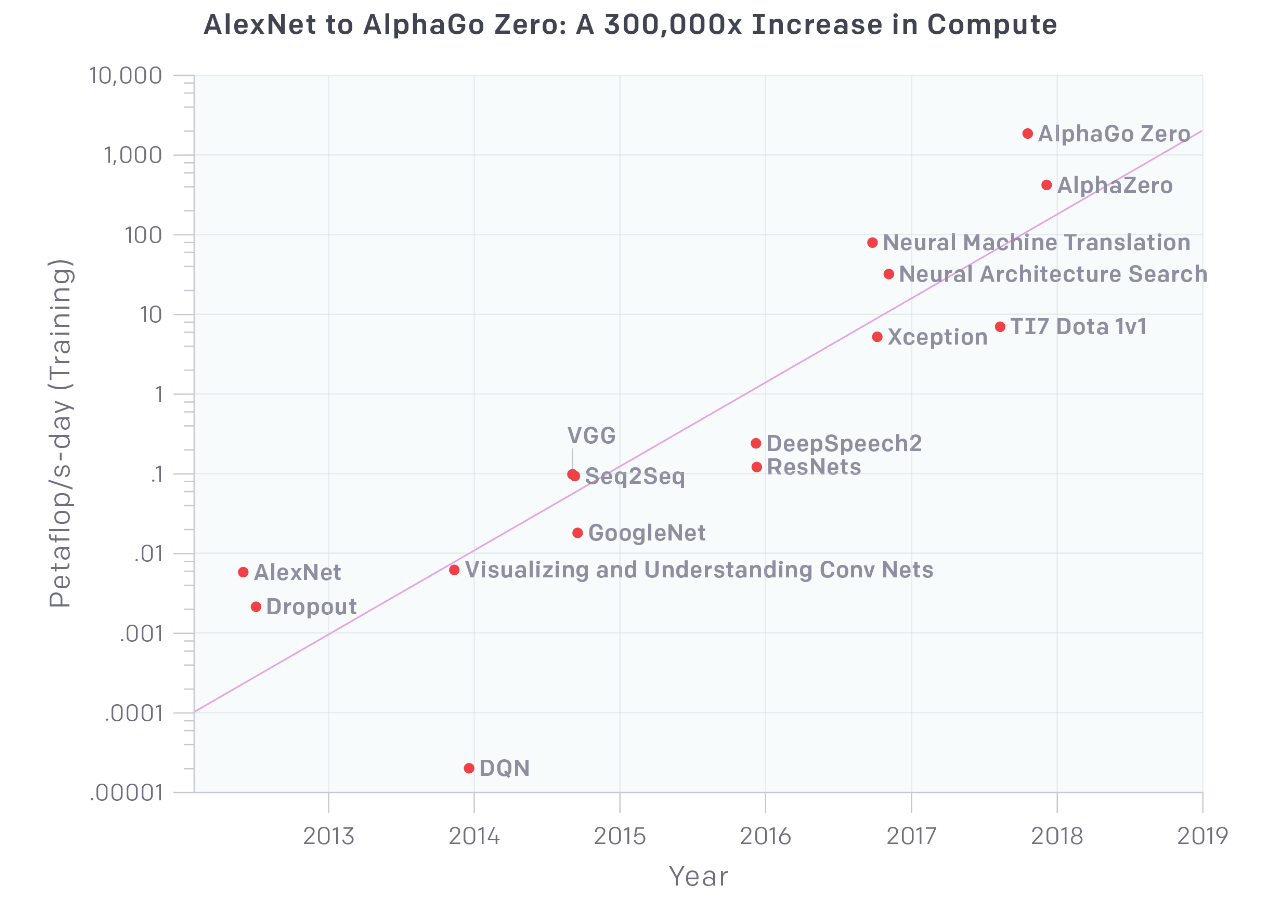

Since 2012, the amount of compute used in the largest AI training runs has been increasing exponentially, with a 3.5-month doubling time.

By comparison, Moore’s Law had an 18-month doubling period.

Since 2012, this metric has grown by more than 300,000x
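A quick back-of-the-envelope check shows how far apart these two growth rates are. The sketch below uses only the three figures quoted above (3.5-month doubling, 18-month doubling, 300,000x); the elapsed time it derives is an implication of those figures, not a number taken from the slides.

```python
import math

AI_DOUBLING_MONTHS = 3.5      # doubling time quoted above
MOORE_DOUBLING_MONTHS = 18.0  # Moore's Law doubling period quoted above
AI_GROWTH = 300_000           # total growth quoted above

# Number of doublings needed to reach the quoted growth factor.
doublings = math.log2(AI_GROWTH)

# Time that many doublings take at the quoted AI doubling rate.
elapsed_months = doublings * AI_DOUBLING_MONTHS

# Growth Moore's Law alone would deliver over the same span.
moore_growth = 2 ** (elapsed_months / MOORE_DOUBLING_MONTHS)

print(f"{doublings:.1f} doublings")
print(f"{elapsed_months / 12:.1f} years")
print(f"Moore's Law over the same span: {moore_growth:.0f}x")
```

Under these assumptions, 300,000x takes roughly 18 doublings, or a bit over five years, while an 18-month doubling period over the same span yields only about an order of magnitude.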

This is just the beginning:

- A.I. will evolve more and more, in a nonlinear way.

- Robotics will be able to solve more Business Cases and Use Cases.

- Riding the hype wave, many startups will be created.

- More Fundamental Research is needed.

- More Applied Research, too.

- Nevertheless, serial production of complex robots is still hard.

Thank you for your attention

By Davide Faconti
