Python Multithreading and Multiprocessing: Concurrency and Parallelism

About Me

- Pythonist, Gopher

A story!

Expected time

50 Min

A story!

Expected time

30 Min

A story!

Expected time

15 Min

Parallelism

Parallelism means adding more hardware or software resources to make a computation faster.

Parallelism is about doing lots of things at once.

Concurrency

Concurrency permits multiple tasks to make progress without waiting for each other.

Concurrency is about dealing with lots of things at once.

Concurrency & Parallelism

Multiprocessing & Multithreading is not easy!

Multiprocessing & Multithreading

- Ability of a central processing unit or a single core in a multi-core processor to execute multiple processes or threads concurrently

Python threading

- Higher-level threading interface built on top of the lower-level thread module

- In Python 3 the lower-level thread module was renamed to _thread

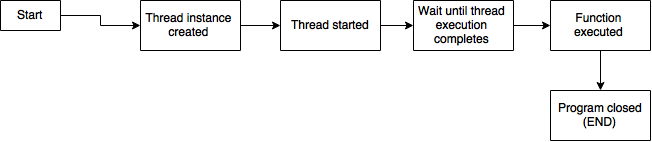

Start thread

from threading import Thread

def print_hello():
    print("Hello World")

t = Thread(target=print_hello, args=[])
t.start()
t.join()

Thread start

- Threads are real system-level (OS) threads

- POSIX threads on Linux, Windows threads on Windows

- Scheduling is handled by the operating system

Thread start

from threading import Thread

def calc(n):
    while n > 0:
        n -= 1

t = Thread(target=calc, args=[100000000])
t.start()
t.join()

# Started a single thread
# 4.02 seconds to execute this program

from threading import Thread

def calc(n):
    while n > 0:
        n -= 1

t1 = Thread(target=calc, args=[100000000])
t2 = Thread(target=calc, args=[100000000])
t1.start()
t2.start()
t1.join()
t2.join()

# How long will this program take to
# complete execution?

Thread start

from multiprocessing import Process
from threading import Thread

def calc(n):
    while n > 0:
        n -= 1

def do_calc():
    t = Thread(target=calc, args=[100000000])
    t.start()
    t.join()

# The guard is needed where child processes re-import this module
if __name__ == '__main__':
    p1 = Process(target=do_calc, args=[])
    p2 = Process(target=do_calc, args=[])
    p1.start()
    p2.start()
    p1.join()
    p2.join()

Global Interpreter Lock

- Parallel execution of threads is forbidden: only one thread runs Python bytecode at a time.

- The lock is released on blocking calls such as read, write, send, recv
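The effect of the lock on CPU-bound work can be checked with a small timing sketch. It assumes CPython with the GIL enabled. The helper names (countdown, timed, run_sequential, run_threaded) and the loop size are illustrative and not from the slides, and the timings depend on the machine.

```python
import time
from threading import Thread


def countdown(n):
    """Pure-Python CPU-bound loop; it needs the GIL while it runs."""
    while n > 0:
        n -= 1


def timed(func):
    """Return the wall-clock seconds taken by func()."""
    start = time.perf_counter()
    func()
    return time.perf_counter() - start


def run_sequential(n):
    """Run the loop twice, one after the other, in the main thread."""
    countdown(n)
    countdown(n)


def run_threaded(n):
    """Run the same two loops in two threads."""
    threads = [Thread(target=countdown, args=(n,)) for _ in range(2)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


if __name__ == "__main__":
    n = 5_000_000  # illustrative size, smaller than the 100000000 used above
    print("sequential: %.2fs" % timed(lambda: run_sequential(n)))
    print("2 threads:  %.2fs" % timed(lambda: run_threaded(n)))
```

On a GIL-enabled build the two numbers are usually close, because the two threads take turns instead of running side by side.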

Another boring story

Global Interpreter Lock

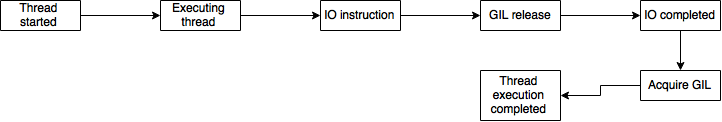

- The GIL is released on I/O operations

- What about CPU-bound threads?
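For I/O-bound threads the waits overlap. In the sketch below time.sleep stands in for a blocking I/O call (an assumption for illustration; sleep also releases the GIL), and fake_io and run_io_threads are hypothetical names.

```python
import time
from threading import Thread


def fake_io(delay):
    """Stand-in for a blocking call such as recv(); the GIL is released."""
    time.sleep(delay)


def run_io_threads(count, delay):
    """Start `count` threads that each block for `delay` seconds."""
    start = time.perf_counter()
    threads = [Thread(target=fake_io, args=(delay,)) for _ in range(count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    elapsed = run_io_threads(4, 0.5)
    # The waits overlap, so the total is typically near 0.5s rather than 2s.
    print("4 threads waiting 0.5s each took %.2fs" % elapsed)
```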

Global Interpreter Lock

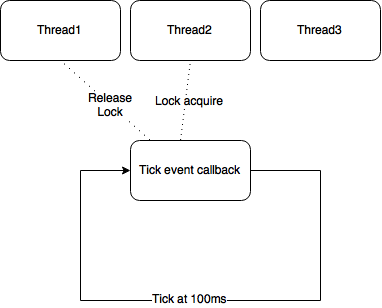

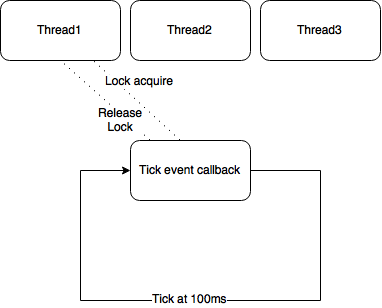

- sys.setcheckinterval() in Python 2; replaced by sys.setswitchinterval() from Python 3.2

- Scheduling is managed by the OS
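On Python 3.2 and later the interpreter uses a time-based switch interval. A minimal sketch of reading and changing it, assuming CPython 3; the 0.01 value is arbitrary and only for illustration.

```python
import sys

# Remember the current value (documented default: 5 milliseconds).
default_interval = sys.getswitchinterval()
print("current switch interval:", default_interval)

# Ask the interpreter to consider switching threads less often.
sys.setswitchinterval(0.01)
print("new switch interval:", sys.getswitchinterval())

# Restore the previous value so the rest of the program is unaffected.
sys.setswitchinterval(default_interval)
```

The interval only says how often a running thread is asked to give up the GIL; which thread runs next is still decided by the OS.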

OS

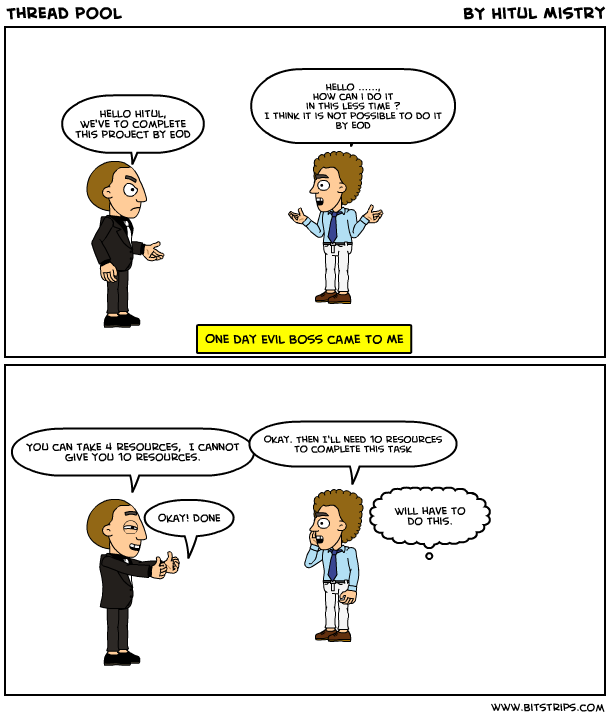

A story of my boss!

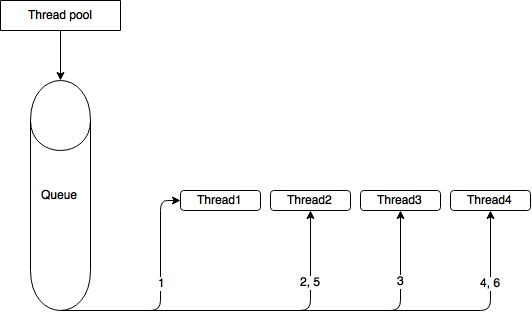

Thread pool

from multiprocessing.pool import ThreadPool

def f(x):
    return x*x

if __name__ == '__main__':
    p = ThreadPool(4)
    print(p.map(f, [1, 2, 3, 4, 5, 6]))

# Output
# [1, 4, 9, 16, 25, 36]

Process multiprocessing

from multiprocessing import Process

def f(name):
    print('hello world')

if __name__ == '__main__':
    p = Process(target=f, args=('bob',))
    p.start()
    p.join()

- Python module for the process API

- System processes

- GIL? Each process has its own interpreter, and so its own GIL

- Works on Linux & Windows

- Each process has its own memory

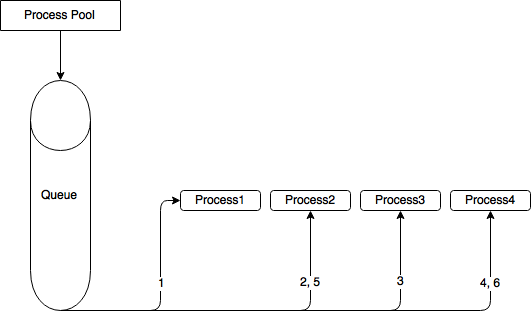

Process pool

- Similar to threadpool with difference that it will start processes instead of threads

from multiprocessing import Pool

def f(x):

return x*x

if __name__ == '__main__':

p = Pool(5)

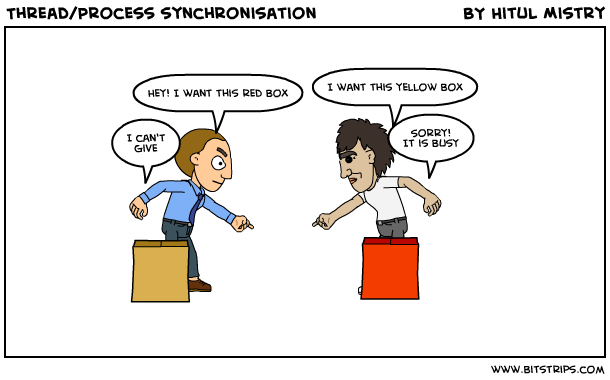

print(p.map(f, [1, 2, 3, 4, 5, 6]))How to handle this ?

- Multiple threads or processes trying to access the same code or resource

- Thread/process synchronization
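A minimal sketch of the problem and one way to handle it, assuming several threads update a shared counter. The names counter, counter_lock, add_many and run are hypothetical.

```python
from threading import Lock, Thread

counter = 0
counter_lock = Lock()


def add_many(times):
    """Increment the shared counter `times` times under the lock."""
    global counter
    for _ in range(times):
        # `counter += 1` is a read followed by a write; the lock makes
        # sure only one thread performs that pair at a time.
        with counter_lock:
            counter += 1


def run(workers=4, times=10_000):
    """Start the workers and return the final counter value."""
    global counter
    counter = 0
    threads = [Thread(target=add_many, args=(times,)) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


if __name__ == "__main__":
    print(run())
```

Without the lock, updates from different threads can overwrite each other and the final count may come out lower than workers * times.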

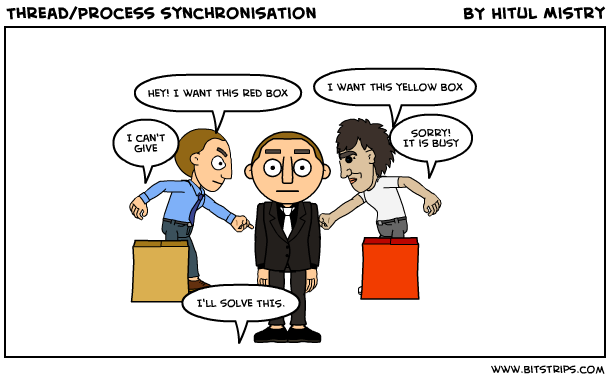

Deadlock

Semaphore

- Solution to handle deadlock

- Semaphore types

  - Binary

  - Counting

  - Mutex

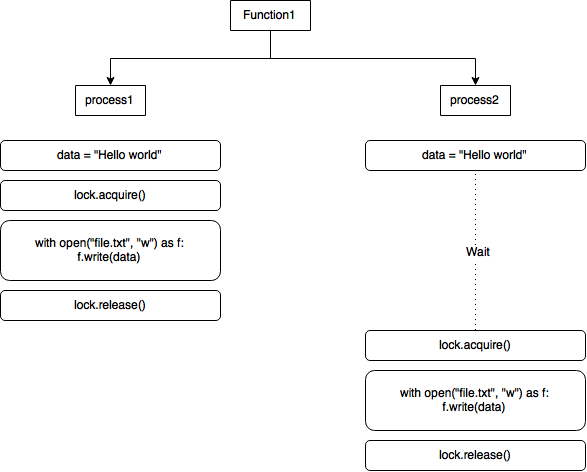

Lock & RLock

- Known as a mutex or binary semaphore

- acquire() & release()

- Available in both the multiprocessing and threading modules

from threading import Lock

lock = Lock()

def getPart1():
    lock.acquire()
    try:
        pass  # ... get first part of the data
    finally:
        lock.release()

def getPart2():
    lock.acquire()
    try:
        pass  # ... get second part of the data
    finally:
        lock.release()
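RLock is named on this slide but not shown. The sketch below illustrates the difference, assuming one locked function calls other locked functions. The function names and return values are hypothetical.

```python
from threading import RLock

# With a plain Lock, get_both() below would block forever on the
# second acquire made by the same thread.
lock = RLock()


def get_part1():
    with lock:
        return "part 1"


def get_part2():
    with lock:
        return "part 2"


def get_both():
    # The same thread acquires the lock again inside get_part1() and
    # get_part2(). RLock allows this and frees the lock once every
    # acquire has been matched by a release.
    with lock:
        return get_part1(), get_part2()


if __name__ == "__main__":
    print(get_both())
```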

Lock & RLock

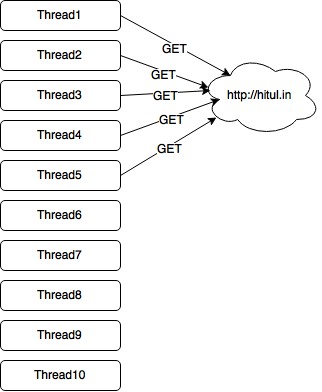

Semaphore

import time
from threading import Thread, Semaphore

semaphore = Semaphore(5)

def calc():
    semaphore.acquire()
    time.sleep(3)
    # Send http request to http://hitul.in
    semaphore.release()

# Start all threads first, then wait for them,
# so that up to 5 can run at the same time
threads = [Thread(target=calc, args=[]) for _ in range(10)]
for t in threads:
    t.start()
for t in threads:
    t.join()

- Python implementation of a counting semaphore

- Also available in the multiprocessing module
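The acquire/release pair can also be written as a with block. The sketch below records how many workers are inside the guarded section at once; LIMIT, worker, run and the 0.05 second sleep are illustrative assumptions.

```python
import time
from threading import Lock, Semaphore, Thread

LIMIT = 3
semaphore = Semaphore(LIMIT)
state_lock = Lock()
active = 0
peak = 0


def worker():
    global active, peak
    with semaphore:  # acquire on entry, release on exit (even on error)
        with state_lock:
            active += 1
            peak = max(peak, active)
        time.sleep(0.05)  # stand-in for the real work
        with state_lock:
            active -= 1


def run(count=10):
    """Run `count` workers and return the highest concurrency seen."""
    global peak
    peak = 0
    threads = [Thread(target=worker) for _ in range(count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return peak


if __name__ == "__main__":
    print("peak concurrent workers:", run())
```

The reported peak never exceeds LIMIT, however many threads are started.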

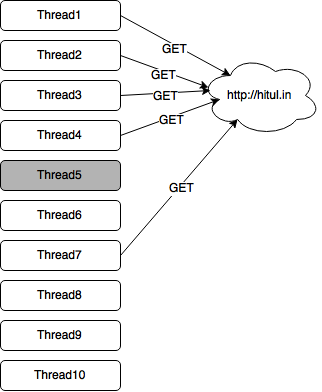

BoundedSemaphore

import time
import requests
from threading import Thread, BoundedSemaphore

semaphore = BoundedSemaphore(3)

def send_request():
    semaphore.acquire()
    r = requests.get("http://hitul.in/")
    time.sleep(3)
    print("Request sent")
    semaphore.release()
    # Extra release: raises ValueError once the counter
    # would go above its initial value
    semaphore.release()

threads = [Thread(target=send_request, args=[]) for _ in range(10)]
for t in threads:
    t.start()
for t in threads:
    t.join()

- ValueError is raised if release() is called more often than acquire(), so that the counter would exceed its initial value
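The same behaviour can be reproduced without any network access. A minimal sketch; the variable names are hypothetical.

```python
from threading import BoundedSemaphore, Semaphore

bounded = BoundedSemaphore(1)
bounded.acquire()
bounded.release()  # balanced with the acquire above: fine

try:
    bounded.release()  # one release too many
    extra_release_failed = False
except ValueError:
    extra_release_failed = True

# A plain Semaphore does not check: its counter silently grows to 2.
plain = Semaphore(1)
plain.release()

print("BoundedSemaphore raised ValueError:", extra_release_failed)
```

The check makes BoundedSemaphore useful for catching unbalanced acquire/release calls early.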

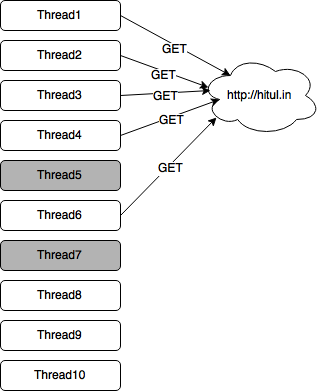

Event

- Threads wait until the flag is set

import time
from threading import Thread, Event

event = Event()

def hello():
    event.wait()
    print("Hello world")

t1 = Thread(target=hello, args=[])
t1.start()
t2 = Thread(target=hello, args=[])
t2.start()

time.sleep(5)
event.set()
event.clear()

t1.join()
t2.join()
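A variation on the Event example, sketched under the assumption that waiters should not block forever. The 2 second timeout and the names waiter and run are illustrative.

```python
from threading import Event, Thread


def waiter(ready, name, results):
    # Block until the flag is set, or give up after 2 seconds.
    # wait() returns True only if the flag was set.
    if ready.wait(timeout=2):
        results.append(name)


def run():
    ready = Event()
    results = []
    threads = [
        Thread(target=waiter, args=(ready, name, results))
        for name in ("t1", "t2")
    ]
    for t in threads:
        t.start()
    ready.set()  # wake every waiting thread
    for t in threads:
        t.join()
    ready.clear()  # reset the flag only after the waiters are done
    return sorted(results)


if __name__ == "__main__":
    print(run())
```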

Timer

from threading import Timer

def hello():
    print("Hello World")

t = Timer(2, hello)
t.start()

- Runs a function in a thread after some seconds

- The actual delay may be longer than the interval requested

- cancel() stops the timer if it has not fired yet
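A short sketch of cancel(), assuming two timers with the same delay where only one is allowed to fire. The 0.1 second delay and the labels are illustrative.

```python
from threading import Timer


def run():
    fired = []
    kept = Timer(0.1, fired.append, args=("kept",))
    cancelled = Timer(0.1, fired.append, args=("cancelled",))
    kept.start()
    cancelled.start()
    cancelled.cancel()  # takes effect only while the timer is still waiting
    kept.join()         # a Timer is a Thread, so it can be joined
    cancelled.join()
    return fired


if __name__ == "__main__":
    print(run())
```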

Thread example

from threading import Thread

data = {}

def print_hello():
    data["Status"] = True

t = Thread(target=print_hello, args=[])
t.start()
t.join()

print(data)  # {'Status': True}, threads share memory

Process example

from multiprocessing import Process

data = {}

def print_hello():

data["Status"] = True

p = Process(target=print_hello, args=[])

p.start()

p.join()

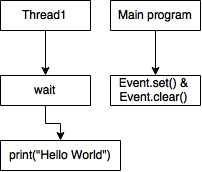

print dataPipes

- Data channel to transfer data

- os.pipe

  - 64 KB limit

  - Data must be encoded/decoded (bytes only)

  - Works on Linux & Windows

- multiprocessing.Pipe

  - Sockets

  - Full duplex

  - Objects are pickled
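The encode/decode point for os.pipe can be sketched in a single process. roundtrip is a hypothetical helper, and the message is kept small so that the write fits in the pipe buffer without a concurrent reader.

```python
import os


def roundtrip(message):
    """Send a str through an os.pipe() and read it back."""
    read_fd, write_fd = os.pipe()
    try:
        # os.pipe() carries raw bytes, so the text is encoded first.
        os.write(write_fd, message.encode("utf-8"))
    finally:
        os.close(write_fd)  # closing the write end marks the end of data
    with os.fdopen(read_fd, "rb") as reader:
        return reader.read().decode("utf-8")


if __name__ == "__main__":
    print(roundtrip("Hello world"))
```

multiprocessing.Pipe hides this step: send() pickles the object and recv() unpickles it.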

Pipes

import time
from multiprocessing import Pipe, Process

def write_pipe(conn):
    conn.send("Hello world")

def read_pipe(conn):
    print(conn.recv())

if __name__ == '__main__':
    c1, c2 = Pipe()
    p1 = Process(target=write_pipe, args=[c1])
    p2 = Process(target=read_pipe, args=[c2])
    p1.start()
    time.sleep(1)
    p2.start()
    p1.join()
    p2.join()

Pipe

import time

from multiprocessing import Pipe, Process

c1, c2 = Pipe()

def write_pipe(c2):

c2.send("Hey! How are you ?")

print "MSG by read-process : %s" % c2.recv()

def read_pipe(c1):

print "MSG by write-process function : %s" % c1.recv()

c1.send("Thanks you! I'm good.")

p1 = Process(target=write_pipe, args=[c1])

p2 = Process(target=read_pipe, args=[c2])

p1.start()

time.sleep(1)

p2.start()

p1.join()

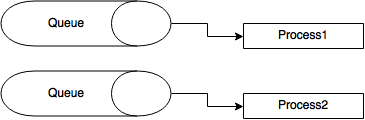

p2.join()Queue

from multiprocessing import Process, Queue

def f(q):
    q.put([42, None, 'hello'])

if __name__ == '__main__':
    q = Queue()
    p = Process(target=f, args=(q,))
    p.start()
    print(q.get())  # prints "[42, None, 'hello']"
    p.join()

- Thread/process safe

- Queue types (in the queue module)

  - LIFO

  - FIFO

  - Priority
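The three queue types can be compared with the standard queue module, which is meant for threads; multiprocessing.Queue itself is FIFO. A small sketch with hypothetical helpers fill and drain:

```python
import queue


def fill(q, items):
    """Put every item on the queue and return the queue."""
    for item in items:
        q.put(item)
    return q


def drain(q):
    """Take items off the queue until it is empty."""
    items = []
    while not q.empty():
        items.append(q.get())
    return items


fifo = drain(fill(queue.Queue(), [3, 1, 2]))
lifo = drain(fill(queue.LifoQueue(), [3, 1, 2]))
prio = drain(fill(queue.PriorityQueue(), [3, 1, 2]))

print(fifo)  # [3, 1, 2]  insertion order
print(lifo)  # [2, 1, 3]  last in, first out
print(prio)  # [1, 2, 3]  lowest value first
```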

Shared memory

from multiprocessing import Process, Value, Array

def f(n, a):
    n.value = 3.1415927
    for i in range(len(a)):
        a[i] = -a[i]

if __name__ == '__main__':
    num = Value('d', 0.0)
    arr = Array('i', range(10))

    p = Process(target=f, args=(num, arr))
    p.start()
    p.join()

    print(num.value)
    print(arr[:])

- Data structures supported

  - Value

  - Array

- Python data structure wrappers

- Thread/process safe
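Single reads and writes of a Value are protected by its lock, but an update such as += is a read followed by a write and needs the lock held explicitly. A sketch using get_lock(); to keep it runnable in one process, threads stand in for worker processes here (an assumption for illustration), and the same pattern applies when the Value is passed to Process targets.

```python
from multiprocessing import Value
from threading import Thread


def add_many(counter, times):
    for _ in range(times):
        # Hold the Value's own lock across the read-modify-write.
        with counter.get_lock():
            counter.value += 1


def run(workers=4, times=1000):
    counter = Value("i", 0)  # shared C int, starts at 0
    threads = [
        Thread(target=add_many, args=(counter, times))
        for _ in range(workers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


if __name__ == "__main__":
    print(run())
```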

Best practices

- Pass picklable objects to a multiprocessing pipe

- Avoid zombie processes: join() child processes once they finish

- Avoid terminating processes

- Global variable values can differ between processes

- Close pipes once you are done with them

- Use pipes with a context manager
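The last two points can be combined, since Connection objects work as context managers on Python 3.3 and later. The sketch stays inside one process for brevity (an assumption for illustration; normally one end is handed to a child process), and roundtrip is a hypothetical helper.

```python
from multiprocessing import Pipe


def roundtrip(obj):
    """Send a picklable object through a Pipe and close both ends."""
    # duplex=False gives a one-way pipe: the first end only receives,
    # the second end only sends.
    receiver, sender = Pipe(duplex=False)
    with receiver, sender:        # both ends are closed on exit
        sender.send(obj)          # obj is pickled here
        result = receiver.recv()  # and unpickled here
    return result, receiver.closed, sender.closed


if __name__ == "__main__":
    print(roundtrip({"Status": True}))
```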

Contact me

@hitul007

http://hitul.in

Thank you

Copy of Multithreading & Multiprocessing

By Eder Rafo Jose Pariona Espiñal
