Cortical representation learning & Bayes-optimal cue integration

Deperrois, N., Petrovici, M.A., Senn, W., & Jordan, J. (2021). Learning cortical representations through perturbed and adversarial dreaming. arXiv preprint arXiv:2109.04261.

Jordan, J., Sacramento, J., Wybo, W. A., Petrovici, M. A., & Senn, W. (2021). Learning Bayes-optimal dendritic opinion pooling. arXiv preprint arXiv:2104.13238.

Jakob Jordan

Department of Physiology, University of Bern, Switzerland

03.03.2022, SCN Retreat, Crans-Montana, Switzerland

- structured neuronal representations underlie our capacity to behave successfully, and our fast/flexible learning capabilities

- latent representations emerge from unsupervised learning principles

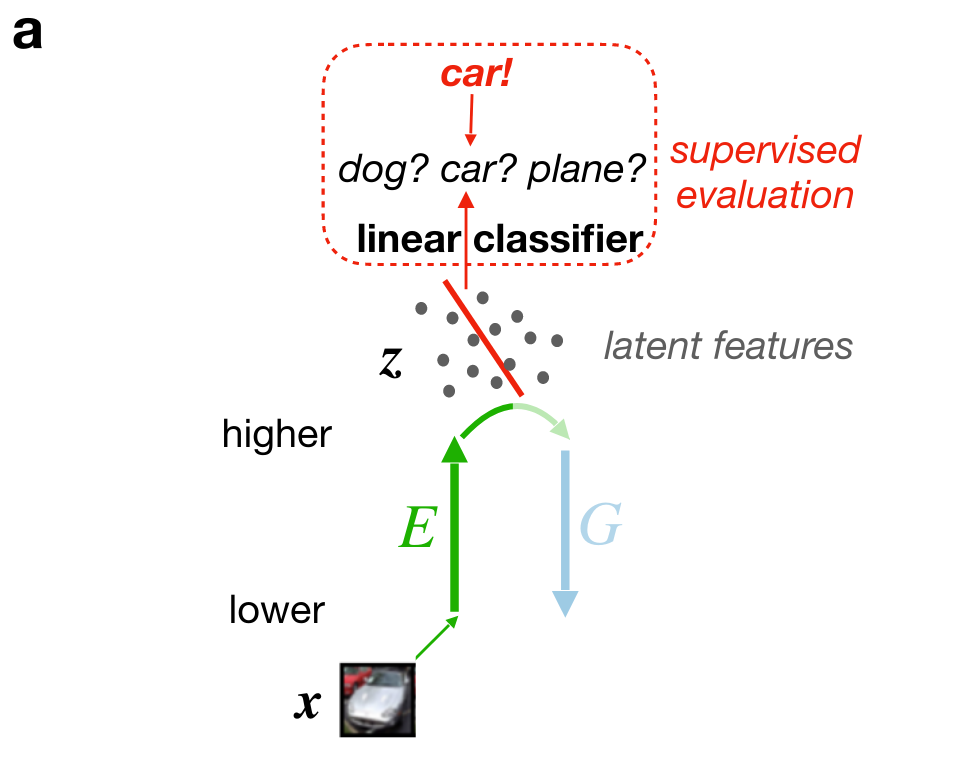

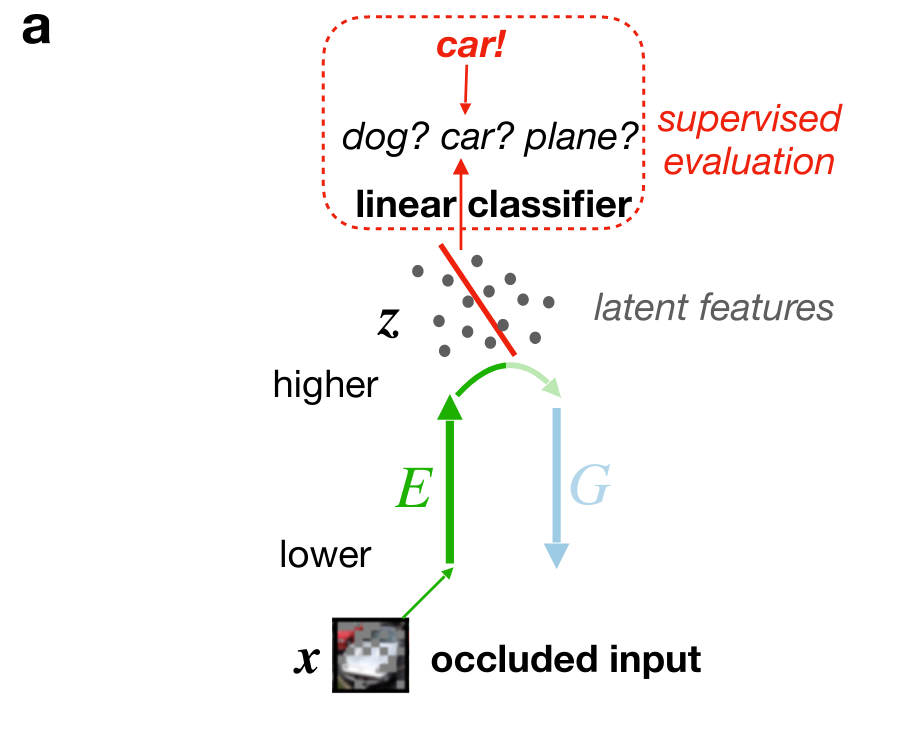

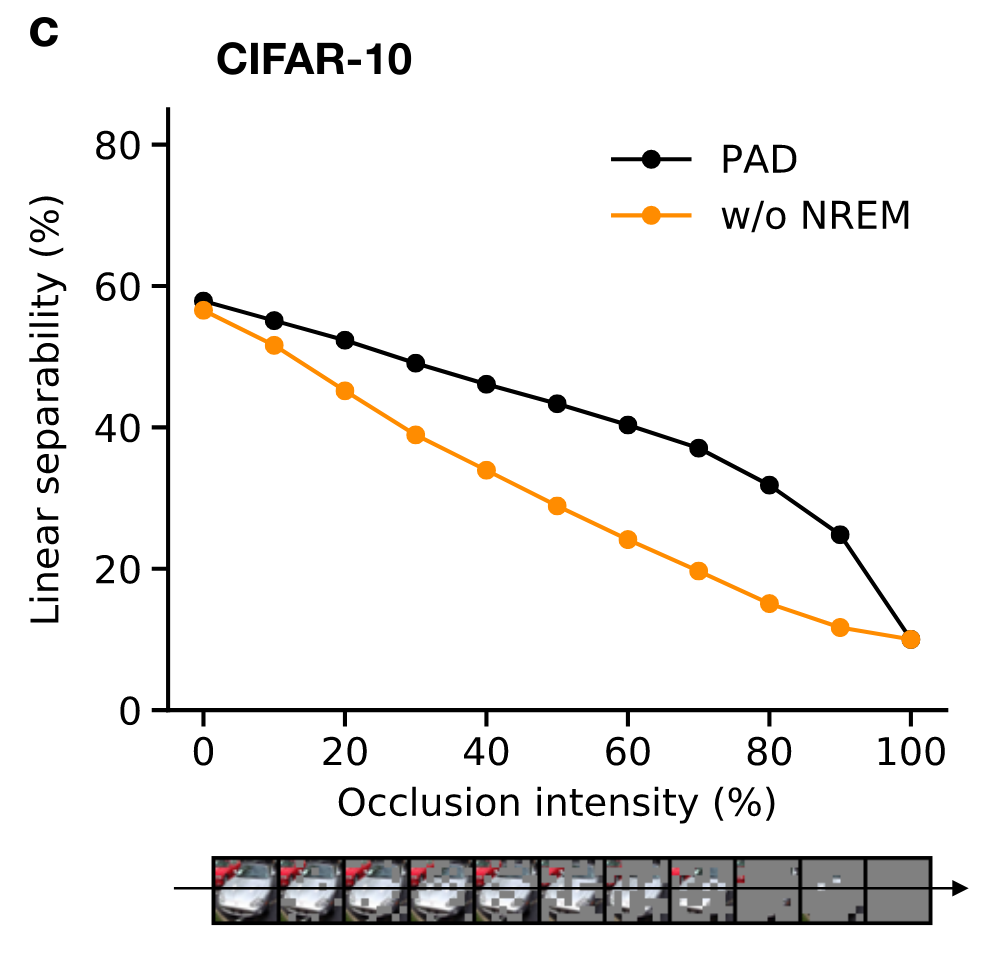

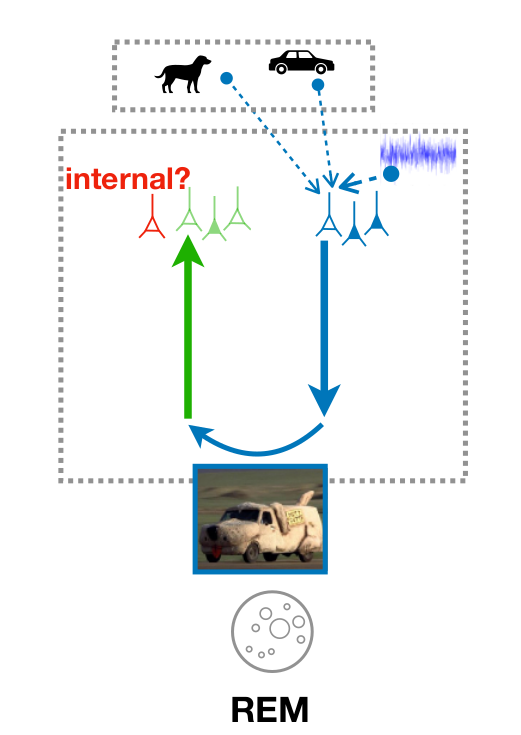

- here: we propose that sleep and in particular REM dreams implement adversarial learning

Motivation

[Illing et al., 2021]

[Goetschalckx et al., 2021]

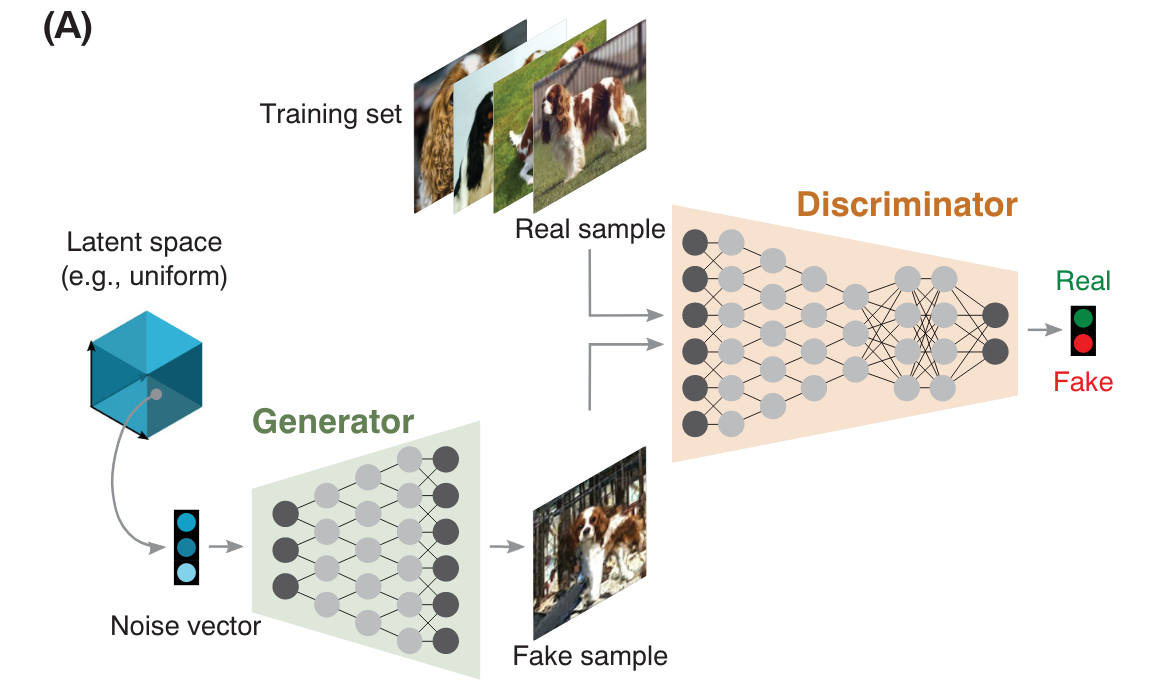

Generative Adversarial Networks

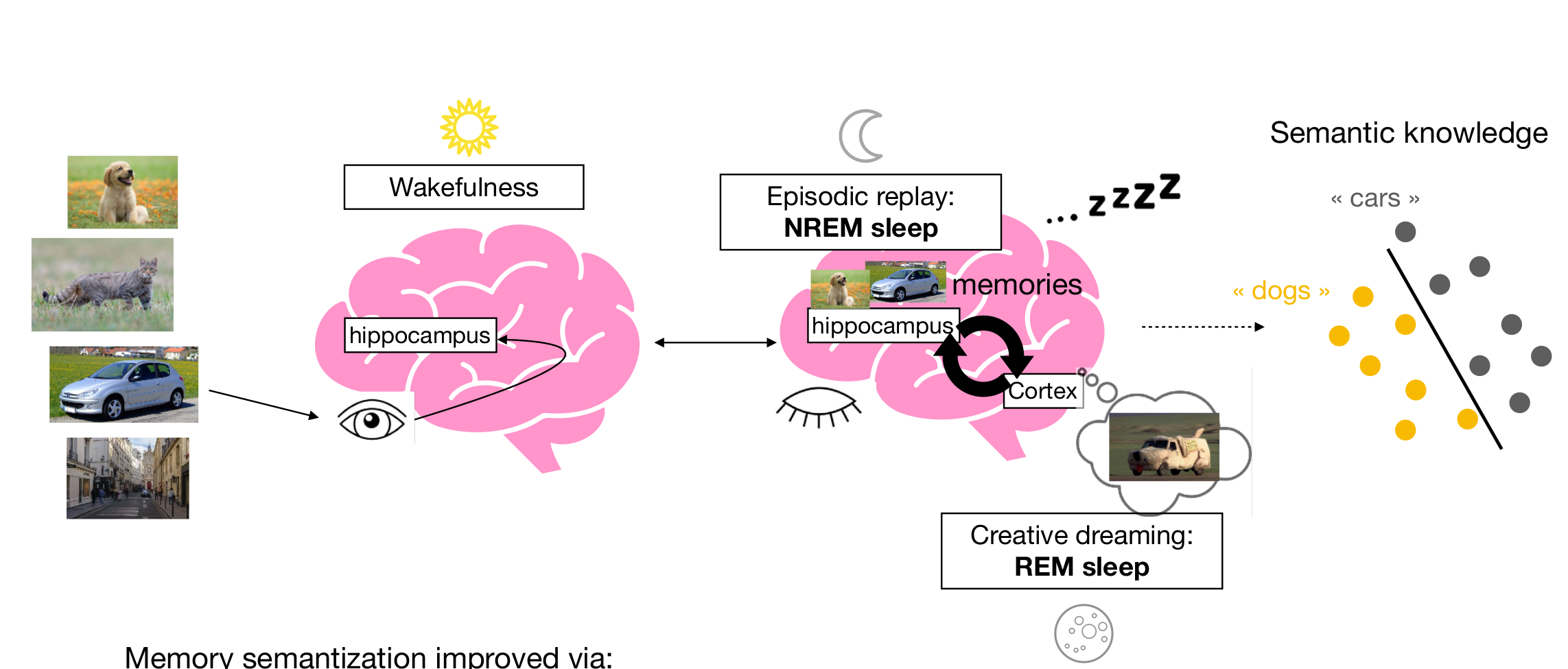

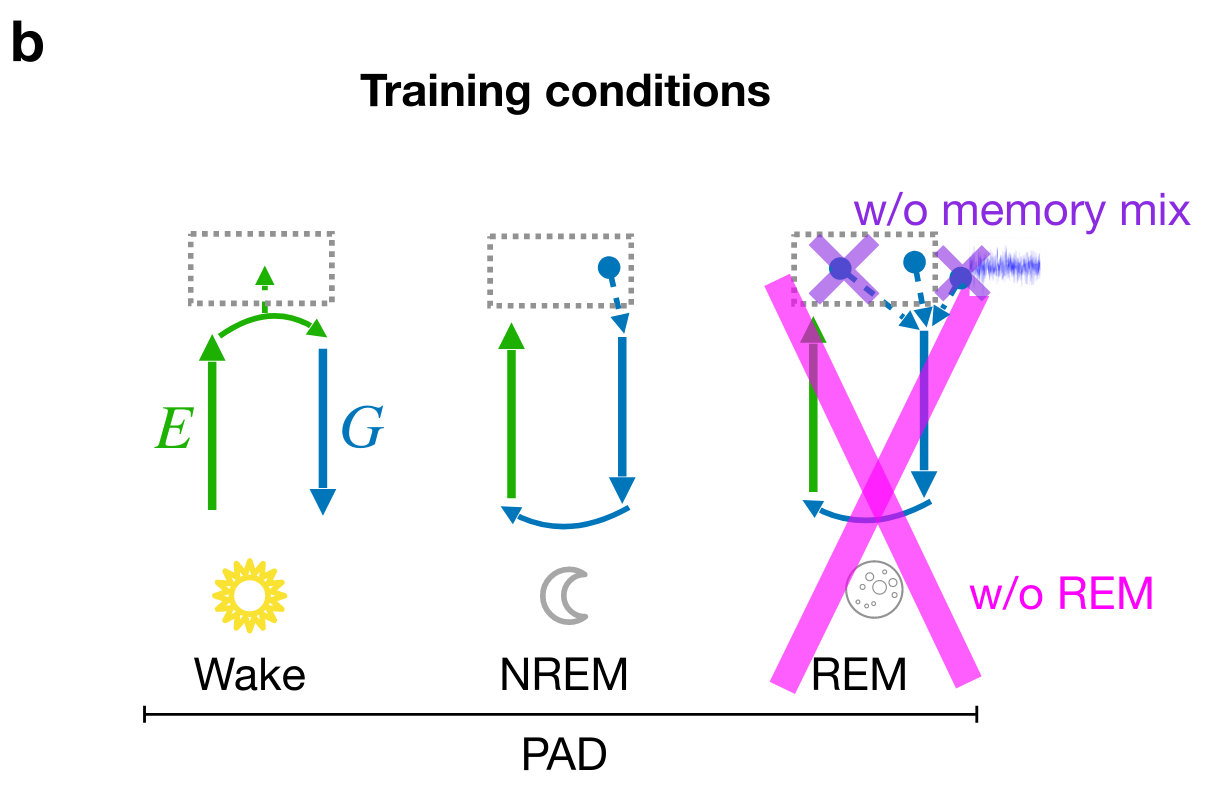

Learning during sleep (sketch)

- sensory experiences are encoded & stored during wakefulness

- perturbed replay during NREM robustifies encoding

- "creative" replay during REM organizes encoding space

Nicolas Deperrois

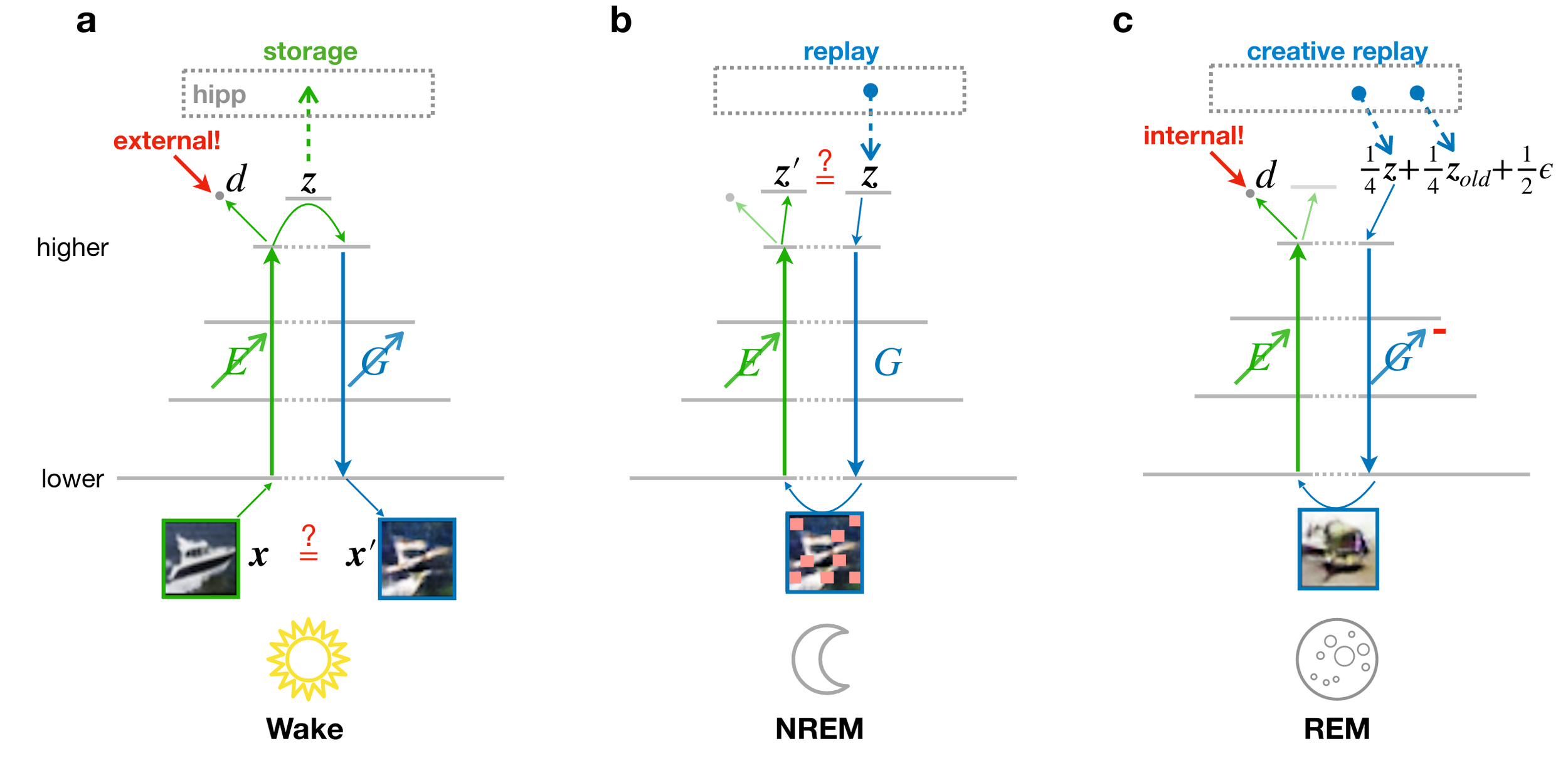

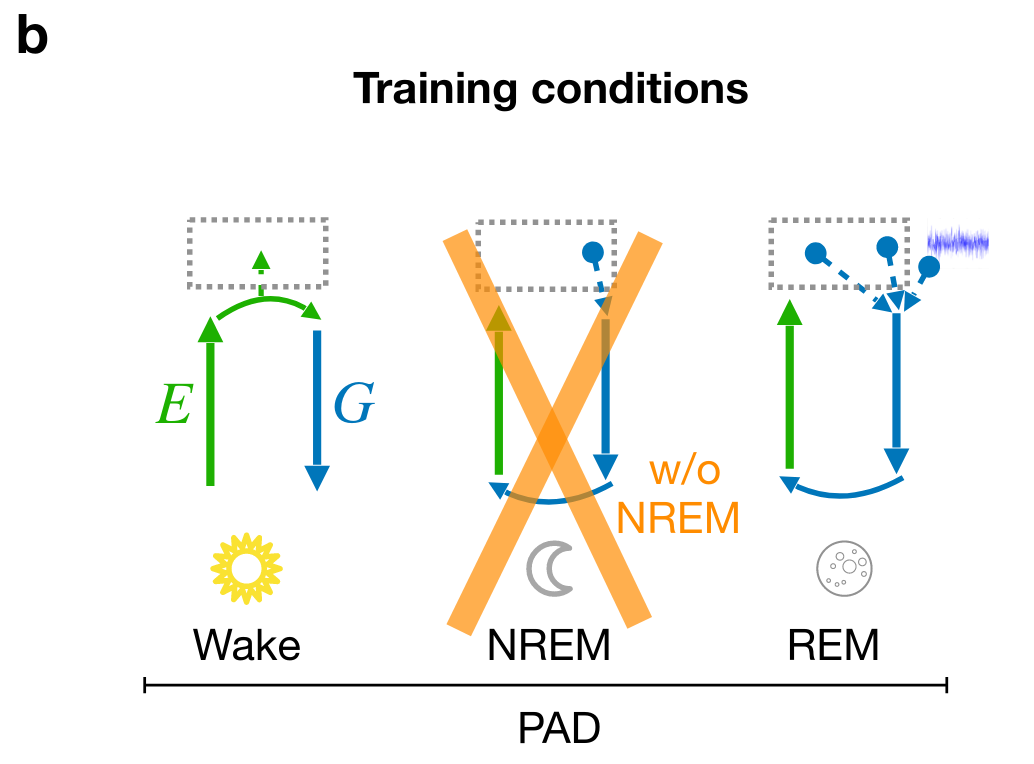

Different, but complementary objectives govern learning during wakefulness and sleep

Objectives:

- discriminator: external

- data reconstruction

- latent reconstruction

- discriminator: internal

- generator: external

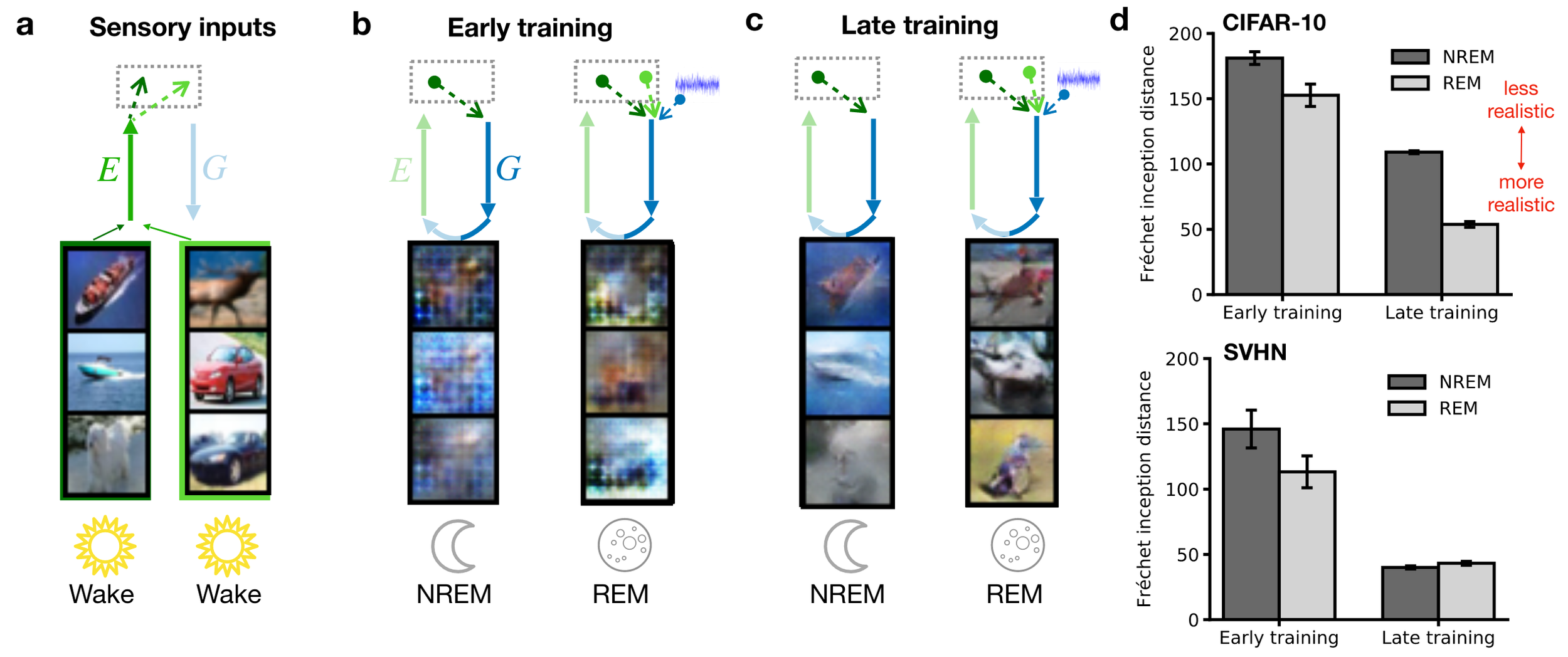

Dreams become more realistic with training

(remark: FID uses an Inception-v3 network, hence likely focuses on local image statistics, e.g., Brendel & Bethge, 2019)

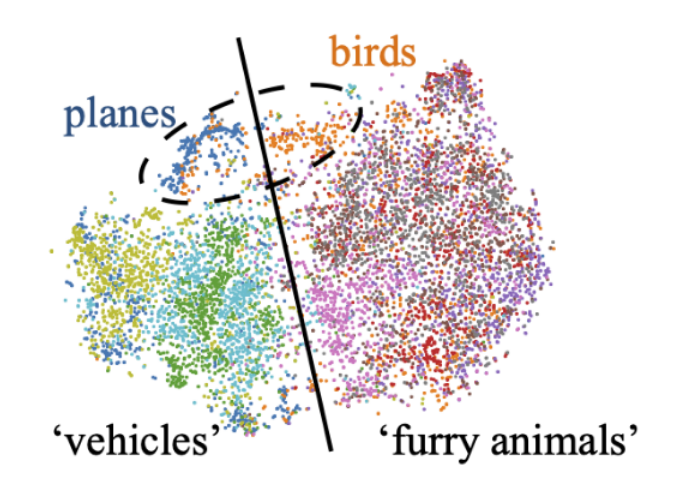

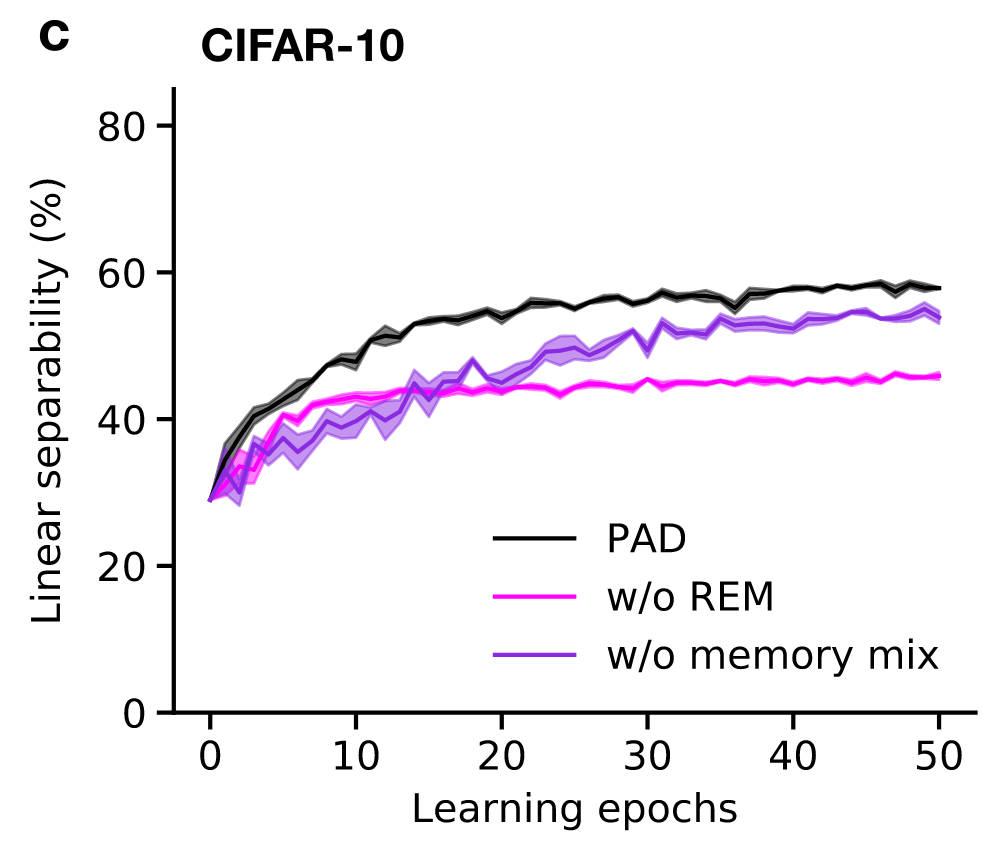

Adversarial dreaming during REM improves the structure of latent representations

Perturbed dreaming during NREM improves the robustness of latent representations

Cortical correlates of adversarial learning

- (trained) discriminator neurons are in different activity regimes during wakefulness and REM sleep; they distinguish externally from internally generated activity and hence may be involved in reality monitoring systems (ACC, mPFC?); impaired discriminator function could thus lead to the formation of delusions

- discriminator learning predicts opposite weight changes on feedforward synapses during wakefulness and REM sleep for identical low-level activity

- generator learning predicts opposite plasticity rules on feedback synapses during wakefulness and REM sleep
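The sign flip in discriminator learning can be illustrated with a toy model. This is a minimal sketch, assuming a single-layer logistic discriminator trained by gradient ascent on the log-likelihood of its label (1 = external during wakefulness, 0 = internal during REM); the function names, the weights and the input are hypothetical and much simpler than the deep network used in the paper.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def discriminator_update(w, x, label, lr=0.1):
    """Weight change of a logistic discriminator for one input x.

    Gradient ascent on label*log(d) + (1-label)*log(1-d) with d = sigmoid(w.x)
    gives dw = lr * (label - d) * x.
    """
    d = sigmoid(w @ x)
    return lr * (label - d) * x

# Hypothetical feedforward weights and identical low-level activity in both phases.
w = np.array([0.2, -0.1, 0.4])
x = np.array([1.0, 0.5, 2.0])

dw_wake = discriminator_update(w, x, label=1.0)  # wakefulness: input labeled external
dw_rem = discriminator_update(w, x, label=0.0)   # REM: input labeled internal

print("wake:", dw_wake)
print("REM: ", dw_rem)
```

Because the discriminator output lies strictly between 0 and 1, the factor `(label - d)` is positive for the external label and negative for the internal one, so the same presynaptic activity drives weight changes of opposite sign.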

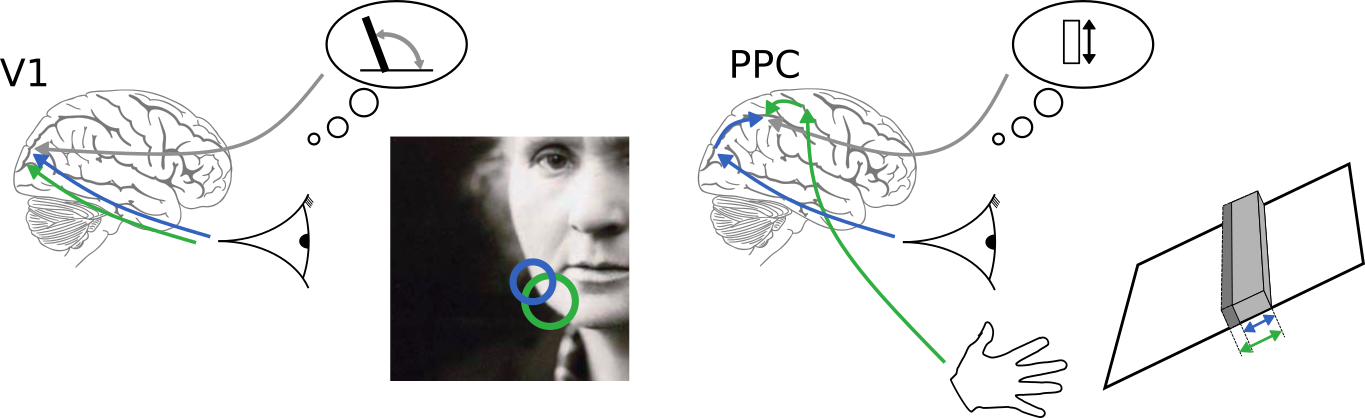

(High-level) neuronal representations reflect contributions from multiple modalities

How to (optimally) combine uncertain information from different sources?

visual

auditory

olfactory

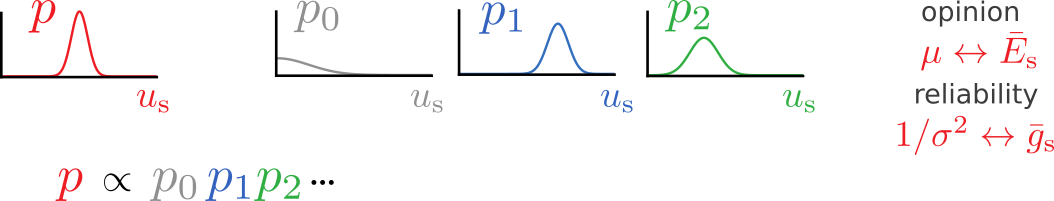

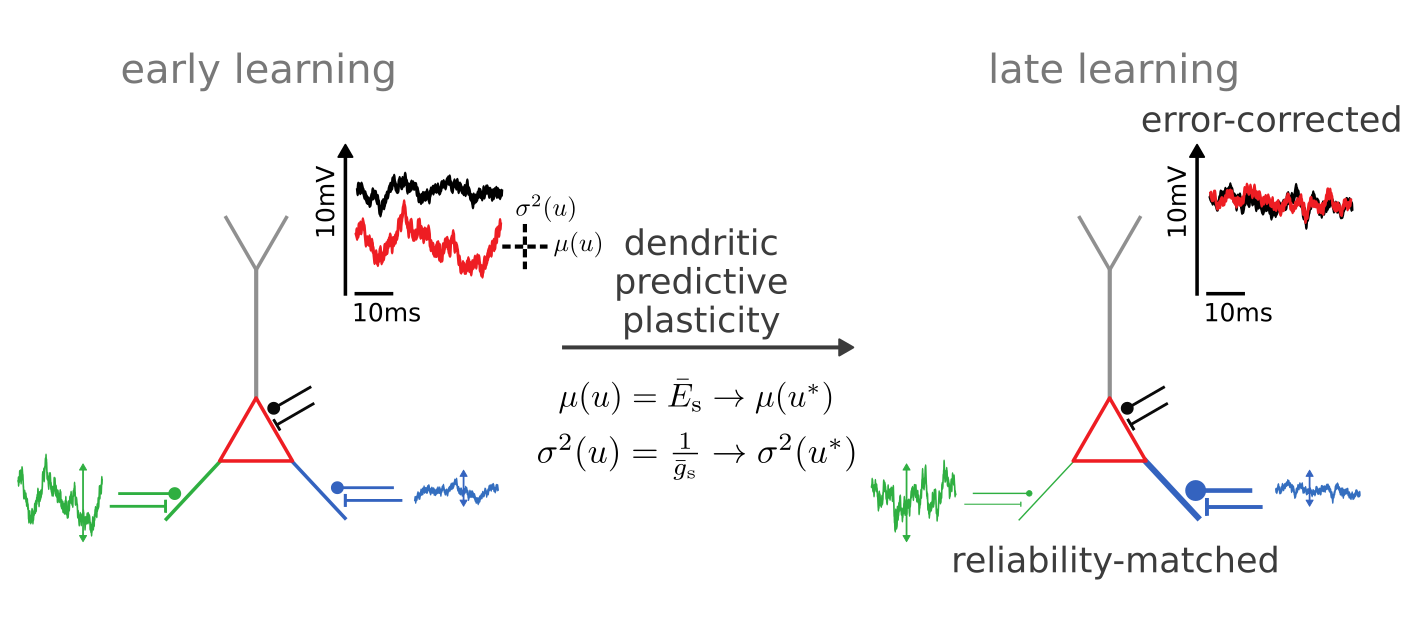

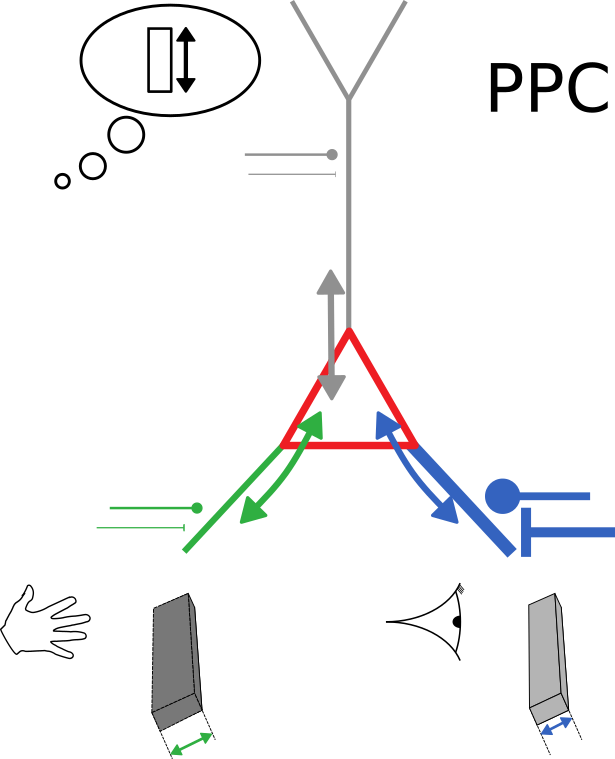

Neurons with conductance-based synapses naturally implement probabilistic cue integration
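The correspondence can be checked numerically. A minimal sketch, assuming independent Gaussian cues and a point neuron at steady state; identifying conductances with reliabilities and reversal potentials with cue means is the illustrative assumption here, and all names and numbers are hypothetical.

```python
import numpy as np

def fuse_gaussian_cues(means, reliabilities):
    """Bayes-optimal fusion of independent Gaussian cues (flat prior).

    Reliability = inverse variance. Returns posterior mean and variance.
    """
    mu = np.asarray(means, dtype=float)
    r = np.asarray(reliabilities, dtype=float)
    total = r.sum()
    return (r * mu).sum() / total, 1.0 / total

def steady_state_potential(conductances, reversal_potentials):
    """Steady state of C du/dt = sum_i g_i (E_i - u)."""
    g = np.asarray(conductances, dtype=float)
    e = np.asarray(reversal_potentials, dtype=float)
    return (g * e).sum() / g.sum()

# Two hypothetical cues: the second is three times more reliable.
cue_means = [-60.0, -50.0]
cue_reliabilities = [1.0, 3.0]

post_mean, post_var = fuse_gaussian_cues(cue_means, cue_reliabilities)
u_ss = steady_state_potential(cue_reliabilities, cue_means)

print(post_mean, post_var, u_ss)
```

Both expressions are the same conductance-weighted (reliability-weighted) average, so the steady-state potential coincides with the posterior mean.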

An observation

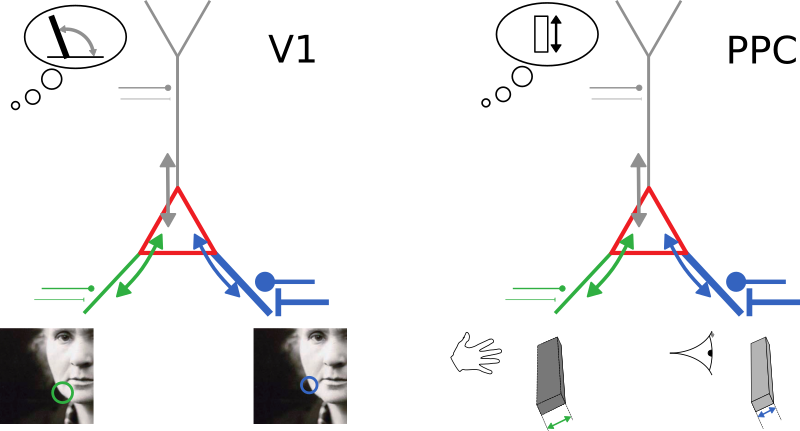

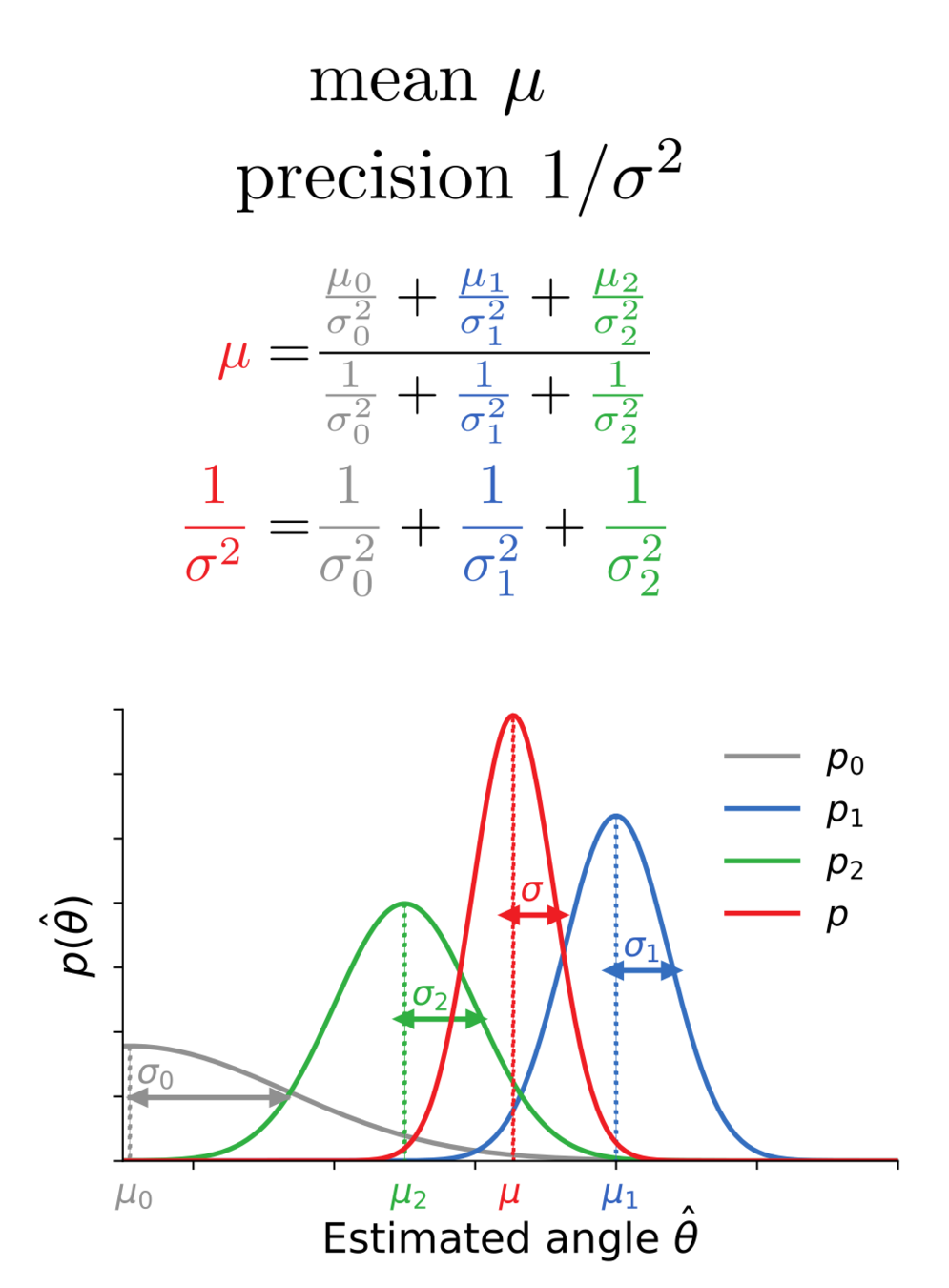

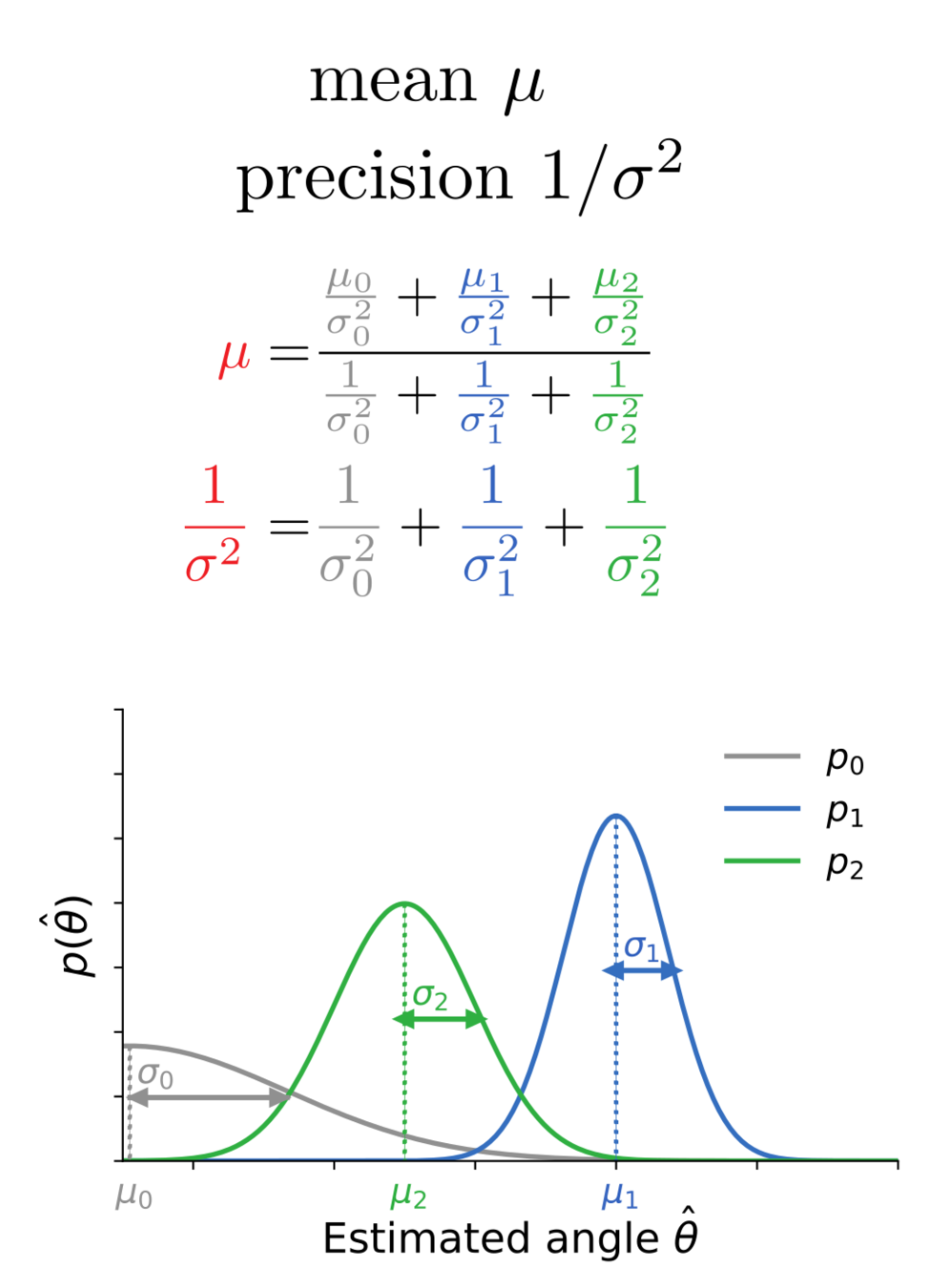

Bayes-optimal inference

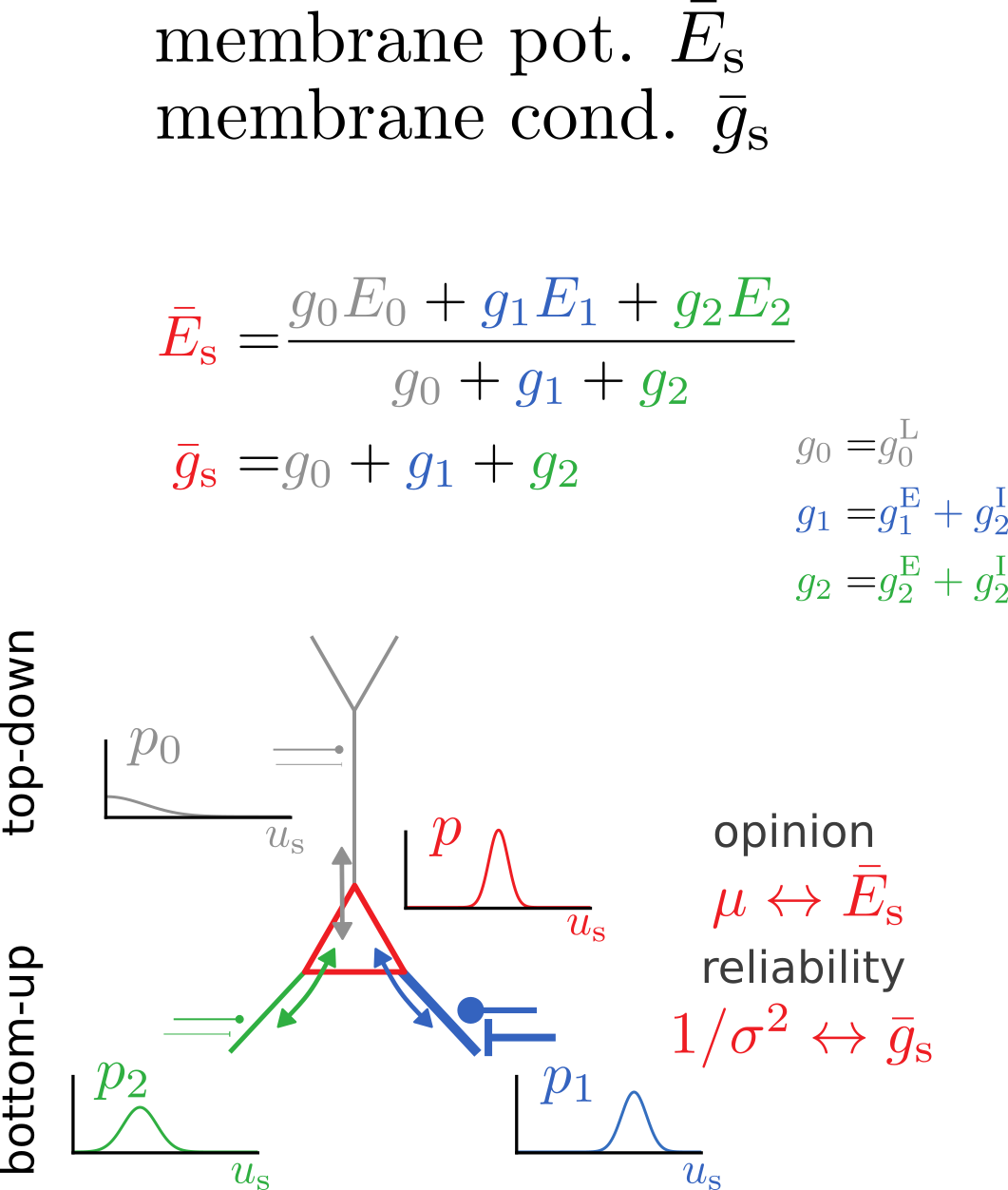

Bidirectional voltage dynamics

Membrane potential dynamics from noisy gradient ascent

Average membrane potentials = reliability-weighted opinions

Membrane potential variance = 1/total reliability
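Both statements can be checked in simulation. A minimal sketch, assuming the noisy gradient ascent is a Langevin-type process on the log of a product of Gaussian opinions, integrated with the Euler-Maruyama scheme; the discretization, parameter values and names are illustrative assumptions, not the paper's neuron model.

```python
import numpy as np

def simulate_noisy_gradient_ascent(means, reliabilities, n_chains=4000,
                                   n_steps=400, step=0.05, seed=0):
    """Run independent Langevin chains and return their final potentials.

    log p(u) = -sum_i r_i/2 * (u - mu_i)^2 + const, so
    d/du log p(u) = R * (u_bar - u), with total reliability R and
    reliability-weighted mean u_bar.
    """
    rng = np.random.default_rng(seed)
    mu = np.asarray(means, dtype=float)
    r = np.asarray(reliabilities, dtype=float)
    total = r.sum()
    pooled = (r * mu).sum() / total
    eta = step / total  # each update relaxes a fraction `step` toward u_bar
    u = np.zeros(n_chains)
    for _ in range(n_steps):
        grad = total * (pooled - u)
        u = u + eta * grad + np.sqrt(2.0 * eta) * rng.standard_normal(n_chains)
    return u

# Two hypothetical opinions with reliabilities 1 and 3.
u_samples = simulate_noisy_gradient_ascent(means=[0.0, 1.0], reliabilities=[1.0, 3.0])

print("mean:", u_samples.mean(), "(reliability-weighted opinion: 0.75)")
print("var: ", u_samples.var(), "(1/total reliability: 0.25)")
```

The finite step size inflates the stationary variance slightly (by a factor 2/(2 - step) for this linear case), so the match to 1/total reliability is approximate.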

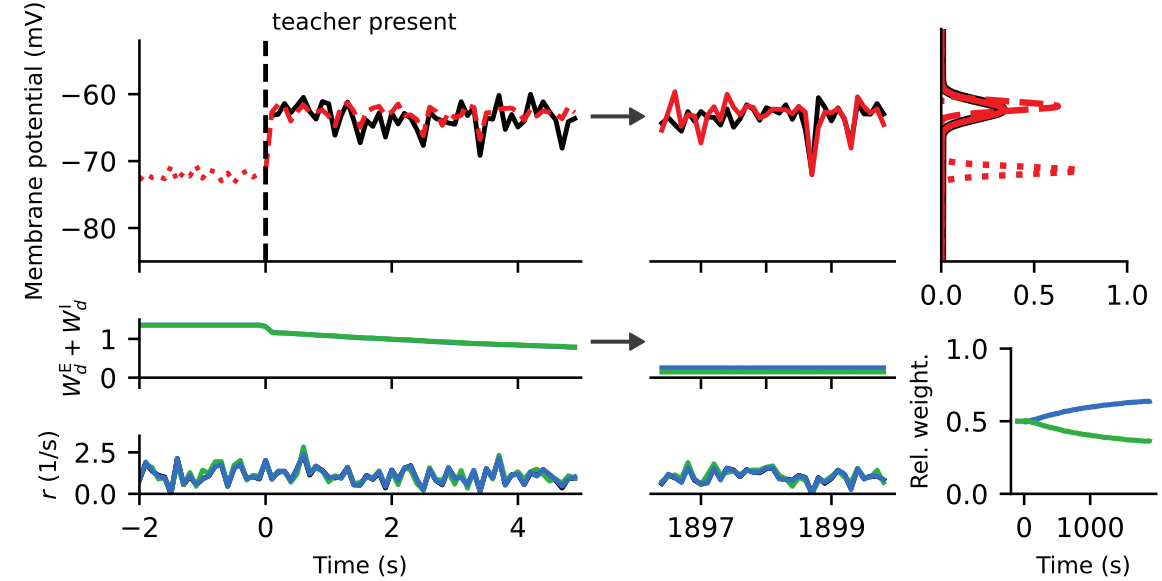

Synaptic plasticity from stochastic gradient ascent

Synaptic plasticity modifies excitatory/inhibitory synapses

- in approx. opposite directions to match the mean

- in identical directions to match the variance
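The two directions of change can be made explicit. A minimal sketch, assuming a Gaussian somatic distribution whose mean is the conductance-weighted average of two reversal potentials and whose variance is 1/total conductance, with stochastic gradient ascent on the log-likelihood of target samples; leak, presynaptic rates and scaling constants are omitted, and all names and values are hypothetical.

```python
import numpy as np

E_EXC, E_INH = 1.0, -1.0  # hypothetical reversal potentials (arbitrary units)

def pooled_stats(g_exc, g_inh):
    """Mean and variance of the somatic potential for given conductances."""
    total = g_exc + g_inh
    return (g_exc * E_EXC + g_inh * E_INH) / total, 1.0 / total

def plasticity_terms(g_exc, g_inh, u_target):
    """Gradient of log N(u_target; mean, var) w.r.t. the two conductances,
    split into a mean-matching and a variance-matching part."""
    mean, var = pooled_stats(g_exc, g_inh)
    err = u_target - mean
    mean_exc = err * (E_EXC - mean)    # E_EXC > mean: same sign as err
    mean_inh = err * (E_INH - mean)    # E_INH < mean: opposite sign to err
    var_term = 0.5 * (var - err ** 2)  # identical for both synapse types
    return mean_exc, mean_inh, var_term

def plasticity_step(g_exc, g_inh, u_target, lr):
    mean_exc, mean_inh, var_term = plasticity_terms(g_exc, g_inh, u_target)
    # Conductances are kept positive by clipping (an implementation choice).
    g_exc = max(g_exc + lr * (mean_exc + var_term), 1e-3)
    g_inh = max(g_inh + lr * (mean_inh + var_term), 1e-3)
    return g_exc, g_inh

def train(target_mean=0.3, target_var=0.25, n_steps=100_000, lr=0.002, seed=0):
    rng = np.random.default_rng(seed)
    samples = rng.normal(target_mean, np.sqrt(target_var), size=n_steps)
    g_exc, g_inh = 1.0, 1.0
    for u_target in samples:
        g_exc, g_inh = plasticity_step(g_exc, g_inh, u_target, lr)
    return g_exc, g_inh

g_exc, g_inh = train()
learned_mean, learned_var = pooled_stats(g_exc, g_inh)
print("learned mean/var:", learned_mean, learned_var)
```

The mean-matching terms have opposite signs but different magnitudes because the two reversal potentials sit at different distances from the current mean, which is one way to read "approx. opposite" above.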

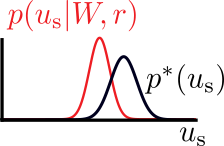

\(u_\text{s}^*\): sample from target distribution \(p^*(u_\text{s})\)

target

actual

Synaptic plasticity performs error-correction and reliability matching

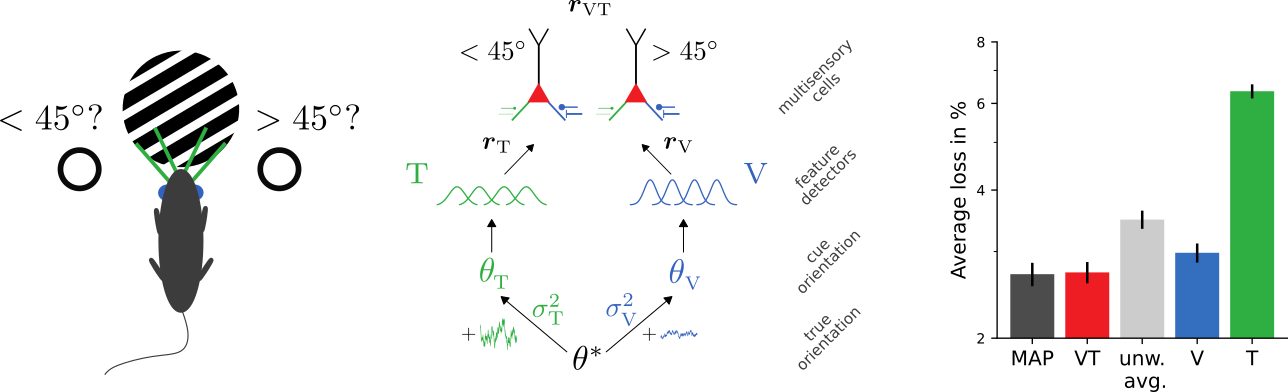

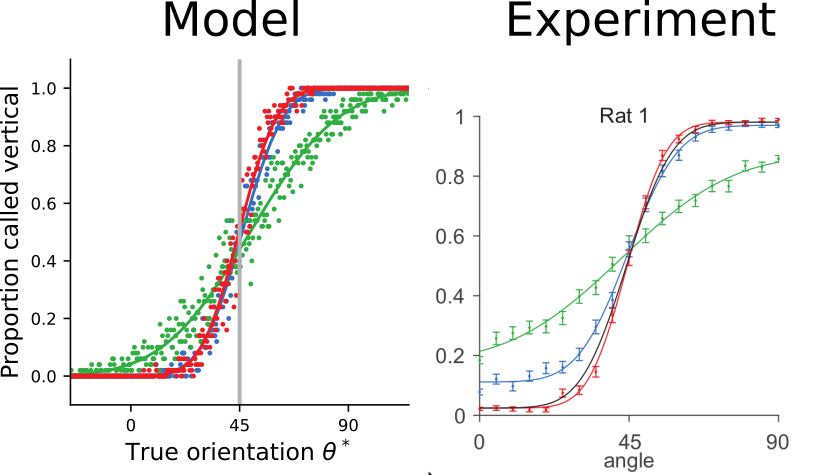

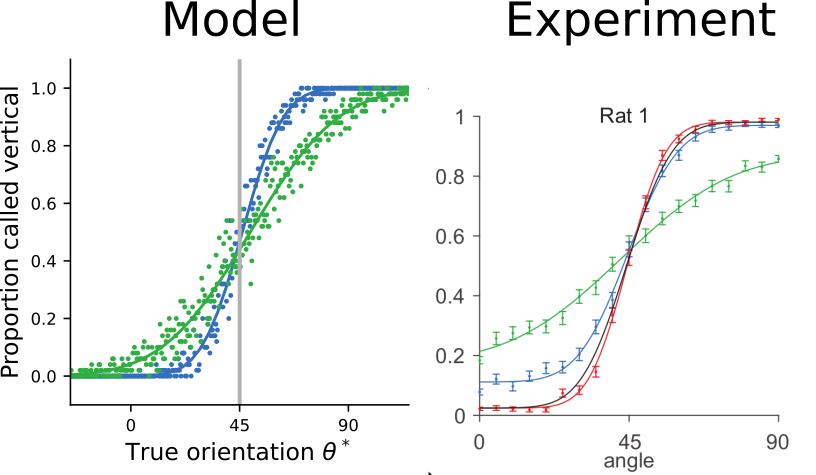

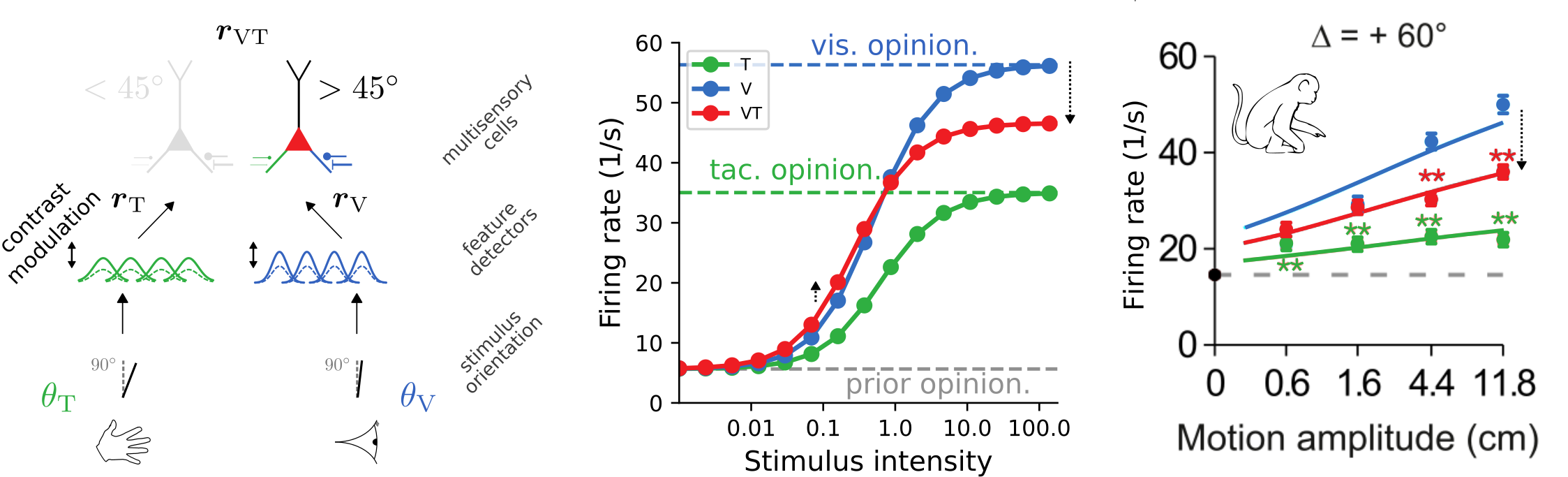

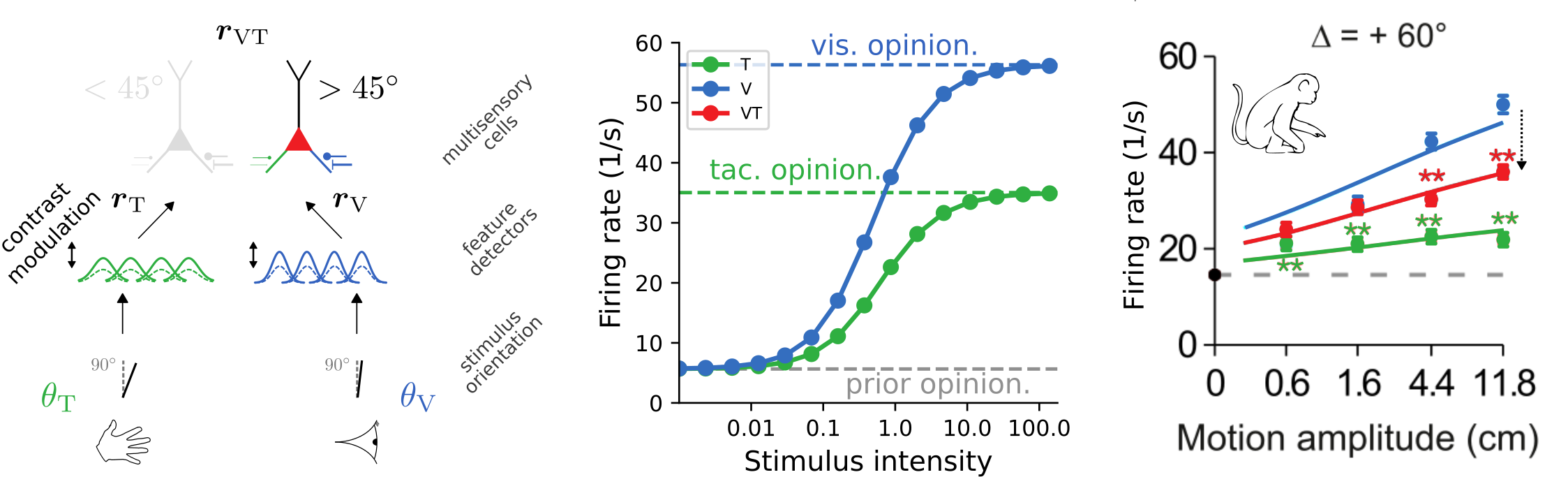

Learning Bayes-optimal inference of orientations from multimodal stimuli

The trained model approximates ideal observers and reproduces psychophysical signatures of experimental data

[Nikbakht et al., 2018]

Cross-modal suppression as reliability-weighted opinion pooling

The trained model exhibits cross-modal suppression:

- at low stimulus intensities, firing rate is larger in bimodal condition

- at high stimulus intensities, firing rate is smaller in bimodal condition

- example prediction for experiments: strength of suppression depends on relative reliabilities of the two modalities
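The crossover can be reproduced with steady-state opinion pooling alone. A minimal sketch, assuming a leak plus two modalities whose conductances scale linearly with stimulus intensity, and using the pooled potential as a stand-in for firing rate; the parameter values and names are hypothetical and not fitted to data.

```python
E_LEAK, E_PREF, E_NONPREF = 0.0, 1.0, 0.4  # hypothetical opinions (arbitrary units)
G_LEAK = 1.0                               # hypothetical leak conductance

def pooled_potential(intensity, bimodal, w_pref=1.0, w_nonpref=1.0):
    """Conductance-weighted average of leak and modality opinions."""
    g_pref = w_pref * intensity
    g_nonpref = w_nonpref * intensity if bimodal else 0.0
    num = G_LEAK * E_LEAK + g_pref * E_PREF + g_nonpref * E_NONPREF
    return num / (G_LEAK + g_pref + g_nonpref)

for c in (0.1, 10.0):
    uni = pooled_potential(c, bimodal=False)
    bi = pooled_potential(c, bimodal=True)
    print(f"intensity {c}: unimodal {uni:.3f}, bimodal {bi:.3f}")
```

At low intensity the potential sits near the leak, so the second modality pulls it up; at high intensity it sits near the preferred opinion, so the weaker second opinion pulls it down. Changing `w_nonpref` relative to `w_pref` changes the strength of the suppression, in line with the prediction about relative reliabilities.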

[Ohshiro et al., 2017]

Summary

Adversarial learning during wakefulness and sleep allows the emergence of organized cortical representations.

Single neurons with conductance-based synapses learn to be optimal cue integrators.

