Ensemble Data Assimilation in High-Dimensional Chaotic Systems: Exploiting Low-Dimensional Structures

Kota Takeda

Nagoya University, Japan

* The author was supported by the RIKEN Junior Research Associate Program and JST SPRING, Grant Number JPMJSP2110.

Self-introduction

Kota Takeda

Assistant Professor at Nagoya University, Japan

Research topics:

- Uncertainty Quantification
- Fluid mechanics
- Data assimilation

SIAM activities:

- (Past) President of the SIAM Student Chapter Kyoto
- Attending SIAM-related conferences held in Japan, Macau, Hong Kong, the U.S., Italy, and Korea
- Publishing in SIAM/JSIAM journals
- and so on...

These slides are shared. Visit my website!

Contents

- Introduction
  - Numerical Weather Prediction
- Background
  - Mathematical formulation, Ensemble Kalman filter
- Recent Studies
  - Literature, Exploiting low-dimensionality, Conjecture
- Numerical Result
  - Result supporting the conjecture
- Summary

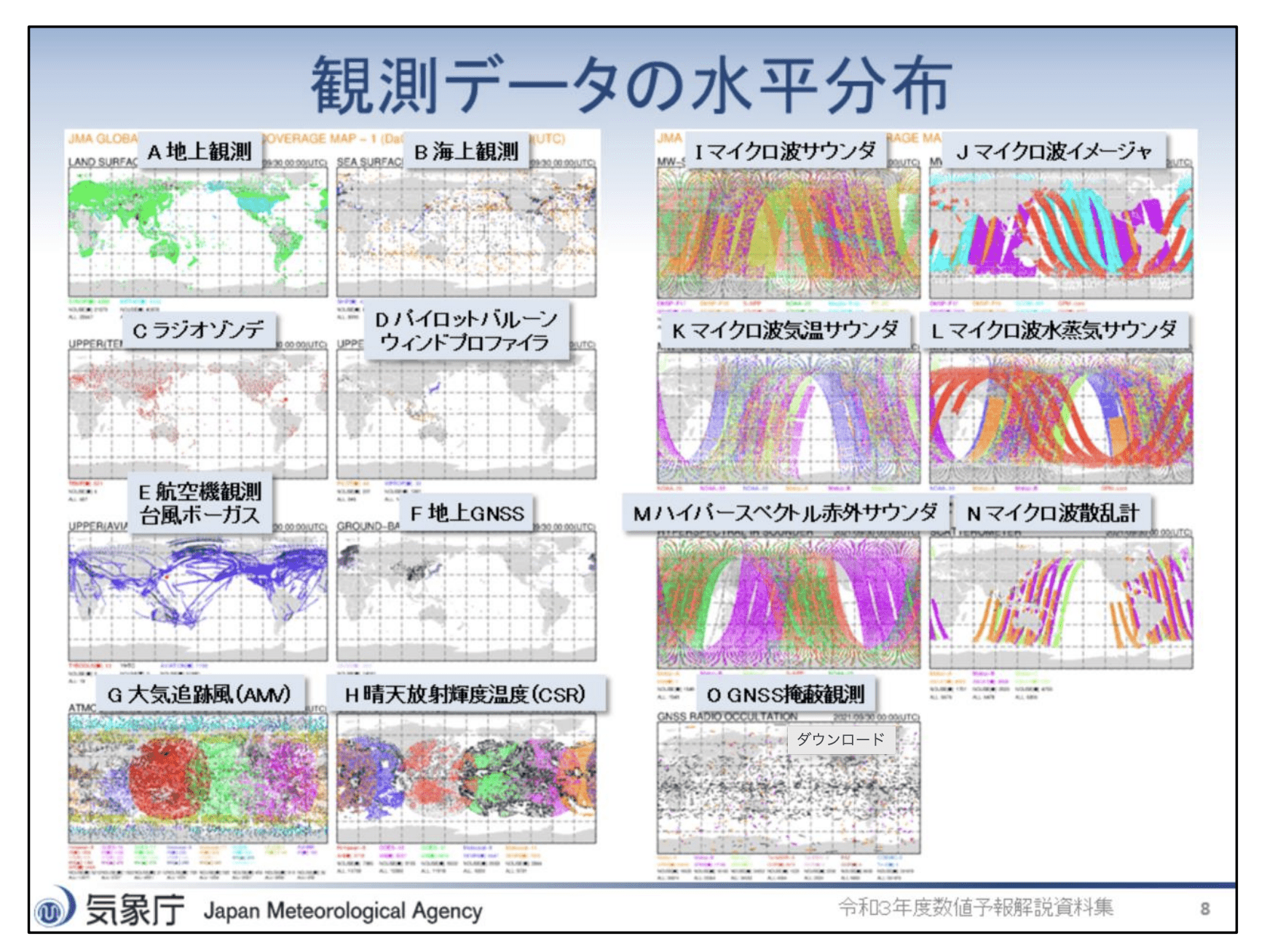

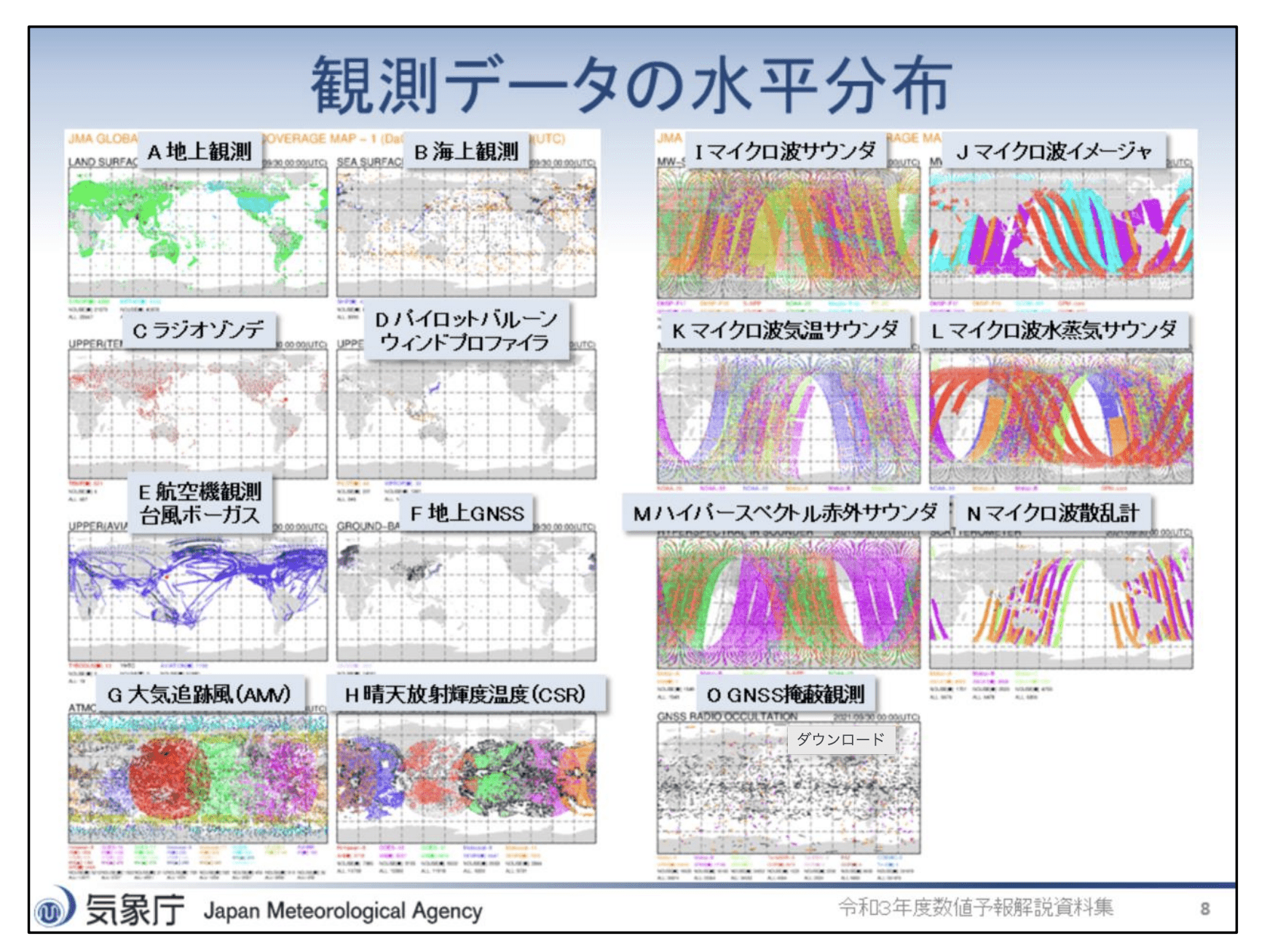

Numerical Weather Prediction

Introduction

Numerical Weather Prediction

Numerical Weather Prediction

State estimation of High dimensional chaotic system

Numerical Weather Prediction

Numerical Weather Prediction

State estimation of High dimensional chaotic system

Unpredictable in long-term

e.g., Typhoon forecast circles

3D grid × variable

Numerical Weather Prediction

Numerical Weather Prediction

State estimation of High dimensional chaotic system

3D grid × variable

We only have partial and noisy observations

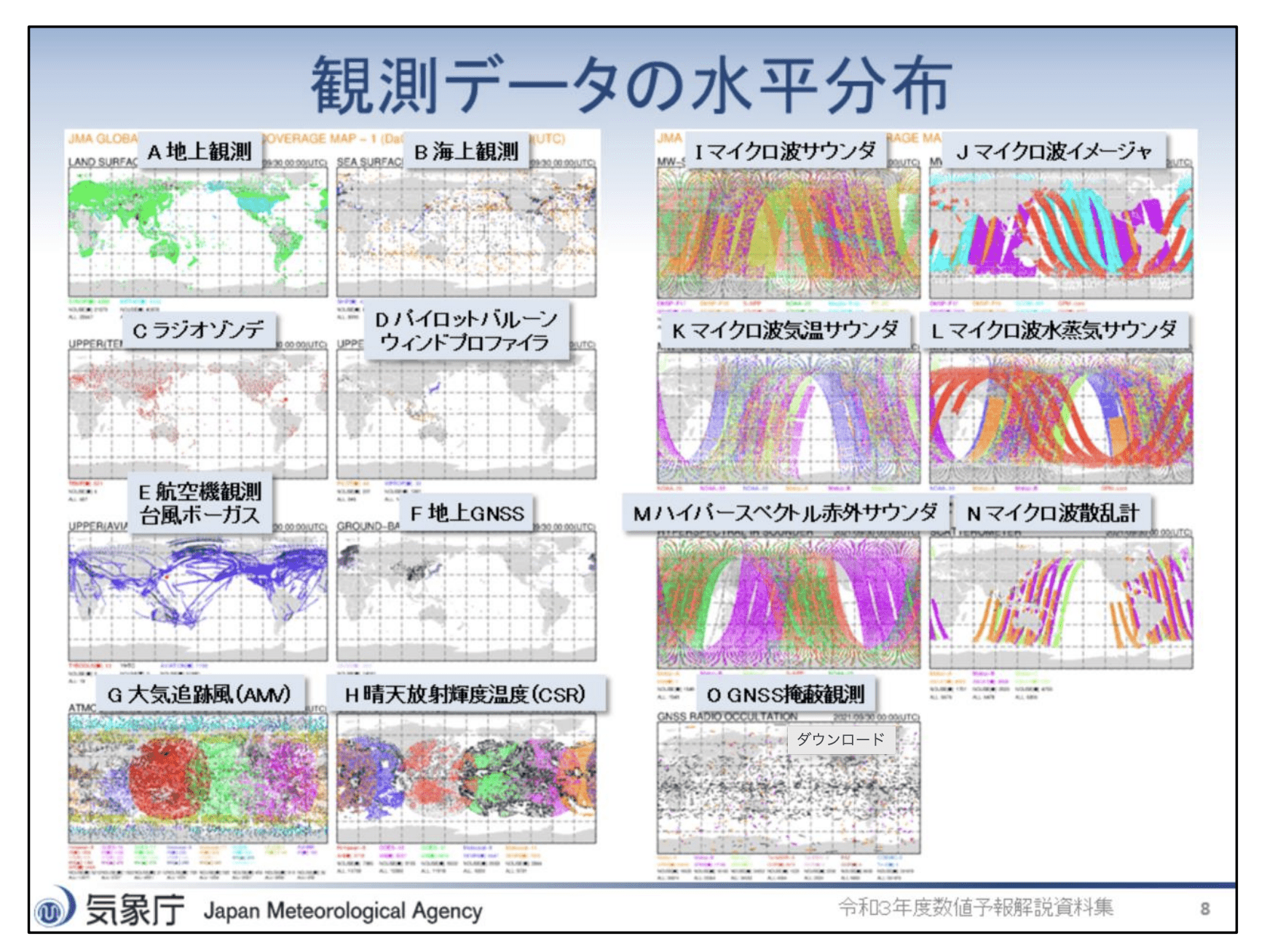

気象庁

Numerical Weather Prediction

Numerical Weather Prediction

State estimation of High dimensional chaotic system

気象庁

noise

partial

idealize

chaotic

3D grid × variable

We only have partial and noisy observations

Numerical Weather Prediction

Numerical Weather Prediction

State estimation of High dimensional chaotic system

We only have partial and noisy observations

気象庁

noise

partial

chaotic

idealize

3D grid × variable


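Background

Mathematical formulations

The known model generates an unknown true trajectory.

- Model dynamics (known): we assume that the solution of the model equation exists and that the solution operators form a semigroup.
- True trajectory (unknown): the solution starting from an unknown initial state.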
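The setting above can be written out as follows. This is a standard formulation. The symbols (u, F, Ψ_t, d) are assumed here because the original notation did not survive extraction.

```latex
\frac{du}{dt} = F(u), \qquad u(0) = u_0 \in \mathbb{R}^d \ (\text{unknown}), \qquad
u(t) = \Psi_t(u_0), \qquad \Psi_0 = \mathrm{Id}, \qquad \Psi_{t+s} = \Psi_t \circ \Psi_s \quad (t, s \ge 0).
```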

We have noisy observations at discrete time steps, separated by a fixed observation interval.

- Discrete-time model (known): the true state at each observation time is obtained by evolving the previous one with the model over one observation interval.
- Observation: a partial observation of the unknown true state, corrupted by Gaussian noise.
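Such synthetic data can be generated with a short routine: a true trajectory from the discrete-time model, plus partial, noisy observations. This is a minimal sketch under three assumptions: a linear observation matrix `H`, isotropic Gaussian noise with standard deviation `r`, and `step` standing for the known model evolved over one observation interval. The function `generate_synthetic_data` and its arguments are hypothetical names.

```python
import numpy as np

def generate_synthetic_data(step, u0, H, r, n_steps, rng):
    """Generate a true trajectory and partial, noisy observations (hypothetical helper).

    step    : callable mapping the state at one observation time to the next
    u0      : (d,) initial state (unknown to the filter)
    H       : (p, d) observation matrix selecting the observed components
    r       : standard deviation of the Gaussian observation noise
    n_steps : number of observation times
    rng     : numpy random Generator
    """
    d, p = u0.shape[0], H.shape[0]
    truth = np.empty((n_steps + 1, d))
    obs = np.empty((n_steps, p))
    truth[0] = u0
    for n in range(1, n_steps + 1):
        truth[n] = step(truth[n - 1])                             # discrete-time model
        obs[n - 1] = H @ truth[n] + r * rng.standard_normal(p)    # partial + Gaussian noise
    return truth, obs
```

Only `obs` would be handed to the filter. `truth` is kept solely to measure the estimation error afterwards.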

Sequential state estimation using the 'background' information

Filtering problem

- Given: the observations up to the current time.
- Estimate: the current (unknown) true state.
- Known: the model and the obs. noise distribution ('background info.').

Bayesian data assimilation: approximate the conditional distribution of the current state given the observations.
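The filtering problem and its Bayesian target can be stated compactly. The notation is assumed here: y_n is the observation at step n, Y_n the observations up to step n, and u_n the true state.

```latex
Y_n := \{y_1, \dots, y_n\}, \qquad
\text{given } Y_n, \ \text{estimate } u_n, \qquad
\text{Bayesian DA approximates } \pi_n := \mathbb{P}(u_n \in \cdot \mid Y_n).
```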

Estimating the current state from all past observations at once is expensive. Instead, we construct the estimates using a recursive update of the conditional distribution for efficiency!

Introducing an auxiliary variable, the 'prediction', splits each update into two steps.

(I) Prediction: evolve the previous conditional distribution by the model.
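For a deterministic model, the prediction step is the pushforward of the previous conditional distribution. The notation is assumed here: Ψ_h is the model over one observation interval h, and π_{n-1} is the previous conditional distribution.

```latex
\widehat{\pi}_n := \mathbb{P}(u_n \in \cdot \mid Y_{n-1}) = (\Psi_h)_{\#}\,\pi_{n-1},
\qquad \text{i.e.} \qquad
\widehat{\pi}_n(A) = \pi_{n-1}\big(\Psi_h^{-1}(A)\big).
```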

(II) Analysis: update the prediction by Bayes' rule, i.e., multiply it by the likelihood function of the new observation and normalize.
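Bayes' rule can be written out as follows. This continues the assumed notation and additionally assumes that densities exist and that the observation is y_n = H u_n + η_n with η_n ~ N(0, R). The symbols H and R are assumed as well.

```latex
\pi_n(u) = \frac{\ell(y_n \mid u)\, \widehat{\pi}_n(u)}{\int \ell(y_n \mid v)\, \widehat{\pi}_n(v)\, dv},
\qquad
\ell(y_n \mid u) \propto \exp\Big(-\tfrac{1}{2}\,(y_n - H u)^{\top} R^{-1} (y_n - H u)\Big).
```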

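Repeat (I) & (II): prediction → analysis → prediction → ...

Proposition: n iterations of (I) & (II) yield the conditional distribution of the state at step n given the first n observations.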
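In the linear-Gaussian case both steps stay Gaussian, so the recursion can be done exactly in closed form: this is the Kalman filter. The sketch below is a minimal scalar example and only illustrates (I) & (II); it is not the talk's setting. It assumes a hypothetical model u_n = a u_{n-1}, direct observations y_n = u_n + η_n with noise variance r², and a Gaussian initial distribution N(m0, p0).

```python
def kalman_filter_scalar(a, m0, p0, r, observations):
    """Exact recursive filtering for u_n = a*u_{n-1}, y_n = u_n + noise (variance r**2).

    Returns the analysis means and variances after each observation.
    """
    m, p = m0, p0
    means, variances = [], []
    for y in observations:
        # (I) Prediction: push the Gaussian N(m, p) through the linear model.
        m_pred, p_pred = a * m, a * a * p
        # (II) Analysis: Bayes' rule with a Gaussian likelihood (Kalman update).
        k = p_pred / (p_pred + r * r)
        m = m_pred + k * (y - m_pred)
        p = (1.0 - k) * p_pred
        means.append(m)
        variances.append(p)
    return means, variances
```

Consider a = 1, a N(0, 1) prior and two unit-variance observations both equal to 1.0. The sequential result is mean 2/3 and variance 1/3, the same as conditioning on both observations at once, as the proposition states.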

Ensemble Kalman filter (EnKF)

A major ensemble data assimilation algorithm (Evensen2009).

- 'Ensemble' → approximate the distribution by an ensemble, i.e., a set of particles (samples)!
- 'Kalman' → Gaussian approximation → correct the mean and covariance using the observation.

Repeat (I) & (II)...

- (I) Prediction: just evolve each sample by the model.
- (II) Analysis: correct the samples based on least squares.

The number of samples is called the ensemble size.
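One EnKF cycle can be sketched in a few lines. This minimal sketch assumes a linear observation matrix `H` and a Gaussian noise covariance `R`. For the analysis it uses the perturbed-observation variant (Burgers+1998), which is only one of several EnKF analysis schemes; square-root variants such as (Bishop+2001) and (Anderson 2001) update the samples differently. The function names are hypothetical.

```python
import numpy as np

def enkf_forecast(ensemble, step):
    """(I) Prediction: evolve each sample (row) with the model map `step`."""
    return np.array([step(u) for u in ensemble])

def enkf_po_analysis(ensemble, y, H, R, rng):
    """(II) Analysis: perturbed-observation EnKF update.

    ensemble : (m, d) forecast samples, m = ensemble size
    y        : (p,) observation
    H        : (p, d) linear observation matrix
    R        : (p, p) observation noise covariance
    """
    m = ensemble.shape[0]
    anomalies = ensemble - ensemble.mean(axis=0)
    P = anomalies.T @ anomalies / (m - 1)     # sample forecast covariance (d, d)
    S = H @ P @ H.T + R                       # innovation covariance (p, p)
    K = np.linalg.solve(S, H @ P).T           # Kalman gain P H^T S^{-1}, shape (d, p)
    # Each member assimilates its own randomly perturbed copy of the observation.
    y_pert = y + rng.multivariate_normal(np.zeros(len(y)), R, size=m)
    return ensemble + (y_pert - ensemble @ H.T) @ K.T
```

The gain is the minimizer of a weighted least-squares problem, which is the sense in which the analysis "corrects samples based on least squares". It is built from the sample covariance, whose rank is at most m - 1. This is one reason the ensemble size matters.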

Question

How many samples (i.e., what ensemble size) are required for 'accurate state estimation' using the EnKF?

(Asymptotic) filter accuracy: the long-time squared error between the true state and the estimate of the EnKF is bounded by a constant multiple of the variance of the obs. noise.

(Figure: squared error vs. variance of obs. noise, log-log plot)

- Large ensemble → accurate
- Small ensemble → inaccurate

← Find the minimum ensemble size achieving accuracy!
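One common way to formalize (asymptotic) filter accuracy is the bound below. The notation is assumed here: u_n is the true state, ū_n the EnKF estimate (e.g., the ensemble mean), r² the obs. noise variance, and C a constant independent of r. The slide's exact statement is not recoverable from this text.

```latex
\limsup_{n \to \infty} \mathbb{E}\big[\, |u_n - \bar{u}_n|^2 \,\big] \le C\, r^2
\qquad \text{for all sufficiently small } r > 0.
```

On a log-log plot of the error against r², an accurate filter therefore shows a line of slope 1.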

Recent Studies

Literature

Mathematical analyses have revealed sufficient conditions for filter accuracy:

- (de Wiljes+2018, T.+2024): using a 'Lipschitz' property of the model → too many samples are required.
- (Sanz-Alonso+2025): using the 'stability' of the dynamics in the unobserved space → an additional factor is required.
- (González-Tokman+2013): using the dim. of 'unstable directions' in the tangent space, but with an accurate initial ensemble → unrealistic.

Focus on the dim. of 'unstable directions' in the tangent space.

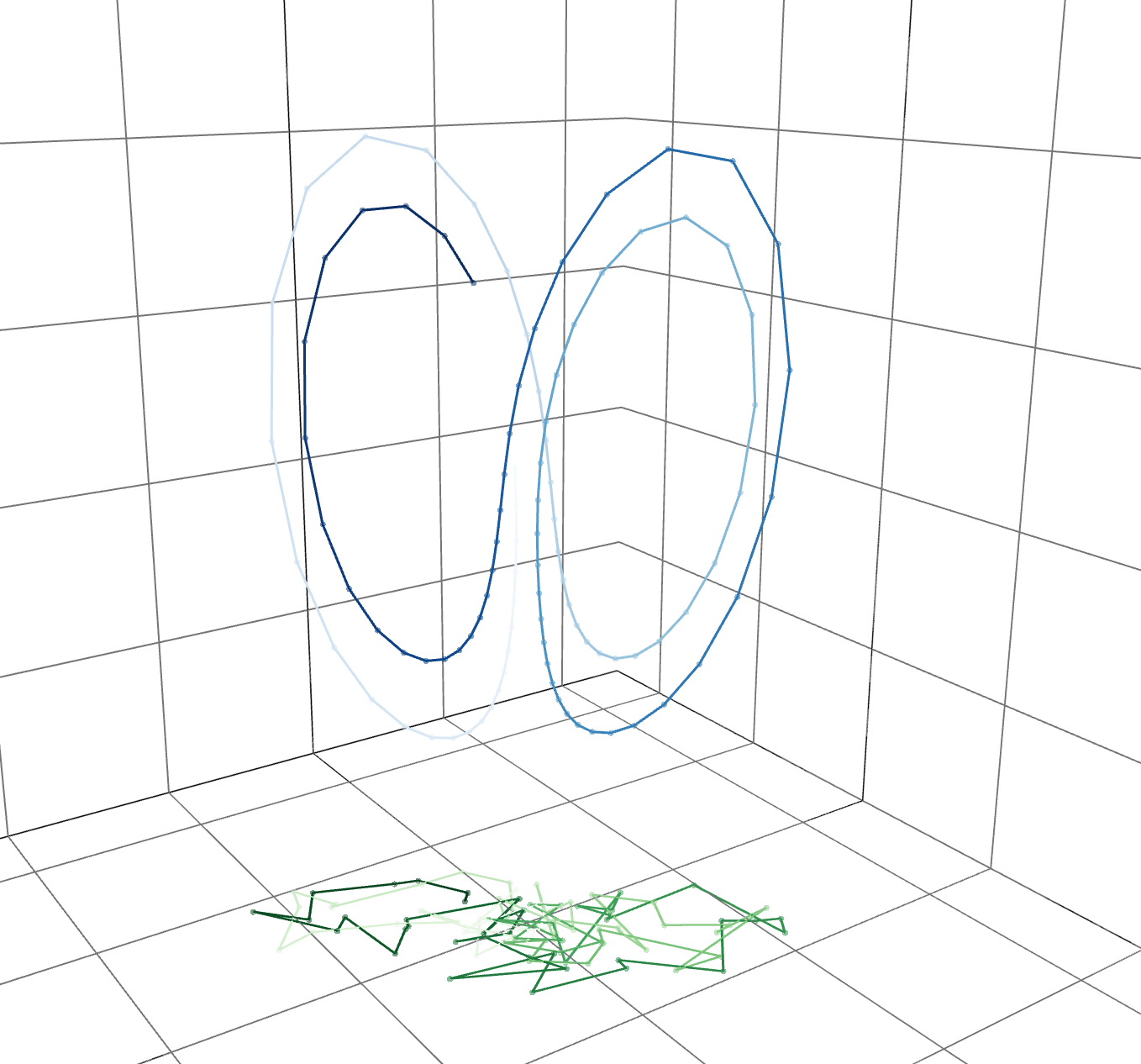

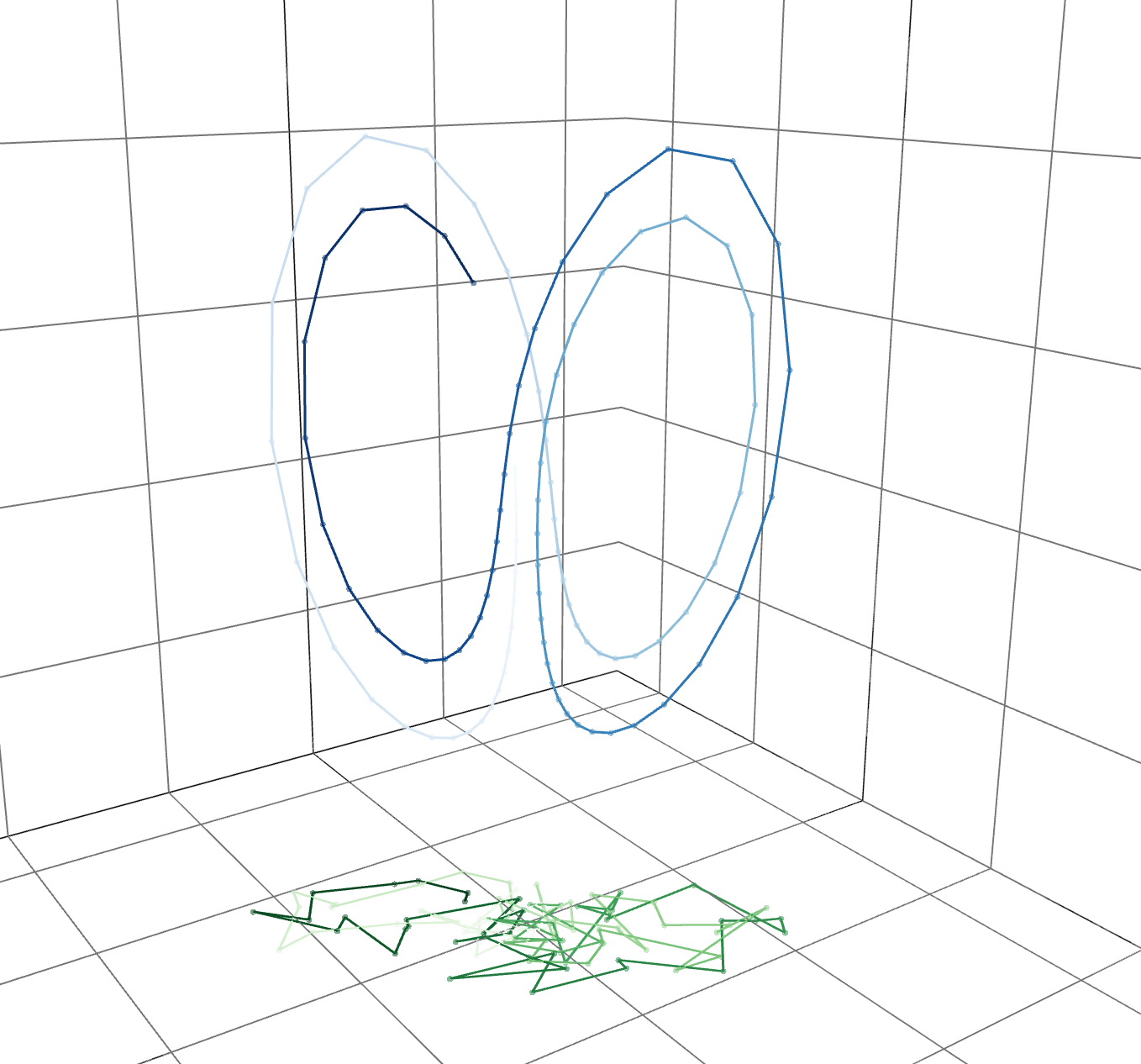

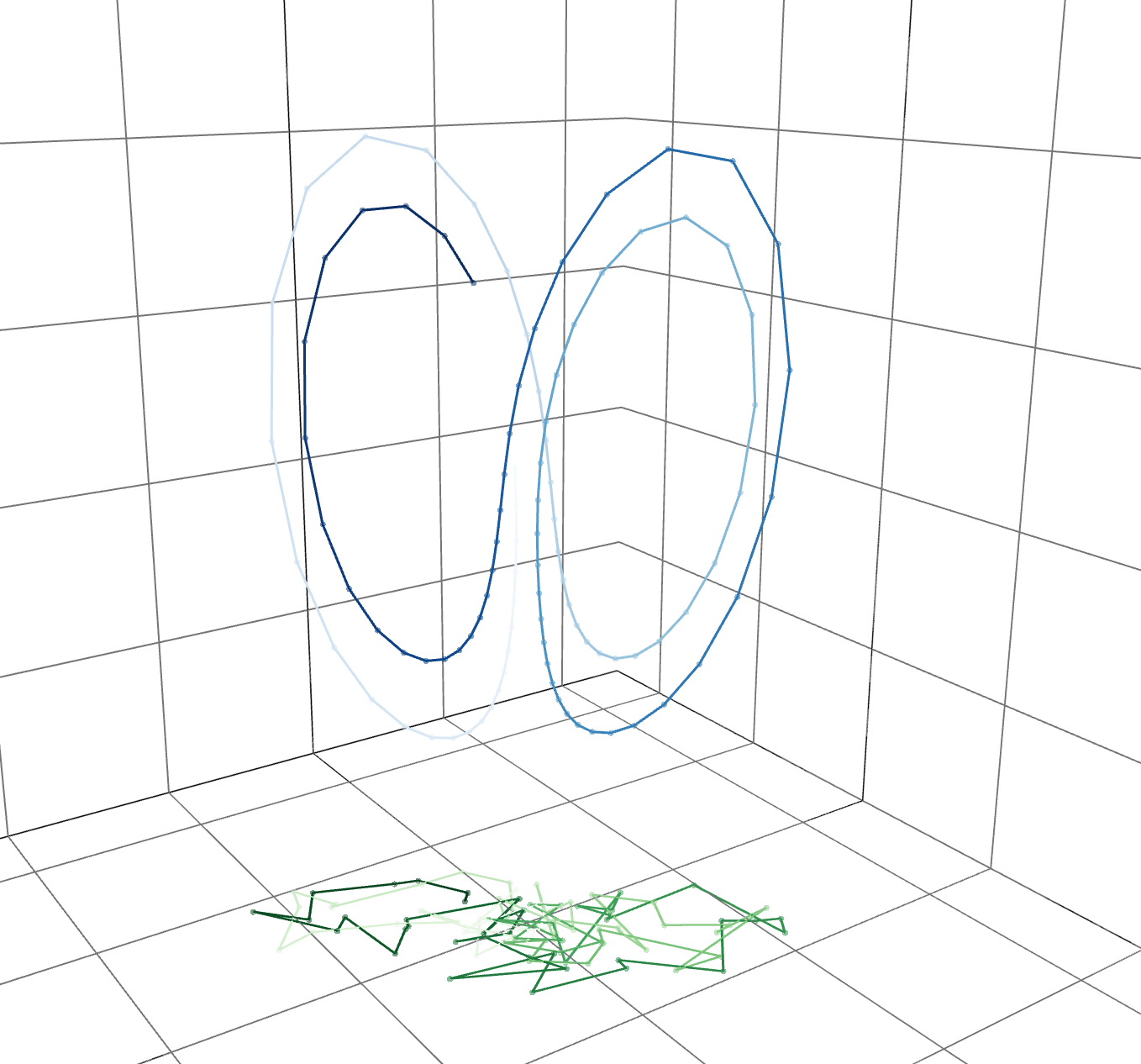

Exploiting Low Dimensionality

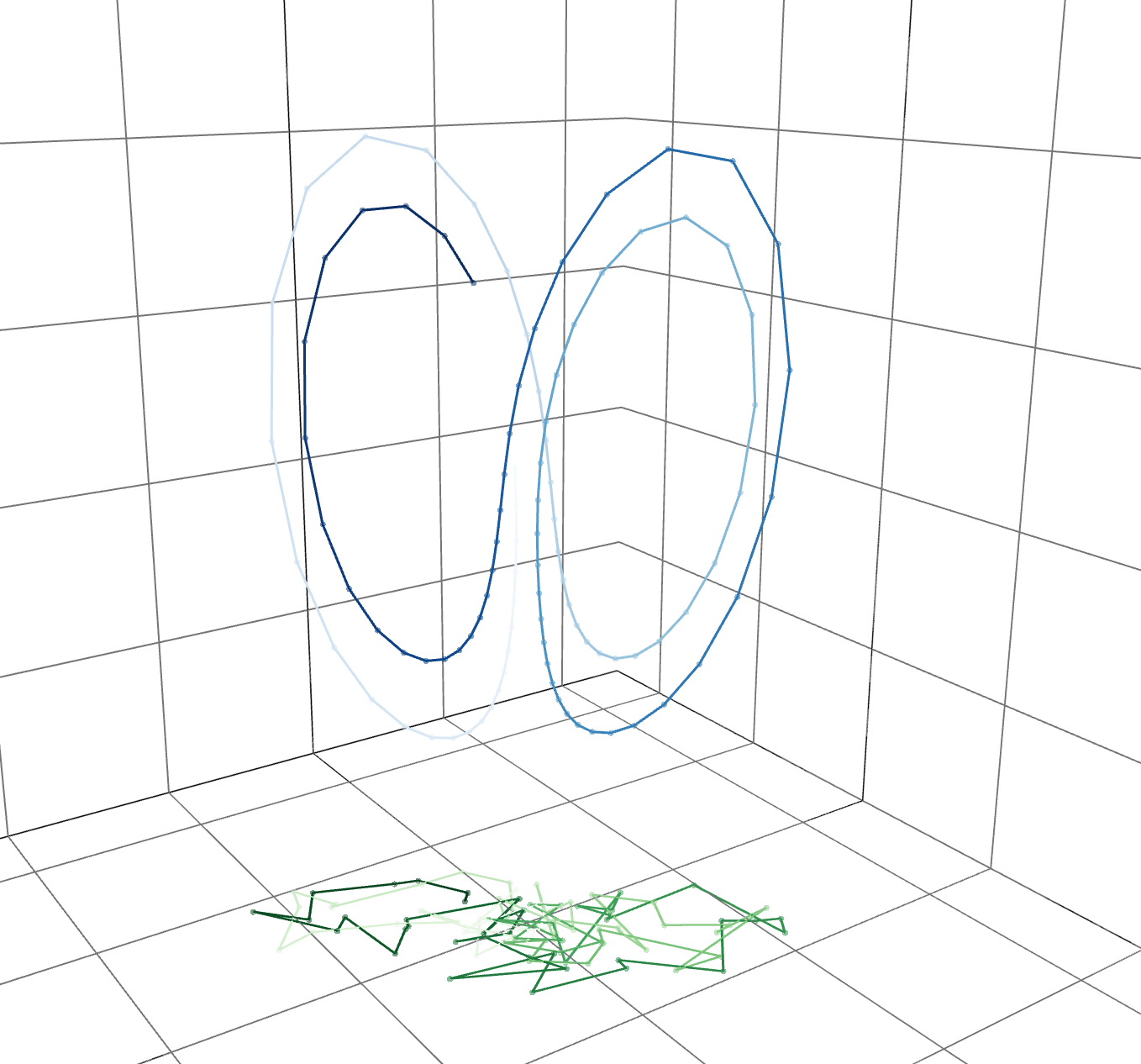

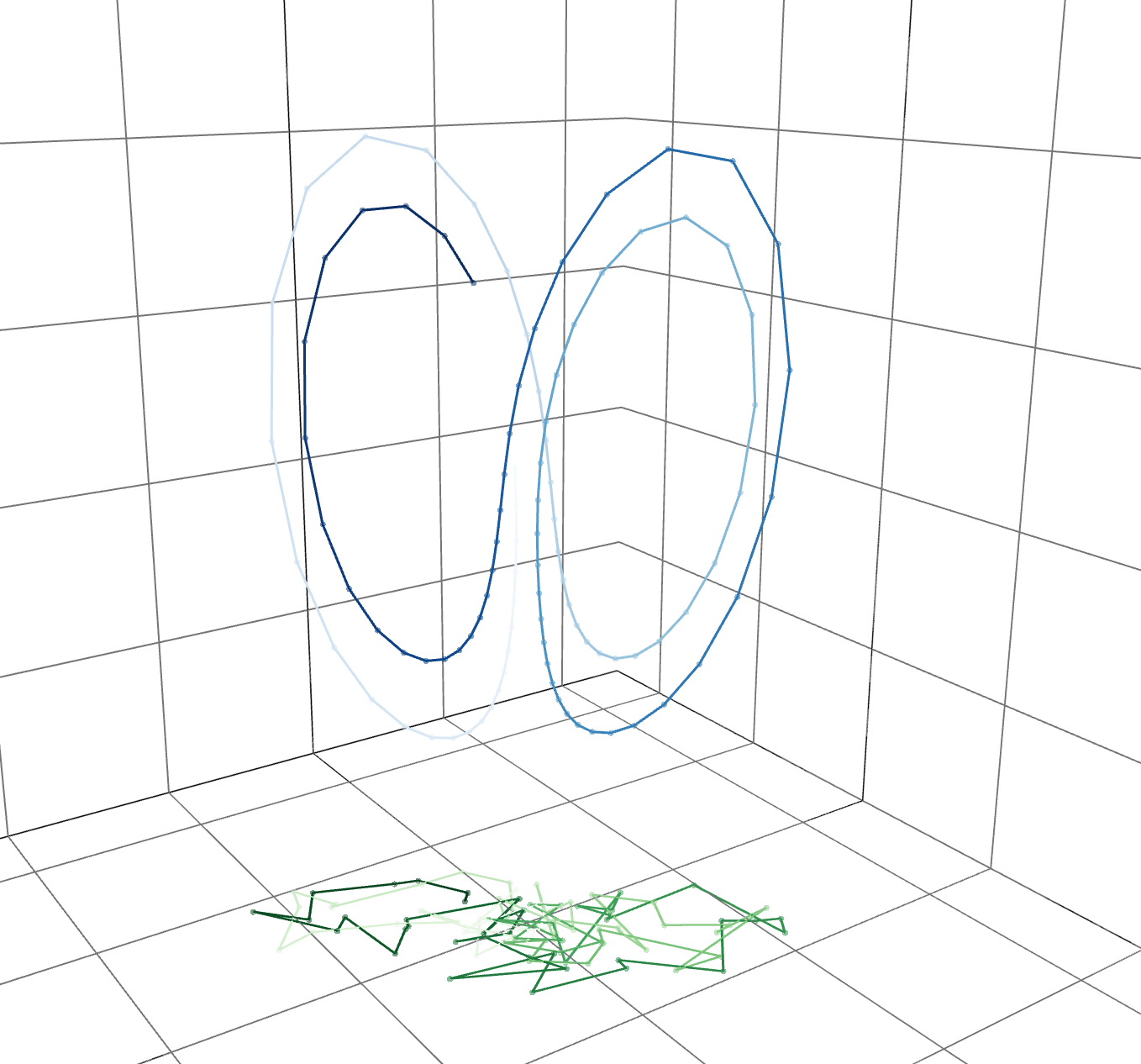

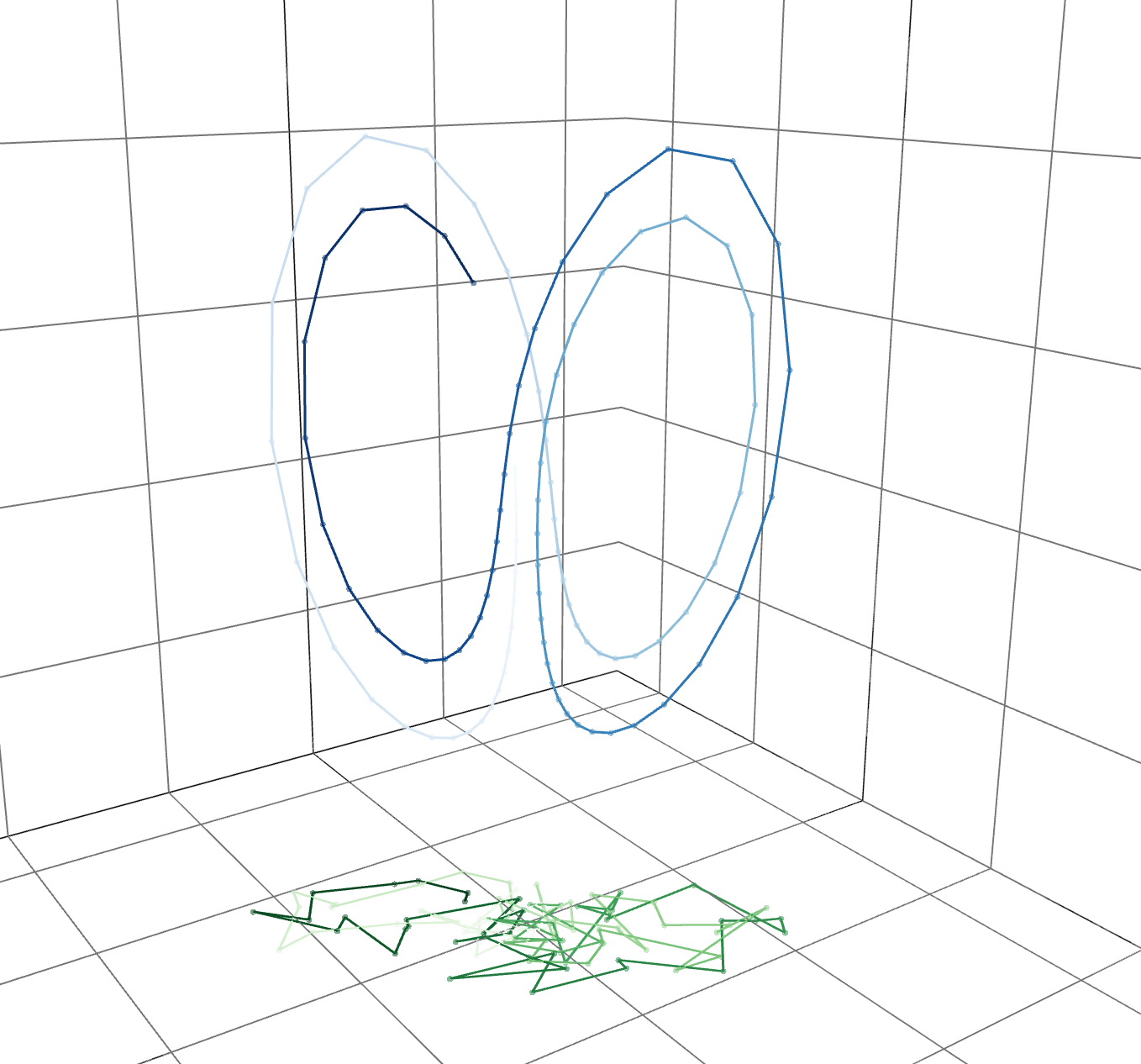

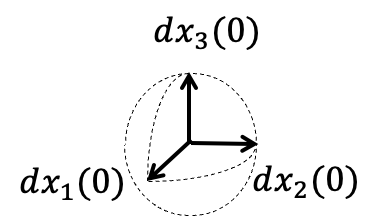

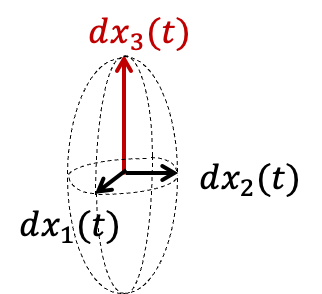

Idea: measure the 'degrees of freedom' of a chaotic system by its sensitivity to small perturbations.

Dim. of unstable directions in the tangent space: the Jacobian matrix of the model maps an infinitesimal perturbation forward in time. The perturbation is expanded along some directions and contracted along others.

ex) one unstable (expanded) direction in 3D → high uncertainty of prediction along this direction.

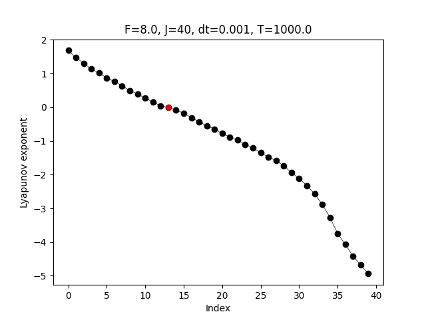

Definition (Lyapunov exponents): the i-th Lyapunov exponent is the long-time exponential growth rate of the i-th singular value of the Jacobian matrix of the model along the trajectory. The exponents carry information on how infinitesimal perturbations are expanded or contracted in the tangent space.

Remark: positive exponent → unstable direction. The dim. of unstable directions is the number of positive exponents.
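Written out, the definition reads as follows, assuming the limit exists (as it does for almost every initial state under the conditions of Oseledets' theorem). The notation is assumed here: DΨ_t(u_0) is the Jacobian of the solution map, σ_i(·) the i-th largest singular value, and N_+ the unstable dimension.

```latex
\lambda_i := \lim_{t \to \infty} \frac{1}{t} \log \sigma_i\big(D\Psi_t(u_0)\big), \quad i = 1, \dots, d,
\qquad
N_+ := \#\{\, i : \lambda_i > 0 \,\}.
```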

Ansatz (low-dimensional structure): most geophysical flows have far fewer unstable directions (positive Lyapunov exponents) than state dimensions, owing to their 'dissipative' property.
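Checking such an ansatz numerically requires estimating the Lyapunov exponents. A common estimator is the QR (Benettin-type) method: propagate an orthonormal set of tangent vectors with the Jacobian, re-orthonormalize at each step, and average the logarithms of the stretching factors. This minimal sketch is written for a discrete-time map. For an ODE such as Lorenz 96, one would apply it to the time-h map and divide by h. The function name and the Hénon-map usage below are illustrative assumptions, not the talk's code.

```python
import numpy as np

def lyapunov_exponents(step, jacobian, u0, n_iter, n_transient=1000):
    """Estimate all Lyapunov exponents of a discrete-time map by the QR method.

    step     : map u_{k+1} = step(u_k)
    jacobian : Jacobian matrix of `step` at u
    Returns the exponents (per iteration) in descending order.
    """
    u = np.asarray(u0, dtype=float)
    for _ in range(n_transient):              # move onto the attractor first
        u = step(u)
    Q = np.eye(u.shape[0])
    log_growth = np.zeros(u.shape[0])
    for _ in range(n_iter):
        # Propagate the tangent vectors by the Jacobian, then re-orthonormalize.
        Q, R = np.linalg.qr(jacobian(u) @ Q)
        log_growth += np.log(np.abs(np.diag(R)))
        u = step(u)
    return np.sort(log_growth / n_iter)[::-1]
```

For the Hénon map with a = 1.4 and b = 0.3, the estimate should have one positive exponent (roughly 0.42) and one negative exponent. Their sum equals log 0.3, the logarithm of the constant |det J|. Here `int(np.sum(lams > 0))` gives the unstable dimension 1.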

ex) Lyapunov exponents of the 40-dim. Lorenz 96 model (chaotic toy model), split into unstable and stable exponents (T.+2025).

Other dissipative systems:

- Navier-Stokes equations
- Primitive equations (core of atmospheric models)

Conjecture: the minimum ensemble size for filter accuracy with the EnKF (※ with any initial ensemble) is determined by the dim. of the unstable directions.

- Tracking only the unstable directions: a critical few ensemble members.
- Ansatz + conjecture → efficient & accurate weather prediction.

(Figure: squared error of the EnKF, log-log plot)

Numerical Result

Supporting the conjecture

Numerical Result

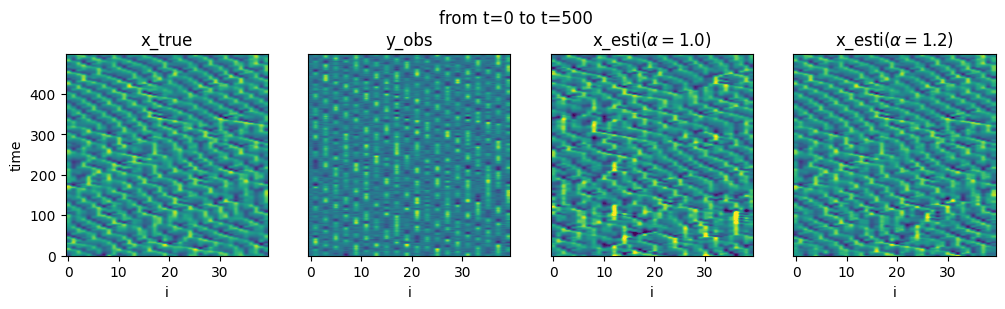

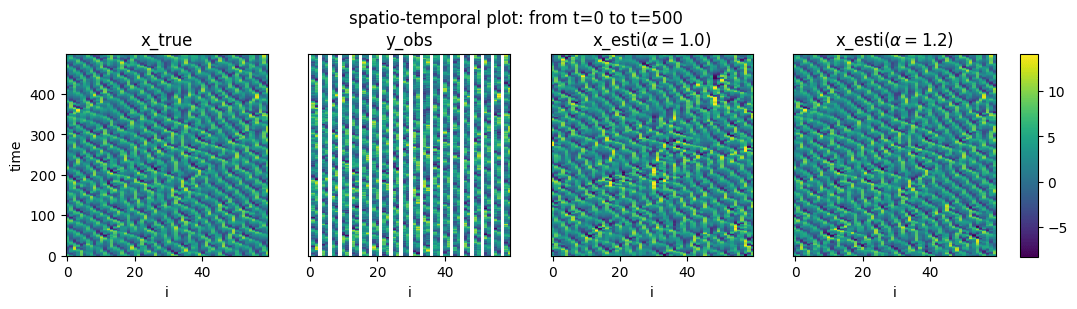

To support the conjecture, we perform numerical experiments estimating synthetic data generated by the Lorenz 96 model.

Lorenz 96 model (Lorenz1996, Lorenz+1998)

The model mimics the chaotic variation of physical quantities along a circle of constant latitude. Its right-hand side consists of a non-linear conserving term, a linear dissipating term, and a forcing term.
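With cyclic indices, the Lorenz 96 model reads dx_j/dt = (x_{j+1} - x_{j-2}) x_{j-1} - x_j + F. The three terms are the non-linear conserving, linear dissipating, and forcing terms. Below is a minimal NumPy sketch with a classical fourth-order Runge-Kutta step. The values F = 8, d = 40 and dt = 0.01 are common choices assumed here, not the talk's setup values, and the function names are hypothetical.

```python
import numpy as np

def lorenz96(x, forcing=8.0):
    """Lorenz 96 tendency with cyclic indices."""
    advection = (np.roll(x, -1) - np.roll(x, 2)) * np.roll(x, 1)  # (x_{j+1} - x_{j-2}) x_{j-1}
    return advection - x + forcing                                 # + dissipation + forcing

def rk4_step(f, x, dt):
    """One classical 4th-order Runge-Kutta step for dx/dt = f(x)."""
    k1 = f(x)
    k2 = f(x + 0.5 * dt * k1)
    k3 = f(x + 0.5 * dt * k2)
    k4 = f(x + dt * k3)
    return x + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)

def integrate(x0, dt, n_steps, forcing=8.0):
    """Return the trajectory, shape (n_steps + 1, d), starting from x0."""
    traj = np.empty((n_steps + 1, x0.size))
    traj[0] = x0
    for k in range(n_steps):
        traj[k + 1] = rk4_step(lambda x: lorenz96(x, forcing), traj[k], dt)
    return traj
```

The "conserving" label can be checked directly: the advection term satisfies Σ_j x_j (x_{j+1} - x_{j-2}) x_{j-1} = 0, so it does not change the energy Σ_j x_j². To start a chaotic run, perturb the unstable equilibrium x_j = F slightly, e.g., `x0 = 8.0 * np.ones(40); x0[0] += 0.01`.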

(Figure: spatio-temporal plot of the Lorenz 96 model)

Numerical Result

To support the conjecture, we perform numerical experiments estimating synthetic data generated by the Lorenz 96 model.

Setup (T.+2025)

obs.:

model:Lorenz96 ( )

noise:

EnKF:

(others are chosen appropriately)

numerical integration: RungeKutta

obs. interval:

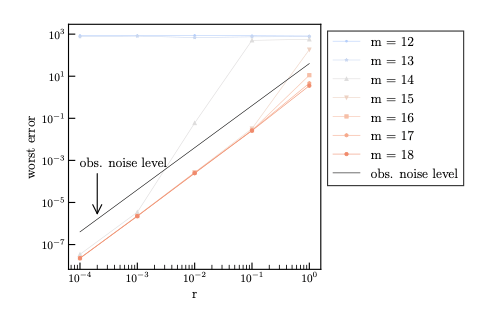

For each , we compute the dependency of the worst error

on .
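The dependency can be read quantitatively in two steps: compute the worst squared error over time for each noise level, then fit the slope on the log-log plot. A slope near 1 is consistent with an error proportional to the noise variance (filter accuracy). A slope near 0 indicates an error that does not shrink. This is a minimal sketch. The definition of "worst error" used here, the maximum over time of the squared error after a burn-in, is an assumption; the talk's exact definition may differ, e.g., by averaging over trials. The function names are hypothetical.

```python
import numpy as np

def worst_error(truth, estimates, burn_in=0):
    """Maximum over time (after `burn_in` steps) of the squared estimation error.

    truth, estimates : (n_times, d) arrays of true states and filter estimates
    """
    sq_err = np.sum((truth - estimates) ** 2, axis=1)
    return sq_err[burn_in:].max()

def loglog_slope(noise_variances, errors):
    """Least-squares slope of log(error) against log(noise variance)."""
    slope, _intercept = np.polyfit(np.log(noise_variances), np.log(errors), 1)
    return slope
```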

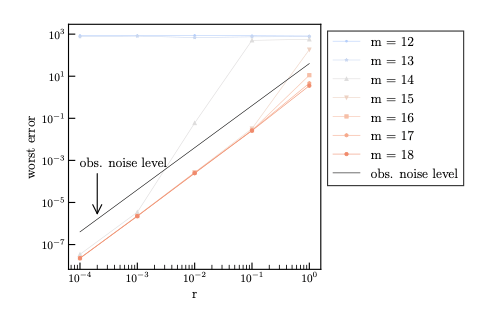

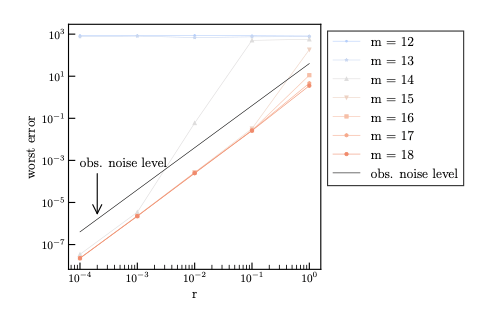

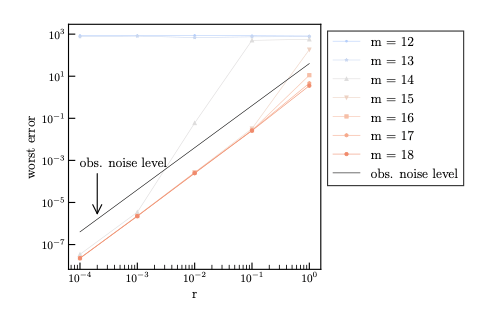

(Figure: log-log plot of the worst error against the variance of the obs. noise; curves in blue, red, and gray, labeled 'accurate' (filter accuracy) and 'inaccurate')

→ This supports the conjecture.

Summary

Problem & Question

- The sequential state estimation of high-dimensional chaotic systems using the EnKF.
- → How many samples are required for filter accuracy?

Conjecture & Result

- The minimum ensemble size is determined by the unstable dimension of the dynamics.
- → Numerical evidence
- → The EnKF can exploit the low-dimensional structure.

Future

- Math: proving the conjecture for dissipative systems.
- Application: spreading data assimilation to a wider range of applications.

Thank you for your attention

Visit my website!

(T.+2024)

K. T. and T. Sakajo, SIAM/ASA Journal on Uncertainty Quantification, 12(4), 1315–1335.

(T.+2025)

K. T. and T. Miyoshi, EGUsphere preprint, https://egusphere.copernicus.org/preprints/2025/egusphere-2025-5144/.

References

- (T.+2024) K. T. & T. Sakajo, SIAM/ASA Journal on Uncertainty Quantification, 12(4), 1315–1335.

- (T. 2025) Kota Takeda, Error Analysis of the Ensemble Square Root Filter for Dissipative Dynamical Systems, PhD Thesis, Kyoto University, 2025.

- (Kelly+2014) D. T. B. Kelly, K. J. H. Law, and A. M. Stuart (2014), Well-posedness and accuracy of the ensemble Kalman filter in discrete and continuous time, Nonlinearity, 27, pp. 2579–260.

- (Al-Ghattas+2024) O. Al-Ghattas and D. Sanz-Alonso (2024), Non-asymptotic analysis of ensemble Kalman updates: Effective dimension and localization, Information and Inference: A Journal of the IMA, 13.

- (Tong+2016a) X. T. Tong, A. J. Majda, and D. Kelly (2016), Nonlinear stability and ergodicity of ensemble based Kalman filters, Nonlinearity, 29, pp. 657–691.

- (Tong+2016b) X. T. Tong, A. J. Majda, and D. Kelly (2016), Nonlinear stability of the ensemble Kalman filter with adaptive covariance inflation, Comm. Math. Sci., 14, pp. 1283–1313.

- (Kwiatkowski+2015) E. Kwiatkowski and J. Mandel (2015), Convergence of the square root ensemble Kalman filter in the large ensemble limit, SIAM/ASA J. Uncertain. Quantif., 3, pp. 1–17.

- (Mandel+2011) J. Mandel, L. Cobb, and J. D. Beezley (2011), On the convergence of the ensemble Kalman filter, Appl. Math., 56, pp. 533–541.

References

- (de Wiljes+2018) J. de Wiljes, S. Reich, and W. Stannat (2018), Long-Time Stability and Accuracy of the Ensemble Kalman-Bucy Filter for Fully Observed Processes and Small Measurement Noise, SIAM J. Appl. Dyn. Syst., 17, pp. 1152–1181.

- (Evensen2009) G. Evensen (2009), Data Assimilation: The Ensemble Kalman Filter, Springer, Berlin, Heidelberg.

- (Burgers+1998) G. Burgers, P. J. van Leeuwen, and G. Evensen (1998), Analysis Scheme in the Ensemble Kalman Filter, Mon. Weather Rev., 126, pp. 1719–1724.

- (Bishop+2001) C. H. Bishop, B. J. Etherton, and S. J. Majumdar (2001), Adaptive Sampling with the Ensemble Transform Kalman Filter. Part I: Theoretical Aspects, Mon. Weather Rev., 129, pp. 420–436.

- (Anderson 2001) J. L. Anderson (2001), An Ensemble Adjustment Kalman Filter for Data Assimilation, Mon. Weather Rev., 129, pp. 2884–2903.

- (Reich+2015) S. Reich and C. Cotter (2015), Probabilistic Forecasting and Bayesian Data Assimilation, Cambridge University Press, Cambridge.

- (Law+2015) K. J. H. Law, A. M. Stuart, and K. C. Zygalakis (2015), Data Assimilation: A Mathematical Introduction, Springer.

References

- (Azouani+2014) A. Azouani, E. Olson, and E. S. Titi (2014), Continuous Data Assimilation Using General Interpolant Observables, J. Nonlinear Sci., 24, 277–304.

- (Sanz-Alonso+2025) D. Sanz-Alonso and N. Waniorek (2025), Long-Time Accuracy of Ensemble Kalman Filters for Chaotic Dynamical Systems and Machine-Learned Dynamical Systems, SIAM J. Appl. Dyn. Syst., pp. 2246–2286.

- (Biswas+2024) A. Biswas and M. Branicki (2024), A unified framework for the analysis of accuracy and stability of a class of approximate Gaussian filters for the Navier-Stokes Equations, arXiv preprint, https://arxiv.org/abs/2402.14078.

- (T.2025) K. T. (2025), Error analysis of the projected PO method with additive inflation for the partially observed Lorenz 96 model, arXiv preprint, https://doi.org/10.48550/arXiv.2507.23199.

- (González-Tokman+2013) C. González-Tokman and B. R. Hunt (2013), Ensemble data assimilation for hyperbolic systems, Physica D: Nonlinear Phenomena, 243(1), pp. 128–142.

- (T.+2025) K. T. and T. Miyoshi, Quantifying the minimum ensemble size for asymptotic accuracy of the ensemble Kalman filter using the degrees of instability, EGUsphere preprint, https://egusphere.copernicus.org/preprints/2025/egusphere-2025-5144/.

By kotatakeda. 20 min (including Q&A).