Error Analysis of the Ensemble Square Root Filter for Dissipative Dynamical Systems

2025/01/23 @Kyoto University

Supervisors

Professor Takashi Sakajo, Kyoto University

Professor Takemasa Miyoshi, Kyoto University & RIKEN

Professor Sebastian Reich, University of Potsdam

Kota Takeda

Department of Mathematics, Kyoto University

Contents

| Topic | Related sections in Thesis |
|---|---|
| Introduction | Section 1, 2, 5 |
| Setup (Bayesian filtering) | Section 3 |
| Algorithms | Section 4 |
| Results (Error analysis): Existing results for EnKF | Section 6 |
| Results (Error analysis): My results | Section 7 |
| Summary & Discussion | Section 8 |

Data Assimilation (DA)

The seamless integration of data and models.

- Model: dynamical systems and their numerical simulations (ODE, PDE, SDE; numerical analysis, HPC; fluid mechanics, physics). Uncertainty: chaos, error.

- Data: obtained from experiments or measurements, e.g., radiosonde/radar/satellite observations (statistics, data analysis). Uncertainty: noise, limited.

Model × Data: each has uncertainty (incomplete)!

Numerical Weather Prediction (NWP)

Numerical Weather Prediction (NWP) uses atmospheric models, which are chaotic: a small initial error can grow exponentially fast.

→ Unpredictable in the long term.

[Figure: typhoon route forecast circles; true/nature state vs. simulated state over time]

NWP and DA

Numerical Weather Prediction (NWP) uses atmospheric models → chaotic → unpredictable in the long term.

Data Assimilation (DA): correct the prediction simulated by the atmospheric model using observations (noisy, partial), i.e., indirect information from the true state, which is not accessible directly.

[Figure: true state, model prediction, and observations over time]

NWP & DA

in DA for Numerical Weather Prediction:

chaotic

noisy

partial

observation

true state

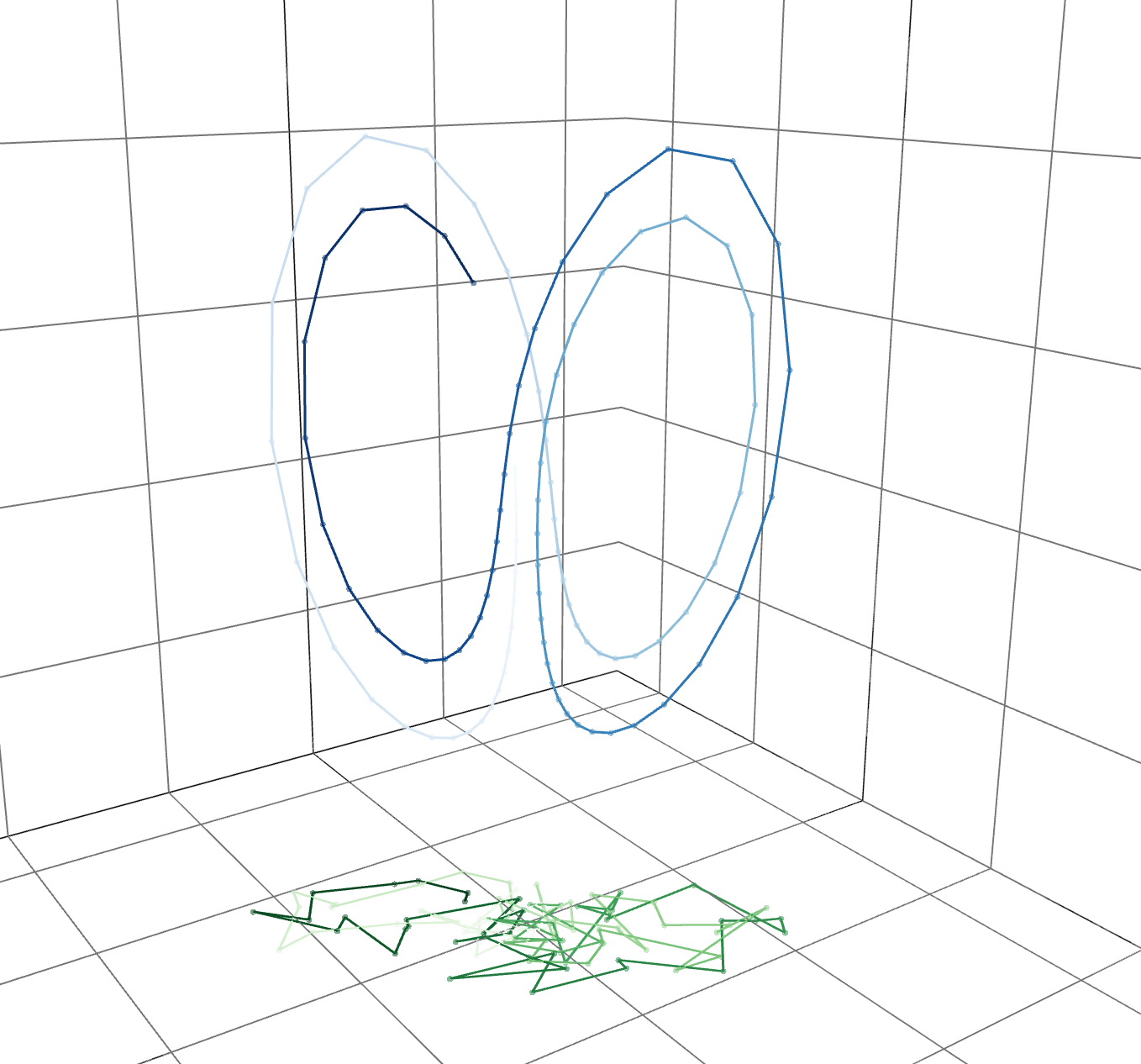

Example: the trajectory of the Lorenz 63 equation and observations.

Estimating the time series of states of

chaotic dynamical system

using noisy and partial observations.

Three difficulties

Considered

in this talk

Not considered

in this talk

Several formulations & algorithms of DA:

- Control
  - Nudging/Continuous Data Assimilation
- Bayesian Filtering
  - Particle Filter (PF)
  - Ensemble Kalman filter (EnKF)
- Variational
  - Four-dimensional Variational method (4DVar)

Focus on the main results (T.+2024) & Thesis

Related to partial observations (cf. Section 5).

Not covered (cf. Section 1).

Mathematics on DA: functional analysis, probability theory, dynamical systems, partial differential equations, (numerical analysis) (cf. Section 2, 5).

Control formulation

Problem: Nudging/Continuous Data Assimilation (Olson+2003), (Azouani+2011)

Keywords/Mathematics: AOT-algorithm; squeezing property, determining modes/nodes; PDE (Partial Differential Equations); control theory/synchronization.

Incomplete atmospheric data (Charney+1969): if we have noiseless, continuous-time observations of the true state through an orthogonal projection, copy the observations into the simulated state. The observed components are copied, and the unobserved components then converge to the true ones (under suitable conditions, e.g., enough observed modes).
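The "copy obs. to the simulated state" idea can be sketched on the Lorenz 63 equation, assuming (for illustration only) that the observation is the orthogonal projection onto the x-component, noiseless and continuous in time. The simulated y and z are driven by the copied true x; for this particular system the unobserved error decays at least like exp(-t), since the error energy satisfies d/dt (e_y² + e_z²)/2 = -e_y² - β e_z². The function names and initial values are hypothetical.

```python
import numpy as np

SIGMA, RHO, BETA = 10.0, 28.0, 8.0 / 3.0   # standard chaotic Lorenz 63 parameters


def coupled_rhs(state):
    """Truth (x, y, z) plus a simulated (y_s, z_s) into which the observed x is copied."""
    x, y, z, ys, zs = state
    return np.array([
        SIGMA * (y - x),        # true x (observed)
        x * (RHO - z) - y,      # true y (unobserved)
        x * y - BETA * z,       # true z (unobserved)
        x * (RHO - zs) - ys,    # simulated y, driven by the copied observation x
        x * ys - BETA * zs,     # simulated z, driven by the copied observation x
    ])


def rk4_step(f, state, dt):
    """One classical Runge-Kutta step."""
    k1 = f(state)
    k2 = f(state + 0.5 * dt * k1)
    k3 = f(state + 0.5 * dt * k2)
    k4 = f(state + dt * k3)
    return state + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)


def direct_insertion_errors(t_end=20.0, dt=0.01):
    """Error in the unobserved components (y, z) after each time step."""
    state = np.array([1.0, 1.0, 1.0, 15.0, -10.0])   # simulated (y, z) start far from the truth
    errors = []
    for _ in range(int(round(t_end / dt))):
        state = rk4_step(coupled_rhs, state, dt)
        errors.append(np.hypot(state[1] - state[3], state[2] - state[4]))
    return np.array(errors)


errors = direct_insertion_errors()
print(f"error after one step: {errors[0]:.2e}, at t = 20: {errors[-1]:.2e}")
```

The AOT-algorithm replaces this hard copy by a nudging term that relaxes the simulated state toward the observations; the direct copy shown here is only the simplest variant.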

Variational formulation

Problem: Four-dimensional Variational method (4DVar) (Law+2015), (Korn+2009)

To estimate the initial state from observation data, minimize a cost function.

Keywords/Mathematics: optimization, iteration methods; adjoint method to compute the gradient (cf. back propagation in supervised learning).
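As a sketch of what "minimize a cost function" typically means, here is the standard strong-constraint 4DVar cost in assumed notation (not taken from the slides): $\Psi$ is the model map between observation times ($\Psi^n$ its $n$-fold composition), $H$ the observation operator, $R$ the observation noise covariance, and $m_0$, $C_0$ a background (prior) mean and covariance for the initial state. The gradient of $J$ with respect to $v_0$ is what the adjoint method computes.

```latex
J(v_0) = \frac{1}{2}\,\bigl\|C_0^{-1/2}(v_0 - m_0)\bigr\|^2
       + \frac{1}{2}\sum_{n=1}^{N}\bigl\|R^{-1/2}\bigl(y_n - H\,\Psi^{n}(v_0)\bigr)\bigr\|^2,
\qquad
v_0^{\star} = \operatorname*{arg\,min}_{v_0} J(v_0)
```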


State estimation with chaos, noise

Setup: Model

Model dynamics on a separable Hilbert space (the state space). We assume that the model generates a semigroup on this space.

The known model generates the unknown true trajectory from an unknown initial state.

See Section 3 for other formulations.

Setup: Observation

Discrete-time model (at obs. times): (6.2)

Noisy observation: (6.4)

We observe the true state at discrete time steps through a bounded linear operator, obtaining noisy observations in another Hilbert space.
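In symbols, one common way to write this setup is shown below. The notation is assumed for illustration and may differ from the thesis's (6.2) and (6.4): $\Psi$ is the time-$h$ map generated by the semigroup, $H$ the bounded linear observation operator, and $\xi_n$ the observation noise, taken here to be i.i.d. Gaussian.

```latex
u_n = \Psi(u_{n-1}), \qquad
y_n = H u_n + \xi_n, \quad \xi_n \sim \mathcal{N}(0, R)\ \text{i.i.d.}, \qquad n = 1, 2, \dots
```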

Sequential state estimation

Problem: given the observations up to time n, estimate the true state at time n, for n = 1, 2, ...

Known: the model and the obs. noise distribution.

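Bayesian Data Assimilation

Problem: given the observations, estimate the true state.

Approach: Bayesian Data Assimilation → construct a random variable whose law is the conditional distribution of the true state given the observations. In other words, compute (approximate) this conditional distribution (the filtering distribution).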

Sequential Data Assimilation (Definition 3.7, 10)

Decomposition into an iterative algorithm: for each n, two steps with an auxiliary variable (the prediction).

(I) Prediction: by the model.

(II) Analysis: by Bayes' rule, with the likelihood function of the new observation.

Repeat (I) & (II).

Proposition: n iterations of (I) & (II) yield the filtering distribution at time n.
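Steps (I) and (II) can be sketched as the recursion below. The notation is assumed, not taken from the slides: $\mu_n$ is the filtering distribution of $u_n$ given $y_1, \dots, y_n$, $\hat\mu_n$ is the prediction, $\Psi_{\#}$ is the push-forward by the model map, and the observation noise is Gaussian with covariance $R$ on a finite-dimensional observation space. The exponential factor is the likelihood function, and the normalization is Bayes' rule.

```latex
\text{(I)}\quad \hat\mu_n = \Psi_{\#}\,\mu_{n-1},
\qquad
\text{(II)}\quad \mu_n(du) =
\frac{\exp\bigl(-\tfrac12\|R^{-1/2}(y_n - Hu)\|^2\bigr)\,\hat\mu_n(du)}
     {\int \exp\bigl(-\tfrac12\|R^{-1/2}(y_n - Hv)\|^2\bigr)\,\hat\mu_n(dv)}
```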


State estimation with chaos, noise: compute the filtering distribution.

Filtering algorithms

Many filtering algorithms exist: ensemble-based (PF, EnKF) and "Gaussian-based" (KF, EnKF).

We only focus on the Gaussian-based algorithms.

KF & EnKF

Kalman filter (KF) [Kalman 1960]. Assume: linear F, Gaussian noises.

- All distributions are Gaussian.
- Closed update of the mean and covariance.

Ensemble Kalman filter (EnKF): non-linear extension by the Monte Carlo method.

- Update of an ensemble.

(Reich+2015) S. Reich and C. Cotter (2015), Probabilistic Forecasting and Bayesian Data Assimilation, Cambridge University Press, Cambridge.

(Law+2015) K. J. H. Law, A. M. Stuart, and K. C. Zygalakis (2015), Data Assimilation: A Mathematical Introduction, Springer.

KF & EnKF

Kalman filter (KF), assuming linear F and Gaussian noises: (I) predict with the linear F; (II) analysis by Bayes' rule.

Ensemble Kalman filter (EnKF): non-linear extension by the Monte Carlo method; the Gaussian distributions are approximated by an ensemble. Two variants → next slide.
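One KF cycle can be sketched in NumPy as follows. This is a generic textbook form, assuming a linear model matrix `F`, observation matrix `H`, observation noise covariance `R`, and an optional model-noise covariance `Q`; the function name `kf_step` is hypothetical.

```python
import numpy as np


def kf_step(m, C, y, F, H, R, Q=None):
    """One Kalman filter cycle: (I) prediction by a linear model, (II) analysis by Bayes' rule."""
    # (I) Prediction: push the Gaussian N(m, C) through the linear model F.
    m_f = F @ m
    C_f = F @ C @ F.T
    if Q is not None:                       # optional model-noise covariance
        C_f = C_f + Q
    # (II) Analysis: Kalman gain K = C_f H^T (H C_f H^T + R)^{-1}.
    S = H @ C_f @ H.T + R
    K = np.linalg.solve(S, H @ C_f).T       # S and C_f are symmetric, so this equals C_f H^T S^{-1}
    m_a = m_f + K @ (y - H @ m_f)           # closed update of the mean
    C_a = (np.eye(len(m)) - K @ H) @ C_f    # closed update of the covariance
    return m_a, C_a


# Scalar example: prior N(0, 1), identity model and observation, unit noise, y = 2.
m_a, C_a = kf_step(np.zeros(1), np.eye(1), np.array([2.0]), np.eye(1), np.eye(1), np.eye(1))
print(m_a, C_a)
```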

Two EnKF variants (Definition 4.1)

Two major variants of EnKF, both repeating (I) Prediction & (II) Analysis:

- Perturbed Observation (PO) (Burgers+1998): stochastic → sampling error
- Ensemble Transform Kalman Filter (ETKF) (Bishop+2001): deterministic

PO (stochastic EnKF): Perturbed Observation (Definition 4.10)

(Burgers+1998) G. Burgers, P. J. van Leeuwen, and G. Evensen (1998), Analysis Scheme in the Ensemble Kalman Filter, Mon. Weather Rev., 126, 1719–1724.

Stochastic!

Notation: the transpose (adjoint) of a matrix (linear operator).
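A minimal NumPy sketch of one PO analysis step follows, assuming the ensemble is stored column-wise and each member assimilates its own perturbed observation y + η_i with η_i ~ N(0, R) drawn independently. The name `po_analysis` is hypothetical. The usage compares the result with the KF formulas applied to the sample mean and covariance, which PO reproduces only up to sampling error.

```python
import numpy as np


def po_analysis(ens_f, y, H, R, rng):
    """Perturbed-observation (stochastic) EnKF analysis; ens_f has shape (d, m)."""
    m = ens_f.shape[1]
    X = (ens_f - ens_f.mean(axis=1, keepdims=True)) / np.sqrt(m - 1)
    C = X @ X.T                                   # sample forecast covariance
    S = H @ C @ H.T + R
    K = np.linalg.solve(S, H @ C).T               # K = C H^T S^{-1}
    # Independent observation perturbations: the source of the sampling error.
    eta = rng.multivariate_normal(np.zeros(len(y)), R, size=m).T
    return ens_f + K @ (y[:, None] + eta - H @ ens_f)


rng = np.random.default_rng(0)
ens_f = rng.standard_normal((2, 20000))           # large ensemble to make sampling error small
H, R, y = np.eye(2), 0.5 * np.eye(2), np.array([1.0, -1.0])
ens_a = po_analysis(ens_f, y, H, R, rng)
print(ens_a.mean(axis=1), np.cov(ens_a))
```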

ETKF (deterministic EnKF): Ensemble Transform Kalman Filter (Definition 4.13)

(Bishop+2001) C. H. Bishop, B. J. Etherton, and S. J. Majumdar (2001), Adaptive Sampling with the Ensemble Transform Kalman Filter. Part I: Theoretical Aspects, Mon. Weather Rev., 129, 420–436.

Deterministic! The explicit representation exists!

Lemma (Theorem 4.1): ETKF is one of the deterministic EnKF algorithms known as ESRF (Ensemble Square Root Filters).

※ESRF (deterministic EnKF) includes ETKF and EAKF (Ensemble Adjustment Kalman Filter).

: bounded linear

(Anderson 2001) J. L. Anderson (2001), An Ensemble Adjustment Kalman Filter for Data Assimilation, Mon. Weather Rev., 129, 2884–2903.
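The ETKF analysis can be sketched in ensemble space using the symmetric square-root form, with multiplicative inflation applied to the forecast anomalies before the analysis. This is one standard formulation and an assumption here, not necessarily Definition 4.13 verbatim; the name `etkf_analysis` is hypothetical. Because no random numbers are used, the analysis mean and sample covariance coincide (up to rounding) with the KF formulas applied to the inflated forecast mean and covariance.

```python
import numpy as np


def etkf_analysis(ens_f, y, H, R, infl=1.0):
    """ETKF analysis step (symmetric square-root form) with multiplicative inflation.

    ens_f: forecast ensemble of shape (d, m), one member per column.
    infl:  multiplicative inflation factor applied to the forecast anomalies.
    """
    m = ens_f.shape[1]
    mean_f = ens_f.mean(axis=1)
    X = infl * (ens_f - mean_f[:, None]) / np.sqrt(m - 1)   # scaled (inflated) anomalies
    Y = H @ X                                               # anomalies in observation space
    Rinv = np.linalg.inv(R)
    A = np.eye(m) + Y.T @ Rinv @ Y                          # m x m, symmetric positive definite
    w, V = np.linalg.eigh(A)
    A_inv = (V / w) @ V.T                                   # A^{-1}
    T = (V / np.sqrt(w)) @ V.T                              # symmetric square root A^{-1/2}
    mean_a = mean_f + X @ (A_inv @ (Y.T @ (Rinv @ (y - H @ mean_f))))
    return mean_a[:, None] + np.sqrt(m - 1) * (X @ T)       # deterministic transform


rng = np.random.default_rng(0)
ens_f = rng.standard_normal((3, 10))     # d = 3, m = 10
H = np.eye(3)[:2]                        # observe the first two components
R = 0.3 * np.eye(2)
y = np.array([0.5, -0.2])
ens_a = etkf_analysis(ens_f, y, H, R, infl=1.2)
```

The transform T is symmetric and maps the vector of ones to itself, so the analysis anomalies keep zero mean; working with an m × m matrix instead of a d × d one is what makes the method cheap for small ensembles.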

Key perspective of EnKF (KF)

The analysis state is a weighted average of the prediction and the observation. The weight is determined by the estimated uncertainties:

- uncertainty in the observation (prescribed);
- uncertainty in the prediction (approximated by the ensemble).
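In the scalar case the weighted average is explicit. In this sketch `c` is the (estimated) prediction variance and `r2` the prescribed observation noise variance; the function name is hypothetical.

```python
def scalar_analysis(u_f, y, c, r2):
    """Scalar Kalman analysis: weighted average of the prediction u_f and the observation y."""
    w = c / (c + r2)                 # weight on the observation
    return (1.0 - w) * u_f + w * y


balanced = scalar_analysis(0.0, 1.0, 1.0, 1.0)        # equal uncertainties: midpoint
overconfident = scalar_analysis(0.0, 1.0, 0.01, 1.0)  # tiny prediction variance
print(balanced, overconfident)
```

The second call shows why underestimating the prediction uncertainty is harmful: with a small `c`, the observation weight `c / (c + r2)` is nearly zero and the analysis almost ignores the data.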

Inflation (numerical technique), Section 4.4

Issue: underestimation of the covariance through the DA cycle → overconfidence in the prediction → poor state estimation.

Idea: inflate the covariance before (II): (I) Prediction → inflation → (II) Analysis → ...

Two basic methods of inflation※:

- additive inflation: rank is improved
- multiplicative inflation: rank is not improved

※depending on the implementation
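The rank statement can be checked on a small example. This sketch applies both inflations at the covariance level (C ↦ α²C and C ↦ C + α²I), which is only one possible implementation (※); the function names are hypothetical.

```python
import numpy as np


def multiplicative_inflation(C, alpha):
    """C -> alpha^2 C: enlarges the spread but keeps the rank."""
    return alpha**2 * C


def additive_inflation(C, alpha):
    """C -> C + alpha^2 I: adds spread in every direction, giving full rank."""
    return C + alpha**2 * np.eye(C.shape[0])


rng = np.random.default_rng(1)
d, m = 5, 3                               # fewer members than state dimensions
C = np.cov(rng.standard_normal((d, m)))   # sample covariance, rank <= m - 1 = 2
rank_none = int(np.linalg.matrix_rank(C))
rank_mult = int(np.linalg.matrix_rank(multiplicative_inflation(C, 1.1)))
rank_add = int(np.linalg.matrix_rank(additive_inflation(C, 0.1)))
print(rank_none, rank_mult, rank_add)
```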

Two EnKF variants

| | PO | ETKF |
|---|---|---|
| Algorithm | Simple, stochastic | Complicated, deterministic |
| Samples | Require many | Require few (practical!) |
| Inflation※ | add., multi. | only multi. |

※depending on the implementation

Filtering algorithms

Many filtering algorithms: PF and EnKF (ensemble based); KF and EnKF ("Gaussian based"). EnKF variants:

- Stochastic: PO
- Deterministic: ETKF, EAKF


State estimation with chaos, noise: compute the filtering distribution.

EnKF: ensemble & Gaussian approximation.

Error analysis of EnKF

Assumptions on the model: reasonable. Assumption on the observation: strong.

We focus on finite-dimensional state spaces.

(T.+2024) K. T. & T. Sakajo (2024), SIAM/ASA Journal on Uncertainty Quantification, 12(4), 1315–1335.

Assumptions for analysis

- Assumption (obs-1): "full observation". Strong!
- Assumptions (model-1) and (model-2) or (model-2'): "one-sided Lipschitz", "Lipschitz", "bounded". Reasonable: they hold for dissipative dynamical systems (Section 3, 5).

Dissipative dynamical systems

Dissipative dynamical systems such as the Lorenz 63 and 96 equations are widely used in geophysics.

Example (Lorenz 96) (Lorenz1996) (Lorenz+1998): it mimics the chaotic variation of physical quantities at equal latitudes. Its right-hand side is the sum of a non-linear conserving term, a linear dissipating term, and a forcing term, and Assumptions (model-1, 2, 2') hold.

The Lorenz 63 equation can be written in the same form. The form can be generalized (bilinear operator + linear operator + forcing) to dissipative dynamical systems, including the incompressible 2D Navier-Stokes equations (2D-NSE) in the infinite-dimensional setting.

→ We can discuss ODE and PDE in a unified framework.
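The three-term structure of Lorenz 96, dx_i/dt = (x_{i+1} - x_{i-2}) x_{i-1} - x_i + F with cyclic indices, can be sketched and checked numerically. The non-linear term conserves energy (x · B(x) = 0), so the energy balance is x · f(x) = -|x|² + F Σ x_i, which is negative for large |x|; this is the dissipativity used in the assumptions. Function names and the forcing value F = 8 are illustrative choices.

```python
import numpy as np


def l96_nonlinear(x):
    """Non-linear conserving term (x_{i+1} - x_{i-2}) x_{i-1}, cyclic indices."""
    return (np.roll(x, -1) - np.roll(x, 2)) * np.roll(x, 1)


def l96_rhs(x, forcing=8.0):
    """Lorenz 96: non-linear conserving + linear dissipating (-x) + forcing."""
    return l96_nonlinear(x) - x + forcing


x = np.random.default_rng(0).standard_normal(40)
print(x @ l96_nonlinear(x))                       # zero up to rounding: energy is conserved
print(x @ l96_rhs(x), -(x @ x) + 8.0 * x.sum())   # energy balance of the full right-hand side
```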

Dissipative dynamical systems

The orbits of the Lorenz 63 equation.

Literature review (Section 6)

- How does EnKF approximate KF?
  - Consistency (Mandel+2011, Kwiatkowski+2015)
  - Sampling errors (Al-Ghattas+2024)
- How does EnKF approximate the true state?
  - Stability (Tong+2016a,b)
  - Error analysis (full observation)
    - PO + add. inflation (Kelly+2014) → next slide
    - ETKF + multi. inflation (T.+2024) → current
    - EAKF + multi. inflation (T.)
  - Error analysis (partial observation) → future (partially solved, T.)

※ Continuous-time formulation (EnKBF)

- EnKBF with full obs. (de Wiljes+2018)

Error analysis of PO (prev.)

(Kelly+2014) D. Kelly et al. (2014), Nonlinearity, 27(10), 2579–2603.

Theorem (Kelly+2014): with additive inflation (strong effect! improves rank), the error is bounded by the initial error with exponential decay plus a contribution of the observation noise.

Corollary (Kelly+2014): the error is bounded uniformly in time in terms of the variance of the observation noise.


State estimation with chaos, noise: compute the filtering distribution.

EnKF: ensemble & Gaussian approximation.

PO + add. infl. (Kelly+2014)

Error analysis of ETKF (our)

(T.+2024) K. T. & T. Sakajo (2024), SIAM/ASA Journal on Uncertainty Quantification, 12(4), 1315–1335.

Theorem 7.3 (T.+2024)

※ The same result holds for the EAKF (Thesis).

Corollary 7.3 (T.+2024)

Sketch: Error analysis of ETKF (our)

Key point! The norm of the error ① grows at (I) and ② contracts at (II).

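Sketch: Error analysis of ETKF (our)

Strategy: estimate the changes of the error in the DA cycle. The error is expanded by the dynamics, then contracted, with noise added, in the analysis.

① Applying Gronwall's lemma to the model dynamics bounds the prediction error by the exponentially expanded previous error + {additional term}.

From the Kalman update relation, subtracting the true state yields the analysis error as ② contraction applied to the prediction error + ③ obs. noise effect, each estimated by the operator norm.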

Sketch: Error analysis of ETKF (our)

Key estimates in (II): using Woodbury's lemma, we obtain

② contraction (under the assumptions), and

③ a bound on the obs. noise term.

Contraction (see the next slide)
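To see why Woodbury's lemma gives a contraction, here is a numerical sketch under assumptions chosen for illustration only: full observation H = I, R = r²I, and a full-rank ensemble covariance C = UUᵀ. Then the factor multiplying the prediction error is I - K = r²(C + r²I)⁻¹, Woodbury rewrites it with an m × m inverse, and its operator norm is r²/(λ_min(C) + r²) < 1. This is not the thesis's actual estimate, only the mechanism.

```python
import numpy as np

rng = np.random.default_rng(0)
d, m, r2 = 4, 10, 0.5
U = rng.standard_normal((d, m)) / np.sqrt(m - 1)   # scaled anomalies, C = U U^T (full rank)
C = U @ U.T
I_d, I_m = np.eye(d), np.eye(m)

# Full observation (H = I, R = r^2 I): K = C (C + r^2 I)^{-1}, so I - K = r^2 (C + r^2 I)^{-1}.
K = C @ np.linalg.inv(C + r2 * I_d)
contraction = I_d - K
# Woodbury: r^2 (r^2 I + U U^T)^{-1} = I - U (r^2 I_m + U^T U)^{-1} U^T.
woodbury = I_d - U @ np.linalg.inv(r2 * I_m + U.T @ U) @ U.T

lam_min = np.linalg.eigvalsh(C).min()
print(np.linalg.norm(contraction, 2), r2 / (lam_min + r2))   # contraction factor < 1
print(np.linalg.norm(K, 2))                                  # gain acting on obs. noise, < 1
```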

Sketch: Error analysis of ETKF (our)

Estimate the change of the error in each step: (prediction) and (inflation & analysis).
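Combining ①, ② and ③ gives a per-cycle bound of the generic form e_n ≤ θ e_{n-1} + k, where θ < 1 is the product of the growth in (I) and the contraction in (II), and k comes from the observation noise. Iterating this bound gives the typical theorem structure, namely exponential decay of the initial error plus a uniform-in-time noise term. This sketch assumes that generic form; the constants in the actual theorems differ.

```python
def error_bound_sequence(e0, theta, k, n_steps):
    """Iterate the worst case of e_n <= theta * e_{n-1} + k."""
    e = [e0]
    for _ in range(n_steps):
        e.append(theta * e[-1] + k)
    return e


def closed_form_bound(e0, theta, k, n):
    """theta^n e0 + k (1 - theta^n) / (1 - theta), which is <= theta^n e0 + k / (1 - theta)."""
    return theta**n * e0 + k * (1 - theta**n) / (1 - theta)


seq = error_bound_sequence(10.0, 0.8, 0.1, 200)
print(seq[:3], seq[-1])   # the limit k / (1 - theta) = 0.5 does not depend on e0
```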

Comparison

Two Gaussian-based filters (EnKF) are assessed in terms of the state estimation error:

- ETKF with multiplicative inflation (our)
- PO with additive inflation (prev.)

Accurate observation limit

due to

due to

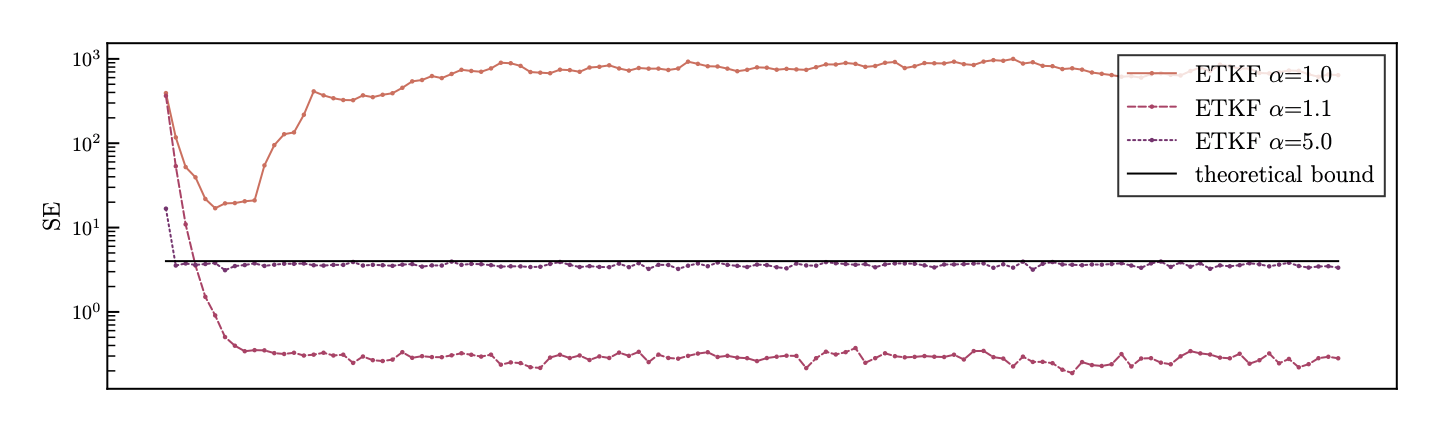

Numerical results

Observing System Simulation Experiment (OSSE): a standard numerical test framework for DA algorithms.

(1) Compute the true solution to (6.2).

(2) Generate random observations as in (6.4).

(3) Assimilate the observed data and obtain the analysis states using a data assimilation algorithm.

(4) Compute the error between the true state and the analysis state for n = 1, 2, ...
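The four OSSE steps can be sketched for ETKF with multiplicative inflation on a small Lorenz 96 system. All settings (D = 8, M = 20 members, inflation 1.1, noise std 0.5, observation interval 0.05, full observation H = I as in (obs-1)) are hypothetical choices for illustration, not those of the thesis experiments.

```python
import numpy as np

# Hypothetical experiment settings, chosen only for illustration.
F, DT, D = 8.0, 0.05, 8          # forcing, observation interval, state dimension
M, INFL, R_STD = 20, 1.1, 0.5    # ensemble size, multiplicative inflation, obs. noise std


def l96_rhs(x):
    """Lorenz 96 right-hand side; axis 0 is the state index, so x may be (D,) or (D, M)."""
    return (np.roll(x, -1, axis=0) - np.roll(x, 2, axis=0)) * np.roll(x, 1, axis=0) - x + F


def model(x, n_sub=5):
    """Flow map over one observation interval, using n_sub RK4 substeps."""
    h = DT / n_sub
    for _ in range(n_sub):
        k1 = l96_rhs(x)
        k2 = l96_rhs(x + 0.5 * h * k1)
        k3 = l96_rhs(x + 0.5 * h * k2)
        k4 = l96_rhs(x + h * k3)
        x = x + h / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
    return x


def etkf_analysis(ens_f, y, H, R, infl):
    """ETKF analysis (symmetric square root) with multiplicative inflation of the anomalies."""
    m = ens_f.shape[1]
    mean_f = ens_f.mean(axis=1)
    X = infl * (ens_f - mean_f[:, None]) / np.sqrt(m - 1)
    Y = H @ X
    Rinv = np.linalg.inv(R)
    w, V = np.linalg.eigh(np.eye(m) + Y.T @ Rinv @ Y)
    mean_a = mean_f + X @ ((V / w) @ V.T @ (Y.T @ (Rinv @ (y - H @ mean_f))))
    return mean_a[:, None] + np.sqrt(m - 1) * (X @ ((V / np.sqrt(w)) @ V.T))


def run_osse(n_cycles=400, seed=0):
    rng = np.random.default_rng(seed)
    truth = F * np.ones(D)
    truth[0] += 0.01
    for _ in range(200):                        # spin-up onto the attractor
        truth = model(truth)
    H, R = np.eye(D), R_STD**2 * np.eye(D)      # full observation
    ens = (truth + rng.standard_normal(D))[:, None] + rng.standard_normal((D, M))
    err_a, err_obs = [], []
    for _ in range(n_cycles):
        truth = model(truth)                                    # (1) true solution
        y = H @ truth + R_STD * rng.standard_normal(D)          # (2) noisy observation
        ens = etkf_analysis(model(ens), y, H, R, INFL)          # (3) prediction + analysis
        err_a.append(np.linalg.norm(ens.mean(axis=1) - truth) / np.sqrt(D))   # (4) error
        err_obs.append(np.linalg.norm(y - truth) / np.sqrt(D))
    return np.array(err_a), np.array(err_obs)


err_a, err_obs = run_osse()
print(f"mean analysis RMSE: {err_a[100:].mean():.3f}, mean obs. RMSE: {err_obs[100:].mean():.3f}")
```

Comparing the analysis error with the raw observation error is a quick sanity check: under full observation, a well-tuned filter should do better than simply trusting the observations.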

Numerical results: OSSE with ETKF for the Lorenz 96 equation

[Figure: estimation errors with strong, weak, and no inflation, compared with the theoretical bound from Theorem 7.3]

[Figure: dependency on the observation noise variance]



State estimation with chaos, noise: compute the filtering distribution.

EnKF: ensemble & Gaussian approximation.

PO + add. infl. (Kelly+2014)

ESRF + multi. infl. (T.+2024)

Summary

We focus on sequential state estimation using a model and data.

Results: error analysis of a practical DA algorithm (ETKF) under an ideal setting (T.+2024).

Discussion: two issues with extending our analysis (rank deficient, non-symmetric) → should be relaxed in the future.

Discussion

Extending the current result (T.+2024) in the future:

1. Partial observation → Issue 1, 2 (partially solved, T.)
2. Small ensemble → Issue 1
3. Infinite-dimension → Issue 1

Issues:

- Issue 1: rank deficient
- Issue 2: non-symmetric

Discussion

Issue 1 (rank deficient) affects ② error contraction in (II). It arises with:

1. Partial observation
2. Small ensemble
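Both sources of Issue 1 can be illustrated in the simplified setting H = I or a coordinate projection, with R = r²I (assumed for illustration). With a small ensemble, the sample covariance is rank deficient, so the factor I - K = r²(C + r²I)⁻¹ has operator norm 1: no contraction in the directions the ensemble does not span. Covariance-level additive inflation restores a norm below 1. With partial observation, I - KH leaves every unobserved direction unchanged.

```python
import numpy as np

rng = np.random.default_rng(0)
d, m, r2, a2 = 6, 4, 0.5, 0.1                 # small ensemble: m - 1 = 3 < d = 6
C = np.cov(rng.standard_normal((d, m)))       # rank-deficient sample covariance
I = np.eye(d)
rank_C = int(np.linalg.matrix_rank(C))

# Full observation: the factor on the prediction error is I - K = r^2 (C + r^2 I)^{-1}.
norm_small_ens = np.linalg.norm(r2 * np.linalg.inv(C + r2 * I), 2)
# Covariance-level additive inflation C + a^2 I restores a strict contraction.
norm_additive = np.linalg.norm(r2 * np.linalg.inv(C + a2 * I + r2 * I), 2)

# Partial observation: components 3, 4, 5 are unobserved, so I - K H leaves them unchanged.
H = I[:3]
K = C @ H.T @ np.linalg.inv(H @ C @ H.T + r2 * np.eye(3))
e_unobs = I[:, 5]
I_minus_KH = I - K @ H
print(rank_C, norm_small_ens, norm_additive, I_minus_KH @ e_unobs)
```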

Discussion

Issue 2 (non-symmetric) affects ③ the bound of the obs. noise term: if the matrix is non-symmetric, the bound doesn't hold in general.

Example
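Here is one simple illustration of Issue 2, constructed for this purpose and not necessarily the example on the slide. For a symmetric positive semidefinite C, the factor C(C + r²I)⁻¹ acting on the obs. noise has operator norm below 1. For a non-symmetric matrix with the same kind of positive eigenvalues, the same expression can have a norm much larger than 1. The helper name `noise_gain` is hypothetical.

```python
import numpy as np


def noise_gain(A, r2):
    """A (A + r^2 I)^{-1}: the factor multiplying the obs. noise when H = I."""
    return A @ np.linalg.inv(A + r2 * np.eye(A.shape[0]))


r2 = 1.0
C_sym = np.array([[2.0, 1.0], [1.0, 2.0]])        # symmetric, eigenvalues 1 and 3
A_nonsym = np.array([[1.0, 100.0], [0.0, 1.0]])   # eigenvalues 1 and 1, but non-symmetric
print(np.linalg.norm(noise_gain(C_sym, r2), 2))     # 3 / (3 + 1) = 0.75
print(noise_gain(A_nonsym, r2))                     # [[0.5, 25.0], [0.0, 0.5]]
print(np.linalg.norm(noise_gain(A_nonsym, r2), 2))  # much larger than 1
```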

Discussion

Infinite-dimension (※with full observations, e.g., 2D-NSE with the Sobolev space):

- Theorem (Kelly+2014) for PO + add. infl.: still holds (additive inflation improves rank).
- Theorem (T.+2024) for ETKF + multi. infl.: doesn't hold (due to Issue 1: rank deficient).

Summary

I focus on sequential state estimation using a model and data.

Results: error analysis of a practical DA algorithm (ETKF) under an ideal setting (T.+2024).

Discussion: two issues with extending our analysis (rank deficient, non-symmetric) → should be relaxed → other problems in the future.

Future Plans

From the Thesis (DA) toward NWP / Fluid Mechanics and UQ:

- Contributing to NWP, collaborating with numerics and machine learning.
- Continuing and developing novel algorithms; other applications.

UQ (Uncertainty Quantification): the quantitative study of uncertainties in real-world applications.

Mathematics: functional analysis, Gronwall's inequality, numerical analysis, Lyapunov analysis, large deviations, operator algebra, computational topology, ...

Future plan

Data Assimilation (DA):

- Control
  - Nudging/Continuous Data Assimilation
- Bayesian
  - Particle Filter (PF)
  - Ensemble Kalman filter (EnKF)
- Variational
  - Four-dimensional Variational method (4DVar)

Related topics: PDE, machine learning, back propagation, supervised learning, optimization, dynamical systems, SDE, dynamics, uncertainty quantification, probability/statistics & related topics, random ODE, numerical analysis, structure-preserving schemes, numerical error, Lyapunov analysis, operator algebra, Koopman operator, quantum DA, optimal transport, diffusion AI.

References

- (T.+2024) K. T. & T. Sakajo, SIAM/ASA Journal on Uncertainty Quantification, 12(4), 1315–1335.

- (T.) Kota Takeda, Error Analysis of the Ensemble Square Root Filter for Dissipative Dynamical Systems, PhD Thesis, Kyoto University, 2025.

- (Kelly+2014) D. T. B. Kelly, K. J. H. Law, and A. M. Stuart (2014), Well-posedness and accuracy of the ensemble Kalman filter in discrete and continuous time, Nonlinearity, 27, pp. 2579–2603.

- (Al-Ghattas+2024) O. Al-Ghattas and D. Sanz-Alonso (2024), Non-asymptotic analysis of ensemble Kalman updates: Effective dimension and localization, Information and Inference: A Journal of the IMA, 13.

- (Tong+2016a) X. T. Tong, A. J. Majda, and D. Kelly (2016), Nonlinear stability and ergodicity of ensemble based Kalman filters, Nonlinearity, 29, pp. 657–691.

- (Tong+2016b) X. T. Tong, A. J. Majda, and D. Kelly (2016), Nonlinear stability of the ensemble Kalman filter with adaptive covariance inflation, Comm. Math. Sci., 14, pp. 1283–1313.

- (Kwiatkowski+2015) E. Kwiatkowski and J. Mandel (2015), Convergence of the square root ensemble Kalman filter in the large ensemble limit, SIAM/ASA J. Uncertain. Quantif., 3, pp. 1–17.

- (Mandel+2011) J. Mandel, L. Cobb, and J. D. Beezley (2011), On the convergence of the ensemble Kalman filter, Appl. Math., 56, pp. 533–541.

References

- (de Wiljes+2018) J. de Wiljes, S. Reich, and W. Stannat (2018), Long-Time Stability and Accuracy of the Ensemble Kalman-Bucy Filter for Fully Observed Processes and Small Measurement Noise, SIAM J. Appl. Dyn. Syst., 17, pp. 1152–1181.

- (Burgers+1998) G. Burgers, P. J. van Leeuwen, and G. Evensen (1998), Analysis Scheme in the Ensemble Kalman Filter, Mon. Weather Rev., 126, 1719–1724.

- (Bishop+2001) C. H. Bishop, B. J. Etherton, and S. J. Majumdar (2001), Adaptive Sampling with the Ensemble Transform Kalman Filter. Part I: Theoretical Aspects, Mon. Weather Rev., 129, 420–436.

- (Anderson 2001) J. L. Anderson (2001), An Ensemble Adjustment Kalman Filter for Data Assimilation, Mon. Weather Rev., 129, 2884–2903.

- (Dieci+1999) L. Dieci and T. Eirola (1999), On smooth decompositions of matrices, SIAM J. Matrix Anal. Appl., 20, pp. 800–819.

- (Reich+2015) S. Reich and C. Cotter (2015), Probabilistic Forecasting and Bayesian Data Assimilation, Cambridge University Press, Cambridge.

- (Law+2015) K. J. H. Law, A. M. Stuart, and K. C. Zygalakis (2015), Data Assimilation: A Mathematical Introduction, Springer.

Appendix

Further result for partial obs.

The projected PO + add. infl. with partial obs. for Lorenz 96

Illustration (the special partial observations for the Lorenz 96 equation): ○ ○ × ○ ○ × ○ ○ × ○ ○ × (○: observed, ×: unobserved)

Further result for partial obs.

The projected PO + add. infl. with partial obs. for Lorenz 96

Theorem (T.)

The projected add. infl.:

key 2

key 1

Problems on numerical analysis

- Discretization/Model errors

- Dimension-reduction for dissipative dynamics

- Numerics for Uncertainty Quantification (UQ)

- Imbalance problems

1. Discretization/Model errors

Motivation

- Including discretization errors in the error analysis.

- Consider more general model errors and ML models.

- Error analysis with ML/surrogate models.

cf.: S. Reich & C. Cotter (2015), Probabilistic Forecasting and Bayesian Data Assimilation, Cambridge University Press.

Question

- Can we introduce the errors as random noises in models?

- Can minor modifications to the existing error analysis work?

2. Dimension-reduction for dissipative dynamics

Motivation

- Defining "essential dimension" and relaxing the condition:

- should be independent of discretization.

cf.: C. González-Tokman & B. R. Hunt (2013), Ensemble data assimilation for hyperbolic systems, Physica D: Nonlinear Phenomena, 243(1), 128-142.

Question

- Dissipativeness, dimension of the attractor?

- Does the dimension depend on time and state? (hetero chaos)

- Related to the SVD and the low-rank approximation?

Attractor

3. Numerics for UQ

Motivation

- Evaluating physical parameters in theory.

- Computing the Lyapunov spectrum of atmospheric models.

- Estimating unstable modes (e.g., singular vectors of Jacobian).

cf.: T. J. Bridges & S. Reich (2001), Computing Lyapunov exponents on a Stiefel manifold. Physica D, 156, 219–238.

F. Ginelli et al. (2007), Characterizing Dynamics with Covariant Lyapunov Vectors, Phys. Rev. Lett. 99(13), 130601.

Y. Saiki & M. U. Kobayashi (2010), Numerical identification of nonhyperbolicity of the Lorenz system through Lyapunov vectors, JSIAM Letters, 2, 107-110.

Question

- Can we approximate them only using ensemble simulations?

- Can we estimate their approximation errors?

4. Imbalance problems

Motivation

- Assimilating observations can break the balance relations inherited from dynamics. → numerical divergence

(e.g., the geostrophic balance and gravity waves)

- Explaining the mechanism (sparsity or noise of observations?).

- Only ad hoc remedies (Initialization, IAU).

cf.: E. Kalnay (2003), Atmospheric modeling, data assimilation and predictability, Cambridge University Press. Hastermann et al. (2021), Balanced data assimilation for highly oscillatory mechanical systems, Communications in Applied Mathematics and Computational Science, 16(1), 119–154.

Question

- Need to define a space of balanced states?

- Need to discuss the analysis state belonging to the space?

- Can we utilize structure-preserving schemes?

balanced states
