Flatiron Cluster Intro

Lehman Garrison

Scientific Computing Core, Flatiron Institute

CCA Fluid Dynamics Summer School

July 31, 2023

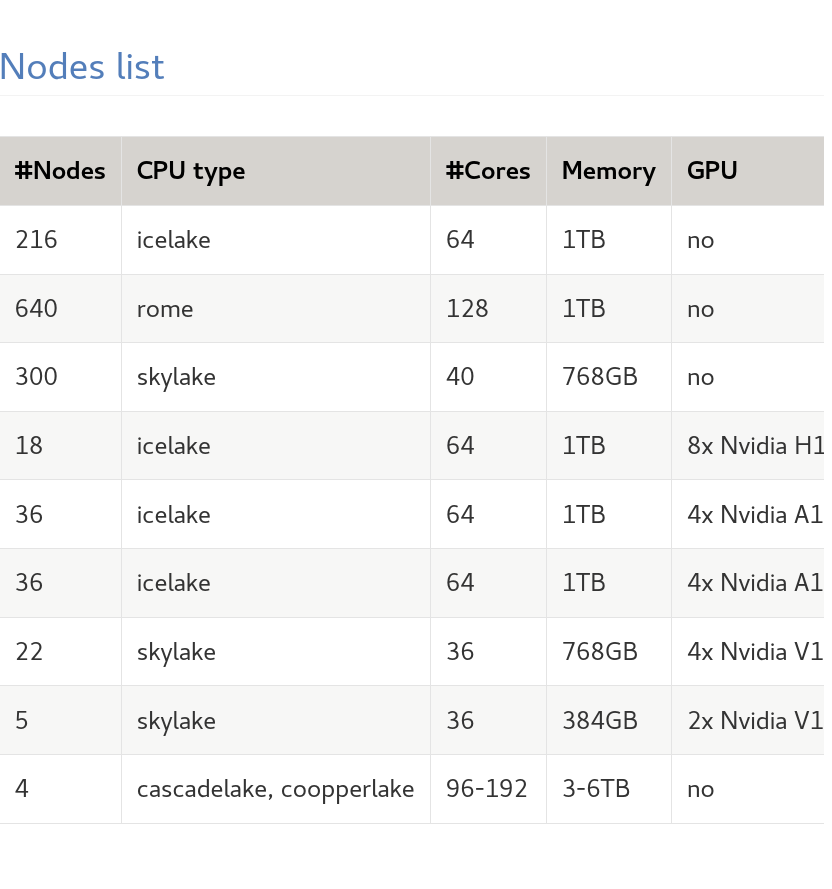

Intro to rusty

- This week you'll be logging into the "rusty" cluster

- A partition of nodes has been set aside for you!

- 24 x Intel Skylake nodes, 40 cores each, 768 GB RAM

- Each user can allocate 10 CPUs

- Submit to the "temp" partition—we'll see how shortly

- Two filesystems:

- Home ($HOME): keep code, scripts, git repos, etc., here

- Ceph ($HOME/ceph): keep data here

- Storage will be purged on Saturday

- First, let's set up your accounts

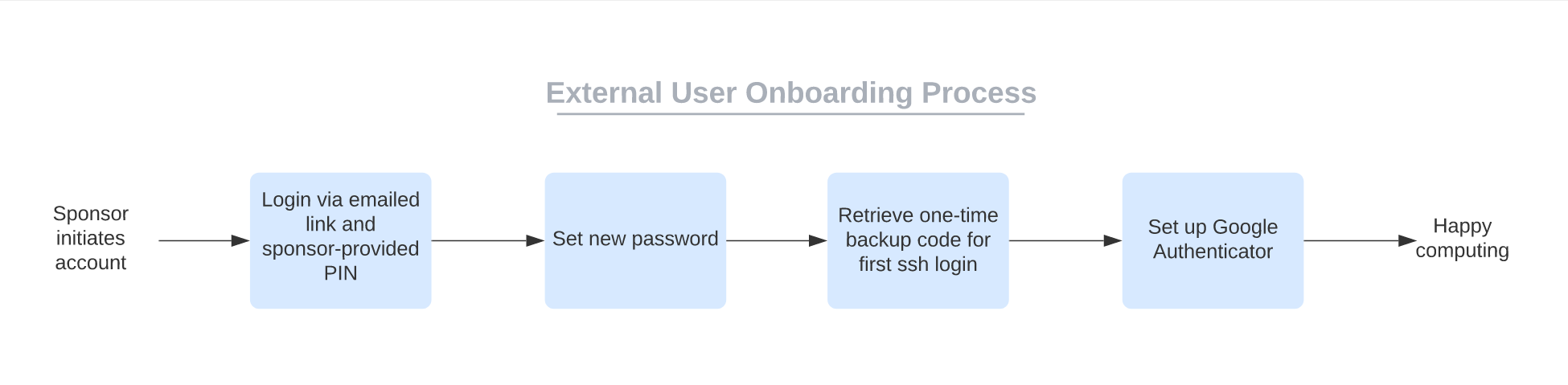

rusty Account Setup

- Find the FIDO email with your login link

- Email is from fido@flatironinstitute.org

- Use the PIN from the spreadsheet to log in

- Lehman will show the spreadsheet now

- Set a new password

- On FIDO, click "Retrieve google-authenticator backup code". Copy the code.

- SSH into the cluster

ssh -p 61022 USERNAME@gateway.flatironinstitute.org

- Use password and backup code

- Run the following to generate a QR code

google-authenticator -td -w3 -r3 -R30 -f

- Scan the QR code with Google Authenticator (or any authenticator app)

- Done! Try closing your terminal and logging in again.
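To avoid retyping the port on every login, the connection details can be kept in an SSH client config. The following is a minimal sketch, not an official recommendation: the host aliases "gateway" and "rusty" are illustrative, USERNAME is a placeholder, and it assumes the cluster permits OpenSSH's ProxyJump through gateway. The fragment is written to a scratch file so it can be reviewed before anything is copied into ~/.ssh/config.

```shell
# Write a candidate SSH config fragment to a scratch file for review.
# USERNAME is a placeholder; replace it with your own cluster username.
ssh_fragment="$(mktemp)"

cat > "$ssh_fragment" <<'EOF'
# Alias for the gateway, using the port from the slides
Host gateway
    HostName gateway.flatironinstitute.org
    Port 61022
    User USERNAME

# Assumption: "rusty" resolves from gateway, as "ssh rusty" does there
Host rusty
    User USERNAME
    ProxyJump gateway
EOF

# Show the fragment; append it to ~/.ssh/config by hand if it looks right
cat "$ssh_fragment"
```

With such a fragment in place, "ssh gateway" would be equivalent to the full command above, and "ssh rusty" from your laptop would hop through gateway in one step. You would still be prompted for your password and authenticator code.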

Intro to Slurm

- You'll submit jobs to the cluster using Slurm

- Let's try it now! First SSH to rusty from gateway:

ssh rusty

- Create a file called hello.sbatch:

#!/bin/bash

#SBATCH -p temp # partition

echo Hello from $(hostname)

- Submit it with:

sbatch hello.sbatch

- Check the status with:

squeue --me

- Look for a file named "slurm-<JOBID>.out"
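The create, submit, and check steps can also be run as one short script. This is a sketch under a few assumptions: it writes hello.sbatch into the current directory, it uses sbatch's --parsable option to capture the job ID, and it falls back to a message when sbatch is not on the PATH (for example, when run somewhere other than rusty).

```shell
# Create the job script exactly as shown in the slides.
# The quoted 'EOF' keeps $(hostname) unexpanded until the job runs.
cat > hello.sbatch <<'EOF'
#!/bin/bash
#SBATCH -p temp  # partition
echo Hello from $(hostname)
EOF

if command -v sbatch >/dev/null 2>&1; then
    # --parsable prints the job ID (optionally followed by ";cluster")
    job_id="$(sbatch --parsable hello.sbatch)"
    job_id="${job_id%%;*}"
    echo "Submitted job $job_id; output will appear in slurm-${job_id}.out"
    squeue --me
else
    echo "sbatch not found; run this on rusty"
fi
```

Once squeue no longer lists the job, the output file named in the message can be read with cat.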

Intro to Slurm (2)

- Take a look at the more realistic example, parallel.sbatch, below

- How many times will you see "Hello from $(hostname)" in the output?

- Your instructors will give you Slurm scripts during the classes

- Remember, each summer school user uses the "temp" partition and is limited to 10 cores

parallel.sbatch:

#!/bin/bash

#SBATCH -p temp # partition

#SBATCH -t 0-1 # 1 hour

#SBATCH -n 5 # 5 tasks

#SBATCH -c 2 # 2 cores/task

#SBATCH --mem-per-cpu 16G

echo Hello from $(hostname)
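Before submitting a script like parallel.sbatch, it can help to work out what it requests in total. The sketch below multiplies out the -n, -c, and --mem-per-cpu values from the example and compares the core count with the 10-core limit. The variable names are illustrative, and the arithmetic assumes one Slurm "CPU" corresponds to one core.

```shell
# Values copied from the #SBATCH lines in parallel.sbatch
ntasks=5            # -n 5
cpus_per_task=2     # -c 2
mem_per_cpu_gb=16   # --mem-per-cpu 16G
core_limit=10       # per-user limit for the summer school

# Total request = tasks x cores per task; memory scales with allocated CPUs
total_cpus=$((ntasks * cpus_per_task))
total_mem_gb=$((total_cpus * mem_per_cpu_gb))

echo "Total CPUs requested: $total_cpus"
echo "Total memory requested: ${total_mem_gb}G"

if [ "$total_cpus" -le "$core_limit" ]; then
    echo "Within the ${core_limit}-core limit"
else
    echo "Over the ${core_limit}-core limit; reduce -n or -c"
fi
```

For the example script this gives 10 CPUs and 160G of memory, which is exactly at the core limit.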

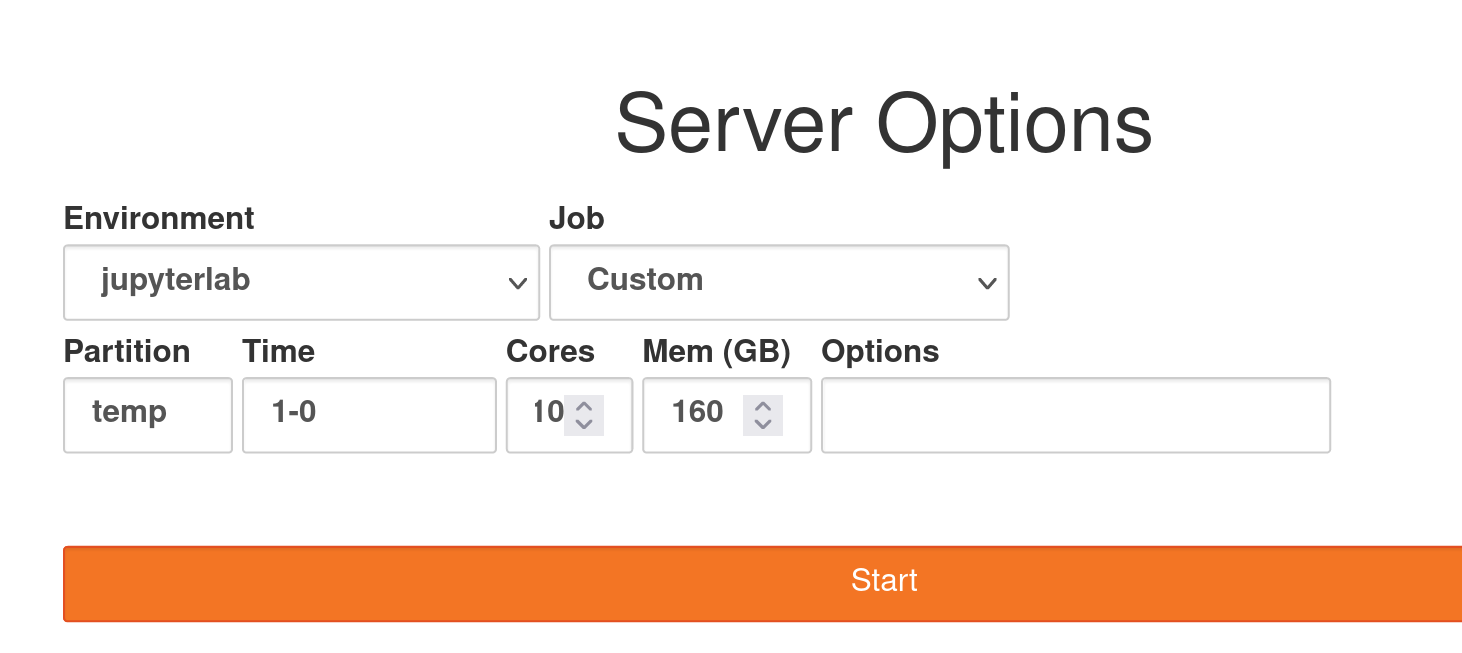

JupyterHub

- Easiest way to run Jupyter notebooks on the cluster is through JupyterHub

- Use the "temp" partition

Create a Jupyter Kernel

To install Python packages and make them visible to Jupyter, you need to create a venv and a custom kernel:

1. Create a venv:

module load python

python -m venv fluidenv --system-site-packages

source fluidenv/bin/activate

2. Create a kernel:

module load jupyter-kernels

python -m make-custom-kernel fluid

3. Open a notebook on JupyterHub and select the fluid kernel

4. In the future, to install a package, run:

source fluidenv/bin/activate

pip install ...
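A common mistake in step 4 is running pip without the venv active, which sends packages somewhere the fluid kernel may not see. The sketch below is one way to check first. The helper name venv_status is hypothetical; it relies only on the VIRTUAL_ENV variable that a venv's activate script sets.

```shell
# Hypothetical helper: report whether a Python venv is active in this shell.
# The venv's activate script exports VIRTUAL_ENV; deactivate unsets it.
venv_status() {
    if [ -n "${VIRTUAL_ENV:-}" ]; then
        echo "venv active: $VIRTUAL_ENV"
    else
        echo "No venv active; run: source fluidenv/bin/activate"
        return 1
    fi
}

# Check before installing anything with pip
venv_status || true
```

If the helper reports no active venv, activate fluidenv as in step 4 and check again before running pip.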

rusty Cheat Sheet

- Connecting

- Use "-p 61022" when SSH-ing to gateway to select the right port

- Use "ssh rusty" from gateway

- Storage

- Scripts and code go in your home directory ($HOME)

- Data goes in ceph ($HOME/ceph)

- Slurm

- Submit to the "temp" partition with "-p temp"

- Ask for <= 10 cores

- Jupyter

- Launch Jupyter sessions from jupyter.flatironinstitute.org

- Activate your venv before installing pip packages

- Have fun!
