memory hierarchy and virtual memory

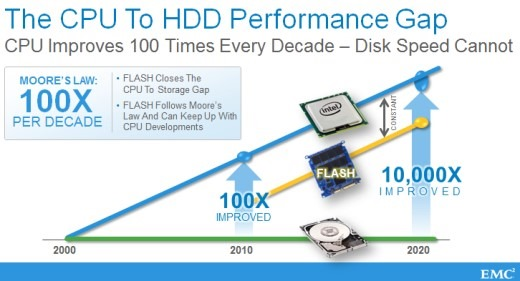

Since 1980, CPU performance has outpaced DRAM performance

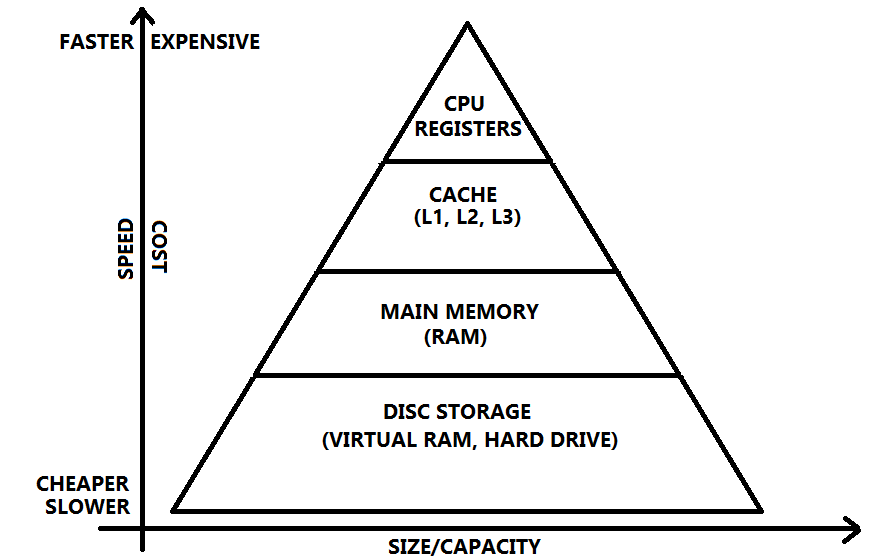

what is memory hierarchy?

In computer architecture, the memory hierarchy organizes storage into levels by speed and size. The concept is used when discussing performance issues in computer architectural design, algorithm predictions, and lower-level programming constructs that involve locality of reference.

temporal (time)

Recently accessed items will be accessed in the near future.

ex. code in loops, top of stack

spatial (space)

Items at addresses close to the addresses of recently accessed items will be accessed in the near future (sequential code, elements of arrays)

principle of locality

Programs tend to use data and instructions with addresses near or equal to those they have used recently
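As a rough illustration of spatial locality, the sketch below counts how often consecutive accesses fall into a different cache block when the same 2D array is walked row by row versus column by column. The 64-byte block size, 8-byte element size, row-major layout, and all function names are assumptions made for this example.

```python
# Hypothetical parameters: 64-byte cache blocks, 8-byte array elements.
BLOCK_SIZE = 64
ELEM_SIZE = 8

def block_switches(addresses, block_size=BLOCK_SIZE):
    """Count how often consecutive accesses land in a different block."""
    switches = 0
    previous = None
    for addr in addresses:
        block = addr // block_size
        if previous is not None and block != previous:
            switches += 1
        previous = block
    return switches

def row_by_row(rows, cols):
    """Byte addresses touched when a row-major array is walked row by row."""
    return [(r * cols + c) * ELEM_SIZE for r in range(rows) for c in range(cols)]

def column_by_column(rows, cols):
    """Byte addresses touched when the same array is walked column by column."""
    return [(r * cols + c) * ELEM_SIZE for c in range(cols) for r in range(rows)]

sequential = block_switches(row_by_row(64, 64))      # 511
strided = block_switches(column_by_column(64, 64))   # 4095
```

The row-by-row walk stays inside each block for eight accesses in a row, while the column-by-column walk jumps to a new block on every access, which is the pattern a cache handles worst.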

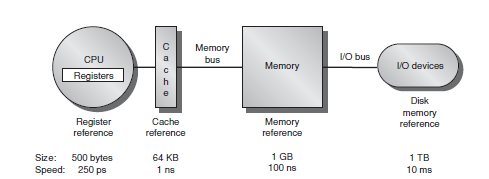

memory hierarchy

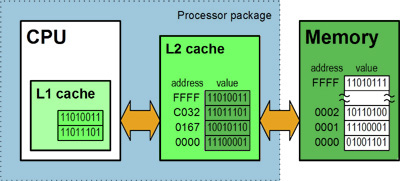

CACHE MEMORY

Cache memory is memory embedded inside the CPU. It is very fast, typically taking only one to a few cycles to access, but because it is embedded directly into the CPU there is a limit to how big it can be. In fact, there are several sub-levels of cache memory (termed L1, L2, L3), each larger and somewhat slower than the one before it.

CACHE FUNDAMENTALS

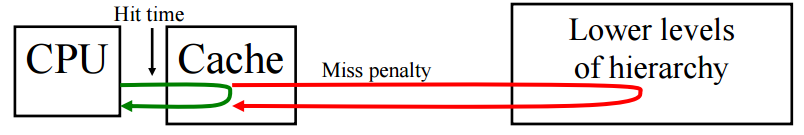

| Term | Meaning |
|---|---|
| hit | data found in cache |
| hit time | time to access the higher cache |
| hit rate | % of time data is found in the higher cache |
| miss | data not found in cache |
| miss penalty | time to move data from lower to upper level, then to CPU |
| miss rate | 1 - (hit rate) |
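These terms are commonly combined into the average memory access time (AMAT): hit time plus miss rate times miss penalty. The sketch below works one example; the 1-cycle hit time, 95% hit rate, and 100-cycle miss penalty are made-up numbers chosen only for illustration.

```python
def average_memory_access_time(hit_time, miss_rate, miss_penalty):
    """AMAT = hit time + miss rate * miss penalty (all times in the same unit)."""
    return hit_time + miss_rate * miss_penalty

# Hypothetical values, not measurements of any real machine.
hit_rate = 0.95
miss_rate = 1 - hit_rate
amat = average_memory_access_time(hit_time=1, miss_rate=miss_rate, miss_penalty=100)
# amat is about 6 cycles: even a 5% miss rate dominates the 1-cycle hit time
```

This is why the three levers for cache performance are the miss rate, the miss penalty, and the hit time.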

types of cache misses (Three c's)

| Type | Cause |
|---|---|
| Compulsory | The first reference to a block of memory, starting with an empty cache. |
| Capacity | The cache is not big enough to hold every block you want to use. |
| Conflict | Two blocks are mapped to the same location and there is not enough room to hold both. |
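One common way to tell the three kinds apart is to run the same trace through a fully associative LRU cache of equal size: a miss on a never-seen block is compulsory, a miss that the fully associative cache would also suffer is capacity, and the rest are conflict. The sketch below applies that rule to a direct-mapped cache; the function name and the traces are hypothetical.

```python
from collections import OrderedDict

def classify_misses(block_trace, num_lines):
    """Classify accesses to a direct-mapped cache with num_lines lines."""
    direct = {}             # line index -> block currently stored there
    fully = OrderedDict()   # reference cache: fully associative, LRU, same size
    seen = set()            # every block referenced so far
    counts = {"hit": 0, "compulsory": 0, "capacity": 0, "conflict": 0}
    for block in block_trace:
        line = block % num_lines
        direct_hit = direct.get(line) == block
        fully_hit = block in fully
        # Update the fully associative reference cache.
        if fully_hit:
            fully.move_to_end(block)
        else:
            if len(fully) == num_lines:
                fully.popitem(last=False)   # evict the least recently used block
            fully[block] = True
        direct[line] = block                # direct-mapped: only one possible slot
        if direct_hit:
            counts["hit"] += 1
        elif block not in seen:
            counts["compulsory"] += 1
        elif not fully_hit:
            counts["capacity"] += 1
        else:
            counts["conflict"] += 1
        seen.add(block)
    return counts

# Blocks 0 and 4 share line 0 of a 4-line cache and keep evicting each other.
ping_pong = classify_misses([0, 4, 0, 4], num_lines=4)
# Five distinct blocks cannot all fit in four lines.
too_big = classify_misses([0, 1, 2, 3, 4, 0], num_lines=4)
```

In the first trace the two repeat accesses are conflict misses, since a fully associative cache of the same size would have held both blocks; in the second, the final access to block 0 is a capacity miss.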

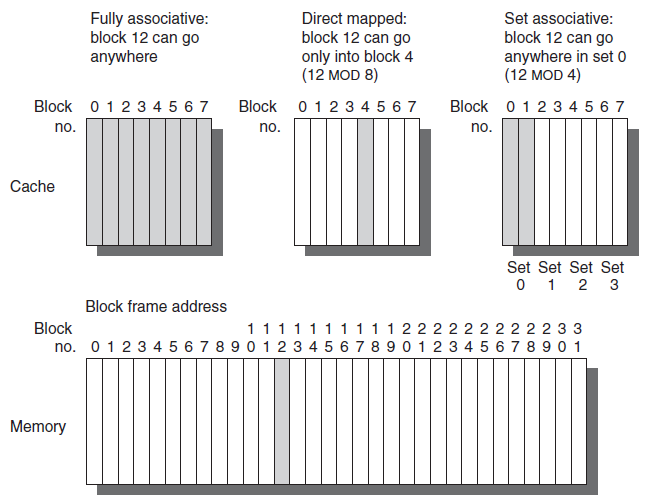

CACHE block placement

(Where can a block be placed in a cache?)

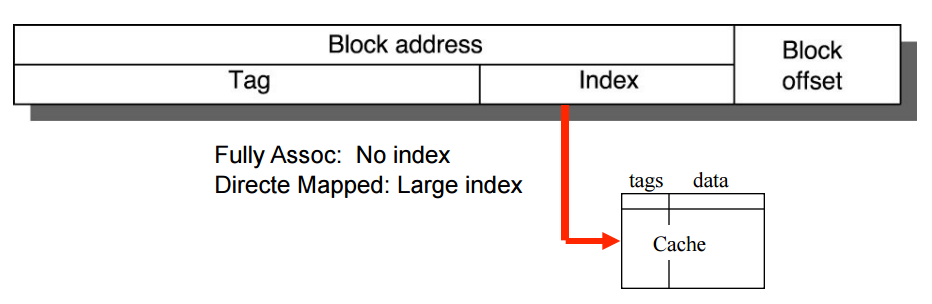

CACHE block Identification

(How is a block found if it is in the cache?)

| Field | Use |
|---|---|
| Tag | used to check all the blocks in the set |
| Index | used to select the set |
| Block Offset | address of the desired data within the block |
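A minimal sketch of how an address could be split into these three fields, assuming a hypothetical cache with 64-byte blocks and 128 sets (both powers of two, so the fields are plain bit ranges). The function name and parameters are made up for this example.

```python
def split_address(address, block_size=64, num_sets=128):
    """Split an address into (tag, index, block offset).

    Assumes block_size and num_sets are powers of two.
    """
    offset_bits = block_size.bit_length() - 1   # log2(block_size)
    index_bits = num_sets.bit_length() - 1      # log2(num_sets)
    offset = address & (block_size - 1)                  # lowest bits
    index = (address >> offset_bits) & (num_sets - 1)    # middle bits
    tag = address >> (offset_bits + index_bits)          # remaining high bits
    return tag, index, offset

tag, index, offset = split_address(0x12345)   # (9, 13, 5)
```

The index picks the set, the tag is compared against every block in that set, and the offset selects the byte inside the matching block.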

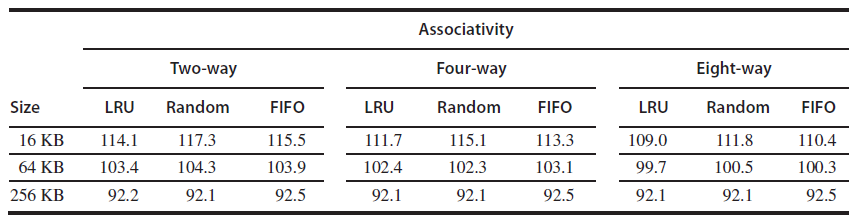

CACHE block REPLACEMENT

(Which block should be replaced on a miss?)

| Placement | Replacement choice |
|---|---|
| Direct Mapped | Easy (only one choice) |
| Set Associative or Fully Associative | Random, LRU (Least Recently Used), or FIFO (First-In First-Out) |
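To see how LRU and FIFO can differ, the sketch below counts misses for a single 2-way set under each policy. The trace is made up to favor LRU (block 1 is reused constantly); other traces can favor FIFO, so this is an illustration rather than a ranking.

```python
from collections import OrderedDict, deque

def count_misses_lru(trace, ways):
    """Misses for one set that evicts the least recently used block."""
    cache = OrderedDict()
    misses = 0
    for block in trace:
        if block in cache:
            cache.move_to_end(block)        # mark as most recently used
        else:
            misses += 1
            if len(cache) == ways:
                cache.popitem(last=False)   # evict least recently used
            cache[block] = True
    return misses

def count_misses_fifo(trace, ways):
    """Misses for one set that evicts the block that arrived first."""
    queue = deque()
    resident = set()
    misses = 0
    for block in trace:
        if block not in resident:
            misses += 1
            if len(queue) == ways:
                resident.discard(queue.popleft())   # evict oldest arrival
            queue.append(block)
            resident.add(block)
    return misses

trace = [1, 2, 1, 3, 1, 4, 1, 5]   # hypothetical block numbers
lru_misses = count_misses_lru(trace, ways=2)     # 5
fifo_misses = count_misses_fifo(trace, ways=2)   # 6
```

FIFO evicts block 1 once even though it is still in active use, because it only tracks arrival order, not recent use.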

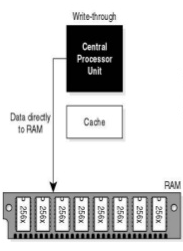

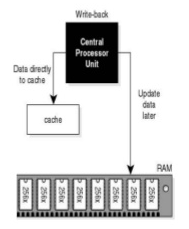

CACHE WRITE STRATEGY

(What happens on a write?)

| | Write-Through | Write-Back |
|---|---|---|
| Policy | Data written to the cache is also written to lower-level memory | Write data only to the cache; update the lower level when a block falls out of the cache |
| Debug | Easy | Hard |
| Do read misses produce writes? | No | Yes |
| Do repeated writes make it to the lower level? | Yes | No |
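The effect of repeated writes can be sketched with a toy one-block cache that counts how many writes reach the lower level. The model assumes write-allocate behavior and does not flush the last dirty block at the end; the function name and the trace are hypothetical.

```python
def lower_level_writes(writes, policy):
    """Count writes that reach the lower level for a toy one-block cache.

    writes: sequence of block numbers being written.
    policy: "write-through" or "write-back".
    """
    if policy not in ("write-through", "write-back"):
        raise ValueError("unknown policy: " + policy)
    lower_writes = 0
    cached_block = None
    dirty = False
    for block in writes:
        if block != cached_block:
            # The old block falls out of the cache; write-back saves it now.
            if policy == "write-back" and dirty:
                lower_writes += 1
            cached_block = block
            dirty = False
        if policy == "write-through":
            lower_writes += 1   # every write also goes to the lower level
        else:
            dirty = True        # only mark the cached copy as modified
    return lower_writes

writes = [7, 7, 7, 7, 9]   # four writes to block 7, then one to block 9
through = lower_level_writes(writes, "write-through")   # 5
back = lower_level_writes(writes, "write-back")         # 1
```

With write-through all five writes reach the lower level; with write-back the four writes to block 7 collapse into a single update when that block is evicted.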

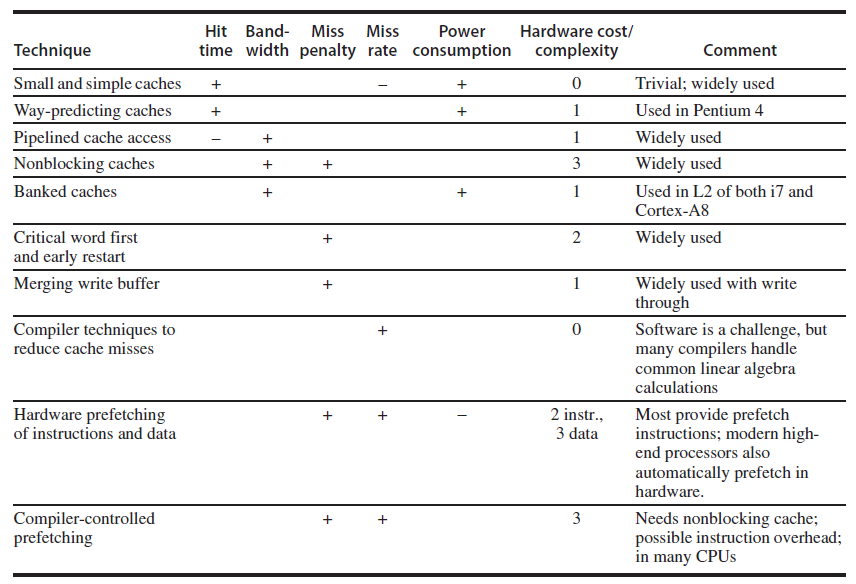

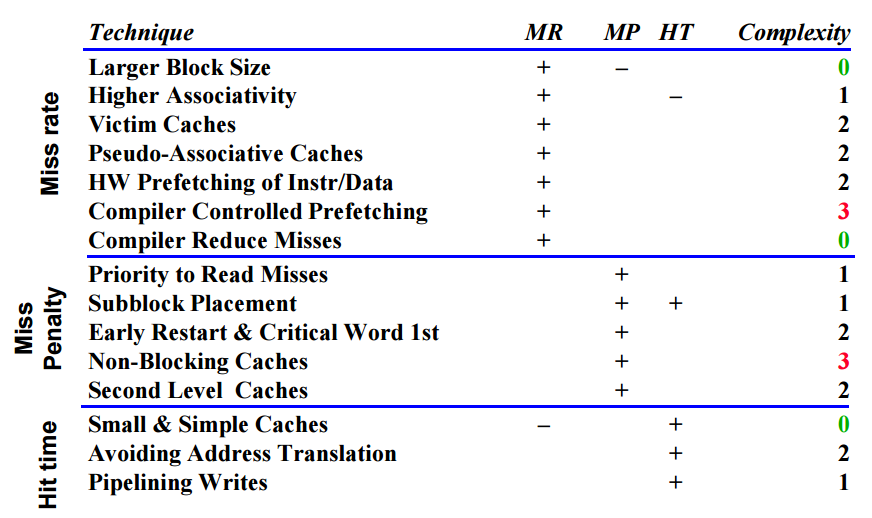

how to improve cache performance?

- Reduce the miss rate

- Reduce the miss penalty

- Reduce the hit time

cache performance optimizations

MAIN MEMORY

All instructions and storage addresses for the processor must come from RAM. Although RAM is very fast, there is still some significant time taken for the CPU to access it (this is termed latency). RAM is stored in separate, dedicated chips attached to the motherboard, meaning it is much larger than cache memory.

SRAM (used by cache)

static RAM

1-5 ns access time

6 transistors/bit

no periodic refresh

expensive

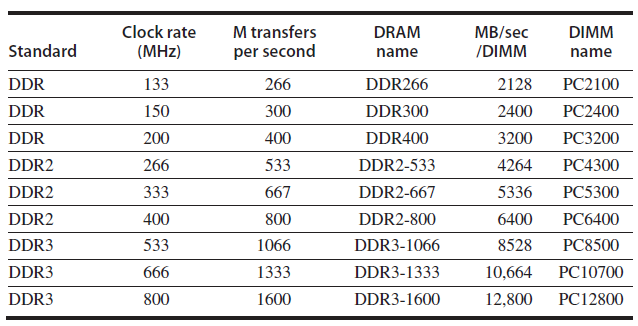

DRAM

dynamic RAM

40-60 ns

1 transistor/bit

need periodic refresh

cheaper

ex. PC2100 comes from 133 MHz × 2 × 8 bytes or 2100 MB/sec
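The PC2100 arithmetic can be checked with a small helper. The function and parameter names are made up for this sketch; the inputs are the ones from the example above (133 MHz clock, 2 transfers per clock, 8-byte bus).

```python
def peak_bandwidth_mb_per_s(clock_mhz, transfers_per_clock, bus_bytes):
    """Peak transfer rate in MB/s: clock rate * transfers per clock * bus width."""
    return clock_mhz * transfers_per_clock * bus_bytes

pc2100 = peak_bandwidth_mb_per_s(133, 2, 8)   # 2128, rounded to 2100 in the name
```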

frequency

cycles/second (ex. 1000 MHz)

T = 1/f

= 1/(1000 MHz)

= 1 ns (clock period)

latency

timing of the RAM

delay in number of clock cycles

CAS (Column Address Strobe) Latency or CL (ex. CL20)

Response Time = T * CL (ex. 1000 MHz, CL10)

= 1 ns * 10

= 10 ns

Response Time = T * CL (ex. 2000 MHz, CL20)

= 0.5 ns * 20

= 10 ns
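The same calculation as a short sketch, assuming two hypothetical modules: one clocked at 1000 MHz with CL10 and one at 2000 MHz with CL20. The function names are made up for this example.

```python
def clock_period_ns(frequency_mhz):
    """T = 1/f, converted so that MHz in gives nanoseconds out."""
    return 1000.0 / frequency_mhz

def response_time_ns(frequency_mhz, cas_latency):
    """Response time = clock period * CAS latency (in clock cycles)."""
    return clock_period_ns(frequency_mhz) * cas_latency

slow_clock = response_time_ns(1000, 10)   # 1 ns * 10 = 10 ns
fast_clock = response_time_ns(2000, 20)   # 0.5 ns * 20 = 10 ns
```

A higher CL number does not by itself mean slower RAM: doubling the clock while doubling CL leaves the response time unchanged.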

DISK MEMORY

We are all familiar with software arriving on a floppy disk or CDROM, and saving our files to the hard disk. We are also familiar with the long time a program can take to load from the hard disk -- having physical mechanisms such as spinning disks and moving heads means disks are the slowest form of storage. But they are also by far the largest form of storage.

FLASH MEMORY

Expensive to manufacture

Not yet fully available (as of 2015)

Source: http://theydiffer.com/difference-between-flash-memory-and-hard-drive/

Fast operating speed

Lasts a long time

Small in size

HARD DRIVE

Relatively cheap

Abundantly available

Slow operating speed

Short lifespan

Large in size

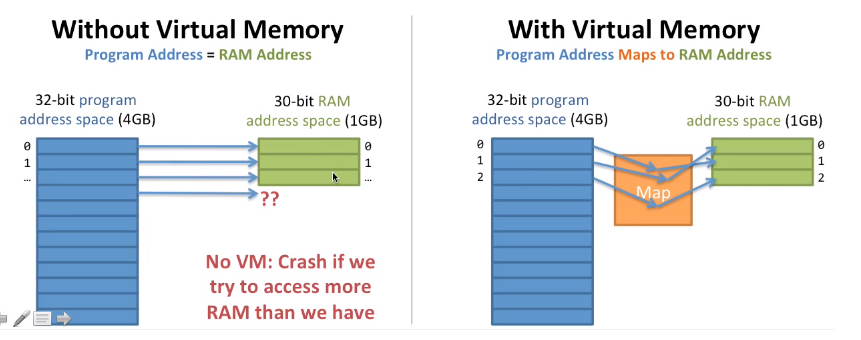

VIRTUAL MEMORY

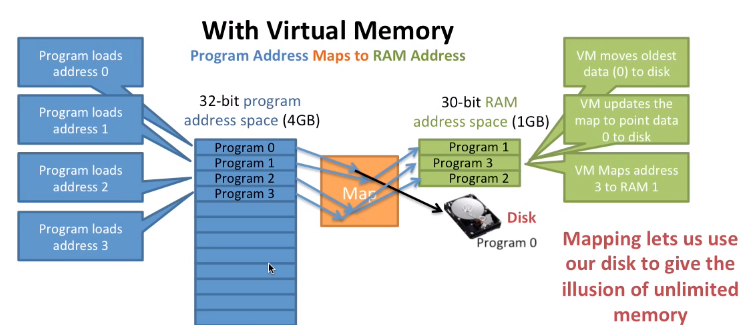

Virtual Memory gives the illusion of unlimited memory.

Virtual memory is a feature of an operating system (OS) that allows a computer to compensate for shortages of physical memory by temporarily transferring pages of data from random access memory (RAM) to disk storage.

Text

Virtual memory takes the program address and maps it to an address space

- Maps some of the program's address space to the disk

- When we need it, we bring it into memory

Solving not enough memory

- How do we use the holes left when programs quit?

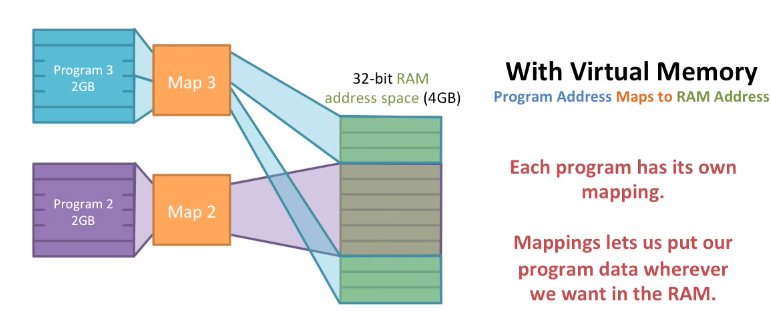

- We can map a program's addresses to RAM addresses however we like.

Solving Holes in the address space

- Program 1's and Program 2's addresses map to different RAM addresses

- Because each program has its own address space, they cannot access each other's data: security and reliability!

keeping programs secure

How virtual memory works?
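One way to picture the mechanism is a per-program page table that maps virtual page numbers to RAM frames. The sketch below assumes 4 KB pages, uses a plain dictionary as the page table, and marks an on-disk page with `None`; all names and mappings are hypothetical, and real hardware is considerably more involved.

```python
PAGE_SIZE = 4096   # assumed page size in bytes

class PageFault(Exception):
    """Raised when a page is not in RAM and would have to be loaded from disk."""

def translate(virtual_address, page_table):
    """Map a program (virtual) address to a RAM (physical) address."""
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    frame = page_table.get(page_number)
    if frame is None:
        raise PageFault(page_number)
    return frame * PAGE_SIZE + offset

# Each program has its own table: virtual page number -> RAM frame.
program1 = {0: 5, 1: 2}
program2 = {0: 7, 1: None}   # page 1 of program 2 currently lives on disk

addr1 = translate(100, program1)   # 5 * 4096 + 100 = 20580
addr2 = translate(100, program2)   # 7 * 4096 + 100 = 28772
```

The same program address 100 lands in different RAM locations for the two programs, and touching program 2's page 1 raises a `PageFault`, which is the point where an operating system would bring the page in from disk.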

conclusion

The speed gap between CPU, memory and mass storage continues to widen. Well written programs exhibit a property called locality. Memory hierarchies based on caching close the gap by exploiting locality.

Memory Hierarchy and Virtual Memory

By Mylene King

COE218 Advanced Computer Systems Architecture
