1.3 Six Elements of ML

A defining framework for understanding concepts in the course

A jargon cloud

How do you make sense of all the jargon?

(c) One Fourth Labs

Make an actual cloud of all keywords that we will see through the course (list down all the keywords from the table of contents on my course homepage)

From jargons to jars

What are the six jars of Machine Learning?

Show six empty or shaded jars

* I want images which look like this but this is an expensive image and not available for free

Data data everywhere

What is the fuel of Machine Learning?

Data data everywhere

How do you feed data to machines ?

We encode all data into numbers, typically as high-dimensional vectors

For instance, in this course you will learn to embed image and text data as large vectors

Data entries are related, e.g., given an MRI scan, whether there is a tumour or not

Include a table that shows two/three MRI scans in first col, shows large vectors in second column, 1/0 for last column of whether there is tumour or not

Include a table that shows two/three reviews in first col, shows large vectors in second column, 1/0 for last column for whether review is positive or negative

Title the columns as x and y
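To make the x and y columns concrete, here is a minimal sketch of turning text reviews into numeric vectors. It uses plain word counts as a simple stand-in for the learned embeddings the course covers later; the reviews, labels, and the helper name `encode` are hypothetical and not taken from the slides.

```python
# Hypothetical reviews and labels; the slides do not specify any.
reviews = ["great phone great battery", "bad screen", "great screen"]
labels = [1, 0, 1]  # y: 1 = positive review, 0 = negative review

# Vocabulary: one vector dimension per distinct word, in sorted order.
vocab = sorted({word for text in reviews for word in text.split()})

def encode(text):
    """Return the word counts of `text` over `vocab` as a fixed-length vector."""
    words = text.split()
    return [words.count(term) for term in vocab]

X = [encode(text) for text in reviews]  # x: one row per review

for x, y in zip(X, labels):
    print(x, y)
```

Real image and text encodings are far larger than this five-dimensional toy, but the shape of the table is the same: one row of numbers per example, plus one label.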

tumor/no tumor

Now show a matrix of numbers here

the last column is tumor/no tumor

an arrow here

Show the ML system from your Slide 10 of Expert Systems

an arrow here

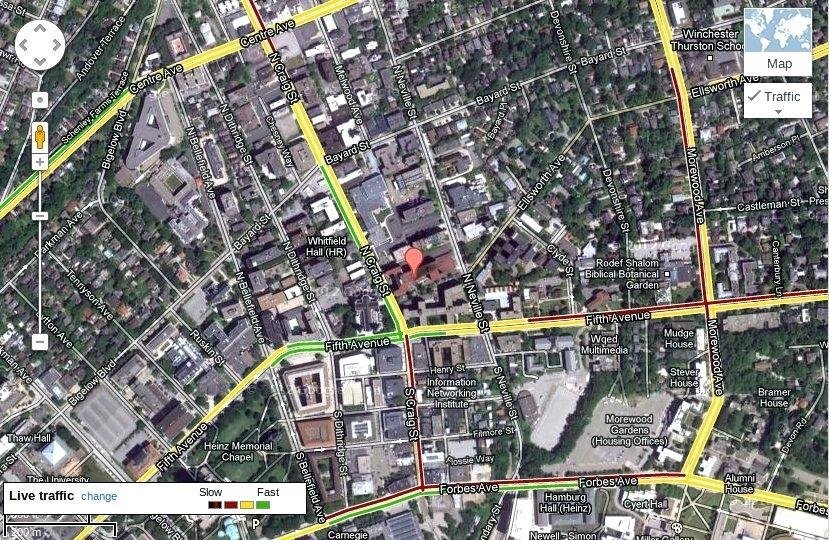

Data curation

Where do I get the data from?

I am lucky

I am rich

I am smart

+ मुंबई

= मुंबई

In this course

Show data jars

Tasks

What do you do with this data?

We want to show that we can do different tasks with the same data by forming different input-output pairs. Show the following input-output pairs one by one. Feel free to redefine the input-output pairs given below suitably.

1. From product description to structured specs

2. From specs + reviews to writing FAQs

3. From specs + reviews + FAQs to question answering

4. From specs + reviews + personal data to recommendations

Amazon product data with description, reviews, product specs

Input

Output

Tasks

What do you do with this data?

We want to show that we can do different tasks with the same data by forming different input-output pairs. Show the following input-output pairs one by one. Feel free to redefine the input-output pairs given below suitably.

1. From photos identify people, places, activities

2. From posts + personal data recommend posts

3. From video detect profanity, etc.

Facebook profiles and photos

Input

Output

Tasks

What do you do with this data?

Different types of tasks:

1. Supervised

- Classification - text or no text

- Regression - fitting bounding boxes (more later)

2. Unsupervised

- Clustering - clustering news articles by similarity

- Generation - deep art, deep poetry

Most real-world ML tasks (90%) are supervised. This course focuses exclusively on this class of problems, except for Easter eggs.

Supervised ML is about finding y given x

Supervised

1. Show data matrix with x and y

2. images with and without signboards

Here show a SVM like line separator with signboard images on one side and no-signboard on other

Classification

Supervised

1. Show data matrix with x and left_x, left_y, width, height

2. images with signboards and bounding boxes

Image

Regression

Output

left_x, left_y, w, h values

Now show bounding box in the images

Unsupervised

1. Show data matrix with only x

2. images with and without signboards

Here show 3 to 4 clusters such that yellow signboards are in one cluster, blue in another, and so on

Clustering

Unsupervised

1. Show data matrix with only x

Show picasso style images

Show output of deep art or of the painting which recently got sold for x million dollars

Generation

Unsupervised

Show many trump tweets

Show output of Deep Trump

Generation

Tasks

What do you do with this data?

Photo of Andrew Ng

"Supervised Learning has created 99% of economic value in AI"

In this course

Classification

Regression

RHS from classification slide

RHS from regression slide

Tasks

What do you do with this data?

Show data, tasks jars

What is the mathematical formulation of a task?

\( x \)

\( y \)

bat

car

dog

cat

Models

\( \left[\begin{array}{lcr} 2.1, 1.2, \dots, 5.6, 7.2 \end{array} \right]\)

\( \left[\begin{array}{lcr} 0, 0, 1,0, 0 \end{array} \right]\)

\( y = f(x) \) [true relation, unknown]

\( \hat{y} = \hat{f}(x) \) [our approximation]

ship

\( \left[\begin{array}{lcr} 0, 1, 0, 0, 0 \end{array} \right]\)

\( \left[\begin{array}{lcr} 0, 0, 0, 0, 1 \end{array} \right]\)

\( \left[\begin{array}{lcr} 1, 0, 0, 0, 0 \end{array} \right]\)

\( \left[\begin{array}{lcr} 0, 0, 1, 0, 0 \end{array} \right]\)

\( \left[\begin{array}{lcr} 0.1, 3.1, \dots, 1.7, 3.4\end{array} \right]\)

\( \left[\begin{array}{lcr} 0.5, 9.1,\dots, 5.1, 0.8 \end{array} \right]\)

\( \left[\begin{array}{lcr} 1.2, 4.1, \dots, 6.3, 7.4 \end{array} \right]\)

\( \left[\begin{array}{lcr} 3.2, 2.1, \dots, 3.1, 0.9 \end{array} \right]\)
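The 0/1 vectors for y above are one-hot encodings of the class label. A minimal sketch of how such a vector can be built follows; the ordering of `classes` is an assumption made for illustration and need not match the ordering used on the slide.

```python
# Assumed class order; the slide does not state which position maps to which label.
classes = ["bat", "car", "dog", "cat", "ship"]

def one_hot(label):
    """Return a vector with 1 at the position of `label` and 0 elsewhere."""
    vec = [0] * len(classes)
    vec[classes.index(label)] = 1
    return vec

for label in classes:
    print(label, one_hot(label))
```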

Models

What are the choices for \( \hat{f} \) ?

\( \hat{y} = mx + c \)

\(\hat{ y} = ax^2 + bx + c \)

\( \hat{y} = \sigma(wx + b) \)

\( \hat{y} = \text{Deep\_NN}(x) \)

\( \hat{y} = \hat{f}(x) \) [our approximation]

\( \left [\begin{array}{lcr} 0.5\\ 0.2\\ 0.6\\ \dots\\0.3 \end{array} \right]\)

\( \left [\begin{array}{lcr} 14.8\\ 13.3\\ 11.6\\ \dots\\6.16 \end{array} \right]\)

\( x \)

\( y \)

\(\hat{ y} = ax^3 + bx^2 + cx + d \)

\(\hat{ y} = ax^4 + bx^3 + cx^2 + dx + e \)

Data

In this course

\( \hat{y} = \text{Deep\_CNN}(x) \) ...

\( \hat{y} = \text{RNN}(x) \) ...

Data is drawn from the following distribution

\(\hat{ y} = ax^{25} + bx^{24} + \dots + cx + d \)

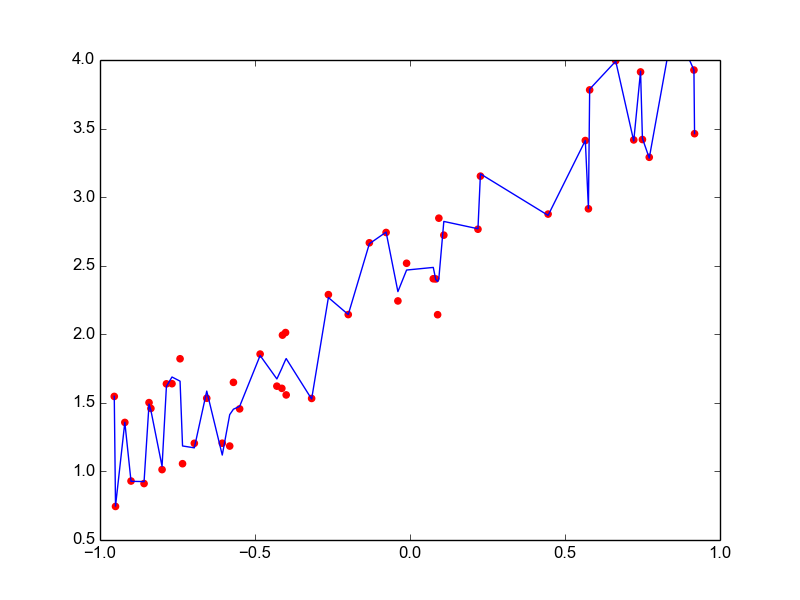

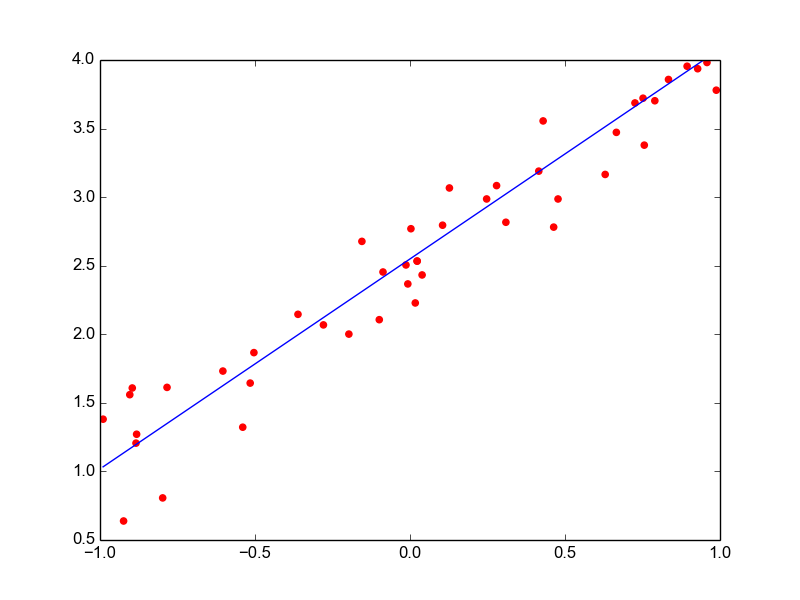
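Each choice of \( \hat{f} \) above is just a function with adjustable parameters. The sketch below writes three of the simpler families as plain Python functions. It assumes \( \sigma \) is the logistic sigmoid, which the slide does not state explicitly, and the function names are illustrative.

```python
import math

def linear(x, m, c):
    """y_hat = m*x + c"""
    return m * x + c

def quadratic(x, a, b, c):
    """y_hat = a*x^2 + b*x + c"""
    return a * x**2 + b * x + c

def sigmoid_neuron(x, w, b):
    """y_hat = sigma(w*x + b), assuming sigma is the logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-(w * x + b)))

# Same input, three different model families, arbitrary parameter values.
x = 2.0
print(linear(x, m=3.0, c=1.0))
print(quadratic(x, a=1.0, b=1.0, c=1.0))
print(sigmoid_neuron(x, w=1.0, b=0.0))
```

Choosing a model means choosing the family; the parameter values are what the learning algorithm later fills in.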

Models

Why not just use a complex model always ?

\( \left [\begin{array}{lcr} 0.1\\ 0.2\\ 0.4\\ ....\\0.8 \end{array} \right]\)

\( \left [\begin{array}{lcr} 2.6\\ 2.4\\ 3.1\\ ....\\4.1 \end{array} \right]\)

\( x \)

\( y \)

\( y = mx + c \) [true function, simple]

\(\hat{y} = ax^{100} + bx^{99} + ... + c \)

[our approximation, very complex]

Later in this course

Bias-Variance Tradeoff

Overfitting

Regularization
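A small experiment can illustrate why the complex model is not always the better choice. The sketch below uses hypothetical data generated from a simple line \( y = 2x + 1 \) with a fixed noise pattern, then compares a least-squares line against a polynomial that passes exactly through every training point (built here with Lagrange interpolation, a stand-in for the degree-100 polynomial on the slide).

```python
# Hypothetical training data: true function y = 2x + 1 plus fixed noise.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
noise = [0.3, -0.3, 0.3, -0.3, 0.3]
ys = [2 * x + 1 + e for x, e in zip(xs, noise)]

def fit_line(xs, ys):
    """Closed-form least-squares fit of y = m*x + c."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    m = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return m, mean_y - m * mean_x

def interpolate(x, xs, ys):
    """Evaluate the degree n-1 polynomial through all n training points."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total

m, c = fit_line(xs, ys)
x_new, y_true = 5.0, 2 * 5.0 + 1  # an unseen point on the true line

line_pred = m * x_new + c
poly_pred = interpolate(x_new, xs, ys)
print("true:", y_true, "line:", line_pred, "polynomial:", poly_pred)
```

The polynomial has zero error on the training points because it also fits the noise, and it pays for that on the unseen point, where the simple line stays close to the true value.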

Models

What are the choices for \( \hat{f} \) ?

Data

Task

Model

Loss Function

How do we know which model is better ?

\( \left [\begin{array}{lcr} 0.00\\ 0.10\\ 0.20\\ ....\\6.40 \end{array} \right]\)

\( \left [\begin{array}{lcr} 0.24\\ 0.08\\ 0.12\\ ....\\0.36 \end{array} \right]\)

\( x \)

\( y \)

?

\( \hat{f_1}(x) \)

\( \left [\begin{array}{lcr} 0.25\\ 0.09\\ 0.11\\ ....\\0.36 \end{array} \right]\)

\( \left [\begin{array}{lcr} 0.32\\ 0.30\\ 0.31\\ ....\\0.22 \end{array} \right]\)

\( \left [\begin{array}{lcr} 0.08\\ 0.20\\ 0.14\\ ....\\0.15 \end{array} \right]\)

\( \hat{f_1}(x) = a_1x^{25} + b_1x^{24} + ... + c_1x + d_1 \)

\( \hat{f_2}(x) = a_2x^{25} + b_2x^{24} + ... + c_2x + d_2 \)

\( \hat{f_3}(x) = a_3x^{25} + b_3x^{24} + ... + c_3x + d_3 \)

\( \begin{array}{lcr} 1\\ 2\\ 3\\ ....\\n \end{array} \)

\( \mathscr{L}_1 = \sum_{i=1}^{n} (y_i - \hat{f}_1(x_i))^2 \)

\( \hat{f_2}(x) \)

\( \hat{f_3}(x) \)

\( \mathscr{L}_2 = \sum_{i=1}^{n} (y_i - \hat{f}_2(x_i))^2 \)

\( \mathscr{L}_3 = \sum_{i=1}^{n} (y_i - \hat{f}_3(x_i))^2 \)

True Function

\( \hat{f_1}(x) \)

\( \hat{f_2}(x) \)

\( \hat{f_3}(x) \)

Loss Function

How do we know which model is better ?

\( \mathscr{L}_1 = \sum_{i=1}^{n} (y_i - \hat{f}_1(x_i))^2 = 1.38\)

\( \mathscr{L}_2 = \sum_{i=1}^{n} (y_i - \hat{f}_2(x_i))^2 = 2.02\)

\( \mathscr{L}_3 = \sum_{i=1}^{n} (y_i - \hat{f}_3(x_i))^2 = 2.34 \)

In this course

Square Error Loss

Cross Entropy Loss

KL divergence

\( \left [\begin{array}{lcr} 0.00\\ 0.10\\ 0.20\\ ....\\6.40 \end{array} \right]\)

\( \left [\begin{array}{lcr} 0.24\\ 0.08\\ 0.12\\ ....\\0.36 \end{array} \right]\)

\( x \)

\( y \)

\( \hat{f_1}(x) \)

\( \left [\begin{array}{lcr} 0.25\\ 0.09\\ 0.11\\ ....\\0.36 \end{array} \right]\)

\( \left [\begin{array}{lcr} 0.32\\ 0.30\\ 0.31\\ ....\\0.22 \end{array} \right]\)

\( \left [\begin{array}{lcr} 0.08\\ 0.20\\ 0.14\\ ....\\0.15 \end{array} \right]\)

\( \begin{array}{lcr} 1\\ 2\\ 3\\ ....\\n \end{array} \)

\( \hat{f_2}(x) \)

\( \hat{f_3}(x) \)

\( \mathscr{L}_1 = \sum_{i=1}^{n} (y_i - \hat{f}_1(x_i))^2 \)

\( = (0.24-0.25)^2 + (0.08-0.09)^2 + \newline (0.12-0.11)^2 + ... + (0.36-0.36)^2 \)

\( = 1.38 \)
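The squared error computation above can be sketched in a few lines. The lists below contain only the four rows that are visible on the slide (the elided rows are not filled in), so the totals printed here are partial sums and will not match the slide's values of 1.38, 2.02 and 2.34.

```python
# Only the rows visible on the slide; the elided rows are deliberately left out.
y = [0.24, 0.08, 0.12, 0.36]
predictions = {
    "f1": [0.25, 0.09, 0.11, 0.36],
    "f2": [0.32, 0.30, 0.31, 0.22],
    "f3": [0.08, 0.20, 0.14, 0.15],
}

def squared_error(y_true, y_pred):
    """Sum of squared differences between true and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred))

losses = {name: squared_error(y, pred) for name, pred in predictions.items()}
best = min(losses, key=losses.get)

for name, value in losses.items():
    print(name, round(value, 4))
print("lowest loss:", best)
```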

Loss Function

What does a loss function look like ?

Data

Task

Loss

Model

Learning Algorithm

How do we identify parameters of the model?

Animation:

- first the data matrix appears

- then the model equation with a,b,c as parameters appears

- then the friend appears

- then the loss function appears

- now the red cross appears and then the friend disappears

- now the box for the learning algorithm appears

- now the logo for search appears

- now an animation where the values of a,b,c are adjusted till the loss reaches some low value

- data, model and loss function feed into the pink box

\( \hat{f_1}(x) = 3.5x_1^2 + 2.5x_2^{3} + 1.2x_3^{2} \)

\( \hat{f_1}(x) = ax_1^2 + bx_2^{3} + cx_3^{2} \)

\( \mathscr{L}_1 = \sum_{i=1}^{n} (y_i - \hat{f}_1(x_i))^2 \)

Show adjustable scales for a, b, c and create a Python video here: as you adjust the scales, the loss function value changes, until you hit values for which the error is zero. You can actually cheat by creating the y values using some chosen values of a, b, c, so that you then get 0 error for exactly those values.

| Budget (100 crore) | Box Office Collection (100 crore) | Action Scene Time (100 mins) | IMDB Rating |
|---|---|---|---|
| 0.1 | 0.1 | 0.3 | 0 |
| 0.1 | 0.3 | 0.2 | 0 |
| 0.4 | 0 | 1.3 | 0 |

Learning Algorithm

How do you formulate this mathematically ?

Animation:

- data, model, pink box, loss function, logo for search and animation for a,b,c appear as they were on the previous slide

- now the cross and the message appears

- now the text in red appears (but make it black)

Show a matrix with 3 inputs: budget* (0 to 1), box office collection* (0 to 1), action scene time+ (0 to 1)

* the unit is 100 crores, so 0.1 means 10 crores (mention this in the header row of the table)

+ the unit here is 100 minutes

The output is the imdb rating

Show x and y above the header

\( \hat{f_1}(x) = ax_1^2 + bx_2^{3} + cx_3^{2} \)

\( \mathscr{L}_1 = \sum_{i=1}^{n} (y_i - \hat{f}_1(x_i))^2 \)

Show a gear box inside this to indicate learning algorithm

logo for search

In practice, brute force search is infeasible

Find \(a, b, c \) such that \( \mathscr{L}_1 = \sum_{i=1}^{n} (y_i - \hat{f}_1(x_i))^2 \) is minimized
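The search for a, b, c can be sketched as a brute-force grid search. Following the note on the slide, the y values here are generated ("cheated") from known parameters a = 3.5, b = 2.5, c = 1.2, so a zero-loss setting exists. The input rows reuse the three feature rows from the table; the grid range and step size are assumptions chosen for illustration.

```python
# Feature rows (x1, x2, x3) taken from the table; y is synthetic, not an IMDB rating.
rows = [(0.1, 0.1, 0.3), (0.1, 0.3, 0.2), (0.4, 0.0, 1.3)]

def model(x, a, b, c):
    """f_hat(x) = a*x1^2 + b*x2^3 + c*x3^2"""
    x1, x2, x3 = x
    return a * x1**2 + b * x2**3 + c * x3**2

# "Cheat": create y from known parameters so that zero loss is reachable.
true_params = (3.5, 2.5, 1.2)
ys = [model(x, *true_params) for x in rows]

def loss(a, b, c):
    """Squared error of the model with parameters (a, b, c) on the data."""
    return sum((y - model(x, a, b, c)) ** 2 for x, y in zip(rows, ys))

# Assumed grid: 0.0, 0.1, ..., 5.0 for each parameter (51^3 combinations).
grid = [i / 10 for i in range(51)]
best = min(
    ((a, b, c) for a in grid for b in grid for c in grid),
    key=lambda params: loss(*params),
)
print("best parameters:", best, "loss:", loss(*best))
```

Even this toy problem needs 132,651 loss evaluations for three parameters on a coarse grid. The count grows exponentially with the number of parameters, which is why brute-force search is infeasible in practice.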

Learning Algorithm

How do you formulate this mathematically ?

Animation:

Only the green part gets added on this slide (but show it in black except for the tick mark)

Many optimization solvers are available

\( \min_{a,b,c} \)

In this course

Gradient Descent ++

Adagrad

RMSProp

Adam
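As a preview of the first item in this list, here is a minimal sketch of plain gradient descent (not Adagrad, RMSProp or Adam) minimizing the squared error loss for the linear model \( \hat{y} = mx + c \). The data, the learning rate and the number of steps are assumptions chosen so the example converges.

```python
# Hypothetical data generated from y = 2x + 1 (no noise).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [2 * x + 1 for x in xs]

m, c = 0.0, 0.0       # initial parameter guess
learning_rate = 0.02  # assumed step size, small enough to be stable here

for step in range(2000):
    # Gradients of L = sum_i (y_i - (m*x_i + c))^2 with respect to m and c.
    grad_m = sum(-2 * x * (y - (m * x + c)) for x, y in zip(xs, ys))
    grad_c = sum(-2 * (y - (m * x + c)) for x, y in zip(xs, ys))
    # Move each parameter a small step against its gradient.
    m -= learning_rate * grad_m
    c -= learning_rate * grad_c

final_loss = sum((y - (m * x + c)) ** 2 for x, y in zip(xs, ys))
print("m:", m, "c:", c, "loss:", final_loss)
```

Instead of trying every combination of parameters, each step uses the slope of the loss to decide which way to move, so the number of loss evaluations stays small.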

Learning Algorithm

How do we identify parameters of the model?

Add jar for Learning Algorithm

Evaluation

How do we compute a score for our ML model?

Show a matrix for x and y (consider cat, dog, giraffe, lion, tiger, etc. prediction)

Now add a column \( \hat{y} \) for model predictions

Now show ticks and crosses and show we can compute accuracy (show formula)

End by saying that there are other metrics such as precision, recall, etc.
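The accuracy computation described above (ticks and crosses, then a ratio) can be sketched as follows. The true labels and predictions are hypothetical.

```python
# Hypothetical true labels (y) and model predictions (y_hat).
y_true = ["cat", "dog", "giraffe", "lion", "tiger"]
y_pred = ["cat", "dog", "lion", "lion", "cat"]

# A tick for each prediction that matches the true label, a cross otherwise.
marks = [t == p for t, p in zip(y_true, y_pred)]

# Accuracy = number of correct predictions / total number of predictions.
accuracy = sum(marks) / len(marks)
print("correct:", sum(marks), "of", len(marks), "accuracy:", accuracy)
```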

Standard evaluation (example ImageNet)

Evaluation

What are some other evaluation metrics ?

Show a matrix for x and y (consider cat, dog, giraffe, lion, tiger, etc. prediction)

Now add a set of columns \( \hat{y} \) for the model predictions, given as a ranked list

Now show ticks and crosses for top-1, top-3

In this course

Accuracy

Precision/Recall

Top-k accuracy
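Top-k accuracy counts a prediction as correct if the true label appears anywhere in the model's k highest-ranked guesses. A minimal sketch with hypothetical labels and ranked predictions:

```python
# Hypothetical true labels and ranked predictions (best guess first).
y_true = ["cat", "dog", "lion"]
ranked = [
    ["cat", "tiger", "lion"],
    ["cat", "lion", "dog"],
    ["tiger", "cat", "dog"],
]

def top_k_accuracy(y_true, ranked, k):
    """Fraction of examples whose true label is among the first k guesses."""
    hits = sum(label in guesses[:k] for label, guesses in zip(y_true, ranked))
    return hits / len(y_true)

print("top-1:", top_k_accuracy(y_true, ranked, 1))
print("top-3:", top_k_accuracy(y_true, ranked, 3))
```

Top-1 accuracy is the ordinary accuracy; larger k is more forgiving, so top-k accuracy can never decrease as k grows.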

Evaluation

How is this different from loss function ?

[Figure: Evaluation (Brake/Go) shown as a ratio of counts, #( ) / #( ). Loss function shown as maximize #( ) / (#( ) + #( )). The counted items were images on the original slide.]

Evaluation

Should we learn and test on the same data?

Show some training data from before, preferably something containing image classification

\( \hat{f_1}(x) = ax_1^2 + bx_2^{3} + cx_3^{2} \)

\( \mathscr{L}_1 = \sum_{i=1}^{n} (y_i - \hat{f}_1(x_i))^2 \)

Show a gear box inside this to indicate learning algorithm

\( \min_{a,b,c} \)

Now show test data

Now show formula for accuracy here

Animation:

Only the green part will come on animation, the rest of it will be shown at the beginning itself
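One way to see why training and test data should differ is to evaluate a "model" that simply memorizes its training examples. The sketch below is a deliberately extreme illustration with hypothetical image identifiers and labels; the fallback guess of "cat" is an arbitrary assumption.

```python
# Hypothetical (input, label) pairs; the inputs stand in for images.
train = [("img_cat_1", "cat"), ("img_dog_1", "dog"), ("img_cat_2", "cat")]
test = [("img_dog_2", "dog"), ("img_cat_3", "cat")]

# A "model" that memorizes the training set as a lookup table.
memory = dict(train)

def predict(x):
    """Return the memorized label, or a fixed guess for unseen inputs."""
    return memory.get(x, "cat")

def accuracy(pairs):
    """Fraction of (input, label) pairs the model predicts correctly."""
    return sum(predict(x) == y for x, y in pairs) / len(pairs)

train_accuracy = accuracy(train)
test_accuracy = accuracy(test)
print("train accuracy:", train_accuracy)
print("test accuracy:", test_accuracy)
```

The perfect score on the training data says nothing about how the model behaves on new inputs, which is what the test data measures.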

Evaluation

How is this different from loss function ?

Add jar for evaluation

Putting it all together

How does all the jargon fit into these jars?

Linear Algebra

Probability

Calculus

Data

Model

Loss

Learning

Task

Evaluation

Data, democratisation, devices

Why is ML so successful?

Data

Model

Loss

Learning

Task

Evaluation

Improvised

Democratised

Abundance

Typical ML effort

How to distribute your work through the six jars?

Your Job

Model

Loss

Learning

Evaluation

Data

Task

Connecting to the Capstone

How to distribute your work through the six jars?

Mumbai

मुंबई \( \rightarrow \) Mumbai

\( \sum_{i=1}^{n} (y_i - \hat{f}(x_i))^2 \)

\( -\sum_{i=1}^{n} \log \hat{f}(x_i) \)

Accuracy

Precision/Recall

Top-k accuracy

Data

Model

Loss

Learning

Task

Evaluation

Assignment

How do you apply the six jars to a problem that you have encountered?

Explain the problem

Give link to the quiz

1. Formulate 3 problems from data.gov.in

2. In the dataturks labelled data, define tasks that you can perform and collect 10 data points for each

// Binary classification of whether there is text

// Detect text with bounding box - is accuracy easy to define here?

By preksha nema
