1.9 Multilayered Network of Neurons

Your first Deep Neural Network

Recap: Complex Functions

What we saw in the previous chapter?

(c) One Fourth Labs

Repeat slide 5.1 from the previous lecture

The Road Ahead

What's going to change now ?

(c) One Fourth Labs

Show the six jars and convey the following by animation

Non-linear

Real inputs

Real outputs

Back-propagation

Task specific loss functions

The Road Ahead

What's going to change now ?

(c) One Fourth Labs

\( \{0, 1\} \)

Loss

Model

Data

Task

Evaluation

Learning

Linear

Only one parameter, b

Real inputs

Weights for every input

Real outputs

Data and Task

What kind of data and tasks have DNNs been used for ?

(c) One Fourth Labs

- Show MNIST dataset sample on LHS

- for the small black box mentions that it is 32x32

- on the RHS show how you will convert each image to a vector (of course you cannot show the full vector so just show "...." ). You can draw a grid on the image and show that each cell is a pixel which will have a corresponding numeric value

- also show how you will standardize this value to a number between 0 and 1

- and now slowly fill the full matrix

- now show that the y columns can take values from 0 to 10

- now show that for such k catagorical labels it helps to instead think of the output label as a 1 hot k dimensional vector.

- Show MNIST dataset sample on LHS

- Show by animation how you will flatten each image and convert it to a vector (of course you cannot show that

-

Data and Task

What kind of data and tasks have DNNs been used for ?

(c) One Fourth Labs

- Now have two more slides on other Kaggle tasks for which DNNs have been tried (preferably, some non-image tasks and at least one regression task. You could also repeat the churn prediction task from before)

- Finally have 1 slide on our task which is multi character classification

- Same layout and animations repeated from the previous slide only data changes

- Show MNIST dataset sample on LHS

- Show by animation how you will flatten each image and convert it to a vector (of course you cannot show that

-

Model

How to build complex functions using Deep Neural Networks?

(c) One Fourth Labs

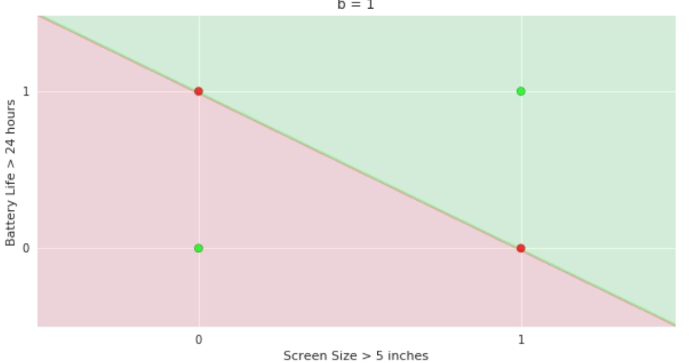

1) Start with some non-linearly separable 2 dimensional data (can repeat something from our previous lecture's examples)

2) Now show 2 input neurons and one output sigmoid neuron. Show the equations and the corresponding plot where the function does not separate the points (same as done in a previous lecture)

3) Now show that this model has two parameters and how you can change the function shape by varying these parameters

4) Now add one more layer in between which has only one neuron. Now show what the h = f(x), y = g(h) so y = f(g(h)). Write down f and g in detail.

5) Now show how many parameters this function has and setting different values for these parameters you can get different functions (so even though there are 5 paramters now w11, w12, b1, w21, b2 the plot is still of x1,x2 v/s y so you can still show the plot (intuitively some functions would look better than the others in terms of loss function)

6) Now add one more node in the hidden layer. Now show the functions again. Show the parameters again. Show the family of functions in the plots by varying values of the parameters.

7) Now add one more hidden layer with one node and continue this till you get a complex enough function

Model

Can we clarify the terminology a bit ?

(c) One Fourth Labs

1) Now we will show the DNN network form my lectures where I introduce all the notations (Slides 4 and 5 form lecture 4)

2) On the LHS you can show the network

3) on the RHS you can show the terminology crisply (minimal text, not as verbose as in my lecture slides)

4) also leave some space for me to do some pen work here (for example, write down the dimensions).

Model

How do we decide the output layer ?

(c) One Fourth Labs

1) Show the deep layer on LHS and highlight the output layer

2) Show the output function as a generic function O on the RHS

3) Now show a pink box on RHS bottom stating that "The output function depends on the task at hand"

Model

How do we decide the output layer ?

(c) One Fourth Labs

- On RHS show the imdb example from my lectures

- ON LHS show the apple example from my lecture

- Below LHS example, pictorially show other examples of regression from Kaggle

- Below RHS example, pictorially show other examples of classification from Kaggle

- Finally show that in our contest also we need to do regression (bounding box predict x,y,w,h) and classification (character recognition)

Model

What is the output layer for regression problems ?

(c) One Fourth Labs

1) On the LHS show the same network from before

2) On the RHS show simple code which takes the input and computes all the way upto the output. Show very simple intuitive code. Write comments in the code where we say that we assume that the weights are learnt.

3) Now on the next slide I will use the animation which Ganga is preparing.

Model

What is the output layer for classification problems ?

(c) One Fourth Labs

0) I am assuming that the entire lecture on cross entropy has already been done at this point

1) Show LHS of slide 18 from my course (but instead of the DNN box show an actual network)

On RHS show

2) True output: A probability distribution with all mass on the outcome "apple"

3) Predicted output: Some probability distribution with mass distributed to these outcomes

4) Cartoon Question: What kind of function do we use to output such a distribution

Next slide:

1) We will assume that it is a small 10x10 input, a network with two hidden layers of 10 neurons each and one output layer of 4 neurons

2) Show the computation at every layer. First show how the value for the 1st neuron in the first layer is computed. Then show how all the values in the 1st layer are computed. Then show how the value of the 1st neuron in the second layer is computed. Then show how the values of all the neurons in the second layer are computed. Now show the computation of the pre-activation in the output layer and stop. (For all the computations, I would show the network on LHS and the computation on the RHS)

3) Now we have the pre-activations at the output layer. We want to choose the activation function O such that the output is a probability (write and highlight the function O - We don't know what this function is at this point)

4) Now on LHS ask What if we take each entry and divide it by the sum - we will get a probability distribution (show this computation for all the 4 outputs one by one)

5) Now pass another input to the network and now some of the pre-activation weights would be negative (now try the above again and show that some prob values will be negative)

6) Now show the softmax function. Take a negative value and show how e^x becomes positive. Also show a plot of the e^x function.

Next slide

0) Same figure on LHS

1) On RHs show a 4 dimensional vector called h

2) show the function softmax(h) which also returns a 4 dimensional vectore

3) show one more vector below this where every entry is the appropriate softmax formula

4) Pink bubble: softmax(h)_i is the i-th element of the softmax output

5) Finally summarize the full network with equations for x, h1, h2, o (may be this can be on a separate slide)

Model

What is the output layer for regression problems ?

(c) One Fourth Labs

1) Show LHS of slide 15 from my course (but instead of the DNN box show an actual network and only one output which is box office collection instead of the 4 outputs in the original slide)

On RHS show

2) True output: box office collection

3) Predicted output: some real number

4) Cartoon Question: What kind of function do we use to output such a real number

Next slide:

1) We will assume that it is a small 10 dimensional input, a network with two hidden layers of 5 neurons each and one output layer of 1 neurons

2) Show the computation at every layer. First show how the value for the 1st neuron in the first layer is computed. Then show how all the values in the 1st layer are computed. Then show how the value of the 1st neuron in the second layer is computed. Then show how the values of all the neurons in the second layer are computed. Now show the computation of the pre-activation in the output layer and stop. (For all the computations, I would show the network on LHS and the computation on the RHS)

3) Now we have the pre-activations at the output layer. We want to choose the activation function O such that the output is a real number

4) Cartoon: Can we use the sigmoid function ? No

5) Cartoon: Can we use the softmax function ? No

6) Cartoon: Can we use the real numbered pre-activation as it is? Yes, its a real number after all

7) Cartoon: What happens if we get a negative output? Should we not normalize it?

Next slide

1) Finally summarize the full network with equations for x, h1, h2, o

Model

Can we see the model in action?

(c) One Fourth Labs

1) We will show the demo which Ganga is preparing

Model

In practice how would you deal with extreme non-linearity ?

(c) One Fourth Labs

- Here I want to show that you don't really know how non-linear the data is ? (So show plots with different types of non-linear data)

- In fact in some cases you can't even visualize it (show multiple axes x1, x2, x3, x4 to suggest high dimensional data and then put a Q mark on this plot (because you cannot even visualize this)

- Now at the bottom show different types of neural networks, with different configurations such as number of layers, different number of neurons per layer

- Now show that you can change the code form one configurations with minimal changes in code (in pytorch with Keras)

Model

In practice how would you deal with extreme non-linearity ?

(c) One Fourth Labs

- In the boxes below show different NN configurations in different colors

- Now in this box show a bar plot with models on the x-axis and loss function on the y-axis (juts to give an idea that some models may be better than the others) - the colors of the bars should correspond to the colors of the networks below

Model

Why is Deep Learning also called Deep Representation Learning ?

(c) One Fourth Labs

- On LHS we will first show a shallow neural network, where the input is an image and the output is a multi-class classification layer

- The number of input neurons is equalto number of pixels

- Now show a deep network where you have 2-3 layers before the outpur layer

- Show a pink box on RHS saying that "In addition to predicting the output we are also learning these intermediate representations which help us in predicting the output better )

Loss Function

What is the loss function that you use for a regression problem ?

(c) One Fourth Labs

1) Take a simple binary classification problem

2) Take a small (one hidden layer) network for this task, the output layer will have two neuron

3) Show computation upto output

4) Now show the squared error formula

5) Now compute the cross-entropy

6) Repeat the above process for two more inputs

7) Now show very simple code to do the above

Loss Function

What is the loss function that you use for a binary classification problem ?

(c) One Fourth Labs

1) Take a simple binary classification problem

2) Take a small (one hidden layer) network for this task, the output layer will have two neuron

3) Show computation upto output

4) NOw show the cross entropy formula

5) Now compute the cross-entropy

6) Repeat the above process for two more inputs

7) Now show very simple code to do the above

Loss Function

What is the loss function that you use for a multi-class classification problem ?

(c) One Fourth Labs

1) Take a simple multi-class classification problem

2) Take a small (one hidden layer) network for this task, the output layer will have two neuron

3) Show computation upto output

4) NOw show the cross entropy formula

5) Now compute the cross-entropy

6) Repeat the above process for two more inputs

7) Now show very simple code to do the above

Loss Function

What have we learned so far?

(c) One Fourth Labs

1) On LHS show a network and one of the tasks

In Pink Bubble

2) Given weights, we know how to compute the model's output for a given input

3) Given weights, we know ho to compute the model's loss for a given input

4) Question: But who will give us these weights? The Learning algorithm

(Partial )Derivatives, Gradients

Can we do a quick recap of some basic calculus ?

(c) One Fourth Labs

1) Pink bubble on LHS

-Recall Gradient Descent update rule

-w = w + \delta w and the definition of \deltaw(p.d. of L w.r.t. w)

RHS (feel free to split this across multiple slides if you want)

2) derivatives of e^x, x^2, 1/x

3) what about e^x^2 ? write the chain rule (derivative of e^x^2 wrt x^2 multiplied by derivative of x^2 wrt x)

(Now substitute x^2 by z and write the chain rule in terms of z)

4) what about e^e^x^2 ? [repeat the same story as above, now the chain rule expands a bit]

5) what about 1/e^x^2 --> inv(exp(sqr(x))) --> f(h(g(x))) --> now write the chain rule in terms of these functions

6) what about 1/e^-x^2 --> inv(exp(neg(sqr(x)))) --> f(h(g(x))) --> now write the chain rule in terms of these functions

7) sin(1/e^-x^2) [now one by one keep adding outer functions, cos, tan, e, log and so on and show how only one term gets added in the chain rul]

(Partial )Derivatives, Gradients

Can we do a quick recap of some basic calculus ?

(c) One Fourth Labs

1) Start with the long composite function that you showed at the end of the previous slide ?

2) No introduce w1, w2, etc in the function (for example (sin (w1*cos(....)) and so on

3) now show this as a chain (should look like a multilayered network with one neuron in each layer) and the corresponding w on the edge

4) now show how y is a function of many variables y = f (w1,...)

5) So now it is valid to ask questions about dy/dw1, w2 and so on (here show the symbol 'd' for derivative as opposed to the symbol delta for partial derivative)

6) Now replace d by delta (in the background I will say that this is a partial derivative)

7) Pink bubble: How do you compute the partial derivative? Assume that the other variables are constants.

8) Now show the computation of the partial derivatives w.r.t. different variables.

(Partial )Derivatives, Gradients

Can we do a quick recap of some basic calculus ?

(c) One Fourth Labs

1) Now have multi-path in the chains. For example, h1=w1x, h2 = w2h1 + w3h1^2, y = w4h1

2) Now compute derivative of y w.r.t. w1. Show the paths through which it will have to go.

3) Now show the equivalent neural network style diagram

4) show the chain rule as a summation of the two paths

5) Now add one more path and show how the chain rule changes. Add one more path.

6) By now you should be able to give then a generalized formula for the chain rule (summation over some i)

Next slide (Keep the diagram from the previous slide)

1) Cartoon Question: Wouldn't it be tedious to compute such a partial derivative w.r.t. to all the variables? Wel, not really? We can reuse some of the work?

2) show a situation where you have one input x, two hidden neurons in layer 1, one hidden neuron in layer 2 and then the output layer. Suppose the two neirons in hidden layer 1 connect to the 2nd hidden layer by weight w1, w2 and let the output of the second hidden layer be h2. Now show that you want to compute derivative wrt w1 and w2. Now show that suppose you already know the derivative then you can do dy/dh1*dh1/dw1 and same for w2 so the first part is shared

3) Now show an even more complex network with 8 neurons and 8 weights in layer 1 - the rest of the network is same as before. Again show ho wyou can reuse things for w1,w2, ..., w8

4) Now I would like to show two neurons in the secon layer and show how the computation is done

(Partial )Derivatives, Gradients

What are the key takeaways ?

(c) One Fourth Labs

Last network from previous slide on LHS

Pink Bubble on RHS

- Now matter how complex the function is or how many variables it depends on, you can always compute the derivative wrt any variable using the chain rule

- You can reuse a lot of work by starting backwards and computing simpler elements in the chain

(Partial )Derivatives, Gradients

What is a gradient ?

(c) One Fourth Labs

Last network from previous slide on LHS

Pink Box on RHS

- It is simply a collection of partial derivatives

Below the box

- show variables w1, w2, w3... in a vertical vector (call it theta)

- now show corresponding partial derivatives

- now put a vector around these partial derivatives (call it grad\theta)

Learning Algorithm

Can we use the same Gradient Descent algorithm as before ?

(c) One Fourth Labs

Initialise

0. On LHS show the GD algorithm from my lecture slides

1. Show the simple sigmoid neuron

2. Now show the complex network, maybe with 1-2 hidden layers

3. In pink bubble

Earlier: w, b

Now: w11, w12, ......

4. In pink bubble

Earlier: L(w, b)

Now: L(w11, w12, ......)

5. Now on LHS show the animations from my lecture slides

\(w_1, w_2, b \)

Iterate over data:

\( \mathscr{L(w, b)} = compute\_loss(x_i) \)

\( update(w_1, w_2, b, \mathscr{L}) \)

till satisfied

Learning Algorithm

How many derivatives do we need to compute and how do we compute them?

(c) One Fourth Labs

1) Show that huge matrix of partial deriatives that I show in the lecture using the same step-by-step animation as before

2) Now on the LHS highlight one of the weights in the second layer and show you are interested in the partial derivative w.r.t.loss function. Then show the update rule for this partial derivative.

3) Now show the path for this weight and how you will compute the partial derivative using the chain rule

Learning Algorithm

How do we compute the partial derivatives ?

(c) One Fourth Labs

Show a very small DNN on LHS

Show the full forward pass computation

Show the loss computations

Show the formula for the partial derivative

Now substitute every value in it and compute the partial derivative

Now update the rule

Learning Algorithm

Can we see one more example ?

(c) One Fourth Labs

Repeat the process from the previous slide for one more weight: Preferably in a different layer

Show a very small DNN on LHS

Show the full forward pass computation

Show the loss computations

Show the formula for the partial derivative

Now substitute every value in it and compute the partial derivative

Now update the rule

Learning Algorithm

What happens if we change the loss function ?

(c) One Fourth Labs

change the loss function to cross entropy and show that now in the chain rule only one element of the chain changes

now repeat the same process as on the previous slide

Learning Algorithm

Isn't this too tedious ?

(c) One Fourth Labs

Show a small DNN on LHS

ON RHS now show a pytorch logo

Now show the compute graph for one of the weights

nn.backprop() is all you need to write in PyTorch

Evaluation

How do you check the performance of a deep neural network?

(c) One Fourth Labs

Similar (not same) slide as that in MP neuron

Take-aways

What are the new things that we learned in this module ?

(c) One Fourth Labs

Show the 6 jars at the top (again can be small)

\( \{0, 1\} \)

\( \in \mathbb{R} \)

Tasks with Real inputs and real outputs

show the complex function which can handle non-linearly separable data

show squared error loss, cross entropy loss and then ....

show gradient descent update rule in a for loop

show accuracy formula

Copy of Multilayered Network of Neurons

By preksha nema

Copy of Multilayered Network of Neurons

- 802