Web and Cloud Computing: Group 13

Architecture Explanation Slides

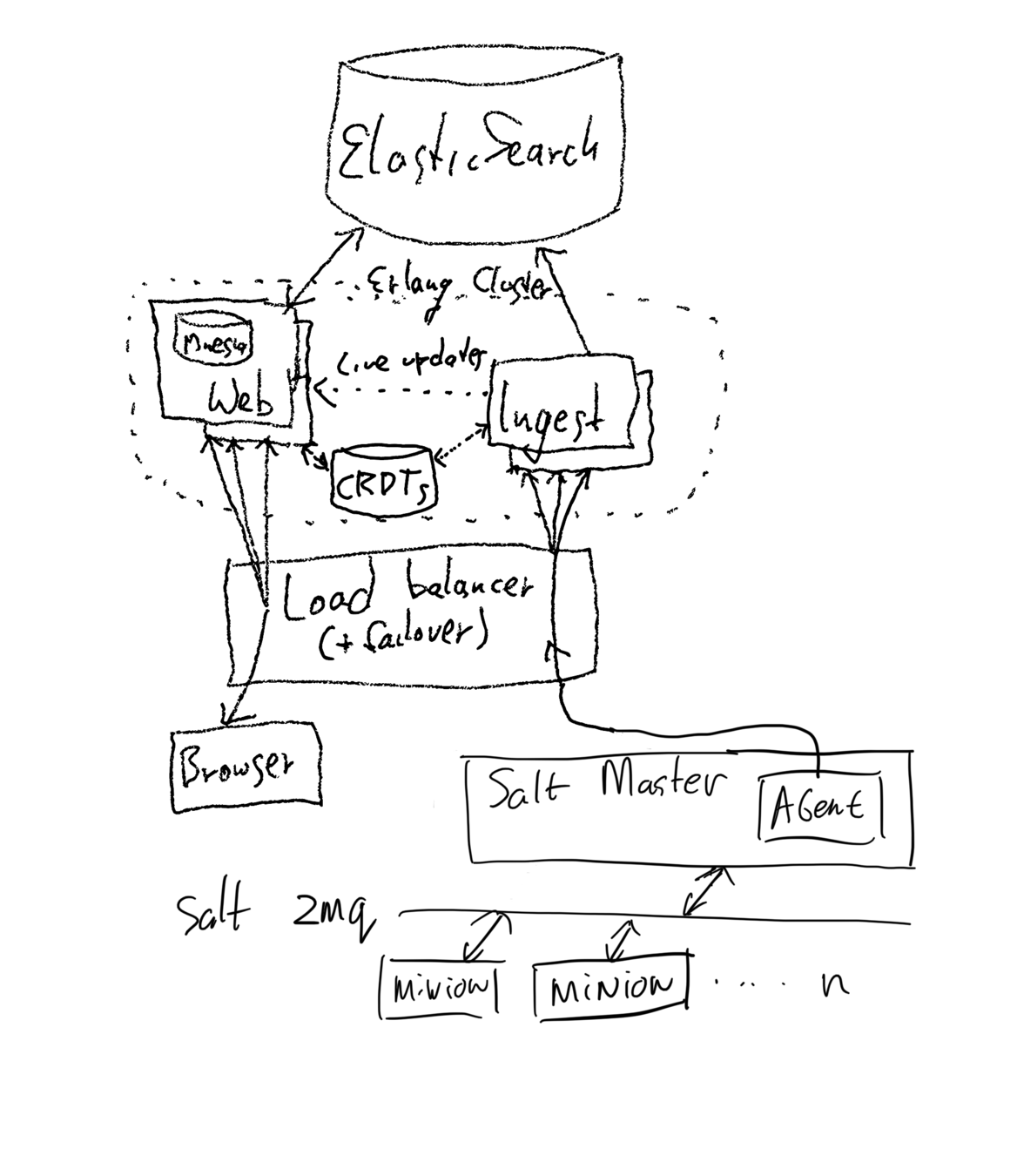

Architecture Overview

Four key components:

- Agent 'Seascape Wave'.

- 'Ingest' listening/computing nodes.

- Analytics-focused distributed database (Elasticsearch).

- 'Web' user-interaction nodes.

Agent: 'Seascape Wave'

- Built using Python/SaltStack

- Built-in cryptography

- Built-in message queue that retries sending event messages until the connection is re-established.

- Runs on the user's host (the 'Salt master') which might manage many containers:

- Reason: Quality of metrics is better when running outside of a container, and certain metrics can only be obtained this way.

- We want the system to make it easy to add other agents in the future (for instance, agents that run inside environments where you do not have access to the host machine).
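
The retry behaviour of the Agent's message queue can be sketched as follows. This is a minimal illustration and not the SaltStack implementation: the `RetryQueue` class and the `send` callback are hypothetical names, and the sketch assumes the transport raises `ConnectionError` while the Ingest node is unreachable.

```python
from collections import deque
from typing import Callable, Deque


class RetryQueue:
    """Buffers event messages and resends them until delivery succeeds."""

    def __init__(self, send: Callable[[dict], None]) -> None:
        # `send` is a hypothetical transport function (an assumption of this
        # sketch); it should raise ConnectionError while the link is down.
        self._send = send
        self._pending: Deque[dict] = deque()

    def publish(self, event: dict) -> None:
        """Queue an event, then try to deliver everything that is pending."""
        self._pending.append(event)
        self.flush()

    def flush(self) -> int:
        """Send pending events in order and stop at the first failure.

        Returns the number of events delivered in this call.
        """
        delivered = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break  # keep the event queued; a later flush retries it
            self._pending.popleft()
            delivered += 1
        return delivered

    def __len__(self) -> int:
        return len(self._pending)
```

Events stay in the queue in their original order, so once the connection is back a single `flush()` delivers the backlog before any newer event.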

Ingest nodes

- Built using Elixir.

- Part of the BEAM cluster (see later slide).

- Horizontally scalable by running many behind a load-balancer (distributing sessions in a round-robin fashion).

- Listens for requests from Agents.
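
Distributing sessions round-robin over several nodes might look like the sketch below. The `RoundRobinBalancer` class and the node names are hypothetical, and keeping an existing session on the node it was first assigned to is an assumption of this sketch, not something the slides specify.

```python
from itertools import cycle
from typing import Dict, Iterator, List


class RoundRobinBalancer:
    """Assigns each new session to the next node in a fixed rotation."""

    def __init__(self, nodes: List[str]) -> None:
        if not nodes:
            raise ValueError("at least one node is required")
        self._next_node: Iterator[str] = cycle(nodes)
        self._sessions: Dict[str, str] = {}

    def node_for(self, session_id: str) -> str:
        """Return the node for a session, picking the next one if it is new."""
        if session_id not in self._sessions:
            self._sessions[session_id] = next(self._next_node)
        return self._sessions[session_id]
```

A real load balancer would also drop finished sessions and skip unhealthy nodes; both are left out here to keep the rotation itself visible.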

Analytics-focused distributed database

- For this task we've chosen to use Elasticsearch.

Reasons:

- Built to work with metric-based data like what we have in this project.

- Built to scale to large amounts of data.

- Built so that the interpretation of data (e.g. what kind of queries you might want to do) can be refined later.
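
As an illustration of the kind of query that can be decided on later, the sketch below builds an Elasticsearch request body that averages one metric per time bucket. The field names (`host`, `@timestamp`, `cpu_percent`) and the helper `average_metric_query` are assumptions made for this example; the slides do not describe the project's actual document mapping.

```python
import json
from typing import Any, Dict


def average_metric_query(metric_field: str, host: str, interval: str = "1m") -> Dict[str, Any]:
    """Build a search body: average of `metric_field` per time bucket for one host."""
    return {
        "size": 0,  # only the aggregation is needed, not the matching documents
        "query": {"term": {"host": host}},  # assumes `host` is a keyword field
        "aggs": {
            "over_time": {
                "date_histogram": {"field": "@timestamp", "fixed_interval": interval},
                "aggs": {"average": {"avg": {"field": metric_field}}},
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(average_metric_query("cpu_percent", "web-01"), indent=2))
```

Because the stored documents stay unchanged, a different aggregation (for example a maximum instead of an average) only requires a different request body.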

Web nodes

- Built using Elixir/Phoenix LiveView.

- A user connects to it with their browser.

- On first visit, LiveView serves a static HTML page containing the SPA, which then opens a WebSocket connection through which all later interaction takes place:

- DOM-diffing happens server-side, simplifying implementation.

- Does not interfere with e.g. SEO.

- Part of the BEAM cluster (see later slide).

- Horizontally scalable by running many behind a load-balancer (distributing sessions in a round-robin fashion).

- Requests data from the database whenever required and caches it locally.
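
The "request when required, cache locally" behaviour can be sketched as a read-through cache. The `ReadThroughCache` class, the `fetch` callback and the time-to-live expiry are assumptions of this sketch; the slides do not say how or when cached data is invalidated.

```python
import time
from typing import Any, Callable, Dict, Tuple


class ReadThroughCache:
    """Returns cached values while they are fresh, otherwise asks the database."""

    def __init__(
        self,
        fetch: Callable[[str], Any],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch  # hypothetical database lookup
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so the expiry can be tested
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # still fresh: no database round trip
        value = self._fetch(key)
        self._entries[key] = (now, value)
        return value
```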

The BEAM cluster as a whole

- Elixir works using the Actor model, allowing for a scalable and fault-tolerant implementation of concurrent services that is comprehensible and therefore maintainable.

- Ingest and Web nodes are connected to the same Erlang/BEAM cluster, enabling:

- Transparent message-passing, in particular:

- Transparent pub-sub for real-time data updates which can be sent without going through the database.

- CRDT-based real-time information about what agents (e.g. the user's clusters and containers within them) are currently 'up' and their health.

- On top of this, we run the distributed database Mnesia on all nodes in the cluster, to enable:

- Persistent user sessions (without complicating the load balancers).

- Storage of ephemeral data (e.g. current 'real-time' data).
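
To show why a CRDT suits the 'which agents are up' information, here is a deliberately simplified last-writer-wins map. It is not the CRDT used in the project (the slides do not name one); the `record` and `merge` functions and the logical timestamps are assumptions made for illustration. The key property is that replicas can be merged in any order, any number of times, and still converge.

```python
from typing import Dict, Tuple

# agent id -> (logical timestamp, reporting node, status)
Entry = Tuple[int, str, str]
State = Dict[str, Entry]


def merge(left: State, right: State) -> State:
    """Join two replicas: per agent, keep the greatest entry.

    Entries are compared as tuples (timestamp, then node, then status),
    so ties are resolved the same way on every node.
    """
    merged = dict(left)
    for agent, entry in right.items():
        if agent not in merged or entry > merged[agent]:
            merged[agent] = entry
    return merged


def record(state: State, agent: str, timestamp: int, node: str, status: str) -> State:
    """Return a new state with one local observation merged in."""
    return merge(state, {agent: (timestamp, node, status)})
```

Because `merge` takes a per-key maximum, it is commutative, associative and idempotent, which is what lets every node in the cluster exchange state without coordination.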

General Remarks

- For our particular project, there is no functionality we can provide to the user when they are offline (except telling them that they are disconnected and that the current metrics/graphs are thus stale).

- This in part influenced our design decisions.

- We are not adding extra 'message queues' (e.g. RabbitMQ) to our stack, besides the message queue that is part of the Agent (internal to SaltStack in our case).

- Of course, all Elixir processes (actors) have their own internal message queue ('mailbox').

Fun fact: RabbitMQ is itself written in Erlang and thus uses these techniques under the hood.

- We are not adding external caches/message brokers (e.g. Redis) to our application. Instead, we use direct message passing in place of an external message broker, and Mnesia for persistent user sessions and ephemeral caching.
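
The per-process mailbox mentioned in these remarks can be imitated in Python with a thread and a queue. This is only an analogy: BEAM processes are far lighter than OS threads, and the `CounterActor` class and its message names are hypothetical. The sketch shows the core idea that state is private and messages are handled one at a time.

```python
import queue
import threading
from typing import Any


class CounterActor:
    """A tiny actor: private state, one mailbox, one message at a time."""

    _STOP = object()  # sentinel message that ends the actor's loop

    def __init__(self) -> None:
        self._mailbox: "queue.Queue[Any]" = queue.Queue()
        self._count = 0
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def send(self, message: Any) -> None:
        """Asynchronously put a message in the mailbox."""
        self._mailbox.put(message)

    def stop(self) -> int:
        """Ask the actor to finish, wait for it, and return the final count."""
        self._mailbox.put(self._STOP)
        self._thread.join()
        return self._count

    def _run(self) -> None:
        while True:
            message = self._mailbox.get()  # blocks until a message arrives
            if message is self._STOP:
                return
            if message == "increment":
                self._count += 1
            # unknown messages are silently dropped in this sketch
```

Since only the actor's own thread touches `_count`, no locks are needed, which is the property that makes mailbox-based designs easier to reason about.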

By qqwy
