To Explore, or To Exploit:

Reinforcement Learning Basics

First Things First

The possibilities of Reinforcement Learning may be unlimited, but slide real estate is not.

From hereon out,

Reinforcement Learning is abbreviated RL.

Let's Get R(ea)L:

I am still learning.

All knowledge imparted during this session was amassed over a period of two weeks.

Thus, you may be familiar with some of this content already.

The machines are still learning.

However, I have 99% confidence that

you will learn >= 1 new thing during this presentation.

What is RL?

RL is an area of machine learning focused on how agents can take actions in an environment to maximize cumulative rewards.

RL is concerned with goal-oriented algorithms.

RL addresses the problem of correlating immediate actions with the delayed returns that those actions produce.

Supervised

Predict a target value or class, given labeled training data.

Unsupervised

Find patterns and cluster unlabeled data.

Reinforcement

Find the best method to optimize rewards.

Machine Learning Methods

Why Should I Care About RL?

The robots are coming...

... for your games!

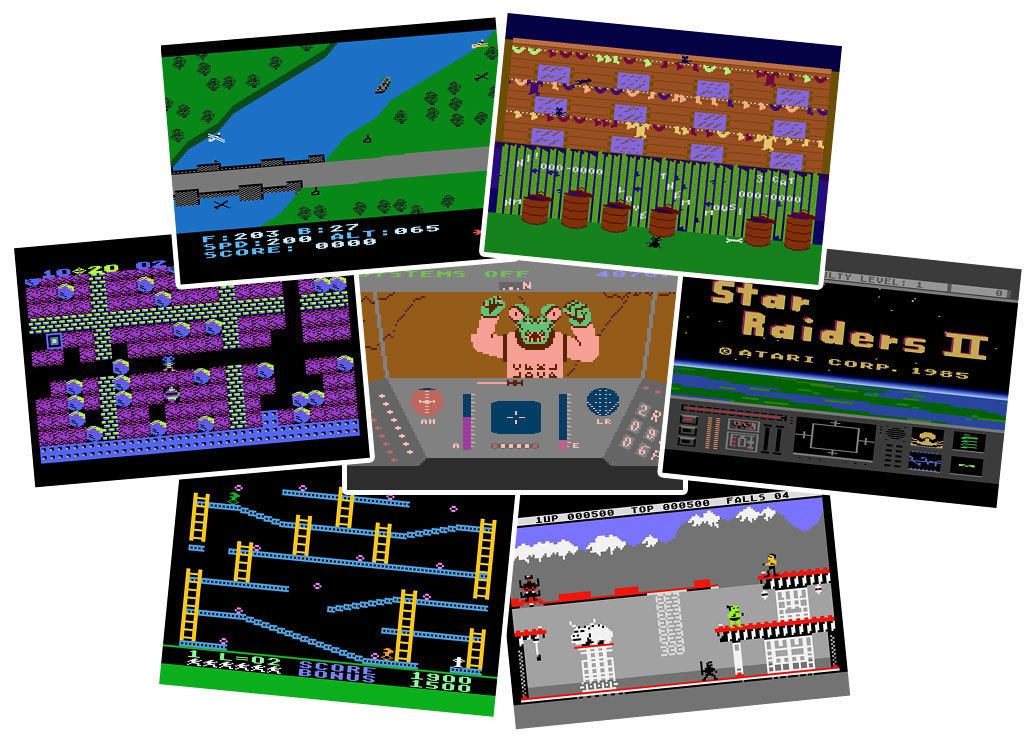

2014

DQN | Atari 2600 Games

2016

AlphaGo

2017

OpenAI PPO

RL has fueled many recent breakthroughs in gaming tasks, and state-of-the-art is advancing rapidly.

And of course, there are RL applications other than gaming.

Making the perfect pancake.

Autonomous vehicles and helicopters.

Automated financial trading.

Relate-ability Factor

For non-technical audiences,

the notion of "training" a machine or model

makes general sense in the context of RL.

The concept of learning through reward and punishment is universally understood.

Of all machine learning fields,

RL is the closest to how humans and animals learn.

Building Blocks of RL

Now that we've covered the purpose of RL, let's look at the building blocks.

To help relate these building blocks,

we'll take a trip down (my) memory lane.

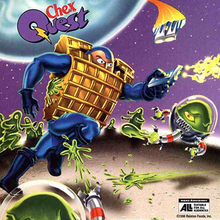

Chex Quest.

Cerealously.

Enter...

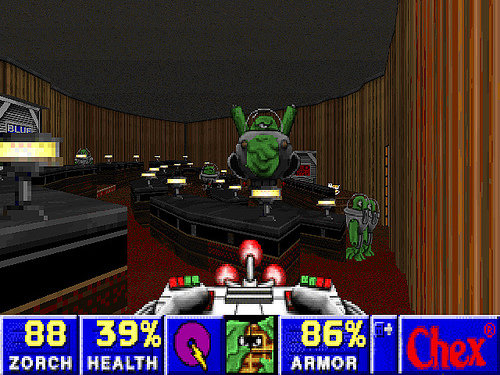

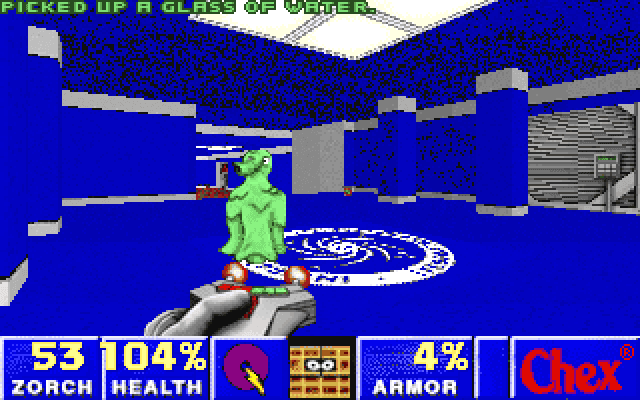

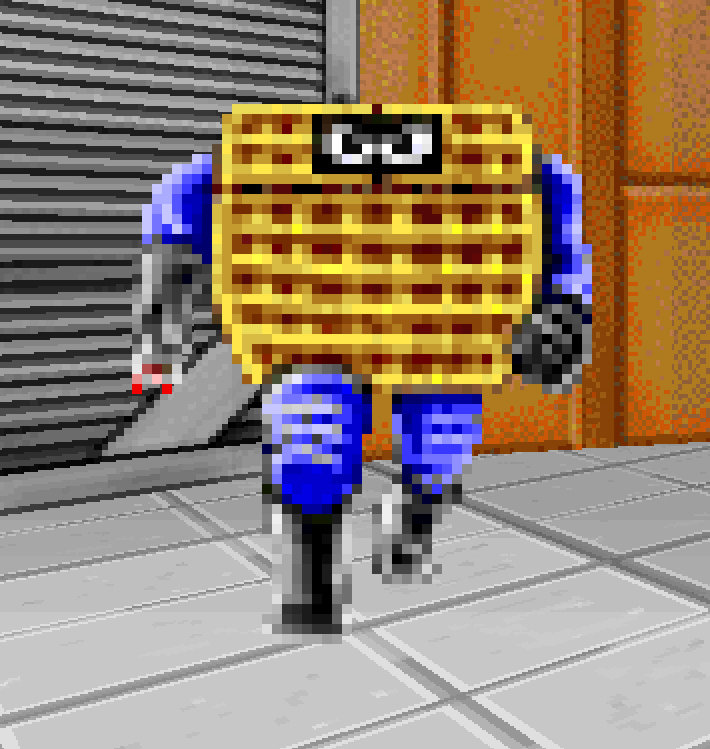

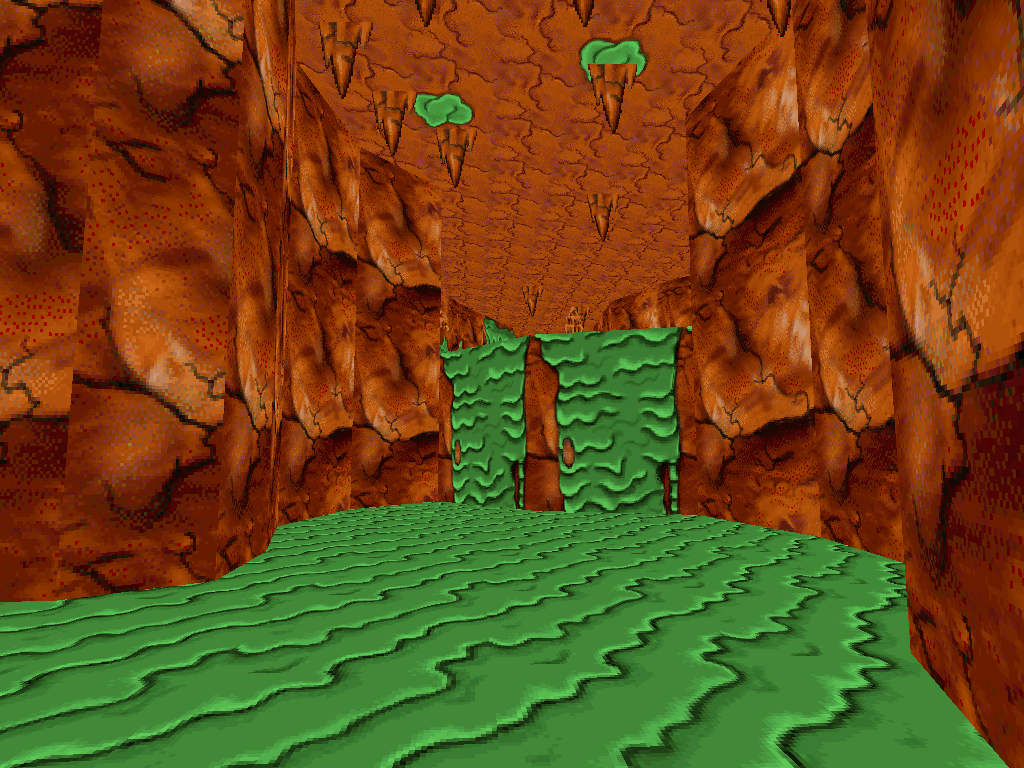

Chex Quest

- Non-violent first person shooter

- Created as a 1996 Chex promotion

- Distributed in cereal boxes

- Developed using the Doom engine

"The game's cult following has been remarked upon by the press as being composed of unusually devoted fans of this advertising vehicle from a bygone age."

- Wikipedia

Ready.

Aim.

Zorch!

But, back to RLity.

The agent is an entity that takes actions.

- Chex Warrior

The agent is an entity that takes actions.

An action is a possible move an agent can make.

- Walk forward

- Zorch a flemoid

- Change weapons to power spork

The agent is an entity that takes actions.

An action is a possible move an agent can make.

- A distant planet called Bazoik

The environment is the world through which the agent moves.

The agent is an entity that takes actions.

An action is a possible move an agent can make.

- 90% gooed

The environment is the world through which the agent moves.

A state is a situation in which the agent finds itself; the situation is returned by the environment.

The agent is an entity that takes actions.

An action is a possible move an agent can make.

- +1 zorch

- Lost health points

- Increase in Chex armor

The environment is the world through which the agent moves.

A state is a situation in which the agent finds itself; the situation is returned by the environment.

A reward is the feedback used to measure the success or failure of the agent's actions.

Environments are functions that transform an action taken in the current state into the next state and a reward.

Agents are functions that transform the new state and reward into the next action.

Agent

Environment

action

state

reward

Building on the Building Blocks

So... how does an agent determine what actions to take?

A policy defines how the agent behaves.

A policy is mapping from the perceived states of the environment to the action to be taken when in those states.

Policy implementation can range from simple to complex.

extensive computation

search process

lookup table

simple function

Let's consider the idea of reward-driven behavior again.

RL is based on the notion of the reward hypothesis, where all goals can be described by the maximization of the expected cumulative reward.

To achieve the best behavior, we need to maximize the expected cumulative reward.

However, we can't necessarily add up all future rewards at the present time.

Short term rewards are more probable to achieve, since they are more predictable than long term future rewards.

The discount rate is multiplied against future rewards in order to dampen long term rewards' effect

on the current choice of action.

Enter the discount rate, (gamma).

The discount rate is used to control how much the agent cares about long-term rewards versus short-term rewards.

Immediate Hedonism

Delayed Gratification

Recall that the agent's core objective is to maximize its total reward over the long run.

The reward signal indicates what is good immediately;

the value function specifies what is good in the long run.

Value is the expected long-term return (with discount).

is the expected long term return of the current state under policy

It is much harder to determine values than it is to determine rewards.

Rewards are returned directly by the environment;

values need to be estimated and re-estimated from the sequences of observations made by an agent over its lifetime.

Value estimation is a key element of reinforcement learning.

To Explore, or To Exploit?

That is the question.

An agent explores to discover more information about the environment.

An agent exploits known information to maximize its rewards.

To obtain maximum rewards, an agent must prefer actions that it has tried in the past and found to be effective (in producing rewards).

But, to discover such actions, it has to try actions that it has not selected before.

Level End

Neither exploration nor exploitation can be pursued exclusively without failing at a task.

An agent needs to find the balance between exploration and exploitation to achieve success.

Approaches to RL

Model-Based

The model captures the state transition function and rewards function.

During learning, the agent attempts to understand its environment and creates a model to represent it.

From this model, the agent can use a planning algorithm to dictate policy while acting.

But, one of the exciting things about RL is that we don't have to have a model of the environment to generate a good policy!

Model-Free

The agent learns a policy or value function from experience.

The agent does not use any generated predictions of next state or reward to alter behavior.

Instead, by sampling experience, the agent learns about the environment implicitly via observing which actions accrue the best cumulative rewards.

Q-Learning

Let's take a look at a basic RL algorithm.

Q-Learning is a model-free RL algorithm.

The goal of Q-Learning is to learn the optimal action-selection policy.

The "Q" stands for the quality of an action taken in a given state.

During Q-Learning, the agent creates a Q Table which specifies a maximum expected future reward for each action at each state.

The agent can then use the populated

Q Table as a cheat sheet for completing the episode with maximum cumulative rewards.

The

Q-Learning Algorithm

Initialize the Q-Table

Choose an action

Perform action

Measure reward

Update the Q-Table

Useful Q-Table

(after learning)

The Q function takes two inputs: state and action.

It returns the expected future reward

of the action at that state, or the Q value.

After the Q-Table is populated during learning, it renders the Q function as just a table lookup on state and action.

As the agent explores the environment, it improves the approximation of Q(s, a) by iteratively updating the Q Table.

Imagine this scenario:

You are playing frisbee next to a frozen lake. One of your friends overshoots their pass and the frisbee ends up out on the lake. You now need to navigate a path to safely retrieve your frisbee. However, the ice is perilous, and there are several holes.

Each position (square) out on the ice is a state.

The frozen lake (grid) is your environment.

You are the agent.

There are four possible actions:

move right, left, up, or down.

You get a reward of 1 if you reach the frisbee.

You get no reward if you fall down a hole, or if you take too long and expire from hypothermia (t=100).

Code Interlude,

And now, time for a refreshing

where we can see Q-Learning in action!

(and learn what happens on the frozen lake...)

Questions?

Resources

https://skymind.ai/wiki/deep-reinforcement-learning

https://towardsdatascience.com/introduction-to-reinforcement-learning-chapter-1-fc8a196a09e8

https://medium.freecodecamp.org/an-introduction-to-reinforcement-learning-4339519de419

https://towardsdatascience.com/reinforcement-learning-with-openai-d445c2c687d2

Reinforcement Learning: An Introduction, Richard S. Sutton and Andrew G. Barto

http://incompleteideas.net/book/the-book-2nd.html

Resources Used & Recommended for Further Study

To Explore or To Exploit: Reinforcement Learning Basics

By Rachel House

To Explore or To Exploit: Reinforcement Learning Basics

If you're curious about reinforcement learning, or have an unnaturally enthusiastic nostalgia for Chex Quest, you'll find something to enjoy and learn in this informal introduction to RL.

- 775