Network anomalies detection

Workflow

- Gathering data

- Cleaning data

- Detection

- Unsupervised

- Supervised

- Evaluation

Data

- SNMP

- Fortinet API

- Packet captures

- Synthetic data

Example 1

Anomaly detection in Network Traffic Using Unsupervised Machine learning Approach. Aditya Vikram, Mohana. Published online June 1, 2020. doi:https://doi.org/10.1109/icces48766.2020.9137987

Data

- NSL-KDD

- 41 features

- https://www.kaggle.com/datasets/hassan06/nslkdd

Anomalies

- DoS

- R2L - Remote to local

- Probe - find open ports

- U2R - user to root

Classifiers

- Isolation Forest

- One-Class SVM

Example 1

80/20 train/test data split

PCA used for dimensionality reduction
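A minimal sketch of an 80/20 split using only the Python standard library. The function name, the seed and the toy rows are illustrative assumptions, not taken from the paper; the PCA step is not shown.

```python
import random

def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of rows and split it into (train, test)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

rows = list(range(100))          # stand-in for 100 feature vectors
train, test = train_test_split(rows)
```

Fixing the seed keeps the split reproducible between runs.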

Isolation forest

- Unsupervised decision-tree based

- Consists of multiple isolation trees

- Split data until each partition contains one data point

- Random split on a random feature

- Feature chosen at random

- Random point between min and max for a split

- Idea is that outliers require fewer splits to separate
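The splitting procedure above can be sketched in plain Python. This is a simplified illustration, not the paper's implementation: it follows a single point instead of building whole trees, it does not subsample the data, and all names and the toy data are made up.

```python
import random

def path_length(x, data, rng, depth=0, max_depth=50):
    """Number of random splits needed to isolate point x within data."""
    if len(data) <= 1 or depth >= max_depth:
        return depth
    feature = rng.randrange(len(x))                  # feature chosen at random
    lo = min(row[feature] for row in data)
    hi = max(row[feature] for row in data)
    if lo == hi:                                     # no split possible on this feature
        return depth
    split = rng.uniform(lo, hi)                      # random point between min and max
    # Keep only the partition that x falls into
    same_side = [row for row in data
                 if (row[feature] < split) == (x[feature] < split)]
    return path_length(x, same_side, rng, depth + 1, max_depth)

def average_path_length(x, data, n_trees=200, seed=0):
    """Average the path length over many random trees."""
    rng = random.Random(seed)
    return sum(path_length(x, data, rng) for _ in range(n_trees)) / n_trees

# Toy data: a dense cluster plus one obvious outlier
gen = random.Random(1)
data = [(gen.uniform(-1, 1), gen.uniform(-1, 1)) for _ in range(50)]
outlier = (10.0, 10.0)
data.append(outlier)
inlier = data[0]
```

Calling `average_path_length(outlier, data)` should give a much smaller value than `average_path_length(inlier, data)`, which is the property the anomaly score builds on.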

Anomaly score

- E(h(x)) - average path length to isolate x across trees

- c(n) - normalization factor (average path length of an unsuccessful search in a binary search tree built on n points)

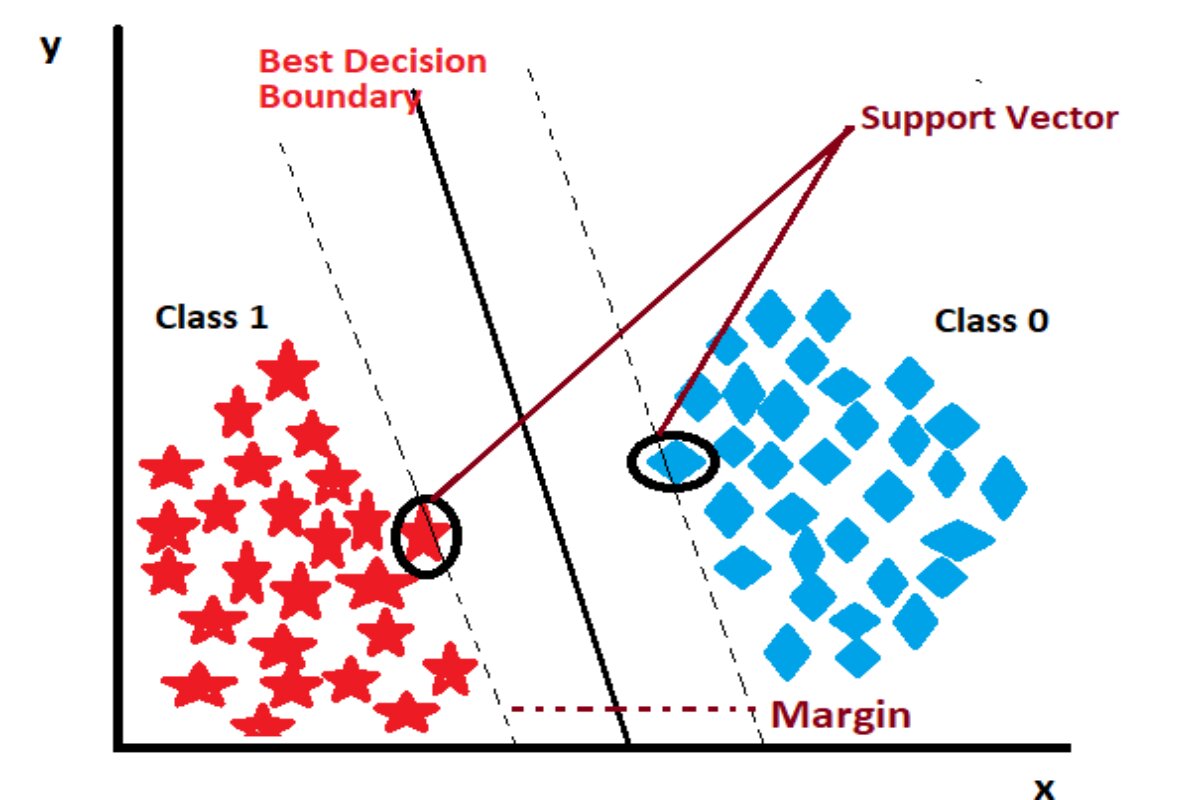
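For reference, the anomaly score defined in the original Isolation Forest paper (Liu, Ting and Zhou, 2008) combines the two quantities above as follows. The image above presumably shows the same formula; this block is a reconstruction from the paper, not from the slide.

```latex
s(x, n) = 2^{-\frac{E(h(x))}{c(n)}}, \qquad
c(n) = 2H(n-1) - \frac{2(n-1)}{n}, \qquad
H(i) \approx \ln(i) + 0.5772156649
```

Scores close to 1 indicate anomalies, while scores well below 0.5 indicate normal points.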

One-class SVM

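In general, SVM is a supervised method.

One-class SVM trains on a single class, so the origin of the feature space serves as a "fake" second class: the model looks for the hyperplane that separates the training data from the origin with the largest margin.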

Parameters: kernel, ν (an upper bound on the fraction of training points treated as outliers)
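For reference, the standard one-class SVM formulation (Schölkopf et al.) can be written as below. Here Φ is the feature map induced by the kernel, n is the number of training points, and ξ_i are slack variables; the notation is an assumption of this sketch, not taken from the slides.

```latex
\min_{w,\,\xi,\,\rho}\ \frac{1}{2}\lVert w \rVert^2 + \frac{1}{\nu n}\sum_{i=1}^{n}\xi_i - \rho
\quad \text{s.t.} \quad w \cdot \Phi(x_i) \ge \rho - \xi_i,\ \ \xi_i \ge 0

f(x) = \operatorname{sgn}\bigl(w \cdot \Phi(x) - \rho\bigr)
```

Points with f(x) = -1 fall on the origin's side of the hyperplane and are reported as anomalies.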

Example 2

Combining Unsupervised Approaches for Near Real-Time Network Traffic Anomaly Detection. Carrera F, Dentamaro V, Galantucci S, Iannacone A, Impedovo D, Pirlo G. Applied Sciences. 2022;12(3):1759. doi:https://doi.org/10.3390/app12031759

Data

- KDD99, NSL-KDD, CIC-IDS2017

- https://www.kaggle.com/datasets/hassan06/nslkdd

Anomalies

- Zero-day attacks

Classifiers

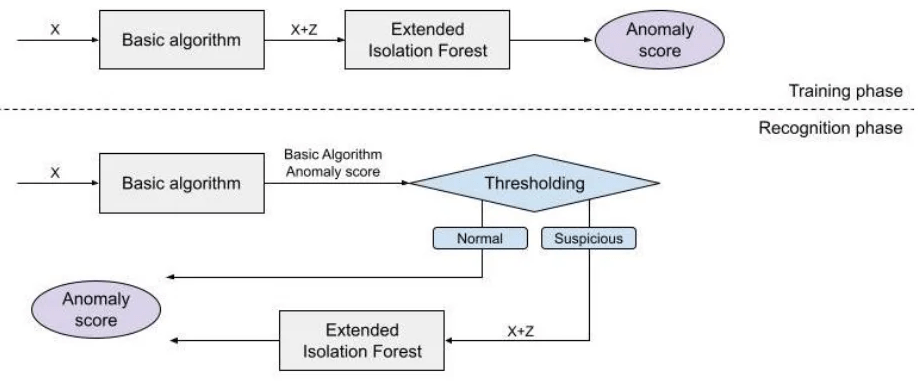

- Deep Autoencoding Gaussian Mixture Model with Extended Isolation Forest (DAGMM-EIF)

- Deep Autoencoder with Extended Isolation Forest (DA-EIF)

- Memory-augmented Deep Autoencoder with Extended Isolation Forest (MemAE-EIF)

- .....

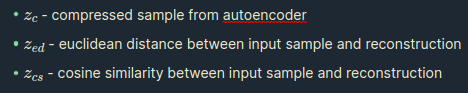

- capture important features in the lower dimensional representation

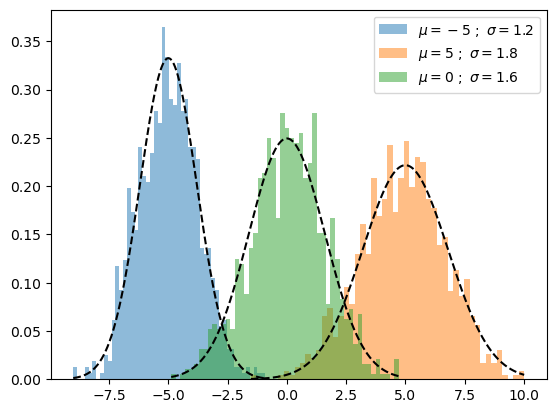

Autoencoder

- When decoding data we end up with some reconstruction error E(x, x')

- If the reconstruction error is above some threshold value we call that anomaly

Autoencoder
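The thresholding step can be sketched without any neural network code. In this minimal example the reconstructions are given as plain lists, the error is mean squared error, and the threshold is a quantile of the errors seen on normal training data. The quantile rule, the function names and the numbers are assumptions for illustration, not the paper's settings.

```python
def reconstruction_error(x, x_rec):
    """Mean squared error between an input vector and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_rec)) / len(x)

def pick_threshold(train_errors, quantile=0.95):
    """Hypothetical rule: use a high quantile of the errors on normal data."""
    ordered = sorted(train_errors)
    index = min(int(quantile * len(ordered)), len(ordered) - 1)
    return ordered[index]

def is_anomaly(x, x_rec, threshold):
    """Flag the sample when its reconstruction error exceeds the threshold."""
    return reconstruction_error(x, x_rec) > threshold

# Made-up reconstruction errors measured on normal training traffic
train_errors = [0.01, 0.02, 0.015, 0.03, 0.025, 0.02, 0.01, 0.05, 0.02, 0.03]
threshold = pick_threshold(train_errors)
```

A well-reconstructed sample such as `is_anomaly([1.0, 2.0], [1.0, 2.1], threshold)` is not flagged, while a poorly reconstructed one such as `is_anomaly([1.0, 2.0], [0.0, 3.0], threshold)` is.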

Gaussian Mixture Model

- clustering ML method

- composed of multiple Gaussians

- in our case the input data is the features plus the reconstruction error
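Once a mixture is fitted, a sample can be scored by its negative log-likelihood, often called sample energy: the less likely a point is under the mixture, the higher its energy. The sketch below is one-dimensional and uses fixed, made-up mixture parameters instead of learned ones, so it only illustrates the scoring idea.

```python
import math

def gaussian_pdf(x, mean, std):
    """Density of a 1-D normal distribution at x."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))

def sample_energy(x, weights, means, stds):
    """Negative log-likelihood of x under a 1-D Gaussian mixture."""
    density = sum(w * gaussian_pdf(x, m, s)
                  for w, m, s in zip(weights, means, stds))
    return -math.log(density)

# Hypothetical mixture: two clusters of "normal" behaviour
weights, means, stds = [0.6, 0.4], [0.0, 5.0], [1.0, 1.0]
```

A point near a cluster centre, for example `sample_energy(0.0, weights, means, stds)`, gets a low energy; a point far from both clusters, for example `sample_energy(20.0, weights, means, stds)`, gets a high one.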

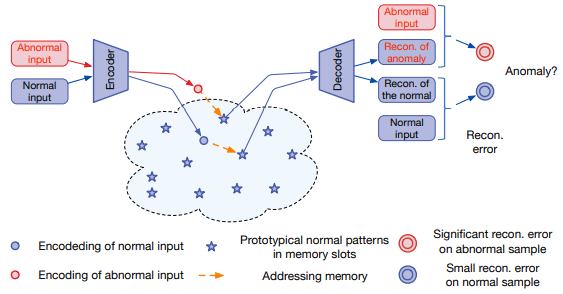

- Issue: Autoencoder can generalize and reconstruct anomalies

- Memory module, add normal items during training

- During reconstruction retrieve these normal items

Memory Augmented Deep Autoencoder

Extended Isolation Forest

Like Isolation Forest, but each split is a hyperplane with a random slope instead of an axis-parallel cut

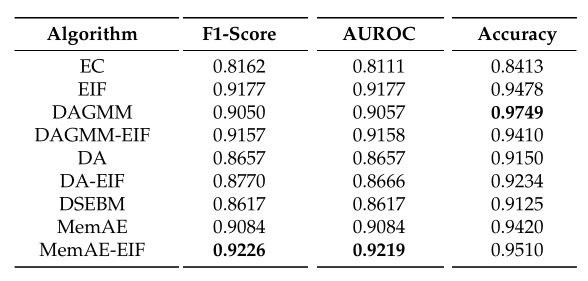
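A sloped split can be sketched as follows: draw a random normal vector (the slope) and a random intercept point inside the bounding box of the data, then send each point left or right depending on which side of the hyperplane it lies. This follows the branching rule described for Extended Isolation Forest; the function names and toy points are illustrative assumptions.

```python
import random

def random_hyperplane(data, rng):
    """Random slope (normal vector) and random intercept inside the data's bounding box."""
    dims = len(data[0])
    normal = [rng.gauss(0.0, 1.0) for _ in range(dims)]
    intercept = [rng.uniform(min(r[d] for r in data), max(r[d] for r in data))
                 for d in range(dims)]
    return normal, intercept

def goes_left(x, normal, intercept):
    """Branching rule: (x - intercept) . normal <= 0 means the left child."""
    return sum((xi - pi) * ni for xi, pi, ni in zip(x, intercept, normal)) <= 0

def split(data, normal, intercept):
    """Partition the data with one sloped cut."""
    left = [r for r in data if goes_left(r, normal, intercept)]
    right = [r for r in data if not goes_left(r, normal, intercept)]
    return left, right

rng = random.Random(0)
points = [(0.0, 0.0), (1.0, 0.5), (0.5, 1.0), (4.0, 4.0), (0.2, 0.8)]
normal, intercept = random_hyperplane(points, rng)
left, right = split(points, normal, intercept)
```

With a normal vector that has a single non-zero component, the rule reduces to the axis-parallel split of the standard Isolation Forest.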

Algorithms tested

- Extended Isolation Forest (EIF)

- Ensemble Consensus (EC)

- Deep Autoencoding Gaussian Mixture Model (DAGMM)

- Deep Autoencoding Gaussian Mixture Model - Extended Isolation Forest (DAGMM-EIF)

- Deep Autoencoder (DA)

- Deep Autoencoder - Extended Isolation Forest (DA-EIF)

- Deep Structured Energy Based Models (DSEBM)

- Memory-Augmented Deep Autoencoder (MemAE)

- Memory-Augmented Deep Autoencoder - Extended Isolation Forest (MemAE-EIF)

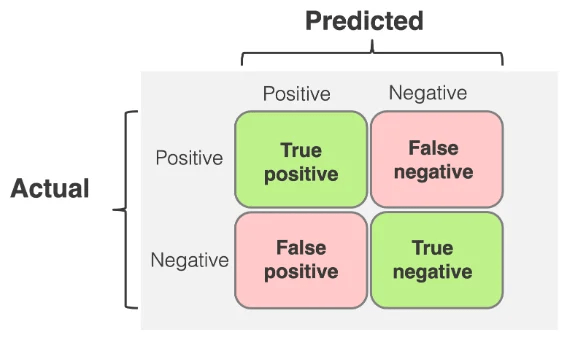

Evaluation metrics

- Precision

- Recall

- F1-Score

- Accuracy

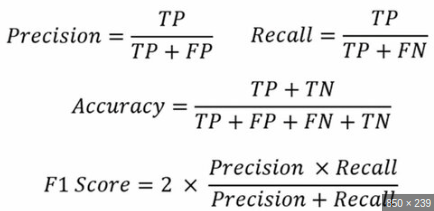

- AUC for ROC
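The four threshold-based metrics listed above (precision, recall, F1-score, accuracy) follow directly from the confusion-matrix counts. A minimal sketch with made-up labels, where 1 means anomaly and 0 means normal; the function name and data are illustrative only.

```python
def classification_metrics(y_true, y_pred):
    """Precision, recall, F1 and accuracy for binary labels (1 = anomaly)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)   # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)   # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)   # missed anomalies
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)   # true negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    accuracy = (tp + tn) / len(pairs)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}

y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
result = classification_metrics(y_true, y_pred)
```

On imbalanced traffic, accuracy alone can look good even when most anomalies are missed, which is why precision, recall and F1 are reported alongside it.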

Receiver operating characteristic (ROC)

- plots true positive rate against false positive rate across all decision thresholds

- higher AUC means a better model (0.5 is random guessing, 1.0 is perfect)

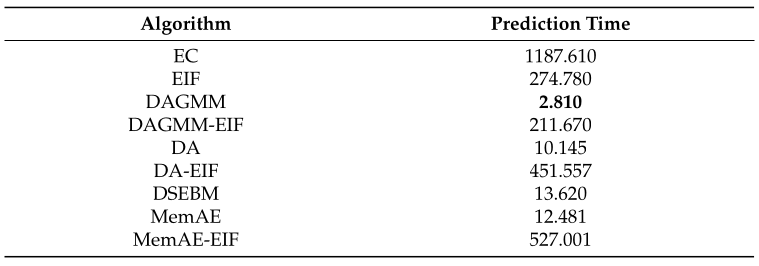
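AUC can also be read as the probability that a randomly chosen anomaly gets a higher score than a randomly chosen normal sample. The sketch below computes it that way by comparing all anomaly/normal pairs; it is quadratic in the number of samples and meant only as an illustration with made-up scores.

```python
def roc_auc(y_true, scores):
    """Fraction of (anomaly, normal) pairs ranked correctly; ties count as half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
auc = roc_auc(y_true, scores)
```

Because it only depends on the ranking of the scores, AUC does not require choosing a threshold.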

Results

The results show that MemAE-EIF achieves the best accuracy and F1-score on all datasets examined. High precision means few false positives, i.e. few false alarms for experts in the field to handle.

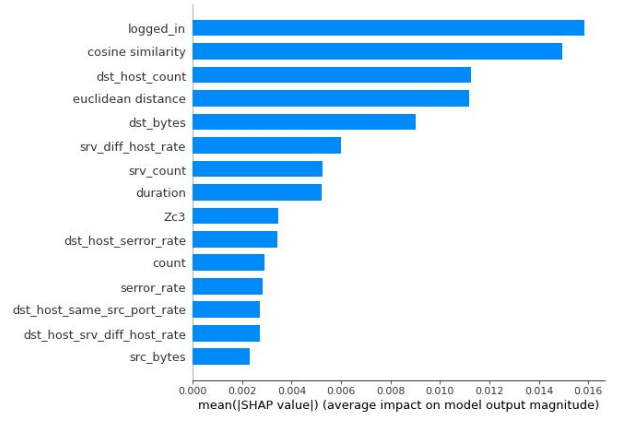

SHAP values for the EIF model of the MemAE-EIF algorithm (KDDCUP99 dataset)

Example 3

Exploiting SNMP-MIB Data to Detect Network Anomalies using Machine Learning Techniques. Al-Naymat G, Al-kasassbeh M, Al-Hawari E. arXiv.org. Published 2018. Accessed October 21, 2024. https://arxiv.org/abs/1809.02611

Data

- SNMP-MIB dataset

Al-Kasassbeh, M., Al-Naymat, G., & Al-Hawari, E. (2016). Towards Generating Realistic SNMP-MIB Dataset for Network Anomaly Detection. International Journal of Computer Science and Information Security, 14(9), 1162.

Anomalies

- DoS (TCP-SYN flooding, UDP flooding, ICMP-ECHO flooding, HTTP flood, Slowloris, Slowpost)

- Brute force attack

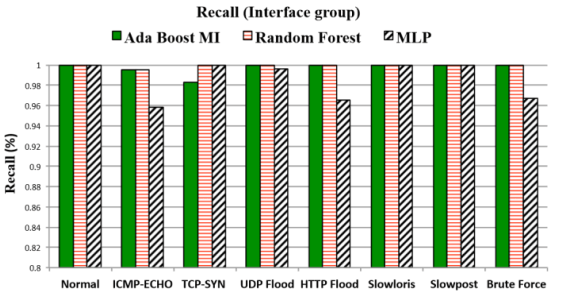

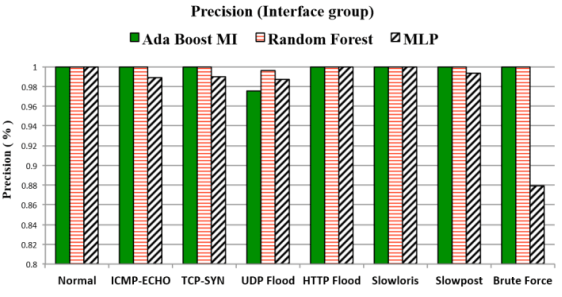

Classifiers

- AdaBoostM1 with J48

- Random Forest

- MLP

AdaBoostM1 with J48

J48 (Weka's Java implementation of C4.5)

- decision tree algorithm

- chooses the split that maximizes information gain (C4.5 normalizes it to the gain ratio)
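Information gain is the entropy of the labels before a split minus the size-weighted entropy of the groups after it. A minimal sketch with made-up labels; it shows plain information gain only, not the gain-ratio normalization that C4.5 applies on top.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total)
                for c in Counter(labels).values())

def information_gain(labels, groups):
    """Parent entropy minus the size-weighted entropy of the child groups."""
    total = len(labels)
    remainder = sum(len(g) / total * entropy(g) for g in groups)
    return entropy(labels) - remainder

labels = ["dos", "dos", "normal", "normal"]
perfect_split = [["dos", "dos"], ["normal", "normal"]]
useless_split = [["dos", "normal"], ["dos", "normal"]]
```

Here `information_gain(labels, perfect_split)` is 1 bit because both children are pure, while `information_gain(labels, useless_split)` is 0 because the children are as mixed as the parent.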

AdaBoost

- combine multiple weak learners (decision trees)

- each subsequent learner focuses on the samples that were misclassified by the previous ones

- prediction = weighted combination of all learners
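The three steps above can be sketched with decision stumps (one-level decision trees) as weak learners on one-dimensional toy data. This is a simplified binary AdaBoost with labels +1/-1, not Weka's AdaBoostM1 with J48; all names and data are illustrative assumptions.

```python
import math

def stump_predict(x, threshold, polarity):
    """A stump predicts `polarity` on one side of the threshold, the opposite on the other."""
    return polarity if x <= threshold else -polarity

def best_stump(xs, ys, weights):
    """Pick the threshold and polarity with the lowest weighted error."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            error = sum(w for w, x, y in zip(weights, xs, ys)
                        if stump_predict(x, threshold, polarity) != y)
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best

def adaboost(xs, ys, rounds=3):
    n = len(xs)
    weights = [1.0 / n] * n
    learners = []
    for _ in range(rounds):
        error, threshold, polarity = best_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)        # avoid division by zero
        alpha = 0.5 * math.log((1 - error) / error)      # weight of this learner
        learners.append((alpha, threshold, polarity))
        # Misclassified samples gain weight, correctly classified ones lose weight
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
                   for w, x, y in zip(weights, xs, ys)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return learners

def predict(learners, x):
    """Sign of the weighted combination of all weak learners."""
    score = sum(alpha * stump_predict(x, threshold, polarity)
                for alpha, threshold, polarity in learners)
    return 1 if score >= 0 else -1

xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
learners = adaboost(xs, ys, rounds=3)
```

No single stump can separate this toy data, but the weighted combination of three stumps classifies every training point correctly.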

Random Forest

- ensemble learning method made out of decision trees

- bootstrap aggregation

- random feature selection

- majority vote

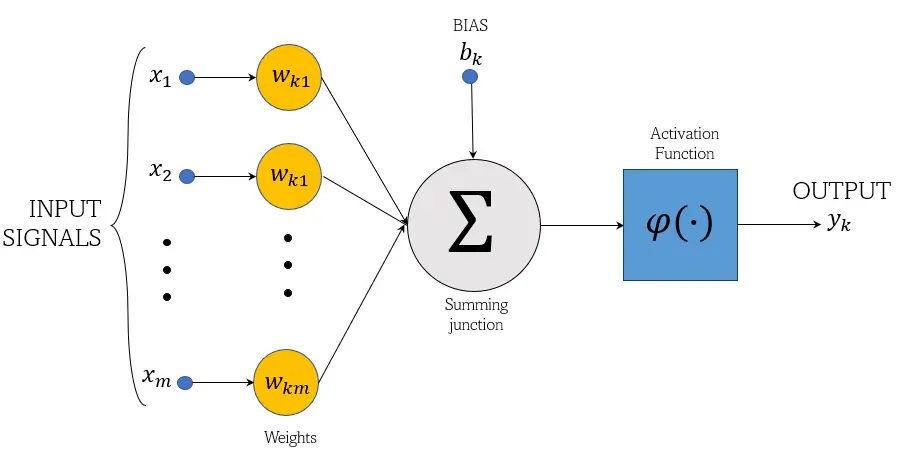

MLP - Multilayer Perceptron

- feedforward neural network

- fully connected

- nonlinear activation function
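The three properties above can be seen in a forward pass through a tiny network with one hidden layer. The weights are made up for illustration and no training is shown; the choice of ReLU and sigmoid activations is an assumption of this sketch.

```python
import math

def dense(inputs, weights, biases):
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Nonlinear activation applied element-wise."""
    return [max(0.0, v) for v in values]

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def mlp_forward(x, hidden_w, hidden_b, out_w, out_b):
    """Feedforward pass: input -> hidden layer -> single output probability."""
    hidden = relu(dense(x, hidden_w, hidden_b))
    return sigmoid(dense(hidden, out_w, out_b)[0])

# Hypothetical weights for a 2-input, 2-hidden-unit, 1-output network
hidden_w = [[1.0, -1.0], [0.5, 0.5]]
hidden_b = [0.0, 0.0]
out_w = [[1.0, 2.0]]
out_b = [-3.0]
probability = mlp_forward([1.0, 2.0], hidden_w, hidden_b, out_w, out_b)
```

Without the nonlinear activation, stacking layers would collapse into a single linear transformation.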

Data

- 4998 records

- 34 MIB variables

- Only the 8 variables from the interface group are used

- 70/30 split

| Var | Name |
|---|---|
| 1 | ifInOctets |
| 2 | ifOutOctets |
| 3 | ifOutDiscards |
| 4 | ifInUcastPkts |
| 5 | ifInNUcastPkts |
| 6 | ifInDiscards |
| 7 | ifOutUcastPkts |
| 8 | ifOutNUcastPkts |

Results

Example 4

Detecting network anomalies using machine learning and SNMP-MIB dataset with IP group. Manna A, Alkasassbeh M. arXiv.org. Published 2019. Accessed October 22, 2024. https://arxiv.org/abs/1906.00863

Data:

- same as previous paper

- https://www.kaggle.com/datasets/malkasasbeh/network-anomaly-detection-dataset

Anomalies:

- icmp-echo, tcp-syn, udp-flood, httpFlood, slowloris, slowpost, bruteforce

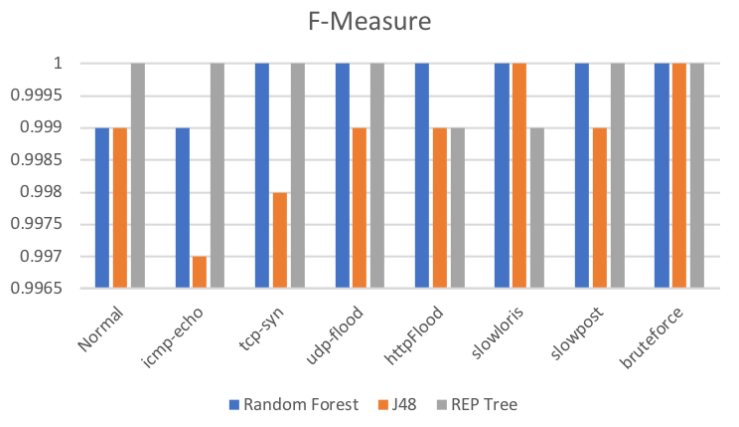

Classifiers

- Random Forest

- Decision tree

- REP Tree

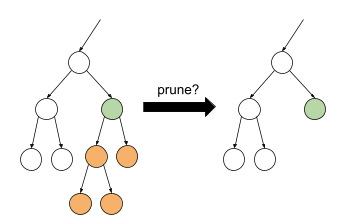

REP Tree

- decision tree built on training data that aims for high accuracy

- REP - reduced error pruning

- start from bottom of the tree, evaluate if each subtree can be replaced with a leaf

- keep the replacement if accuracy on a held-out pruning (validation) set does not get worse

Data

| Variable Name | Variable Description |
|---|---|
| ipInReceives | The total number of input datagrams that are received from the interfaces, including those received in error. |
| ipInDelivers | The total number of input datagrams that are delivered to the IP user protocols successfully (including ICMP). |
| ipOutRequests | The total number of IP datagrams supplied to IP in requests for transmission, not including ipForwDatagrams. |
| ipOutDiscards | The number of output datagrams for which no problem was encountered to prevent their transmission to their destination, but which were discarded (e.g. for lack of buffer space). |
| ipInDiscards | The number of input datagrams for which no problems were encountered to prevent their continued processing, but which were discarded (e.g. for lack of buffer space). |
| ipForwDatagrams | The number of input datagrams for which this entity was not their final destination. |
| ipOutNoRoutes | The number of datagrams discarded because no route could be found to transmit them to their destination. |
| ipInAddrErrors | The number of input datagrams discarded because the IP address in their destination field was not valid. |

Results

Results were similar when using only 5 or only 3 variables, selected with ReliefFAttributeEval and InfoGainAttributeEval

Example 5

Evaluation of Machine Learning Algorithms for Anomaly Detection. Elmrabit N, Zhou F, Li F, Zhou H. Zenodo (CERN European Organization for Nuclear Research). Published online June 1, 2020. doi:https://doi.org/10.1109/cybersecurity49315.2020.9138871

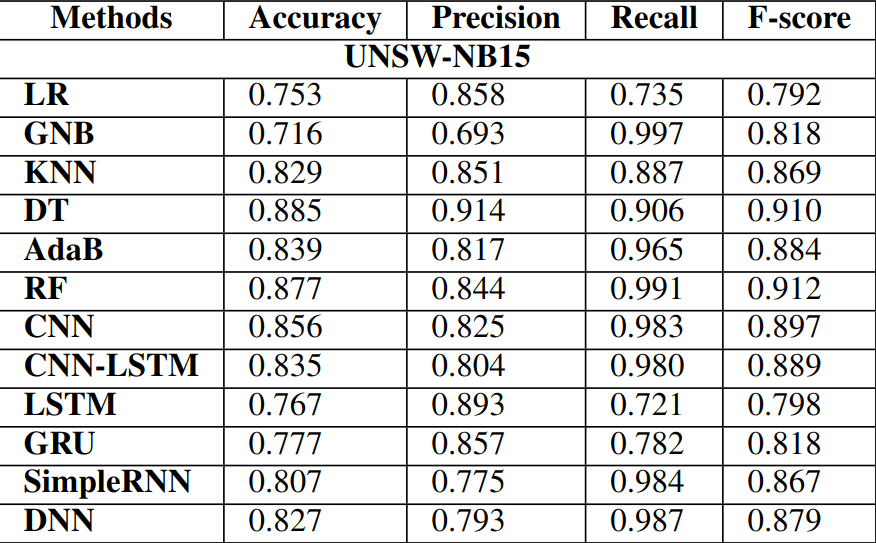

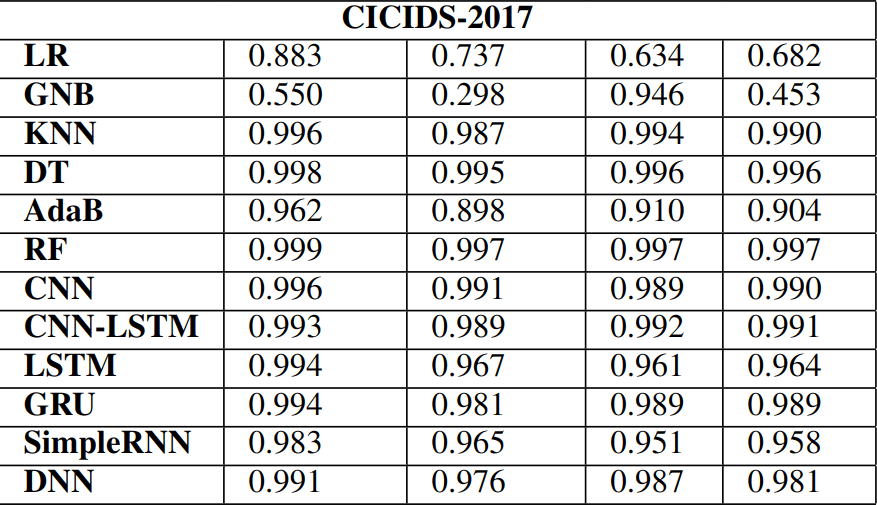

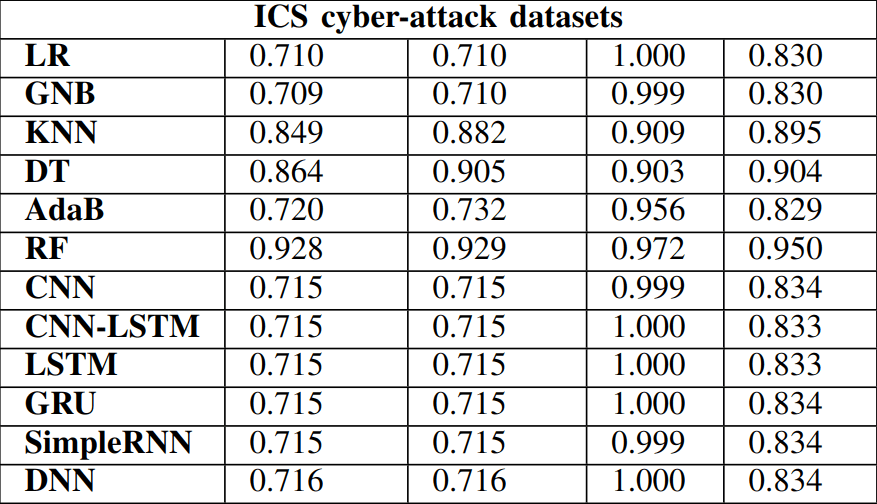

Data:

- UNSW-NB15

- CICIDS2017

- ICS cyber-attack dataset

Anomalies:

- DoS, port scanning, SQL injection, brute force, worms and other associated vulnerabilities found in datasets

Algorithms

- Logistic regression

- Gaussian Naive Bayes

- K-nearest neighbors

- Decision tree

- Adaptive boosting

- Random forest algorithm

- Convolutional neural network

- Convolutional neural network and long short-term memory

- Long short-term memory

- Gated recurrent units

- Simple recurrent neural network

- Deep neural network

Results

Network anomalies detection

By Sasa Trivic
