Lecture 6: Neural Networks II

Shen Shen

March 7, 2025

11am, Room 10-250

Intro to Machine Learning

Outline

- Recap: Multi-layer perceptrons, expressiveness

- Forward pass (to use/evaluate)

- Backward pass (to learn parameters/weights)

- Back-propagation: (gradient descent & the chain rule)

- Practical gradient issues and remedies

Recap: Multi-layer perceptrons, expressiveness

A neuron:

- \(x\): input (a single datapoint)

- \(w\): weights (i.e. parameters), what the algorithm learns

- \(z\): pre-activation output, a scalar

- \(f\): activation function, what we engineers choose

- \(a\): post-activation output, a scalar

e.g. a linear regressor represented as a computation graph: a single neuron with activation \(f(z)=z\), whose weights are the learnable parameters

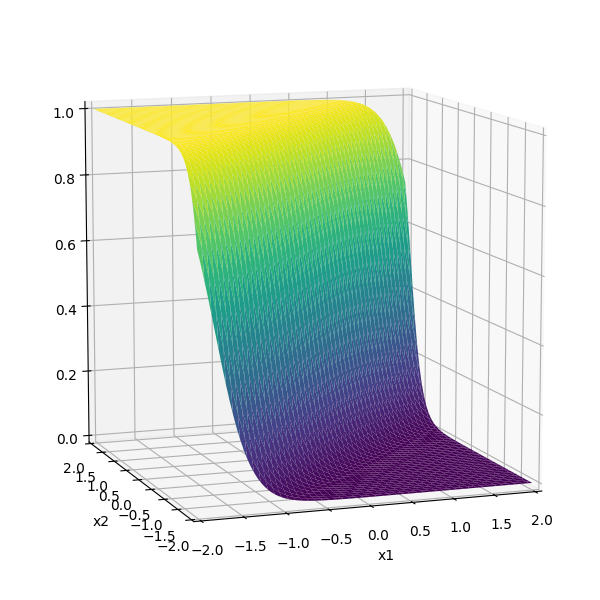

e.g. a linear logistic classifier represented as a computation graph: a single neuron with activation \(f(z)=\sigma(z)\), whose weights are the learnable parameters
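A minimal sketch of one neuron in plain Python, assuming the offset (bias) is omitted and vectors are plain lists; `neuron`, `identity`, and `sigmoid` are illustrative names, not from the lecture. Swapping the activation turns the same neuron into a linear regressor or a linear logistic classifier:

```python
import math

def neuron(x, w, f):
    """One neuron: pre-activation z = w . x (offset omitted, an assumption),
    then post-activation a = f(z). Both z and a are scalars."""
    z = sum(w_j * x_j for w_j, x_j in zip(w, x))  # linear combination
    return f(z)                                    # activation

def identity(z):
    return z

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

x = [1.0, 2.0]
w = [0.5, -0.25]
print(neuron(x, w, identity))  # linear regressor: z = 0.5 - 0.5 = 0.0
print(neuron(x, w, sigmoid))   # logistic classifier: sigmoid(0.0) = 0.5
```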

A layer: a linear combination (with learnable weights) followed by activations.

- (# of neurons) = (layer's output dimension).

- typically, all neurons in one layer use the same activation \(f\) (if not, the algebra gets uglier).

- typically fully connected: every \(x_i\) is connected to every \(z^j\), so each \(x_i\) eventually influences every \(a^j\).

- typically, no "cross-wiring", meaning e.g. \(z^1\) won't affect \(a^2\). (The output layer may be an exception if softmax is used.)
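A sketch of one fully-connected layer under the same assumptions (no offsets, lists as vectors; `layer` and `relu` are hypothetical helper names). Each row of `W` holds one neuron's weights, so the output length equals the number of neurons, and the activation is applied elementwise, so there is no cross-wiring:

```python
def relu(z):
    return max(0.0, z)

def layer(x, W, f):
    """A fully-connected layer: W is a list of m weight vectors (one per neuron),
    each as long as x. Returns the m post-activation outputs (offsets omitted)."""
    z = [sum(w_ji * x_i for w_ji, x_i in zip(w_j, x)) for w_j in W]  # one linear combo per neuron
    return [f(z_j) for z_j in z]  # same f for every neuron; z_j only affects a_j

x = [1.0, -2.0, 3.0]
W = [[1.0, 0.0, 0.0],   # neuron 1 weights
     [0.0, 1.0, 0.0]]   # neuron 2 weights
print(layer(x, W, relu))  # 2 neurons -> output dimension 2: [1.0, 0.0]
```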

A (fully-connected, feed-forward) neural network (aka multi-layer perceptron, MLP): the input passes through one or more hidden layers and then an output layer; each layer consists of neurons with learnable weights.

We choose:

- # of layers

- # of neurons in each layer

- activation \(f\) in each layer

Recall this example: the hidden layer uses \(f = \sigma(\cdot)\), the output layer uses \(f(\cdot)\) = the identity function, and the network outputs \(-3(\sigma_1 +\sigma_2)\).
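An MLP forward pass is just layers composed. The sketch below, assuming no offsets and lists as vectors, encodes the design choices (number of layers, neurons per layer, activation per layer) as a list of `(W, f)` pairs. The output weights of \(-3\) mirror the recalled \(-3(\sigma_1 + \sigma_2)\) example; the hidden-layer weights are hypothetical placeholders, since the original values are not given here:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def identity(z):
    return z

def layer(a_prev, W, f):
    # each row of W holds one neuron's weights; len(W) = # of neurons = output dim
    return [f(sum(w * a for w, a in zip(w_j, a_prev))) for w_j in W]

def mlp_forward(x, layers):
    """layers: list of (W, f) pairs. Design choices: len(layers),
    len(W) in each layer, and f in each layer. Offsets omitted (assumption)."""
    a = x
    for W, f in layers:
        a = layer(a, W, f)  # output of one layer is the input of the next
    return a

layers = [
    ([[1.0, 0.0], [0.0, 1.0]], sigmoid),  # hidden: 2 sigmoid neurons (hypothetical weights)
    ([[-3.0, -3.0]], identity),           # output: -3 * (sigma_1 + sigma_2)
]
print(mlp_forward([0.0, 0.0], layers))  # sigma_1 = sigma_2 = 0.5 -> [-3.0]
```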

Forward pass (to use/evaluate)

e.g. forward pass of a linear regressor:

- Activation \(f\) is chosen as the identity function

- Evaluate the loss \(\mathcal{L} = (g^{(i)}-y^{(i)})^2\)

- Repeat for each data point, then average the \(n\) individual losses

e.g. forward pass of a linear logistic classifier:

- Activation \(f\) is chosen as the sigmoid function

- Evaluate the loss \(\mathcal{L} = - [y^{(i)} \log g^{(i)}+\left(1-y^{(i)}\right) \log \left(1-g^{(i)}\right)]\)

- Repeat for each data point, then average the \(n\) individual losses
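The two forward passes above differ only in the activation and the loss. A minimal sketch, assuming no offsets and using hypothetical helper names (`training_error`, `squared_loss`, `nll_loss`), that evaluates the averaged loss for either model on a small toy dataset:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dot(w, x):
    return sum(w_j * x_j for w_j, x_j in zip(w, x))

def squared_loss(g, y):
    return (g - y) ** 2

def nll_loss(g, y):
    # negative log-likelihood (binary cross-entropy); assumes 0 < g < 1
    return -(y * math.log(g) + (1 - y) * math.log(1 - g))

def training_error(X, Y, w, f, loss):
    """Forward pass over all n points, then average: (1/n) * sum_i L(g^(i), y^(i))."""
    n = len(X)
    return sum(loss(f(dot(w, x)), y) for x, y in zip(X, Y)) / n

X = [[1.0, 0.0], [0.0, 1.0]]
w = [2.0, 0.0]
print(training_error(X, [1.0, 1.0], w, lambda z: z, squared_loss))  # ((2-1)^2 + (0-1)^2) / 2 = 1.0
print(training_error(X, [1, 0], w, sigmoid, nll_loss))
```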

Forward pass: given the current parameters, evaluate

- the model outputs \(g^{(i)}\), obtained by alternating linear combinations and (nonlinear) activations, layer by layer

- the loss incurred on the current data \(\mathcal{L}(g^{(i)}, y^{(i)})\)

- the training error \(J = \frac{1}{n} \sum_{i=1}^{n}\mathcal{L}(g^{(i)}, y^{(i)})\)

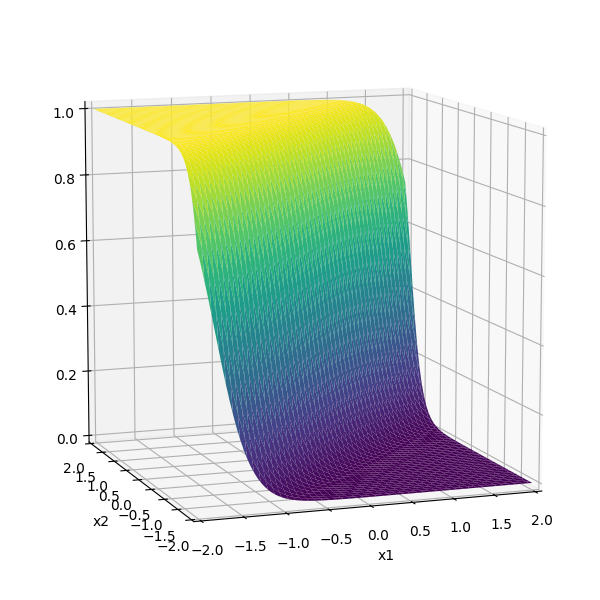

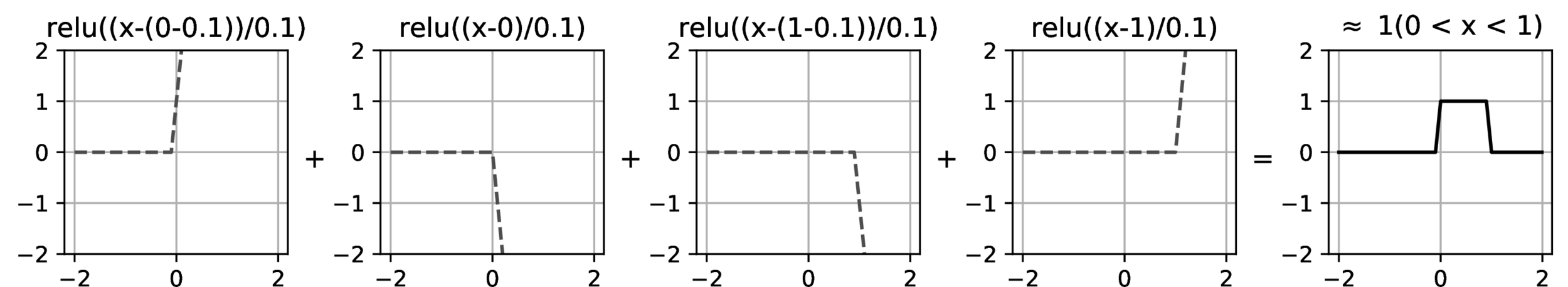

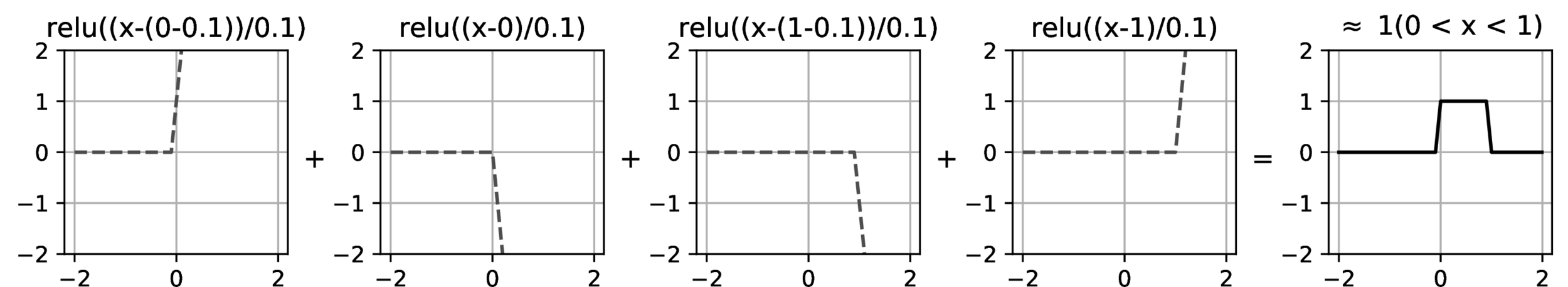

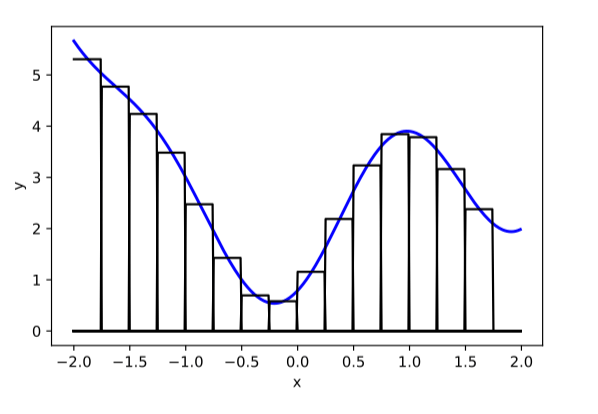

Recall: compositions of ReLUs can be quite expressive; in fact, with enough of them, they can approximate any continuous function (on a bounded domain) arbitrarily well!

image credit: Phillip Isola
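To see why compositions of ReLUs are expressive: a weighted sum of three shifted ReLUs already forms a "hat" bump, and sums of scaled, shifted hats give piecewise-linear interpolants that track a smooth function more closely as pieces are added. This is a toy illustration, not the construction behind the figures; `hat` and `pl_approx` are hypothetical names:

```python
import math

def relu(z):
    return max(0.0, z)

def hat(x):
    # three shifted ReLUs: rises on [0, 1], falls on [1, 2], zero elsewhere
    return relu(x) - 2.0 * relu(x - 1.0) + relu(x - 2.0)

def pl_approx(f, x, lo, hi, m):
    """Piecewise-linear interpolant of f on [lo, hi] with m equal pieces,
    written as a weighted sum of shifted hats (hence a weighted sum of ReLUs)."""
    h = (hi - lo) / m
    return sum(f(lo + k * h) * hat((x - (lo + k * h)) / h + 1.0) for k in range(m + 1))

print([hat(t) for t in [-1.0, 0.0, 0.5, 1.0, 1.5, 2.0, 3.0]])
# [0.0, 0.0, 0.5, 1.0, 0.5, 0.0, 0.0]

grid = [i * math.pi / 97 for i in range(98)]
for m in (2, 8, 32):
    err = max(abs(pl_approx(math.sin, t, 0.0, math.pi, m) - math.sin(t)) for t in grid)
    print(m, err)  # max error on [0, pi] shrinks as m grows
```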

Backward pass (to learn parameters/weights)

stochastic gradient descent to learn a linear regressor:

- Randomly pick a data point \((x^{(i)}, y^{(i)})\)

- Evaluate the gradient \(\nabla_{w} \mathcal{L}(g^{(i)},y^{(i)})\)

- Update the weights \(w \leftarrow w - \eta \nabla_w \mathcal{L}(g^{(i)},y^{(i)})\)

for simplicity, say the dataset has only one data point \((x,y)\)

example on blackboard
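A minimal sketch of these SGD steps for a linear regressor with squared loss and the single data point \((x, y)\), assuming no offset; the gradient \(\nabla_w \mathcal{L} = 2(g - y)\,x\) follows from \(g = w^\top x\). The step size and step count are arbitrary illustrative choices:

```python
def sgd_linear_regression(x, y, w, eta=0.05, steps=200):
    """SGD for g = w . x (identity activation) with loss L = (g - y)^2.
    Assumes no offset and a one-point dataset (x, y), so 'randomly
    picking a data point' always returns that point."""
    w = list(w)
    for _ in range(steps):
        g = sum(w_j * x_j for w_j, x_j in zip(w, x))        # forward pass
        grad = [2.0 * (g - y) * x_j for x_j in x]            # grad_w L = 2 (g - y) x
        w = [w_j - eta * d_j for w_j, d_j in zip(w, grad)]   # w <- w - eta * grad
    return w

w = sgd_linear_regression([1.0, 2.0], 3.0, [0.0, 0.0])
print(w, sum(w_j * x_j for w_j, x_j in zip(w, [1.0, 2.0])))  # prediction approaches y = 3.0
```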

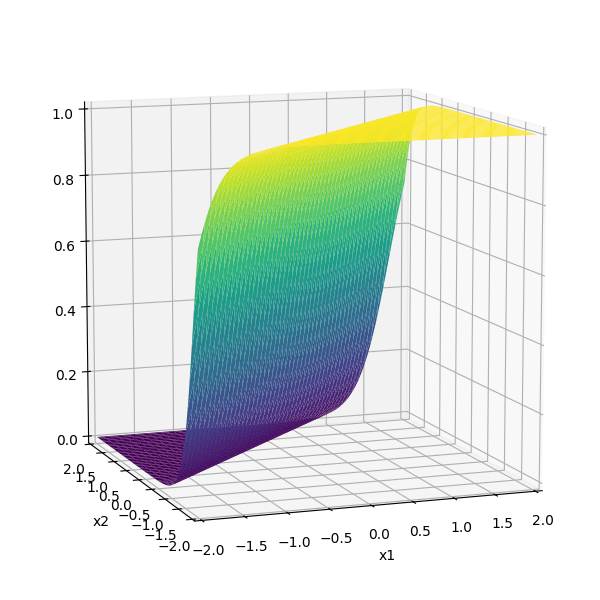

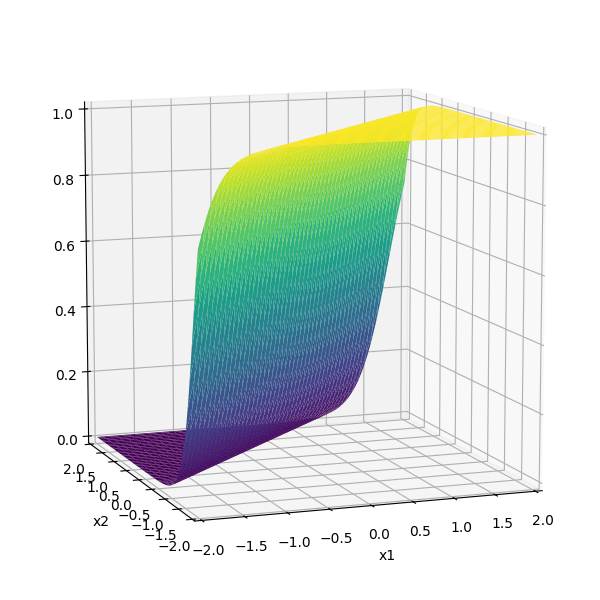

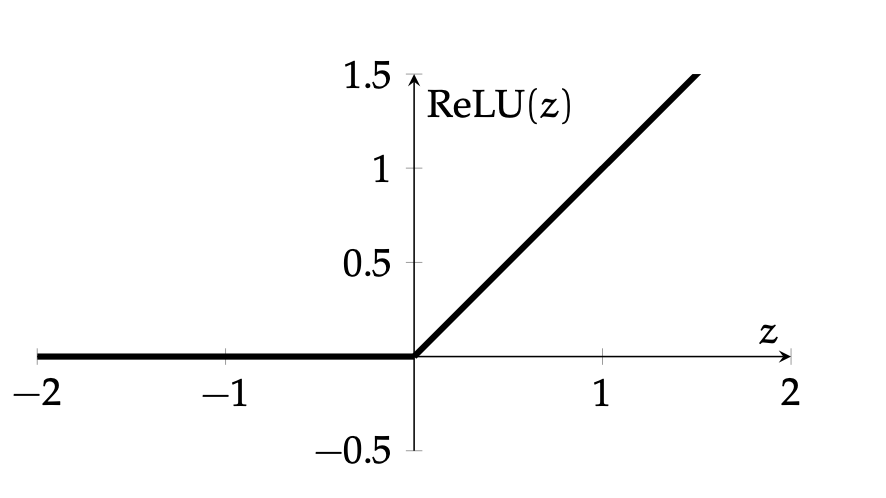

Recall: ReLU, \(\text{ReLU}(z) = \max(0, z)\):

- default choice in hidden layers

- very simple functional form, and so is its gradient.

now, a slightly more interesting activation:

example on blackboard
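With a ReLU activation, the chain rule adds one factor, \(\text{ReLU}'(z)\), which is 1 for \(z > 0\) and 0 for \(z < 0\). A sketch of one SGD step for a single ReLU neuron with squared loss, assuming no offset (`sgd_step_relu_neuron` is a hypothetical name, and the value used for \(\text{ReLU}'(0)\) is a convention):

```python
def relu(z):
    return max(0.0, z)

def relu_grad(z):
    # ReLU'(z): 1 for z > 0, 0 for z < 0; at z = 0 we pick 0 (a convention)
    return 1.0 if z > 0 else 0.0

def sgd_step_relu_neuron(x, y, w, eta):
    """One SGD step for g = ReLU(w . x) with loss L = (g - y)^2 (no offset, an assumption)."""
    z = sum(w_j * x_j for w_j, x_j in zip(w, x))  # pre-activation
    g = relu(z)                                    # post-activation = model output
    # chain rule: dL/dw = dL/dg * dg/dz * dz/dw = 2 (g - y) * ReLU'(z) * x
    grad = [2.0 * (g - y) * relu_grad(z) * x_j for x_j in x]
    new_w = [w_j - eta * d_j for w_j, d_j in zip(w, grad)]
    return new_w, grad

print(sgd_step_relu_neuron([1.0, 2.0], 1.0, [1.0, 1.0], eta=0.1))    # active: z = 3
print(sgd_step_relu_neuron([1.0, 2.0], 1.0, [-1.0, -1.0], eta=0.1))  # inactive: z = -3
```

In the second call the gradient is zero, so the weights do not move at all.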

Backward pass: run SGD to update all parameters

e.g. to update \(W^2\):

- Randomly pick a data point \((x^{(i)}, y^{(i)})\); with a single data point, write it simply as \((x, y)\), with model output \(g\)

- Evaluate the gradient \(\nabla_{W^2} \mathcal{L}(g,y)\)

- Update the weights \(W^2 \leftarrow W^2 - \eta \nabla_{W^2} \mathcal{L}(g,y)\)

how to find \(\nabla_{W^2} \mathcal{L}(g,y)\)?

Now, how to update \(W^1\)?

- Evaluate the gradient \(\nabla_{W^1} \mathcal{L}(g,y)\)

- Update the weights \(W^1 \leftarrow W^1 - \eta \nabla_{W^1} \mathcal{L}(g,y)\)

how to find \(\nabla_{W^1} \mathcal{L}(g,y)\)?
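A worked chain-rule derivation, under a simplifying assumption not in the slides: every layer has a single neuron with a scalar weight and no offset, so \(z^1 = w^1 x\), \(a^1 = f^1(z^1)\), \(z^2 = w^2 a^1\), \(g = a^2 = f^2(z^2)\). (For squared loss, \(\partial \mathcal{L}/\partial g = 2(g - y)\).)

```latex
\begin{aligned}
\frac{\partial \mathcal{L}}{\partial w^2}
  &= \frac{\partial \mathcal{L}}{\partial g}\,
     \frac{\partial g}{\partial z^2}\,
     \frac{\partial z^2}{\partial w^2}
   = \frac{\partial \mathcal{L}}{\partial g}\,(f^2)'(z^2)\,a^1 \\
\frac{\partial \mathcal{L}}{\partial w^1}
  &= \frac{\partial \mathcal{L}}{\partial g}\,
     \frac{\partial g}{\partial z^2}\,
     \frac{\partial z^2}{\partial a^1}\,
     \frac{\partial a^1}{\partial z^1}\,
     \frac{\partial z^1}{\partial w^1}
   = \underbrace{\frac{\partial \mathcal{L}}{\partial g}\,(f^2)'(z^2)}_{=\,\partial \mathcal{L}/\partial z^2}\,w^2\,(f^1)'(z^1)\,x
\end{aligned}
```

The factor \(\frac{\partial \mathcal{L}}{\partial g}(f^2)'(z^2)\) appears in both lines, so once computed for \(w^2\) it can be reused for \(w^1\).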

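Previously, we found \(\nabla_{W^2} \mathcal{L}(g,y)\); now we need \(\nabla_{W^1} \mathcal{L}(g,y)\). By the chain rule, the gradient for \(W^1\) passes back through the same later-layer terms already computed for \(W^2\).

back propagation: reuse of computation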
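A minimal back-propagation sketch in plain Python for a hypothetical two-layer network: one hidden ReLU layer (weights `W1`, one row per neuron), one identity output neuron (weights `W2`), squared loss, no offsets. The forward pass caches `z1` and `a1`; the backward pass computes \(\partial\mathcal{L}/\partial z^2\) once and reuses it for both layers:

```python
def relu(z):
    return max(0.0, z)

def forward(x, W1, W2):
    z1 = [sum(w * xi for w, xi in zip(row, x)) for row in W1]  # hidden pre-activations
    a1 = [relu(z) for z in z1]                                  # hidden activations
    g = sum(w * a for w, a in zip(W2, a1))                      # identity output neuron
    return z1, a1, g

def loss(x, y, W1, W2):
    return (forward(x, W1, W2)[2] - y) ** 2

def backprop(x, y, W1, W2):
    """Gradients of L = (g - y)^2 w.r.t. W1 and W2 (hypothetical network above)."""
    z1, a1, g = forward(x, W1, W2)                 # forward pass, caching z1 and a1
    dL_dz2 = 2.0 * (g - y)                         # computed once...
    dL_dW2 = [dL_dz2 * a for a in a1]              # ...used for the last layer
    dL_da1 = [dL_dz2 * w for w in W2]              # ...and reused for the earlier layer
    dL_dz1 = [d * (1.0 if z > 0 else 0.0) for d, z in zip(dL_da1, z1)]  # ReLU'(z1)
    dL_dW1 = [[d * xi for xi in x] for d in dL_dz1]
    return dL_dW1, dL_dW2

# one SGD step on a toy example
x, y = [1.0, 2.0], 1.0
W1 = [[0.5, -0.3], [0.2, 0.4], [-1.0, 0.1]]
W2 = [1.0, -2.0, 0.5]
dW1, dW2 = backprop(x, y, W1, W2)
eta = 0.1
W1 = [[w - eta * d for w, d in zip(rw, rd)] for rw, rd in zip(W1, dW1)]
W2 = [w - eta * d for w, d in zip(W2, dW2)]
print(loss(x, y, W1, W2))  # lower than before the step
```

Hidden neurons with \(z \le 0\) in this example receive zero gradient, which matters for the gradient issues below.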

Practical gradient issues and remedies

Let's revisit this:

example on blackboard

now, a slightly more complex network:

example on blackboard

- if \(z^2 > 0\) and \(z_1^1 < 0\), some weights (the grayed-out ones) won't get updated

- if \(z^2 < 0\), no weights get updated

(with ReLU activations, \(\text{ReLU}'(z) = 0\) for \(z < 0\), so no gradient flows back through an inactive neuron)
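Both cases can be reproduced numerically. A sketch, assuming a hypothetical two-layer network with ReLU on the hidden layer and on the single output neuron, squared loss, and no offsets (`grads` is an illustrative name); the two calls at the bottom match the two cases above:

```python
def relu(z):
    return max(0.0, z)

def relu_grad(z):
    return 1.0 if z > 0 else 0.0  # ReLU'(z); 0 for z <= 0

def grads(x, y, W1, W2):
    """Gradients of L = (g - y)^2 with g = ReLU(z2), z2 = W2 . ReLU(W1 x)."""
    z1 = [sum(w * xi for w, xi in zip(row, x)) for row in W1]
    a1 = [relu(z) for z in z1]
    z2 = sum(w * a for w, a in zip(W2, a1))
    g = relu(z2)
    dL_dz2 = 2.0 * (g - y) * relu_grad(z2)                          # zero whenever z2 < 0
    dL_dW2 = [dL_dz2 * a for a in a1]                               # zero where a = 0
    dL_dz1 = [dL_dz2 * w * relu_grad(z) for w, z in zip(W2, z1)]    # zero where z1_j < 0
    dL_dW1 = [[d * xi for xi in x] for d in dL_dz1]
    return dL_dW1, dL_dW2

# z2 > 0 but z1_1 < 0: weights into and out of hidden neuron 1 get zero gradient
print(grads([1.0, 1.0], 3.0, [[-1.0, 0.0], [0.0, 1.0]], [1.0, 1.0]))
# z2 < 0: every gradient is zero
print(grads([1.0, 1.0], 1.0, [[1.0, 0.0], [0.0, 1.0]], [-1.0, -1.0]))
```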

- Width: # of neurons in layers

- Depth: # of layers

- Typically, increasing either the width or the depth (with nonlinear activations) makes the model more expressive, but it also increases the risk of overfitting.

However, for neural networks, the precise nature of this relationship remains an active area of research; see, for example, phenomena such as the double-descent curve and scaling laws.

- Combating vanishing gradients is another reason networks are typically wide: with more neurons in a layer, it is less likely that all of them are inactive (and pass back zero gradient) at once.

- Deep networks can still be prone to vanishing gradients.
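A toy calculation (an illustration, not from the lecture) of why width helps and depth hurts: assume, very crudely, that each ReLU's pre-activation is independently negative with probability \(p\). Then a layer of \(m\) neurons passes back no gradient at all with probability \(p^m\), and a stack of \(L\) such layers has at least one fully inactive layer with probability \(1 - (1 - p^m)^L\). The function names and the independence model are hypothetical:

```python
def p_layer_dead(m, p=0.5):
    """P(all m ReLUs in one layer are inactive), assuming each pre-activation is
    independently negative with probability p (a crude, hypothetical model)."""
    return p ** m

def p_any_layer_dead(m, L, p=0.5):
    """P(at least one of L layers, each of width m, is entirely inactive), same assumption."""
    return 1.0 - (1.0 - p ** m) ** L

for m in (1, 4, 16):
    for L in (1, 10, 100):
        print(f"width {m:2d}, depth {L:3d}: {p_any_layer_dead(m, L):.4f}")
```

Under this model the probability falls quickly with width and rises with depth, matching the two bullets qualitatively.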

Recall:

Residual (skip) connection:

example on blackboard

Now, \(g = a^1 + \text{ReLU}(z^2)\),

so even if \(z^2 < 0\), with the skip connection, weights in earlier layers can still get updated: the gradient flows back directly through the \(a^1\) term.
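A scalar sketch of the skip connection, assuming one neuron per layer, ReLU activations, squared loss, and no offsets (`grad_w1` is a hypothetical name): the skip path adds a constant 1 to \(\partial g / \partial a^1\), so the gradient reaching \(w^1\) no longer vanishes when \(z^2 < 0\):

```python
def relu(z):
    return max(0.0, z)

def relu_grad(z):
    return 1.0 if z > 0 else 0.0  # ReLU'(z); 0 for z <= 0

def grad_w1(x, y, w1, w2, skip):
    """dL/dw1 for z1 = w1 x, a1 = ReLU(z1), z2 = w2 a1, loss (g - y)^2, with
    g = a1 + ReLU(z2) (skip=True) or g = ReLU(z2) (skip=False). Scalar, no offsets."""
    z1 = w1 * x
    a1 = relu(z1)
    z2 = w2 * a1
    g = (a1 + relu(z2)) if skip else relu(z2)
    # dg/da1: the skip path contributes an extra 1
    dg_da1 = (1.0 if skip else 0.0) + relu_grad(z2) * w2
    return 2.0 * (g - y) * dg_da1 * relu_grad(z1) * x

# z1 = 1 > 0, z2 = -1 < 0
print(grad_w1(1.0, 2.0, 1.0, -1.0, skip=False))  # no skip: zero gradient
print(grad_w1(1.0, 2.0, 1.0, -1.0, skip=True))   # with skip: -2.0, so w1 still updates
```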

Summary

- We saw that multi-layer perceptrons are a way to automatically find good features/transformations for us!

- In fact, roughly speaking, they can approximate any continuous function arbitrarily well, given enough neurons (universal approximation theorem).

- How to learn? Still just (stochastic) gradient descent!

- Thanks to the layered structure, it turns out we can reuse lots of computation in the gradient descent update: back propagation.

- Practically, there can be numerical gradient issues. There are remedies, e.g. having lots of neurons per layer, or using residual connections.

Thanks!

We'd love to hear your thoughts.

6.390 IntroML (Spring25) - Lecture 6 Neural Networks II

By Shen Shen
