HAProxy vs NGINX

Table of Contents

- What is HAProxy?

- What is load balancing?

- What is high availability?

- When to use?

- What is NGINX?

- What is a web server?

- NGINX vs NGINX Plus

- Comparison between HAProxy and NGINX

- Which one to choose?

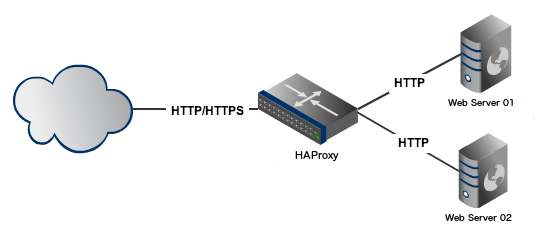

What is HAProxy?

HAProxy, which stands for High Availability Proxy, is popular open source software that provides:

- Proxying for TCP and HTTP-based applications

- Load balancing

- High availability

It improves the performance and reliability of a server environment by distributing the workload across multiple servers.

What is Load Balancing?

- Efficiently distributing incoming network traffic across multiple servers

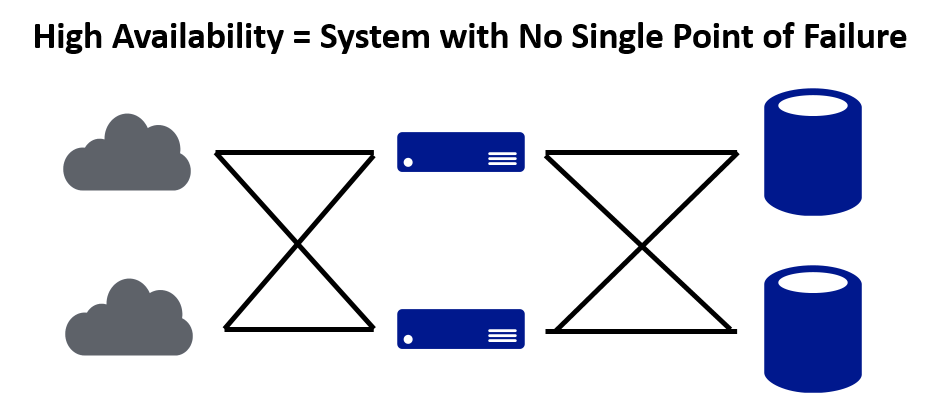
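As a rough illustration of the idea, the sketch below hands each incoming request to the next server in a fixed rotation (round robin). The function names and the backend names are hypothetical and chosen only for this example; a real load balancer also tracks connections, weights, and server health.

```python
from itertools import cycle


def round_robin(servers):
    """Return an endless iterator that walks the servers in order."""
    return cycle(servers)


def distribute(requests, servers):
    """Pair each request with the next server in the rotation."""
    rotation = round_robin(servers)
    return [(request, next(rotation)) for request in requests]


if __name__ == "__main__":
    backends = ["server1", "server2"]  # hypothetical backend names
    for request, backend in distribute(["req-1", "req-2", "req-3", "req-4"], backends):
        print(f"{request} -> {backend}")
```

With two backends, consecutive requests alternate between them, so each server receives about half of the traffic.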

What is High Availability?

- High availability is a quality of a system that assures a high level of operational performance for a given period of time.
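To make "a high level of operational performance" more concrete, the sketch below estimates how redundancy changes availability. It rests on simplifying assumptions that are not in the slides: servers fail independently, one working server is enough to serve traffic, and failover is instantaneous. The function names are hypothetical.

```python
def combined_availability(single_availability: float, replicas: int) -> float:
    """Availability of a pool where at least one of `replicas` servers must be up.

    Assumes independent failures: the pool is down only when every replica is down.
    """
    if not 0.0 <= single_availability <= 1.0:
        raise ValueError("single_availability must be between 0 and 1")
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return 1.0 - (1.0 - single_availability) ** replicas


def downtime_hours_per_year(availability: float) -> float:
    """Expected downtime in hours over a 365-day year."""
    return (1.0 - availability) * 365 * 24


if __name__ == "__main__":
    for replicas in (1, 2, 3):
        pool = combined_availability(0.99, replicas)
        print(f"{replicas} server(s): {pool:.6f} available, "
              f"{downtime_hours_per_year(pool):.3f} h downtime per year")
```

Under these assumptions, a single server that is available 99% of the time is down for about 87.6 hours per year, while two such servers behind a load balancer bring the estimate below one hour.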

When to use?

- Websites with very high loads

- Many concurrent connections to a website

- Load balancing required at the HTTP and TCP levels

- Fault Tolerance

- Maximize Availability

- Maximize throughput

- Horizontal Scaling

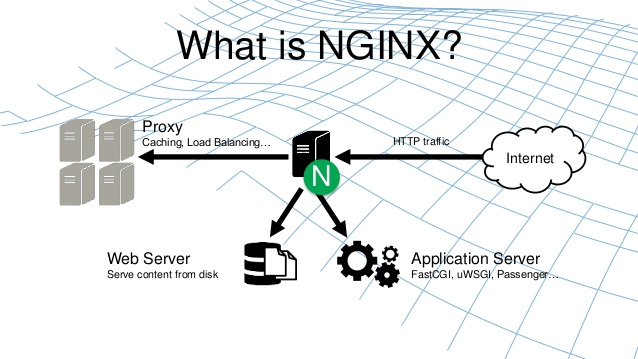

What is NGINX?

NGINX is open source software for web serving, reverse proxying, caching, load balancing, media streaming, and more.

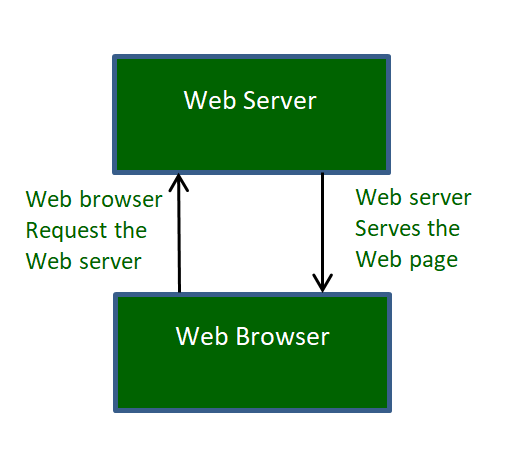

What is a Web Server?

Why choose NGINX?

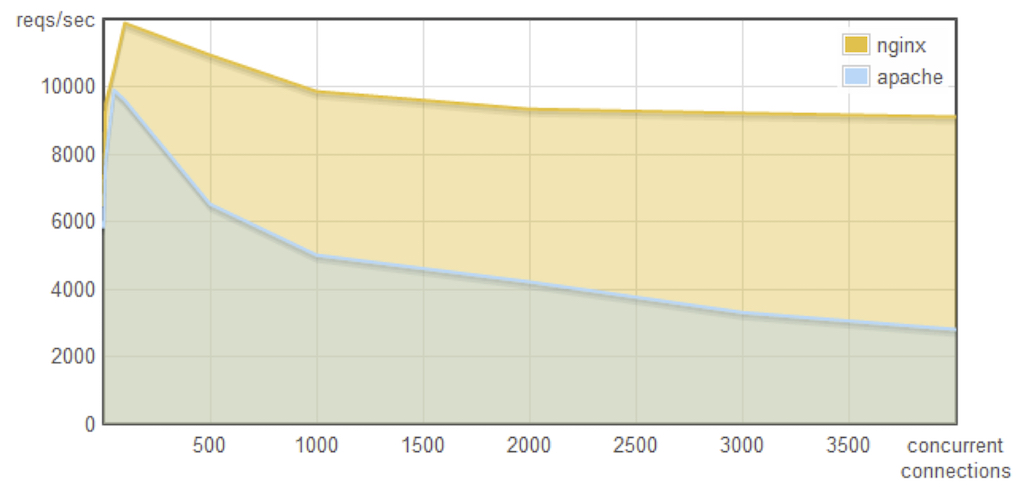

High Concurrency

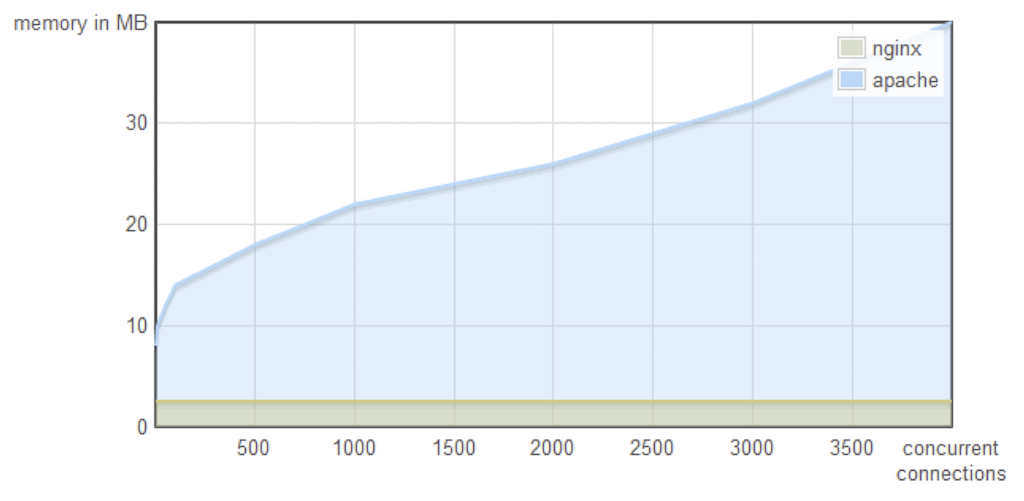

Low memory usage

NGINX as load balancer

- Simple configuration

- Simple control and monitoring

- Not suited for complex or large systems

- No status on connection rate

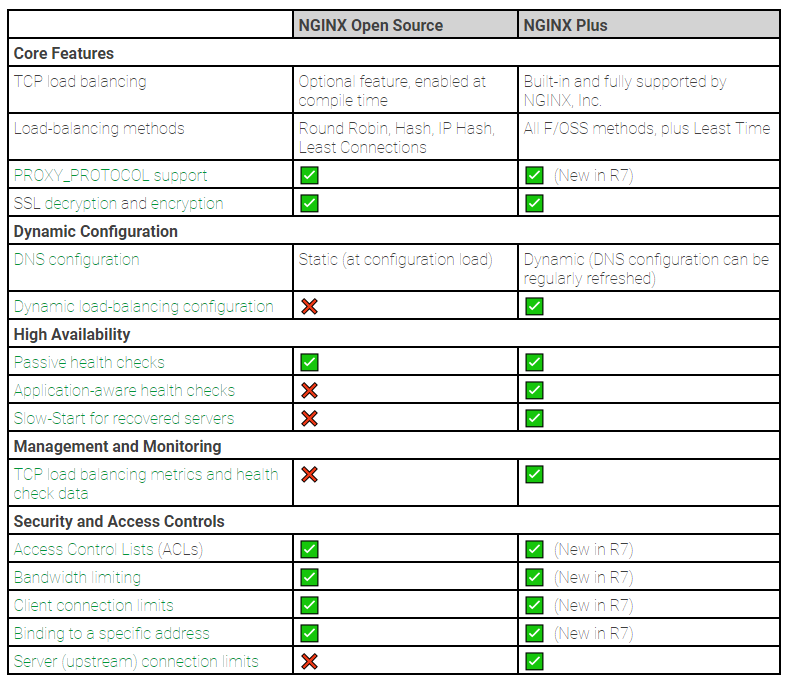

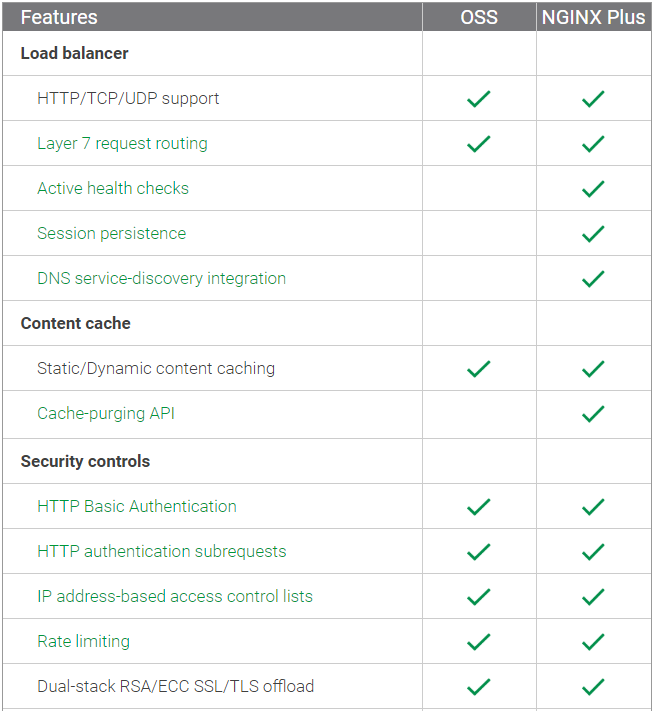

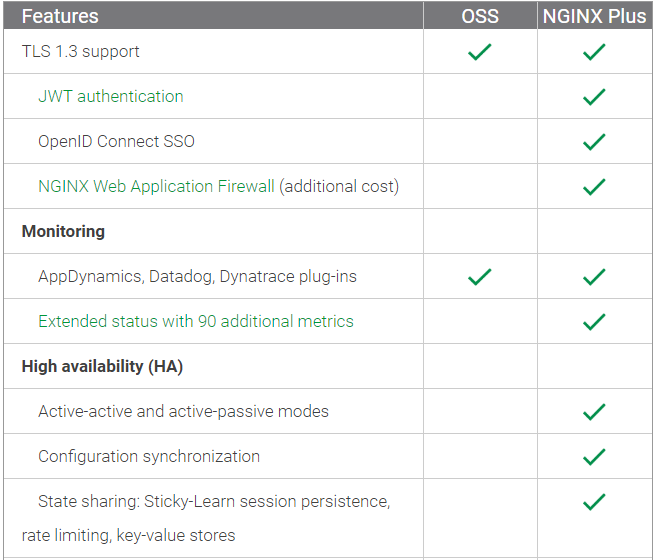

NGINX vs NGINX Plus

NGINX Plus includes exclusive features not available in NGINX Open Source and award-winning support.

Exclusive features include active health checks, session persistence, JWT authentication, and more.

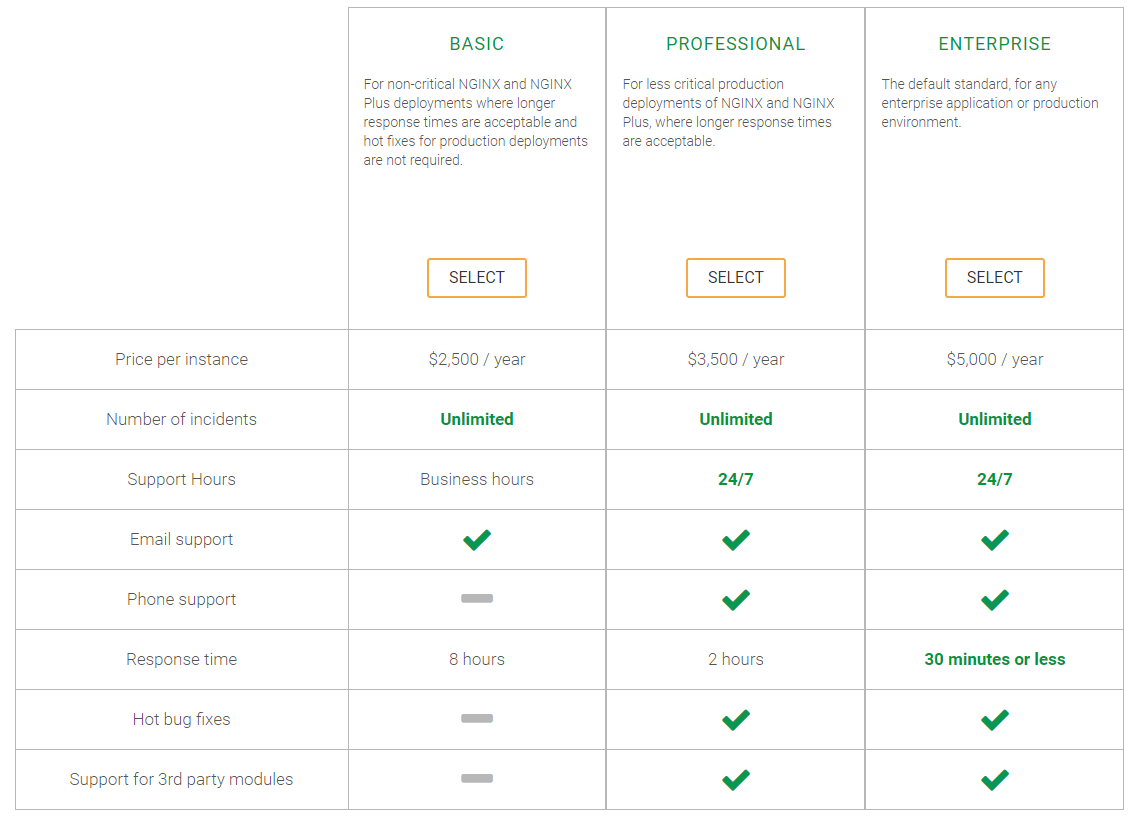

NGINX Plus Pricing

Comparison between HAProxy and NGINX

| | HAProxy | NGINX |
|---|---|---|
| Full web server | | |
| Concurrency | | |
| Load balancer | | |
| SSL offloading | | |
| Able to run on Windows | | |
| Metrics | 61 different metrics | 7 different metrics |

Proxying traffic

HAProxy

    #---------------------------------------------------------------------
    # Global settings
    #---------------------------------------------------------------------
    global
        log         127.0.0.1 local2     #Log configuration
        chroot      /var/lib/haproxy
        pidfile     /var/run/haproxy.pid
        maxconn     4000
        user        haproxy              #HAProxy runs under user and group "haproxy"
        group       haproxy
        daemon

        # turn on stats unix socket
        stats socket /var/lib/haproxy/stats

    #---------------------------------------------------------------------
    # common defaults that all the 'listen' and 'backend' sections will
    # use if not designated in their block
    #---------------------------------------------------------------------
    defaults
        mode                    http
        log                     global
        option                  httplog
        option                  dontlognull
        option                  http-server-close
        option                  forwardfor except 127.0.0.0/8
        option                  redispatch
        retries                 3
        timeout http-request    10s
        timeout queue           1m
        timeout connect         10s
        timeout client          1m
        timeout server          1m
        timeout http-keep-alive 10s
        timeout check           10s
        maxconn                 3000

    #---------------------------------------------------------------------
    # HAProxy Monitoring Config
    #---------------------------------------------------------------------
    listen haproxy3-monitoring
        bind *:8080                      #HAProxy monitoring runs on port 8080
        mode http
        option forwardfor
        option httpclose
        stats enable
        stats show-legends
        stats refresh 5s
        stats uri /stats                 #URL for HAProxy monitoring
        stats realm Haproxy\ Statistics
        stats auth howtoforge:howtoforge #User and password for the monitoring dashboard
        stats admin if TRUE
        default_backend app-main         #Optional: backend to monitor

    #---------------------------------------------------------------------
    # FrontEnd Configuration
    #---------------------------------------------------------------------
    frontend main
        bind *:80
        option http-server-close
        option forwardfor
        default_backend app-main

    #---------------------------------------------------------------------
    # BackEnd roundrobin as balance algorithm
    #---------------------------------------------------------------------
    backend app-main
        balance roundrobin               #Balance algorithm
        #Check that the server application is up and healthy - 200 status code
        option httpchk HEAD / HTTP/1.1\r\nHost:\ localhost
        server server1 172.20.32.109:80 check
        server server2 172.20.32.110:80 check

NGINX

    http {
        server {
            listen 80;

            location / {
                proxy_pass http://backend;
            }
        }

        upstream backend {
            server 172.20.32.109:80;
            server 172.20.32.110:80;
        }
    }
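The HAProxy backend above combines round-robin balancing with per-server health checks. The sketch below is one way to picture that combination. It is not HAProxy's implementation: the class and method names are hypothetical, and the rule that any 2xx or 3xx response passes is an assumption made for this example.

```python
from itertools import cycle


def is_healthy(status_code: int) -> bool:
    """Assumed rule for this sketch: 2xx and 3xx responses pass the check."""
    return 200 <= status_code < 400


class HealthAwareRoundRobin:
    """Round-robin rotation that skips servers whose last check failed."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._rotation = cycle(self._servers)
        self._up = set(self._servers)  # every server starts as healthy

    def report_check(self, server, status_code):
        """Record the result of a health check for one server."""
        if is_healthy(status_code):
            self._up.add(server)
        else:
            self._up.discard(server)

    def next_server(self):
        """Return the next healthy server, trying each server at most once."""
        for _ in range(len(self._servers)):
            candidate = next(self._rotation)
            if candidate in self._up:
                return candidate
        raise RuntimeError("no healthy servers available")


if __name__ == "__main__":
    balancer = HealthAwareRoundRobin(["server1", "server2"])
    balancer.report_check("server2", 503)  # server2 fails its check
    print([balancer.next_server() for _ in range(3)])
```

While server2 is marked down, every request goes to server1. Once a later check on server2 passes, it rejoins the rotation.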

Monitoring

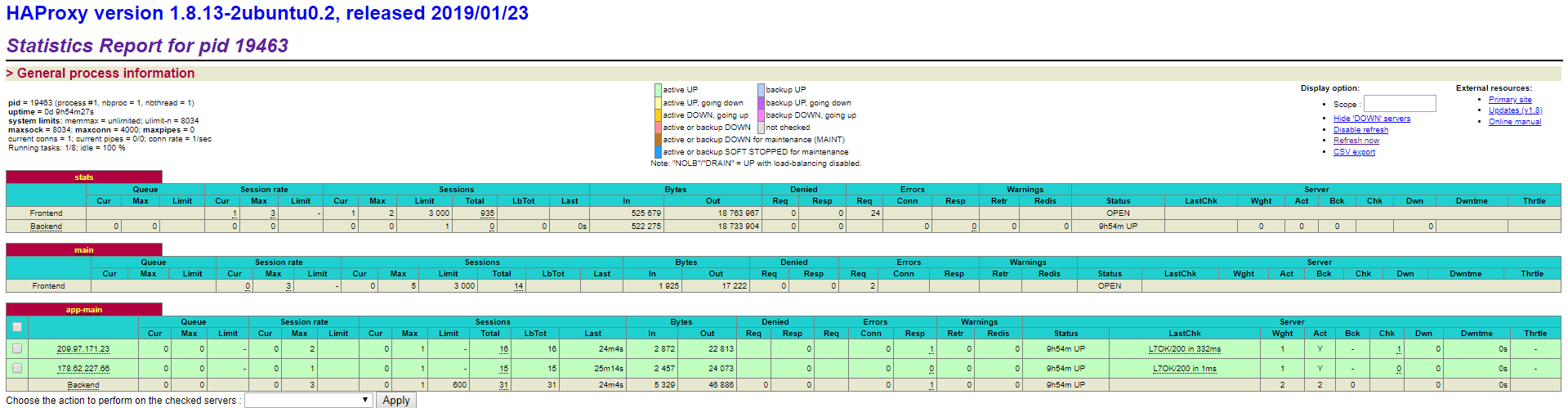

HAProxy stats page

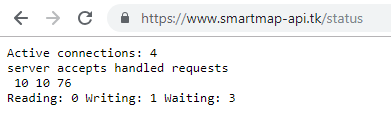

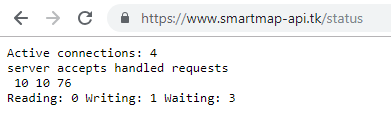

NGINX status page

username: howtoforge

Which one to choose?

There is no right or wrong choice. It depends on what you want:

- A load balancer that provides more information

- Simpler and easier setup

- Serving static files

- Running dynamic apps

You may also use both HAProxy and NGINX.

APPENDIX

- Active connections – Number of all open connections. This is not the number of users: a single user can open many concurrent connections to your server for a single page view.

- Server accepts handled requests – This line shows three values.

  - The first is the total number of accepted connections.

  - The second is the total number of handled connections. Usually the first two values are the same.

  - The third is the total number of handled requests. This is usually greater than the second value.

  - Dividing the third value by the second gives the number of requests per connection handled by NGINX. In the example above, 10993/7368 gives 1.49 requests per connection.

- Reading – NGINX is reading the request header

- Writing – NGINX is reading the request body, processing the request, or writing the response to a client

- Waiting – Keep-alive connections; the value is active – (reading + writing). It depends on keepalive_timeout. Do not mistake a non-zero waiting value for poor performance; it can be ignored. You can, however, force zero waiting by setting keepalive_timeout 0;
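The arithmetic described in this list can be sketched as a small parser for the status page text. The sample below reuses the 7368 handled connections and 10993 requests from the text; the accepted count is assumed equal to the handled count, and the active, reading, writing, and waiting values are invented for illustration. The function names are hypothetical.

```python
import re

# Sample status text. Only 7368 and 10993 come from the slides;
# every other number is an assumption made for this example.
SAMPLE = """\
Active connections: 2
server accepts handled requests
 7368 7368 10993
Reading: 0 Writing: 1 Waiting: 1
"""


def parse_stub_status(text):
    """Extract the seven counters from a status page into a dict."""
    active = re.search(r"Active connections:\s*(\d+)", text)
    totals = re.search(r"^\s*(\d+)\s+(\d+)\s+(\d+)\s*$", text, re.MULTILINE)
    states = re.search(r"Reading:\s*(\d+)\s+Writing:\s*(\d+)\s+Waiting:\s*(\d+)", text)
    if not (active and totals and states):
        raise ValueError("unrecognised status page format")
    accepts, handled, requests = map(int, totals.groups())
    reading, writing, waiting = map(int, states.groups())
    return {
        "active": int(active.group(1)),
        "accepts": accepts,
        "handled": handled,
        "requests": requests,
        "reading": reading,
        "writing": writing,
        "waiting": waiting,
    }


def requests_per_connection(stats):
    """Third value divided by the second, as described in the list above."""
    return stats["requests"] / stats["handled"]


if __name__ == "__main__":
    stats = parse_stub_status(SAMPLE)
    print(f"requests per connection: {requests_per_connection(stats):.2f}")
    print("waiting matches active - (reading + writing):",
          stats["waiting"] == stats["active"] - (stats["reading"] + stats["writing"]))
```

These are the seven values the comparison table refers to as NGINX's metrics.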

HAProxy Features

- Layer 4 (TCP) and Layer 7 (HTTP) load balancing

- URL rewriting

- Rate limiting

- SSL/TLS termination

- Gzip compression

- Proxy Protocol support

- Health checking

- Connection and HTTP message logging

- HTTP/2

- Multithreading

- Hitless reloads

- gRPC support

- Lua and SPOE support

- API support

- Layer 4 retries

- Simplified circuit breaking

HAProxy vs Nginx

By shirlin1028
