Meeting with Russ

Thesis Topic

- Efficient

- Model-based

- Generalizable

- Learning

- Contact-Rich

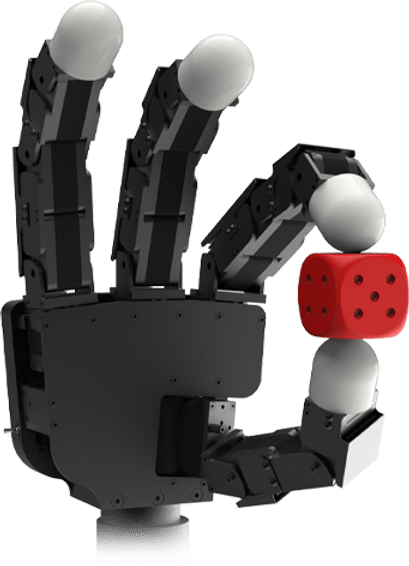

- Dexterous

- Manipulation

- Differentiable

1. Do Differentiable Simulators Give Better Policy Gradients?

2. Global Planning for Contact-Rich Manipulation via Local Smoothing of Quasidynamic Contact Models

3. Motion Sets for Dexterous Contact-Rich Manipulation

4. Value Gradient Learning for Long-Horizon Manipulation

5. Generalizing beyond demonstrations with models

Efficient and Generalizable Dexterous Contact-Rich Manipulation

Graduation Timeline

- June 20th: First Committee Meeting

- Oct/Nov: Second Committee Meeting

- Jan/Feb: Defense

Imitation Learning

- Foundation Models, ChatGPT for Robotics, big data, etc.

- Robotics suffers from a scarcity of data

- Co-training with simulation can increase data efficiency; can we augment real data with simulated data?

- How can we do better when we know the model structure, etc.?

To what extent can we generalize the same demonstration data to different settings?

Can we use data more efficiently when we know the environment dynamics?

Project 1. Model-Based Imitation Learning

Demonstration data

Overall goal: imitation learning provides a dense reward that captures the long-horizon behavior, while local reward-driven refinements allow slight generalization.

Example: Off-line / Off-Policy RL

Off-line RL

"When off-line data is of 'good quality' RL can improve much faster."

Project 1. Model-Based Imitation Learning

Demonstration data

Behavior Cloning

- Only uses which action is taken in a given state

- But there is potentially more we can learn from the data (e.g., dynamics)
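To make the contrast concrete, here is a minimal sketch in which the same demonstration transitions support two different regressions. It assumes NumPy, a synthetic 2-D linear system, and a linear-feedback "expert", none of which come from the slides. Behavior cloning fits only the state-to-action map. The (state, action, next state) triples additionally identify the dynamics.

```python
import numpy as np

# Hypothetical setup: a 2-D linear system and a linear "expert"
# u = K_true x + small noise generate the demonstration data.
rng = np.random.default_rng(0)
A_true = np.array([[1.0, 0.1], [0.0, 1.0]])
B_true = np.array([[0.0], [0.1]])
K_true = np.array([[-1.0, -1.5]])

states, actions, next_states = [], [], []
for _ in range(20):                      # 20 demonstrations
    x = rng.normal(size=2)
    for _ in range(30):                  # 30 steps each
        u = K_true @ x + 0.01 * rng.normal(size=1)
        x_next = A_true @ x + B_true @ u
        states.append(x)
        actions.append(u)
        next_states.append(x_next)
        x = x_next

X = np.array(states)            # (N, 2) states
U = np.array(actions)           # (N, 1) actions
X_next = np.array(next_states)  # (N, 2) next states

# Behavior cloning: only the (state, action) pairs are used, u ≈ K x.
W_bc, *_ = np.linalg.lstsq(X, U, rcond=None)
K_bc = W_bc.T                            # (1, 2)

# Same data, more structure: regress x' on [x, u] to recover x' ≈ A x + B u.
W_dyn, *_ = np.linalg.lstsq(np.hstack([X, U]), X_next, rcond=None)
A_hat, B_hat = W_dyn[:2].T, W_dyn[2:].T  # (2, 2), (2, 1)

print(np.round(K_bc, 2))
print(np.round(A_hat, 2), np.round(B_hat, 2))
```

The small action noise matters. Without it, u would be an exact linear function of x, and the dynamics regression could not separate A from B.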

Model-Based Imitation Learning

- Learns dynamics from demonstration data

- Plans to stay near the demonstrations

- Stochastic planning is naturally multi-modal
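The following is a hedged sketch of "planning to stay near demonstrations." It uses random-shooting MPC under an assumed, already-learned linear model and scores each sampled action sequence by the squared distance of its predicted states to a demonstration trajectory. The function names, noise scale, and sample count are illustrative assumptions, because the slides do not specify the planner. The planner only ranks samples and never fits a single unimodal action distribution, which is one reason sampling-based planners can cope with multi-modal behavior.

```python
import numpy as np

def rollout(A, B, x0, u_seq):
    """Predict states x_0..x_H under a linear model x' = A x + B u."""
    xs = [np.asarray(x0, dtype=float)]
    for u in u_seq:
        xs.append(A @ xs[-1] + B @ u)
    return np.array(xs)

def plan_near_demo(A, B, x0, demo_states, u_nominal, n_samples=256, noise=0.3, seed=0):
    """Random-shooting planner (hypothetical helper): sample action sequences around
    u_nominal and keep the one whose predicted states are closest to demo_states."""
    rng = np.random.default_rng(seed)
    samples = u_nominal + noise * rng.normal(size=(n_samples,) + u_nominal.shape)
    samples[0] = u_nominal               # keep the nominal sequence as a candidate
    costs = np.array([np.sum((rollout(A, B, x0, u) - demo_states) ** 2) for u in samples])
    best = int(np.argmin(costs))
    return samples[best], float(costs[best])

# Usage: an assumed model and a demonstration recorded from a slightly different start.
A = np.array([[1.0, 0.1], [0.0, 1.0]])
B = np.array([[0.0], [0.1]])
demo_states = rollout(A, B, [0.0, 0.0], np.full((10, 1), 0.3))
x0 = np.array([0.05, 0.0])
u_best, best_cost = plan_near_demo(A, B, x0, demo_states, np.zeros((10, 1)))
zero_cost = float(np.sum((rollout(A, B, x0, np.zeros((10, 1))) - demo_states) ** 2))
print(best_cost, zero_cost)
```

Keeping the nominal sequence among the candidates guarantees the planner never does worse than the nominal plan under the model.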

Project 1. Model-Based Imitation Learning

Reward Weighting

Terminal Value Formulation

- Attempts to use additional rewards to guide local refinements. When the environment dynamics are known, online adaptation is faster than RL.

- Cannot recover if the change in the environment requires significantly different demonstrations
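Below is one possible reading of the two formulations, written as trajectory objectives that a planner could maximize. This is an assumption about what "Reward Weighting" and "Terminal Value Formulation" mean here. In the first, a weighted dense imitation term is traded off against task reward at every step. In the second, task reward is kept over a short horizon and long-horizon behavior is summarized by a terminal value derived from the demonstrations. `task_reward`, `demo_value`, and the weight `w` are hypothetical.

```python
import numpy as np

def imitation_cost(xs, demo_states):
    """Dense imitation term: squared distance to the time-aligned demonstration states."""
    return float(np.sum((np.asarray(xs) - demo_states) ** 2))

def reward_weighted_objective(xs, demo_states, task_reward, w):
    """Task reward at every step minus w times the imitation cost (higher is better)."""
    return float(sum(task_reward(x) for x in xs)) - w * imitation_cost(xs, demo_states)

def demo_value(demo_states):
    """Hypothetical terminal value: minus the squared distance to the nearest demo state."""
    def value(x):
        return -float(np.min(np.sum((demo_states - x) ** 2, axis=1)))
    return value

def terminal_value_objective(xs, task_reward, terminal_value):
    """Task reward over the short horizon plus a terminal value at the last state."""
    return float(sum(task_reward(x) for x in xs[:-1])) + terminal_value(xs[-1])

def task_reward(x):
    """Assumed task reward: stay on the line y = 0."""
    return -abs(x[1])

# Usage: a straight-line demonstration and a trajectory shifted off it by 0.5 in y.
demo = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0]])
shifted = demo + np.array([0.0, 0.5])
print(reward_weighted_objective(shifted, demo, task_reward, w=2.0))      # -3.0
print(terminal_value_objective(shifted, task_reward, demo_value(demo)))  # -1.25
```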

Project 2. What should we imitate?

Imitating slightly higher-level actions can allow us to generalize to different embodiments / objects

Demonstration

Track higher-level actions with, e.g., inverse dynamics

Watch

Understand

Extract higher-level actions

(contact points, forces, etc.)

Do
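Here is a minimal sketch of the inverse-dynamics step. It assumes a rigid planar object with known mass and rotational inertia, poses measured at the center of mass, and gravity along -y. Under those assumptions, the net contact wrench implied by an observed pose trajectory follows from Newton-Euler equations with finite-difference accelerations. `required_wrench` is a hypothetical helper, not part of any library.

```python
import numpy as np

def required_wrench(poses, dt, mass, inertia, g=9.81):
    """Net contact wrench (fx, fy, tau) needed to produce the observed planar motion.

    poses: (T, 3) array of center-of-mass poses (x, y, theta) sampled every dt seconds.
    Uses central finite differences, so it returns T - 2 wrenches.
    """
    acc = (poses[2:] - 2 * poses[1:-1] + poses[:-2]) / dt**2
    fx = mass * acc[:, 0]
    fy = mass * acc[:, 1] + mass * g     # contacts must also cancel gravity (acting in -y)
    tau = inertia * acc[:, 2]            # planar rigid body: tau = I * angular acceleration
    return np.stack([fx, fy, tau], axis=1)

# Usage: an object accelerating at a constant 2 m/s^2 along x without rotating.
dt = 0.1
t = np.arange(10) * dt
poses = np.stack([0.5 * 2.0 * t**2, np.zeros_like(t), np.zeros_like(t)], axis=1)
wrench = required_wrench(poses, dt, mass=0.5, inertia=0.01)
print(wrench[0])  # approximately [1.0, 4.905, 0.0]
```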


1. How much do we need to instrument humans / build tools to understand what they did?

2. What is a good intermediate action that we can imitate?

Project 2. What should we imitate?

Version 1. Imitating Contact Points

From videos / tactile gloves + mocap, extract where the human made contact

Watch

Understand

Infer contact forces and points that make the observed scene physically consistent

Do

Retarget the contact forces and points to a physical robot so that it achieves the same object motion.
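Retargeting can be sketched as redistributing the required object wrench over a different set of contact points. The approach is to stack the planar grasp map for the new contacts and take the minimum-norm solution with a pseudo-inverse. If the robot reproduces the same net wrench, a rigid object should follow the same motion. This is a simplification under stated assumptions. It ignores friction cones and the requirement that contacts can only push, both of which a real retargeting step would need to enforce (for example, as a constrained QP). The helpers, contact coordinates, and wrench values are hypothetical.

```python
import numpy as np

def grasp_matrix(contact_points):
    """Map stacked planar contact forces [f1x, f1y, f2x, ...] to the net wrench
    (fx, fy, tau) about the object origin; tau = rx * fy - ry * fx."""
    cols = []
    for rx, ry in contact_points:
        cols.append([1.0, 0.0, -ry])     # unit force along x
        cols.append([0.0, 1.0, rx])      # unit force along y
    return np.array(cols).T              # shape (3, 2 * n_contacts)

def retarget_forces(wrench, contact_points):
    """Minimum-norm contact forces reproducing the wrench.
    No friction-cone or push-only (unilateral) constraints are enforced."""
    G = grasp_matrix(contact_points)
    return (np.linalg.pinv(G) @ wrench).reshape(-1, 2)

# Usage: the same wrench realized by two human contacts and three robot contacts.
human_contacts = [(-0.05, 0.0), (0.05, 0.0)]
robot_contacts = [(0.0, -0.05), (0.0, 0.05), (0.05, 0.0)]
wrench = np.array([1.0, 4.905, 0.02])
for contacts in (human_contacts, robot_contacts):
    print(np.round(retarget_forces(wrench, contacts), 3))
```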

Russ Meeting

By Terry Suh
