Complexity

A problem may have multiple solutions

But how do we decide which one is "better"?

Some Criteria

- Spend Less Time (Run Speed)

- Use Less Space

- Higher Correctness

- Higher Compatibility

- Lower development cost

Balance is important

- Before computers, it took 7.5 years to finish the US census.

- By the time it was finished... children had become adults...

- If we just want to know the distribution of the population, sampling is much more useful.

- After the punched-card reader (an early computing machine) came out, it took only six weeks to finish the census.

But how do we measure?

- Running it and measuring is the most precise way.

- But how do we predict it in advance?

- Compatibility and development cost depend heavily on the implementation method and on who implements it.

- Correctness can be viewed from many perspectives:

- Implementation details

- Number of cases

- Theoretical analysis

- How about time and space?

Some notes

The time each operation takes is not the same.

ex. division is much slower than addition.

ex. accessing different parts of memory takes different time.

Input size

- Generally, when the input size is small, the difference in running time is small.

- We usually focus on cases where the input size is huge.

The concept of time complexity

Summer homework

For most of us, doing addition is much faster than multiplication.

But...

The teacher offers two types of homework

- 10 multiplication problems / day

- 1st day 1 addition problem, 2nd day 2 addition problems, 3rd day 3 addition problems... and so on

Which one will you choose?

Addition vs. multiplication

Assume that it takes 5 secs for you to do an addition problem and 20 secs for a multiplication problem.

At the n-th day:

Solution 1 takes $10 \times 20 = 200$ secs

Solution 2 takes $n \times 5 = 5n$ secs

If n is small, ex. n = 3, solution 2 is better (15 secs vs. 200 secs).

What if n is large, ex. n = 365? (1825 secs vs. 200 secs)

We say that the number of operations needed by solution 2 grows faster: its growth (complexity) is higher.

So for large n, we should choose solution 1.
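A small sketch of the two plans, assuming "at the n-th day" means the time spent on that day alone and using the 5 s / 20 s costs above. The function names are hypothetical; the point is that plan 1 is constant per day while plan 2 grows linearly, so they must cross at some day.

```python
# Assumed costs from the text: 5 s per addition, 20 s per multiplication.
ADD_SECS = 5
MUL_SECS = 20


def plan1_day(n: int) -> int:
    """Plan 1 (hypothetical name): 10 multiplication problems every day."""
    return 10 * MUL_SECS  # constant: 200 s, independent of n


def plan2_day(n: int) -> int:
    """Plan 2 (hypothetical name): n addition problems on day n."""
    return n * ADD_SECS  # grows linearly: 5n s


def first_day_plan2_is_slower() -> int:
    """Smallest n for which plan 2 takes longer than plan 1 on that day."""
    n = 1
    while plan2_day(n) <= plan1_day(n):
        n += 1
    return n


for day in (3, 40, 41, 365):
    print(day, plan1_day(day), plan2_day(day))
print("plan 2 is slower from day", first_day_plan2_is_slower())
```

Under these assumptions the two plans tie on day 40 (200 s each), and from day 41 on the linearly growing plan is always slower.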

Growth?

More precisely, the growth of a function

Briefly speaking, different functions grow at different speeds.

Concept of Infinity

- For two functions f(n) and g(n), when $n \to \infty$, which one is bigger?

Comparison

Concept of limit

If $\lim_{n \to \infty} \frac{f(n)}{g(n)} = 0$, we say that f(n) is less than g(n).

If $\lim_{n \to \infty} \frac{f(n)}{g(n)} = \infty$, we say that f(n) is larger than g(n). (Not precisely.)
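To get a feel for the limit test, a minimal sketch can print f(n)/g(n) for a few growing n and watch where the ratio is heading. This is only a numerical hint, not a proof of the limit; the helper name `ratios` and the chosen function pairs are illustrative assumptions.

```python
def ratios(f, g, ns):
    """Return f(n) / g(n) for each n in ns (hypothetical helper)."""
    return [f(n) / g(n) for n in ns]


ns = [10, 100, 1000]

# f(n) = n^2, g(n) = 2^n: the ratio shrinks toward 0, so f(n) is "less than" g(n).
print(ratios(lambda n: n * n, lambda n: 2 ** n, ns))

# f(n) = 5n, g(n) = 200: the ratio grows without bound, so f(n) is "larger than" g(n).
print(ratios(lambda n: 5 * n, lambda n: 200, ns))
```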

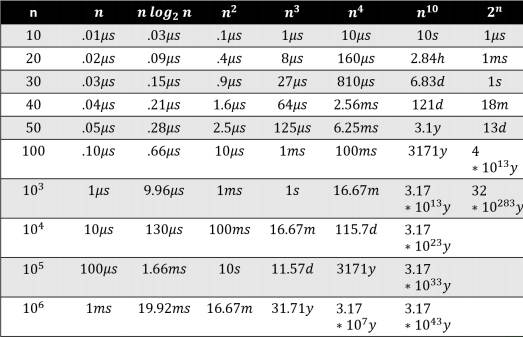

On a computer that performs 10^9 operations per second.
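A rough sketch of the kind of estimate such a table is built from, assuming every operation takes the same time and a machine doing 10^9 operations per second. The `GROWTH` table and the `seconds` helper are illustrative, not taken from the slides.

```python
import math

OPS_PER_SEC = 10**9  # assumed machine speed, as stated above

# Hypothetical selection of growth functions to compare.
GROWTH = {
    "log n": lambda n: math.log2(n),
    "n": lambda n: n,
    "n log n": lambda n: n * math.log2(n),
    "n^2": lambda n: n ** 2,
    "n^3": lambda n: n ** 3,
}


def seconds(ops: float) -> float:
    """Estimated running time for a given number of equal-cost operations."""
    return ops / OPS_PER_SEC


for n in (10**3, 10**6):
    for name, f in GROWTH.items():
        print(f"n={n:>8}  {name:>8}: {seconds(f(n)):.3g} s")
```

For n = 10^6, the n log n row comes out around 0.02 s, n^2 around 1000 s, and n^3 around 10^9 s (roughly 30 years), which is why the growth rate matters far more than the constant.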

Concept of complexity

We assume all operations take the same time.

- addition, subtraction, multiplication, division

- modulo

- bit operations

- memory access

- branch operations (if, else)

- assignment (x = 5)

We count the number of operations, determine its complexity, and estimate the running time.
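As a minimal sketch of "count the operations, then estimate the runtime": the hypothetical double loop below does one unit of work per pair i < j. We count it by brute force, check the count against the closed form n(n-1)/2, and divide by an assumed 10^9 operations per second. All names here are illustrative.

```python
OPS_PER_SEC = 10**9  # assumed machine speed


def count_pair_ops(n: int) -> int:
    """Count one operation per pair (i, j) with i < j, by brute force."""
    ops = 0
    for i in range(n):
        for j in range(i + 1, n):
            ops += 1  # stands in for the work done on pair (i, j)
    return ops


def pair_ops_formula(n: int) -> int:
    """Closed form of the same count: n(n-1)/2, which is O(n^2)."""
    return n * (n - 1) // 2


assert count_pair_ops(100) == pair_ops_formula(100)  # 4950 both ways

n = 10**5
print(pair_ops_formula(n) / OPS_PER_SEC, "seconds (estimate)")  # about 5 seconds
```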

ex.

now computation power of a computer is generally

Asymptotic notation

The constant factor is not so important.

Big-O notation

the upper bound of complexity

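Suppose the actual running-time function is g(n)

then $g(n) = O(f(n))$ if there exist constants $c > 0$ and $n_0$ such that $g(n) \le c \cdot f(n)$ for all $n \ge n_0$.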

example

Usually we take the "tight" upper bound.
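A small sketch that checks a proposed witness pair (c, n_0) for the Big-O definition over a finite range. A finite check can only support the claim, never prove it; the running-time function g(n) = 2n + 10 and the helper name are hypothetical.

```python
def holds_upper_bound(g, f, c, n0, n_max=10_000):
    """Check g(n) <= c * f(n) for every n in [n0, n_max] (finite evidence only)."""
    return all(g(n) <= c * f(n) for n in range(n0, n_max + 1))


def g(n):
    return 2 * n + 10  # hypothetical running-time function


print(holds_upper_bound(g, lambda n: n, c=3, n0=10))     # True: 2n + 10 <= 3n once n >= 10
print(holds_upper_bound(g, lambda n: n, c=3, n0=1))      # False: fails for small n
print(holds_upper_bound(g, lambda n: n * n, c=1, n0=5))  # True, but n^2 is not tight
```

Both n and n^2 are upper bounds for 2n + 10, but O(n) is the tight one we would normally write.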

Practice

Little-o notation

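the strict upper bound

Suppose the actual running-time function is g(n)

then $g(n) = o(f(n))$ if for every constant $c > 0$ there exists $n_0$ such that $g(n) < c \cdot f(n)$ for all $n \ge n_0$, i.e. $\lim_{n \to \infty} \frac{g(n)}{f(n)} = 0$.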
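A worked check of the difference between little-o and Big-O, using the limit form of the definition on two illustrative functions:

```latex
\lim_{n \to \infty} \frac{n}{n^2} = \lim_{n \to \infty} \frac{1}{n} = 0
  \;\Rightarrow\; n = o(n^2)
\\
\lim_{n \to \infty} \frac{n^2}{n^2} = 1 \neq 0
  \;\Rightarrow\; n^2 \neq o(n^2), \text{ although } n^2 = O(n^2)
```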

Other asymptotic notations

Example

Example

Big-omega notation

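the lower bound: $g(n) = \Omega(f(n))$ if there exist constants $c > 0$ and $n_0$ such that $g(n) \ge c \cdot f(n)$ for all $n \ge n_0$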


Little-omega notation

the strict lower bound: $g(n) = \omega(f(n))$ if $\lim_{n \to \infty} \frac{g(n)}{f(n)} = \infty$


Big-theta notation

both an upper bound and a lower bound (a tight bound): $g(n) = \Theta(f(n))$ if and only if $g(n) = O(f(n))$ and $g(n) = \Omega(f(n))$
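A worked example on an illustrative polynomial: Big-theta asks for constants $c_1, c_2 > 0$ and $n_0$ with $c_1 f(n) \le g(n) \le c_2 f(n)$ for all $n \ge n_0$, and for $n \ge 1$ we can bound $5n \le 5n^2$ and $2 \le 2n^2$:

```latex
\text{For all } n \ge 1:\quad
3n^2 \;\le\; 3n^2 + 5n + 2 \;\le\; 3n^2 + 5n^2 + 2n^2 = 10n^2
\\
\Rightarrow\; 3n^2 + 5n + 2 = \Theta(n^2) \quad (c_1 = 3,\; c_2 = 10,\; n_0 = 1)
```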

We usually use Big-O and Big-theta.

Worst Case

When analyzing complexity, we consider the worst case, even if it is unlikely to happen.

ex. Insertion Sort

For i from 1 to n, take the i-th element

and move it to its correct position among the first i elements.

ex. sorting [3, 2, 1, 4]

- [3, 2, 1, 4]

- [2, 3, 1, 4]

- [2, 1, 3, 4]

- [1, 2, 3, 4]

- [1, 2, 3, 4]

Pseudo Code

for i from 1 to n
    for j from i-1 down to 1
        if (seq[j] > seq[j+1])
            swap seq[j] and seq[j+1]
        else
            break
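A runnable, 0-indexed sketch of the swap-based insertion sort described above: the new element is swapped leftward with its neighbor while the neighbor is larger, and the inner loop stops at the first neighbor that is not larger. The function name is our own choice.

```python
def insertion_sort(seq: list) -> list:
    """Sort seq in place in ascending order and return it."""
    for i in range(1, len(seq)):
        # Move seq[i] left while the element before it is larger.
        j = i - 1
        while j >= 0 and seq[j] > seq[j + 1]:
            seq[j], seq[j + 1] = seq[j + 1], seq[j]
            j -= 1
    return seq


print(insertion_sort([3, 2, 1, 4]))  # [1, 2, 3, 4]
```

On [3, 2, 1, 4] this goes through exactly the intermediate states listed in the example: [2, 3, 1, 4], then [2, 1, 3, 4], then [1, 2, 3, 4].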

Best case

Already sorted

ex. [1, 2, 3, 4, 5]

O(n)

Worst case

Reverse order

ex. [5, 4, 3, 2, 1]

$n + (n-1) + (n-2) + \dots + 1 = \frac{n(n+1)}{2} = O(n^2)$
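To see the gap between the best and worst case concretely, the sketch below instruments the same insertion sort to count element comparisons. It counts comparisons only, so its exact worst-case total is n(n-1)/2 rather than the per-step tally n + (n-1) + ... + 1. The two differ only by lower-order terms, and both are O(n^2). The function name is hypothetical.

```python
def insertion_sort_comparisons(seq) -> int:
    """Return how many element comparisons insertion sort makes on a copy of seq."""
    a = list(seq)
    comparisons = 0
    for i in range(1, len(a)):
        j = i - 1
        while j >= 0:
            comparisons += 1
            if a[j] > a[j + 1]:
                a[j], a[j + 1] = a[j + 1], a[j]
                j -= 1
            else:
                break  # element is already in place
    return comparisons


n = 5
print(insertion_sort_comparisons(range(1, n + 1)))  # best case, sorted: n - 1 = 4
print(insertion_sort_comparisons(range(n, 0, -1)))  # worst case, reversed: n(n-1)/2 = 10
```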

Think about it

- "Asymptotic" complexity, why?

- What are the pros and cons of complexity analysis? Is it always better to choose the lower time complexity?

- Try to analyze the complexity of your stack program.

In the real world

If one algorithm has high space complexity and low time complexity,

and another has low space complexity and high time complexity,

which one should we choose?

What if the range is large or small? What if the input size is large or small?
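One illustrative trade-off to think about, assuming the values are non-negative integers no larger than a known `max_value`: a boolean lookup table answers "is x present?" in O(1) time but needs O(max_value) extra space, while a linear scan needs no extra space but O(n) time per query. Both helper names are hypothetical.

```python
def make_table(values, max_value):
    """Lookup table: O(max_value) extra space, O(1) time per query.
    Assumes 0 <= v <= max_value for every v (and for every query)."""
    table = [False] * (max_value + 1)
    for v in values:
        table[v] = True
    return table


def contains_scan(values, x):
    """Linear scan: O(1) extra space, O(n) time per query."""
    return any(v == x for v in values)


values = [3, 17, 42, 8]
table = make_table(values, max_value=100)
print(table[42], contains_scan(values, 42))  # True True
print(table[5], contains_scan(values, 5))    # False False
```

When the range is small, the table is cheap and fast. When the range is huge (say values up to 10^18), the table cannot fit in memory, but the scan still works.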

Homework: more practice with stack & queue

We will step into the forest next week.


By tunchin kao
